Abstract

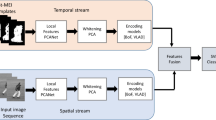

Human action recognition has received significant attention because of its wide applications in human–machine interaction, visual surveillance, and video indexing. Recent progress in deep learning algorithms and convolutional neural networks (CNNs) has significantly improved the performance of many action recognition systems. However, CNNs are designed to learn features from 2D spatial images, whereas action videos are 3D spatiotemporal signals. In addition, the complex structure of most deep networks and their dependence on the backpropagation learning algorithm reduce the efficiency of real-time human action recognition systems. To avoid these limitations, we propose a new human action recognition method based on the principal component analysis network (PCANet), a simple CNN architecture that uses unsupervised learning instead of the commonly used supervised algorithms. PCANet, however, was originally designed for 2D image classification. To make it suitable for action recognition in videos, the temporal information of the input video is represented using motion energy templates: multiple short-time motion energy image (ST-MEI) templates are computed to capture human motion information. The deep structure of PCANet helps to learn hierarchical local motion features from the input ST-MEI templates. The dimension of the feature vector learned by PCANet is reduced using the whitening principal component analysis algorithm, and the reduced vector is fed to a linear support vector machine classifier. The proposed method is evaluated on three benchmark datasets, namely KTH, Weizmann, and UCF sports action. Experimental results using a leave-one-out strategy demonstrate the effectiveness of the proposed method compared with other state-of-the-art methods.
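To make the idea of short-time motion energy templates concrete, the following is a minimal sketch and not the authors' implementation. It assumes a motion energy image is the pixel-wise union of thresholded absolute frame differences, and that the video is split into consecutive, non-overlapping windows. The function names, the `window` length, and the `threshold` value are all hypothetical choices for illustration. Plain Python lists are used to keep the sketch dependency-free; an array library would normally be used for real video.

```python
from typing import List

Frame = List[List[float]]   # grayscale frame: rows of pixel intensities
Mask = List[List[int]]      # binary image: rows of 0/1 values


def motion_mask(prev: Frame, curr: Frame, threshold: float) -> Mask:
    """Return 1 where the absolute difference between two frames exceeds threshold."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def short_time_meis(frames: List[Frame], window: int, threshold: float) -> List[Mask]:
    """Compute one motion energy template per consecutive window of frames.

    Assumption: windows do not overlap, and trailing frames that do not
    fill a whole window are ignored.
    """
    if window < 2:
        raise ValueError("window must cover at least two frames")
    templates: List[Mask] = []
    for start in range(0, len(frames) - window + 1, window):
        chunk = frames[start:start + window]
        height, width = len(chunk[0]), len(chunk[0][0])
        mei: Mask = [[0] * width for _ in range(height)]
        # Accumulate (logical OR) the motion masks of successive frame pairs.
        for prev, curr in zip(chunk, chunk[1:]):
            mask = motion_mask(prev, curr, threshold)
            mei = [[a | b for a, b in zip(mei_row, mask_row)]
                   for mei_row, mask_row in zip(mei, mask)]
        templates.append(mei)
    return templates


# Toy video: a single bright pixel moving left to right along a 1x4 frame.
demo_frames: List[Frame] = [
    [[255, 0, 0, 0]],
    [[0, 255, 0, 0]],
    [[0, 0, 255, 0]],
    [[0, 0, 0, 255]],
]
demo_templates = short_time_meis(demo_frames, window=2, threshold=30)
```

With `window=2`, the toy video yields two templates, each marking only the pixels that changed within its own short window. A single window over all four frames would merge them into one template covering the whole trajectory, which illustrates why several short-time templates retain more temporal detail than one global template.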

Acknowledgements

The second author extends their appreciation to the Deanship of Scientific Research at Majmaah University for funding this work under Project Number No. RGP-2019-24.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Abdelbaky, A., Aly, S. Human action recognition using short-time motion energy template images and PCANet features. Neural Comput & Applic 32, 12561–12574 (2020). https://doi.org/10.1007/s00521-020-04712-1

DOI: https://doi.org/10.1007/s00521-020-04712-1