Abstract

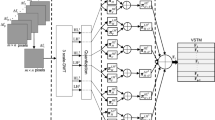

Human action recognition is a key step in video analytics, with applications in various domains. This work presents a deep learning approach to action recognition that uses motion history information. Temporal templates, which capture the motion history of a video in a single image, serve as the input representation. Rather than simply assigning brighter values to more recent motion, we use fuzzy membership functions to assign brightness (significance) values to the motion history information. New temporal templates that highlight motion in different temporal regions are proposed for better discrimination among actions. Features extracted by a convolutional neural network from the RGB and depth temporal templates are used by an extreme learning machine (ELM) classifier for action recognition. Evidence from classifiers using different temporal templates (i.e., different membership functions) is combined to optimize performance. The effectiveness of this approach is demonstrated on the MIVIA video action dataset.
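To make the idea of a fuzzy-weighted temporal template concrete, the following is a minimal sketch and not the chapter's implementation. It assumes grayscale frames, simple frame differencing for motion detection, and a triangular membership function. The function names, the `peak` and `width` parameters, and the difference threshold are all hypothetical choices made for illustration; the abstract does not specify the actual membership functions used.

```python
import numpy as np


def triangular_membership(t, peak, width):
    """Fuzzy membership in [0, 1] for normalized time t in [0, 1].

    Equals 1 at t == peak and falls linearly to 0 at distance `width`.
    (Assumed shape; other membership functions could be substituted.)
    """
    return np.clip(1.0 - np.abs(t - peak) / width, 0.0, 1.0)


def fuzzy_temporal_template(frames, peak, width=0.5, diff_threshold=15):
    """Collapse a (T, H, W) grayscale clip into one (H, W) uint8 image.

    Each pixel receives the largest membership value among the time
    steps at which motion (a large frame difference) occurred there.
    """
    frames = np.asarray(frames, dtype=np.float32)
    num_diffs = frames.shape[0] - 1
    if num_diffs < 1:
        raise ValueError("need at least two frames")

    template = np.zeros(frames.shape[1:], dtype=np.float32)
    for i in range(num_diffs):
        # Binary motion mask from absolute frame differencing.
        motion = np.abs(frames[i + 1] - frames[i]) > diff_threshold
        # Normalized time of this difference image, in [0, 1].
        t = i / max(num_diffs - 1, 1)
        weight = triangular_membership(t, peak, width)
        # Keep the strongest significance seen so far at moving pixels.
        template = np.where(motion, np.maximum(template, weight), template)

    return (255 * template).astype(np.uint8)


if __name__ == "__main__":
    # Toy clip: a single bright pixel moving left to right.
    clip = np.zeros((5, 1, 5), dtype=np.uint8)
    for k in range(5):
        clip[k, 0, k] = 255

    print(fuzzy_temporal_template(clip, peak=1.0, width=1.0))  # recent motion
    print(fuzzy_temporal_template(clip, peak=0.5, width=0.5))  # mid-clip motion
    print(fuzzy_temporal_template(clip, peak=0.0, width=1.0))  # early motion
```

With `peak=1.0` and `width=1.0` the sketch behaves like a conventional motion history image, where recent motion is brightest. Moving `peak` toward 0.5 or 0.0 instead emphasizes motion in the middle or at the start of the clip, which is one plausible reading of templates that highlight "various temporal regions".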

Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Ijjina, E.P. (2020). Action Recognition Using Motion History Information and Convolutional Neural Networks. In: Raju, K., Govardhan, A., Rani, B., Sridevi, R., Murty, M. (eds) Proceedings of the Third International Conference on Computational Intelligence and Informatics. Advances in Intelligent Systems and Computing, vol 1090. Springer, Singapore. https://doi.org/10.1007/978-981-15-1480-7_71

DOI: https://doi.org/10.1007/978-981-15-1480-7_71

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-1479-1

Online ISBN: 978-981-15-1480-7

eBook Packages: Intelligent Technologies and Robotics (R0)