Abstract

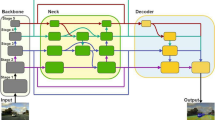

By fusing external sensors such as LiDAR and cameras, intelligent vehicles can perceive their surroundings in detail and in real time. Each type of sensor has characteristics that shape its raw data, so sensors differ in content, precision, range and timing; these inconsistencies complicate environment description and the extraction of useful information for downstream applications. To describe the environment efficiently while preserving the diversity of necessary information, we propose a unified, sensor-independent description model for intelligent vehicle environment perception that contains 3D positions, semantics and time. Spatio-temporal relationships between the different types of collected data are established so that all elements are expressed in the same system. As one potential implementation, we take advantage of LiDAR point clouds and color images to acquire the positions and semantics of environment components. Semantic segmentation based on convolutional neural networks (CNNs) provides rich semantics for the model, which can be further enhanced by fusion with the LiDAR point cloud. We validate the proposed model on the KITTI dataset and on our own collected data, and present qualitative results.
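To make the idea of an element carrying position, semantics and time concrete, the following is a minimal sketch, not the authors' implementation. It assumes the LiDAR points are already expressed in the camera frame, that a 3x4 pinhole projection matrix `P` is available from calibration, and that a per-pixel class map has been produced by some segmentation network. The names `Element` and `label_points` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Element:
    """One environment element: 3D position, semantic label, timestamp."""
    x: float
    y: float
    z: float
    label: int
    t: float


def label_points(
    points: Sequence[Tuple[float, float, float]],
    label_image: Sequence[Sequence[int]],
    P: Sequence[Sequence[float]],
    timestamp: float,
) -> List[Element]:
    """Attach a semantic label and a timestamp to each point visible in the image.

    points      : 3D points in the camera frame (assumption).
    label_image : H x W grid of integer class ids (assumed segmentation output).
    P           : 3 x 4 projection matrix (hypothetical calibration).
    """
    height, width = len(label_image), len(label_image[0])
    elements = []
    for x, y, z in points:
        # Homogeneous pinhole projection of [x, y, z, 1] through P.
        us, vs, depth = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in P)
        if depth <= 0:
            continue  # point lies behind the camera
        u, v = math.floor(us / depth), math.floor(vs / depth)
        if 0 <= u < width and 0 <= v < height:
            elements.append(Element(x, y, z, label_image[v][u], timestamp))
    return elements
```

Points that fall outside the image keep no label in this sketch; a fuller pipeline would need a policy for them, for example combining several cameras or keeping them with an "unknown" class.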

Acknowledgements

This work was supported in part by the National Key Research and Development Program of China under Contract 2018YFB0105000, in part by the National Natural Science Foundation of China under Grant U1864203 and Grant 61773234, and in part by the Beijing Municipal Science and Technology Program under Grant Z181100005918001 and Grant D171100005117002.

Copyright information

© 2021 The Editor(s) (if applicable) and The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wang, S. et al. (2021). A Unified Spatio-Temporal Description Model of Environment for Intelligent Vehicles. In: Proceedings of China SAE Congress 2019: Selected Papers. Lecture Notes in Electrical Engineering, vol 646. Springer, Singapore. https://doi.org/10.1007/978-981-15-7945-5_41

DOI: https://doi.org/10.1007/978-981-15-7945-5_41

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-7944-8

Online ISBN: 978-981-15-7945-5

eBook Packages: Engineering (R0)