Abstract

Previous results suggest that the monitoring of one’s own performance during self-regulated learning is mediated by self-agency attributions and that these attributions can be influenced by poststudy effort-framing instructions. These results pose a challenge to the study of self-agency in metacognition when the objects of self-regulation are mental operations rather than motor actions with observable outcomes. When participants studied items under time pressure in Experiment 1, they invested greater study effort in the easier items in the list. However, the effects of effort framing were the same as when learners typically invest more study effort in the more difficult items: Judgments of learning (JOLs) decreased with effort when instructions biased the attribution of effort to nonagentic sources but increased when they biased attribution to agentic sources. The effects of effort framing were nonetheless constrained by parameters of the study task: Interitem differences in difficulty constrained the attribution of effort to agentic regulation (Experiment 2), whereas interitem differences in the incentive for recall constrained the attribution of effort to nonagentic sources (Experiment 3). The results suggest that the regulation and attribution of effort during self-regulated learning occur within a module that is dissociated from the learner’s superordinate agenda but is sensitive to parameters of the task. A model specifies the stage at which effort framing affects the effort–JOL relationship by biasing the attribution of effort to agentic or nonagentic sources. The potential that metacognition offers for investigating issues of self-agency is discussed.



In everyday life, we normally feel that we are in control of our own actions. When we intend to achieve a certain goal, we have the experience of performing our actions willfully toward the achievement of that goal. Interestingly, we have the same feeling of self-agency when we make an effort to study a new piece of information, to retrieve an item from memory, or to solve a problem. Thus, feelings of agency are associated with many tasks involving metacognitive regulation. Nevertheless, little experimental work exists on the self-agency attributions that occur for tasks in which metacognitive control has few observable outcomes. The present study attempts to explore questions about self-agency in connection with the monitoring and control processes that occur in self-regulated learning.

Agency attributions during self-regulated learning

When learners are allowed control over study time (ST), they usually allocate more ST to the items that are judged to be difficult to remember (Son & Metcalfe, 2000). The typical account of this finding is that learners deliberately invest more ST in the items associated with lower judgments of learning (JOLs) in order to compensate for their difficulty (Dunlosky & Hertzog, 1998). However, the added ST invested in difficult items failed to improve the recall of these items and, what is more, failed to enhance the JOLs associated with these items (Koriat, Ma’ayan, & Nussinson, 2006). To explain what appears to be a “labor-in-vain” effect (Nelson & Leonesio, 1988), Koriat et al. (2006) distinguished between two modes of regulation that have important implications for agency attributions. In self-paced learning, the allocation of ST is typically data driven: Learners spend as much time and effort as the item calls for, and JOLs are based retrospectively on the amount of effort invested, under the heuristic that the more effort needed to study an item, the lower its likelihood of being recalled at test. Therefore, JOLs decrease with increasing ST. Indeed, both the JOLs and recall associated with different items typically decrease with the ST invested in these items (Koriat, 2008; Koriat et al., 2006; Koriat, Nussinson, & Ackerman, 2014; Undorf & Erdfelder, 2013, 2015).

The opposite pattern is observed when the allocation of ST is goal driven, strategically used by the learner to regulate memory performance in accordance with different goals (see Bjork, Dunlosky, & Kornell, 2013; Dunlosky & Hertzog, 1998). Strategic, top-down regulation occurs, for example, when different incentives are attached to the recall of different items (Ariel, Dunlosky, & Bailey, 2009; Dunlosky & Thiede, 1998). In that case, learners allocate more ST to the high-incentive items and report higher JOLs for these items than for the low-incentive items (Castel, Murayama, Friedman, McGillivray, & Link, 2013; Dunlosky & Thiede, 1998; Soderstrom & McCabe, 2011). The result is that JOLs increase with ST across different incentives.

These results suggest that JOLs during self-regulated learning are mediated by agency attributions. First, learners seem to discriminate between the effort that is required by the task at hand and the effort that is due to their own self-agency. They increase their JOLs with study effort when effort is attributed to their own agency, but decrease their JOLs with increased study effort when effort is attributed to external factors. Second, when the amount of effort is conjointly determined by both types of factors (see Muenks, Miele, & Wigfield, 2016), learners seem to parcel out the total amount of effort into its two components, deriving diametrically opposed metacognitive inferences from these components. Thus, the same amount of effort was associated with different levels of JOLs depending on whether it was primarily due to data-driven regulation or to goal-driven regulation (Koriat, Ackerman, Adiv, Lockl, & Schneider, 2014; Koriat et al., 2006).

These results pose a challenge to self-agency theories because it is unclear what cues learners use for distinguishing between data-driven and goal-driven effort. A common assumption in self-agency research is that the experience of agency depends on the congruence between one’s intentions and their outcomes (Frith, Blakemore, & Wolpert, 2000; Haggard & Tsakiris, 2009; Sato & Yasuda, 2005; Wegner, 2002; see Haggard & Eitam, 2015). However, it is unclear how this assumption applies when one’s control has few tangible outcomes.

The question of the cues underlying agency attributions in self-paced learning is beyond the scope of the present study and will have to await further theoretical developments. Here, however, we wish to specify how the monitoring and control processes that occur in studying a particular item fit into the overall organization of the processes involved in self-regulated learning. We examine the hypothesis that the effort regulation and effort attribution that occur during self-regulated learning constitute a facet of self-agency that is specific to task performance and is independent of the overall agenda of the learner. In the area of self-agency, researchers have distinguished among different components of the sense of self-agency that may be partly dissociable. These include volitional choice, the experience of authorship, the sense of control, and the experience of effort (Bayne & Levy, 2006; Frith, 2013; Pacherie, 2007). Chambon, Sidarus, and Haggard (2014) stressed that action selection, which occurs before the action itself, is an important contributor to the sense of agency.

Indeed, according to the agenda-based-regulation framework of self-regulated learning (Ariel et al., 2009; see also Ariel & Dunlosky, 2013; Castel et al., 2013; Thiede & Dunlosky, 1999), learners generally construct an agenda during prestudy planning, and the execution of that agenda controls top down a variety of metacognitive processes during learning. Clearly, the construction and execution of that agenda are an important component of self-agency. We propose, however, that the effort regulation that occurs in studying different items is impervious to the learner’s superordinate agenda and constitutes a separate module of self-agency that is specific to task performance.

This idea can be illustrated by an observation from the study of Thiede and Dunlosky (1999; see also Dunlosky & Thiede, 2004) in which participants first selected the items that they wished to restudy, and then studied these items under self-paced instructions. Of particular interest is a condition in which participants were given an easy goal (e.g., to recall at least 10 items from a list of 30 items). In that condition, participants tended to choose the easier items for restudy. However, during restudy, they invested more time studying the more difficult items among those selected for restudy. Thus, despite the superordinate agenda to prioritize the easier items, the actual allocation of effort to different items was mostly data driven, dictated by interitem differences in difficulty. We argue that JOLs in that condition should also exhibit the same dependence on effort attribution as when the agenda is to focus on the more difficult items.

To examine this hypothesis, we took advantage of the results of Koriat, Nussinson, et al. (2014), which echo the idea in the context of self-agency research (Wegner, 2002, 2003) that the sense of agency is a product of post hoc inference rather than reflecting direct access to one’s conscious will. After studying an item, participants were asked to rate either the amount of study effort that “the item required” (data-driven framing) or the amount of study effort that they “chose to invest” in the item (goal-driven framing). JOLs were found to decrease with rated effort for data-driven framing but to increase with rated effort for goal-driven framing, suggesting that effort attribution during self-paced learning can be biased by poststudy instructions.

The experiments in this study focused on the initial study (rather than restudy) of lists of items and examined the effects of effort framing on the JOL–effort relationship. Experiment 1 used a condition that induced participants to give priority to the study of the easier items in a list. We hypothesized that this condition should yield the same effects of effort framing on the JOL–effort relationship as those observed under conditions in which more ST is invested in the more difficult items. Experiments 2 and 3, in turn, examined the effects of interitem differences on the effectiveness of the effort framing manipulation. Previous studies have indicated that ST is very sensitive to interitem differences in both intrinsic difficulty and incentive. This sensitivity would be expected to constrain the effects of the effort framing manipulation. We expect strong interitem differences in difficulty to constrain the attribution of these differences to goal-driven regulation (Experiment 2). This expectation is based on the assumption that the attribution of differences in intrinsic difficulty to data-driven regulation is partly mandatory. Likewise, interitem differences in incentive should constrain the attribution of these differences to data-driven regulation (Experiment 3). These constraints should be reflected in the JOL–effort relationships. Results consistent with these predictions would suggest that the regulation of effort and the attribution of effort to data-driven and goal-driven sources constitute a facet of self-agency that is specific to task performance: They should be relatively independent of the learner’s agenda but sensitive to the characteristics of the studied material.

Experiment 1

In Experiment 1, participants studied a list of items under time pressure. Consistent with previous studies, we expect time pressure to induce the allocation of more ST to the easier items in a list (Metcalfe, 2002; Son & Metcalfe, 2000). The agenda to prioritize the easier items is goal driven and, indeed, was found to yield a pattern in which JOLs increase with ST (Koriat et al., 2006). We expect, however, that when learners are probed to attribute study effort to data-driven regulation, JOLs should nevertheless decrease with the amount of effort invested in the item. Thus, the effects of effort attribution on the JOL–effort relationship should be the same as those observed under typical conditions (without time pressure) in which greater effort is invested in the more difficult items.

Experiment 1 also attempted to distinguish between two control processes: the choice of items for continued study and the regulation of effort. Each of these is expected to contribute to JOLs increasing with ST. However, as suggested in the context of self-agency research, these control processes may constitute different components of the sense of agency. The experiment included two conditions. In the differential-incentive condition, participants received a 1-point bonus for half the items and a 5-point bonus for the remaining items, whereas in the constant-incentive condition participants received a 3-point bonus for each item. Half of the items were easy and half were difficult.

Method

Participants and stimulus materials

Ninety-six Hebrew-speaking University of Haifa undergraduates (77 women) participated in the experiment, 24 for course credit and 72 for pay, divided equally between the constant and differential conditions. The assignment of items to different incentives was counterbalanced across participants in the differential condition. In each incentive condition, participants were divided randomly so that half of them received data-driven framing instructions and the rest received goal-driven framing instructions. The determination of sample size in this and the following experiments was based on the sample sizes of previous studies (e.g., Koriat, Ackerman, et al., 2014; Koriat et al., 2006; Koriat, Nussinson, et al., 2014).

The study materials were those used in Experiment 6 of Koriat et al. (2006). Twenty sets of stimuli were used, each consisting of six Hebrew words. Half of the sets (easy sets) were composed of words that belonged to a common semantic domain (e.g., newspaper, note, letter, library, poem, translation), whereas the other sets (difficult sets) consisted of unrelated words (e.g., road, joke, computer, cup, box, glue). For each set, a test item consisting of five words was constructed by eliminating one of the words in that set. For the differential condition, half of the items in each difficulty category were assigned a 1-point incentive for recall, and half were assigned a 5-point incentive, with the assignment counterbalanced across participants. In addition, two items were used for practice at the beginning of the study list.

Apparatus and procedure

The experiment was conducted on a personal computer. In a practice block, participants studied four short stories. They were told to assume that they were studying for an exam in which some of the items were more important to remember than others. The importance of each item would be marked by an incentive value representing the number of points earned for correct recall. The four stories were presented in turn with an incentive value marked at the top: 1 (in blue) or 5 (in red) for the differential condition, and 3 (in black) for the constant condition. Participants were instructed to study each story as long as they needed, taking into account its associated incentive, and to press a key when they were through studying.

When participants pressed the key, the story disappeared. The goal-driven participants rated the amount of study effort they had chosen to invest in the paragraph on a vertical scale, with buttons marked from 1 (I chose to invest little study) to 9 (I chose to invest a great deal of study). In contrast, the data-driven participants rated the amount of study effort that the paragraph required, using a similar scale from 1 (The paragraph required little study) to 9 (The paragraph required a great deal of study). The rating scale was then replaced with a JOL question: “Chances to answer correctly (0%–100%)?” Participants indicated the chances that they would be able to answer correctly a question about the paragraph by sliding a pointer on a horizontal slider using the mouse. At the end of the study task, participants were presented with four open-ended test questions, one about each paragraph.

For the experiment proper, participants were instructed to study word sets consisting of six words each, so that when presented with five of them, they would be able to recall the missing sixth word. They were told that the importance of each set would be indicated by an incentive value (as in the practice task). They were informed that some of the sets were easier than others and were warned that there was little chance that they would be able to study all the items during the time allotted. To create time pressure, they were led to believe that the study list included 40 sets but that, because they would have only 15 minutes for study, they were unlikely to see all the sets. Because their task was to gain as many points as possible, they were told to avoid spending too much time on any one item. To maintain severe time pressure throughout the study phase, two counters displayed the amount of time and the number of items allegedly remaining (see Koriat et al., 2006, Experiment 6). Participants were told that only the time used for study proper would be subtracted from the “allotted” time. In actuality, however, the study phase ended when participants finished studying the 22 sets.

In each study trial, the incentive value (1, 3, or 5) appeared on the screen for 1 s, after which the set was presented. When participants pressed the left mouse button to indicate the end of study, the study set was replaced by a vertical effort-rating scale (similar to the one used in the practice phase), and participants made their rating as they had done in the practice phase. The vertical scale was then replaced with the question “Chances to recall (0%–100%)?” and participants indicated their JOLs (the chance of recalling the missing word). In the test phase, the test items were presented in turn until the participant announced the missing word or until 20 s had elapsed.

Results

The allocation of ST

Responses for which ST was more than 2.5 standard deviations (SDs) below or above each participant’s mean were eliminated from all analyses (0.89% across all participants). ST was not affected by the effort framing manipulation.
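The trimming rule can be expressed compactly. The following is a minimal Python sketch, not the authors’ analysis code: it assumes that each trial is a dict with the hypothetical keys `participant` and `st` (study time in seconds), and it uses the sample SD, because the text does not say which SD was used.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_outliers(trials, criterion=2.5):
    """Drop trials whose study time lies more than `criterion` SDs below or
    above the mean study time of the same participant.

    `trials` is a list of dicts with the hypothetical keys "participant" and
    "st". Returns the kept trials and the proportion of trials removed.
    """
    # Collect each participant's study times.
    st_by_participant = defaultdict(list)
    for trial in trials:
        st_by_participant[trial["participant"]].append(trial["st"])

    # Per-participant bounds (assumption: sample SD, not population SD).
    bounds = {}
    for pid, sts in st_by_participant.items():
        m, sd = mean(sts), stdev(sts)
        bounds[pid] = (m - criterion * sd, m + criterion * sd)

    kept = [t for t in trials
            if bounds[t["participant"]][0] <= t["st"] <= bounds[t["participant"]][1]]
    return kept, 1 - len(kept) / len(trials)


# Example: one participant with 19 ordinary trials and one very long trial.
trials = [{"participant": 1, "st": 10.0} for _ in range(19)]
trials.append({"participant": 1, "st": 100.0})
kept, removed = trim_outliers(trials)
print(len(kept))  # 19 of 20 trials kept
```

Computing the bounds per participant, rather than over the pooled data, keeps slow and fast learners from being trimmed simply for their overall pace.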

As expected, more ST was allocated to the easy items than to the difficult items (27.87 s and 22.74 s, respectively, for the constant condition, and 25.98 s and 23.21 s, respectively, for the differential condition). A Difficulty × Condition analysis of variance (ANOVA) yielded a significant effect only for difficulty, F(1, 94) = 18.97, MSE = 39.49, p < .0001, \( {\eta}_p^2 \) = 0.17.

Assuming that the effects of difficulty derive in part from a quick decision to discontinue studying the more difficult items (see Undorf & Ackerman, 2017), we identified for each participant the items whose ST was more than 1 SD below his or her mean ST. For the constant condition, the percentage of such “fast” responses averaged 18.33 for the difficult items and 8.33 for the easy items, t(47) = 3.02, p < .005, d = 0.44. For the differential condition, the respective means were 20.21 and 11.46, t(47) = 2.65, p < .05, d = 0.38. These results are consistent with the idea that fast responses represented an attempt to avoid investing precious ST in studying difficult items.
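Since each participant saw only 10 easy and 10 difficult sets, these values are best computed per participant as percentages of the items in each category. The sketch below shows one way to do this for a single participant; it assumes that “fast” means an ST more than 1 SD below that participant’s mean ST, and the dict keys `st` and `difficulty` are hypothetical.

```python
from statistics import mean, stdev


def fast_response_percentages(trials, category_key="difficulty"):
    """Percentage of 'fast' trials within each category for one participant.

    A trial counts as fast if its study time ("st") is more than 1 SD below
    the participant's mean study time. Keys are hypothetical.
    """
    sts = [t["st"] for t in trials]
    cutoff = mean(sts) - stdev(sts)

    fast, total = {}, {}
    for t in trials:
        level = t[category_key]
        total[level] = total.get(level, 0) + 1
        fast[level] = fast.get(level, 0) + (t["st"] < cutoff)  # bool counts as 0/1
    return {level: 100 * fast[level] / total[level] for level in total}


# Example: this participant abandoned two difficult sets quickly (5 s).
trials = ([{"difficulty": "easy", "st": 30.0}] * 10
          + [{"difficulty": "difficult", "st": 30.0}] * 8
          + [{"difficulty": "difficult", "st": 5.0}] * 2)
print(fast_response_percentages(trials))  # {'easy': 0.0, 'difficult': 20.0}
```

The per-participant percentages for the two categories would then be compared with a paired t test across participants; passing `category_key="incentive"` gives the analogous incentive comparison.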

In addition, for the differential condition, the percentage of fast responses averaged 5.83 for the high-incentive items and 25.83 for the low-incentive items, t(47) = 7.46, p < .0001, d = 1.08, suggesting that incentive also affected the decision whether to continue studying an item.
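The effect sizes reported here appear consistent with the standard conversions from t to Cohen’s d: d = t/√n for a within-participant (paired) comparison with n = df + 1 participants, and d ≈ 2t/√df for a comparison between two groups of similar size. The text does not state which formula was used, so the sketch below is only a consistency check on the reported values.

```python
from math import sqrt


def d_within(t, df):
    """Cohen's d_z for a paired or one-sample t test with n = df + 1."""
    return t / sqrt(df + 1)


def d_between(t, df):
    """Approximate Cohen's d for two independent groups of similar size."""
    return 2 * t / sqrt(df)


# Paired comparisons reported for Experiment 1 (48 participants, df = 47).
for t in (3.02, 2.65, 7.46):
    print(round(d_within(t, 47), 2))  # prints 0.44, 0.38, 1.08 on separate lines
```

The between-group version can be checked the same way: `d_between(2.90, 77)` rounds to 0.66, the value reported for the comparison of data-driven and goal-driven participants in Experiment 2.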

The relationship between ST and JOL

JOLs increased rather than decreased with ST. Across the two framing conditions, JOLs for the constant condition averaged 34.20 for items with below-median ST and 49.47 for items with above-median ST, t(47) = 4.87, p < .0001, d = 0.70. This increase was about the same as that observed for the differential condition between the low-incentive items (35.42) and the high-incentive items (50.93), t(47) = 5.50, p < .0001, d = 0.79.

We examined the possibility that the increase observed for the constant condition was due partly to the choice of the easier items for continued study. On this account, the increase primarily reflects interitem differences, namely, the tendency of the easier items to be associated with higher JOLs. In contrast, the increase in JOLs with increased incentive should be due mostly to the goal-driven regulation of effort.

To examine this idea, we calculated for each participant in the constant condition the percentage of easy items among the items receiving long (above median) ST and among those receiving short (below median) ST. Among the long ST items, 60.1% of the items on average were easy items, whereas among the short ST items, only 39.9% were easy items, t(47) = 3.60, p < .001, d = 0.52. Thus, the choice of the easier items for continued study possibly contributed to the higher JOLs for these items. For the differential condition, in contrast, the increase in JOL with ST was additionally due to goal-driven regulation because easy and difficult items were equally represented in each incentive condition.
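The composition check amounts to a median split on ST followed by a count of easy items in each half. The following is a minimal sketch for one participant; it assumes the hypothetical keys `st` and `difficulty` and assigns trials tied with the median to the short half, because the text does not say how ties were handled.

```python
from statistics import median


def percent_easy_by_st_half(trials):
    """Return (% easy among long-ST trials, % easy among short-ST trials)."""
    cut = median([t["st"] for t in trials])
    long_half = [t for t in trials if t["st"] > cut]
    short_half = [t for t in trials if t["st"] <= cut]  # ties go to the short half

    def percent_easy(group):
        return 100 * sum(t["difficulty"] == "easy" for t in group) / len(group)

    return percent_easy(long_half), percent_easy(short_half)


trials = [{"st": s, "difficulty": d} for s, d in
          [(30, "easy"), (25, "easy"), (12, "easy"),
           (28, "difficult"), (20, "difficult"), (10, "difficult")]]
long_pct, short_pct = percent_easy_by_st_half(trials)
print(round(long_pct, 1), round(short_pct, 1))  # 66.7 33.3
```

If every trial ties with the median, the long half is empty and the function raises `ZeroDivisionError`. Such a participant would have no value for that cell.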

The effects of effort framing on JOLs

We now test the hypothesis that the effects of effort framing are impervious to the superordinate agenda to choose the easier items for continued study. We analyzed the results in the same way as in the previous study (Koriat, Nussinson, et al., 2014), dividing items for each participant at the median of effort rating into low and high effort ratings. Figure 1 presents mean JOL as a function of mean effort rating for the low and high effort ratings. The results are plotted separately for the data-driven condition (Fig. 1a) and the goal-driven condition (Fig. 1b; see Footnote 1).
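The per-participant median split can be sketched as follows. This is a minimal illustration with the hypothetical keys `effort` and `jol`. Trials tied with the median are assigned to the low half, which is an assumption. When all of a participant’s ratings are identical, the high half is empty, which is one way a participant can end up without a mean for every cell.

```python
from statistics import mean, median


def median_split(trials, split_key="effort", outcome_key="jol"):
    """Split one participant's trials at the median of `split_key` and return
    {half: (mean split_key, mean outcome_key)} for each non-empty half."""
    cut = median([t[split_key] for t in trials])
    halves = {
        "low": [t for t in trials if t[split_key] <= cut],  # ties go low
        "high": [t for t in trials if t[split_key] > cut],
    }
    return {
        label: (mean([t[split_key] for t in group]),
                mean([t[outcome_key] for t in group]))
        for label, group in halves.items()
        if group  # a half is empty when every rating equals the median
    }


# Example (data-driven pattern): JOLs fall as rated effort rises.
trials = [{"effort": e, "jol": j}
          for e, j in zip([1, 2, 3, 4, 5, 6], [80, 70, 60, 50, 40, 30])]
split = median_split(trials)
# low half: mean effort 2, mean JOL 70; high half: mean effort 5, mean JOL 40
```

With `split_key="st"` the same function yields the below-median and above-median ST comparison. The resulting cell means would then be averaged across participants before the ANOVA.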

Fig. 1 Mean judgment of learning (JOL) as a function of mean effort rating for below-median and above-median effort ratings in the constant and differential conditions. The results are presented for the data-driven condition (a) and for the goal-driven condition (b) (Experiment 1). Error bars represent +1 SEM

The overall pattern was similar across the constant and differential conditions: A three-way ANOVA, Incentive Condition (constant vs. differential) × Framing Condition (data driven vs. goal driven) × Effort Rating (low vs. high), using 89 participants who had means for all cells, yielded a nonsignificant triple interaction, F(1, 85) = 1.24, MSE = 303.30, p < .28, \( {\eta}_p^2 \) = 0.01. The only significant effect was the interaction between framing condition and effort rating, F(1, 85) = 87.58, MSE = 303.30, p < .0001, \( {\eta}_p^2 \) = 0.51. Across the two incentive conditions, JOLs increased with effort ratings for goal-driven framing, t(45) = 9.06, p < .0001, d = 1.34, an increase that was significant for the constant condition, t(22) = 5.48, p < .0001, d = 1.14, as well as for the differential condition, t(22) = 7.46, p < .0001, d = 1.56. In contrast, for the data-driven framing, JOLs decreased significantly with effort ratings, t(42) = 4.87, p < .0001, d = 0.74; the decrease was significant for the constant condition, t(19) = 2.99, p < .01, d = 0.67, as well as for the differential condition, t(22) = 3.84, p < .001, d = 0.80.

The interactive pattern depicted in Fig. 1 is also reflected in the within-person correlation between rated effort and JOL, which was calculated across the constant and differential conditions. This correlation averaged +.68, t(47) = 12.36, p < .0001, for the goal-driven condition, and −.49, t(47) = 6.25, p < .0001, for the data-driven condition.
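A within-person correlation is computed separately for each participant across his or her items, and the mean coefficient is then tested against zero. The sketch below does this with a plain Pearson r and a one-sample t test. The text does not say whether a Fisher z transformation was applied before averaging, so none is applied here. Participants whose ratings or JOLs never vary have no defined r and are skipped, which is also an assumption.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(xs, ys):
    """Pearson correlation; None if either variable has zero variance."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return None
    return sxy / sqrt(sxx * syy)


def mean_within_person_r(data):
    """`data` maps participant -> (effort_ratings, jols).

    Returns (mean r, one-sample t against 0, df)."""
    rs = [r for r in (pearson_r(e, j) for e, j in data.values()) if r is not None]
    m = mean(rs)
    t = m / (stdev(rs) / sqrt(len(rs)))
    return m, t, len(rs) - 1


data = {
    "p1": ([1, 2, 3], [90, 60, 30]),  # r = -1
    "p2": ([1, 2, 3], [60, 60, 30]),  # r ≈ -0.87
    "p3": ([4, 4, 4], [50, 60, 70]),  # constant ratings: skipped
}
m, t, df = mean_within_person_r(data)
```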

Discussion

The results of Experiment 1 suggest that the learners’ agenda exerted a strategic, top-down influence on the processing that took place during learning: Learners avoided spending precious time studying the more difficult items (choice) and invested more ST in the study of the easier items (regulation). Nevertheless, the effects of effort framing were in the same direction as in Koriat, Nussinson, et al. (2014), which did not involve time pressure. In particular, JOLs decreased significantly with rated effort for the data-driven framing condition. Thus, effort attribution seems to operate like a closed-loop module in which the calculation of self-agency reflects the specific item–learner interaction in attempting to commit the items to memory. Indeed, previous results suggest that JOLs are insensitive to some of the major factors that affect memory: They fail to reflect the fact that learning improves with repeated presentations or the fact that memory declines with time (Koriat, Bjork, Sheffer, & Bar, 2004; Kornell & Bjork, 2009). Rather, JOLs rely primarily on the mnemonic cues that derive from the study of a particular item and are sensitive to the interitem differences within the studied list.

Experiment 2

In Experiments 2 and 3, we used a typical self-paced study task (i.e., with no time pressure) but manipulated the structure of the study list. In Experiment 2, the list included paired associates that differed in intrinsic difficulty, whereas in Experiment 3, the pairs differed in the incentive for recall. Because ST regulation is typically responsive to interitem differences in both difficulty and incentive, we expect these differences to constrain the effects of effort framing. In Experiment 2, participants are expected to have limited success attributing interitem differences in difficulty to variations in their own self-agency, whereas in Experiment 3 they are expected to have limited success attributing interitem differences in incentive to data-driven regulation. Results consistent with this pattern would reinforce the idea that effort regulation and attribution are tied to task performance: They are impervious to the superordinate agenda but sensitive to the characteristics of the studied material.

Method

Participants and stimulus materials

Eighty Hebrew-speaking University of Haifa undergraduates (15 males and 65 females) participated in the experiment, 47 for pay and 33 for course credit. They were divided randomly between the goal-driven and the data-driven conditions of the experiment.

A list of 60 Hebrew paired associates (from Koriat et al., 2006) was used, with a wide range of associative strength between the members of each pair. For 30 pairs (related), associative strength was greater than zero according to Hebrew word-association norms and ranged from .012 to .635 (M = .144). The remaining 30 pairs (unrelated) were formed such that the two members were judged intuitively as unrelated.

Apparatus and procedure

The apparatus was the same as in Experiment 1. The practice block and the experimental procedure were the same as in Experiment 1 of Koriat, Nussinson, et al. (2014). Briefly, participants studied the 60 paired associates under self-paced instructions. When they signaled that they had finished studying a given pair, a vertical effort-rating scale appeared, as in Experiment 1, which differed for the data-driven and goal-driven participants. The participants rated their degree of effort and then indicated their JOLs on a 0%–100% scale by sliding a pointer on a horizontal slider.

Results

The relationship between ST and effort rating

Responses with ST more than 2.5 SDs below or above each participant’s mean were eliminated from the analyses (2.42% of all responses). ST averaged 9.30 s for the data-driven condition and 9.90 s for the goal-driven condition, t(78) = 0.60, p < .56, d = 0.14.

Effort ratings increased with ST, but the increase was more moderate for the goal-driven condition. For the data-driven condition, effort ratings increased from 3.11 for below-median STs to 5.44 for above-median STs. The respective increase for the goal-driven condition was from 3.72 to 5.21. A Condition × ST (short vs. long) ANOVA yielded F < 1 for condition, and F(1, 78) = 275.43, MSE = 0.53, p < .0001, \( {\eta}_p^2 \) = 0.78, for ST. The interaction, however, was significant, F(1, 78) = 13.41, MSE = 0.53, p < .0005, \( {\eta}_p^2 \) = 0.15. These results differ from those previously reported (Koriat, Nussinson, et al., 2014), in which the effects of ST on rated effort were very similar for the two conditions. It would seem that the use of items that differed in difficulty in the present experiment constrained the attribution of ST to goal-driven regulation, as will be shown later.

The relationship between effort rating and JOLs

Figure 2 presents mean JOLs as a function of effort rating, comparing trials with below median (low) and those with above median (high) effort ratings. A Condition × Rated Effort (low effort vs. high effort) ANOVA yielded F < 1 for condition; F(1, 78) = 113.13, MSE = 103.67, p < .0001, \( {\eta}_p^2 \)= 0.59, for effort rating; and F(1, 78) = 39.12, MSE = 103.67, p < .0001, \( {\eta}_p^2 \)= 0.33, for the interaction. JOLs decreased with rated effort for both conditions, but the decrease was more moderate for the goal-driven condition. This difference is also reflected in the within-person correlation between JOL and rated effort, which averaged −.74 for the data-driven condition, and −.25 for the goal-driven condition, t(78) = 6.40, p < .0001, d = 1.46.

Fig. 2 Mean judgments of learning (JOLs) for the data-driven and goal-driven effort framing conditions for below-median (low) and above-median (high) effort ratings: (a) results across all items; (b) results for the related items; (c) results for the unrelated items (Experiment 2). Error bars represent +1 SEM

We repeated the analysis separately for the related and unrelated items (using 78 participants who had means for all cells, and dividing responses into the two levels of effort ratings separately for related and unrelated items for each condition). For the related items (Fig. 2b), the results were similar to those obtained across all items: JOLs decreased with rated effort for the data-driven condition, t(38) = 10.60, p < .0001, d = 1.70, as well as for the goal-driven condition, t(38) = 4.09, p < .0005, d = 0.67. In contrast, for the unrelated items (Fig. 2c), JOLs decreased with rated effort for the data-driven condition, t(38) = 9.89, p < .0001, d = 1.58, but tended to increase with rated effort for the goal-driven condition, t(38) = 1.74, p < .10, d = 0.28. A two-way ANOVA that compared the effects of effort rating (low vs. high) for related and unrelated items in the goal-driven condition yielded F < 1, for effort rating; F(1, 38) = 86.14, MSE = 132.18, p < .0001, \( {\eta}_p^2 \) = 0.69, for relatedness; but F(1, 38) = 32.93, MSE = 30.67, p < .0001, \( {\eta}_p^2 \) = 0.46, for the interaction.

The difference between the results for the related and unrelated items possibly stems from the fact that the related items represented a wider range of pair relatedness as measured by associative strength, whereas associative strength was very weak for all the unrelated pairs. These results suggest that the effects of effort framing were constrained by the existence of even small between-item differences in intrinsic item difficulty.

The combined effects of effort framing and item difficulty

We examined the effects of item difficulty by comparing the results for the related and unrelated items. Figures 3a and 3b present the results for ST and effort rating, respectively. A Condition × Relatedness (related vs. unrelated) ANOVA on ST yielded a nonsignificant interaction, F(1, 78) = 1.78, MSE = 7.16, p < .20, \( {\eta}_p^2 \) = 0.02, whereas the same ANOVA on effort rating yielded a significant interaction, F(1, 78) = 35.68, MSE = 0.50, p < .0001, \( {\eta}_p^2 \) = 0.31. These results suggest that differences in ST between the related and unrelated items were attributed to data-driven effort to a lesser extent in the goal-driven framing condition than in the data-driven framing condition.

Fig. 3 Mean study time (a), effort rating (b), and JOL (c) for the related and unrelated items in the data-driven and goal-driven conditions (Experiment 2). Error bars represent +1 SEM

Finally, to complete the picture, Fig. 3c presents the results for JOLs. A Condition × Relatedness ANOVA yielded F < 1, for condition, and F(1, 78) = 228.03, MSE = 76.34, p < .0001, \( {\eta}_p^2 \)= 0.75, for relatedness, but the interaction was significant, F(1, 78) = 4.77, MSE = 76.34, p < .05, \( {\eta}_p^2 \)= 0.06. JOLs were higher for the related items than for the unrelated items, but the difference was smaller for the goal-driven condition.

We return to the idea that differences in ST that are associated with relatedness were attributed to a lesser extent to data-driven effort in the goal-driven condition. This idea is brought to the fore in Fig. 4, which plots the overall effect of relatedness on effort rating (mean effort rating for unrelated items minus mean effort rating for related items) as a function of the effects of relatedness on ST (mean ST for unrelated items minus mean ST for related items). It can be seen that the increase in ST that derives from increased item difficulty resulted in a smaller increase in rated effort in the goal-driven condition than in the data-driven condition. The increase in rated effort for each added second of ST that was due to relatedness averaged 0.225 for the goal-driven condition, and 0.382 for the data-driven condition. Excluding one participant for whom the ratio was inordinately high (66.01, because of very small differences in ST between related and unrelated items), this ratio averaged 0.66 (SD = 0.90) for the data-driven participants, and 0.23 (SD = 0.28) for the goal-driven participants, t(77) = 2.90, p < .005, d = 0.66.
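Two kinds of summary appear in the preceding paragraph. The values 0.225 and 0.382 appear to be group-level ratios (the mean effect of relatedness on effort rating divided by its mean effect on ST, i.e., the slopes implied by Fig. 4), whereas 0.66 and 0.23 average participant-level ratios; this reading is inferred from the reported numbers rather than stated in the text. The Python sketch below contrasts the two computations on hypothetical data and shows why a participant with a near-zero ST difference can dominate the mean of individual ratios. The function names, the toy values, and the `max_abs_ratio` cutoff are illustrative assumptions, since the article excluded a single participant rather than applying a stated rule.

```python
from statistics import mean, stdev

# Hypothetical per-participant means (not data from the article):
# ST in seconds and effort ratings for related (rel) and unrelated (unrel) items.
toy_group = [
    {"st_rel": 8.0, "st_unrel": 12.0, "eff_rel": 4.0, "eff_unrel": 5.0},
    {"st_rel": 9.0, "st_unrel": 11.0, "eff_rel": 4.5, "eff_unrel": 5.5},
    {"st_rel": 10.0, "st_unrel": 10.25, "eff_rel": 4.0, "eff_unrel": 5.0},
]


def relatedness_effects(p):
    """Return (delta_st, delta_effort), each computed as unrelated minus related."""
    return p["st_unrel"] - p["st_rel"], p["eff_unrel"] - p["eff_rel"]


def ratio_of_mean_differences(group):
    """Group-level index: mean effort difference divided by mean ST difference."""
    effects = [relatedness_effects(p) for p in group]
    return mean(d_eff for _, d_eff in effects) / mean(d_st for d_st, _ in effects)


def mean_of_individual_ratios(group, max_abs_ratio=None):
    """Average the per-participant ratios delta_effort / delta_st.

    A participant whose ST difference is tiny yields a huge ratio; the
    optional max_abs_ratio cutoff (a hypothetical parameter) drops such cases.
    Assumes no participant has a delta_st of exactly zero.
    """
    ratios = []
    for p in group:
        d_st, d_eff = relatedness_effects(p)
        ratio = d_eff / d_st
        if max_abs_ratio is None or abs(ratio) <= max_abs_ratio:
            ratios.append(ratio)
    return mean(ratios), stdev(ratios), len(ratios)


print(ratio_of_mean_differences(toy_group))  # about 0.48
print(mean_of_individual_ratios(toy_group))  # inflated by the third participant
print(mean_of_individual_ratios(toy_group, max_abs_ratio=1.0))
```

In the toy data, the third participant’s ST difference of 0.25 s produces a ratio of 4.0, which pulls the mean of individual ratios well above the group-level ratio; dropping that participant brings the two summaries closer.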

The overall effect of relatedness on effort rating (mean effort rating for unrelated items minus mean effort rating for related items) as a function of the effects of relatedness on study time (mean ST for unrelated items minus mean ST for related items) for the goal-driven and data-driven conditions (Experiment 2)

The effects of effort rating on JOLs for the related and unrelated items

We now examine the hypothesis that the effects of effort framing on the JOL–effort relationship are entirely accounted for by the effects of effort framing on effort attribution. Figure 5 plots the function relating mean JOL and mean effort rating for the related items to mean JOL and mean effort rating for the unrelated items. This function is plotted separately for the data-driven and goal-driven conditions. It can be seen that the difference between related and unrelated items was smaller for the goal-driven than for the data-driven condition, as noted earlier. However, there is no indication that the slope of the function is any shallower for the goal-driven condition. The decrease in JOLs for each added unit of effort rating that was due to differences in relatedness was 13.8 for the goal-driven condition and 9.1 for the data-driven condition. The implication is that, given that differences in item difficulty constrained the attribution of differences in ST to goal-driven regulation, these differences did not exert additional constraints on the effects of rated effort on JOLs. The results highlight the idea that metacognitive monitoring rests heavily on agency attributions.
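The slope in Fig. 5 is the change in mean JOL divided by the change in mean effort rating between the related and unrelated items within a condition. A minimal Python sketch, using hypothetical condition means rather than the plotted values, shows how two conditions can differ in the size of the relatedness effect on effort ratings while sharing the same JOL change per unit of effort, which is the pattern the argument above relies on. The function name is illustrative; the same computation would apply to the high- and low-incentive points of Experiment 3.

```python
def jol_change_per_effort_unit(related, unrelated):
    """Slope of the line joining two (mean effort rating, mean JOL) points.

    Each argument is a tuple for one condition; the result is the change in
    JOL (percentage points) per added unit of effort rating.
    """
    (effort_rel, jol_rel), (effort_unrel, jol_unrel) = related, unrelated
    if effort_unrel == effort_rel:
        raise ValueError("effort ratings must differ to define a slope")
    return (jol_unrel - jol_rel) / (effort_unrel - effort_rel)


# Hypothetical condition means (not the values plotted in Fig. 5):
# the second condition shows a smaller relatedness effect on effort ratings,
# yet the same JOL change per unit of effort.
wide = jol_change_per_effort_unit((4.0, 50.0), (5.0, 40.0))
narrow = jol_change_per_effort_unit((4.5, 45.0), (5.0, 40.0))
print(wide, narrow)  # -10.0 -10.0
```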

The function relating mean JOL and mean effort rating for related items to mean JOL and mean effort rating for unrelated items. The function is plotted separately for the data-driven and goal-driven conditions (Experiment 2). Error bars represent +1 SEM

Recall performance

Recall (see Fig. 6) yielded the same interactive pattern as that observed for JOLs (Fig. 2a). A two-way ANOVA yielded F < 1, for condition; F(1, 78) = 72.41, MSE = 94.62, p < .0001, \( {\eta}_p^2 \)= 0.48, for rated effort; and F(1, 78) = 23.52, MSE = 94.62, p < .0001, \( {\eta}_p^2 \)= 0.23, for the interaction. Recall decreased with rated effort for the data-driven condition, t(39) = 8.58, p < .0001, d = 1.36, as well as for the goal-driven condition, t(39) = 2.92, p < .01, d = 0.46, but the decrease was more moderate for the goal-driven condition.

Mean percentage recall for below-median (low) and above-median (high) effort rating for the data-driven and goal-driven effort framing conditions (Experiment 2). Error bars represent +1 SEM

Discussion

Experiment 2 confirmed the effects of effort framing, consistent with the view that the sense of self-agency can be based on postdictive inference (Wegner, 2002). However, the effects of effort framing did not hold across the board. Specifically, interitem differences in intrinsic difficulty constrained the effects of goal-driven framing on the ST–JOL relationship. Possibly, strong interitem differences in memorizing effort invite data-driven attribution (Koriat et al., 2006) and constrain learners’ ability to attribute these differences to their own agency.

Experiment 3

Experiment 3, in turn, examined the hypothesis that data-driven attribution is constrained by interitem differences in incentive. Unrelated paired associates were used, but different items were associated with different incentives for recall. Learners were expected to invest greater effort in studying high-incentive than low-incentive items and, in parallel, to give higher JOLs to the high-incentive items. Can data-driven effort framing reverse this trend, yielding lower JOLs for the high-incentive items than for the low-incentive items?

Method

Participants

Eighty Hebrew-speaking University of Haifa undergraduates (27 males) participated in the experiment, 76 for pay and four for course credit. They were divided randomly between the goal-driven and the data-driven conditions.

Apparatus, procedure, and materials

The apparatus and procedure were the same as in Experiment 2 with two exceptions. First, the list consisted of 60 unrelated paired associates (the same as in Koriat, Nussinson, et al., 2014, Experiment 1). Second, incentive was manipulated between items so that half of the items were associated with an incentive of 1 (low), and the remaining items were associated with an incentive of 3 (high). The instructions were similar to those for the differential condition of Experiment 1, with the exception that no time pressure was imposed. The practice block was similar to that of Experiment 1 but included differences in incentive as well as instructions that asked participants to regulate their study effort according to the designated incentive. The procedure was similar to that of Experiment 2, except that each trial began with the presentation of the incentive value at the top of the screen, and the word pair was added on the screen 50 s later.

Because the list of items used in this study had been shown to yield reliable interitem differences in ST in the previous study (see Table 1 in Koriat, Nussinson, et al., 2014), these pairs were divided into two groups that were matched in terms of mean ST in that study. The assignment of incentive to each set of word pairs was counterbalanced across all participants in both the data-driven condition and the goal-driven condition.
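The article states only that the items were divided into two ST-matched sets and that the assignment of incentive to sets was counterbalanced. One way to implement such a design is sketched below in Python, under two assumptions that are ours rather than the article’s: items are ranked by their prior mean ST and dealt out in an A-B-B-A pattern, and successive participants within each framing condition alternate which set carries the high incentive (3) and which the low incentive (1). Item names, ST values, and function names are hypothetical.

```python
from statistics import mean


def matched_split(prior_st):
    """Split items into two sets with similar mean ST from an earlier study.

    prior_st maps item id -> mean study time. Items are ranked by ST and
    dealt out in an A-B-B-A pattern so that, within each block of four
    adjacent ranks, both sets receive one faster and one slower item.
    """
    ranked = sorted(prior_st, key=prior_st.get)
    set_a, set_b = [], []
    for rank, item in enumerate(ranked):
        (set_a if rank % 4 in (0, 3) else set_b).append(item)
    return set_a, set_b


def incentive_assignment(participant_index, set_a, set_b, low=1, high=3):
    """Alternate, across successive participants, which set carries the high incentive."""
    high_set, low_set = (set_a, set_b) if participant_index % 2 == 0 else (set_b, set_a)
    incentives = {item: high for item in high_set}
    incentives.update({item: low for item in low_set})
    return incentives


# Hypothetical prior STs (seconds) for eight word pairs.
toy_prior_st = {"pair1": 10.0, "pair2": 12.0, "pair3": 14.0, "pair4": 16.0,
                "pair5": 11.0, "pair6": 13.0, "pair7": 15.0, "pair8": 17.0}
set_a, set_b = matched_split(toy_prior_st)
print(set_a, mean(toy_prior_st[i] for i in set_a))
print(set_b, mean(toy_prior_st[i] for i in set_b))
```

Indexing participants separately within each framing condition keeps the two incentive assignments equally frequent in both conditions.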

Results

The relationship between ST and effort rating

Responses with STs more than 2.5 SDs below or above each participant’s mean were eliminated from the analyses (2.33%). ST averaged 12.77 s for the data-driven condition and 12.65 s for the goal-driven condition, t(78) = 0.1, p < .95, d = 0.02.
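The trimming rule above is applied separately for each participant. A minimal Python sketch follows, assuming the sample SD and inclusive cutoffs (the article specifies neither); the function name and the trials are hypothetical.

```python
from statistics import mean, stdev


def trim_study_times(trials, criterion=2.5):
    """Drop trials whose ST is more than `criterion` SDs from that participant's mean.

    trials: list of dicts with "participant" and "st" (seconds) keys.
    Uses the sample SD; each participant needs at least two trials.
    Returns the kept trials and the percentage of trials removed.
    """
    sts_by_participant = {}
    for trial in trials:
        sts_by_participant.setdefault(trial["participant"], []).append(trial["st"])

    bounds = {}
    for pid, sts in sts_by_participant.items():
        m, sd = mean(sts), stdev(sts)
        bounds[pid] = (m - criterion * sd, m + criterion * sd)

    kept = [t for t in trials
            if bounds[t["participant"]][0] <= t["st"] <= bounds[t["participant"]][1]]
    pct_removed = 100 * (len(trials) - len(kept)) / len(trials)
    return kept, pct_removed


# Hypothetical data: participant s1 has one very long trial; s2 has none.
toy_trials = ([{"participant": "s1", "st": 10.0} for _ in range(19)]
              + [{"participant": "s1", "st": 60.0}]
              + [{"participant": "s2", "st": st} for st in (5.0, 6.0, 7.0)])
kept, pct = trim_study_times(toy_trials)
print(len(kept), round(pct, 2))  # 22 4.35
```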

Effort ratings increased with ST to about the same extent in the two framing conditions: A Condition × ST (short vs. long) ANOVA yielded F(1, 78) = 124.68, MSE = 0.32, p < .0001, \( {\eta}_p^2 \)= .62, for ST, and no other effect. For the data-driven condition, effort rating increased from 4.78 for short ST to 5.78 for long ST, t(39) = 8.40, p < .0001, d = 1.32. The respective increase for the goal-driven condition was from 4.47 to 5.46, t(39) = 7.47, p < .0001, d = 1.18.

The relationship between JOL and rated effort

Figure 7 presents mean JOLs as a function of effort rating. A Condition × Rated Effort ANOVA yielded only a significant interaction, F(1, 78) = 95.13, MSE = 75.51, p < .0001, \( {\eta}_p^2 \) = .55. JOLs decreased with rated effort for the data-driven condition, t(39) = 7.31, p < .0001, d = 1.16, but increased significantly with rated effort for the goal-driven condition, t(39) = 6.46, p < .0001, d = 1.02. The within-person correlation between JOL and rated effort averaged −.51, t(39) = 8.92, p < .0001, for the data-driven condition, and +.48, t(39) = 9.36, p < .0001, for the goal-driven condition.
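The analyses in this section combine a within-participant median split with within-person correlations. The Python sketch below assumes that each participant’s effort ratings were split at that participant’s own median, with ties at the median counted as low (the article does not say how ties were handled), and that the reported t(39) values for the correlations come from one-sample t tests of the per-participant correlations against zero. The raw correlations are averaged here; whether they were Fisher-transformed first is not stated. A paired t test on low versus high effort is the same one-sample t applied to the per-participant differences. All function names and data are hypothetical.

```python
from math import sqrt
from statistics import mean, median, stdev


def median_split_jols(effort, jol):
    """Mean JOL for a participant's low- and high-effort trials.

    Trials with effort at or below the participant's own median are "low";
    the handling of ties at the median is an assumption.
    """
    cut = median(effort)
    low = [j for e, j in zip(effort, jol) if e <= cut]
    high = [j for e, j in zip(effort, jol) if e > cut]
    if not low or not high:
        raise ValueError("median split left an empty cell")
    return mean(low), mean(high)


def pearson_r(x, y):
    """Within-person Pearson correlation across all of a participant's trials."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def one_sample_t(values, mu=0.0):
    """t statistic and df for the mean of per-participant values against mu."""
    n = len(values)
    return (mean(values) - mu) / (stdev(values) / sqrt(n)), n - 1


# Hypothetical trials for three participants: (effort ratings, JOLs).
toy_participants = [
    ([2, 3, 5, 6], [80, 70, 50, 40]),
    ([1, 3, 5, 7], [70, 60, 60, 50]),
    ([2, 4, 6, 7], [60, 65, 40, 45]),
]
splits = [median_split_jols(e, j) for e, j in toy_participants]
differences = [high - low for low, high in splits]  # paired t = one-sample t on these
correlations = [pearson_r(e, j) for e, j in toy_participants]
print(one_sample_t(differences), mean(correlations), one_sample_t(correlations))
```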

Mean judgments of learning (JOL) for below-median (low) and above-median (high) effort rating for the data-driven and goal-driven effort framing conditions (Experiment 3). Error bars represent +1 SEM

These results provide clear support for the hypothesized effects of effort framing. Although the effect of ST on effort ratings was similar in the two framing conditions, the attribution of effort to data-driven or to goal-driven regulation resulted in diametrically opposed relationships between effort ratings and JOLs. In particular, the results document the increase in JOLs with effort ratings that is expected to occur when differences in effort are attributed to one’s own agency. Possibly, Experiment 2 did not yield such an increase because of the interitem differences in degree of relatedness. In addition, the manipulation of incentive in the present experiment may have made learners more aware of the option of attributing differences in effort to their own agency.

The interactive pattern depicted in Fig. 7 was observed for each of the two levels of incentive. For each level, JOLs decreased significantly with rated effort for the data-driven condition but increased significantly with rated effort for the goal-driven condition. In fact, the three-way interaction in the Condition × Rated Effort × Incentive ANOVA (based on 76 participants who had means for all cells) was not significant, F < 1.

However, these results may hide differences between the data-driven and goal-driven conditions that emerge when the analysis focuses on the effects of incentive. We turn now to an examination of the direct and interactive effects of incentive.

The effects of incentive on ST, effort ratings and JOL

Mean ST for the low incentive and high incentive items is plotted in Fig. 8a. A Condition × Incentive ANOVA yielded F < 1, for condition; F(1, 78) = 45.52, MSE = 3.04, p < .0001, \( {\eta}_p^2 \)= 0.37, for incentive; and F(1, 78) = 2.97, MSE = 3.04, p < .10, \( {\eta}_p^2 \)= 0.04, for the interaction. ST increased with incentive for the data-driven condition, t(39) = 3.69, p < .001, d = 0.57, as well as for the goal-driven condition, t(39) = 5.79, p < .0001, d = 0.92.

Mean study time (a), effort rating (b), and JOL (c) as a function of incentive for the data-driven and goal-driven effort framing conditions (Experiment 3). Error bars represent +1 SEM

The same ANOVA on effort ratings (Fig. 8b) yielded F(1, 78) = 1.02, MSE = 3.81, p = .32, \( {\eta}_p^2 \)= .01, for condition; and F(1, 78) = 69.60, MSE = 0.28, p < .0001, \( {\eta}_p^2 \) = .47, for incentive. The interaction, however, was highly significant: F(1, 78) = 19.60, MSE = 0.28, p < .0001, \( {\eta}_p^2 \)= .20. Effort ratings increased with incentive for both the data-driven condition, t(39) = 3.57, p < .005, d = 0.57, and the goal-driven condition, t(39) = 7.63, p < .0001, d = 1.20, but the increase was smaller for the data-driven condition. This difference can also be seen in the within-person correlation between incentive and rated effort, which averaged .12 for the data-driven participants and .37 for the goal-driven participants, t(78) = 4.81, p < .0001, d = 1.09, for the difference between the two correlations. These results suggest that learners had limited success in attributing between-incentive differences in ST to data-driven regulation.
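The comparison of within-person correlations between the two framing groups, t(78), amounts to an independent-samples t test on per-participant correlation coefficients (one coefficient per participant, obtained by correlating incentive, coded 1 vs. 3, with effort rating across that participant’s items). A minimal Python sketch follows, assuming a pooled-variance Student’s t and a Cohen’s d based on the pooled SD; the function name and the correlation values are hypothetical. As a consistency check, the pooled-variance identity d = t·√(1/n1 + 1/n2) turns t = 4.81 with 40 participants per group into d ≈ 1.08, close to the reported 1.09.

```python
from math import sqrt
from statistics import mean, variance


def independent_t(group1, group2):
    """Student's t (pooled variance), its df, and Cohen's d for two independent groups."""
    n1, n2 = len(group1), len(group2)
    pooled_var = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    diff = mean(group1) - mean(group2)
    t = diff / sqrt(pooled_var * (1 / n1 + 1 / n2))
    d = diff / sqrt(pooled_var)
    return t, n1 + n2 - 2, d


# Hypothetical within-person incentive-effort correlations, one per participant.
goal_driven_r = [0.45, 0.30, 0.40, 0.35]
data_driven_r = [0.10, 0.20, 0.05, 0.15]
print(independent_t(goal_driven_r, data_driven_r))

# For a pooled-variance t, d = t * sqrt(1/n1 + 1/n2); for t = 4.81 and n1 = n2 = 40:
print(4.81 * sqrt(1 / 40 + 1 / 40))  # about 1.08
```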

The results for JOLs, in turn (Fig. 8c), suggest that data-driven framing had some success in offsetting the positive effects of incentive on JOLs, as indicated by the near-significant interaction between condition and incentive, F(1, 78) = 3.51, MSE = 28.16, p < .07, \( {\eta}_p^2 \)= 0.04. However, a comparison of the results in Fig. 8c with those in Fig. 7 clearly discloses the limited effectiveness of data-driven effort framing. The results in the latter figure indicated that JOLs decreased significantly with effort ratings in the data-driven condition. In contrast, as far as the difference between incentives is concerned, JOLs actually increased with incentive even in the data-driven condition (Fig. 8c). Thus, high-incentive items (which were associated with relatively high effort ratings; Fig. 8b) actually yielded higher JOLs than low-incentive items even for the data-driven framing condition, t(39) = 2.41, p < .05, d = 0.38.

The effects of effort rating on JOLs: Comparing low-incentive and high-incentive items

As in Experiment 2, we examined whether the effects of effort framing on JOLs can be accounted for entirely by the effects of effort framing on effort ratings.

Figure 9 presents the function relating mean JOL and mean effort rating for high-incentive items to mean JOL and mean effort rating for low-incentive items. This function is plotted separately for the data-driven and goal-driven conditions. It can be seen that the difference in effort rating between the two incentive levels was larger for the goal-driven than for the data-driven condition. However, the slope of the function is similar for the two conditions. The increase in JOLs for each added unit of effort rating that was due to increased incentive was 5.25 for the goal-driven condition and 7.56 for the data-driven condition, so that this slope was not steeper for the goal-driven condition. Thus, although differences in incentive exerted different effects on effort ratings in the data-driven and goal-driven conditions, JOLs in both conditions seem to be determined entirely by the respective effort ratings. The constraints imposed by differences in incentive therefore appear to be confined to the stage at which effort framing biases the attribution of effort to its two sources.

The function relating mean JOL and mean effort rating for high incentive items to mean JOL and mean effort rating for low incentive items. The function is plotted separately for the data-driven and goal-driven framing conditions (Experiment 3). Error bars represent +1 SEM

Recall performance

As in Experiment 2, recall performance (Fig. 10) mirrored the pattern of effects observed for JOLs (Fig. 7). A two-way ANOVA yielded F(1, 78) = 13.95, MSE = 119.21, p < .0005, \( {\eta}_p^2 \)= 0.15, for the interaction. Recall decreased with rated effort for the data-driven condition, t(39) = 2.77, p < .01, d = 0.44, but increased with rated effort for the goal-driven condition, t(39) = 2.52, p < .05, d = 0.40.

Mean recall for below-median (low) and above-median (high) effort rating for the data-driven and goal-driven effort framing conditions (Experiment 3). Error bars represent +1 SEM

Discussion

The results of Experiment 3 confirmed the expected effect of effort framing on the JOL–effort relationship. JOLs decreased with rated effort for the data-driven framing but increased significantly with rated effort for the goal-driven framing. This interactive pattern was observed across all items and also for each incentive level separately. The goal-driven framing instructions were successful in inducing a self-agency attribution that reversed the relationship typically observed for self-paced study. These results were similar to those observed in Experiment 1 (Fig. 1), but because of the use of time pressure in that experiment, those results should be interpreted with caution.

Note that in Experiment 2, the goal-driven effort framing failed to yield an increase in JOLs with effort ratings except when the analysis was based only on the unrelated items (Fig. 2). This is possibly because incentive, which represents an extrinsic factor (see Koriat, 1997), could be manipulated in a counterbalanced fashion across items and conditions in Experiment 3. In contrast, differences in item difficulty, as an intrinsic factor, remained stable across the data-driven and goal-driven framing conditions in Experiment 2. This difference is not merely methodological. In fact, in social-psychological theories of attribution (Weiner, 1985), task difficulty is assumed to represent a stable, external factor, whereas effort (which refers specifically to goal-driven effort; see Koriat, Nussinson, et al., 2014) is assumed to represent a variable, internal factor. These differences should be taken into account in the study of self-agency attributions.

However, the results also documented the expected constraints on agency attribution. Two observations suggested that participants had greater difficulty attributing between-incentive differences in effort to data-driven regulation than to goal-driven regulation. First, although effort ratings increased with incentive for both conditions, they increased less strongly in the data-driven condition (Fig. 8b). Because the effects of incentive on ST were similar for the two conditions, this result suggests that participants were less successful in attributing the ST difference between incentives to data-driven regulation than to goal-driven regulation.

The second observation is that the data-driven framing instructions failed to offset the typical pattern of JOLs increasing with incentive. Rather, these instructions yielded a pattern that is more typical of goal-driven attribution (Koriat, Ackerman, et al., 2014; Koriat et al., 2006; Koriat, Nussinson, et al., 2014): JOLs increased with incentive even for the data-driven condition, although less strongly than in the goal-driven condition.

In sum, like Experiment 2, Experiment 3 yielded evidence for the effects of effort framing as well as for their limitations. Poststudy instructions exerted marked effects on the JOL–effort relationship, but the effects were constrained by parameters of the study situation that invited self-agency attribution.

General discussion

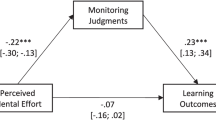

In self-regulated learning, learners typically have the option to control a variety of cognitive operations (Bjork et al., 2013). They can decide which items to study (Metcalfe & Kornell, 2005; Son & Metcalfe, 2000); they can choose to mass or space their study (Benjamin & Bird, 2006; Son, 2004), whether to restudy or self-test (Kornell & Son, 2009), which learning strategies to use (Fiechter, Benjamin, & Unsworth, 2015), and which items to restudy (Thiede & Dunlosky, 1999). In all likelihood, these choices have implications for the sense of agency. In this study, we focused on a circumscribed segment of self-regulated learning: the allocation of study effort to different items during self-paced learning. Previous results suggested that learners are sensitive to the source of their study effort, drawing different conclusions from the effort that derives from their own volition versus the effort that seems to be due to the task. Furthermore, effort attribution could be influenced by a poststudy effort framing manipulation (Koriat, Ackerman, et al., 2014; Koriat et al., 2006; Koriat, Nussinson, et al., 2014; Muenks et al., 2016). In this study, we used the effort framing manipulation to help delimit the stage at which the regulation of study effort and its attribution occur in the context of the learners’ superordinate agenda. We examined the constraints on effort attribution and specified the locus at which effort attributions affect the monitoring of future recall. Figure 11 presents a schematic stage model that can help in summarizing the results.

The results suggested that effort regulation constitutes a component of self-agency that is specific to task performance, and is dissociable from the learner’s superordinate agenda. Although time pressure in Experiment 1 induced learners to choose the easier items for continued study and to invest more ST in these items, the effects of effort framing on JOLs were similar to those observed under typical self-regulated study conditions in which participants invest more ST in the more difficult items (Koriat, Nussinson, et al., 2014). Thus, data-driven effort framing resulted in JOLs decreasing with rated effort.

Experiments 2 and 3 confirmed that effort attribution can be biased by poststudy instructions. However, they also indicated that the mutability of effort attribution operates within boundaries that are imposed by parameters of the study situation. Experiment 2 indicated that although learners could be induced to attribute study effort to their own agency or to external factors, the attribution of effort to self-agency was constrained by interitem differences in intrinsic item difficulty. These results suggest that the general tendency of learners to attribute interitem differences in difficulty to data-driven regulation is partly mandatory: learners cannot simply attribute to their own agency large differences in ST that derive from differences in the ease with which different items are committed to memory. By analogy, a runner who runs faster downhill than uphill cannot attribute the difference in speed entirely to his or her own agency. Experiment 3, in turn, indicated that the attribution of study effort to external factors is constrained by interitem differences in the incentive for recall. The results suggest the existence of inherent boundaries that restrict postdictive agency inferences.

The results also suggested that the effects of effort framing on the JOL–effort relationship occur at the stage at which study effort is attributed to goal-driven or to data-driven regulation (Figs. 5 and 9; see Fig. 11). Once the effort framing instructions have biased the retrospective attribution of effort to goal-driven or to data-driven regulation, subject to the constraints exerted by the study environment, JOLs vary as a function of the resultant effort ratings.

These results leave open many issues about the agency attributions that seem to mediate item-by-item JOLs during self-paced learning. We mention only a few of them here.

One issue concerns the link between agency monitoring and metacognition (see Metcalfe & Greene, 2007; Pacherie, 2013; Proust, 2013). The assumption underlying the present study is that agency monitoring constitutes an integral part of metacognitive monitoring (see Proust, 2013) to the extent that agency attributions can be inferred from metacognitive judgments, for example, from the relationship between ST and JOLs, or from that between decision time and confidence (see Koriat et al., 2006). These relationships can be said to constitute implicit markers of the self-agency attributions underlying metacognitive monitoring. However, more research is needed to strengthen the link between the two research traditions, metacognition and the sense of agency. As Pacherie (2013) argued, “the two traditions can only benefit from reinforced mutual interactions” (p. 341).

Another issue concerns the cues for goal-driven and data-driven attributions. As noted earlier, results in the area of self-agency generally indicate that the sense of agency depends on the congruence between one’s intentions and their outcomes (Frith et al., 2000; Haggard & Tsakiris, 2009; Metcalfe, 2013; Wegner, 2002). However, it is unclear how, in the absence of immediate observable outcomes, learners distinguish between the effort that is due to their own volition and the effort that is called for by the studied item. This question is particularly important in view of the predictive validity of JOLs: The pattern of results obtained for JOLs, which seems to be based on the partitioning of study effort between data-driven and goal-driven sources, sometimes mirrors very closely the pattern observed for recall (see Fig. 6; Koriat, Ackerman, et al., 2014). Also, the effectiveness of the effort framing manipulation in affecting the JOL–effort relationship suggests that learners can discriminate between the effort that is called for and the effort that one chooses to invest. The basis of this discrimination is a fundamental issue that invites serious experimental efforts.

Finally, the results suggest that some of the agency components involved in metacognitive self-regulation are partly dissociable. Possibly, the agenda to choose the easier items for continued study in Experiment 1 is associated with a strong sense of self-agency. However, although this agenda controls top-down the selection and regulation of ST during self-paced learning, the learner–item interaction that occurs in studying a particular set of items brings to the fore data-driven constraints that exert their influence independent of that of the superordinate agenda. Other dissociations may also exist between different control processes that are involved in self-regulated learning, and these dissociations can shed light on the organization and modularity of metacognitive monitoring and regulation in general.

Altogether, the present study attempted to bridge between metacognition research and self-agency research by exploring issues of self-agency in the context of self-regulated learning. However, metacognition research invites further investigation of self-agency issues in many tasks that involve self-regulation. This investigation is of interest in its own right and also presents a challenge to the study of self-agency issues in general.

Notes

In all analyses using median splits in this article, preliminary analyses yielded no clear indication of a nonlinear relationship. The within-individual correlations that are reported, however, were calculated across the full range of the variables (ST, effort, and JOL).

References

Ariel, R., & Dunlosky, J. (2013). When do learners shift from habitual to agenda-based processes when selecting items for study? Memory & Cognition, 41, 416–428.

Ariel, R., Dunlosky, J., & Bailey, H. (2009). Agenda-based regulation of study-time allocation: When agendas override item-based monitoring. Journal of Experimental Psychology: General, 138, 432–447.

Bayne, T. J., & Levy, N. (2006). The feeling of doing: Deconstructing the phenomenology of agency. In N. Sebanz & W. Prinz (Eds.), Disorders of volition (pp. 49–68). Cambridge: MIT Press.

Benjamin, A. S., & Bird, R. D. (2006). Metacognitive control of the spacing of study repetitions. Journal of Memory and Language, 55, 126–137.

Bjork, R. A., Dunlosky, J., & Kornell, N. (2013). Self-regulated learning: Beliefs, techniques, and illusions. Annual Review of Psychology, 64, 417–444.

Castel, A. D., Murayama, K., Friedman, M. C., McGillivray, S., & Link, I. (2013). Selecting valuable information to remember: Age-related differences and similarities in self-regulated learning. Psychology and Aging, 28, 232–242.

Chambon, V., Sidarus, N., & Haggard, P. (2014). From action intentions to action effects: How does the sense of agency come about? Frontiers in Human Neuroscience, 8, 320.

Dunlosky, J., & Hertzog, C. (1998). Aging and deficits in associative memory: What is the role of strategy use? Psychology and Aging, 13, 597–607.

Dunlosky, J., & Thiede, K. W. (1998). What makes people study more? An evaluation of factors that affect self-paced study. Acta Psychologica, 98, 37–56.

Dunlosky, J., & Thiede, K. W. (2004). Causes and constraints of the shift-to-easier-materials effect in the control of study. Memory & Cognition, 32, 779–788.

Fiechter, J. L., Benjamin, A. S., & Unsworth, N. (2015). The metacognitive foundations of effective remembering. In J. Dunlosky & S. K. Tauber (Eds.), Handbook of metamemory (pp. 62–77). Oxford: Oxford University Press.

Frith, C. (2013). The psychology of volition. Experimental Brain Research, 229, 289–299.

Frith, C. D., Blakemore, S., & Wolpert, D. (2000). Abnormalities in the awareness and control of action. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 355, 1771–1788.

Haggard, P., & Eitam, B. (Eds.). (2015). The sense of agency. New York: Oxford University Press.

Haggard, P., & Tsakiris, M. (2009). The experience of agency: Feelings, judgments, and responsibility. Current Directions in Psychological Science, 18, 242–246.

Koriat, A. (1997). Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126, 349–370.

Koriat, A. (2008). Easy comes, easy goes? The link between learning and remembering and its exploitation in metacognition. Memory & Cognition, 36, 416–428.

Koriat, A., Ackerman, R., Adiv, S., Lockl, K., & Schneider, W. (2014). The effects of goal-driven and data-driven regulation on metacognitive monitoring during learning: A developmental perspective. Journal of Experimental Psychology: General, 143, 386–403.

Koriat, A., Bjork, R. A., Sheffer, L., & Bar, S. K. (2004). Predicting one’s own forgetting: The role of experience-based and theory-based processes. Journal of Experimental Psychology: General, 133, 646–653.

Koriat, A., Ma’ayan, H., & Nussinson, R. (2006). The intricate relationships between monitoring and control in metacognition: Lessons for the cause-and-effect relation between subjective experience and behavior. Journal of Experimental Psychology: General, 135, 36–69.

Koriat, A., Nussinson, R., & Ackerman, R. (2014). Judgments of learning depend on how learners interpret study effort. Journal of Experimental Psychology: Learning, Memory, and Cognition, 40, 1624–1637.

Kornell, N., & Bjork, R. A. (2009). A stability bias in human memory: Overestimating remembering and underestimating learning. Journal of Experimental Psychology: General, 138, 449–468.

Kornell, N., & Son, L. K. (2009). Learners’ choices and beliefs about self-testing. Memory, 17, 493–501.

Metcalfe, J. (2002). Is study time allocated selectively to a region of proximal learning? Journal of Experimental Psychology: General, 131, 349–363.

Metcalfe, J. (2013). “Knowing” that the self is the agent. In J. Metcalfe & H. S. Terrace (Eds.), Agency and joint attention (pp. 238–255). New York: Oxford University Press.

Metcalfe, J., & Greene, M. J. (2007). Metacognition of agency. Journal of Experimental Psychology: General, 136, 184–199.

Metcalfe, J., & Kornell, N. (2005). A region of proximal learning model of study time allocation. Journal of Memory and Language, 52, 463–477.

Muenks, K., Miele, D. B., & Wigfield, A. (2016). How students’ perceptions of the source of effort influence their ability evaluations of other students. Journal of Educational Psychology, 108, 438–454.

Nelson, T. O., & Leonesio, R. J. (1988). Allocation of self-paced study time and the “labor-in-vain effect”. Journal of Experimental Psychology: Learning, Memory, and Cognition, 14, 676–686.

Pacherie, E. (2007). The sense of control and the sense of agency. Psyche, 13, 1–30.

Pacherie, E. (2013). Sense of agency: Many facets, multiple sources. In J. Metcalfe & H. S. Terrace (Eds.), Agency and joint attention (pp. 321–345). New York: Oxford University Press.

Proust, J. (2013). The philosophy of metacognition: Mental agency and self-awareness. Oxford: Oxford University Press.

Sato, A., & Yasuda, A. (2005). Illusion of sense of self-agency: Discrepancy between the predicted and actual sensory consequences of actions modulates the sense of self-agency, but not the sense of self-ownership. Cognition, 94, 241–255.

Soderstrom, N. C., & McCabe, D. P. (2011). The interplay between value and relatedness as bases for metacognitive monitoring and control: Evidence for agenda-based monitoring. Journal of Experimental Psychology: Learning, Memory, and Cognition, 37, 1236–1242.

Son, L. K. (2004). Spacing one’s study: Evidence for a metacognitive control strategy. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30, 601–604.

Son, L. K., & Metcalfe, J. (2000). Metacognitive and control strategies in study-time allocation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 26, 204–221.

Thiede, K. W., & Dunlosky, J. (1999). Toward a general model of self-regulated study: An analysis of selection of items for study and self-paced study time. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25, 1024–1037.

Undorf, M., & Ackerman, R. (2017). The puzzle of study time allocation for the most challenging items. Psychonomic Bulletin & Review, 1–9. https://doi.org/10.3758/s1342

Undorf, M., & Erdfelder, E. (2013). Separation of encoding fluency and item difficulty effects on judgements of learning. The Quarterly Journal of Experimental Psychology, 66, 2060–2072.

Undorf, M., & Erdfelder, E. (2015). The relatedness effect on judgments of learning: A closer look at the contribution of processing fluency. Memory & Cognition, 43, 647–658.

Wegner, D. M. (2002). The illusion of conscious will. Cambridge: MIT Press.

Wegner, D. M. (2003). The mind’s best trick: How we experience conscious will. Trends in Cognitive Sciences, 7, 65–69.

Weiner, B. (1985). An attributional theory of achievement motivation and emotion. Psychological Review, 92, 548–573.

Acknowledgements

The work reported in this article was supported by the Max Wertheimer Minerva Center for Cognitive Processes and Human Performance at the University of Haifa. I am grateful to Miriam Gil for her help in the analyses. Tamar Jermans, Mor Peled, and Shai Raz helped in the collection of the data. I also thank Etti Levran (Merkine) for her help in copyediting.

Cite this article

Koriat, A. Agency attributions of mental effort during self-regulated learning. Mem Cogn 46, 370–383 (2018). https://doi.org/10.3758/s13421-017-0771-7

DOI: https://doi.org/10.3758/s13421-017-0771-7