Abstract

Background

Pulmonary nodule detection on chest X-ray (CXR) is challenging, and artificial intelligence (AI) has recently attracted considerable attention as a reading aid. This study aimed to evaluate the diagnostic performance of AI software in detecting pulmonary nodules or masses on CXR compared with radiologists' interpretation, and to assess its impact on the reporting process. The study included 150 patients whose CXRs were interpreted both by radiologists and by the AI software.

Results

CT detected pulmonary nodules in 99 cases (66%), whereas the visual model and the AI model each detected nodules in 92 cases (61.3%) and the combined visual/AI model in 93 cases (62%). CT detected a total of 216 nodules (64.4% solid and 31.5% ground-glass [GG]), compared with 188 nodules detected by the AI and 170 by visual analysis. In both the per-case and the per-nodule analyses, the AI showed the highest area under the curve (AUC) (0.890, 95% CI: 0.830–0.951 and 0.875, 95% CI: 0.820–0.930, respectively), followed by the combined visual/AI model. Regarding nodule texture, the AI model's sensitivity for solid nodules (91.4%) was higher than that of the combined visual/AI and visual models, whereas for GG nodules the combined visual/AI model had the highest sensitivity. The probability score was significantly higher with the combined visual/AI model than with the visual model alone (P = 0.001).

Conclusions

Using the AI model in CXR interpretation for nodule and mass detection supports more accurate decision-making and increases diagnostic performance, which may in turn affect patient morbidity and mortality.


Background

Chest radiography (CXR) is usually the first step in the assessment of almost any patient with respiratory symptoms, in pre-occupational screening, and even in the routine follow-up of most cancer patients [1]. Given its low cost and wide availability, several large clinical trials have also assessed its possible use as a screening tool for lung cancer in high-risk populations [2].

Pulmonary nodule detection on CXR remains challenging: some nodules are obscured by overlapping anatomical structures, while others are missed because of their size or nature [3]. This may explain the findings of the National Lung Screening Trial (NLST), which showed lower sensitivity of CXR than of low-dose CT (LDCT) for nodule detection [4].

LDCT is considered the standard screening modality for lung cancer in high-risk populations in most countries [5]. However, compared with CXR, LDCT has a higher cost, a higher radiation dose, and a higher rate of false-positive nodule detection, which leads to additional laboratory workup and increased patient anxiety [6].

CXR can play an important role in detecting lung cancers that present as incidental pulmonary nodules; these carry a better prognosis because they tend to be smaller and at an earlier stage [7].

Recently, artificial intelligence (AI) algorithms for detecting pulmonary nodules on CXR images, built with deep learning techniques that learn from previously observed data, have attracted considerable attention for medical applications [8]. Some studies have demonstrated that AI algorithms can improve the speed and accuracy of image reading, increase the detection rate of pulmonary nodules, and reduce radiologist errors [9, 10].

Although research on such AI algorithms in thoracic imaging has advanced considerably over the last few decades, they are still rarely seen in clinical practice [8].

Aim of the work

This study primarily aimed to evaluate the diagnostic performance and efficacy of artificial intelligence (AI) software for the automatic detection of pulmonary nodules or masses on CXR compared with the radiologists' interpretation, using computed tomography (CT), which is more accurate than CXR, as the reference standard.

Furthermore, we aimed to assess the impact of the AI software on the diagnostic performance of the reporting process.

Methods

Patients

This retrospective study was conducted in the Radiology Department of Ain Shams University. A total of 150 patients who underwent CXR followed by chest CT between May 2021 and July 2022 were retrospectively selected from the picture archiving and communication system (PACS). The Ethical Committee of the Faculty of Medicine, Ain Shams University, approved this retrospective study and the use of patients' data archived in the PACS. Informed consent was waived because of the retrospective design.

The inclusion criteria were adult patients (18 years or older) who underwent CXR for any respiratory complaint or for pre-occupational assessment, as well as patients with known cancer elsewhere in the body referred from the oncology department for lung metastasis screening. Patients of both sexes were included.

The included chest radiographs comprised patients without lung disease as well as patients with various thoracic abnormalities, such as mild free and encysted effusion, cardiomegaly, consolidation, emphysema, subsegmental and segmental atelectasis, and calcified granuloma, to better match the case mix encountered in real clinical practice.

Exclusion criteria were poor image quality, image artifacts caused by instruments such as wires, massive pleural effusion, and massive fibrosis. Patients with more than six nodules were also excluded: allowing at most one nodule per lung zone on either side (three zones per lung) was statistically preferable because it better reflected nodule detection and allowed each nodule to be assigned to a lung zone. Patients without a chest CT within 2 weeks of the CXR were not included. Lastly, patients with nodules < 5 mm were excluded because such nodules were considered insignificant.
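The eligibility rules above can be expressed as a simple filter. The sketch below is a hypothetical encoding, not the authors' selection procedure: the `Case` and `Nodule` records, the single `excluded_finding` flag (standing in for poor image quality, wire artifacts, and massive effusion or fibrosis), and the literal reading of the 5 mm rule (any nodule below 5 mm excludes the patient) are all assumptions.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional

MIN_AGE = 18
MAX_CT_GAP_DAYS = 14     # chest CT within 2 weeks of the CXR
MAX_NODULES = 6          # at most one per lung zone: 3 zones x 2 sides
MIN_NODULE_MM = 5.0      # nodules below 5 mm considered insignificant

@dataclass
class Nodule:
    side: str            # "right" or "left"
    zone: str            # "upper", "middle" or "lower" lung zone
    diameter_mm: float

@dataclass
class Case:
    age: int
    view: str                        # radiographic projection, e.g. "PA"
    ct_gap_days: Optional[int]       # None when no chest CT is available
    excluded_finding: bool = False   # poor quality, wire artifacts, massive effusion/fibrosis
    nodules: List[Nodule] = field(default_factory=list)

def is_eligible(case: Case) -> bool:
    """Apply a hypothetical encoding of the inclusion/exclusion criteria."""
    if case.age < MIN_AGE or case.view != "PA" or case.excluded_finding:
        return False
    if case.ct_gap_days is None or case.ct_gap_days > MAX_CT_GAP_DAYS:
        return False
    if len(case.nodules) > MAX_NODULES:
        return False
    # Literal reading of the 5 mm rule: any sub-5 mm nodule excludes the patient.
    return all(n.diameter_mm >= MIN_NODULE_MM for n in case.nodules)

def zone_counts(cases: List[Case]) -> Counter:
    """Tally nodules per (side, zone) over the eligible cases only."""
    return Counter((n.side, n.zone) for c in cases if is_eligible(c) for n in c.nodules)
```

`zone_counts` then yields the kind of per-zone nodule distribution that is compared across models in the Results.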

Chest X-ray (CXR)

All CXRs fulfilling the inclusion criteria were retrieved from the PACS, and all were acquired in the posteroanterior (PA) view. The CXRs were reviewed in consensus by two radiologists with 6–8 years of experience. Beforehand, each radiologist was given a training set of 20 chest radiographs unrelated to the study data to become familiar with the study task. The radiologists were instructed to mark (by a circle) any significant nodule on the chest radiographs, including pulmonary nodules (≥ 5 mm, non-calcified), pleural nodules or nodular thickening, and hilar lymphadenopathy. If multiple nodules were present, they were instructed to highlight the most obvious ones. The following items were recorded for each CXR assessment:

- Presence or absence of pulmonary nodules or masses.
- The number and location (right versus left side and upper/middle/lower lung zone) of the pulmonary nodules or masses.
- The degree of confidence in the presence of each nodule, as a confidence score from 1 to 4: 1 = uncertain (0–25% abnormality confidence), 2 = mildly confident (26–50%), 3 = moderately confident (51–75%), and 4 = very confident (76–100%). The normalized confidence score was calculated as the mean of the two readers' confidence scores.
- The data obtained from the radiologists alone were collected.
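The 1–4 confidence scale and the normalized score can be sketched as two small helpers. They are illustrative only, not software used in the study; how a non-integer percentage falling between the stated integer ranges (for example 25.5%) is binned is our assumption.

```python
def confidence_score(percent: float) -> int:
    """Map a 0-100% confidence to the study's 1-4 ordinal confidence score."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must lie between 0 and 100")
    if percent <= 25:
        return 1  # uncertain
    if percent <= 50:
        return 2  # mildly confident
    if percent <= 75:
        return 3  # moderately confident
    return 4      # very confident

def normalized_score(reader_a: int, reader_b: int) -> float:
    """Normalized confidence score: mean of the two readers' 1-4 scores."""
    for s in (reader_a, reader_b):
        if s not in (1, 2, 3, 4):
            raise ValueError("scores must be integers from 1 to 4")
    return (reader_a + reader_b) / 2

print(confidence_score(60), normalized_score(3, 4))  # prints: 3 3.5
```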

After a 3-week interval, the radiologists were given the same set of radiographs in a different randomized order, this time with the results of the AI algorithm displayed during reading. The results of this AI-assisted reading (number, location, and confidence score of the nodules) were again collected.

Artificial intelligence (AI) software

In the current study, we used Lunit INSIGHT CXR (version 2.4.11.0; Lunit Inc.), a commercially available deep learning-based algorithm cleared by the US Food and Drug Administration (FDA) and approved by the Korea Food and Drug Administration [11]. This version was installed in our department in 2022. It is a deep convolutional neural network that uses a 34-layer residual neural network (ResNet-34) as its foundational architecture, with a self-attention mechanism to generate a more discriminative image representation [12]. The algorithm was trained on 72,704 normal CXRs and 12,408 abnormal CXRs with lung nodules or masses obtained from multiple hospitals in South Korea, including both digital and computed radiographs, each annotated by at least one of 15 board-certified radiologists [13, 14].

The algorithm takes a Digital Imaging and Communications in Medicine (DICOM) file as input and, for each radiograph, produces a color-coded abnormality (heat) map overlaid on the input radiograph that shows the location of each abnormality on an ascending blue, green, yellow, and red scale according to its probability. It also outputs a separate abnormality score (0–100%) for each of 10 CXR abnormalities: atelectasis, calcification, cardiomegaly, consolidation, fibrosis, mediastinal widening, nodule or mass, pleural effusion, pneumoperitoneum, and pneumothorax. No output map is produced when the abnormality score is below 15%, the operating point chosen with the Youden criterion in the internal validation set [15, 16].
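The Youden criterion selects the threshold that maximizes J = sensitivity + specificity − 1. The sketch below shows that selection on toy scores, together with the 15% display rule. The vendor's validation data and exact procedure are not described here, so the function names and example values are hypothetical.

```python
from typing import Optional, Sequence, Tuple

MAP_THRESHOLD = 15.0  # abnormality score (%) below which no heat map is shown

def shows_map(score: float, threshold: float = MAP_THRESHOLD) -> bool:
    """True if the output map would be displayed for this abnormality score."""
    return score >= threshold

def youden_threshold(scores: Sequence[float], labels: Sequence[int]) -> Tuple[float, float]:
    """Return (threshold, J) maximizing Youden's J = sensitivity + specificity - 1.

    A case is called positive when its score >= threshold;
    labels are 1 (abnormal) or 0 (normal).
    """
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need both abnormal and normal cases")
    best_t: Optional[float] = None
    best_j = -1.0
    for t in sorted(set(scores)):  # every observed score is a candidate cut-off
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        tn = sum(1 for s, y in zip(scores, labels) if s < t and y == 0)
        j = tp / pos + tn / neg - 1
        if j > best_j:
            best_t, best_j = t, j
    return best_t, best_j

# Toy example (hypothetical scores, not study data)
threshold, j = youden_threshold([5, 10, 20, 40, 60, 90], [0, 0, 0, 1, 1, 1])
print(threshold, j)
```

Using observed scores as candidate cut-offs is enough here, because sensitivity and specificity only change at those values.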

All studied CXRs were assessed by the AI software without any radiologist interference or modification. The AI results were recorded separately by another radiologist, who was instructed to annotate each nodule shown on the probability heat map by its location and color, with the color taken to represent the AI's probability (confidence) that a nodule was present. Each color was assigned a score and probability range as follows: blue = 1 (0–25%), green = 2 (26–50%), yellow = 3 (51–75%), and red = 4 (76–100%).
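Transcribing the heat-map colors into scores amounts to a lookup table. The record layout (lung zone, color) and the function name below are hypothetical; the example mirrors a case in which the AI marked right lower zone nodules at probability level 4 and left lower zone nodules at level 2.

```python
from typing import List, Tuple

# Heat-map color -> (score, probability range) as defined in the Methods.
HUE_SCALE = {
    "blue": (1, "0-25%"),
    "green": (2, "26-50%"),
    "yellow": (3, "51-75%"),
    "red": (4, "76-100%"),
}

def transcribe(annotations: List[Tuple[str, str]]) -> List[Tuple[str, int, str]]:
    """Turn (lung zone, heat-map color) pairs into (zone, score, probability range)."""
    rows = []
    for zone, hue in annotations:
        key = hue.strip().lower()  # tolerate "Red", " green " etc.
        if key not in HUE_SCALE:
            raise ValueError(f"unrecognized heat-map color: {hue!r}")
        score, prob_range = HUE_SCALE[key]
        rows.append((zone, score, prob_range))
    return rows

print(transcribe([("right lower", "red"), ("left lower", "Green")]))
# prints: [('right lower', 4, '76-100%'), ('left lower', 2, '26-50%')]
```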

CT of the chest

Chest CT studies were obtained within 2 weeks of the CXR and served as the reference standard for validating the radiologists' and AI algorithm results. The CT images were reviewed in consensus by two radiologists with 6–8 years of experience. The following parameters were recorded:

- Presence or absence of pulmonary nodules or masses.
- The number, location, size, and consistency of the detected nodules.

Statistical analysis

Data were analyzed using the Statistical Package for the Social Sciences (IBM SPSS Statistics for Windows, version 25.0; Armonk, NY, USA). Qualitative data were described as numbers and percentages, and nonparametric continuous data as median and range. The performance of the different models was evaluated using the area under the receiver operating characteristic (ROC) curve (AUC), and the paired-sample area difference was used to compare the AUCs of two models. Cross-tabulation was used to assess the diagnostic performance of the categorical variables, from which sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and accuracy were calculated with their 95% confidence intervals (CI). The Mann–Whitney U test was used to evaluate differences in nonparametric ordinal variables. All P values were two-tailed, and P < 0.05 was considered statistically significant.
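The cross-tabulation metrics follow directly from a 2 × 2 table of true/false positives and negatives. The sketch below computes them with a Wilson score 95% interval. The source does not state which CI method was used, so the Wilson interval is our choice, and the counts in the example are hypothetical.

```python
import math
from typing import Dict, Tuple

Z_95 = 1.959963984540054  # two-sided 95% standard normal quantile

def wilson_ci(k: int, n: int, z: float = Z_95) -> Tuple[float, float]:
    """Wilson score interval for the binomial proportion k/n."""
    if n == 0:
        return (math.nan, math.nan)
    p = k / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return (centre - half, centre + half)

def diagnostic_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, Tuple[float, Tuple[float, float]]]:
    """Return {metric: (point estimate, (lower, upper) 95% CI)} from a 2x2 cross-tabulation."""
    counts = {
        "sensitivity": (tp, tp + fn),   # true positives / all reference-positive
        "specificity": (tn, tn + fp),   # true negatives / all reference-negative
        "ppv": (tp, tp + fp),           # true positives / all test-positive
        "npv": (tn, tn + fn),           # true negatives / all test-negative
        "accuracy": (tp + tn, tp + fp + fn + tn),
    }
    return {
        name: ((k / n) if n else math.nan, wilson_ci(k, n))
        for name, (k, n) in counts.items()
    }

# Hypothetical counts for illustration only
metrics = diagnostic_metrics(tp=90, fp=5, fn=10, tn=45)
for name, (estimate, (lo, hi)) in metrics.items():
    print(f"{name}: {estimate:.1%} (95% CI {lo:.1%}-{hi:.1%})")
```

The Wilson interval is preferred over the simple normal (Wald) interval for proportions near 0% or 100%, such as the high PPVs reported here.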

Results

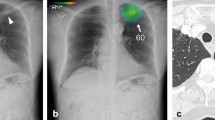

The study included 150 patients with a median age of 48 years (range 18–83 years); 54% were male. CT detected pulmonary nodules in 99 cases (66%), whereas visual analysis and AI analysis each detected nodules in 92 cases (61.3%) and the combined visual/AI model in 93 cases (62%). In all interpretation models, a solitary nodule and a right lung location were the most common findings (Figs. 1, 2, 3, 4 and 5).

A Chest radiograph, PA view, showing right lower lung zone nodules (marked by a radiologist). B Chest radiograph, PA view, interpreted by AI, showing right lower lung zone nodules (probability level 4) and left lower lung zone nodules (probability level 2). C Axial chest CT images showing variable-sized nodules in the right and left lower lobes.

In the per-nodule analysis, CT detected 216 nodules with a median diameter of 10 mm (range 5–89 mm); the most common texture was solid (64.4%), followed by ground-glass (GG) (31.5%). By comparison, the AI model detected 188 nodules and visual CXR analysis only 170 nodules (Table 1).

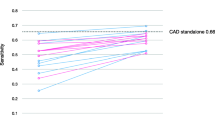

In the per-nodule analysis, the AI model had the highest AUC (0.875, 95% CI: 0.820–0.930), followed by the visual/AI model (0.834, 95% CI: 0.771–0.897), while the visual model had the lowest AUC (0.772, 95% CI: 0.701–0.844) (Fig. 1). The paired-sample area difference showed that the AUC of the visual model was significantly lower than that of the AI model (P = 0.002) and the visual/AI model (P = 0.002), with no significant difference between the AI and visual/AI models (P = 0.185) (Table 2).

In the per-case (CXR) classification, the AI model showed the highest AUC (0.890, 95% CI: 0.830–0.951), followed by the combined visual/AI model (0.866, 95% CI: 0.799–0.933), while the visual model showed the lowest AUC (0.816, 95% CI: 0.740–0.893) (Fig. 6). The paired-sample area difference showed that the AUC of the visual model was significantly lower than that of the AI model (P = 0.037) and the visual/AI model (P = 0.023), with no significant difference between the AI and visual/AI models (P = 0.437) (Table 2).
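For ordinal test variables such as the 1–4 confidence scores, the empirical (nonparametric) AUC equals the probability that a randomly chosen CT-positive unit scores higher than a randomly chosen CT-negative one, with ties counted as one half; this is the Mann–Whitney U statistic divided by the product of the two group sizes. The sketch below computes it directly. Scoring units with no marked nodule as 0 is our assumption, the example scores are hypothetical, and the paired comparison of two correlated AUCs is not shown.

```python
from typing import Sequence

def empirical_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Nonparametric AUC: P(positive score > negative score) + 0.5 * P(tie).

    Equivalent to the Mann-Whitney U statistic divided by n_pos * n_neg.
    labels: 1 = nodule present on CT (reference positive), 0 = absent.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need both reference-positive and reference-negative units")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))

# Hypothetical confidence scores (0 = nothing marked, 1-4 = confidence level)
scores = [4, 3, 0, 2, 1, 0, 0, 1]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
print(f"AUC = {empirical_auc(scores, labels):.3f}")
```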

In the per-case (CXR) analysis, the AI model had the highest diagnostic performance parameters, followed by the combined visual/AI model, with the visual model showing the lowest performance. The same ranking was observed in the per-nodule analysis (Table 3).

Regarding nodule texture, the AI model's sensitivity for localizing solid nodules was 91.4%, higher than that of the combined visual/AI and visual models, whereas for GG nodules the combined visual/AI model had a higher sensitivity than the AI and visual models (Table 4).

Regarding nodule location, the right middle lung zone had the highest nodule prevalence on CT and in the AI, visual/AI, and visual models (20.8%, 21.3%, 22.1%, and 22.4%, respectively), while the left lower lung zone had the lowest (14.6%, 11.2%, 11.6%, and 12.9%, respectively). The distribution of lung nodules did not differ significantly between the visual and AI models or between the visual and combined visual/AI models, but it differed significantly between the visual model and CT (P < 0.001) (Table 5).

The probability score was significantly higher with the combined visual/AI model than with the visual model alone (P = 0.001) (Table 6).
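The Mann–Whitney U comparison of ordinal probability scores can be sketched with the large-sample normal approximation. Because 1–4 scores produce many ties, the variance uses the standard tie correction. This is a minimal sketch under stated assumptions: it applies no continuity correction and no exact test, so P values may differ slightly from a statistical package such as SPSS, and the example data are hypothetical.

```python
import math
from typing import Sequence, Tuple

def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected variance).

    Returns (U for sample x, two-sided P value).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    combined = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(combined)
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < len(combined):
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank for the tied block
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    r1 = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    n = n1 + n2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:  # all values tied: no evidence of a difference
        return u1, 1.0
    z = (u1 - mean_u) / math.sqrt(var_u)
    return u1, math.erfc(abs(z) / math.sqrt(2))

# Hypothetical 1-4 probability scores for two reading conditions
visual = [1, 2, 2, 3, 1, 2, 3, 2]
visual_ai = [3, 4, 3, 4, 2, 3, 4, 3]
print(mann_whitney_u(visual, visual_ai))
```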

Discussion

Current applications of AI algorithms in radiology have demonstrated their efficacy in producing better, faster, and more accurate reports. This study primarily evaluated the diagnostic performance and efficacy of AI software in automatically detecting pulmonary nodules or masses on CXR compared with the radiologists' visual reading, using CT as the reference. Second, we assessed the impact of using the AI software as an assistive tool for the radiologist during the reporting process for detecting chest nodules and masses on CXR.

Our results showed that the AI software performed better than the radiologists' visual analysis alone in detecting pulmonary nodules and masses on CXR, and that it improved the radiologists' performance in detecting these lesions when used together with visual analysis.

The frequency of nodules in the current study was 66% on CT, 61.3% with the AI, and 62% with the combined visual/AI model. This was higher than the 50% incidence of pulmonary nodules reported by Hendrix et al. [17] for 2019. The higher frequency in our study can be explained by the composition of the sample, which included cancer patients referred for pulmonary metastasis screening.

The median nodule diameter in this study was 10 mm (range 5–89 mm), which differs from Liang et al. [1], who detected pulmonary nodules/masses with an average diameter of 4.872 cm and had limited ability to detect smaller lesions; this difference may be explained by the different AI algorithms used.

For both the per-case (CXR) and per-nodule classifications, the AI model showed the highest AUC (0.890 [0.830–0.951] and 0.875 [0.820–0.930], respectively; P < 0.001), followed by the combined visual/AI model.

These results are similar to those of Choi et al. [18], who showed improved performance with the AI models in image classification (AUC, 0.8679–0.9285; P = 0.006) and lesion localization (AUC, 0.8426–0.9112; P = 0.0287). They are also close to those of Jin et al. [19], in which the AI solution alone showed acceptable performance (AUC, 0.863–0.873). Likewise, Hwang et al. [14] showed improved diagnostic performance with the assistance of the algorithm in both image-wise classification (AUC, 0.814–0.932 to 0.904–0.958; all P < 0.005) and lesion-wise localization (AUC, 0.781–0.907 to 0.873–0.938; all P < 0.001).

In the per-case (CXR) classification, the radiologists' sensitivity for detecting pulmonary nodules improved from 82.8% to 86.9% and their specificity from 80.4% to 86.3% with AI assistance. In the per-nodule analysis, sensitivity, specificity, PPV, NPV, and accuracy were all better with the combined visual/AI model (80.6%, 86.3%, 96.1%, 51.2%, and 81.7%, respectively) than with the visual model alone (74.1%, 80.4%, 94.1%, 42.3%, and 75.3%, respectively).
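As a consistency check, the per-case percentages can be related to the case counts in the Results (99 CT-positive and 150 − 99 = 51 CT-negative cases). The counts below are our back-calculation, not figures stated in the source. They reproduce the reported percentages, and the implied numbers of positive calls (82 + 10 = 92 for the visual model and 86 + 7 = 93 for the combined model) match the numbers of cases reported as nodule-positive.

$$
\begin{aligned}
\text{Sensitivity}_{\text{visual}} &= \tfrac{82}{99} \approx 82.8\%, &\quad \text{Sensitivity}_{\text{visual/AI}} &= \tfrac{86}{99} \approx 86.9\%,\\
\text{Specificity}_{\text{visual}} &= \tfrac{41}{51} \approx 80.4\%, &\quad \text{Specificity}_{\text{visual/AI}} &= \tfrac{44}{51} \approx 86.3\%.
\end{aligned}
$$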

This agrees with the lesion detection results of Choi et al. [18], in which radiologists' sensitivity for nodules/masses improved from 78.13% to 88.75%, and with Sim et al. [10], who reported an increase in radiologists' sensitivity from 65.1% to 70.3% and a decline in false-positive findings (from 0.2 to 0.18, P < 0.001) when radiologists re-reviewed radiographs with the deep learning algorithm for pulmonary nodules.

Nam et al. [8] also reported that the AI algorithm showed significantly higher specificity than the pooled radiologists (94.0% vs 78.0%; P = 0.01) and higher sensitivity than the thoracic radiologists (69.6% vs 47.0%; P < 0.001), and that the radiologists' sensitivity and specificity improved significantly when they were aided by the algorithm (P < 0.001 and P = 0.01, respectively).

The current study also showed that the AI model alone had the highest diagnostic performance parameters, exceeding both the visual analysis alone and the combined visual/AI model, with acceptably good performance relative to the CT results. This agrees with Kim et al. [20], who demonstrated overall fair-to-good stand-alone AI performance in determining the presence of referable thoracic abnormalities.

Regarding the nature of the nodules detected, the AI model showed the highest sensitivity for solid nodule localization, while the visual/AI model showed a higher sensitivity for GG nodule localization. Lastly, for the cavitating nodules, the AI and AI/visual models had the same sensitivity which was higher than the visual model alone.

This agrees with Liang et al. [1], who reported that of the 47 pulmonary nodules in their study, 39 (82.97%) were solid and eight (17.02%) were part-solid, and with Nam et al. [8], whose study included 80% solid nodules (149 of 187), 14% subsolid nodules (26 of 187), and 6.4% cavitating nodules (12 of 187).

Regarding nodule location, the right middle lung zone had the highest prevalence in all models, while the left lower lung zone had the lowest. This differs from Nam et al. [8], who found the highest number of nodules in the right lower lobe (RLL) and the lowest in the right middle lobe (RML). However, we agree with them regarding the higher detection rate of the AI compared with readers for obscured nodules; in their study, pooled readers detected more than 10% more hilar and apical nodules when assisted by the algorithm.

The degree of confidence (the probability score) in the current study was significantly higher with the visual/AI model than that with the visual model alone. This was also demonstrated by Liang et al. [1] who reported that for plain chest films with the lowest probability score, radiologists can exclude lesions with greater confidence.

The differences between visual analysis and AI can be explained by blind or obscured areas on chest radiographs, which affect both radiologists and AI algorithms and represent an inherent limitation of radiography; these obscured areas limited visual analysis more. In addition, the deep learning-based AI showed a greater ability than visual assessment to detect less dense and smaller nodules.

To our knowledge, few studies in Africa have examined radiologists' diagnostic performance with and without the aid of AI algorithms. This study has several limitations. First, it was a single-center retrospective study. Second, we studied only pulmonary nodules and did not assess other pulmonary abnormalities. Third, nodule characterization (benign versus malignant) was not among the study aims. Lastly, we studied chest radiographs in the PA projection only, without lateral or other additional projections, which may be one reason for the lower diagnostic performance of the radiologists alone compared with the AI models.

Conclusions

The performance of the AI model in CXR interpretation for nodule and mass detection is acceptable whether used alone or as an assistant to the radiologist, as it enhances the radiologist's confidence and helps in more accurate decision-making in real clinical situations. It increases the diagnostic performance of CXR interpretation, which may positively affect patient morbidity and mortality. AI will not replace radiologists, but it can improve diagnostic performance and accuracy, especially given the rising workload.

Availability of data and materials

Available from the corresponding author on request.

Abbreviations

- AI: Artificial intelligence
- AUC: Area under the curve
- CT: Computed tomography
- CXR: Chest X-ray
- GG: Ground glass
- LDCT: Low-dose computed tomography
- PACS: Picture archiving and communication system

References

1. Liang CH, Liu YC, Wu MT et al (2020) Identifying pulmonary nodules or masses on chest radiography using deep learning: external validation and strategies to improve clinical practice. Clin Radiol 75(1):38–45. https://doi.org/10.1016/j.crad.2019.08.005

2. Yoo H, Kim KH, Singh R et al (2020) Validation of a deep learning algorithm for the detection of malignant pulmonary nodules in chest radiographs. JAMA Netw Open 3(9):e2017135. https://doi.org/10.1001/jamanetworkopen.2020.17135

3. Gavelli G, Giampalma E (2000) Sensitivity and specificity of chest X-ray screening for lung cancer. Cancer 89:2453–2456. https://doi.org/10.1038/sj.bjc.6604351

4. Aberle DR, DeMello S, Berg CD et al (2013) Results of the two incidence screenings in the National Lung Screening Trial. N Engl J Med 369:920–931. https://doi.org/10.1056/NEJMoa1208962

5. De Koning H, Van Der Aalst C, Ten Haaf K et al (2018) Effects of volume CT lung cancer screening: mortality results of the NELSON randomized-controlled population-based trial. J Thorac Oncol 13(10):S185. https://doi.org/10.1016/j.jtho.2018.08.012

6. Bach PB, Mirkin JN, Oliver TK et al (2012) Benefits and harms of CT screening for lung cancer: a systematic review. JAMA 307(22):2418–2429. https://doi.org/10.1001/jama.2012.5521

7. Quadrelli S, Lyons G, Colt H et al (2015) Clinical characteristics and prognosis of incidentally detected lung cancers. Int J Surg Oncol. https://doi.org/10.1155/2015/287604

8. Nam JG, Park S, Hwang EJ et al (2019) Development and validation of deep-learning-based automatic detection algorithm for malignant pulmonary nodules on chest radiographs. Radiology 290(1):218–228. https://doi.org/10.1148/radiol.2018180237

9. Ather S, Kadir T, Gleeson F (2020) Artificial intelligence and radiomics in pulmonary nodule management: current status and future applications. Clin Radiol 75(1):13–19. https://doi.org/10.1016/j.crad.2019.04.017

10. Sim Y, Chung MJ, Kotter E et al (2020) Deep convolutional neural network-based software improves radiologist detection of malignant lung nodules on chest radiographs. Radiology 294(1):199–209. https://doi.org/10.1148/radiol.2019182465

11. Kim JH, Han SG, Cho A et al (2021) Effect of deep learning-based assistive technology use on chest radiograph interpretation by emergency department physicians: a prospective interventional simulation-based study. BMC Med Inform Decis Mak 21:311. https://doi.org/10.1186/s12911-021-01679-4

12. He K, Zhang X, Ren S et al (2016) Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Institute of Electrical and Electronics Engineers, pp 770–778. https://doi.org/10.1109/CVPR.2016.90

13. Yoo H, Kim KH, Singh R et al (2020) Validation of a deep learning algorithm for the detection of malignant pulmonary nodules in chest radiographs. JAMA Netw Open 3(9):e2017135. https://doi.org/10.1001/jamanetworkopen.2020.17135

14. Hwang EJ, Park S, Jin K-N et al, DLAD Development and Evaluation Group (2019) Development and validation of deep-learning-based automated detection algorithm for major thoracic diseases on chest radiographs. JAMA Netw Open 2(3):e191095. https://doi.org/10.1001/jamanetworkopen.2019.1095

15. Yoo H, Lee SH, Arru CD et al (2021) AI-based improvement in lung cancer detection on chest radiographs: results of a multi-reader study in NLST dataset. Eur Radiol 31:9664–9674. https://doi.org/10.1007/s00330-021-08074-7

16. Nam JG, Hwang EJ, Kim DS et al (2020) Undetected lung cancer at posteroanterior chest radiography: potential role of a deep learning–based detection algorithm. Radiol Cardiothorac Imaging 2(6):e190222. https://doi.org/10.1148/ryct.2020190222

17. Hendrix W, Rutten M, Hendrix N et al (2023) Trends in the incidence of pulmonary nodules in chest computed tomography: 10-year results from two Dutch hospitals. Eur Radiol. https://doi.org/10.1007/s00330-023-09826-3

18. Choi SY, Park S, Kim M et al (2021) Evaluation of a deep learning-based computer-aided detection algorithm on chest radiographs: case-control study. Medicine 100(16):e25663. https://doi.org/10.1097/MD.0000000000025663

19. Jin KN, Kim EY, Kim YJ et al (2022) Diagnostic effect of artificial intelligence solution for referable thoracic abnormalities on chest radiography: a multicenter respiratory outpatient diagnostic cohort study. Eur Radiol 32(5):3469–3479. https://doi.org/10.1007/s00330-021-08397-5

20. Kim EY, Kim YJ, Choi WJ et al (2021) Performance of a deep-learning algorithm for referable thoracic abnormalities on chest radiographs: a multicenter study of a health screening cohort. PLoS ONE. https://doi.org/10.1371/journal.pone.0246472

Acknowledgements

Not applicable.

Funding

The authors received no funding and declare no source of financial interest. All authors read and approved the final version of the submitted manuscript. The authors declare that this is original work that has not been published previously, is not under consideration for publication elsewhere, and has been submitted solely to the Egyptian Journal of Radiology and Nuclear Medicine. All authors declare no subject overlap with previously published work.

Author information


Contributions

SF contributed to the idea, organization, data collection, and writing. AMO contributed to the idea and writing and is the corresponding author. SMA helped with data collection and writing. ASA performed the statistical analysis and helped with writing and revision.


Ethics declarations

Ethics approval and consent to participate

Ethics approval was obtained from the institutional ethics committee (Faculty of Medicine, Ain Shams University); because the study was retrospective, written informed consent was waived.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Farouk, S., Osman, A.M., Awadallah, S.M. et al. The added value of using artificial intelligence in adult chest X-rays for nodules and masses detection in daily radiology practice. Egypt J Radiol Nucl Med 54, 142 (2023). https://doi.org/10.1186/s43055-023-01093-y


DOI: https://doi.org/10.1186/s43055-023-01093-y