Abstract

Digital data processing has revolutionized medical documentation and enabled the aggregation of patient data across hospitals. Initiatives such as the AO Foundation's collection on fracture treatment (AO Sammelstudie, 1986), the Major Trauma Outcome Study (MTOS) on survival, and the Trauma Audit and Research Network (TARN) pioneered multi-hospital data collection. Large trauma registries, such as the German Trauma Registry (TR-DGU), helped improve evidence levels but were still constrained by predefined data sets and limited physiological parameters. The improved understanding of pathophysiological reactions influenced decision making in fracture care and led to the development of patient-tailored dynamic approaches such as the Safe Definitive Surgery algorithm. In the future, artificial intelligence (AI) may provide further steps by potentially transforming fracture recognition and/or outcome prediction. The evolution towards flexible decision making and AI-driven innovations may be of further help. The current manuscript summarizes the development of big data from local databases and subsequent trauma registries to AI-based algorithms, such as the Parkland Trauma Index of Mortality and the IBM Watson Trauma Pathway Explorer.


Introduction

Along with the availability of hospital administration systems, the options for big data management have substantially improved [1]. Modern patient documentation systems are usually able to combine general clinical information covering the hospital course, laboratory data, and other data sets, such as core radiological data [2]. Moreover, some recent systems offer the option of developing apps to describe clinical syndromes and to provide further diagnostic aids [3, 4].

Registries have frequently been generated alongside certification processes and have provided large volumes of information from multiple hospitals of a given country. The range of their data sets is usually limited, as they focus on certain clinically relevant aspects (registries for trauma, geriatric trauma, or other diseases). As a result, registry-based databases frequently provide one-directional information and can be used only for their given purpose [5, 6]. The most extensive data sets are mostly generated by insurance companies [7]. Some of these combine outcome data after trauma or other diseases to assess the prognosis of medical treatment [8]. Others primarily focus on selecting quality programs, and some were even developed to address reimbursement issues [9].

Before registries were developed, physicians collected data in several ways, and some focused on the results of their own treatments. In this context, the AO Foundation was the first institution to collect data sets that went beyond their original purpose. The first so-called "femur fracture collection study" summarized data from 1127 patients with femoral shaft fractures treated with intramedullary nails. Although this study was designed to document healing problems after intramedullary fixation, its results demonstrated an unusually high rate of pulmonary complications, namely ARDS and pulmonary embolism, in young patients after isolated femur fractures. This data set triggered the discussion about the influence of fracture fixation on the development of complications. Of note, this discussion initiated a large number of publications about 10 years before the first article from the Major Trauma Outcome Study and 15 years before the concept of damage control orthopaedics was introduced [10].

Other data collections are designed to provide data for patient assessment and teaching purposes or to develop guidelines and management tools for future use [11]. Furthermore, the quality of care following secondary referral has recently been assessed [12]. Rare injury combinations and their management have also been evaluated [13], even within the frame of international comparisons [14]. Finally, quality assessment in terms of surgeons' experience and outcomes has been performed.

Another new approach that generates big imaging data sets has been suggested to improve the quality of surgical studies, which have been shown to be biased by the skill level of the performing surgeons [15]. In this approach, complete intraoperative documentation of surgical procedures is disseminated in a standardized way. Online access to and electronic management of these data sets have been suggested by a group of experienced surgeons [16], allowing for post hoc analysis by artificial intelligence (AI) [17]. Moreover, recent progress in biochemical and molecular biological analytics provides big data sets that characterize the genomic, transcriptomic, and metabolomic status of patients. When combined with clinical data, these translational approaches are intended to improve prognostic accuracy, allowing for individual risk stratification of trauma patients and safe definitive surgery decisions [18, 19].

The current article summarizes the development of options for generating big data in the clinical setting of trauma patients. More specifically, it analyzes the most pertinent data sets used for trauma patients. Moreover, it compares registries with local data sets and analyzes the role of these data sets in developing the concept of safe definitive surgery in trauma patients. Finally, it highlights a new approach to surgical quality control (ICUC) and the scores generated from the initiatives listed above, and it provides a brief outlook on future AI options [20].

Methods

Inclusion criteria

a. Big data were included if they were generated from more than 500 patients or, where patient numbers were limited, if the combination of multiple time points and parameters in a given data set exceeded 50,000.

b. Systematic literature review:

1) Studies that investigate registry data and data beyond what a single facility can collect;

2) Studies providing reliable information on diagnostic tools, including scoring systems, to facilitate profiling of injuries;

3) Studies that provide information to assess injury and fracture management.

Case reports, defined as studies reporting data on fewer than 5 patients, were excluded. Results from meeting abstracts were also excluded.
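Criterion a. can be read as a simple decision rule. The sketch below is only an illustration: it assumes that "the combination of multiple time points and parameters" means the total number of recorded values (patients × parameters × time points), and the function name is hypothetical.

```python
def qualifies_as_big_data(n_patients: int, n_parameters: int, n_timepoints: int) -> bool:
    """Hypothetical check of inclusion criterion a.

    Assumption: the 'combination of multiple time points and parameters' is
    read as the total number of recorded values, i.e.
    patients x parameters x time points.
    """
    if n_patients > 500:
        return True
    # Smaller cohorts qualify only if they hold more than 50,000 recorded values.
    return n_patients * n_parameters * n_timepoints > 50_000


# 300 patients with 20 laboratory values at 10 time points = 60,000 values.
print(qualifies_as_big_data(300, 20, 10))  # True
# 100 patients with 10 values at 10 time points = 10,000 values.
print(qualifies_as_big_data(100, 10, 10))  # False
```

Under this reading, a small cohort with dense serial measurements can qualify, whereas a small cohort documented only at admission cannot.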

The approach to generate the data was two-fold:

First, the authors performed a systematic literature review of the Medline database, with the language selection limited to English or German. The authors applied the eligibility criteria for multiply injured patients described above. Original articles were included if published between Jan 1, 1985 and May 15, 2023. No language restrictions were applied. Data sources included MEDLINE, Embase, and the Web of Science. Disagreements were resolved by discussion.

Second, an expert analysis of the concepts of known data analysis options was performed. All authors are experts in the field of data analysis. The expert analysis was then combined with the predefined data interpretation to describe how experienced clinicians respond to technical progress in data management.

The authors' involvement relevant to this manuscript is as follows:

AS is an expert in the development of a hospital index to monitor the risk of mortality (Parkland Trauma Index of Mortality) [21]. Moreover, he developed a frame to facilitate the reduction of pelvic fractures (Starr frame) [22].

BG is vice director of the AO Research Institute Davos, Switzerland. He is also in charge of the Biomedical Development program at the Institute and has been involved in multiple studies with a multi-center design.

GAW has been a leader in the network of the German Trauma System, where he chaired a cross-border trauma network whose organizer and referral hospital was the Schwarzwald-Baar Hospital (Level 1 trauma center). At the hospital level, he built a database to study posttraumatic complications [23]. Moreover, he recently developed a new method for intraoperative visualization and documentation in pelvic surgery based on mixed reality technology [24].

HCP is a founding member of the German Trauma Registry and was involved in the development of scores and concepts that were defined on the basis of a big data set. Among these are the Thoracic Trauma Score (TTS) [25], the evidence-based definition of polytrauma [26], the damage control concept [27], and the Safe Definitive Surgery concept [28].

Definitions

Big data were defined as digitally available data, as described in the inclusion criteria.

The Injury Severity Score was used to determine injury severity.

The Glasgow Coma Scale [29] was utilized to assess head injuries, if available.

For the definition of multiple organ failure (MOF), the Sequential Organ Failure Assessment (SOFA) score was used, or other scores as indicated in the given manuscript [30].

Sepsis was diagnosed in patients having three or more points in a specific organ with at least two organs failing at the same time.
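The sepsis rule above can be applied to the per-organ SOFA subscores recorded at a single time point. The following is a minimal sketch under an interpretation of our own: an organ is counted as failing when its subscore is 3 or more, and at least two such organs must fail at the same time. The organ labels and the function name are illustrative.

```python
from typing import Mapping

# The six SOFA organ systems, each scored 0-4 points.
SOFA_ORGANS = ("respiratory", "coagulation", "liver", "cardiovascular", "cns", "renal")


def meets_sepsis_rule(subscores: Mapping[str, int]) -> bool:
    """Apply the sepsis rule stated above to one time point.

    Assumption: an organ counts as failing when its SOFA subscore is >= 3,
    and at least two such organs must fail at the same time point.
    """
    unknown = set(subscores) - set(SOFA_ORGANS)
    if unknown:
        raise ValueError(f"unknown organ systems: {sorted(unknown)}")
    failing = [organ for organ, points in subscores.items() if points >= 3]
    return len(failing) >= 2


day_3 = {"respiratory": 3, "coagulation": 1, "liver": 0,
         "cardiovascular": 4, "cns": 2, "renal": 1}
print(meets_sepsis_rule(day_3))  # True: respiratory and cardiovascular fail
```

The rule is evaluated separately for each time point, so it captures organs that are failing concurrently and ignores failures that occur on different days.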

In-hospital mortality was defined as the death of a patient during treatment in the primary care hospital.

Inclusion criteria

Studies were included if they analyzed data from more than 100 patients from a single institution or more than 300 patients from a multicenter database, if they were generated by registries, or if their size was large enough to develop a new clinical concept or a definition of a disease.

Exclusion criteria

Studies were excluded if their results were not reconfirmed after publication, or if they were inconclusive.

Distribution of data collections

- Local distribution was defined as data collection at the hospital level.
- Regional distribution was defined as data collection among several hospitals.
- Country-wide distribution was defined as data collection within the borders of a given country.
- Registry-based distribution was defined as data collection within a defined registry, independent of regions or borders.

Data collection processes were further divided according to their purpose: collecting general patient data, documenting local injury severity, predicting the hospital course (complications, sepsis), predicting general outcome after trauma, or developing scoring systems.

Artificial narrow intelligence (narrow AI; ANI)

Narrow AI (ANI) is AI programmed to perform a single task (e.g. playing chess). ANI systems can perform a task in real time, but they draw information only from a specific data set. ANI systems process data and complete tasks at a significantly quicker pace than any human being can. Their main purpose is to enable humans to improve overall productivity, efficiency, and quality of life. ANI systems such as IBM's Watson, for example, can harness the power of AI to help doctors make data-driven decisions [2].

Artificial general intelligence (strong AI; AGI)

Artificial General Intelligence (AGI) or "strong" AI refers to machines that exhibit human intelligence. AGI can successfully perform any intellectual task that a human being can perform. This sort of AI can be seen in films such as "Her", in which humans interact with machines and operating systems that are conscious, sentient, and driven by emotion.

Artificial super intelligence (super AI; ASI)

Artificial Super Intelligence (ASI) is defined as "any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest" (Nick Bostrom). Such an intellect would surpass human intelligence in all aspects, such as creativity, general wisdom, and problem-solving [31].

Results

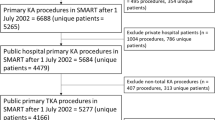

The first large database of trauma patients exceeding the thresholds defined above was the AO collection of patients with femur fractures (Table 1a), published in 1986, i.e. about a decade before the MTOS was generated. The initial purpose of this database was to document the healing process in patients who sustained isolated femur fractures. The AO subsequently performed multiple multicenter studies on different topics, as indicated in Table 1a. All these studies were designed, funded, and conducted by the AO without external funding. They were followed by multiple other clinical studies and by the FROST initiative, which is about to be completed and includes all patients with tibial fractures regardless of the type of fixation. Likewise, the periprosthetic fracture registry documents patients with this kind of geriatric injury (Table 1a).

The Major Trauma Outcome Study (MTOS) [32] summarized information on trauma patients (Table 1b). It collected data from hospitals in the USA, and its results proved helpful for several reasons. First, they helped develop criteria to identify the hospitals in which trauma patients should be concentrated. Second, the MTOS helped develop new scores to assess patients early and to reduce the problems that occurred when using the Injury Severity Score based on the Abbreviated Injury Scale (AIS/ISS). Although the ISS was developed to score patients with life-threatening injuries, it became evident that, because only the maximum AIS within each body region is counted, a certain subset of patients was underestimated, namely those with multiple extremity fractures [33]. This was the main reason why the New ISS (NISS) was developed [34].
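The difference between the ISS and the NISS can be made concrete with their standard definitions. The ISS sums the squares of the highest AIS in each of the three most severely injured body regions, while the NISS sums the squares of the three most severe injuries regardless of region; by convention, any AIS 6 injury sets either score to 75. The sketch below uses a hypothetical patient with three extremity fractures, and the region labels are illustrative.

```python
from typing import Dict, List, Tuple

# Each injury is (ISS body region, AIS severity 1-6).
Injury = Tuple[str, int]


def iss(injuries: List[Injury]) -> int:
    """Injury Severity Score: sum of squares of the highest AIS in the three
    most severely injured body regions. Any AIS 6 injury sets the score to 75."""
    if any(ais == 6 for _, ais in injuries):
        return 75
    worst_per_region: Dict[str, int] = {}
    for region, ais in injuries:
        worst_per_region[region] = max(ais, worst_per_region.get(region, 0))
    top_three = sorted(worst_per_region.values(), reverse=True)[:3]
    return sum(a * a for a in top_three)


def niss(injuries: List[Injury]) -> int:
    """New ISS: sum of squares of the three most severe injuries, regardless of region."""
    if any(ais == 6 for _, ais in injuries):
        return 75
    top_three = sorted((ais for _, ais in injuries), reverse=True)[:3]
    return sum(a * a for a in top_three)


# Hypothetical patient: three AIS 3 extremity fractures and an AIS 2 chest injury.
patient = [("extremities", 3), ("extremities", 3), ("extremities", 3), ("chest", 2)]
print(iss(patient))   # 13 (3^2 + 2^2): two of the fractures are ignored
print(niss(patient))  # 27 (3^2 + 3^2 + 3^2)
```

Because the ISS retains only one value per body region, two of the three fractures do not contribute at all, which is the underestimation the NISS was designed to correct.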

On a separate note, it was thought that physiological data should be added. This led to the development of "A Severity Characterization of Trauma" (ASCOT) [35], which provided a physiological and anatomical characterization of injury severity. This score combined emergency department admission values of the Glasgow Coma Scale, systolic blood pressure, respiratory rate, patient age, and AIS-85 anatomic injury scores to reduce the shortcomings of the ISS [35].

Regardless of the issues described above, the ISS has enabled authorities to classify and monitor hospitals by level of care and to focus on those that require a larger patient volume and incur the associated overhead costs. These regional distributions involved data sharing among several hospitals or hospital systems and led to the development of trauma systems and their associated certifications [36].

These developments were followed by country-wide distribution. In the USA, the National Trauma Database was strictly limited to the country of development [37]. In Europe, Great Britain developed the Trauma Audit and Research Network [38], and the registry that originated in Germany [39] was the first to document across borders, i.e. in Belgium, the Netherlands, Switzerland, and other countries, including some outside Europe (Table 1b).

These registry-based distributions led to distinct data sets that are frequently used for quality control and for the assessment of mortality rates. Moreover, they were used in the consensus process for developing a definition of polytrauma. In this consensus process, a data set of more than 28,000 patients was used to test a hypothesis based on a proposal developed by experts [26] (Table 2). Table 2 lists scoring systems developed to address clinical problems [40]. The underlying databases are also documented [41]. As these have been described in various publications, we refrain from describing them in detail here [42,43,44].

Table 3 summarizes surgical tools for decision making in fracture care. The principles of surgical management of major fractures in multiply injured patients are described and related to the available parameters and data size [42, 45]. The use of databases can also be seen in the development of care for major fractures, where a trend emerged towards using multiple parameters at multiple time points after admission to achieve the best possible patient safety. Subsequently, threshold levels could be calculated for distinct parameters that separated patients by mortality rate and other clinical outcome parameters [43] (Table 3).
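The text does not state how these threshold levels were derived. One common approach, which we substitute here purely for illustration, is to choose the cut-off that maximizes Youden's J (sensitivity + specificity − 1). The lactate values and outcomes below are hypothetical, and the function name is our own.

```python
from typing import Sequence, Tuple


def best_threshold(values: Sequence[float], died: Sequence[bool]) -> Tuple[float, float]:
    """Return (cut-off, J) maximizing Youden's J = sensitivity + specificity - 1.

    A value >= cut-off is classified as 'predicts death'. Illustrative only;
    not necessarily the method used in the cited studies.
    """
    n_pos = sum(died)
    n_neg = len(died) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both survivors and non-survivors are required")
    best_cut, best_j = float("nan"), -1.0
    for cut in sorted(set(values)):
        tp = sum(1 for v, d in zip(values, died) if v >= cut and d)      # deaths flagged
        tn = sum(1 for v, d in zip(values, died) if v < cut and not d)   # survivors cleared
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:
            best_cut, best_j = cut, j
    return best_cut, best_j


# Hypothetical admission lactate values (mmol/L) and in-hospital deaths.
lactate = [1.2, 1.8, 2.1, 2.5, 3.0, 4.2, 5.1, 6.3]
died = [False, False, False, False, True, False, True, True]
cut, j = best_threshold(lactate, died)
print(f"cut-off {cut} mmol/L, J = {j:.2f}")
```

With serial measurements, the same search can be repeated for each time point after admission, which is one way threshold levels might be derived at multiple time points.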

Figure 1. Direct access to the online patient chart to combine patient data at a local level can be achieved with a data warehouse and requires constant data exchange (unpublished data from Zurich University Hospital, mentioned in Niggli et al., 2021).

Figure 2 depicts the Sankey visualization tool of IBM Watson. It uses acute data extracted from the hospital information system. However, these data are not collected to verify a specific parameter. Instead, the tool uses them to visualize the expected hospital course. The approach is derived from the development of turbines, where a developer used pressure and flow diagnostics to improve the thrust of turbines of various shapes. Such Sankey diagrams can be used in various fields of medicine. Figure 2 shows a Sankey projection based on approximately 10 clinical laboratory values that determines a risk scenario by comparison with a previous large group of more than 1000 patients with polytrauma [1] (Fig. 2). It projects the possible clinical course across the determinants listed under "pathway", namely coagulation, ATLS shock severity, surgical strategy, and outcome. Although these data do not justify outcome prediction, the tool may serve as a valuable means of simulating clinical scenarios for physicians in training and interdisciplinary groups [46].

Example of the IBM Watson Health TRAUMA explorer, developed from a database of 3650 patients. The IBM Watson Health TRAUMA explorer can access the hospital database system. Explanation: Level A: On the left side, the patient status can be assessed and the background population of comparable patients can be selected (geriatric versus young). The surgical tactic can then be selected, and the system provides information about the outcome seen in the selected patient group. Level B: The underlying patient population can be adjusted, e.g. by age range or ISS range. This determines the initial data of the pathway. Level C (visualization and Sankey diagram): The outcome can vary according to different treatment options (resuscitation, surgical strategy, etc.). Currently, the available endpoints are mortality, sepsis, and SIRS.
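The internals of the IBM tool are not described here. The following sketch shows only the general principle behind a Sankey pathway: each patient's category at successive stages (in the order of the "pathway" determinants named above) is tallied into weighted links, and these weights are drawn as band widths. The patients and category labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def sankey_links(pathways: List[List[str]]) -> Dict[Tuple[str, str], int]:
    """Count patient flows between consecutive pathway stages.

    Each pathway lists one patient's category at each stage, e.g.
    [coagulation, shock severity, surgical strategy, outcome]. The counts are
    the link weights (band widths) of a Sankey diagram. Labels must be unique
    across stages, otherwise nodes from different stages would merge.
    """
    links: Counter = Counter()
    for stages in pathways:
        for source, target in zip(stages, stages[1:]):
            links[(source, target)] += 1
    return dict(links)


# Hypothetical patients; category labels are illustrative only.
patients = [
    ["coagulopathy", "shock III", "damage control", "survived"],
    ["coagulopathy", "shock III", "damage control", "died"],
    ["normal coagulation", "shock I", "early total care", "survived"],
    ["normal coagulation", "shock I", "early total care", "survived"],
]
for (source, target), n in sorted(sankey_links(patients).items()):
    print(f"{source} -> {target}: {n}")
```

Filtering the input list (e.g. by age or ISS range) before counting corresponds to changing the underlying patient population, as described for Level B.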

Figure 3 shows a clinical scenario assessed with the Parkland Trauma Index of Mortality. The index calculates the risk of mortality online and runs in the background of the hospital documentation system. Acute patient data are thus collected to estimate the risk of in-hospital mortality [47].

Discussion

Before digital data processing became available, clinicians usually reported on rather small numbers of patients, and almost all reports summarized personal experience, corresponding to evidence level IV. Along with the availability of digital data and their processing, data generated in the local hospital setting were usually documented in local databases. Among the first attempts to collect data across hospitals was the Major Trauma Outcome Study (MTOS), which collected data from multiple regions in the USA. Documentation was performed by study nurses and other documentation experts [48].

Digital documentation subsequently improved the options for processing information across hospitals, and such processing became routine. Trauma registries followed the same principles, as seen in Great Britain with the development of a nationwide database, the Trauma Audit and Research Network (TARN). Within Europe, the largest registry was the German Trauma Registry, which was the first to document beyond national borders, mostly within European countries [49].

One drawback of trauma registries has been that information beyond the predefined data sets is not available. Therefore, research questions that go beyond these data sets cannot be answered. In addition, quality-controlled data are usually not available until information has been obtained from every participating hospital, which usually takes about a year after data collection.

In trauma and orthopaedic care for acute major fractures, surgical decision making is an important factor in ensuring an uneventful hospital course. The evolution of the principles for stabilizing major fractures may serve as a good example. The concepts moved from an "Early Total Care versus Damage Control Orthopaedics" discussion towards "Early Appropriate Care", followed by "Safe Definitive Surgery" (Table 3). The development of these concepts was made possible by specialized documentation of parameters used to determine the effect of surgery on the clinical course. These parameters covered several pathogenetic cycles, namely "shock", "coagulopathy", "hypothermia", and "severe soft tissue injury".

It was initially believed that inflammatory changes are set at the time of injury and can be aggravated if an additional insult of clinical impact is too strong (second hit theory). Subsequently, the genetic storm theory hypothesized that the initial impact of trauma sets up the patient for any possible complications, and the influence of therapy was doubted [50]. Later, however, it became obvious that numerous secondary effects, e.g. infectious stimuli (pathogen-associated molecular patterns, PAMPs), are able to influence the further course.

The development of these different concepts went along with improved evidence levels, i.e. from level IV to level II. The data warehouse concept (Table 2), although based on a single center only, used deductive information from a hospital database that covers all laboratory parameters and clinical data [51].

On a different level, prediction of complications has been a very important issue. In early attempts, expert opinion was used to specify diagnoses. One of the most important for an uneventful hospital course was the consensus on pulmonary failure, i.e. adult respiratory distress syndrome, which led to a consensus definition of ARDS. In trauma patients, the severity of chest trauma can be crucial in determining the risk of pulmonary complications. Although it was based on a local database, the Thoracic Trauma Score (TTS) has been shown to predict the development of ARDS [41]. These scores required the availability of certain isolated factors but did not account for time-dependent changes in serial parameters. This can be overcome by direct access to hospital data, which is currently achieved by only two projects.

The WATSON visualization tool was developed by IBM to visualize possible upcoming complications during the hospital course. It is accepted as a teaching tool for residents in training and can use either a fixed database or the hospital system running in the background [11]. The visualization originally derives from turbine development, where it was used to document how different shapes lead to different types of thrust and air outflow. It was subsequently adapted to depict the course of patients for different indications. The trauma tool was implemented between 2018 and 2022; the first 2 years served for development, followed by application within the hospital system and use for teaching and research [2]. It covers two different layers of information. First, the actual data of a patient are included, covering age, injury severity, and other basic laboratory values (Fig. 2).

The tool developed in Dallas, the Parkland Trauma Index of Mortality (PTIM) [21], was installed around the same time and also uses a hospital information system (EPIC). The PTIM is a machine learning algorithm that uses emergency room data to predict mortality within 48 h in trauma patients during the first 3 days of their hospitalization. As a novel feature, the tool is integrated directly into the electronic health record of the hospital, extracts the data (i.e. 23 parameters) automatically, and calculates the PTIM score, thus requiring no input from the clinician (Fig. 3). Similar to the WATSON visualization tool, the PTIM may be used in the future to guide decision making for important treatment strategies.

One may argue that the large data sets created by registries provide sustained evidence, as reflected by their country-wide distribution. However, registries are also associated with well-described drawbacks. As deductive data sets, their content has usually been agreed upon by an expert group prior to testing and thus may be subject to limitations. Moreover, the parameters usually focus on basic information describing patients (their injury distribution and injury severity) rather than on physiology. If physiological data are documented, they mainly comprise parameters indicative of haemorrhage and cardiovascular status, and sometimes oxygenation, but these are usually limited to the admission period, which precludes meaningful conclusions regarding the effect of treatment [52]. In addition, other cofactors that may affect outcome are not available. These may include comorbidities beyond diabetes mellitus and similar conditions, or parameters describing the clinical course. This is important, as parameters thought to be of sustained value for the severity of trauma and haemorrhage, such as lactate and pH, are modified by treatment within the first 24 h after admission. Dezman and colleagues convincingly demonstrated that serial lactate levels far outweigh the predictive ability of admission lactate values [53]. Furthermore, linking data on injury severity with clinical data is difficult to achieve. Therefore, if registries alone had been used to develop treatment concepts, they would have provided insufficient information.
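To illustrate why serial values carry information that an admission value alone cannot, the sketch below computes lactate clearance, a commonly used summary of serial lactate: (lactate at admission − lactate at time t) / lactate at admission × 100. We do not claim that this is the metric used by Dezman and colleagues. Both patients are hypothetical and share the same admission lactate.

```python
from typing import List, Tuple


def lactate_clearance(series: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Percent lactate clearance relative to the admission value.

    `series` holds (hours after admission, lactate in mmol/L) pairs, with the
    admission measurement first. Clearance at time t is
    (lactate_0 - lactate_t) / lactate_0 * 100; positive values mean falling lactate.
    """
    _, baseline = series[0]
    if baseline <= 0:
        raise ValueError("admission lactate must be positive")
    return [(hours, (baseline - value) / baseline * 100) for hours, value in series[1:]]


# Hypothetical patients with identical admission lactate but different courses.
responder = [(0, 6.0), (6, 3.0), (24, 1.5)]
non_responder = [(0, 6.0), (6, 5.7), (24, 6.6)]
print(lactate_clearance(responder))      # [(6, 50.0), (24, 75.0)]
print(lactate_clearance(non_responder))  # about 5% at 6 h, about -10% at 24 h
```

A registry that stores only the admission value would record these two patients identically, although their courses within the first 24 h differ fundamentally.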

In this context, it may be worth considering the importance of local data collection at the hospital level. Local data sets allow a much more complex set of individual data to be collected, including laboratory values and organ-specific parameters. This approach has been used to test large local data sets, e.g. for the development of scores such as the Moore score for abdominal injuries and the Thoracic Trauma Score for chest injuries, and for the Watson Analytics trauma tool (Table 2). A prerequisite for using such a data set is the ability to extract data from the hospital system, as can be done with a data warehouse project (Fig. 1). This may allow clinically relevant data to be selected on admission and extended throughout the hospital course. Local databases have been used to test the clinical relevance of subclinical parameters relevant to the risk of complications. These have helped to clarify the importance of inflammatory cascade reactions for the development of clinical complications. The prediction of SIRS, ARDS, and multiple organ failure was enabled by testing certain variables that had to be measured in specifically identified trauma patients [54] (Table 2).

Limitations

We are aware that this review may not be complete and represents a selection with a trauma-specific background. Other specialties have evidently generated larger and more focused registries that are likewise associated with certification processes (e.g. certain oncologic diseases, ICU data regarding sepsis). Among the surgical subspecialties, our review may be limited because it deals predominantly with an orthopaedic background rather than focusing on truncal injuries. Moreover, its scope may be limited because established search terms for this particular topic are not readily available. Finally, the focus of our review may have been shaped by the experience of the authors. However, since all of them have contributed to the topic in various ways, we feel that this review may still represent many general trends in the response to the availability of big data.

Conclusions

The parallel development of several online tools to assess patients may suggest that acute data acquisition will be helpful in the management of patients with complex and rapidly changing clinical situations. The safe definitive surgery concept, with its inclusion of multiple pathogenetic pathways, can be regarded as a development towards flexible decision making, compared with previous dichotomous approaches.

In addition, AI has made vast progress. While the projects listed above, PTIM and WATSON, both represent narrow AI, further steps are to be expected. Fracture recognition may well be among the easiest achievements of AI in the near future. In addition, machine learning might outgrow the current options for outcome prediction. It will be interesting to see how fast these changes occur.

Data availability

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

References

Mica L, Niggli C, Bak P, Yaeli A, McClain M, Lawrie CM, Pape HC. Development of a Visual Analytics Tool for Polytrauma patients: Proof of Concept for a New Assessment Tool using a multiple layer Sankey Diagram in a single-center database. World J Surg. 2020;44:764. https://doi.org/10.1007/s00268-019-05267-6

Niggli C, Vetter P, Hambrecht J, Niggli P, Vomela J, Chaloupka R, Pape HC, Mica L. IBM WATSON Trauma Pathway Explorer© as a predictor for Sepsis after Polytrauma - Is procalcitonin useful for identifying septic polytrauma patients? J Surg Res (Houst). 2022;5:637. https://doi.org/10.26502/jsr.10020272

Regazzoni P, Giannoudis PV, Lambert S, Fernandez A, Perren SM. The ICUC(®) app: can it pave the way for quality control and transparency in medicine? Injury. 2017;48:1101. https://doi.org/10.1016/j.injury.2017.04.058

Hasler RM, Rauer T, Pape HC, Zwahlen M. Inter-hospital transfer of polytrauma and severe traumatic brain injury patients: retrospective nationwide cohort study using data from the Swiss Trauma Register. PLoS ONE. 2021;16:234. https://doi.org/10.1371/journal.pone.0253504. e0253504.

Hartel MJ, Jordi N, Evangelopoulos DS, Hasler R, Dopke K, Zimmermann H, Exadaktylos AK. Optimising care in a Swiss University Emergency Department by implementing a multicentre trauma register (TARN): report on evaluation, costs and benefits of trauma registries. Emerg Med J. 2011;28:221. https://doi.org/10.1136/emj.2009.083030

Tjardes T, Meyer LM, Lotz A, Defosse J, Hensen S, Hirsch P, Salge TO, Imach S, Klasen M, Stead S. Anwendung von Systemen der künstlichen Intelligenz im Schockraum [Application of artificial intelligence systems in the trauma bay]. Die Unfallchirurgie. 2023;5:1.

Sulzberger L, Schmidt D, Mantovani GL, Pfäffli M, Lich T. Retrospective benefit estimation of motorcycle ABSs based on Swiss insurance data. Traffic Inj Prev. 2023;24:423. https://doi.org/10.1080/15389588.2023.2199111

Branca-Dragan S, Koller MT, Danuser B, Kunz R, Steiger J, Hug BL. Evolution of disability pension after renal transplantation: methods and results of a database linkage study of the Swiss transplant cohort study and Swiss disability insurance. Swiss Med Wkly. 2021;151(23 w30027). https://doi.org/10.4414/smw.2021.w30027

Gross T, Morell S, Scholz SM, Amsler F. The capacity of baseline patient, injury, treatment and outcome data to predict reduced capacity to work and accident insurer costs - a Swiss prospective 4-year longitudinal trauma centre evaluation. Swiss Med Wkly. 2019;149:21w20144. https://doi.org/10.4414/smw.2019.20144

Ecke H, Faupel L, Quoika P. Considerations on the time of surgery of femoral fractures. AO Sammelstudie Unfallchirurgie. 1985;2:89.

Mica L, Pape HC, Niggli P, Vomela J, Niggli C. New Time-related insights into an Old Laboratory parameter: early CRP discovered by IBM Watson Trauma Pathway Explorer© as a predictor for Sepsis in Polytrauma patients. J Clin Med. 2021;10:21. https://doi.org/10.3390/jcm10235470

Halvachizadeh S, Störmann PJ, Özkurtul O, Berk T, Teuben M, Sprengel K, Pape HC, Lefering R, Jensen KO. Discrimination and calibration of a prediction model for mortality is decreased in secondary transferred patients: a validation in the TraumaRegister DGU. BMJ Open. 2022;12(21). https://doi.org/10.1136/bmjopen-2021-056381. e056381.

Hax J, Halvachizadeh S, Jensen KO, Berk T, Teuber H, Di Primio T, Lefering R, Pape HC, Sprengel K, TraumaRegister DGU. Curiosity or underdiagnosed? Injuries to Thoracolumbar Spine with concomitant trauma to Pancreas. J Clin Med. 2021;10. https://doi.org/10.3390/jcm10040700

Raghupathy A, Nienaber CA, Harris KM, Myrmel T, Fattori R, Sechtem U, Oh J, Trimarchi S, Cooper JV, Booher A, et al. Geographic differences in clinical presentation, treatment, and outcomes in type a acute aortic dissection (from the International Registry of Acute Aortic dissection). Am J Cardiol. 2008;102:1562. https://doi.org/10.1016/j.amjcard.2008.07.049

Canal C, Kaserer A, Ciritsis B, Simmen HP, Neuhaus V, Pape HC. Is there an influence of Surgeon’s experience on the clinical course in patients with a proximal femoral fracture? J Surg Educ. 2018;75:1566. https://doi.org/10.1016/j.jsurg.2018.04.010

Regazzoni P, Giannoudis PV, Lambert S, Fernandez A, Perren SM. The ICUC(®) app: can it pave the way for quality control and transparency in medicine? Injury. 2017;48:1101. https://doi.org/10.1016/j.injury.2017.04.058

Regazzoni P, Liu WC, Jupiter JB, Fernandez dell’Oca AA. Complete intra-operative Image Data including 3D X-rays: a New Format for Surgical Papers needed? J Clin Med. 2022;11. https://doi.org/10.3390/jcm11237039

Rittirsch D, Schoenborn V, Lindig S, Wanner E, Sprengel K, Günkel S, Blaess M, Schaarschmidt B, Sailer P, Märsmann S, et al. An Integrated Clinico-Transcriptomic Approach identifies a central role of the Heme Degradation Pathway for Septic complications after Trauma. Ann Surg. 2016;264:1125. https://doi.org/10.1097/sla.0000000000001553

Pape HC, Moore EE, McKinley T, Sauaia A. Pathophysiology in patients with polytrauma. Injury. 2022;53:2400. https://doi.org/10.1016/j.injury.2022.04.009

Ramesh AN, Kambhampati C, Monson JR, Drew PJ. Artificial intelligence in medicine. Ann R Coll Surg Engl. 2004;86:334. https://doi.org/10.1308/147870804290

Starr AJ, Julka M, Nethi A, Watkins JD, Fairchild RW, Rinehart D, Park C, Dumas RP, Box HN, Cripps MW. Parkland Trauma Index of Mortality: real-time predictive model for Trauma patients. J Orthop Trauma. 2022;36:280. https://doi.org/10.1097/bot.0000000000002290

Lefaivre KA, Starr AJ, Reinert CM. Reduction of displaced pelvic ring disruptions using a pelvic reduction frame. J Orthop Trauma. 2009;23:299. https://doi.org/10.1097/BOT.0b013e3181a1407d

Rittirsch D, Schoenborn V, Lindig S, Wanner E, Sprengel K, Günkel S, Schaarschmidt B, Märsmann S, Simmen HP, Cinelli P, et al. Improvement of prognostic performance in severely injured patients by integrated clinico-transcriptomics: a translational approach. Crit Care. 2015;19:414. https://doi.org/10.1186/s13054-015-1127-y

Wanner GA, Heining SM, Raykov V, Pape HC. Back to the future - augmented reality in orthopedic trauma surgery. Injury. 2023;54:110924. https://doi.org/10.1016/j.injury

Pape HC, Remmers D, Rice J, Ebisch M, Krettek C, Tscherne H. Appraisal of early evaluation of blunt chest trauma: development of a standardized scoring system for initial clinical decision making. J Trauma. 2000;49:496. https://doi.org/10.1097/00005373-200009000-00018

Pape HC, Lefering R, Butcher N, Peitzman A, Leenen L, Marzi I, Lichte P, Josten C, Bouillon B, Schmucker U, et al. The definition of polytrauma revisited: an international consensus process and proposal of the new ‘Berlin definition’. J Trauma Acute Care Surg. 2014;77:780. https://doi.org/10.1097/ta.0000000000000453

Pape HC, Hildebrand F, Pertschy S, Zelle B, Garapati R, Grimme K, Krettek C, Reed RL 2nd. Changes in the management of femoral shaft fractures in polytrauma patients: from early total care to damage control orthopedic surgery. J Trauma. 2002;53:452–61; discussion 461–2. https://doi.org/10.1097/00005373-200209000-00010

Pape HC, Pfeifer R. Safe definitive orthopaedic surgery (SDS): repeated assessment for tapered application of early definitive care and damage control? An inclusive view of recent advances in polytrauma management. Injury. 2015;46:1–3. https://doi.org/10.1016/j.injury.2014.12.001

Teasdale G, Maas A, Lecky F, Manley G, Stocchetti N, Murray G. The Glasgow Coma Scale at 40 years: standing the test of time. Lancet Neurol. 2014;13:844. https://doi.org/10.1016/s1474-4422(14)70120-6

Raith EP, Udy AA, Bailey M, McGloughlin S, MacIsaac C, Bellomo R, Pilcher DV. Prognostic accuracy of the SOFA score, SIRS Criteria, and qSOFA score for In-Hospital mortality among adults with suspected infection admitted to the Intensive Care Unit. JAMA. 2017;317:290. https://doi.org/10.1001/jama.2016.20328

Sandberg A, Bostrom N. Converging cognitive enhancements. Ann N Y Acad Sci. 2006;1093:201. https://doi.org/10.1196/annals.1382.015

Gennarelli TA, Champion HR, Copes WS, Sacco WJ. Comparison of mortality, morbidity, and severity of 59,713 head injured patients with 114,447 patients with extracranial injuries. J Trauma. 1994;37:962. https://doi.org/10.1097/00005373-199412000-00016

Li H, Ma YF. New injury severity score (NISS) outperforms injury severity score (ISS) in the evaluation of severe blunt trauma patients. Chin J Traumatol. 2021;24:261. https://doi.org/10.1016/j.cjtee.2021.01.006

Abajas Bustillo R, Amo Setién FJ, Ortego Mate MDC, Seguí Gómez M, Durá Ros MJ, Leal Costa C. Predictive capability of the injury severity score versus the new injury severity score in the categorization of the severity of trauma patients: a cross-sectional observational study. Eur J Trauma Emerg Surg. 2020;46:903. https://doi.org/10.1007/s00068-018-1057-x

Champion HR, Copes WS, Sacco WJ, Lawnick MM, Bain LW, Gann DS, Gennarelli T, Mackenzie E, Schwaitzberg. A new characterization of injury severity. J Trauma. 1990;30:539–45; discussion 545–6. https://doi.org/10.1097/00005373-199005000-00003

Pape HC, Halvachizadeh S, Leenen L, Velmahos GD, Buckley R, Giannoudis PV. Timing of major fracture care in polytrauma patients - an update on principles, parameters and strategies for 2020. Injury. 2019;50:1656. https://doi.org/10.1016/j.injury.2019.09.021

Sarwahi V, Atlas AM, Galina J, Satin A, Dowling TJ 3rd, Hasan S, Amaral TD, Lo Y, Christopherson N, Prince J. Seatbelts save lives, and spines, in Motor Vehicle accidents: a review of the National Trauma Data Bank in the Pediatric Population. Spine (Phila Pa 1976). 2021;46:1637–44. https://doi.org/10.1097/brs.0000000000004072

Shah A, Judge A, Griffin XL. Incidence and quality of care for open fractures in England between 2008 and 2019: a cohort study using data collected by the Trauma Audit and Research Network. Bone Joint J. 2022;104-B:736–46. https://doi.org/10.1302/0301-620x.104b6.Bjj-2021-1097.R2

Moore EE. The outstanding achievements of the TraumaRegister. Injury. 2014;45(Suppl 3). https://doi.org/10.1016/j.injury.2014.08.008

Coccolini F, Coimbra R, Ordonez C, Kluger Y, Vega F, Moore EE, Biffl W, Peitzman A, Horer T, Abu-Zidan FM, et al. Liver trauma: WSES 2020 guidelines. World J Emerg Surg. 2020;15:24. https://doi.org/10.1186/s13017-020-00302-7

Bernard GR, Artigas A, Brigham KL, Carlet J, Falke K, Hudson L, Lamy M, Legall JR, Morris A, Spragg R. The American-European Consensus Conference on ARDS. Definitions, mechanisms, relevant outcomes, and clinical trial coordination. Am J Respir Crit Care Med. 1994;149:818. https://doi.org/10.1164/ajrccm.149.3.7509706

Pape HC, Giannoudis PV, Krettek C, Trentz O. Timing of fixation of major fractures in blunt polytrauma: role of conventional indicators in clinical decision making. J Orthop Trauma. 2005;19:551. https://doi.org/10.1097/01.bot.0000161712.87129.80

Dienstknecht T, Rixen D, Giannoudis P, Pape HC. Do parameters used to clear noncritically injured polytrauma patients for extremity surgery predict complications? Clin Orthop Relat Res. 2013;471:2878–84. https://doi.org/10.1007/s11999-013-2924-8

Hildebrand F, Lefering R, Andruszkow H, Zelle BA, Barkatali BM, Pape HC. Development of a scoring system based on conventional parameters to assess polytrauma patients: PolyTrauma Grading score (PTGS). Injury. 2015;46(Suppl 4):S93–98. https://doi.org/10.1016/s0020-1383(15)30025-5

Pfeifer R, Kalbas Y, Coimbra R, Leenen L, Komadina R, Hildebrand F, Halvachizadeh S, Akhtar M, Peralta R, Fattori L, et al. Indications and interventions of damage control orthopedic surgeries: an expert opinion survey. Eur J Trauma Emerg Surg. 2021;47:2081–92. https://doi.org/10.1007/s00068-020-01386-1

Niggli C, Pape HC, Niggli P, Mica L. Validation of a visual-based Analytics Tool for Outcome Prediction in Polytrauma patients (WATSON Trauma Pathway Explorer) and comparison with the predictive values of TRISS. J Clin Med. 2021;10. https://doi.org/10.3390/jcm10102115

Tiziani S, Hinkle AJ, Mesarick EC, Turner AC, Kenfack YJ, Dumas RP, Grewal IS, Park C, Sanders DT, Sathy AK, Starr AJ. Parkland Trauma Index of Mortality in Orthopaedic Trauma patients: an initial report. J Orthop Trauma. 2023;37:S23–7. https://doi.org/10.1097/bot.0000000000002690

Champion HR, Copes WS, Sacco WJ, Lawnick MM, Keast SL, Bain LW Jr., Flanagan ME, Frey CF. The Major Trauma Outcome Study: establishing national norms for trauma care. J Trauma. 1990;30:1356–65.

Ziegenhain F, Scherer J, Kalbas Y, Neuhaus V, Lefering R, Teuben M, Sprengel K, Pape HC, Jensen KO, TraumaRegister DGU. Age-dependent patient and trauma characteristics and hospital resource requirements - can improvement be made? An analysis from the German Trauma Registry. Medicina (Kaunas). 2021;57. https://doi.org/10.3390/medicina57040330

Xu W, Seok J, Mindrinos MN, Schweitzer AC, Jiang H, Wilhelmy J, Clark TA, Kapur K, Xing Y, Faham M, et al. Human transcriptome array for high-throughput clinical studies. Proc Natl Acad Sci U S A. 2011;108:3707–12. https://doi.org/10.1073/pnas.1019753108

Nauth A, Hildebrand F, Vallier H, Moore T, Leenen L, McKinley T, Pape HC. Polytrauma: update on basic science and clinical evidence. OTA Int. 2021;4:e116. https://doi.org/10.1097/oi9.0000000000000116

Knoepfel A, Pfeifer R, Lefering R, Pape HC. The AdHOC (age, head injury, oxygenation, circulation) score: a simple assessment tool for early assessment of severely injured patients with major fractures. Eur J Trauma Emerg Surg. 2022;48:411–21. https://doi.org/10.1007/s00068-020-01448-4

Dezman ZDW, Comer AC, Smith GS, Hu PF, Mackenzie CF, Scalea TM, Hirshon JM. Repeat lactate level predicts mortality better than rate of clearance. Am J Emerg Med. 2018;36:2005–9. https://doi.org/10.1016/j.ajem.2018.03.012

Hirsiger S, Simmen HP, Werner CM, Wanner GA, Rittirsch D. Danger signals activating the immune response after trauma. Mediators Inflamm. 2012;2012:315941. https://doi.org/10.1155/2012/315941

Funding

This research received no external funding.

Author information

Contributions

Conceptualization, HCP, AS, BG and GAW; methodology, HCP, AS, BG and GAW; investigation, HCP, AS, BG and GAW; writing—original draft preparation, HCP, AS, BG and GAW; writing—review and editing, HCP, AS, BG and GAW; visualization, HCP, AS, BG and GAW. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Institutional review board statement

Not applicable. According to the regional ethics committee, literature reviews do not require approval. The database analysis was approved by the local institutional review board (IRB) according to IRC guidelines (No. St. V. 01-2008).

Informed consent

Not applicable.

Conflict of interest

The authors declare no conflicts of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Pape, HC., Starr, A.J., Gueorguiev, B. et al. The role of big data management, data registries, and machine learning algorithms for optimizing safe definitive surgery in trauma: a review. Patient Saf Surg 18, 22 (2024). https://doi.org/10.1186/s13037-024-00404-0


DOI: https://doi.org/10.1186/s13037-024-00404-0