Abstract

Background

Medication errors and associated adverse drug events (ADE) are a major cause of morbidity and mortality worldwide. In recent years, the prevention of medication errors has become a high priority in healthcare systems. In order to improve medication safety, computerized Clinical Decision Support Systems (CDSS) are increasingly being integrated into the medication process. Accordingly, a growing number of studies have investigated the medication safety-related effectiveness of CDSS. However, the outcome measures used are heterogeneous, leading to unclear evidence. The primary aim of this study is to summarize and categorize the outcomes used in interventional studies evaluating the effects of CDSS on medication safety in primary and long-term care.

Methods

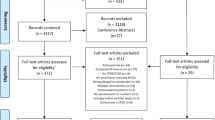

We systematically searched PubMed, Embase, CINAHL, and Cochrane Library for interventional studies evaluating the effects of CDSS targeting medication safety and patient-related outcomes. We extracted methodological characteristics, outcomes and empirical findings from the included studies. Outcomes were assigned to three main categories: process-related, harm-related, and cost-related. Risk of bias was assessed using the Evidence Project risk of bias tool.

Results

Thirty-two studies met the inclusion criteria. Almost all studies (n = 31) used process-related outcomes, followed by harm-related outcomes (n = 11). Only three studies used cost-related outcomes. Most studies used outcomes from only one category and no study used outcomes from all three categories. The definition and operationalization of outcomes varied widely between the included studies, even within outcome categories. Overall, evidence on CDSS effectiveness was mixed. A significant intervention effect was demonstrated by nine of fifteen studies with process-related primary outcomes (60%) but only one out of five studies with harm-related primary outcomes (20%). The included studies faced a number of methodological problems that limit the comparability and generalizability of their results.

Conclusions

Evidence on the effectiveness of CDSS is currently inconclusive due in part to inconsistent outcome definitions and methodological problems in the literature. Additional high-quality studies are therefore needed to provide a comprehensive account of CDSS effectiveness. These studies should follow established methodological guidelines and recommendations and use a comprehensive set of harm-, process- and cost-related outcomes with agreed-upon and consistent definitions.

PROSPERO registration

CRD42023464746

Introduction

Medication errors are a common problem in health care and a frequent cause of mortality and morbidity [1,2,3]. Due to inconsistent definitions and classification systems, differences in populations studied and varying outcome measures, the reported prevalence of medication errors and adverse drug events (ADE) varies widely (from 2% to 94%) across different studies [1, 2, 4,5,6]. Given the high number of prescriptions in primary care, medication errors have the potential to cause considerable harm [7,8,9], contributing to substantial health and economic consequences, including an increased utilization of health care services and, in the worst case, patient death [10,11,12].

The use of digital health technologies can help overcome shortcomings at each stage of the medication management process [13]. Digital health technologies have the potential to reduce medication errors and adverse drug reactions (ADR), improve patient safety and thus contribute to higher quality and efficiency in health care [14, 15]. In particular, Clinical Decision Support Systems (CDSS) are used to improve medication safety by providing direct medication-related advice to physicians, pharmacists or other participants involved in the medication process [16, 17]. Current research demonstrates the potential of CDSS to enhance health care processes [18,19,20,21,22,23]. In particular, CDSS that are integrated into the clinical workflow and include messages or alerts that are automatically presented during clinical decision making can have beneficial effects [24].

While a variety of studies have examined the effects of CDSS on medication safety, significant heterogeneity exists concerning the outcome measures used, leading to an ambiguous body of evidence [16, 25, 26] – particularly in primary care [27,28,29] and long-term care (LTC) [29,30,31]. According to Seidling and Bates [32], outcomes used by studies investigating the impact of digital health technologies on medication safety can be grouped into three categories: process-related, harm-related, and cost-related outcomes. These categories differ regarding their relevance for patient health [32]. In particular, harm-related outcomes are more directly relevant for patient health than process- or cost-related outcomes.

To date, no review has comprehensively summarized the outcome measures used in studies on medication safety-related CDSS effectiveness in primary care and LTC. Therefore, the primary objective of this systematic review is to summarize and categorize the outcome measures used in these studies. In doing so, we aim to contribute to a more standardized approach to the evaluation of CDSS and to facilitate future research in this field. A secondary aim is to compare the main empirical findings of these studies.

Methods

Our systematic review followed the guidelines outlined in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA 2020) Statement [33] (see Supplementary Tables S1-S2, Additional File 1). This systematic review was registered with PROSPERO (CRD42023464746) [34].

Search strategy

We systematically searched PubMed, Embase, CINAHL, and the Cochrane Library for papers published before September 20th, 2023. The search strategy included terms describing the character and type of intervention (digital decision support), the aim of these interventions (medication safety) and the targeted setting (outpatient/primary care and LTC). Relevant MeSH terms were considered (see Supplementary Table S1, Additional File 2). We developed the search strategy in accordance with published CDSS-related systematic reviews [25, 26, 28, 35]. Further publications were identified manually via hand search and automatically via forward and backward citation searching with the SpiderCite tool [36].

Eligibility criteria

We included English and German language full-text publications that report data on interventional studies evaluating CDSS to improve medication safety in the primary/outpatient and LTC setting. Only studies reporting medication-, patient- or cost-related outcomes were included, while studies reporting only outcomes related to healthcare providers' attitudes toward or acceptance of CDSS and studies focusing only on performance or quality indicators of CDSS (e.g., sensitivity, specificity) were excluded. Studies were also excluded if the intervention was conducted in inpatient care, did not automatically engage in the medication process (e.g., via automated alerts), or included only a simple reminder function. Furthermore, studies were not eligible if they focused only on a single potentially problematic drug or only on one specific indication. Finally, studies were excluded if they did not primarily aim at the improvement of medication safety. There were no restrictions regarding the comparator of the intervention (see Supplementary Table S2, Additional File 2). Two investigators (DL and DGR) independently screened search results and assessed the eligibility of potentially relevant studies according to the predefined inclusion and exclusion criteria. Discrepancies (n = 131) were resolved by consensus. Another investigator (BA) was consulted if consensus could not be reached.

Data extraction, categorization and synthesis

We extracted the following data from the included studies: study design, study period, sample, and setting, type of intervention and comparator (Table 1), primary and secondary outcome measures (Table 2), outcome levels (Table 3), and main empirical findings (Table 4). Two investigators (DGR, JG) jointly performed the data extraction, which was verified by a third investigator (BA). We grouped types of interventions and comparators into the following categories:

Computerized physician order entry

Computerized Physician Order Entry (CPOE) is defined as any system that allows health care providers to “directly place orders for medications, tests or studies into an electronic system, which then transmits the order directly to the recipient responsible for carrying out the order (e.g. the pharmacy, laboratory, or radiology department)” [27].

Electronic prescribing

Electronic Prescribing (e-prescribing or eRx) can be seen as a special form of CPOE [79]. For example, Donovan et al. show that the implementation costs of hospital-based CDSS are rarely reported and the methods used to measure and value such costs are often not well described [80]. Thus, intervention costs, as well as costs that may have occurred in other (health care) sectors, are often not considered in economic evaluations of CDSS [81]. Since the quality of the current health economic literature on health information technology in medication management is poor [81], future studies should follow established standards of health economic evaluations [78, 82, 83]. Additionally, since the economic impacts of improved medication safety may occur on different levels, economic evaluations of CDSS should take into account not only the payers’ perspective, but also financial effects at the provider level.

To summarize: CDSS evaluations should include multiple outcomes from each of the three outcome categories [32, 76]. However, we found that none of the included studies conducted a comprehensive evaluation of all three outcome categories. Furthermore, two-thirds of studies did not consider any harm-related outcomes. Those studies that did use harm-related outcomes mostly used ADE or other injuries; very few used morbidity or hospitalization. Although process-related outcomes were by far the most used outcomes, this is mostly due to the large number of studies using error rates. In contrast, response rates and alert rates were used less commonly, making it difficult to fully investigate and interpret CDSS activity and use. Finally, only three studies used cost-related outcomes. This finding is consistent with the sparse and conflicting evidence regarding the financial impact and cost-effectiveness of CDSS [16, 81, 84]. The studies that used cost-related outcomes included only a small subset of direct costs and did not consider indirect costs.

Defining outcome measures

We have seen that the included studies differ in the outcome categories they use. However, studies also differ in their definition and operationalization of outcomes even within categories (and subcategories).

While mortality and hospitalization are easily measured standardized outcomes, other harm-related outcomes (such as injuries) may be defined and operationalized in various ways, limiting the comparability of harm-related results between studies. Cost-related outcomes were only considered in three studies, which used significantly different (and therefore non-comparable) approaches.

Differences in outcome definition and operationalization between studies were most pronounced for process-related outcomes. First, these outcomes measured the occurrence of a number of different types of errors, responses, and alerts. For example, an error rate may refer to the number of PIM or the number of DDI. Second, these outcomes can be defined at different levels, including patient-level, encounter-level, prescription-level or alert-level. For example, an error rate may refer to the number of errors per prescription or the number of errors per patient-month. These differences in outcome definitions are in line with the literature: a review by Rinke et al. [85] also found differences in outcome definition and operationalization for evaluations of interventions to reduce paediatric medication errors.

Due to these differences in outcome definition, comparing results between studies can be difficult or even impossible [85], even if studies use the same outcome categories. Therefore, future research should work toward consensus definitions for key outcomes. This could increase the efficiency of evidence synthesis and reduce the risk of duplicated research efforts, thereby accelerating the improvement of care [86]. When agreed-upon definitions are unavailable, researchers can increase the comparability of their results by reporting multiple outcome definitions.

Importantly, this does not imply that all CDSS evaluation research should use a one-size-fits-all approach. Different healthcare systems, care settings, study populations, or CDSS types may give rise to different research questions, which will likely require the use of different outcomes and definitions. For example, an evaluation of a novel CDSS introduced in an LTC setting with a history of inappropriate medications may use a PIM/PIP-based error rate, while an evaluation of an existing primary care CDSS which has recently been upgraded to generate dosage alerts may instead measure the rate of dosage errors. However, studies with similar research questions concerning similar settings and populations should still strive to use comparable outcome definitions, when possible.

Finally, researchers should carefully consider at which level they define their outcomes. For many types of error rates, the prescription-level may be most appropriate. For example, the number of errors per prescription (or per encounter) reflects the total opportunities for errors more accurately than the number of errors per patient or per patient-month [85]. Similarly, it may be more appropriate to define response rates at the alert-level, rather than the prescription-level. As discussed above, the most appropriate outcome definition will depend on the context and specific research question.
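The effect of the chosen outcome level can be illustrated with a minimal sketch. The Python example below assumes a hypothetical record structure (`Prescription`) and a hypothetical helper (`error_rates`), and it uses invented toy data; none of these names or numbers come from the included studies. It expresses the same set of errors as a rate per prescription, per encounter, and per patient-month.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prescription:
    """Hypothetical record: one prescription issued during one encounter."""
    patient_id: str
    encounter_id: str
    errors: int  # number of medication errors identified on this prescription


def error_rates(prescriptions, patient_months):
    """Express the same error count at three different outcome levels."""
    total_errors = sum(p.errors for p in prescriptions)
    n_prescriptions = len(prescriptions)
    n_encounters = len({p.encounter_id for p in prescriptions})
    return {
        "per_prescription": total_errors / n_prescriptions,
        "per_encounter": total_errors / n_encounters,
        "per_patient_month": total_errors / patient_months,
    }


# Invented toy data: 2 patients, 3 encounters, 6 prescriptions, 3 errors.
toy = [
    Prescription("A", "A-1", 1),
    Prescription("A", "A-1", 0),
    Prescription("A", "A-2", 1),
    Prescription("A", "A-2", 0),
    Prescription("B", "B-1", 1),
    Prescription("B", "B-1", 0),
]

# Assumption: both patients were observed for 6 months each (12 patient-months).
rates = error_rates(toy, patient_months=12)
for level, rate in rates.items():
    print(f"{level}: {rate:.2f}")
```

In this toy example the identical three errors yield three different "error rates" depending on the denominator, which is why results reported at different levels cannot be compared directly.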

Reducing the risk of bias

Even if the included studies had used a wider variety of outcomes from all outcome categories, with agreed-upon definitions and standardized operationalizations for each outcome, many studies would still have exhibited a risk of bias due to their study design and other methodological problems. In particular, most studies used cross-sectional designs without a sufficient follow-up period, many studies were not randomized or not controlled, and most controlled studies did not demonstrate study group comparability. Finally, many studies did not specify a primary outcome, and only 12 studies reported power calculations.

To reduce the risk of bias, future research should rely on well-designed (cluster) RCTs including a sufficient follow-up period; study group comparability should be assessed and reported. Whenever possible, studies should be longitudinal rather than cross-sectional. Finally, studies should explicitly specify a clear (preferably harm-related) primary outcome and should perform and report sample size and power calculations for this outcome.
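As a rough illustration of what such a sample size calculation can look like, the following Python sketch uses the standard normal-approximation formula for comparing two proportions and inflates the result by the usual design effect for cluster randomization, 1 + (m − 1) × ICC. It is a simplified sketch, not the method of any included study: the function names are hypothetical, and the event rates, cluster size, and intracluster correlation coefficient (ICC) in the usage example are invented for illustration.

```python
import math
from statistics import NormalDist


def n_per_arm(p_control, p_intervention, alpha=0.05, power=0.80):
    """Participants per arm for a two-sided comparison of two proportions
    (normal approximation, individually randomized design)."""
    if p_control == p_intervention:
        raise ValueError("The two proportions must differ.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_intervention * (1 - p_intervention)
    effect = p_control - p_intervention
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def design_effect(cluster_size, icc):
    """Variance inflation factor for clusters of equal size."""
    return 1 + (cluster_size - 1) * icc


def n_per_arm_clustered(p_control, p_intervention, cluster_size, icc,
                        alpha=0.05, power=0.80):
    """Participants per arm after inflating for cluster randomization."""
    n_individual = n_per_arm(p_control, p_intervention, alpha, power)
    return math.ceil(n_individual * design_effect(cluster_size, icc))


# Invented planning values: ADE rate of 10% under usual care vs. 5% with CDSS,
# 20 patients per practice, ICC of 0.05.
n_simple = n_per_arm(0.10, 0.05)
n_cluster = n_per_arm_clustered(0.10, 0.05, cluster_size=20, icc=0.05)
print(n_simple, n_cluster)
```

Because harm-related events are typically rarer than process-related errors, the required sample size for a harm-related primary outcome will usually be larger, and clustering increases it further.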

Empirical findings

Only 20 out of 32 included studies explicitly specified a clear primary outcome and, of these, only five studies used harm-related primary outcomes. While half of all studies with primary outcomes demonstrated a significant intervention effect, most studies finding significant effects did so for process-related primary outcomes. This result is in line with current research demonstrating significant intervention effects when using process-related outcomes [18,19,20,21,22]. In contrast, only one study found a significant intervention effect for a harm-related primary outcome. Overall, our results agree with prior reviews finding that the effectiveness of CDSS for medication safety in primary care [27,28,29] and LTC settings [29,30,31] remains inconsistent and that further research on the harm-related effects of medication-related CDSS is needed.
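The proportions cited in this section follow directly from the study counts reported in this review. The short Python sketch below simply recomputes them; the counts are taken from the text, while the variable and function names are illustrative. Note that 20 of 32 is exactly 62.5%, which is reported as 63% elsewhere in the text.

```python
# Study counts as reported in this review.
included_studies = 32
primary_outcomes = {
    # category: (studies with a significant effect, studies with this primary outcome)
    "process-related": (9, 15),
    "harm-related": (1, 5),
}


def percent(numerator, denominator):
    """Percentage as a float; multiplying first keeps these values exact."""
    return 100 * numerator / denominator


with_primary = sum(total for _, total in primary_outcomes.values())
significant = sum(sig for sig, _ in primary_outcomes.values())

shares = {
    "specified_primary_outcome": percent(with_primary, included_studies),
    "significant_overall": percent(significant, with_primary),
    "significant_process": percent(*primary_outcomes["process-related"]),
    "significant_harm": percent(*primary_outcomes["harm-related"]),
}

for label, value in shares.items():
    print(f"{label}: {value:.1f}%")
```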

To generate stronger evidence on the effectiveness of CDSS, future studies should follow the methodological recommendations outlined above. Furthermore, additional research should take place in LTC settings, as this setting was underrepresented in the included studies. Finally, insights from research using process-related outcomes to study CDSS activity should be used to improve on the design and functionality of future CDSS. While uptake levels are rarely reported in CDSS evaluations, available evidence indicates that uptake is low [87]. In addition to alert fatigue, high override rates are an increasingly important problem for CDSS interventions [88, 89]. If these overrides are inappropriate, they can lead to medication errors, patient harms and increased costs [90]. Comprehensive CDSS evaluations using a variety of outcomes and outcome categories are therefore needed to identify and remove barriers to user acceptance of CDSS.

Limitations

Compared to a recent review [26], we expanded our scope by including the LTC setting and focusing primarily on methodological aspects and outcomes used in CDSS evaluations. However, our systematic review still has several limitations. First, relevant studies that have not been indexed in the searched databases might be missing from this review, although we followed an extensive search strategy, including hand search and automated citation tools alongside the search of multiple databases. Second, due to the methodological heterogeneity of the included studies, we only compared whether or not studies found a significant effect for their primary outcome and did not compare levels of significance or effect sizes. We also did not consider outcomes related to user acceptance of CDSS. Finally, a scoping review may also have been an appropriate method for addressing our primary (methodological) aim, although the lines between these types of reviews are often blurred [91]. However, due to our secondary (empirical) aim and our performance of a risk of bias assessment, we decided to conduct a full systematic review according to the PRISMA guidelines rather than the PRISMA Extension for Scoping Reviews [92].

The included studies vary in terms of applied interventions and comparisons. Some studies compared the CDSS intervention to non-automated IT systems, while other studies used handwritten or paper-based prescription forms as a comparison. Consequently, the applied interventions and comparisons are not comparable, which could also have an influence on the differences in outcome measures and operationalizations. For example, comparing CDSS to other IT systems rather than handwritten prescriptions may allow alert rates or response rates to be calculated for both the intervention and control groups.

Furthermore, since 75% of the studies were from North America, the generalizability of the studies to other regions may be limited. Finally, the included studies’ high risk of bias (particularly for PPS and N-RCT studies), their lack of clearly specified primary outcomes and their weak reporting of sample sizes need to be considered when drawing conclusions from study results. Despite these limitations, our results give rise to a number of key recommendations for future studies researching the effect of CDSS on medication safety, summarized in Table 5.

Conclusions

Our primary aim in this review was to summarize and categorize the outcome measures used in CDSS evaluation studies. Furthermore, we assessed the methodological quality of these studies and compared their key findings.

Although a variety of studies have evaluated the effectiveness of CDSS, we found that these studies face a number of (methodological) problems that limit the generalizability of their results. In particular, no studies used a comprehensive set of harm-related, process-related and cost-related outcomes. Definitions and operationalizations of outcomes varied widely between studies, complicating comparisons and limiting the possibility of evidence synthesis. Furthermore, a number of studies were not controlled, lacked randomization or did not demonstrate the comparability of study groups. Only 63% of studies explicitly specified a primary outcome. Of these, half found a significant intervention effect.

Overall, evidence on CDSS effectiveness is mixed and evidence synthesis remains difficult due to methodological concerns and inconsistent outcome definitions. Additional high-quality studies using a wider array of harm-, process- and cost-related outcomes are needed to close this evidence gap and increase the availability of effective CDSS in primary care and LTC.

Availability of data and materials

No datasets were generated or analysed during the current study.

Abbreviations

- ADE: Adverse drug event
- ADR: Adverse drug reaction
- CDSS: Computerized decision support system
- CPOE: Computerized provider order entry
- C-RCT: Cluster-randomized controlled trial
- DDI: Drug-drug interactions
- EHR: Electronic health record
- eRx: Electronic prescribing
- HCRU: Healthcare resource utilization
- HMO: Health Maintenance Organization
- HRQoL: Health-related Quality of Life
- LTC: Long-term care
- N-RCT: Non-randomized controlled trial
- n.a.: Not applicable
- OC: Outpatient/ambulatory clinic
- PCP: Primary care practice/centers
- PIM: Potentially inappropriate medication
- PIP: Potentially inappropriate prescribing
- PPS: Pre-post study
- RCT: Randomized controlled trial

References

Assiri GA, Shebl NA, Mahmoud MA, Aloudah N, Grant E, Aljadhey H, Sheikh A. What is the epidemiology of medication errors, error-related adverse events and risk factors for errors in adults managed in community care contexts? A systematic review of the international literature. BMJ Open. 2018;8:e019101. https://doi.org/10.1136/bmjopen-2017-019101.

Olaniyan JO, Ghaleb M, Dhillon S, Robinson P. Safety of medication use in primary care. Int J Pharm Pract. 2015;23:3–20. https://doi.org/10.1111/ijpp.12120.

Phillips DP, Bredder CC. Morbidity and mortality from medical errors: an increasingly serious public health problem. Annu Rev Public Health. 2002;23:135–50. https://doi.org/10.1146/annurev.publhealth.23.100201.133505.

Payne R, Slight S, Franklin BD, Avery AJ. Medication errors. Geneva: World Health Organization; 2016.

Lisby M, Nielsen LP, Brock B, Mainz J. How are medication errors defined? A systematic literature review of definitions and characteristics. Int J Qual Health Care. 2010;22:507–18. https://doi.org/10.1093/intqhc/mzq059.

Naseralallah L, Stewart D, Price M, Paudyal V. Prevalence, contributing factors, and interventions to reduce medication errors in outpatient and ambulatory settings: a systematic review. Int J Clin Pharm. 2023;45:1359–77. https://doi.org/10.1007/s11096-023-01626-5.

Taché SV, Sönnichsen A, Ashcroft DM. Prevalence of adverse drug events in ambulatory care: a systematic review. Ann Pharmacother. 2011;45:977–89. https://doi.org/10.1345/aph.1P627.

Thomsen LA, Winterstein AG, Søndergaard B, Haugbølle LS, Melander A. Systematic review of the incidence and characteristics of preventable adverse drug events in ambulatory care. Ann Pharmacother. 2007;41:1411–26. https://doi.org/10.1345/aph.1h658.

Insani WN, Whittlesea C, Alwafi H, Man KKC, Chapman S, Wei L. Prevalence of adverse drug reactions in the primary care setting: a systematic review and meta-analysis. PLoS ONE. 2021;16:e0252161. https://doi.org/10.1371/journal.pone.0252161.

Walsh EK, Hansen CR, Sahm LJ, Kearney PM, Doherty E, Bradley CP. Economic impact of medication error: a systematic review. Pharmacoepidemiol Drug Saf. 2017;26:481–97. https://doi.org/10.1002/pds.4188.

Stark RG, John J, Leidl R. Health care use and costs of adverse drug events emerging from outpatient treatment in Germany: a modelling approach. BMC Health Serv Res. 2011;11:9. https://doi.org/10.1186/1472-6963-11-9.

Elliott RA, Camacho E, Jankovic D, Sculpher MJ, Faria R. Economic analysis of the prevalence and clinical and economic burden of medication error in England. BMJ Qual Saf. 2021;30:96–105. https://doi.org/10.1136/bmjqs-2019-010206.

Agrawal A. Medication errors: prevention using information technology systems. Br J Clin Pharmacol. 2009;67:681–6. https://doi.org/10.1111/j.1365-2125.2009.03427.x.

Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med. 2003;163:1409–16. https://doi.org/10.1001/archinte.163.12.1409.

Kaushal R, Bates DW. Information technology and medication safety: what is the benefit? Qual Saf Health Care. 2002;11:261–5. https://doi.org/10.1136/qhc.11.3.261.

Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157:29–43. https://doi.org/10.7326/0003-4819-157-1-201207030-00450.

Forrester SH, Hepp Z, Roth JA, Wirtz HS, Devine EB. Cost-effectiveness of a computerized provider order entry system in improving medication safety ambulatory care. Value Health. 2014;17:340–9. https://doi.org/10.1016/j.jval.2014.01.009.

Jia P, Zhang L, Chen J, Zhao P, Zhang M. The effects of clinical decision support systems on Medication Safety: an overview. PLoS ONE. 2016;11:e0167683. https://doi.org/10.1371/journal.pone.0167683.

Sutton RT, Pincock D, Baumgart DC, Sadowski DC, Fedorak RN, Kroeker KI. An overview of clinical decision support systems: benefits, risks, and strategies for success. NPJ Digit Med. 2020;3:17. https://doi.org/10.1038/s41746-020-0221-y.

McKibbon KA, Lokker C, Handler SM, Dolovich LR, Holbrook AM, O’Reilly D, et al. The effectiveness of integrated health information technologies across the phases of medication management: a systematic review of randomized controlled trials. J Am Med Inform Assoc. 2012;19:22–30. https://doi.org/10.1136/amiajnl-2011-000304.

Hemens BJ, Holbrook A, Tonkin M, Mackay JA, Weise-Kelly L, Navarro T, et al. Computerized clinical decision support systems for drug prescribing and management: a decision-maker-researcher partnership systematic review. Implement Sci. 2011;6:89. https://doi.org/10.1186/1748-5908-6-89.

Nieuwlaat R, Connolly SJ, Mackay JA, Weise-Kelly L, Navarro T, Wilczynski NL, Haynes RB. Computerized clinical decision support systems for therapeutic drug monitoring and dosing: a decision-maker-researcher partnership systematic review. Implement Sci. 2011;6:90. https://doi.org/10.1186/1748-5908-6-90.

Ji M, Yu G, ** H, Xu T, Qin Y. Measures of success of computerized clinical decision support systems: an overview of systematic reviews. Health Policy Technol. 2021;10:196–208. https://doi.org/10.1016/j.hlpt.2020.11.001.

Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330:765. https://doi.org/10.1136/bmj.38398.500764.8F.

Lainer M, Mann E, Sönnichsen A. Information technology interventions to improve medication safety in primary care: a systematic review. Int J Qual Health Care. 2013;25:590–8. https://doi.org/10.1093/intqhc/mzt043.

Cerqueira O, Gill M, Swar B, Prentice KA, Gwin S, Beasley BW. The effectiveness of interruptive prescribing alerts in ambulatory CPOE to change prescriber behaviour & improve safety. BMJ Qual Saf. 2021;30:1038–46. https://doi.org/10.1136/bmjqs-2020-012283.

Ranji SR, Rennke S, Wachter RM. Computerised provider order entry combined with clinical decision support systems to improve medication safety: a narrative review. BMJ Qual Saf. 2014;23:773–80. https://doi.org/10.1136/bmjqs-2013-002165.

Eslami S, Abu-Hanna A, de Keizer NF. Evaluation of Outpatient Computerized Physician Medication Order Entry systems: a systematic review. J Am Med Inform Assoc. 2007;14:400–6. https://doi.org/10.1197/jamia.M2238.

Brenner SK, Kaushal R, Grinspan Z, Joyce C, Kim I, Allard RJ, et al. Effects of health information technology on patient outcomes: a systematic review. J Am Med Inform Assoc. 2016;23:1016–36. https://doi.org/10.1093/jamia/ocv138.

Marasinghe KM. Computerised clinical decision support systems to improve medication safety in long-term care homes: a systematic review. BMJ Open. 2015;5:e006539. https://doi.org/10.1136/bmjopen-2014-006539.

Kruse CS, Mileski M, Syal R, MacNeil L, Chabarria E, Basch C. Evaluating the relationship between health information technology and safer-prescribing in the long-term care setting: a systematic review. Technol Health Care. 2021;29:1–14. https://doi.org/10.3233/THC-202196.

Seidling HM, Bates DW. Evaluating the impact of Health IT on Medication Safety. Stud Health Technol Inform. 2016;222:195–205.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6:e1000097. https://doi.org/10.1371/journal.pmed.1000097.

Lampe D, Grothe D, Aufenberg B, Gensorowsky D, Witte J. Measuring the effects of clinical decision support systems (CDSS) to improve medication safety in primary and long-term care: a systematic review. PROSPERO 2023 CRD42023464746. https://www.crd.york.ac.uk/prospero/display_record.php?ID=CRD42023464746.

Monteiro L, Maricoto T, Solha I, Ribeiro-Vaz I, Martins C, Monteiro-Soares M. Reducing potentially inappropriate prescriptions for older patients using computerized decision support tools: systematic review. J Med Internet Res. 2019;21:e15385. https://doi.org/10.2196/15385.

SR-Accelerator. 13.09.2023. https://sr-accelerator.com/#/spidercite. Accessed 13 Sep 2023.

Abramson EL, Malhotra S, Fischer K, Edwards A, Pfoh ER, Osorio SN, et al. Transitioning between electronic health records: effects on ambulatory prescribing safety. J Gen Intern Med. 2011;26:868–74. https://doi.org/10.1007/s11606-011-1703-z.

Abramson EL, Barrón Y, Quaresimo J, Kaushal R. Electronic prescribing within an electronic health record reduces ambulatory prescribing errors. Jt Comm J Qual Patient Saf. 2011;37:470–8.

Abramson EL, Malhotra S, Osorio SN, Edwards A, Cheriff A, Cole C, Kaushal R. A long-term follow-up evaluation of electronic health record prescribing safety. J Am Med Inform Assoc. 2013;20:e52–8. https://doi.org/10.1136/amiajnl-2012-001328.

Andersson ML, Böttiger Y, Lindh JD, Wettermark B, Eiermann B. Impact of the drug-drug interaction database SFINX on prevalence of potentially serious drug-drug interactions in primary health care. Eur J Clin Pharmacol. 2013;69:565–71. https://doi.org/10.1007/s00228-012-1338-y.

Field TS, Rochon P, Lee M, Gavendo L, Baril JL, Gurwitz JH. Computerized clinical decision support during medication ordering for long-term care residents with renal insufficiency. J Am Med Inform Assoc. 2009;16:480–5. https://doi.org/10.1197/jamia.M2981.

Glassman PA, Belperio P, Lanto A, Simon B, Valuck R, Sayers J, Lee M. The utility of adding retrospective medication profiling to computerized provider order entry in an ambulatory care population. J Am Med Inform Assoc. 2007;14:424–31. https://doi.org/10.1197/jamia.M2313.

Gurwitz JH, Field TS, Rochon P, Judge J, Harrold LR, Bell CM, et al. Effect of computerized provider order entry with clinical decision support on adverse drug events in the long-term care setting. J Am Geriatr Soc. 2008;56:2225–33. https://doi.org/10.1111/j.1532-5415.2008.02004.x.

Hou J-Y, Cheng K-J, Bai K-J, Chen H-Y, Wu W-H, Lin Y-M, Wu M-TM. The effect of a computerized pediatric dosing decision support system on pediatric dosing errors. J Food Drug Anal. 2013;21:286–91. https://doi.org/10.1016/j.jfda.2013.07.006.

Humphries TL, Carroll N, Chester EA, Magid D, Rocho B. Evaluation of an electronic critical drug interaction program coupled with active pharmacist intervention. Ann Pharmacother. 2007;41:1979–85. https://doi.org/10.1345/aph.1K349.

Jani YH, Ghaleb MA, Marks SD, Cope J, Barber N, Wong ICK. Electronic prescribing reduced prescribing errors in a pediatric renal outpatient clinic. J Pediatr. 2008;152:214–8. https://doi.org/10.1016/j.jpeds.2007.09.046.

Judge J, Field TS, DeFlorio M, Laprino J, Auger J, Rochon P, et al. Prescribers’ responses to alerts during medication ordering in the long term care setting. J Am Med Inform Assoc. 2006;13:385–90. https://doi.org/10.1197/jamia.M1945.

Jungo KT, Ansorg A-K, Floriani C, Rozsnyai Z, Schwab N, Meier R, et al. Optimising prescribing in older adults with multimorbidity and polypharmacy in primary care (OPTICA): cluster randomised clinical trial. BMJ. 2023;381:e074054. https://doi.org/10.1136/bmj-2022-074054.

Kahan NR, Waitman D-A, Berkovitch M, Superstine SY, Glazer J, Weizman A, Shiloh R. Large-scale, community-based trial of a personalized drug-related problem rectification system. Am J Pharm Benefits. 2017;9:41–6.

Kaushal R, Barrón Y, Abramson EL. The comparative effectiveness of 2 electronic prescribing systems. Am J Manag Care. 2011;17:SP88–94.

Kaushal R, Kern LM, Barrón Y, Quaresimo J, Abramson EL. Electronic prescribing improves medication safety in community-based office practices. J Gen Intern Med. 2010;25:530–6. https://doi.org/10.1007/s11606-009-1238-8.

Mazzaglia G, Piccinni C, Filippi A, Sini G, Lapi F, Sessa E, et al. Effects of a computerized decision support system in improving pharmacological management in high-risk cardiovascular patients: a cluster-randomized open-label controlled trial. Health Informatics J. 2016;22:232–47. https://doi.org/10.1177/1460458214546773.

Overhage JM, Gandhi TK, Hope C, Seger AC, Murray MD, Orav EJ, Bates DW. Ambulatory computerized prescribing and preventable adverse drug events. J Patient Saf. 2016;12:69–74. https://doi.org/10.1097/PTS.0000000000000194.

Price M, Davies I, Rusk R, Lesperance M, Weber J. Applying STOPP guidelines in primary care through electronic medical record decision support: randomized control trial highlighting the importance of data quality. JMIR Med Inform. 2017;5:e15. https://doi.org/10.2196/medinform.6226.

Raebel MA, Carroll NM, Kelleher JA, Chester EA, Berga S, Magid DJ. Randomized trial to improve prescribing safety during pregnancy. J Am Med Inform Assoc. 2007;14:440–50. https://doi.org/10.1197/jamia.M2412.

Raebel MA, Charles J, Dugan J, Carroll NM, Korner EJ, Brand DW, Magid DJ. Randomized trial to improve prescribing safety in ambulatory elderly patients. J Am Geriatr Soc. 2007;55:977–85. https://doi.org/10.1111/j.1532-5415.2007.01202.x.

Rieckert A, Reeves D, Altiner A, Drewelow E, Esmail A, Flamm M, et al. Use of an electronic decision support tool to reduce polypharmacy in elderly people with chronic diseases: cluster randomised controlled trial. BMJ. 2020. https://doi.org/10.1136/bmj.m1822.

Schwarz EB, Parisi SM, Handler SM, Koren G, Cohen ED, Shevchik GJ, Fischer GS. Clinical decision support to promote safe prescribing to women of reproductive age: a cluster-randomized trial. J Gen Intern Med. 2012;27:831–8. https://doi.org/10.1007/s11606-012-1991-y.

Simon SR, Smith DH, Feldstein AC, Perrin N, Yang X, Zhou Y, et al. Computerized prescribing alerts and group academic detailing to reduce the use of potentially inappropriate medications in older people. J Am Geriatr Soc. 2006;54:963–8. https://doi.org/10.1111/j.1532-5415.2006.00734.x.

Smith DH, Perrin N, Feldstein A, Yang X, Kuang D, Simon SR, et al. The impact of prescribing safety alerts for elderly persons in an electronic medical record: an interrupted time series evaluation. Arch Intern Med. 2006;166:1098–104. https://doi.org/10.1001/archinte.166.10.1098.

Steele AW, Eisert S, Witter J, Lyons P, Jones MA, Gabow P, Ortiz E. The effect of automated alerts on provider ordering behavior in an outpatient setting. PLoS Med. 2005;2:864–70. https://doi.org/10.1371/journal.pmed.0020255.

Subramanian S, Hoover S, Wagner JL, Donovan JL, Kanaan AO, Rochon PA, et al. Immediate financial impact of computerized clinical decision support for long-term care residents with renal insufficiency: a case study. J Am Med Inform Assoc. 2012;19:439–42. https://doi.org/10.1136/amiajnl-2011-000179.

Tamblyn R, Eguale T, Buckeridge DL, Huang A, Hanley J, Reidel K, et al. The effectiveness of a new generation of computerized drug alerts in reducing the risk of injury from drug side effects: a cluster randomized trial. J Am Med Inform Assoc. 2012;19:635–43. https://doi.org/10.1136/amiajnl-2011-000609.

Tamblyn R, Huang A, Taylor L, Kawasumi Y, Bartlett G, Grad R, et al. A randomized trial of the effectiveness of on-demand versus computer-triggered drug decision support in primary care. J Am Med Inform Assoc. 2008;15:430–8. https://doi.org/10.1197/jamia.M2606.

Tamblyn R, Huang A, Perreault R, Jacques A, Roy D, Hanley J, et al. The medical office of the 21st century (MOXXI): effectiveness of computerized decision-making support in reducing inappropriate prescribing in primary care. CMAJ. 2003;169:549–56.

Vanderman AJ, Moss JM, Bryan WE, Sloane R, Jackson GL, Hastings SN. Evaluating the impact of medication safety alerts on prescribing of potentially inappropriate medications for older veterans in an ambulatory care setting. J Pharm Pract. 2017;30:82–8. https://doi.org/10.1177/0897190015621803.

Witte J, Scholz S, Surmann B, Gensorowsky D, Greiner W. Efficacy of decision support systems to improve medication safety - results of the evaluation of the Arzneimittelkonto NRW. Z Evid Fortbild Qual Gesundhwes. 2019;147–148:80–9. https://doi.org/10.1016/j.zefq.2019.10.002.

Zillich AJ, Shay K, Hyduke B, Emmendorfer TR, Mellow AM, Counsell SR, et al. Quality improvement toward decreasing high-risk medications for older veteran outpatients. J Am Geriatr Soc. 2008;56:1299–305. https://doi.org/10.1111/j.1532-5415.2008.01772.x.

Xie M, Weinger MB, Gregg WM, Johnson KB. Presenting multiple drug alerts in an ambulatory electronic prescribing system: a usability study of novel prototypes. Appl Clin Inform. 2014;5:334–48. https://doi.org/10.4338/ACI-2013-10-RA-0092.

Porterfield A, Engelbert K, Coustasse A. Electronic prescribing: improving the efficiency and accuracy of prescribing in the ambulatory care setting. Perspect Health Inf Manag. 2014;11:1g.

Osheroff JA, Teich JM, Middleton B, Steen EB, Wright A, Detmer DE. A roadmap for national action on clinical decision support. J Am Med Inform Assoc. 2007;14:141–5. https://doi.org/10.1197/jamia.M2334.

Häyrinen K, Saranto K, Nykänen P. Definition, structure, content, use and impacts of electronic health records: a review of the research literature. Int J Med Inform. 2008;77:291–304. https://doi.org/10.1016/j.ijmedinf.2007.09.001.

Roumeliotis N, Sniderman J, Adams-Webber T, Addo N, Anand V, et al. Effect of electronic prescribing strategies on medication error and harm in hospital: a systematic review and meta-analysis. J Gen Intern Med. 2019;34:2210–23. https://doi.org/10.1007/s11606-019-05236-8.

Kennedy CE, Fonner VA, Armstrong KA, Denison JA, Yeh PT, O’Reilly KR, Sweat MD. The evidence project risk of bias tool: assessing study rigor for both randomized and non-randomized intervention studies. Syst Rev. 2019;8:3. https://doi.org/10.1186/s13643-018-0925-0.

Naranjo CA, Busto U, Sellers EM, Sandor P, Ruiz I, Roberts EA, et al. A method for estimating the probability of adverse drug reactions. Clin Pharmacol Ther. 1981;30:239–45. https://doi.org/10.1038/clpt.1981.154.

Coleman JJ, van der Sijs H, Haefeli WE, Slight SP, McDowell SE, Seidling HM, et al. On the alert: future priorities for alerts in clinical decision support for computerized physician order entry identified from a European workshop. BMC Med Inform Decis Mak. 2013;13:111. https://doi.org/10.1186/1472-6947-13-111.

Hussain MI, Reynolds TL, Zheng K. Medication safety alert fatigue may be reduced via interaction design and clinical role tailoring: a systematic review. J Am Med Inform Assoc. 2019;26:1141–9. https://doi.org/10.1093/jamia/ocz095.

Drummond MF, Sculpher MJ, Claxton K, Stoddart GL, Torrance GW. Methods for the economic evaluation of health care programmes. 4th ed. Oxford: Oxford University Press; 2015.

Bassi J, Lau F. Measuring value for money: a scoping review on economic evaluation of health information systems. J Am Med Inform Assoc. 2013;20:792–801. https://doi.org/10.1136/amiajnl-2012-001422.

Donovan T, Abell B, Fernando M, McPhail SM, Carter HE. Implementation costs of hospital-based computerised decision support systems: a systematic review. Implement Sci. 2023;18:7. https://doi.org/10.1186/s13012-023-01261-8.

O’Reilly D, Tarride J-E, Goeree R, Lokker C, McKibbon KA. The economics of health information technology in medication management: a systematic review of economic evaluations. J Am Med Inform Assoc. 2012;19:423–38. https://doi.org/10.1136/amiajnl-2011-000310.

Husereau D, Drummond M, Augustovski F, de Bekker-Grob E, Briggs AH, Carswell C, et al. Consolidated Health Economic Evaluation Reporting Standards (CHEERS) 2022 explanation and elaboration: a report of the ISPOR CHEERS II Good Practices Task Force. Value Health. 2022;25:10–31. https://doi.org/10.1016/j.jval.2021.10.008.

White NM, Carter HE, Kularatna S, Borg DN, Brain DC, Tariq A, et al. Evaluating the costs and consequences of computerized clinical decision support systems in hospitals: a scoping review and recommendations for future practice. J Am Med Inform Assoc. 2023. https://doi.org/10.1093/jamia/ocad040.

Jacob V, Thota AB, Chattopadhyay SK, Njie GJ, Proia KK, Hopkins DP, et al. Cost and economic benefit of clinical decision support systems for cardiovascular disease prevention: a community guide systematic review. J Am Med Inform Assoc. 2017;24:669–76. https://doi.org/10.1093/jamia/ocw160.

Rinke ML, Bundy DG, Velasquez CA, Rao S, Zerhouni Y, et al. Interventions to reduce pediatric medication errors: a systematic review. Pediatrics. 2014;134:338–60. https://doi.org/10.1542/peds.2013-3531.

Porter ME, Larsson S, Lee TH. Standardizing patient outcomes measurement. N Engl J Med. 2016;374:504–6. https://doi.org/10.1056/NEJMp1511701.

Kouri A, Yamada J, Lam Shin Cheung J, van de Velde S, Gupta S. Do providers use computerized clinical decision support systems? A systematic review and meta-regression of clinical decision support uptake. Implement Sci. 2022;17:21. https://doi.org/10.1186/s13012-022-01199-3.

van der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc. 2006;13:138–47. https://doi.org/10.1197/jamia.M1809.

Poly TN, Islam MM, Yang H-C, Li Y-CJ. Appropriateness of overridden alerts in computerized physician order entry: systematic review. JMIR Med Inform. 2020;8:e15653. https://doi.org/10.2196/15653.

Slight SP, Seger DL, Franz C, Wong A, Bates DW. The national cost of adverse drug events resulting from inappropriate medication-related alert overrides in the United States. J Am Med Inform Assoc. 2018;25:1183–8. https://doi.org/10.1093/jamia/ocy066.

Chang S. Scoping reviews and systematic reviews: is it an either/or question? Ann Intern Med. 2018;169:502–3. https://doi.org/10.7326/M18-2205.

Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169:467–73. https://doi.org/10.7326/M18-0850.

Acknowledgements

Not applicable.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

JW, DG, and WG contributed to the study conception and provided comments/revisions to the manuscript. DL, DGR and BA contributed to the screening and execution of the data extraction. DL and JG drafted the manuscript. JW, DG, WG, DGR, and BA read and approved the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Lampe, D., Grosser, J., Grothe, D. et al. How intervention studies measure the effectiveness of medication safety-related clinical decision support systems in primary and long-term care: a systematic review. BMC Med Inform Decis Mak 24, 188 (2024). https://doi.org/10.1186/s12911-024-02596-y

DOI: https://doi.org/10.1186/s12911-024-02596-y