Abstract

The service cycle and dynamic performance of structural parts are affected by the weld grinding accuracy and surface consistency. Owing to assembly errors and thermal deformation, the actual track of the robot does not coincide with the theoretical track when the weld is ground offline, resulting in poor workpiece surface quality. To address these problems, a vision sensing-based online correction system for robotic weld grinding was developed in this study. The system mainly included three subsystems: weld feature extraction, grinding, and robot real-time control. The grinding equipment was first set as a substation for the robot using the WorkVisual software. The input/output (I/O) ports for communication between the robot and the grinding equipment were configured via the I/O mapping function to enable the robot to control the grinding equipment (start, stop, and speed control). Subsequently, the Ethernet KRL software package was used to write the data interaction structure to realize real-time communication between the robot and the laser vision system. To correct the measurement error caused by the bending deformation of the workpiece, we established a surface profile model of the base material in the weld area using a polynomial fitting algorithm to compensate for the measurement data. The corrected extracted weld width and height errors were reduced by 2.01% and 9.3%, respectively. Online weld seam extraction and correction experiments verified the effectiveness of the system’s correction function, and the system could control the grinding trajectory error within 0.2 mm. The reliability of the system was verified through actual weld grinding experiments. The roughness, Ra, could reach 0.504 µm and the average residual height was within 0.21 mm. The resulting system shows a good correction effect and high robustness.

1 Introduction

With the progress of industrial technology and the development of manufacturing, grinding robots are playing an increasingly important role in fields such as automobiles and construction machinery [1, 2]. Quality sensing, information collection, and processing in the grinding process are inevitable requirements for the development of grinding equipment. Automation and intelligence have become key development directions of welding seam grinding processes [3, 4].

Industrial robots are widely used in the field of weld grinding, and grinding paths are typically obtained offline through manual teaching. However, in the actual welding seam grinding process, owing to thermal deformation, misalignment, and workpiece assembly errors, the actual weld track is often inconsistent with the theoretical one, resulting in scratching of the workpiece or ineffective removal of the weld [5, 6]. The requirements for weld grinding are stringent, particularly in fields such as rail transit and aerospace [7]. Traditional offline teaching modes cannot satisfy the production requirements. Therefore, online robot trajectory correction systems for weld grinding are urgently needed.

Given the aforementioned problems, a better solution is to use visual sensors to detect, track, and extract weld contour information and to analyze and process the weld seam space coordinates [8]. Vision sensors can be divided into active [9] and passive systems [10], with monocular and binocular structures [11, 12]. Gu et al. [13] improved a binocular vision system to capture the images of the weld pool surface by suppressing strong arc interference during gas metal arc welding. An algorithm to eliminate image mismatch was proposed to improve the accuracy of the weld image matching algorithm. Wang et al. [14] combined passive and active methods to propose a 3D narrow butt weld inspection system based on binocular consistency analysis that significantly improved inspection efficiency. Dinham et al. [15] realized automatic recognition of the welding seam position according to the image contrast difference and image texture, and applied it in robot arc welding. However, the welding seam extraction approach based on an industrial camera is easily affected by processing conditions such as splash, dust, and arc interference signals in the process of grinding. Moreover, more complex image processing algorithms are required to ensure the accuracy of the extraction results, thereby increasing the computational time and cost of the system to meet the grinding requirements.

To avoid the problems associated with industrial cameras, Yang et al. [16] proposed a method for reconstructing weld seam profiles based on coded structured light to plan the welding task. The developed system could handle different weld seam types such as butt and fillet joints. However, coded structured light is largely dependent on the surrounding environment; hence, ensuring welding accuracy is difficult. Therefore, it is utilized less frequently in engineering applications. Laser line-structured light is widely used in robotic operations owing to its high robustness. Common laser fringes have various shapes, including linear [17], multilinear [18], cross [19], and triangular [20]. Liu et al. [21] proposed a robotic weld seam tracking system based on laser vision and a conditional generative adversarial network (CGAN) for the problem of low welding accuracy in a multilayer multipass metal active gas arc welding (MAG) process. The experimental results showed that the average correction error was less than 0.6 mm, and the adjustment process of the welding gun position could be completed within 1 s. To improve the welding quality of robotic gas metal arc welding, Xu et al. [22] developed a welding system for seam tracking based on a purpose-built vision sensor. The system included the following modules: welding power control, intelligent parameter setting, image capturing and processing, robot communication and path planning, and welding expert database. Li et al. [23] proposed a robust automatic welding seam identification and tracking method utilizing structured light vision to handle possible disturbances in complex unstructured welding environments such as welding splashes and thermal-induced deformations. This method used a string approach to qualitatively describe the weld profile. He et al. [24] presented a visual attention feature-based method to extract the weld seam profile (WSP) from a strong arc background using clustering results to solve the interference data point problem. Compared with previous methods, their method can extract more useful details of the WSP and has better stability in terms of removing interference data. For online seam tracking, Ding et al. [25] developed an online laser-based machine vision system for seam tracking and proposed a shape-matching algorithm to achieve a reliable and accurate seam tracking process adaptive to different groove types. To solve the problem of traditional image processing methods not overcoming the interference of strong noise, Zou et al. [26] proposed a lightweight segmentation network based on SOLOv2. The results showed that the average absolute error of welding was stable at approximately 0.2 mm. However, the aforementioned studies address only the robotic welding process, and research on weld grinding remains comparatively limited. Guo et al. [27] presented a robotic weld grinding motion planning methodology and developed an experimental platform for the robotic removal of seam weld beads. Li et al. [28] designed a robotic system for grinding weld seams and presented a monitoring method for excessive grinding. Owing to the complexity and uncertainty of the grinding process, little research has been conducted on robotic automation systems for weld grinding.

After welding, the shape of the weld is irregular and the thermal deformation of the weld is significant; hence, the online identification process of the weld is difficult. Owing to the complexity and uncontrollability of the grinding process, welding seam grinding and online deviation correction are difficult. Currently, few studies exist on automatic welding seam grinding. Most grinding robots can only perform grinding operations according to a planned path and are unable to adapt to the influence of interference factors such as deformation and error, resulting in poor grinding quality. Therefore, a robotic weld grinding online correction system is urgently required to satisfy manufacturing requirements.

In this study, a robot weld grinding online correction system based on laser visual sensing was built to identify, track, and extract weld contour information in real time and adjust the grinding path online. Experiments showed that the system could effectively solve the problems of low productivity, poor grinding quality, and a low level of automation in manual grinding. Section 2 introduces the overall design of the system, focusing on the analysis of its overall architecture and communication process. Section 3 introduces the online correction process for the system. In Section 4, the experimental results demonstrate the effectiveness and adaptability of the proposed system.

2 System Overall Design

2.1 System Requirements

Owing to the wide variety of weld seams and shapes of structural components [29] (Figure 1) combined with the thermal deformation and assembly error of the welding process, satisfying the processing requirements by manual teaching alone is difficult. Therefore, during the grinding process, the weld contours must be recognized and tracked and the grinding trajectory of the robot must be corrected in real time to suit the different types and shapes of grinding tasks. A robotic weld seam grinding correction system is proposed based on the weld grinding requirements for various structural components.

2.2 Overall System Architecture

The system has a KUKA robot as its core body, with vision-sensing equipment and grinding equipment installed as subdevices at the end of the robot. The system software is also developed for real-time weld seam feature extraction, analysis, and data interaction. A laser vision sensor is mounted in front of the grinding wheel to prescan the weld. The software system extracts the weld profile from the scan information, calculates the weld characteristics (height, width, and center point offset), and stores them in a data buffer. When the robot starts grinding, the software system transfers the correction data to the robot control system in real time, enabling it to correct the deflection online. The robotic weld grinding online correction system is shown in Figure 2. It mainly consists of a grinding robot, laser vision system, grinding system, industrial control machine, communication system, and human–computer interaction system. The system includes the following functions.

(1) Extraction of weld seam feature information. The measurement results of laser vision sensors are easily affected by workpiece deformation and irregular weld seam contours, resulting in inaccurate measurements. Therefore, a neural network model is first established to segment the point cloud data in the base material and weld seam area. A surface profile model of the base material is then established in the weld area using a polynomial fitting algorithm to compensate for the measurement data. The edge points of the weld seam and base material are then extracted using a threshold segmentation method. The height, width, and center of the weld seam are obtained. The difference between the center of the weld seam and the actual position of the robot is calculated to obtain the correction value.

(2) Online interaction of the correction data. In this study, an Extensible Markup Language (XML)-based data interaction architecture is developed that allows the system software on a PC to send the correction data to the robot control system in real time to correct the robot grinding path.
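The feature extraction described in item (1) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes the profile has already been compensated so that the base material lies near zero height, and the function name `extract_weld_features`, the 0.2 mm threshold, the `robot_y` parameter, and the synthetic profile are all hypothetical.

```python
import numpy as np


def extract_weld_features(x, h, threshold=0.2, robot_y=0.0):
    """Return width, height, center, and lateral correction for one profile.

    x: lateral positions (mm); h: compensated heights above the base material (mm).
    threshold and robot_y are illustrative assumptions, not values from the paper.
    """
    x = np.asarray(x, dtype=float)
    h = np.asarray(h, dtype=float)
    above = np.flatnonzero(h > threshold)  # indices of points belonging to the weld
    if above.size == 0:
        raise ValueError("no weld found above threshold")
    left, right = x[above[0]], x[above[-1]]  # edge points of the weld seam
    width = right - left
    height = h.max()
    center = 0.5 * (left + right)
    correction = center - robot_y  # offset between weld center and robot position
    return width, height, center, correction


# Synthetic parabolic bead, 9 mm wide and 2 mm high, centered at x = 1.5 mm.
x = np.linspace(-10.0, 10.0, 2001)
h = np.clip(2.0 * (1.0 - ((x - 1.5) / 4.5) ** 2), 0.0, None)
width, height, center, correction = extract_weld_features(x, h)
```

Because the edges are taken where the profile crosses the threshold, the extracted width is slightly smaller than the true bead width; a lower threshold narrows that gap but makes the result more sensitive to residual noise on the base material.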

2.3 System Communication Process

The system consists of three main modules: weld feature extraction, data real-time interaction, and weld grinding.

1) Weld seam feature extraction module. The vision sensor is mounted in front of the grinding wheel at the end of the robot to ensure that the laser structured light is projected onto the surface of the weld.

2) Data online interaction module. An industrial computer is used as the server and the robot controller as the client. An XML structure serves as the data carrier for exchanging the robot's real-time position and the correction data online; the robot control system applies the correction data as an offset to the tool coordinate system.

3) Weld grinding module. Weld grinding equipment, including a frequency converter, an electric spindle, and a CBN grinding wheel, is installed at the end flange of the robot. The electric spindle provides the grinding power, the frequency converter changes the grinding speed, and the grinding wheel removes the material.

The communication process between the modules is illustrated in Figure 3. First, the laser vision sensor transmits weld seam point cloud data to the data online interaction module via TCP. On the PC side, the system extracts the weld seam features based on the proposed method and stores them in a data cache while waiting for the robot to respond. After receiving a signal from the robot, the system sends the correction data as an XML structure to the robot controller through Ethernet KRL, the KUKA robot communication package. The controller then adjusts the robot tool coordinate system based on the correction data, thereby enabling online correction of the weld grinding trajectory.

3 Online Correction Process for Weld Grinding

3.1 Accurate Extraction of Weld Features

3.1.1 Data Fitting

The best way to achieve an online correction process is to analyze the profile data obtained by the sensor for each weld cross section. However, inaccurate and unstable measurement results are often caused by workpiece bending deformations and noisy signals. Therefore, a suitable contour model must be identified as a reference to correct the measurement results, accommodating the effects of dynamic measurement processes and other noisy signals. In this system, we first denoise and classify the contour data. A surface profile model of the base material in the weld area is then constructed using a polynomial fitting algorithm to correct the measurement errors caused by the bending deformation of the workpiece. Finally, accurate weld seam features are extracted online, and correction values are calculated.

In this study, a neural network model [30] is first established to segment the point cloud data in the base material and weld seam area. The model is shown in Figure 4, where \(x_{j}\) denotes the measured contour data, \(w_{j}\) denotes the weight of the point data, \(\theta\) denotes the threshold of the hidden layer node, and f represents the following activation function.

This model learns from a large amount of data and adjusts the neuron weights and thresholds to accurately classify the weld seam and base material areas. \(x_{j}\) is a point cloud dataset of weld seam profile sections measured using a laser vision sensor. The network has a single hidden layer, and the scaled conjugate gradient (SCG) algorithm is used for training, with the number of iterations initially set at 1000. Because the zero position of the sensor is at the position of its laser emitter, the threshold is the distance from the laser emitter to the base material of the weld, which is 25 mm for this measurement.

Next, the segmented base material area data are fitted with a polynomial expressed as follows:

where \(f(x_{j} )\) represents the cubic (third-order) polynomial function, \(y_{j}\) denotes the fitted height at lateral position \(x_{j}\) along the weld profile, and \(\left[ {\begin{array}{*{20}c} a & b & c & d \\ \end{array} } \right]\) represents the parameter vector of the cubic polynomial.

\(\varepsilon_{j}\) is introduced to denote the residual value between \(y_{j}\) and measured value \(y^{\prime}_{j}\)

The parameters in Eq. (2) can be solved on the basis of the \(\varepsilon_{j}\) minimum principle.

Eq. (5) is further constructed as follows:

To satisfy the minimization principle, the partial derivative of \(f(a,b,c,d)\) is zero.

Therefore, Eq. (5) can be rewritten as follows:

The matrix can be simplified as follows:

Then, the parameter vector of the cubic polynomial function can be calculated as follows:
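The displayed equations of this derivation are not reproduced in the text. The block below is a reconstruction from the surrounding definitions, assuming an ordinary least-squares fit of a cubic polynomial with \(a\) as the leading coefficient. The matrix symbols \(X\), \(Y'\), and \(\beta\) are introduced here for illustration and are not necessarily the authors' notation; the original equation numbers are not recovered.

```latex
% Fitted model and residual
y_j = f(x_j) = a x_j^3 + b x_j^2 + c x_j + d, \qquad
\varepsilon_j = y_j - y'_j, \qquad j = 1, \dots, n

% Least-squares objective
f(a,b,c,d) = \sum_{j=1}^{n} \varepsilon_j^2
           = \sum_{j=1}^{n} \left( a x_j^3 + b x_j^2 + c x_j + d - y'_j \right)^2

% Stationarity conditions
\frac{\partial f}{\partial a} = 2 \sum_{j=1}^{n} \varepsilon_j x_j^3 = 0, \quad
\frac{\partial f}{\partial b} = 2 \sum_{j=1}^{n} \varepsilon_j x_j^2 = 0, \quad
\frac{\partial f}{\partial c} = 2 \sum_{j=1}^{n} \varepsilon_j x_j = 0, \quad
\frac{\partial f}{\partial d} = 2 \sum_{j=1}^{n} \varepsilon_j = 0

% Matrix form (normal equations) and solution
X = \begin{bmatrix}
      x_1^3 & x_1^2 & x_1 & 1 \\
      \vdots & \vdots & \vdots & \vdots \\
      x_n^3 & x_n^2 & x_n & 1
    \end{bmatrix}, \quad
Y' = \begin{bmatrix} y'_1 \\ \vdots \\ y'_n \end{bmatrix}, \quad
\beta = \begin{bmatrix} a & b & c & d \end{bmatrix}^{\mathsf{T}}

X^{\mathsf{T}} X \beta = X^{\mathsf{T}} Y'
\quad \Longrightarrow \quad
\beta = \left( X^{\mathsf{T}} X \right)^{-1} X^{\mathsf{T}} Y'
```

The closed-form solution requires \(X^{\mathsf{T}} X\) to be invertible, which holds when the profile contains at least four distinct \(x_j\) values.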

Based on this segmentation model, the weld is segmented from the base material area, and the results are shown in Figure 5a. The fitting results based on the proposed fitting algorithm for further fitting the surface profile of the base material are shown in Figure 5b.

The root mean square error (RMSE) and the sum of squared errors (SSE) are used to evaluate the accuracy of the fitted model.

where n denotes the number of points in the base material area, and \(\varepsilon_{j}\) denotes the residual between the fitted value \(y_{j}\) and the measured value \(y^{\prime}_{j}\). The calculation gives RMSE = 0.0237 and SSE = 0.0908, indicating that the fit is accurate.
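The fit and its two error metrics can be sketched in a few lines. The sketch assumes the conventional definitions \(\mathrm{SSE} = \sum_j \varepsilon_j^2\) and \(\mathrm{RMSE} = \sqrt{\mathrm{SSE}/n}\); under that assumption the reported values would correspond to roughly 160 base-material points. The function names and the synthetic profile are hypothetical, and `numpy.linalg.lstsq` stands in for whatever solver the authors used.

```python
import numpy as np


def fit_base_profile(x, y_meas):
    """Least-squares cubic fit; returns coefficients [a, b, c, d] (highest power first)."""
    x = np.asarray(x, dtype=float)
    y_meas = np.asarray(y_meas, dtype=float)
    X = np.column_stack([x**3, x**2, x, np.ones_like(x)])  # design matrix
    coeffs, *_ = np.linalg.lstsq(X, y_meas, rcond=None)
    return coeffs


def fit_errors(x, y_meas, coeffs):
    """Return (SSE, RMSE) of the fitted profile against the measured heights."""
    residuals = np.polyval(coeffs, x) - np.asarray(y_meas, dtype=float)
    sse = float(np.sum(residuals**2))
    rmse = float(np.sqrt(sse / residuals.size))
    return sse, rmse


# Synthetic bent base-material profile with small measurement noise (made-up data).
rng = np.random.default_rng(0)
x = np.linspace(-20.0, 20.0, 160)
y_true = 1e-4 * x**3 - 2e-3 * x**2 + 0.01 * x + 25.0
y_meas = y_true + rng.normal(0.0, 0.02, x.size)

coeffs = fit_base_profile(x, y_meas)
sse, rmse = fit_errors(x, y_meas, coeffs)
```

Solving with `lstsq` rather than forming \((X^{\mathsf{T}}X)^{-1}\) explicitly gives the same coefficients in exact arithmetic and is usually better conditioned when \(x\) spans tens of millimetres.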

3.1.2 Data Correction

In this study, the difference between the fitted base material profile data and the measured weld profile data is calculated to obtain the compensated weld profile data. As shown in Figure 6, the corrected profile of the base material tends to be flat. The actual weld width based on multiple measurements with Vernier calipers is 9.46 mm and the height is 2.38 mm. The width of the weld measured before correction is 9.05 mm and the height is 2.03 mm. The width of the weld measured after correction is 9.24 mm and the height is 2.25 mm; the relative errors in width and height are thus reduced by 2.01 and 9.3 percentage points, respectively. This method reduces the errors caused by the deformation of the base material and the mounting angle of the sensor, resulting in a more accurate extraction result.
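The error reductions quoted above can be checked directly from the stated measurements, assuming the errors are relative to the caliper values and the reduction is the difference between the errors before and after correction. The helper name `relative_error_pct` is hypothetical.

```python
def relative_error_pct(measured, reference):
    """Absolute relative error of a measurement, in percent of the reference."""
    return abs(measured - reference) / reference * 100.0


# Values quoted in the text (mm): caliper reference, before and after correction.
width_ref, width_before, width_after = 9.46, 9.05, 9.24
height_ref, height_before, height_after = 2.38, 2.03, 2.25

width_gain = relative_error_pct(width_before, width_ref) - relative_error_pct(width_after, width_ref)
height_gain = relative_error_pct(height_before, height_ref) - relative_error_pct(height_after, height_ref)

print(f"width error reduction:  {width_gain:.2f} percentage points")
print(f"height error reduction: {height_gain:.2f} percentage points")
```

Under these assumptions the script gives about 2.01 points for the width, matching the reported figure, and about 9.24 points for the height, close to the reported 9.3.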

3.2 Real-time Interaction Process of Correction Data

During the weld grinding process, the robot drives the sensor to scan the weld in real time and obtain laser contour data. The system software calculates the weld profile features, including the width, height, and weld center point, based on the algorithm proposed in this study. Once the system receives a response from the robot, it uses an XML structure as the data carrier to exchange data between the robot and the vision system. The robot controller applies the correction values as an offset to the grinding tool coordinate system, enabling the robot to correct the grinding trajectory in real time. The real-time interactions of the correction data are shown in Figure 7.

The real-time data interaction process is implemented based on the compiled XML files, avoiding control by third-party devices. Ethernet KRL, a KUKA robot communication package, can receive and send XML data structures. However, the data structure must be consistent when sending and receiving XML data. Therefore, in this study, a consistent XML data structure is written for online interaction of the data. As shown in Figure 8, this structure includes a parameter configuration, receiving structure, and sending structure. The PC first receives the robot position data from the robot controller, calculates the correction value, and simultaneously sends it to the robot controller that then corrects the deflection online. This process is repeated until the grinding process is completed.
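One receive-and-reply exchange on the PC side can be sketched as below. This is only an illustration of the idea of a fixed, shared XML structure: the tag and attribute names (`Sensor`, `Correction`, `Robot`, `Position`, `X`/`Y`/`Z`) and the numeric values are hypothetical, and in practice they would have to match the structure configured for Ethernet KRL on the robot controller. Socket handling is omitted.

```python
import xml.etree.ElementTree as ET


def build_correction_message(dx, dy, dz):
    """Serialize a correction offset (mm) as an XML byte string for the robot."""
    root = ET.Element("Sensor")  # hypothetical root tag
    ET.SubElement(root, "Correction", X=f"{dx:.3f}", Y=f"{dy:.3f}", Z=f"{dz:.3f}")
    return ET.tostring(root, encoding="utf-8")


def parse_robot_position(payload):
    """Parse the robot's reported position (mm) from an XML byte string."""
    root = ET.fromstring(payload)
    pos = root.find("Position")  # hypothetical element name
    if pos is None:
        raise ValueError("missing <Position> element")
    return tuple(float(pos.get(axis)) for axis in ("X", "Y", "Z"))


# One exchange: the robot reports its position, the PC answers with a correction.
robot_payload = b'<Robot><Position X="512.300" Y="-40.125" Z="230.000"/></Robot>'
x, y, z = parse_robot_position(robot_payload)
weld_center_y = -39.900  # made-up weld center from the vision software
message = build_correction_message(0.0, weld_center_y - y, 0.0)
```

Keeping the builder and the parser next to each other makes it easier to keep the sending and receiving structures consistent, which the text identifies as a requirement of the interface.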

4 Experimental Results and Discussion

4.1 Experimental Platform

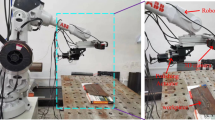

The vision sensing-based online correction system for robotic weld grinding consisted of a KUKA KR210 industrial robot, a Keyence LJ-G500 sensor, a frequency converter, an electric spindle, a converter, a force sensor, an Advantech IP610 industrial computer, a control panel, an end bracket, and system software, as shown in Figure 9.

Figure 9 Experimental platform: (a) Robot weld grinding system; (b) Grinding device: 1. Adapter plate between the robot flange and the force sensor, 2. Force sensor, 3. Force sensor bracket, 4. Adapter plate between the force transducer and the motor spindle, 5. Motor spindle bracket, 6. Laser vision sensor bracket, 7. Adapter plate between the laser vision sensor and the motor spindle; (c) Weld feature online extraction software

4.2 Weld Feature Extraction Experiment

In this study, the effectiveness of the proposed method for extracting weld features was verified. As shown in Figure 10a, an S-curve weld was used as the experimental object. Five weld sections were selected, and the weld width and height were measured repeatedly using a Vernier caliper. The average value was used as the measurement result. The robot was then taught in advance to record these five positions for online measurement and feature extraction experiments. The experimental results are shown in Figures 10b and c. Notably, the proposed method extracted the weld height with an error of approximately 1.1% and the weld width with an error of approximately 4.3%, thereby satisfying the processing requirements.

4.3 System Correction Experiments

Two sets of experiments were conducted to verify the online correction function of the proposed system. A straight weld of a steel plate was used as the experimental object. As shown in Figure 11a, weld starting point O was defined as the origin of the workpiece coordinate system. The initial position of the grinding wheel was then manually adjusted to point A, where the offset from point A to point O was 10 mm. When the system began running, the offset between the robot and the center of the weld was recorded every 20 mm using a Vernier caliper. The experimental results, as shown in Figure 11b, indicated that the robot corrected the initial trajectory at the second grinding point and later tracked the center of the weld. The error in the trajectory after correction was less than 0.1 mm. Subsequently, a curved weld of a steel plate was used as the experimental object to verify the correction effect of the system. As shown in Figure 11c, weld seam starting point A was defined as the origin of the workpiece coordinate system. First, the coordinate values of the 10 grinding points in the workpiece coordinate system were measured using a Vernier caliper and recorded as standard data. The grinding point trajectory was then manually taught to track the weld profile and record the coordinate values of the teaching grinding point in the workpiece coordinate system. The system began running, corrected the teaching trajectory, and recorded the coordinates of the grinding point in the workpiece coordinate system after correction. The experimental results are presented in Figure 11d. The teaching error was approximately 2 mm owing to the human error of the teaching process, and the grinding trajectory error after system correction was approximately 0.2 mm, thereby verifying the effectiveness of the system correction function. Curved weld trajectories had a higher tracking error than straight weld trajectories. This might be because the laser measurement was not perfectly perpendicular to the weld profile, the weld curvature changed abruptly, or the system did not completely respond.

4.4 System Grinding Experiments

To verify the actual grinding effect of the system, we conducted actual grinding experiments with straight and irregular curved welds, where the weld length was 220 mm and the material was alloy steel. The first was a grinding experiment using a straight weld. Based on the method proposed in this study, each weld profile was extracted, and all the profiles were reconstructed to obtain the global information of the weld. In Figure 12a, the green part indicates the entire weld seam, and the red part indicates the center of the weld. The surface of the weld after grinding is shown in Figure 12b, with good surface quality. Ten equally spaced points were selected to measure the surface roughness and residual height after grinding; the sampling length for measuring the roughness was 0.8 mm. The results are shown in Figures 12c and d. The surface roughness Ra after grinding could reach 0.504 μm, and the average residual height was 0.215 mm, thereby satisfying the processing requirements.

Subsequently, an irregular curved weld grinding experiment was conducted, where the weld length was 220 mm and the material was alloy steel. The surface of the weld after grinding is shown in Figure 13b, with good surface quality. The surface roughness and residual height after grinding are shown in Figures 13c and d. The surface roughness Ra after grinding could reach 0.512 μm, and the average residual height was 0.221 mm.

These experimental results showed that the system could adapt to different shapes of weld grinding, had a high correction accuracy, and could obtain good surface quality.

5 Conclusions

To solve the problem that the actual trajectory of the robot does not coincide with the theoretical trajectory during robotic weld grinding, a vision sensing-based online correction system for robotic weld grinding was developed. The main focus of this study was the construction of the correction system, accurate extraction of weld features, and online interaction of grinding data. The experimental results proved the effectiveness of the correction function of the system.

(1) A vision sensing-based online correction system for robotic weld grinding was developed that could correct the grinding trajectory online and effectively remove weld beads. The experimental results showed that the corrected trajectory error could be controlled to within 0.2 mm and that the surface roughness Ra after grinding could reach 0.504 μm, thereby meeting the processing requirements.

(2) The values measured by the laser vision sensor were effectively corrected based on the third-order polynomial fit to the base material surface. The corrected extracted weld width and height errors were reduced by 2.01% and 9.3%, respectively. Comparative experiments demonstrated that the proposed online extraction method for post-weld seam features could quickly and accurately extract weld feature values, including seam height, width, and center point data.

(3) A data interaction architecture based on an XML structure was developed to realize real-time data transfer between the laser vision sensor and the robot.

Availability of Data and Materials

Data and materials will be made available on request.

References

Y J Lv, Z Peng, C Qu, et al. An adaptive trajectory planning algorithm for robotic belt grinding of blade leading and trailing edges based on material removal profile model. Robotics and Computer-Integrated Manufacturing, 2020, 66: 101987.

C Chen, Y Wang, Z T Gao, et al. Intelligent learning model-based skill learning and strategy optimization in robot grinding and polishing. Science China Technological Sciences, 2022, 65(9): 1957-1974.

Q L Xie, H Zhao, T Wang, et al. Adaptive impedance control for robotic polishing with an intelligent digital compliant grinder. International Conference on Intelligent Robotics and Applications. Shenyang, China, Aug 8–11, 2019: 482-494.

A Verl, A Valente, S Melkote, et al. Robots in machining. CIRP Annals, 2019, 68(2): 799-822.

D H Zhu, X Z Feng, X H Xu, et al. Robotic grinding of complex components: A step towards efficient and intelligent machining–challenges, solutions, and applications. Robotics and Computer-Integrated Manufacturing, 2020, 65: 101908.

V Pandiyan, P Murugan, T Tjahjowidodo, et al. In-process virtual verification of weld seam removal in robotic abrasive belt grinding process using deep learning. Robotics and Computer-Integrated Manufacturing, 2019, 57: 477-487.

H Y Zhang, L Li, J B Zhao, et al. Design and implementation of hybrid force/position control for robot automation grinding aviation blade based on fuzzy PID. The International Journal of Advanced Manufacturing Technology, 2020, 107(3): 1741-1754.

J M Ge, Z H Deng, Z Y Li, et al. Adaptive parameter optimization approach for robotic grinding of weld seam based on laser vision sensor. Robotics and Computer-Integrated Manufacturing, 2023, 82: 102540.

L Hong, B S Wang, X L Yang, et al. Offline programming method and implementation of industrial robot grinding based on VTK. Industrial Robot: the International Journal of Robotics Research and Application, 2020, 47(4): 547-557.

H Y He, J Liu, Y Zhang, et al. Quantitative prediction of additive manufacturing deposited layer offset based on passive visual imaging and deep residual network. Journal of Manufacturing Processes, 2021, 72: 195-202.

Z Y Zhang, Y F Cao, M Ding, et al. Monocular vision-based obstacle avoidance trajectory planning for unmanned aerial vehicle. Aerospace Science and Technology, 2020, 106: 106199.

H D Zhang, J Z Huo, F Yang, et al. A flexible calibration method for large-range binocular vision system based on state transformation. Optics & Laser Technology, 2023, 164: 109546.

Z N Gu, J Chen, C S Wu. Three-dimensional reconstruction of welding pool surface by binocular vision. Chinese Journal of Mechanical Engineering, 2021, 34: 47.

X G Wang, T J Chen, Y M Wang, et al. The 3D narrow butt weld seam detection system based on the binocular consistency correction. Journal of Intelligent Manufacturing, 2023, 34: 2321-2332.

M Dinham, G Fang. Autonomous weld seam identification and localisation using eye-in-hand stereo vision for robotic arc welding. Robotics and Computer-Integrated Manufacturing, 2013, 29(5): 288-301.

L Yang, Y H Liu, J N Z Peng, et al. A novel system for off-line 3D seam extraction and path planning based on point cloud segmentation for arc welding robot. Robotics and Computer-Integrated Manufacturing, 2020, 64: 101929.

Z Y Tan, B L Zhao, Y Ji, et al. A welding seam positioning method based on polarization 3D reconstruction and linear structured light imaging. Optics & Laser Technology, 2022, 151: 108046.

Z H Yan, F T Hu, J Fang, et al. Multi-line laser structured light fast visual positioning system with assist of TOF and CAD. Optik, 2022, 269: 169923.

J C Guo, Z M Zhu, W B Sun, et al. Principle of an innovative visual sensor based on combined laser structured lights and its experimental verification. Optics & Laser Technology, 2019, 111: 35-44.

J M Romano, A Garcia-Giron, P Penchev, et al. Triangular laser-induced submicron textures for functionalising stainless steel surfaces. Applied Surface Science, 2018, 440: 162-169.

C F Liu, J Q Shen, S S Hu, et al. Seam tracking system based on laser vision and CGAN for robotic multi-layer and multi-pass MAG welding. Engineering Applications of Artificial Intelligence, 2022, 116: 105377.

Y L Xu, N Lv, G Fang, et al. Welding seam tracking in robotic gas metal arc welding. Journal of Materials Processing Technology, 2017, 248: 18-30.

X D Li, X G Li, S Ge, et al. Automatic welding seam tracking and identification. IEEE Transactions on Industrial Electronics, 2017, 64(9): 7261-7271.

Y S He, Z H Yu, J Li, et al. Discerning weld seam profiles from strong arc background for the robotic automated welding process via visual attention features. Chinese Journal of Mechanical Engineering, 2020, 33: 21.

Y Y Ding, W Huang, R Kovacevic. An on-line shape-matching weld seam tracking system. Robotics and Computer-Integrated Manufacturing, 2016, 42: 103-112.

Y B Zou, G H Zeng. Light-weight segmentation network based on SOLOv2 for weld seam feature extraction. Measurement, 2023: 112492.

W J Guo, Y G Zhu, X He. A robotic grinding motion planning methodology for a novel automatic seam bead grinding robot manipulator. IEEE Access, 2020, 8: 75288-75302.

M Y Li, Z J Du, X M Ma, et al. System design and monitoring method of robot grinding for friction stir weld seam. Applied Sciences, 2020, 10(8): 2903.

T Y Liu, P Zheng, J S Bao. Deep learning-based welding image recognition: A comprehensive review. Journal of Manufacturing Systems, 2023, 68: 601-625.

M Y Xin, Y Wang. Research on image classification model based on deep convolution neural network. EURASIP Journal on Image and Video Processing, 2019: 1-11.

Acknowledgements

Not applicable.

Funding

Supported by Hunan Provincial Natural Science Foundation of China (Grant No. 2021JJ50116).

Author information

Contributions

JG wrote the manuscript; ZD reviewed the manuscript; SW was in charge of the whole trial; ZL and WL assisted with sampling and laboratory analyses; JN provided the trial help. All authors read and approved the final manuscript.

Authors’ Information

Jimin Ge, born in 1995, is currently a PhD candidate at Hunan University of Science and Technology, China. He received his bachelor’s degree from Hunan University of Science and Technology, China, in 2018. His main research interests include robotic weld grinding and intelligent manufacturing.

Zhaohui Deng, born in 1968, is currently a professor at Huaqiao University, China. He received his doctor’s degree from Hunan University, China. His main research interests include precision and efficient machining of difficult-to-machine materials, intelligent grinding expert knowledge system, green manufacturing and intelligent manufacturing.

Shuixian Wang, born in 1998, received her master's degree from Hunan University of Science and Technology, China, in 2023. Her main research interests include three-dimensional reconstruction and feature extraction of weld surfaces.

Zhongyang Li, born in 1994, received his doctor's degree from Hunan University of Science and Technology, China, in 2023. His main research interests include Sapphire double-sided chemical mechanical polishing and intelligent expert knowledge systems.

Wei Liu, born in 1986, is currently an assistant professor at Hunan University of Science and Technology, China. He received his doctor's degree from Hunan University, China. His main research interests include precision and efficient machining of difficult-to-machine materials and preparation of structured grinding wheels.

Jiaxu Nie, born in 1988, is an engineer at ZOOMLION Heavy Industry Science and Technology Co., Ltd., Changsha, China. He received his master's degree from Central South University, China. His main research interests include development of processes and systems for pump truck equipment and automated grinding of weld seams.

Ethics declarations

Competing Interests

The authors declare no competing financial interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ge, J., Deng, Z., Wang, S. et al. Vision Sensing-Based Online Correction System for Robotic Weld Grinding. Chin. J. Mech. Eng. 36, 125 (2023). https://doi.org/10.1186/s10033-023-00955-w

DOI: https://doi.org/10.1186/s10033-023-00955-w