Abstract

Matrix product state methods are known to be efficient for computing ground states of local, gapped Hamiltonians, particularly in one dimension. We introduce the multi-targeted density matrix renormalization group method that acts on a bundled matrix product state holding many excitations. The use of a block or banded Lanczos algorithm allows for the simultaneous, variational optimization of the bundle of excitations. The method is demonstrated on a Heisenberg model and other cases of interest. A large number of excitations can be obtained at a small bond dimension with highly reliable local observables throughout the chain.

Data Availability Statement

Data used for this paper are derived entirely from the DMRjulia library for tensor network computations [43].

References

S.R. White, Density matrix formulation for quantum renormalization groups. Phys. Rev. Lett. 69, 2863 (1992)

S.R. White, Density-matrix algorithms for quantum renormalization groups. Phys. Rev. B 48, 10345 (1993)

U. Schollwöck, The density-matrix renormalization group. Rev. Modern Phys. 77, 259 (2005)

U. Schollwöck, The density-matrix renormalization group in the age of matrix product states. Ann. Phys. 326, 96–192 (2011)

E.M. Stoudenmire, S.R. White, Studying two-dimensional systems with the density matrix renormalization group. Annu. Rev. Condens. Matter Phys. 3, 111–128 (2012)

S. Östlund, S. Rommer, Thermodynamic limit of density matrix renormalization. Phys. Rev. Lett. 75, 3537 (1995)

S. Rommer, S. Östlund, Class of ansatz wave functions for one-dimensional spin systems and their relation to the density matrix renormalization group. Phys. Rev. B 55, 2164 (1997)

I. Affleck, T. Kennedy, E.H. Lieb, H. Tasaki, Valence bond ground states in isotropic quantum antiferromagnets, in Condensed Matter Physics and Exactly Soluble Models (Springer, Berlin, 1988), pp. 253–304

J. Feldt, C. Filippi, Excited-state calculations with quantum Monte Carlo, arXiv preprint arXiv:2002.03622 (2020)

L. Vanderstraeten, J. Haegeman, F. Verstraete, Simulating excitation spectra with projected entangled-pair states. Phys. Rev. B 99, 165121 (2019)

E.F. D’Azevedo, W.R. Elwasif, N.D. Patel, G. Alvarez, Targeting multiple states in the density matrix renormalization group with the singular value decomposition, arXiv preprint arXiv:1902.09621 (2019)

B. Ponsioen, P. Corboz, Excitations with projected entangled pair states using the corner transfer matrix method. Phys. Rev. B 101, 195109 (2020)

C. Degli Esposti Boschi, F. Ortolani, Investigation of quantum phase transitions using multi-target DMRG methods. Eur. Phys. J. B-Condens. Matter Complex Syst. 41, 503–516 (2004)

K.A. Hallberg, Density-matrix algorithm for the calculation of dynamical properties of low-dimensional systems. Phys. Rev. B 52, R9827 (1995)

E. Jeckelmann, Dynamical density-matrix renormalization-group method. Phys. Rev. B 66, 045114 (2002)

N. Chepiga, F. Mila, Excitation spectrum and density matrix renormalization group iterations. Phys. Rev. B 96, 054425 (2017)

M. Van Damme, R. Vanhove, J. Haegeman, F. Verstraete, L. Vanderstraeten, Efficient matrix product state methods for extracting spectral information on rings and cylinders. Phys. Rev. B 104, 115142 (2021)

V. Khemani, F. Pollmann, S.L. Sondhi, Obtaining highly excited eigenstates of many-body localized Hamiltonians by the density matrix renormalization group approach. Phys. Rev. Lett. 116, 247204 (2016)

X. Yu, D. Pekker, B.K. Clark, Finding matrix product state representations of highly excited eigenstates of many-body localized Hamiltonians. Phys. Rev. Lett. 118, 017201 (2017)

T.E. Baker, S. Desrosiers, M. Tremblay, M.P. Thompson, Méthodes de calcul avec réseaux de tenseurs en physique. Can. J. Phys. 99, 4 (2021). https://doi.org/10.1139/cjp-2019-0611

T.E. Baker, S. Desrosiers, M. Tremblay, M.P. Thompson, Basic tensor network computations in physics, arXiv preprint arXiv:1911.11566, p. 19 (2019)

N. Nakatani, S. Wouters, D. Van Neck, G.K. Chan, Linear response theory for the density matrix renormalization group: Efficient algorithms for strongly correlated excited states. J. Chem. Phys. 140, 2 (2014)

R.-Z. Huang, H.-J. Liao, Z.-Y. Liu, H.-D. Xie, Z.-Y. Xie, H.-H. Zhao, J. Chen, T. Xiang, Generalized Lanczos method for systematic optimization of tensor network states. Chin. Phys. B 27, 070501 (2018)

J. Cullum, W.E. Donath, A block Lanczos algorithm for computing the q algebraically largest eigenvalues and a corresponding eigenspace of large, sparse, real symmetric matrices, in 1974 IEEE Conference on Decision and Control including the 13th Symposium on Adaptive Processes (IEEE, 1974), pp. 505–509

Z. Bai, J. Demmel, J. Dongarra, A. Ruhe, H. van der Vorst, Templates for the solution of algebraic eigenvalue problems: a practical guide (SIAM, Philadelphia, 2000)

T.N. Dionne, A. Foley, M. Rousseau, D. Sénéchal, Pyqcm: An open-source Python library for quantum cluster methods. SciPost Phys. Codebases 23 (2023). https://doi.org/10.21468/SciPostPhysCodeb.23

A. Di Paolo, T.E. Baker, A. Foley, D. Sénéchal, A. Blais, Efficient modeling of superconducting quantum circuits with tensor networks. NPJ Quant Inf 7, 1–11 (2021). https://doi.org/10.1038/s41534-020-00352-4

T.E. Baker, M.P. Thompson, DMRjulia: Basic construction of a tensor network library for the density matrix renormalization group, arXiv preprint arXiv:2109.03120 (2021)

J. Hauschild, F. Pollmann, Efficient numerical simulations with tensor networks: Tensor Network Python (TeNPy). SciPost Phys. Lect. Notes 5 (2018)

R. Orús, Tensor networks for complex quantum systems. Nat. Rev. Phys. 1, 538–550 (2019)

I.P. McCulloch, From density-matrix renormalization group to matrix product states. J. Stat. Mech. Theory Exp. 2007, P10014 (2007)

C. Hubig, I.P. McCulloch, U. Schollwöck, F.A. Wolf, Strictly single-site DMRG algorithm with subspace expansion. Phys. Rev. B 91, 155115 (2015)

F.D.M. Haldane, Continuum dynamics of the 1-D Heisenberg antiferromagnet: Identification with the O(3) nonlinear sigma model. Phys. Lett. A 93, 464–468 (1983)

F.D.M. Haldane, Nonlinear field theory of large-spin Heisenberg antiferromagnets: semiclassically quantized solitons of the one-dimensional easy-axis Néel state. Phys. Rev. Lett. 50, 1153 (1983)

E.H. Lieb, Two theorems on the Hubbard model. Phys. Rev. Lett. 62, 1201 (1989)

H.A. Kramers, Théorie générale de la rotation paramagnétique dans les cristaux. Proc. Acad. Amst. 33, 2 (1930)

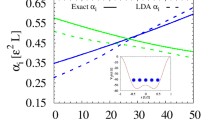

T.E. Baker, E.M. Stoudenmire, L.O. Wagner, K. Burke, S.R. White, One-dimensional mimicking of electronic structure: The case for exponentials. Phys. Rev. B 91, 235141 (2015). https://doi.org/10.1103/PhysRevB.91.235141

L.O. Wagner, T.E. Baker, E.M. Stoudenmire, K. Burke, S.R. White, Kohn-Sham calculations with the exact functional. Phys. Rev. B 90, 045109 (2014). https://doi.org/10.1103/PhysRevB.90.045109

S.V. Dolgov, B.N. Khoromskij, I.V. Oseledets, D.V. Savostyanov, Computation of extreme eigenvalues in higher dimensions using block tensor train format. Comput. Phys. Commun. 185, 1207–1216 (2014)

A.V. Knyazev, Toward the optimal preconditioned eigensolver: Locally optimal block preconditioned conjugate gradient method. SIAM J. Sci. Comput. 23, 517–541 (2001)

R. Bhatia, Matrix Analysis (Springer-Verlag, New York, 1997). https://doi.org/10.1007/978-1-4612-0653-8

T.E. Baker, Lanczos recursion on a quantum computer for the Green’s function and ground state. Phys. Rev. A 103, 032404 (2021). https://doi.org/10.1103/PhysRevA.103.032404

T.E. Baker, DMRjulia, https://github.com/bakerte/DMRJtensor.jl

Acknowledgements

This research was enabled in part by support provided by Calcul Québec (www.calculquebec.ca) and the Digital Research Alliance of Canada (www.alliancecan.ca). This project was undertaken on the Viking Cluster, a high-performance compute facility provided by the University of York. We are grateful for computational support from the University of York High Performance Computing service, Viking, and the Research Computing team. This work has been supported in part by the Natural Sciences and Engineering Research Council of Canada (NSERC) under grants RGPIN-2015-05598 and RGPIN-2020-05060. T.E.B. acknowledges funding from a postdoctoral fellowship from Institut quantique and the Institut Transdisciplinaire d’Information Quantique (INTRIQ). This research was undertaken thanks in part to funding from the Canada First Research Excellence Fund (CFREF). T.E.B. is grateful to the US-UK Fulbright Commission for financial support under the Fulbright U.S. Scholarship programme as hosted by the University of York. This research was undertaken in part thanks to funding from the Bureau of Educational and Cultural Affairs of the United States Department of State. This research was undertaken, in part, thanks to funding from the Canada Research Chairs Program. The Chair position in the area of Quantum Computing for Modelling of Molecules and Materials is hosted by the Departments of Physics & Astronomy and of Chemistry at the University of Victoria. T.E.B. is grateful for support from the University of Victoria’s start-up grant from the Faculty of Science.

Author information

Contributions

A.F. conceived the general strategy using block Lanczos and made the first implementation of the two-site algorithm. T.E.B. implemented all other elements and wrote the paper. D.S. provided support, introduced the other authors to the concepts of the block Lanczos algorithm, and provided reference data to check the algorithm.

Appendices

Appendix A: Review of the Block Lanczos method

The traditional Lanczos algorithm is a recursion relation in which each new state is obtained by applying H to the current state and subtracting a linear combination of the current and previous states, weighted by the scalar coefficients \(\alpha \) and \(\beta \). The recursion relation is given in Appendix B, Eq. B2. The advantage of this method over the power method is that fewer applications of H are required to obtain the ground-state wavefunction.

Block Lanczos is an extension of the Lanczos algorithm that generates the Krylov subspace of a set of states instead of a single one [24]. In the context of DMRG, it is perfectly suited to updating the orthogonality center of a bundled MPS.

The recurrence relation of block Lanczos is

where the \(\Psi _n\) are rectangular \(K\times g\) matrices composed of mutually orthonormal columns. The column length K is the size of the Hilbert space of H, and the number of columns g is the block dimension, a parameter of the algorithm. The \(\textbf{A}_n\) and \(\textbf{B}_n\) are \(g\times g\) matrices.
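For orientation, one standard way to write the block recurrence and its defining relations, consistent with the definitions in this appendix, is given here. This is our reconstruction in a common convention; the placement of \(\textbf{B}_n\) versus \(\textbf{B}_n^\dagger \) may differ from the authors' Eqs. A1–A4.

$$\begin{aligned} \Psi _{n+1}\textbf{B}_{n+1}&=H\Psi _n-\Psi _n\textbf{A}_n-\Psi _{n-1}\textbf{B}_n^\dagger ,\\ \Psi _m^\dagger \Psi _n&=\delta _{mn}\,\mathbb {I}_{g\times g},\\ \textbf{A}_n&=\Psi _n^\dagger H\Psi _n,\qquad \textbf{B}_{n+1}=\Psi _{n+1}^\dagger H\Psi _n . \end{aligned}$$

Multiplying the first line from the left by \(\Psi _n^\dagger \), \(\Psi _{n+1}^\dagger \), and \(\Psi _{n-1}^\dagger \) and using the orthonormality relation reproduces, respectively, the expression for \(\textbf{A}_n\), the expression for \(\textbf{B}_{n+1}\), and \(\textbf{B}_n^\dagger =\Psi _{n-1}^\dagger H\Psi _n\), which is the Hermitian conjugate of \(\textbf{B}_n\) when H is Hermitian.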

The matrices in Eq. A1 are defined by the following equations:

where \(\mathbb {I}_{g\times g}\) is the \(g\times g\) identity matrix and \(\delta _{mn}\) is the Kronecker delta. The recurrence relation Eq. A1 has the following initial conditions: \(\Psi _{-1} = 0\) and \(\Psi _0\) is an arbitrary matrix satisfying Eq. A2. The columns of \(\Psi _0\) are the states for which the block Lanczos algorithm generates the Krylov subspace. Note that by choosing \(g=1\), we recover the usual Lanczos algorithm; in that case, the matrices \(\textbf{A}_n\) and \(\textbf{B}_n\) reduce to the \(\alpha _n\) and \(\beta _n\) coefficients. The \(\Psi _n\) matrices can also be related to the notation for the scalar Lanczos case (see Eq. B2) as

$$\begin{aligned} \Psi _n=\left( |\psi _1^{(n)}\rangle \;|\psi _2^{(n)}\rangle \;\cdots \;|\psi _g^{(n)}\rangle \right) \end{aligned}$$

where each \(|\psi _i^{(n)}\rangle \) is a vector of size K placed as a column of the \(\Psi _n\) matrix.

In practice, \(\textbf{A}_n\) can be computed from its definition, Eq. A3, but \(\Psi _n\) and \(\textbf{B}_n\) cannot be computed from their respective definitions. Instead, one proceeds as follows. Given the quantities resulting from the previous iteration, we compute

To obtain \(\Psi _{n+1}\), we must orthonormalize the columns of \(\widetilde{\Psi }_{n+1}\); the (non-unitary) matrix transformation that accomplishes this is \(\textbf{B}_{n+1}^{-1}\). We proceed by performing a matrix decomposition such as the SVD or the QR decomposition. The choice of decomposition is a choice of gauge, with no fundamental consequences. We choose to use the SVD as follows:

where, with this choice of gauge, \(\textbf{B}_n^\dagger = \textbf{B}_n\). To obtain \(\Psi _{n+1}\) and \(\textbf{B}_{n+1}\), a closure relation (a resolution of the identity) was inserted into the decomposition of \(\widetilde{\Psi }_{n+1}\). The form chosen here is the polar decomposition [41]; however, any other unitary matrix can be inserted into the SVD in the same way, giving another form without changing the overall properties of the system. The QR decomposition could also be used; it gives a different form for the matrices, but in our implementation the results presented in the following do not change.
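A minimal NumPy sketch of this polar-gauge step follows. The function name `polar_orthonormalize` and the random test block are our own illustrative choices, not part of DMRjulia. The SVD \(\widetilde{\Psi }=U\Sigma V^\dagger \) is regrouped as \((UV^\dagger )(V\Sigma V^\dagger )\), which yields orthonormal columns and a Hermitian \(\textbf{B}\); a QR decomposition is shown for comparison as a different gauge spanning the same subspace.

```python
import numpy as np

def polar_orthonormalize(psi_tilde):
    """Return (psi, b) with psi_tilde = psi @ b, where psi has orthonormal
    columns and b is Hermitian (SVD-based polar decomposition)."""
    u, s, vh = np.linalg.svd(psi_tilde, full_matrices=False)
    psi = u @ vh                          # K x g, orthonormal columns
    b = vh.conj().T @ np.diag(s) @ vh     # g x g, Hermitian, positive semidefinite
    return psi, b

rng = np.random.default_rng(0)
K, g = 12, 3
psi_tilde = rng.normal(size=(K, g)) + 1j * rng.normal(size=(K, g))

psi, b = polar_orthonormalize(psi_tilde)
print(np.allclose(psi.conj().T @ psi, np.eye(g)))   # orthonormal columns
print(np.allclose(b, b.conj().T))                   # B is Hermitian in this gauge
print(np.allclose(psi @ b, psi_tilde))              # psi_tilde = psi B

# QR is a different gauge: R is upper triangular rather than Hermitian,
# but Q spans the same column space as psi.
q, r = np.linalg.qr(psi_tilde)
print(np.allclose(q @ (q.conj().T @ psi), psi))
```

Because the polar form never divides by the singular values, it still returns orthonormal columns when \(\widetilde{\Psi }_{n+1}\) is close to rank deficient, although \(\textbf{B}_{n+1}\) is then nearly singular.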

With these practical definitions in hand, one can readily verify that Eq. A6 is satisfied. Assuming that the \(\textbf{B}_n\) are non-singular, one can show by induction that Eqs. A2 and A4 are also satisfied.

Once we have performed p steps of the block Lanczos recursion, the Hamiltonian takes a block tridiagonal form in the orthonormal basis defined by the columns of the \(\Psi _n\) matrices:

where, as noted above, the bundled states in Eq. A10 can be arranged as a matrix of size \(K\times (pg)\).

This block tridiagonal matrix can be diagonalized, and its lowest eigenvalues are reasonable approximations to the lowest eigenvalues of H. Degeneracies of degree g or less are resolved, because the Krylov space generated from g starting states can contain up to g orthogonal vectors of a degenerate eigenspace. In the context of DMRG, one step of this algorithm allows us to update the g candidate eigenstates contained in the orthogonality center of the bundled MPS.
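The whole procedure can be sketched in NumPy on a small dense Hermitian matrix. This is our own test problem, not a DMRG effective Hamiltonian, and the helper names are hypothetical. The sketch uses the polar gauge described above for \(\textbf{B}_{n+1}\), assembles the block tridiagonal matrix from the \(\textbf{A}_n\) and \(\textbf{B}_{n+1}\), and adds full reorthogonalization as a numerical safeguard that is not part of the bare recursion. With \(g=2\), both copies of a two-fold degenerate lowest eigenvalue appear among the Ritz values.

```python
import numpy as np

def polar_orthonormalize(w):
    """w = psi @ b with orthonormal psi and Hermitian b (SVD-based polar form)."""
    u, s, vh = np.linalg.svd(w, full_matrices=False)
    return u @ vh, vh.conj().T @ np.diag(s) @ vh

def block_lanczos(h, psi0, p):
    """p steps of block Lanczos from the K x g block psi0 (orthonormal columns).
    Returns the (p*g) x (p*g) block tridiagonal matrix T = Q^dagger H Q."""
    g = psi0.shape[1]
    blocks, a_list, b_list = [psi0], [], []
    psi_prev = np.zeros_like(psi0)                # Psi_{-1} = 0
    b_prev = np.zeros((g, g), dtype=psi0.dtype)
    psi = psi0
    for n in range(p):
        w = h @ psi
        a = psi.conj().T @ w                      # A_n = Psi_n^dagger H Psi_n
        a_list.append(a)
        if n == p - 1:
            break
        w = w - psi @ a - psi_prev @ b_prev.conj().T
        # Full reorthogonalization against all earlier blocks: a numerical
        # safeguard added here, not part of the bare recursion.
        q = np.hstack(blocks)
        w = w - q @ (q.conj().T @ w)
        psi_next, b_next = polar_orthonormalize(w)  # w = Psi_{n+1} B_{n+1}
        blocks.append(psi_next)
        b_list.append(b_next)
        psi_prev, b_prev, psi = psi, b_next, psi_next
    t = np.zeros((p * g, p * g), dtype=np.result_type(h, psi0))
    for n, a in enumerate(a_list):
        t[n*g:(n+1)*g, n*g:(n+1)*g] = a
    for n, b in enumerate(b_list):                # b = B_{n+1}
        t[(n+1)*g:(n+2)*g, n*g:(n+1)*g] = b
        t[n*g:(n+1)*g, (n+1)*g:(n+2)*g] = b.conj().T
    return t

rng = np.random.default_rng(1)
K, g, p = 40, 2, 8
# Test matrix: two-fold degenerate ground state at -5, rest of spectrum in [0, 1].
evals = np.concatenate(([-5.0, -5.0], rng.uniform(0.0, 1.0, K - 2)))
u, _ = np.linalg.qr(rng.normal(size=(K, K)))
h = u @ np.diag(evals) @ u.T
psi0, _ = np.linalg.qr(rng.normal(size=(K, g)))

t = block_lanczos(h, psi0, p)
ritz = np.linalg.eigvalsh(t)
print(ritz[:g])   # both copies of the degenerate eigenvalue should be close to -5
```

With \(g=1\) the same starting data would give only one Ritz value near \(-5\) in exact arithmetic, since a single starting vector has a single projection onto the degenerate eigenspace.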

Appendix B: Review of matrix product states and the density matrix renormalization group

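The relationship between the MPS and the full wavefunction of a quantum system can be seen as a series of tensor decompositions of the full wavefunction [28]. If an N-site wavefunction is recast as a tensor, \(\psi _{\sigma _1\sigma _2\ldots \sigma _N}\), with physical degrees of freedom \(\sigma _i\), then a series of QR decompositions gives the state [20, 21]

$$\begin{aligned} \psi _{\sigma _1\sigma _2\ldots \sigma _N}=\sum _{a_1,\ldots ,a_{N-1}}A^{\sigma _1}_{a_1}A^{\sigma _2}_{a_1a_2}\cdots A^{\sigma _{N-1}}_{a_{N-2}a_{N-1}}D^{\sigma _N}_{a_{N-1}} \end{aligned}$$(B1)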

where a series of reshaping operations group and separate the indices so that the QR decomposition for matrices can be used. Raising and lowering an index has no significance in this notation, or in general for a tensor network [20, 21]. An extra index a, introduced by each tensor decomposition, corresponds to the remaining states in the system [20, 21]. The final tensor is denoted D to signify the orthogonality center, which is the only tensor in the network that does not contract to the identity and which contains the square roots of the coefficients of the density matrix for whichever partition of the system is selected [28]. The tensors A are left-normalized: when contracted with their dual (conjugate) tensors, they give the identity. The gauge of an MPS can be changed by a series of SVDs (or QR or LQ decompositions [28]), which transform some of the tensors into right-normalized tensors, B. No matter how the MPS is gauged, a single orthogonality center is present.

The approach taken here for handling many excitations is to incorporate them through an additional index on the orthogonality center.
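To make the decomposition and the extra index concrete, the following sketch builds such a representation by exact sequential QR decompositions of g orthonormal dense states, carrying the excitation index k on the final (center) tensor. The function names, index ordering (left link, physical, right link), and the absence of truncation are our assumptions; this illustrates the data structure only and is not the variational multi-targeted DMRG of this paper. With \(g=1\) it reduces to the single-state decomposition of Eq. B1.

```python
import numpy as np

def bundled_mps(states, d, n_sites):
    """Split g states (columns of a d**n_sites x g matrix) into left-normalized
    tensors A shared by all states and one center D[a, sigma_N, k], whose extra
    index k labels the state. Exact decomposition, no truncation."""
    tensors = []
    rest = states.reshape(1, -1)  # flat index order: (sigma_1, ..., sigma_N, k)
    for _ in range(n_sites - 1):
        a_left = rest.shape[0]
        # Group (a_left, sigma_i) into the row index, then QR-decompose.
        q, r = np.linalg.qr(rest.reshape(a_left * d, -1))
        tensors.append(q.reshape(a_left, d, -1))  # left-normalized A
        rest = r
    center = rest.reshape(rest.shape[0], d, states.shape[1])
    return tensors, center

def is_left_normalized(a):
    """Check that summing conj(A) A over (a_left, sigma) gives the identity."""
    m = a.reshape(-1, a.shape[2])
    return np.allclose(m.conj().T @ m, np.eye(m.shape[1]))

rng = np.random.default_rng(3)
d, n_sites, g = 2, 5, 3
states, _ = np.linalg.qr(rng.normal(size=(d**n_sites, g)))  # g orthonormal states
tensors, center = bundled_mps(states, d, n_sites)
print(all(is_left_normalized(a) for a in tensors))

# Contract the shared A tensors once, then attach each slice of the center.
left = tensors[0]
for t in tensors[1:]:
    left = np.tensordot(left, t, axes=([left.ndim - 1], [0]))
left = left.reshape(-1, left.shape[-1])           # (d**(n_sites - 1), a)
recovered = np.stack([(left @ center[:, :, k]).reshape(-1) for k in range(g)],
                     axis=1)
print(np.allclose(recovered, states))             # every excitation is recovered

# The orthonormality of the bundle now lives entirely in the center tensor.
dmat = center.reshape(-1, g)
print(np.allclose(dmat.conj().T @ dmat, np.eye(g)))
```

Because every A is an isometry, the overlaps between the g states are carried entirely by the center tensor, which is what allows a block eigensolver acting only on that tensor to keep the bundle orthonormal.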

1.1 Two-site density matrix renormalization group algorithm

The DMRG algorithm operates on an MPS and consists of the following four basic steps, which are repeated until convergence [28].

1. Contract the tensor network (MPS and MPO) down to a few-site system.

2. Perform a Lanczos algorithm to update the tensors,

$$\begin{aligned} |\psi _{n+1}\rangle =H|\psi _n\rangle -\alpha _n|\psi _n\rangle -\beta _n^2|\psi _{n-1}\rangle \end{aligned}$$(B2)with \(\alpha _n=\langle \psi _n|H|\psi _n\rangle /\langle \psi _n|\psi _n\rangle \) and \(\beta _n^2=\langle \psi _n|\psi _n\rangle /\langle \psi _{n-1}|\psi _{n-1}\rangle \), where the \(|\psi _n\rangle \) are left unnormalized. The coefficients \(\alpha _n\) (on the diagonal) and \(\beta _n\) (on the off-diagonals) form a tridiagonal matrix which is the Hamiltonian in the normalized Lanczos basis [26, 42].

3. Decompose the updated tensor, for example with the SVD, to break it apart into separate tensors.

4. Move the center of orthogonality, \(\hat{D}\), onto the next tensor.
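The Lanczos step of item 2 can be illustrated on a small problem. The sketch uses a hypothetical helper `lanczos_tridiagonal` (not the DMRjulia implementation) in the normalized convention, in which each new vector is divided by its norm \(\beta _{n+1}\); up to a rescaling of the Lanczos vectors, this yields the same tridiagonal matrix as the unnormalized three-term recursion. As a test case we assume an open spin-1/2 Heisenberg chain of 8 sites, built densely with Kronecker products, and compare the lowest eigenvalue of the tridiagonal matrix after 60 steps with exact diagonalization. No reorthogonalization is performed; loss of orthogonality can create spurious copies of converged eigenvalues but does not spoil the lowest one.

```python
import numpy as np

def lanczos_tridiagonal(h, v0, m):
    """Run m steps of the three-term Lanczos recursion in the normalized
    convention and return the diagonal (alpha) and off-diagonal (beta) entries."""
    alphas, betas = [], []
    v_prev = np.zeros_like(v0)
    v = v0 / np.linalg.norm(v0)
    beta = 0.0
    for n in range(m):
        w = h @ v
        alpha = np.vdot(v, w).real          # alpha_n = <psi_n|H|psi_n>
        alphas.append(alpha)
        if n == m - 1:
            break
        w = w - alpha * v - beta * v_prev   # unnormalized next Lanczos vector
        beta = np.linalg.norm(w)            # beta_{n+1}: its norm
        betas.append(beta)
        v_prev, v = v, w / beta
    return np.array(alphas), np.array(betas)

def heisenberg_chain(n):
    """Dense open-chain spin-1/2 Heisenberg Hamiltonian, sum_i S_i . S_{i+1}."""
    sz = np.diag([0.5, -0.5])
    sp = np.array([[0.0, 1.0], [0.0, 0.0]])  # S^+
    sm = sp.T                                 # S^-

    def bond(op1, op2, i):
        mats = [np.eye(2)] * n
        mats[i], mats[i + 1] = op1, op2
        out = mats[0]
        for mat in mats[1:]:
            out = np.kron(out, mat)
        return out

    return sum(bond(sz, sz, i) + 0.5 * (bond(sp, sm, i) + bond(sm, sp, i))
               for i in range(n - 1))

h = heisenberg_chain(8)
rng = np.random.default_rng(6)
alphas, betas = lanczos_tridiagonal(h, rng.normal(size=h.shape[0]), 60)
t = np.diag(alphas) + np.diag(betas, 1) + np.diag(betas, -1)
e_lanczos = np.linalg.eigvalsh(t)[0]
e_exact = np.linalg.eigvalsh(h)[0]
print(e_lanczos, e_exact)
```

In DMRG the dense matrix `h` is replaced by the action of the environment tensors and MPO on the few-site tensor, so only matrix-vector products are ever needed.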

Having found the renormalized representation of the problem for the next subset of sites, by updating the environment tensors for the reduced-site representation, the steps can be repeated. Once the algorithm has swept the full lattice, one iteration is complete, and the algorithm can be run until the ground state converges or some other criterion is met. More details (and diagrams) are found in Ref. [

and for the right sweep the operation is

and the MPO can be contracted into the environment (\(L^E\) for the left sweep, \(R^E\) for the right). This forms the term \(H-E\) in the appropriate basis.

The next step is to join the link indices of the MPS and MPO together and then to join the resulting index to the original tensor, according to the following diagrams

where gray dots mark the indices that are joined together. The dotted indices are combined into a single index, similar to a reshape, except that the dimensions are added together rather than multiplied, so the number of elements is not preserved as it is in a reshape [20, 21].
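A minimal NumPy illustration of this direct-sum join follows, under our own assumptions about index ordering (left link, physical, right link). The expansion term `p` is assumed to already have its MPO and MPS links combined into one index; it is concatenated onto the right link of the center tensor, and the next tensor is zero-padded on its left link so that the represented state is unchanged, as in the subspace expansion of Ref. [32]. All names and dimensions are illustrative.

```python
import numpy as np

def join_right(a, p):
    """Direct-sum join along the last index: (l, d, r1), (l, d, r2) -> (l, d, r1 + r2)."""
    return np.concatenate([a, p], axis=2)

def pad_left(b, extra):
    """Zero-pad the first index of the next tensor: (r1, d, r) -> (r1 + extra, d, r)."""
    zeros = np.zeros((extra,) + b.shape[1:], dtype=b.dtype)
    return np.concatenate([b, zeros], axis=0)

rng = np.random.default_rng(4)
l, d, r1, r2, r = 3, 2, 4, 6, 5
a = rng.normal(size=(l, d, r1))   # center tensor being expanded
p = rng.normal(size=(l, d, r2))   # expansion term (links already combined)
b = rng.normal(size=(r1, d, r))   # next MPS tensor

a_big, b_big = join_right(a, p), pad_left(b, r2)
print(a_big.shape, b_big.shape)   # dimensions add: r1 + r2 = 10

two_site = np.tensordot(a, b, axes=([2], [0]))
two_site_big = np.tensordot(a_big, b_big, axes=([2], [0]))
print(np.allclose(two_site, two_site_big))  # the represented state is unchanged
```

The zero padding is what makes the expansion harmless before truncation: the new directions enter the basis without changing the state, and the subsequent SVD decides which of them to keep.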

The final step is to move the orthogonality center according to the following diagrams

where the two tensors on the right are contracted onto the next MPS tensor for the right sweep. Similarly, the two tensors on the left of the decomposition are contracted onto the tensor on the left for the left sweep.
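The right-sweep move can be sketched with NumPy as follows. The function name, the index ordering (left link, physical, right link), and the optional truncation argument are our assumptions, and the sketch covers a plain MPS tensor only; for a bundled MPS the excitation index would also have to be carried along, which is not shown. The SVD gives three tensors: U becomes the new left-normalized tensor, and the two remaining tensors, S and \(V^\dagger \), are contracted onto the next MPS tensor.

```python
import numpy as np

def move_center_right(center, next_tensor, max_dim=None):
    """Move the orthogonality center one site to the right.

    center:      (a_left, d, a_right) tensor holding the orthogonality center.
    next_tensor: (a_right, d, a_next) MPS tensor to its right.
    The SVD center = U S V^dagger keeps U as the new left-normalized tensor and
    contracts the two remaining tensors, S and V^dagger, onto next_tensor.
    """
    l, d, r = center.shape
    u, s, vh = np.linalg.svd(center.reshape(l * d, r), full_matrices=False)
    if max_dim is not None:                 # optional bond-dimension truncation
        u, s, vh = u[:, :max_dim], s[:max_dim], vh[:max_dim, :]
    new_left = u.reshape(l, d, -1)
    new_center = np.tensordot(np.diag(s) @ vh, next_tensor, axes=([1], [0]))
    return new_left, new_center

rng = np.random.default_rng(5)
center = rng.normal(size=(3, 2, 4))
nxt = rng.normal(size=(4, 2, 3))

before = np.tensordot(center, nxt, axes=([2], [0]))
a, new_center = move_center_right(center, nxt)
after = np.tensordot(a, new_center, axes=([2], [0]))

m = a.reshape(-1, a.shape[2])
print(np.allclose(before, after))                # state unchanged (no truncation)
print(np.allclose(m.T @ m, np.eye(m.shape[1])))  # new tensor is left-normalized
```

The left sweep is the mirror image: decompose with the right link and physical index grouped, keep \(V^\dagger \) as a right-normalized tensor, and contract U and S onto the tensor on the left.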

In the tensor form, the above equations are

and

The algorithm as presented so far applies the expansion term at full strength. In practice, it is highly useful to reduce the effective magnitude of the expansion term so that it vanishes once the MPS is mostly converged, which aids convergence. The algorithm presented in Ref. [32] uses two quantities to set the value of the noise parameter \(\gamma \): it keeps \(\Delta E_T\approx -0.3\Delta E_O\), where \(\Delta E_O\) is the energy change produced by the optimization step and \(\Delta E_T\) is the energy change caused by truncating the tensor with the SVD (both can be estimated from the energy values and the truncation error). To control the size of \(\gamma \) further, a small decay factor can be multiplied onto its value at each step.
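A hedged sketch of such an adaptive rule follows, based on our reading of Ref. [32]. The function `update_gamma` is hypothetical, and its multiplicative factors `down`, `up`, and `decay` are illustrative assumptions rather than values from the text or from DMRjulia. Here `dE_opt` is the energy change from the local optimization (negative when it helps) and `dE_trunc` is the energy change caused by the SVD truncation (usually positive). When truncation undoes more than the target fraction of the gain, the expansion is weakened; otherwise it is strengthened. The extra decay drives \(\gamma \) toward zero as the MPS converges.

```python
def update_gamma(gamma, dE_opt, dE_trunc, target=-0.3,
                 down=0.8, up=1.1, decay=0.99):
    """Hypothetical adaptive update of the expansion (noise) strength gamma.

    dE_opt:   energy change from the local optimization (negative when it helps).
    dE_trunc: energy change caused by the SVD truncation (usually positive).
    The goal is dE_trunc ~ target * dE_opt. The factors down, up and decay
    are illustrative assumptions, not values taken from the text.
    """
    if dE_trunc >= target * dE_opt:
        gamma *= down   # truncation undoes too much of the gain: weaken expansion
    else:
        gamma *= up     # truncation is cheap: a stronger expansion is affordable
    return gamma * decay  # extra decay drives gamma toward zero near convergence

gamma = 1e-3
print(update_gamma(gamma, dE_opt=-1e-4, dE_trunc=1e-6))  # grows
print(update_gamma(gamma, dE_opt=-1e-4, dE_trunc=1e-4))  # shrinks
```

The `>=` comparison makes the fully converged case (both energy changes zero) shrink \(\gamma \), consistent with the goal of switching the expansion off once the MPS has converged.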

Because of the noise term, the 3S algorithm does not always converge the energy to the ground state of the system, but it generally gets very close. Further, the wavefunction acquires some bias depending on the sweep direction, just as in the original single-site methods. The implementation here is that of the DMRjulia library [28].

The method then scales as \(O(N_sdm^3)\), a reduction by a factor of d relative to the two-site algorithm, because only one physical index is present.