Abstract

Tokamaks are the most promising approach to realizing nuclear fusion reactors. A disruption in a tokamak is a violent event that terminates the confined plasma and can cause unacceptable damage to the device. Machine learning models have been widely used to predict impending disruptions. However, future reactors, with much higher stored energy, cannot provide enough unmitigated disruption data at high performance to train a predictor before damaging themselves. Here we apply a deep parameter-based transfer learning method to disruption prediction. We train a model on the J-TEXT tokamak and transfer it, with only 20 discharges, to EAST, which differs greatly from J-TEXT in size, operation regime, and configuration. Results demonstrate that the transferred model reaches a performance similar to that of a model trained directly on about 1900 EAST discharges. Our results suggest that the proposed method can tackle the challenge of predicting disruptions for future tokamaks such as ITER using knowledge learned from existing tokamaks.


Introduction

Nuclear fusion energy could be the ultimate energy source for humankind. The tokamak is the leading candidate for a practical nuclear fusion reactor. It uses magnetic fields to confine plasma at extremely high temperatures (~100 million K). A disruption is a catastrophic loss of plasma confinement, which releases a large amount of energy and can cause severe damage to the tokamak1,2,3,4. Disruptions are one of the biggest hurdles in realizing magnetically confined fusion. Disruption mitigation systems (DMSs), such as massive gas injection (MGI) and shattered pellet injection (SPI), can effectively mitigate the damage caused by disruptions in current devices5,6. For large tokamaks such as ITER, unmitigated disruptions during high-performance discharges are unacceptable. Predicting potential disruptions is critical to triggering the DMS effectively; thus, it is important to predict disruptions accurately and with sufficient warning time7. Currently, there are two main approaches to disruption prediction: rule-based and data-driven methods. Rule-based methods build on the current understanding of disruptions, focus on identifying event chains and disruption paths, and provide interpretability8,9,10,11. Feature engineering may draw on even broader domain knowledge that is not specific to disruption prediction tasks and does not require knowledge of disruptions. Data-driven methods, on the other hand, learn from the vast amount of data accumulated over the years and have achieved excellent performance, but lack interpretability12,13,14,15,16,17,18,19,20. Each approach benefits from the other: rule-based methods can accelerate calculations with surrogate models, while data-driven methods benefit from domain knowledge when choosing input signals and designing the model. Currently, both approaches need sufficient data from the target tokamak to train the predictor before it is applied.
Most other methods published in the literature focus on predicting disruptions for a single device and lack the ability to generalize. Since unmitigated disruptions in high-performance discharges would severely damage future fusion reactors, it is challenging to accumulate enough disruptive data, especially in the high-performance regime, to train a usable disruption predictor.

Several attempts have been made to build models that work on a new machine using data from existing machines. Previous cross-machine studies have shown that using predictors trained on one tokamak to directly predict disruptions in another leads to poor performance15,19,21. Domain knowledge is necessary to improve performance. The Fusion Recurrent Neural Network (FRNN) was trained with mixed discharges from DIII-D and a ‘glimpse’ of discharges from JET (5 disruptive and 16 non-disruptive discharges), and is able to predict disruptive discharges in JET with high accuracy15. The Hybrid Deep-Learning (HDL) architecture was trained with 20 disruptive discharges and thousands of discharges from EAST, combined with more than a thousand discharges from DIII-D and C-Mod, and achieved improved performance in predicting disruptions in EAST19. An adaptive disruption predictor was built on the analysis of large databases of AUG and JET discharges and was transferred from AUG to JET with a success rate of 98.14% for mitigation and 94.17% for prevention22.

Mixing data from the target and existing machines is one form of transfer learning, known as instance-based transfer learning. However, the information carried by the limited data from the target machine can be swamped by data from the existing machines. Moreover, these studies were carried out on tokamaks with similar configurations and sizes, whereas the gap between future tokamak reactors and any tokamak existing today is very large23,24. Machine size, operation regime, configuration, feature distributions, disruption causes, characteristic paths, and other factors all lead to different plasma performance and different disruption processes. Thus, in this work we selected the J-TEXT and EAST tokamaks, which differ greatly in configuration, operation regime, time scale, feature distributions, and disruption causes, to demonstrate the proposed transfer learning method. J-TEXT is a tokamak with a full-carbon wall, where the main types of disruptions are those induced by density limits and locked modes25,26,27,28,29. In contrast, EAST is a tokamak with a metal wall, where disruptions caused by density limits and locked modes are also observed, but the most frequent causes of disruptions are temperature hollowing, edge cooling, and vertical displacement events (VDEs)51. Discharges from J-TEXT are used to validate the effectiveness of the deep fusion feature extractor, as well as to provide a model pre-trained on J-TEXT for further transfer to predict disruptions on EAST. To keep the inputs of the disruption predictor the same, 47 diagnostic channels are selected from each of J-TEXT and EAST, as shown in Table 4. During selection, the geometry and viewing lines of the diagnostics were kept as consistent as possible across discharges and between the two tokamaks.
The diagnostics cover the typical frequency of 2/1 tearing modes, the period of sawtooth oscillations, radiation asymmetry, and other low-level spatial and temporal information. Because the diagnostics span multiple physical and temporal scales, different sampling rates are selected for different diagnostics.
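
The idea of per-diagnostic sampling rates can be illustrated with a short NumPy sketch that resamples each raw signal onto its own uniform time grid by linear interpolation. The channel names and rates below are hypothetical placeholders, not the values used in this work, and a real pipeline would typically low-pass filter a signal before downsampling it to avoid aliasing.

```python
import numpy as np


def resample_channels(t_raw, signals, rates_hz, t_start, t_end):
    """Resample each diagnostic onto its own uniform time grid.

    t_raw, signals: dicts mapping channel name -> 1D arrays of raw
    timestamps (s, increasing) and raw values.
    rates_hz: dict mapping channel name -> target sampling rate (Hz).
    Returns a dict mapping channel name -> (t_grid, resampled values).
    """
    out = {}
    for name, rate in rates_hz.items():
        n_points = int(round((t_end - t_start) * rate))
        t_grid = t_start + np.arange(n_points) / rate
        # Linear interpolation only; no anti-aliasing filter is applied here.
        out[name] = (t_grid, np.interp(t_grid, t_raw[name], signals[name]))
    return out


# Hypothetical channels: one fast and one slow signal, both recorded at 10 kHz.
t = np.linspace(0.0, 1.0, 10001)
raw = {"fast_probe": np.sin(2 * np.pi * 50.0 * t), "slow_signal": 1.0 + 0.1 * t}
resampled = resample_channels(
    {"fast_probe": t, "slow_signal": t}, raw,
    rates_hz={"fast_probe": 1000.0, "slow_signal": 100.0},
    t_start=0.0, t_end=0.5,
)
```

Each channel keeps its own time base, so fast and slow diagnostics can later be sliced into windows of different lengths before being combined.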

From the 2017–2018 campaigns, 854 discharges (525 disruptive) are selected from J-TEXT. These discharges cover all the channels selected as inputs and include all types of disruptions in J-TEXT. Most of the disruptive discharges that were excluded had been induced intentionally, for example by MGI (Massive Gas Injection), and showed no sign of instability before the disruption. Additionally, some discharges were excluded because most of their input channels contained invalid data. In transfer learning, it is difficult for the model in the target domain to outperform the model in the source domain, so the pre-trained source model is expected to capture as much information as possible. In this case, the model pre-trained on J-TEXT discharges should acquire as much disruption-related knowledge as possible. The discharges selected from J-TEXT are therefore randomly shuffled and split into training, validation, and test sets. The training set contains 494 discharges (189 disruptive), the validation set contains 140 discharges (70 disruptive), and the test set contains 220 discharges (110 disruptive). Normally, to simulate real operational scenarios, a model should be trained on data from earlier campaigns and tested on data from later ones, since the experimental environment varies between campaigns and can degrade the model’s performance. A model that performs well in one campaign may not perform as well in a new one; this is known as the “aging problem”. However, when training the source model on J-TEXT, we care more about disruption-related knowledge, so we split the J-TEXT data randomly. For EAST, a total of 1896 discharges, including 355 disruptive discharges, are selected as the training set. The validation set contains 60 disruptive and 60 non-disruptive discharges, and the test set contains 180 disruptive and 180 non-disruptive discharges.
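
A random split of this kind is usually done at the level of whole discharges, so that time slices from one discharge never appear in both training and test sets. The sketch below is one possible way to do it, assuming the validation and test sets are balanced per class (as the 70/140 and 110/220 counts suggest); the stratification, the shot IDs, and the seed are illustrative assumptions, not the authors' code.

```python
import numpy as np


def split_shots(disruptive, non_disruptive, n_val_per_class, n_test_per_class, seed=0):
    """Randomly split discharge (shot) IDs into train/val/test sets.

    Splitting is done per discharge, so every time slice of a discharge ends
    up in the same set. Each class is shuffled separately so that the
    validation and test sets are balanced; remaining shots go to training.
    """
    rng = np.random.default_rng(seed)
    splits = {"train": [], "val": [], "test": []}
    n_val_test = n_val_per_class + n_test_per_class
    for shots in (disruptive, non_disruptive):
        shuffled = rng.permutation(np.asarray(shots)).tolist()
        if n_val_test > len(shuffled):
            raise ValueError("not enough discharges in one class")
        splits["val"] += shuffled[:n_val_per_class]
        splits["test"] += shuffled[n_val_per_class:n_val_test]
        splits["train"] += shuffled[n_val_test:]
    return splits


# Hypothetical shot IDs: 100 disruptive and 150 non-disruptive discharges.
s = split_shots(list(range(1000, 1100)), list(range(2000, 2150)), 10, 20)
```
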
Note that, since the model outputs the probability that a sample is disruptive at a time resolution of 1 ms, the imbalance between disruptive and non-disruptive discharges does not affect model learning. The samples, however, are imbalanced, since samples labeled as disruptive make up only a small percentage; how we handle this imbalance is discussed in the “Weight calculation” section. Both the training and validation sets are selected randomly from earlier campaigns, while the test set is selected randomly from later campaigns, simulating real operating scenarios. For the cross-tokamak transfer use case, 10 non-disruptive and 10 disruptive EAST discharges are randomly selected from earlier campaigns as the training set, while the test set is kept the same as above, in order to simulate realistic operational scenarios chronologically. Because we focus on the flattop phase, the dataset contains only samples from this phase. Furthermore, since non-disruptive samples vastly outnumber disruptive samples, from disruptive discharges we used only the samples labeled disruptive and discarded their non-disruptive samples. This chronological split results in slightly worse performance than randomly splitting the datasets from all available campaigns. The split of the datasets is shown in Table 4.
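
For the 20-discharge transfer case, the rule "training data from earlier campaigns, test data from later campaigns" can be sketched as below. This assumes, hypothetically, that shot numbers increase monotonically in time, so that a single cutoff shot number separates earlier from later campaigns; the cutoff, shot IDs, and seed are placeholders.

```python
import numpy as np


def chronological_few_shot(shots, is_disruptive, cutoff_shot, n_per_class, seed=0):
    """Draw a small balanced training set from early shots; keep later shots for testing.

    Assumes shot numbers increase with time, so shots below `cutoff_shot`
    belong to earlier campaigns.
    """
    shots = np.asarray(shots)
    is_disruptive = np.asarray(is_disruptive, dtype=bool)
    early = shots < cutoff_shot
    rng = np.random.default_rng(seed)
    train = np.concatenate([
        rng.choice(shots[early & is_disruptive], n_per_class, replace=False),
        rng.choice(shots[early & ~is_disruptive], n_per_class, replace=False),
    ])
    test_pool = shots[~early]  # the actual test set would be drawn from this pool
    return train, test_pool


# Hypothetical shots: every fourth shot is disruptive; 10 + 10 shots for training.
shots = np.arange(1000, 1100)
train, test_pool = chronological_few_shot(shots, shots % 4 == 0, 1060, 10)
```
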

Normalization

With the database established, normalization is performed to eliminate numerical differences between diagnostics and to map the inputs to an appropriate range that facilitates initialization of the neural network. According to J.X. Zhu et al.19, the performance of a deep neural network is only weakly dependent on the normalization parameters as long as all inputs are mapped to an appropriate range. Thus the normalization is performed independently for each tokamak. For the two EAST datasets, the normalization parameters are calculated separately from their respective training sets. The inputs are normalized with the z-score method, \(X_{\mathrm{norm}}=\frac{X-\mathrm{mean}(X)}{\mathrm{std}(X)}\). If an input follows a Gaussian distribution, its normalized values follow a standard normal distribution with zero mean and unit variance, so about 99.7% of them lie within (−3, 3). However, not all inputs follow a Gaussian distribution, and some contain extreme values that could distort the normalization. We therefore clipped any normalized values outside (−5, 5) to suppress outliers with extremely large values, so that all normalized inputs lie between −5 and 5. A limit of 5 was deemed appropriate for training: it is small enough not to cause numerical issues, yet large enough to distinguish outliers from normal values.
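
A minimal NumPy sketch of this normalization follows: statistics are computed per channel on a training set only and then applied, with clipping at ±5, to any data from the same tokamak. The guard against zero standard deviation for constant channels is our own addition and is not described in the text.

```python
import numpy as np


def fit_zscore(train_x):
    """Per-channel mean and std computed from the training set only.

    train_x: array of shape (n_samples, n_channels).
    """
    mean = train_x.mean(axis=0)
    std = train_x.std(axis=0)
    std = np.where(std > 0, std, 1.0)  # avoid division by zero for constant channels
    return mean, std


def apply_zscore(x, mean, std, clip=5.0):
    """z-score normalize, then clip to [-clip, clip] to suppress outliers."""
    return np.clip((x - mean) / std, -clip, clip)


train = np.array([[0.0, 10.0], [2.0, 10.0], [4.0, 10.0]])
mean, std = fit_zscore(train)
normed = apply_zscore(np.array([[2.0, 10.0], [100.0, 10.0]]), mean, std)
```

Here the value 100.0 would map to about 60 standard deviations and is clipped to 5, while the constant second channel maps to 0.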

Labeling

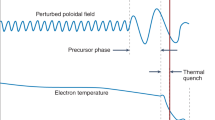

All discharges are split into consecutive temporal sequences. A tokamak-specific time threshold before the disruption, listed in Table 5, marks the precursor phase of a disruptive discharge. The “unstable” sequences of disruptive discharges, i.e., those within this threshold, are labeled “disruptive”, and sequences from non-disruptive discharges are labeled “non-disruptive”. To determine the threshold, we first obtained a candidate time span from prior discussions and consultations with tokamak operators, who provided valuable insight into the time span within which disruptions could be reliably predicted. We then conducted a systematic scan of constant thresholds within this span and selected the value that maximized the overall disruption prediction performance of our model.
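
Constant-threshold labeling, combined with the sample selection described for the datasets (only the “disruptive” slices of a disruptive discharge are kept), can be sketched as follows. Times are in ms to match the 1 ms resolution mentioned earlier; the function name is hypothetical, and the threshold is a placeholder for the tokamak-specific value in Table 5.

```python
import numpy as np


def label_discharge(t_ms, disruptive, t_disruption_ms=None, threshold_ms=None):
    """Select and label the time slices of one discharge.

    Non-disruptive discharge: all slices kept, labeled 0.
    Disruptive discharge: only slices within `threshold_ms` before the
    disruption are kept and labeled 1; earlier slices are discarded.
    Returns (keep_mask, labels_of_kept_slices).
    """
    t_ms = np.asarray(t_ms)
    if not disruptive:
        return np.ones(t_ms.shape, dtype=bool), np.zeros(t_ms.shape, dtype=int)
    keep = (t_ms >= t_disruption_ms - threshold_ms) & (t_ms < t_disruption_ms)
    return keep, np.ones(int(keep.sum()), dtype=int)


# Hypothetical 1 s discharge disrupting at 500 ms, with a 100 ms threshold.
keep, y = label_discharge(np.arange(1000), True, t_disruption_ms=500, threshold_ms=100)
```

A threshold scan then amounts to repeating labeling, training, and evaluation for each candidate threshold and keeping the best-performing one.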

However, previous research indicates that the time scale of the “disruptive” phase varies with the disruption path, so labeling samples with a variable, precursor-related time is more physically accurate than using a constant. In our study, we first trained the model using “real” labels based on precursor-related times, which made the model more confident in distinguishing disruptive from non-disruptive samples. However, the model’s performance on individual discharges decreased compared with a model trained on constant-labeled samples, as shown in Table 6. Although the precursor-related model still predicted all disruptive discharges, it raised more false alarms, which degraded its performance. These results indicate that the model trained with precursor-related labels is more sensitive to unstable events and has a higher false alarm rate. From the standpoint of disruption prediction alone, precursor-related labels are preferable. However, since the disruption predictor is designed to trigger the DMS effectively while reducing false alarms, constant-based labels are the better choice in our work. We therefore labeled the “disruptive” samples with a constant threshold to balance sensitivity against false alarm rate.

Weight calculation

Because only some of the sequences in disruptive discharges are used, and non-disruptive discharges far outnumber disruptive ones, the dataset is highly imbalanced. To address this, weights for both classes are calculated and passed to the neural network so that it pays more attention to the under-represented class, the disruptive sequences. The weights are calculated as \(W_{\mathrm{disruptive}}=\frac{N_{\mathrm{disruptive}}+N_{\mathrm{non\text{-}disruptive}}}{2N_{\mathrm{disruptive}}}\) and \(W_{\mathrm{non\text{-}disruptive}}=\frac{N_{\mathrm{disruptive}}+N_{\mathrm{non\text{-}disruptive}}}{2N_{\mathrm{non\text{-}disruptive}}}\). Scaling by \(\frac{N_{\mathrm{disruptive}}+N_{\mathrm{non\text{-}disruptive}}}{2}\) keeps the loss at a similar magnitude: the weighted number of samples, \(W_{\mathrm{disruptive}}N_{\mathrm{disruptive}}+W_{\mathrm{non\text{-}disruptive}}N_{\mathrm{non\text{-}disruptive}}\), equals the total number of samples.
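
The weight formulas and their effect on a class-weighted binary cross-entropy can be checked numerically with the sketch below. The sample counts are hypothetical, and the loss function is a plain NumPy illustration rather than the training code; in Keras, for example, such weights can be passed through the `class_weight` argument of `Model.fit`.

```python
import numpy as np


def class_weights(n_disruptive, n_non_disruptive):
    """Balanced class weights: W_c = (N_disruptive + N_non_disruptive) / (2 * N_c)."""
    total = n_disruptive + n_non_disruptive
    return total / (2 * n_disruptive), total / (2 * n_non_disruptive)


def weighted_bce(y_true, p_pred, w_disruptive, w_non_disruptive, eps=1e-7):
    """Mean binary cross-entropy with each sample scaled by its class weight."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)
    w = np.where(y == 1.0, w_disruptive, w_non_disruptive)
    return float(np.mean(-w * (y * np.log(p) + (1.0 - y) * np.log(1.0 - p))))


w_d, w_n = class_weights(10, 90)  # hypothetical counts: 10 disruptive, 90 non-disruptive
y = np.array([1] * 10 + [0] * 90)
loss = weighted_bce(y, np.full(100, 0.5), w_d, w_n)
```

With these counts, W_disruptive = 5 and W_non-disruptive = 100/180, so each class contributes half of the weighted sample count. For a constant prediction of 0.5 the weighted loss therefore equals ln 2, the same value as the unweighted loss.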

Overfitting handling

Overfitting occurs when a model is complex enough to fit the training data very well but performs poorly on new, unseen data. It is often caused by the model learning noise in the training data rather than the underlying patterns. To prevent overfitting of the deep learning model given the small number of samples from EAST, we employed several techniques. The first is batch normalization layers. By normalizing the inputs of each layer, batch normalization makes training more stable and less sensitive to small changes in the data, which reduces the impact of noise in the training data. In addition, we applied dropout layers. Dropout randomly drops out some neurons during training, which forces the network to learn more robust and generalizable features. L1 and L2 regularization were also applied. L1 regularization drives the weights of less important features to zero, effectively removing them from the model, while L2 regularization shrinks all weights toward zero without removing any feature entirely. Furthermore, we employed early stopping and a learning rate schedule. Early stopping halts training when the model’s performance on the validation set starts to degrade, while a learning rate schedule lowers the learning rate during training so that the model learns more slowly as it approaches convergence, allowing more precise adjustments to the weights and helping to avoid overfitting the training data.
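
Of the techniques listed above, early stopping is the easiest to show in isolation. The class below is a minimal, framework-agnostic sketch; the `patience` and `min_delta` values and the loss history are hypothetical, not the settings used in this work.

```python
class EarlyStopping:
    """Stop training once the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.wait = 0

    def update(self, epoch, val_loss):
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss, self.best_epoch, self.wait = val_loss, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience


stopper = EarlyStopping(patience=3)
history = [1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.6]  # hypothetical validation losses
stopped_at = None
for epoch, loss in enumerate(history):
    if stopper.update(epoch, loss):
        stopped_at = epoch
        break
```

Training stops at epoch 5 after three epochs without improvement, and the best epoch (2) is the one whose weights would be restored.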

The deep learning-based FFE design

Our deep learning model, the disruption predictor, consists of a feature extractor and a classifier, as shown in Fig. 1. The feature extractor consists of ParallelConv1D layers and LSTM layers. The ParallelConv1D layers are designed to extract spatial features and temporal features on relatively small time scales. Temporal features with different time scales are sliced with different sampling rates and timesteps. To avoid mixing information from different channels, a parallel 1D convolution structure is used: each channel is fed into its own 1D convolution layer, which produces an individual output. The extracted features are then stacked and concatenated with the other diagnostics that do not require feature extraction on a small time scale. The concatenated features form a feature frame. Several time-consecutive feature frames form a sequence, which is then fed into the LSTM layers to extract features on a larger time scale. We use ReLU as the activation function for these layers. The outputs of the LSTM layers are then fed into a classifier consisting of fully connected layers. All layers except the output layer use ReLU as the activation function. The last layer has two neurons with a sigmoid activation, which output the probabilities of each sequence being disruptive or not. These outputs are then passed through a softmax function to determine whether the slice is disruptive.
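
To make the data flow concrete, the following NumPy sketch shows a forward pass of a per-channel (parallel) 1D convolution and the assembly of feature frames into a sequence. It is not the trained network: the filters are random, global max pooling after ReLU is our assumption, and the LSTM and classifier that would consume the sequence are omitted.

```python
import numpy as np


def parallel_conv1d(windows, kernels):
    """Per-channel ("parallel") 1D convolution with no mixing across channels.

    windows: (n_channels, window_len) fast-sampled slices for one frame.
    kernels: (n_channels, n_filters, kernel_len); channel i only sees kernels[i].
    Each filter response passes through ReLU and is max-pooled over time.
    """
    feats = []
    for x, channel_kernels in zip(windows, kernels):
        for k in channel_kernels:
            response = np.correlate(x, k, mode="valid")  # CNN-style cross-correlation
            feats.append(np.maximum(response, 0.0).max())
    return np.asarray(feats)


def build_sequence(fast_windows, slow_values, kernels):
    """Concatenate conv features with slow diagnostics into feature frames, then
    stack consecutive frames into one sequence for the LSTM.

    fast_windows: (n_frames, n_channels, window_len); slow_values: (n_frames, n_slow).
    Returns an array of shape (n_frames, n_channels * n_filters + n_slow).
    """
    frames = [np.concatenate([parallel_conv1d(w, kernels), s])
              for w, s in zip(fast_windows, slow_values)]
    return np.stack(frames)


rng = np.random.default_rng(0)
fast = rng.normal(size=(8, 3, 32))    # 8 frames, 3 fast channels, 32 points each
slow = rng.normal(size=(8, 5))        # 5 slow diagnostics per frame
kernels = rng.normal(size=(3, 4, 5))  # 4 filters of length 5 per channel
sequence = build_sequence(fast, slow, kernels)
```

Because each channel has its own filters, perturbing one channel changes only that channel's block of features in every frame.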

Training and transferring details

When pre-training the model on J-TEXT, 8 RTX 3090 GPUs are used to train models in parallel and speed up the hyperparameter search. Since the samples are highly imbalanced, class weights are calculated and applied according to the distribution of the two classes. The training set for the pre-trained model ultimately contains ~125,000 samples. To avoid overfitting and achieve better generalization, the model is kept to ~100,000 parameters, and a learning rate schedule is applied to further mitigate overfitting. The learning rate follows an exponential decay schedule with an initial learning rate of 0.01 and a decay rate of 0.9. Adam is used as the optimizer, and binary cross-entropy as the loss function. The pre-trained model is trained for 100 epochs, and the loss on the validation set is monitored in each epoch. The model is checkpointed at the end of each epoch in which the validation loss is the best so far. When training finishes, the best model is loaded as the pre-trained model for further evaluation.
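
The schedule and checkpoint selection can be written down in a few lines. The sketch below assumes the decay is applied once per epoch (`decay_epochs=1`), which the text does not specify; the formula is the standard continuous exponential decay, as used for example by Keras's `ExponentialDecay` schedule.

```python
def exponential_decay(epoch, initial_lr=0.01, decay_rate=0.9, decay_epochs=1.0):
    """Learning rate after `epoch` epochs: initial_lr * decay_rate ** (epoch / decay_epochs)."""
    return initial_lr * decay_rate ** (epoch / decay_epochs)


def best_checkpoint_epoch(val_losses):
    """Index of the epoch with the lowest validation loss, i.e. the checkpoint to reload."""
    return min(range(len(val_losses)), key=val_losses.__getitem__)


schedule = [exponential_decay(e) for e in range(100)]
```

Under the per-epoch assumption, the learning rate drops from 0.01 to roughly 3e-7 by the final epoch; a per-step decay would shrink it much faster for the same decay rate.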

When transferring the pre-trained model, part of the model is frozen. The frozen layers are usually those at the bottom of the neural network (closest to the input), as they are considered to extract general features. The parameters of the frozen layers are not updated during training, while the remaining layers are tuned with the new data. Because the amount of data is very small, the model is tuned at a much lower learning rate of 1E-4 for 10 epochs to avoid overfitting. In the layer-replacement strategy, the unfrozen layers are replaced with layers of the same structure, but their weights and biases are randomly re-initialized; this model is also tuned at a learning rate of 1E-4 for 10 epochs. In the unfreezing strategy, the previously frozen layers are unfrozen, making their parameters updatable again, and the model is further tuned at an even lower learning rate of 1E-5 for 10 epochs; even so, these models still suffer greatly from overfitting.
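
The three transfer strategies differ only in which parameters are updated and how they are initialized. The sketch below illustrates this with plain SGD on a dictionary of weight arrays; the layer names, the Gaussian re-initialization scale, and the use of SGD instead of Adam are simplifying assumptions for illustration.

```python
import numpy as np


def fine_tune_step(params, grads, trainable, lr):
    """One plain SGD step that updates only trainable layers; frozen layers keep their weights."""
    return {name: (w - lr * grads[name]) if trainable[name] else w
            for name, w in params.items()}


def reinitialize(params, layer_names, rng, scale=0.05):
    """'Replace' strategy: keep each layer's shape but draw fresh random weights."""
    return {name: rng.normal(0.0, scale, w.shape) if name in layer_names else w
            for name, w in params.items()}


# Hypothetical layer names standing in for the feature extractor and classifier.
params = {"conv": np.ones((4, 5)), "lstm": np.ones((8, 8)), "dense": np.ones((8, 2))}
grads = {name: np.ones_like(w) for name, w in params.items()}

# Freezing: bottom layers fixed, top layer tuned at lr = 1e-4.
trainable_top_only = {"conv": False, "lstm": False, "dense": True}
tuned = fine_tune_step(params, grads, trainable_top_only, lr=1e-4)

# Replacing: top layer re-initialized (it would then be tuned as above).
replaced = reinitialize(params, {"dense"}, np.random.default_rng(0))

# Unfreezing: all layers updatable, tuned further at lr = 1e-5.
trainable_all = {name: True for name in params}
tuned_all = fine_tune_step(tuned, grads, trainable_all, lr=1e-5)
```
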

Data availability

Raw data were generated at the J-TEXT and EAST facilities. Derived data are available from the corresponding author upon reasonable request.

Code availability

The computer code that was used to generate figures and analyze the data is available from the corresponding author upon reasonable request.

References

ITER Physics Expert Group on Disruptions, Plasma Control, and MHD & ITER Physics Basis Editors. MHD stability, operational limits and disruptions. Nucl. Fusion 39, 2251–2389 (1999).

Hender, T. C. et al. MHD stability, operational limits and disruptions. Nucl. Fusion 47, S128–S202 (2007).

Boozer, A. H. Theory of tokamak disruptions. Phys. Plasmas 19, 058101 (2012).

Schuller, F. C. Disruptions in tokamaks. Plasma Phys. Control. Fusion 37, A135–A162 (1995).

Luo, Y. H. et al. Designing of the massive gas injection valve for the joint Texas experimental tokamak. Rev. Sci. Instrum. 85, 083504 (2014).

Li, Y. et al. Design of a shattered pellet injection system on J-TEXT tokamak. Rev. Sci. Instrum. 89, 10K116 (2018).

Sugihara, M. et al. Disruption scenarios, their mitigation and operation window in ITER. Nucl. Fusion 47, 337–352 (2007).

Aymerich, E. et al. A statistical approach for the automatic identification of the start of the chain of events leading to the disruptions at JET. Nucl. Fusion 61, 036013 (2021).

Lungaroni, M. et al. On the potential of ruled-based machine learning for disruption prediction on JET. Fusion Eng. Des. 130, 62–68 (2018).

Rattá, G. A. et al. An advanced disruption predictor for JET tested in a simulated real-time environment. Nucl. Fusion 50, 025005 (2010).

Rea, C., Montes, K. J., Erickson, K. G., Granetz, R. S. & Tinguely, R. A. A real-time machine learning-based disruption predictor in DIII-D. Nucl. Fusion 59, 096016 (2019).

Yang, Z. et al. A disruption predictor based on a 1.5-dimensional convolutional neural network in HL-2A. Nucl. Fusion 60, 016017 (2020).

Guo, B. H. et al. Disruption prediction using a full convolutional neural network on EAST. Plasma Phys. Control. Fusion 63, 025008 (2021).

Guo, B. H. et al. Disruption prediction on EAST tokamak using a deep learning algorithm. Plasma Phys. Control. Fusion 63, 115007 (2021).

Kates-Harbeck, J., Svyatkovskiy, A. & Tang, W. Predicting disruptive instabilities in controlled fusion plasmas through deep learning. Nature 568, 526–531 (2019).

Ferreira, D. R., Carvalho, P. J. & Fernandes, H. Deep learning for plasma tomography and disruption prediction from bolometer data. IEEE Trans. Plasma Sci. 48, 36–45 (2019).

Aymerich, E. et al. Disruption prediction at JET through deep convolutional neural networks using spatiotemporal information from plasma profiles. Nucl. Fusion 62, 066005 (2022).

Churchill, R. M., Tobias, B., Zhu, Y. & the DIII-D Team. Deep convolutional neural networks for multi-scale time-series classification and application to tokamak disruption prediction using raw, high temporal resolution diagnostic data. Phys. Plasmas 27, 062510 (2020).

Zhu, J. X. et al. Hybrid deep learning architecture for general disruption prediction across tokamaks. Nucl. Fusion 61, 049501 (2021).

Martin, E. J. & Zhu, X. W. Scenario adaptive disruption prediction study for next generation burning-plasma tokamaks. Nucl. Fusion 61, 114005 (2021).

Windsor, C. G. et al. A cross-tokamak neural network disruption predictor for the JET and ASDEX Upgrade tokamaks. Nucl. Fusion 45, 337–350 (2005).

Murari, A. et al. On the transfer of adaptive predictors between different devices for both mitigation and prevention of disruptions. Nucl. Fusion 60, 056003 (2020).

Shimomura, Y., Aymar, R., Chuyanov, V., Huguet, M. & Parker, R. ITER overview. Nucl. Fusion 39, 1295–1308 (1999).

Shimada, M. et al. Overview and summary. Nucl. Fusion 47, S1–S17 (2007).

Huang, M. et al. The operation region and MHD modes on the J-TEXT tokamak. Plasma Phys. Control. Fusion 58, 125002 (2016).

Shi, P. et al. First time observation of local current shrinkage during the MARFE behavior on the J-TEXT tokamak. Nucl. Fusion 57, 116052 (2017).

Shi, P. et al. Observation of the high-density front at the high-field-side in the J-TEXT tokamak. Plasma Phys. Control. Fusion 63, 125010 (2021).

Wang, N., Ding, Y., Rao, B. & Li, D. A brief review on the interaction between resonant magnetic perturbation and tearing mode in J-TEXT. Rev. Mod. Plasma Phys. 6, 26 (2022).

He, Y. et al. Prevention of mode coupling by external applied resonant magnetic perturbation on the J-TEXT tokamak. Plasma Phys. Control. Fusion 65, 065011 (2023).

Chen, D., Shen, B., Yang, F., Qian, J. & Xiao, B. Characterization of plasma current quench during disruption in EAST tokamak. Chin. Phys. B 24, 025205 (2015).

Wang, B. et al. Establishment and assessment of plasma disruption and warning databases from EAST. Plasma Sci. Technol. 18, 1162–1168 (2016).

Chen, D. L. et al. Disruption mitigation with high-pressure helium gas injection on EAST tokamak. Nucl. Fusion 58, 036003 (2018).

Zhang, C. et al. Plasma-facing components damage and its effects on plasma performance in EAST tokamak. Fusion Eng. Des. 156, 111616 (2020).

Voulodimos, A., Doulamis, N., Doulamis, A. & Protopapadakis, E. Deep learning for computer vision: a brief review. Comput. Intell. Neurosci. 2018, 1–13 (2018).

Young, T., Hazarika, D., Poria, S. & Cambria, E. Recent trends in deep learning based natural language processing. IEEE Comput. Intell. Mag. 13, 55–75 (2018).

Ben-David, S., Blitzer, J., Crammer, K. & Pereira, F. Analysis of representations for domain adaptation. Adv. Neural Inf. Process Syst. 19, 151–175 (2006).

Ding, Y. et al. Overview of the J-TEXT progress on RMP and disruption physics. Plasma Sci. Technol. 20, 125101 (2018).

Liang, Y. et al. Overview of the recent experimental research on the J-TEXT tokamak. Nucl. Fusion 59, 112016 (2019).

Liu, Z. X. et al. Experimental observation and simulation analysis of the relationship between the fishbone and ITB formation on EAST tokamak. Nucl. Fusion 60, 122001 (2020).

Blum, J. & Le Foll, J. Plasma equilibrium evolution at the resistive diffusion timescale. Comp. Phys. Rep. 1, 465–494 (1984).

Chen, Z. Y. et al. The behavior of runaway current in massive gas injection fast shutdown plasmas in J-TEXT. Nucl. Fusion 56, 112013 (2016).

Shen, C. et al. Investigation of the eddy current effect on the high frequency response of the Mirnov probe on J-TEXT. Rev. Sci. Instrum. 90, 123506 (2019).

Wang, C. et al. Disruption prevention using rotating resonant magnetic perturbation on J-TEXT. Nucl. Fusion 60, 102992 (2020).

Shen, C. et al. IDP-PGFE: an interpretable disruption predictor based on physics-guided feature extraction. Nucl. Fusion 63, 046024 (2023).

Shen, Z., Liu, Z., Qin, J., Savvides, M. & Cheng, K. Partial is better than all: revisiting fine-tuning strategy for few-shot learning. Proc. AAAI Conf. Artif. Intell. 2021, 9594–9602 (2021).

Rajpurkar, P., Chen, E., Banerjee, O. & Topol, E. J. AI in health and medicine. Nat. Med. 28, 31–38 (2022).

Singh, J., Hanson, J., Paliwal, K. & Zhou, Y. RNA secondary structure prediction using an ensemble of two-dimensional deep neural networks and transfer learning. Nat. Commun. 10, 5407 (2019).

De Vries, P. C. et al. Requirements for triggering the ITER disruption mitigation system. Fusion Sci. Technol. 69, 471–484 (2016).

Caruana, R. Multitask learning. Mach. Learn. 28, 41–75 (1997).

Gao, X. et al. Experimental progress of hybrid operational scenario on EAST tokamak. Nucl. Fusion 60, 102001 (2020).

Zhang, M., Wu, Q., Zheng, W., Shang, Y. & Wang, Y. A database for developing machine learning based disruption predictors. Fusion Eng. Des. 160, 111981 (2020).

Zheng, W. et al. Overview of machine learning applications in fusion plasma experiments on J-TEXT tokamak. Plasma Sci. Technol. 24, 124003 (2022).

Acknowledgements

The authors are very grateful for the help of the J-TEXT and EAST teams. This work is supported by the National Key R&D Program of China (no. 2022YFE03040004) and the National Natural Science Foundation of China (no. 51821005).

Author information


Contributions

W.Z. and F.X. conceived the method and designed the model, designed and carried out the experiments, and co-wrote the paper; D.C., B.G., B.S., and B.X. helped with accessing and using the EAST database; C.S. and X.A. helped with accessing the J-TEXT database and with J-TEXT feature extraction; W.Z. and D.C. offered computational resources; Z.C., Y.D., and C.S. contributed to the initial discussions and provided feedback on the manuscript; Y.P., N.W., M.Z., Z.C., and Z.Y. provided general guidance during the research process.


Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks Cristina Rea, Alessandro Pau, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zheng, W., Xue, F., Chen, Z. et al. Disruption prediction for future tokamaks using parameter-based transfer learning. Commun Phys 6, 181 (2023). https://doi.org/10.1038/s42005-023-01296-9


- Springer Nature Limited