Abstract

Research algorithms are seldom externally validated or integrated into clinical practice, leaving unknown challenges in deployment. In such efforts, one needs to address challenges related to data harmonization, the performance of an algorithm in unforeseen missingness, automation and monitoring of predictions, and legal frameworks. We here describe the deployment of a high-dimensional data-driven decision support model into an EHR and derive practical guidelines informed by this deployment that includes the necessary processes, stakeholders and design requirements for a successful deployment. For this, we describe our deployment of the chronic lymphocytic leukemia (CLL) treatment infection model (CLL-TIM) as a stand-alone platform adjoined to an EPIC-based Danish Electronic Health Record (EHR), with the presentation of personalized predictions in a clinical context. CLL-TIM is an 84-variable data-driven prognostic model utilizing 7-year medical patient records and predicts the 2-year risk composite outcome of infection and/or treatment post-CLL diagnosis. As an independent validation cohort for this deployment, we used a retrospective population-based cohort of patients diagnosed with CLL from 2018 onwards (n = 1480). Unexpectedly high levels of missingness for key CLL-TIM variables were exhibited upon deployment. High dimensionality, with the handling of missingness, and predictive confidence were critical design elements that enabled trustworthy predictions and thus serves as a priority for prognostic models seeking deployment in new EHRs. Our setup for deployment, including automation and monitoring into EHR that meets Medical Device Regulations, may be used as step-by-step guidelines for others aiming at designing and deploying research algorithms into clinical practice.

Similar content being viewed by others

Introduction

Electronic Health Records (EHR) continue to increase in both size and complexity. EHR may include long-term patient histories of blood tests, previous comorbidities, interventions, medicines, several OMIC data and imaging1. In contrast, most research-based models, and the ones implemented into clinical practice, do away with this complexity entirely by creating very simple rule-based models using a handful of variables. The limited number of variables was recently highlighted in a review of CLL prognostic models, where it was found that, on average, only ten variables are used to develop a CLL prognostic model2. Some of these variables are dropped during the development process, and the final model may end up using even fewer variables. For example, the gold standard in CLL-IPI3 uses five variables to make a prediction. The net effect of not using more variables or adopting better machine-learning algorithms over time has resulted in a flat-lining of performance for CLL prognostic models over the last 20 years2. To achieve accurate and reliable prognostic models, solutions for a number of domain-specific challenges that EHR and disease modeling present us with are being developed; including models that handle diverse modalities4, high-missingness5, data-shifts6, underrepresented patient subgroups7, privacy limitations8 and small patient cohorts9. Despite the heterogeneity of these models, none involve low-dimensional rule-based approaches but rather tend towards more complex models. The complexity of these approaches may be a result of high dimensions, the use of long-term patient histories, the fusion of multiple data modalities, and also the use of ensembles or deep-learning architectures. With this in mind, we have no proof-of-concept examples of how complex research algorithms can be safely and successfully automated into clinical practice and the challenges involved in doing so.

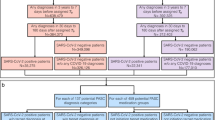

Using CLL as a target disease, we have previously developed the CLL Treatment Infection Model (CLL-TIM) to identify patients newly diagnosed with CLL at high risk of severe infections and/or CLL treatment within two years10 (Fig. 1a). As detailed in the primary publication of CLL-TIM, the model was developed based on a clinical unmet need. The major cause of morbidity and mortality for patients with CLL is infection-related11, and previous prognostic models did not succeed in identifying newly diagnosed CLL patients who would benefit from early preemptive treatment12. Thus, the CLL-TIM model was developed to identify such patients at high risk of severe infections and/or treatment needs within two years of CLL diagnosis and is currently deployed for patient selection in the randomized phase 2 trial PreVent-ACaLL (NCT03868722)13. CLL-TIM is an ensemble of 28 algorithms with 84 variables (spanning 228 features) that considers patient data with repeated measurements for up to seven years prior to diagnosis. The complexity was necessary for providing trustworthy predictions, personalized risk factors, and predictions on patients with large amounts of missing data. However, the manual data entry to run the algorithm approaches one hour per patient and is therefore considered prohibitory for the deployment of CLL-TIM into clinical practice as a decision support tool without automation. Therefore, in this work, we aimed to describe and critically assess the deployment of CLL-TIM from a research EHR to a deployment EHR (i.e., production environment). This work comprehensively outlines the transition and automation of CLL-TIM, encompassing external validation, data harmonization, and automation steps. As a final verification, we benchmark our CLL-TIM deployment on a new set of CLL patients including those with key data variables missing. Additionally, our work also details real-time performance monitoring, involvement of stakeholders, and legal frameworks.

a CLL-TIM was developed as an ensemble model integrating 28 different machine learners trained on data from 4149 patients with CLL as previously described10. Patient data for its development and internal validation consisted of patients diagnosed with CLL in Denmark between January 2004 and June 2017. The research database used for development of CLL-TIM (referred here as the research EHR), used all available patient data up until a prediction point of three months post diagnosis for modeling the 2-year risk composite outcome of infection and/or treatment post prediction point. Seven-year historical records of CLL patients were used, and all patient data available was fed into the development of CLL-TIM in a data-driven fashion. Data modalities included laboratory tests, microbiology, diagnosis, and pathology extracted from the PERSIMUNE data warehouse (persimune.org)27 and CLL registry-based data as previously described. After feature selection, CLL-TIM consisted of 84 variables spanning 228 features, and on an independent internal test research cohort, CLL-TIM identifies patients with a high-risk (precision of 72% and a recall of 75%) of serious infection and/or CLL treatment within two years from the time of diagnosis. b CLL-TIM was validated externally on the CLL7 German cohort and is undergoing further validation in its selection of patients in the PreVent-ACaLL (clinicaltrials.gov: NCT0386872213). Patients who, upon their CLL diagnosis, do not need treatment according to iwCLL criteria31 but are still deemed as high-risk with high confidence by CLL-TIM are randomized between observation (standard of care) and three months of preemptive venetoclax+acalabrutinib treatment. This is aimed to test whether grade 3-Infection-free, treatment-free survival can be improved. This work describes the deployment of CLL-TIM into the EHR production environment (referred to here as the deployment EHR), the data harmonization process, and the automation of both the data-variable retrieval and the provision of its predictions as views within the deployment EHR. After our deployment, we present CLL-TIM’s performance on a retrospective cohort of patients who were not present during the development of CLL-TIM. We also present our setup for continuous prospective monitoring of CLL-TIM’s performance within the deployment EHR. By design, CLL-TIM is able to provide confidence related to each of its predictions (see Methods—Calculation of CLL-TIM Confidence), explainable predictions unique to each patient, and a prediction for all patients irrespective of their missing data (see Methods—Handling of Missingness in CLL-TIM).

Results

Data harmonization, automation, stakeholder roles, and legal framework

Data harmonization was achieved through the development of map** dictionaries, which aligned variable nomenclature and units with the specific requirements of CLL-TIM (See Fig. 2 and Methods). This harmonization process was validated by comparing the results of CLL-TIM on the research EHR and the deployment of EHR on an identical set of patients. This included comparing the predicted risk, confidence, and personalized risk factors provided by CLL-TIM. We then setup the importation and exportation of data from and to CLL-TIM’s standalone script in an automated fashion (See Fig. 3 and Methods). Our setup for automation enforces that the input (patient data) and output (predictions) of CLL-TIM, were easily accessible. This enables us to both monitor the performance (using the outputs) and anticipate data shifts present (using the inputs) (See Supplementary Fig. 1 and Methods). Collaboration between an interdisciplinary team was necessary to integrate CLL-TIM’s results into the deployment EHR while also considering all clinical, technical, and regulatory aspects (See Table 1). A Medical Device Regulation (MDR) assessment was performed, and before making available CLL-TIM’s predictions on the visual interface of the deployment EHR, several aspects of CLL-TIM’s benchmarking and validation were also assessed by Hematological Clinical Healthcare Council (see Methods).

For each patient, CLL-TIM produces (i) a risk level, which represents the risk of infection and/or treatment with 2-years from its prediction point, (ii) a confidence that ranges from 0 to 1 and represents how sure it is in its prediction (see Methods—Calculation of CLL-TIM Confidence), (iii) a ranked list of features (risk factors) that contribute towards the predicted risk of a given patient. The risk factors and their contribution change for each patient and are, therefore, personalized risk factors. For this harmonization process, we first identified the same patients across both EHRs. The iterative process of data harmonization is complete once CLL-TIM on the same patients across both EHRs achieves the same risk level, confidence, and personalized risk factors. If not, we then identified which of CLL-TIM’s 228 features have unmatched contributions, and from this subset of features, we then extracted which variables were still problematic. Given the list of problematic variables, we could then look into the raw data related to these variables and check for discrepancies. All such discrepancies could be addressed by updating the dictionaries of the variable or the unit map**s accordingly. After updating our dictionaries, we re-ran all patients again to check if further discrepancies were present. Upon achieving a perfect match in CLL-TIM’s three outputs on matched patients across both EHRs, we addressed code optimization issues. Namely, we moved the loading of CLL-TIM’s 28 base learners to occur at the start of the script and for all patient predictions to be run as a single batch prediction. This removed the previous inefficiency of CLL-TIM, where the base learners were re-loaded for each patient prediction.

Data is stored in the Chronicles database of the EHR production environment (non-SQL database) upon entering the system from laboratories, diagnostic departments, and clinical personnel. Every night, during an ETL (extract transfer load) process, data is transferred to first the Clarity database and from there into the Caboodle database (both SQL databases) in which data are stored for reporting, data extraction, and monitoring purposes. On the SQL server, within a closed Python environment independently of the EHR system, an area has been dedicated uniquely to CLL-TIM. This closed python environment is managed by the central IT management that ensures identical setups across all hospitals. Data loaded from Chronicles through Clarity to Caboodle databases are passed on to CLL-TIM. Note that before the data enters CLL-TIM, the data harmonization scripts (see Methods) are executed first to ensure that patient data is fed in the right format. The CLL-TIM standalone script is then executed and the predictions are exported to and stored within the Caboodle database. From here, the predictive results are passed back into the Chronicles database, from which direct reporting in the patient chart is presented as a view within the EHR in the patient context, which can be chosen by relevant health professionals to present when accessing EHR for patients with CLL. CLL-TIM’s prediction integrated into EPIC includes the risk of infection and/or treatment for the patient, the confidence in prediction, and the top risk factors pushing towards high-risk or low-risk. The risk factors are personalized for each patient. Both the confidence and personalized risk factors provided may be used by the treating physician to gauge the level of trust one should put in a given patient prediction. In this setup, CLL-TIM is located on the SQL server hosting the databases, thereby minimizing data transfer across different instances. Furthermore, CLL-TIM is containerized in its own environment (Python, R, etc.), ensuring stability when upgrading the environment for any of CLL-TIM’s base algorithms. One important feature of this setup is that the predictions from CLL-TIM and the patient data required to run CLL-TIM are stored on the same database. In this way, our proposed monitoring setup (see Supplementary Methods) need only interact with this database to access both the predictions (to assess performance degradation) and the patient data (to assess data shifts). If found, any such issues may also be similarly reported in the clinical environment interface alongside CLL-TIM’s predictions.

Comparison of research and deployment EHR cohorts

CLL-TIM was originally developed, trained, and tested on a research EHR (n = 3720) of patients diagnosed with CLL prior to 2018 (Fig. 1). For further validation after deployment, we here tested CLL-TIM on an independent cohort of CLL patients diagnosed post-2018, from the deployment EHR (n = 1099) (see Table 2 for baseline characteristics and Supplementary Fig. 1 for Consort Diagram). We here highlight the key data differences exhibited by the research and deployment EHRs (Fig. 4). In the research EHR, within 2 years from the prediction point (set at 3 months post CLL diagnosis), 14.9% of patients had treatment as a first event, 18.1% of patients had an infection as a first event, and 33% patients had the composite event of treatment of infection. For the deployment of EHR, the respective rates were 17.5%, 23.2%, and 40.6% (Supplementary Fig. 1). The research EHR spanned patient data from nine hospitals across Denmark. Deployment EHR included only two of these nine hospitals—Rigshopsitalet and Roskilde. These two hospitals initially encompassed 23% of the training population of CLL-TIM. Patients in the research and deployment EHR showed similar baseline characteristics (Table 2), with the exception of the deployment EHR having a higher percentage of patients (76.4% vs 70.1%) above the age of 65. The research and deployment EHRs, however, showed marked differences in missingness (Fig. 4). For the baseline variables of CLL-TIM i.e., those taken around the time of diagnosis, missingness for the research vs. the deployment EHR were as follows: Binet Staging (0% vs 72.2%), β2 microglobulin (23.6% vs 35.8%), IGHV mutation status (21.1% vs 91.1%), Hierarchical FISH (0% vs 99.5%) and ECOG performance status (0.4% vs 100%). For the deployment of EHR, the high missingness of the aforementioned variables was due to them not being available in a structured format, even though they were mostly available in the medical records. For the research EHR, these variables were manually entered into a structured format. In the research EHR, the percentage of patients with a missing laboratory test was double that of the deployment EHR. This is because the research EHR spanned a wider range of hospitals, most of which had less data in structured format. The deployment EHR had, on average, less than a year of laboratory historical data (vs. ten years for the research cohort). The deployment of EHR had a lower incidence of patients with blood culture events. In the deployment of EHR, blood cultures were generally only available from 2017 and thus also exhibited a shorter historical availability. Diagnosis, pathology, and microbiology data were not extracted for the current deployment of CLL-TIM as their contribution to CLL-TIM’s predictions was found to be minimal during missingness simulations10. Future updates of the CLL-TIM deployment will also add these variables.

Research EHR data is patient data that was used for the development, training, and internal validation of CLL-TIM (Patients with CLL diagnosis prior to 2018). Deployment EHR is the patient data that was used to test CLL-TIM’s generalization performance upon its deployment (Patients with CLL diagnosis post-2019). Baseline characteristics are given in Table 2. EHR patient data considered is only up until CLL-TIM’s prediction point, i.e., 3 months post CLL diagnosis. As per our previous findings when performing missingness simulations during CLL-TIM’s development, the blue color indicates what is generally advantageous to have better predictions, and red indicates what is disadvantageous. The missingness of the baseline variables in the deployment EHR was due to them not being available in a structured format, even though they were present in the medical record. A laboratory variable missingness was calculated using the 33 laboratory variables used by CLL-TIM. Namely it shows the mean percentage of laboratory tests each patient has no data for. b Historical data was calculated by first considering, for each patient and for each laboratory test, what was the earliest test available. For each laboratory test, we took the mean overall patients, and our final number is the mean overall laboratory tests. This gives an idea of how far back laboratory data is available. Availability and historical data for each laboratory test may be found in Supplementary Fig. 2. c Blood culture availability is the rate of patients with at least one blood culture event. d Blood culture historical data is the mean number of years a blood culture event took place before the prediction point. Distribution of blood culture events prior to prediction points may be found in Supplementary Fig. 2. e CLL-TIM was developed and trained on patient data from Rigshospitalet, Roskilde, Herlev, Vejle, Esbjerg, Odense, Holstebro, Aarhus and Aalborg in Denmark. The deployment cohort includes patient data from Rigshospitalet and Roskilde. These two hospitals formed 23% of the patient population in the research EHR. β2-M Beta-2 microglobulin, IGHV the immunoglobulin heavy chain gene, FISH DNA fluorescence in situ hybridization, ECOG Eastern cooperative oncology group.

Benchmarking deployment of CLL-TIM on an independent cohort of patients

On the research EHR, on a set of patients separate from those that CLL-TIM was trained on, CLL-TIM achieved Matthew’s correlation coefficient (MCC) of 0.56 (95% CI: 0.42–0.70) (n = 145) on the subset of patients with no missing CLL-IPI variables (Binet Staging, IGHV mutational status, β2 microglobulin, del17p/TP53 mutation) and on which CLL-TIM showed high-confidence in its prediction10. Note that this initial benchmarking allowed missingness for any other variables. For an equitable comparison to test CLL-TIM’s generalization from the research EHR to the deployment EHR, we wanted to test CLL-TIM’s predictions in the deployment EHR on the subset of patients with non-missing CLL-IPI variables. Due to the limited availability of these variables (Fig. 4), we restricted it to non-missing Binet Staging and IGHV only. All other variables were not controlled for missingness. On this subset of the patients from the deployment EHR (n = 31, Table 3—Bench A), the high-confidence predictions of CLL-TIM achieve an MCC of 0.715 (95% CI: 0.712–0.719) with a precision of 0.942 (95% CI: 0.941–0.944) vs. 0.72 (95% CI: 0.63–0.90) and a recall of 0.852 (95% CI: 0.849–0.854) vs. 0.754 (95% CI: 0.65–0.86) for CLL-TIM on the research EHR respectively. Survival analysis (Fig. 5a) on the high-confidence predictions of CLL-TIM on the deployment EHR exhibited a hazard ratio (HR) of 11·06 (95% CI: 3.23–37.95) vs. 7.23 (95% CI: 7.19–7.52) for CLL-TIM on the Research EHR.

Kaplan–Meier graphs of infection-free, CLL treatment-free survival for CLL-TIM’s predictions. Patients predicted as high-risk is the blue curve with 95% confidence intervals) and the low-risk group is the yellow curve with 95% confidence intervals. p-value is by log-rank test. a All predictions shown are for high-confidence predictions. For each risk prediction, CLL-TIM provides confidence in its prediction that is given without knowledge of the ground truth. The threshold for a high-confidence prediction was 0.58, and any patients with a confidence level higher than 0.58 were considered to be high-risk with high confidence. For low-risk predictions, the high-confidence threshold was set at 0.28, and any patients with a confidence level less than 0.28 were considered to be low-risk with high confidence. Thresholds were derived from the original CLL-TIM publication10 and unchanged for this work. Derivation of these confidences and the thresholds is described in Methods. b Includes also patients in which CLL-TIM had low-confidence in its prediction. For an equitable comparison to CLL-TIM’s predictions on the research EHR, the results here are for the subset of patients with available Binet Stage and IGHV status but for missingness in any other variables.

Effect of missingness on CLL-TIM’s predictions on the deployment of EHR

CLL-TIM was designed to handle missingness using several layers (see Methods and Fig. 6). Two aspects of missingness are notable between the research and deployment of EHR. In the research EHR, two out of the top three prognostic markers of CLL-TIM (Binet Staging and IGHV status) were available for 100% of patients but only available for 28% and 9% of the patients in the deployment EHR. Additionally, long-term laboratory data was generally not available in the deployment EHR (Fig.4 and Supplementary Fig. 2). We benchmarked CLL-TIM on these as-is missingness conditions that were not seen or anticipated in the development of CLL-TIM using Bench-B (n = 276) from the deployment EHR (Table 3). All missingness conditions were kept ‘as is’ and missingness was handled as described (Fig. 6). CLL-TIM on Bench-B achieved MCC = 0.500 (95% CI: 0.499–0.501) (high-confidence predictions) vs 0.56 (95% CI: 0.418–0.697) for CLL-TIM on the research EHR. Increasing the threshold for a high-confidence prediction from t = 0.58 to t = 0.65 (Bench C, n = 233 in Table 3) helped in improving the MCC further. However, such calibration should be subsequently tested for its performance on a new set of patients in the future.

a CLL-TIM handles missingness using 3 different layers. Namely, (i) on the feature level using missingness representations, (ii) on the algorithm level by using algorithms that accept missingness, and (iii) on the ensemble level by using design principles that make it robust to missingness and noise (see Methods—Handling of Missingness in CLL-TIM). b Shows how CLL-TIM handles missingness on the feature level. For routine laboratory variables, the features that count the number of a given test can indicate to the algorithm that a test is missing when its value is 0. For baseline variables, since they are categorical, one-hot-encoding was used to create missingness indicator features. For example, ‘IGHV unknown’ indicates to the algorithm that the variable is missing. Similarly, for Diagnosis, Pathology, and Microbiology, a count of 0 implies the respective feature is missing. These missingness indicators enable the subsequent algorithms to include the missingness as part of their decision-making. For example, the algorithm might employ proxy features to model patients with missing IGHV status. Sometimes, missingness is time-dependent. For example, a laboratory test might be missing in the last year but not in the last 4 years. So, another type of feature that represents missingness is one that counts the number of days from a test. High values for this feature imply that the given test is not recent. c Features that represent the result of a laboratory variable and that are missing—are handled differently depending on the algorithm in question. For example, for XGBoost, which forms 46% (13 out of the 28 algorithms of CLL-TIM), missing values are fed directly without imputation as XGBoost automatically models missing data. For the remaining algorithms, median imputation is performed. In totality, imputation only ends up being employed for missing features of the routine laboratory variables, which are fed into non-XGBoost algorithms. d The final layer deals with a design that is robust to unseen missingness. Namely, ensembles are known to be robust to missingness and noise, particularly when the base-learners (i.e the algorithms making up the ensemble), have uncorrelated errors. CLL-TIM encouraged uncorrelated errors with the use of feature bagging, the use of different algorithms and parameters, and the use of different outcomes for different base-learners (see Methods—Algorithms, Feature and Variables in CLL-TIM). Lastly, CLL-TIM’s predictive confidence adds another layer of protection when predicting in new environments with missingness, as it can indicate when missingness is such that it is likely affecting predictive accuracy for a given patient. IGHV the immunoglobulin heavy chain gene, CRP C-reactive protein.

To assess how missingness in the deployment EHR may have affected CLL-TIM’s performance, we plotted the distribution of true-positives vs. false-positives with respect to the percentage of missing data for each patient, and similarly for true-negatives vs. false-negatives (Fig. 7). In both scenarios we found no marked differences in the missing rates for the correct or incorrect predictions. Our results suggest that CLL-TIM is robust to the general missingness of its variables but susceptible to missingness in its key variables, like the Binet Stage. The latter scenario is likely due to the fact that the Binet Stage was never missing during CLL-TIM’s development.

Data are shown for CLL-TIM predictions on Bench-C (n = 1099). The missing rate for each patient is calculated as the percentage of missing features from CLL-TIM’s 228 features. a True Positives in blue and false positives in orange. b True negatives in blue and false negatives in orange. Missing rates show similar distributions for all patients irrespective of whether they were correctly or incorrectly predicted.

Predictive confidence identifies trustworthy predictions

We next assessed whether on the deployment of EHR, the predictive confidence of CLL-TIM is a good indicator of which predictions are more likely to be correct over others (Supplementary Table 1). When not restricting to high-confidence predictions i.e., all predictions irrespective of confidence, CLL-TIM achieved a lower performance on Bench-A with MCC = 0.457 (95% CI: 0.453–0.460) vs. 0.715 (95% CI: 0.712–0.719) for high-confidence predictions. Similarly, on Bench-B, CLL-TIM achieved an MCC = 0.289 (95% CI: 0.288–0.290) when not restricted to high confidence predictions vs. 0.500 (95% CI: 0.499–0.501) for high confidence predictions only. Survival analysis on all predictions achieved a Hazard Ratio (HR) = 5.3 (95% CI: 2.46–11.42) vs. 11·06 (95% CI: 3.23–37.95) for high-confidence predictions only (Fig. 5). On Bench-B (n = 1099), 25% of CLL-TIM’s predictions were of high-confidence, and when controlling for the availability of key CLL-TIM prognostic markers, Binet Stage and IGHV mutation status (Bench A, n = 31), 83% of patients were predicted with high confidence.

Prediction coverage of CLL-TIM and gold-standard in CLL

Besides generalization and trust, it is necessary for deployed models to be able to have a high prediction coverage. This is defined as the percentage of patients for which predictions can be made irrespective of missing data. CLL-TIM has high dimensionality (84 variables) but does not restrict the availability of all these variables for predictions to be available (see Methods and Fig.6). Hence, 100% coverage was achieved for CLL-TIM upon deployment. The gold standard in CLL prognostication, CLL-IPI3, had the following availability in its five variables (age: 100%, Binet Stage: 17.8%, β2 microglobulin: 64.8%, IGHV status: 18.9%, Del17p and/or TP53 mutation: <0.05%). Given that CLL-IPI requires that all variables are available for its calculation, only n = 7/1099 (0.63%) of patients in the deployment EHR could have a CLL-IPI score. When attempting to increase CLL-IPI’s prediction coverage by imputing two of its variables, Binet Stage and IGHV mutation status, the prediction coverage increased to n = 32/1099 (2.9%) of the deployment EHR.

Discussion

Our work describes the practical challenges and processes for deployment of a high dimensional prognostic model adjoined to an EHR. While theoretical guidelines have been put forward14,15, proof-of-concepts of such implementations have been warranted. Our described algorithm deployment adjoined to an EPIC-based EHR, provides generalizable step-by-step guidance for the deployment of algorithms from research environments into EHR systems (Fig. 8).

For the deployment of a high-dimensional research algorithm like CLL-TIM, data harmonization was by far the most time-consuming aspect of this process. While the iterative method we devised may be replicated, automating such a process is not straightforward. Some variables required map** by a physician researcher clinically experienced within the deployment EHR system, others required close interaction between data analysts familiar with the EHR system and those that designed CLL-TIM. The complexity of models that address issues of trust, robustness, fairness, privacy, and accuracy in prognostic models4,5,6,7,8,9 will likely require large-scale data harmonization across EHRs. With only 5% of prognostic models undergoing external validation16, further data harmonization efforts are needed.

Generalization of algorithms between cohorts is particularly difficult because of data shifts presenting situations to the algorithm that are unseen during its development13, which required that 20% of the predictions be high-risk with high-confidence and 30% of the predictions be low-risk with high confidence10. On the research EHR, on a separate cohort from which the cutoffs were generated, CLL-TIM’s high-confidence predictions had a hazard ratio (HR) of 7.27 compared to a HR = 2.09 for low-confidence predictions. This confirmed the use of averaging the probabilistic output of CLL-TIM’s base-learners, as a reliable indicator for which predictions are more likely to be correct over others.

Handling of missingness in CLL-TIM

Due to its various missingness handling mechanisms in place, while CLL-TIM is trained using 84 variables and 7-year patient histories, it does not require them to be available for all patients. CLL-TIM addresses missingness over three layers (feature, algorithm, ensemble) that are designed to both handle missingness and to be robust to future missingness (Fig. 6). Missingness at the feature layer is addressed by encoding certain features, such as routine laboratory variables and baseline variables, to indicate when data is missing. This allows algorithms to adjust their decisions based on feature availability. On the algorithm layer, missing values for certain features vary depending on the algorithm. XGBoost (13/28 algorithms of CLL-TIM), for example, handles missing data ‘as-is’ internally28, while other algorithms necessitated median imputation. On the ensemble layer, we performed ensembling with feature bagging that is conducive to better generalization performance in situations of unseen noise and missing data18,29—for example, those when using CLL-TIM in new EHRs, hospitals and countries. Additionally, in such unseen scenarios, CLL-TIM’s confidence can be used as a reliable indicator of whether to trust a given prediction.

Data harmonization and matching predictions across deployment EHR

Data harmonization is essential for integrating an AI algorithm into a new EHR cohort. The central task involves aligning variable nomenclature and units with the algorithm’s specifications. For this, we created two many-to-one map** dictionaries. One for naming conventions and the other for unit conventions (Fig. 2). A physician researcher specialized in hematology selected and matched variable labels. To ascertain the validity of our map** dictionaries, we devised an iterative process that, for an identical group of patients, compares CLL-TIM’s predictions, when their data comes from the research EHR, and when it comes from the deployment EHR. The map** is considered finished when for a given patient, CLL-TIM’s predictions match on both EHRs. Further details of the described data harmonization may be found in the Supplementary Methods.

Automation of CLL-TIM predictions into the deployment EHR and monitoring

We set up CLL-TIM as a standalone script that accepts patient data and produces for each patient a risk level, confidence in the prediction, and a set of personalized risk factors (top five factors pushing towards high-risk and top five pushing towards low-risk). The script is ‘plug-n-play’, in that it can be run on any system as long as python is available and raw patient data (not features) are entered in the right format. Automation into EHR, therefore, focused mostly on how to automate the process of importing data into and exporting results from CLL-TIM’s standalone script (Fig. 3). Patient data fed into CLL-TIM is stored in the Chronicles database, with nightly ETL processes transferring data to Clarity and Caboodle databases. The previously described harmonization scripts pre-process data for CLL-TIM, then CLL-TIM is executed to provide predictions and its results are then integrated back into the Chronicles EHR database. The latter enables the provision of CLL-TIM’s results within the EHR interface (Fig. 3). This design, featuring containerization and shared databases for predictions and patient data, supports centralized monitoring and streamlined access of both CLL-TIM’s outputs (to assess performance degradation) and CLL-TIM’s inputs (to assess data-shifts)—Supplementary Fig. 3. In our setup, the hospital has full control and rights over the environment where CLL-TIM is executed, without any licensing or permissions required from the commercial provider of the deployment EHR. In light of potential model degradation, close monitoring of prognostic model performance after clinical deployment is crucial21. Our monitoring setup for CLL-TIM, upon its deployment, involves automatic tracking of outputs and outcomes for each patient through the caboodle database. Every three months, we evaluate using several metrics, both predictive performance decay and data shifts, that may be responsible for the deterioration of predictive power (Supplementary Fig. 3 and Supplementary Methods).

Stakeholder roles and legal framework

For delivering CLL-TIM’s results to treating physicians within the deployment EHR system, a multidisciplinary team including researchers, analysts, IT experts, interface developers, clinical council, and business owners collaborated (Table 1). This ensured comprehensive consideration of clinical, technical, and regulatory aspects. To ensure that the deployment of CLL-TIM met the requirements for a decision support tool, a Medical Device Regulation (MDR) assessment was performed according to Medical Device Regulation (EU) 2017/745 paragraph 5.526. The algorithm was considered a class 2 A device according to annex 8, rule 11 (software which is intended to provide information that is used to make clinical, diagnostic, or therapeutic decisions, except if leading to decisions that can cause serious harm or surgical interventions, the latter resulting in classification 2B). As CLL-TIM was only implemented into one EHR system within a limited organization, the system could be implemented locally without a resource-demanding CE labeling through the Medical Device Regulation path. Self-evaluation according to the guidelines on MDR evaluation from the local institution was put in place to meet the requirements of paragraph 5.5 for the local implementation of a 2A medical device. Before presenting CLL-TIM’s predictions to practicing physicians on the EHR interface, an assessment was performed by the Hematological Clinical Healthcare Council. The latter is responsible for the assessment of any significant changes to the EHR interface that may impact the management of patients with hematological disorders. The council requested proof of (i) the map** of all relevant map** within the caboodle copy of the EHR, (ii) confirmation and validation of all parts of the script and predictions within the EHR, (iii) validation of all predictions for patients matched between the research and deployment EHRs, (iv) validation of the personalized high/low-risk factors for these matched patients, and (v) training of all relevant physicians on interpretation and use of CLL-TIM as a decision support tool. As CLL-TIM is still being tested in a clinical trial, the predictions were furthermore labeled as “research-use only” within the deployment EHR.

Data approval

Based on approval from the National Ethics Committee and the Data Protection Agency, EHR data and data from health registries for current and previous patients with CLL were retrieved as previously described10,30.

Data availability

The individual patient-level data that support the findings of this study are available from the corresponding author upon reasonable request. As the individual patient-level data cannot be anonymized, only pseudonymized, according to Danish and EU legislation, the data cannot be deposited in a public repository. However, the authors provide a data repository with individual patient level data that can be made available with 2-factor authentication for researchers on a collaborative basis upon request.

Code availability

CLL-TIM code is available in the supplementary.

References

Mohsen, F., Ali, H., El Hajj, N. & Shah, Z. Artificial intelligence-based methods for fusion of electronic health records and imaging data. Sci. Rep. 12, 17981 (2022).

Agius, R., Parviz, M. & Niemann, C. U. Artificial intelligence models in chronic lymphocytic leukemia—recommendations toward state-of-the-art. Leuk. Lymphoma 63, 265–278 (2022).

International CLL-IPI working group. An international prognostic index for patients with chronic lymphocytic leukaemia (CLL-IPI): a meta-analysis of individual patient data. Lancet Oncol. 17, 779–790 (2016).

Huang, S.-C., Pareek, A., Zamanian, R., Banerjee, I. & Lungren, M. P. Multimodal fusion with deep neural networks for leveraging CT imaging and electronic health record: a case-study in pulmonary embolism detection. Sci. Rep. 10, 22147 (2020).

Groenwold, R. H. H. Informative missingness in electronic health record systems: the curse of knowing. Diagn. Progn. Res. 4, 8 (2020).

Li, Y. et al. Validation of risk prediction models applied to longitudinal electronic health record data for the prediction of major cardiovascular events in the presence of data shifts. Eur. Heart J. Digit. Health 3, 535–547 (2022).

Liu, Z., Li, X. & Yu, P. Mitigating health disparities in EHR via deconfounder. in Proceedings of the 13th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics 1–6 (ACM, 2022). https://doi.org/10.1145/3535508.3545516.

Sheller, M. J. et al. Federated learning in medicine: facilitating multi-institutional collaborations without sharing patient data. Sci. Rep. 10, 12598 (2020).

Zhang, X. S., Tang, F., Dodge, H. H., Zhou, J. & Wang, F. MetaPred: meta-learning for clinical risk prediction with limited patient electronic health records. KDD 2019, 2487–2495 (2019).

Agius, R. et al. Machine learning can identify newly diagnosed patients with CLL at high risk of infection. Nat. Commun. 11, 363 (2020).

Da Cunha-Bang, C. et al. Improved survival for patients diagnosed with chronic lymphocytic leukemia in the era of chemo-immunotherapy: a Danish population-based study of 10455 patients. Blood Cancer J. 6, e499 (2016).

Langerbeins, P. et al. The CLL12 trial: ibrutinib vs placebo in treatment-naïve, early-stage chronic lymphocytic leukemia. Blood 139, 177–187 (2022).

Da Cunha-Bang, C. et al. PreVent-ACaLL short-term combined acalabrutinib and venetoclax treatment of newly diagnosed patients with CLL at high risk of infection and/or early treatment, who do not fulfil IWCLL treatment criteria for treatment. A randomized study with extensive immune phenoty**. Blood 134, 4304–4304 (2019).

Van De Sande, D. et al. Develo**, implementing and governing artificial intelligence in medicine: a step-by-step approach to prevent an artificial intelligence winter. BMJ Health Care Inf. 29, e100495 (2022).

The Lancet Digital Health. Walking the tightrope of artificial intelligence guidelines in clinical practice. Lancet Digit. Health 1, e100 (2019).

Ramspek, C. L., Jager, K. J., Dekker, F. W., Zoccali, C. & van Diepen, M. External validation of prognostic models: what, why, how, when and where? Clin. Kidney J. 14, 49–58 (2021).

Avati, A. et al. BEDS-Bench: Behavior of EHR-models under Distributional Shift—A Benchmark. Preprint at ar**v https://arxiv.org/abs/2107.08189 (2021).

Hsu, K.-W. A theoretical analysis of why hybrid ensembles work. Comput. Intell. Neurosci. 2017, 1930702 (2017).

Barai, S. Vidhya. Our experience with numerai. Introduction to numerai. Medium. https://medium.com/analytics-vidhya/our-experience-with-numerai-2b0777acc12e (2021)

Futoma, J., Simons, M., Panch, T., Doshi-Velez, F. & Celi, L. A. The myth of generalisability in clinical research and machine learning in health care. Lancet Digit. Health 2, e489–e492 (2020).

Feng, J. et al. Clinical artificial intelligence quality improvement: towards continual monitoring and updating of AI algorithms in healthcare. npj Digit. Med. 5, 66 (2022).

Kompa, B., Snoek, J. & Beam, A. L. Second opinion needed: communicating uncertainty in medical machine learning. npj Digit. Med. 4, 4 (2021).

Savcisens, G. et al. Using sequences of life-events to predict human lives. Nat. Comput. Sci. 4, 43–56 (2024).

Muehlematter, U. J., Daniore, P. & Vokinger, K. N. Approval of artificial intelligence and machine learning-based medical devices in the USA and Europe (2015-20): a comparative analysis. Lancet Digit. Health 3, e195–e203 (2021).

Wu, E. et al. How medical AI devices are evaluated: limitations and recommendations from an analysis of FDA approvals. Nat. Med. 27, 582–584 (2021).

EUR-Lex - 32017R0745 - EN - EUR-Lex. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32017R0745.

Persimune. Danish National Foundation for Research’s (DNRF) Centre of Excellence (COE) for Personalised Medicine of Infectious Complications in Immune Deficiency (PERSIMUNE). http://www.persimune.dk (2019).

Chen, T. & Guestrin, C. XGBoost: A Scalable Tree Boosting System. in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining - KDD ’16 785–794 (ACM Press, 2016). https://doi.org/10.1145/2939672.2939785.

Melville, P., Shah, N., Mihalkova, L. & Mooney, R. J. In Proc. of 5th International Workshop on Multiple Classifier Systems (MCS-2004), Experiments on ensembles with missing and noisy data. LNCS Vol. 3077, 293–302 (Springer Verlag, Italy, 2004).

Da Cunha-Bang, C. et al. The danish national chronic lymphocytic leukemia registry. Clin. Epidemiol. 8, 561–565 (2016).

Hallek, M. et al. iwCLL guidelines for diagnosis, indications for treatment, response assessment, and supportive management of CLL. Blood 131, 2745–2760 (2018).

Acknowledgements

CUN received research funding from the Alfred Benzon Foundation, Novo Nordisk Foundation and EU-funded CLL-CLUE for this study. DDM received funding from the Danish National Research Foundation (DNRF 126) for this study.

Author information

Authors and Affiliations

Contributions

C.N. and R.A. conceived the study; C.C.B., C.B.P. and C.N. provided data and reviewed the performance of the implemented version; J.D.L., B.S., D.D.M., H.L. and M.B.B. provided oversight and implementation of data paths from research to implementation, B.W., A.R.J., R.A. and C.N. performed the deployment and validation of the model into the EHR. C.N. and R.A. wrote the draft paper, and all authors contributed to the paper and reviewed the final version. C.N. and R.A. are responsible for direct access to the research data, while A.R.J. is responsible for direct access to the deployment script, all authors are accountable for the work as a whole.

Corresponding author

Ethics declarations

Competing interests

C.U.N. received research funding and/or consultancy fees from AstraZeneca, Janssen, AbbVie, Genmab, Beigene, Octapharma, C.S.L. Behring, Takeda, MSD and Lilly, Alfred Benzon Foundation, Novo Nordisk Foundation, EU-funded CLL-CLUE, and Danish Cancer Society, but declares no financial or non-financial competing interests. DDM received funding from the Danish National Research Foundation (DNRF 126), but declares no financial or non-financial competing interests. The remaining authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Agius, R., Riis-Jensen, A.C., Wimmer, B. et al. Deployment and validation of the CLL treatment infection model adjoined to an EHR system. npj Digit. Med. 7, 147 (2024). https://doi.org/10.1038/s41746-024-01132-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41746-024-01132-6

- Springer Nature Limited