Abstract

Defects within chip solder joints are usually inspected visually for defects using X-ray imaging to obtain images. The phenomenon of voids inside solder joints is one of the most likely types of defects in the soldering process, and accurate detection of voids becomes difficult due to their irregular shapes, varying sizes, and defocused edges. To address this problem, an X-ray void image segmentation algorithm based on improved PCB-DeepLabV3 is proposed. Firstly, to meet the demand for lightweight and easy deployment in industrial scenarios, mobilenetv2 is used as the feature extraction backbone network of the PCB-DeepLabV3 model; then, Attentional multi-scale two-space pyramid pooling network (AMTPNet) is designed to optimize the shallow feature edges and to improve the ability to capture detailed information; finally, image crop** and cleaning methods are designed to enhance the training dataset, and the improved PCB-DeepLabV3 is applied to the training dataset. The improved PCB-DeepLabV3 model is used to segment the void regions within the solder joints and compared with the classical semantic segmentation models such as Unet, SegNet, PSPNet, and DeeplabV3. The proposed new method enables the solder joint void inspection to get rid of the traditional way of visual inspection, realize intelligent upgrading, and effectively improve the problem of difficult segmentation of the target virtual edges, to obtain the inspection results with higher accuracy.

Similar content being viewed by others

Introduction

Thips are used in almost all logic electronic and electrical equipment. The soldering process perfectly combines the chip with the bare board to form functionally different integrated circuit boards1. The quality of the solder relates to the functional integrity of the entire board. Therefore, checking the quality of the solder is a key part of ensuring the quality and efficiency of IC production. Hidden defects inside the chip solder joints are one of the biggest hidden dangers of chip solder quality. With the development of non-destructive testing technology, the use of X-rays to irradiate the solder joints with radioactivity can effectively observe the morphology of the different structures inside the solder joints, which makes it possible to expose defects such as bridging, false soldering, and voids in the solder joints2. Solder joint cavity phenomenon is one of the most common hidden defects in solder hidden defects, If the cavity area is too large, or the number of cavities will seriously affect the quality of the solder, so it is very important to accurately detect the cavity.

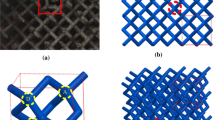

The use of X-ray technology to inspect welded joints for hidden defects has gained general acceptance, and some researchers have made outstanding contributions to the advancement of this field. For example, Gao et al.3, through the pre-processing of X-ray images such as Gaussian filtering, contrast stretching, etc., the use of the Canny operator for edge detection to locate the edge region of the weld joint cavity, and finally the use of digital image processing algorithms to extract the edges of the bubble region inside the weld ball, to achieve high-quality detection results, although by taking some pre-processing methods for X-ray images and at the same time, applying the classical Although some pre-processing methods are adopted for the X-ray image and the classical Canny operator is also used to segment the hard-to-segment cavity edges, the fuzzy zones of the cavity edges are of different widths and shapes, which are difficult to be segmented accurately by the traditional image processing methods. ** method to increase the training dataset while balancing the ratio of background and foreground training samples. The comparison image before and after image crop** is shown in Fig. 4.

Observing Fig. 4, it can be seen that, firstly, the edge-side non-chip-based X-ray images in the big picture are cropped out; then, the remaining parts are equally divided into 6 parts, and if there is no bubble pixel in the cropped image, it is discarded; finally, the ratio of bubble pixels to the cropped image is calculated, and the images with a ratio of less than 5% are discarded. After the above operations, 4128 trainable images are finally obtained.

Experimental setup and model training

This paper mentions that the training and evaluation of all the models were carried out on the open-source toolkit PyTorch via the Pycharm platform on a computer with a 12th Gen Intel® Core™ i5-12600KF 3.70 GHz CPU and 16 GB of installed RAM. They are using an NVIDIA GeForce RTX on Windows 10 3070 GPU (with 8 GB of RAM) to run all the semantic segmentation models involved in the experiments. After non-stop exploratory experiments to summarise the important hyperparameters in the semantic segmentation model, the model achieves stable and reliable performance when the model parameters are set as in Table 1.

During model training, the number of images processed at one time (Batch Size) is set to 4, the optimizer selects SGD and sets the initial learning rate to 0.007, the learning rate decreases by cosine "COS", and the weight decay rate is set to 0.0001, to avoid model overfitting. If the validation loss does not decrease for 3 epochs, the training is stopped. After many experiments, when the model is iterated for 100 generations, the loss value tends to converge successfully, so the number of training iterations is set to 100 to meet the requirements.

Experimental results and discussion

The experimental model and manufacturing engineering deployment schematic proposed in this paper are shown in Fig. 5.

Three phases of data acquisition, model training, and engineering deployment of the mature model are included in Fig. 5. In the data acquisition phase, it is done by an industrial X-ray collector; in the training phase, the PCB-DeepLabV3 model is trained by feeding the model with artificially labeled chip X-ray images until the model converges; in the engineering deployment phase, the successfully trained model is deployed in a chip manufacturing industrial scenario to complete the automated quality inspection.

To verify the importance of the dataset to the deep learning model, the original data and the cropped and cleaned data are input into the DeeplabV3 model respectively and are trained under the same experimental platform and settings respectively, after the training is completed, the two mature models are obtained and evaluated for their performance. Semantic segmentation is pixel-level classification, and its common evaluation metrics are three metrics such as mIOU (mean intersection over union), MPA (mean pixel accuracy), and CPA (Class Pixel Accuracy), where MPA and CPA correspond to the average accuracy in the classification model (mAP) and Precision (Precision) in the classification model, Recall indicator is not a commonly used indicator in semantic segmentation model, but in this paper, to illustrate the completeness of the empty pixel segmentation, so the Recall indicator is chosen, and the test results are shown in Fig. 6.

The bar chart in Fig. 6 shows that the model trained on the cropped and cleaned dataset substantially outperforms the model trained on the original dataset in the four metrics of mIOU, MPA, CPA, and Recall, which shows the importance of crop** and cleaning the original dataset for deep learning models. The principle of this is explained as follows: firstly, the sum of pixels of the six small images after crop** is smaller than that of the complete large image, and the non-chip-based X-ray image on the edge side of the large image is discarded, which is not useful for training the segmentation of foreground region and exacerbates the proportion of foreground and background samples in the training; however, the serious imbalance in the proportion of the samples makes it easier for the model to ignore the classes with a lower proportion and thus make the model invalid; then, the original dataset is cropped and cleaned to make the model more effective. Then, images with no foreground pixels after crop** and images with a lower proportion of foreground pixels are discarded to further reduce the foreground-to-background sample ratio, and a more balanced class-to-sample ratio helps the model learn the features in the foreground, which is important for the model to learn the semantics of segmenting the pixels in the empty region.

The cleaned LED chip X-ray dataset is input into the DeeplabV3 model and trained according to the experimental platform prepared in Sect. “Dataset expansion and cleaning”. After the training is completed, the nine LED chip X-ray images are tested, and the test results are shown in Fig. 7.

Observing Fig. 7, it can be seen that the DeeplabV3 model has a certain segmentation ability for 9 kinds of LED chip void defects. Due to the formation of fuzzy virtual edges between the voids and the soldered surface, and the low contrast between the FC-GB2 model chip voids and the base surface of the soldered joints, the above factors make it difficult to accurately segment the void defects of different LED chips. Through the actual testing of the DeeplabV3 model, it is found that most types of cavity segmentation are incomplete, especially in the difficult to segment FC-GB2 model chip cavity segmentation effect is not ideal, and model failure problems. By introducing the improved PCB-DeeplabV3 model, the model is trained in the same experimental environment and the same samples are used to test the model performance. The test results are shown in Fig. 8.

Figure 8 the only variable of the experiment is the semantic segmentation model, and observation of Fig. 8 reveals that the segmentation performance of the improved PCB-DeeplabV3 model is significantly improved, and the segmented void area region is larger than that segmented by the DeeplabV3 model for most of the chip types, and the void with smaller area is also detected, especially in the case of FC-GB2 model chip with low contrast, which can still be successfully segmented, indicating that the proposed AMTPNet network helps the DeeplabV3 model to improve the segmentation performance for hard-to-segment voxels and low-contrast pixels. The next experiment compares the performance of the improved PCB-DeeplabV3 model with DeeplabV3, U-net27, SegNet28, and PSPNet29, so that it is easier to choose the appropriate model for deployment in real engineering applications. In this part of the experiment, the VTF2 model chip is selected as the basic experimental object, and concerning the manually labeled images, the comparison of the segmentation performance of different models in the most critical void defect detail part is shown in Fig. 9.

In Fig. 9, when the detection model is VTF2, the area segmented by Deeplabv3, U-net, SegNet, PSPNet and the method in this paper is compared with the manually labelled area, and the changes in the detail part are observed to see the better detection capability of the PCB-DeeplabV3 detection results compared to the above models, which is mainly reflected in the increase in the segmented area and area Coherence, for example, Deeplabv3, U-net model lost a lot of empty defect areas, SegNet model appeared serious incoherence phenomenon, PSPNet model, although the detection of the bubble area greatly increased, but has exceeded the actual bubble defect area, segmentation of excessive, the method in this paper can basically accurately detect the bubble defects, and difficult to segmentation of the virtual edge of the spatial structure of a better spatial deconstruction ability, which also indicates that the model in this paper can handle better in ambiguous edge details and has better performance in resolving edge pixels.

To verify the performance impact of the channel attention mechanism (CAM), the two-space pyramid pooling network (TSP), and their fused network structure (AMTPNet) on the semantic segmentation model, the following ablation experiments provide a point-by-point comparison of the improved parts by the control variable method. This experiment uses the dataset enhanced by the crop** and cleaning method to train the model, using Deeplabv3 as the baseline model. Model a is the channel attention mechanism alone; model b is the two-space pyramid pooling network alone; and model c is the fusion network structure that considers both, and the results of the ablation experiments are shown in Table 2.

In this experiment, the void is used as a positive sample, and all the background except the void is used as a negative sample, separating the positive sample void from the negative sample background pixels, which is essentially a binary classification problem. Observing the mPA, mIOU, CPA, and Recall metrics data for models a, b, and c in Table 2, the addition of the CAM and TSP modules to the baseline model DeeplabV3 improves all metric values to a certain extent, indicates that the individual modules all play an active role in the model; when using the AMTPNet structure, the mIOU and mPA are once again improved, It shows that the channel attention mechanism (CAM) and the two-space pyramid pooling network (TSP) have good combinability and promote each other for better performance. For binary classification problems, especially when the model focuses on the party that is in the minority in the binary classification, the accuracy (mPA) loses its significance in judging, and the more commonly used evaluation metrics are the precision (CPA) and the recall (Recall). The mIOU, CPA, and Recall evaluation metrics in Table 2 are highly variable, and the CPA metrics improve more substantially when the baseline model is used with different models a, b, and c. This suggests that the Channel Attention Mechanism (CAM) and the Twin Spatial Pyramid Pooling Network (TSP) practically improve the accuracy of the model in segmenting pixels representing voids and that the combination of the two, the AMTPNet structure fusion performance is better, and the improvement of Recall metrics also proves the positive effect of models a, b, and c on the overall network performance improvement.

To verify that this paper's model also has advantages in the post-deployment inference process, this paper's method is compared with other major classical models, and two indexes, mIOU and FPS, are used as evaluation criteria. Where mIOU refers to the average of the intersection and merge ratio of each class in the dataset, and the intersection and merge ratio is the ratio of the intersection and merge of the true label and the predicted value of the class, the larger the ratio, the more accurate the segmentation is; FPS refers to the number of frames transmitted per second, which can measure the model inference speed, and the larger the value is, the higher the speed of the model inference is. Table 3 records the mIOU and FPS values for U-net, SegNet, PSPNet, DeeplabV3, and the method in this paper under the same experimental conditions.

As can be seen from Table 3, the method proposed in this study outperforms other methods in both mIOU and FPS metrics. Among them, the average intersection and merger ratio reaches 81.69%, and the model inference speed reaches 78.81 FPS, which indicates that the AMTPNet network improves the accuracy of model segmentation, especially on the fuzzy edge structure that is more difficult to segment, and at the same time, the backbone feature extraction part adopts the lightweight MobileNetV2 network, which accelerates the model inference speed and improves the performance of the model for practical deployment applications.

Conclusion

In this study, based on the Deeplabv3 network model, MobileNetV2 is introduced to optimize the network parameters so that the model is reduced in size and easy to deploy, and based on this, attentional multi-scale two-space pyramid pooling network (AMTPNet) is embedded into the low-level features, which facilitates the fusion of the edge details and extraction of the model. In the process of model training, the data set is enlarged and the proportion of positive and negative samples is reduced by crop** and cleaning the original data so that the model can be adequately trained in a small data set while eliminating the negative impact of the imbalance of the proportion of positive and negative samples. Through the above series of experiments and analyses, and comparing the improved PCB-Deeplabv3 with the current excellent U-net, SegNet, PSPNet, and DeeplabV3 classical semantic segmentation models, the following conclusions can be drawn:

-

(a)

When training the deep learning model, the image crop** and cleaning method designed in this paper greatly enhances the training dataset so that the model is fully trained to fit the target successfully, and there is a substantial improvement in all 4 metrics of mIOU, MPA, CPA, and Recall.

-

(b)

The AMTPNet network proposed in this study has a better effect on the extraction of detailed information that is difficult to segment at the edge, has a better generalization ability in identifying X-ray images of different chip models, and successfully detects void defects.

-

(c)

The PCB- Deeplabv3 model proposed in this paper has better segmentation performance compared with Unet, SegNet, PSPNet, DeeplabV3 model, especially in the blurred edges and low-contrast LED chip X-ray void defect images, the PCB- Deeplabv3 model proposed in this paper has better performance, and reaches 81.69% average accuracy in the mIOU and FPS indexes reaching 81.69% average accuracy and 78.81 frame rate, respectively, surpassing the performance of other classical models.

Data availability

The datasets generated during and/or analyzed during the current study are available in the [X-ray-Void-Defect-Detection-in-Chip-Solder-Joints-Based-on-PCB-DeepLabV3-Algorithm] repository, [https://github.com/jackong180/X-ray-Void-Defect-Detection-in-Chip-Solder-Joints-Based-on-PCB-DeepLabV3-Algorithm]. The datasets generated and/or analyzed during the current study are available in the [Reliability of High-Power LEDs and Solder Pastes] repository, [https://www.kaggle.com/datasets/andreaszippelius/hellastudy-of-leds2].

References

Kotadia, H. R., Howes, P. D. & Mannan, S. H. A review: On the development of low melting temperature Pb-free solders. Microelectron. Reliab. 54, 1253–1273. https://doi.org/10.1016/j.microrel.2014.02.025 (2014).

Kaira, C. S., Mayer, C. R., De Andrade, V., De Carlo, F. & Chawla, N. Nanoscale three-dimensional microstructural characterization of an sn-rich solder alloy using high-resolution transmission X-ray microscopy (TXM). Microsc. Microanal. 22, 808–813. https://doi.org/10.1017/s1431927616011429 (2016).

Gao, H., Chu, F., Wan, Y. & Liu, J. Arbitrarily shaped bubble extraction method for components based on X-ray inspection. Semicond. Technol. 37, 815–818 (2012).

**ao, K., Li, Q. & Chen, Y. Automatic detection method for BGA defects based on x-ray imaging. Appl. Opt. 61, 6356–6365 (2022).

Zhang, Q. et al. Deep learning based solder joint defect detection on industrial printed circuit board X-ray images. Complex Intell. Syst. 8, 1525–1537. https://doi.org/10.1007/s40747-021-00600-w (2022).

Li, L. et al. An adaptive false-color enhancement algorithm for super-8-bit high grayscale X-ray defect image of solid rocket engine shell. Mech. Syst. Signal Process. https://doi.org/10.1016/j.ymssp.2022.109398 (2022).

**ao, P. et al. Adaptive hybrid framework for multiscale void inspection of chip resistor solder joints. IEEE Trans. Instrum. Meas https://doi.org/10.1109/tim.2023.3235435 (2023).

Ulger, F., Yuksel, S. E., Yilmaz, A. & Gokcen, D. Solder joint inspection on printed circuit boards: A survey and a dataset. IEEE Trans. Instrum. Meas. https://doi.org/10.1109/TIM.2023.3277935 (2023).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 4510–4520.

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K. & Yuille, A. L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 40, 834–848. https://doi.org/10.1109/tpami.2017.2699184 (2018).

Cai, N. et al. SMT solder joint inspection via a novel cascaded convolutional neural network. IEEE Trans. Compon. Packaging Manuf. Technol. 8, 670–677. https://doi.org/10.1109/tcpmt.2018.2789453 (2018).

Holler, M. et al. High-resolution non-destructive three-dimensional imaging of integrated circuits. Nature 543, 402. https://doi.org/10.1038/nature21698 (2017).

Jiang, Z. et al. AXI-ICRT: Towards a real-time AXI-interconnect for highly integrated SoCs. IEEE Trans. Comput. 72, 786–799. https://doi.org/10.1109/tc.2022.3179227 (2023).

Schmid, M., Zippelius, A., Hanss, A., Boeckhorst, S. & Elger, G. Investigations on high-power LEDs and solder interconnects in automotive application: Part I-initial characterization. IEEE Trans. Device Mater. Reliab. 22, 175–186. https://doi.org/10.1109/tdmr.2022.3152590 (2022).

Cui, Z. et al. ECANet: Enhanced context aggregation network for single image dehazing. Signal Image Video Process. 17, 471–479. https://doi.org/10.1007/s11760-022-02252-w (2023).

Zhang, T. & Zhang, X. Squeeze-and-excitation laplacian pyramid network with dual-polarization feature fusion for ship classification in SAR Images. IEEE Geosci. Remote Sens. Lett. https://doi.org/10.1109/lgrs.2021.3119875 (2022).

Purkait, P., Zhao, C. & Zach, C. SPP-Net: Deep absolute pose regression with synthetic views. Preprint at https://ar**v.org/1712.03452 (2017).

Ma, J., Dai, Y. & Tan, Y.-P. Atrous convolutions spatial pyramid network for crowd counting and density estimation. Neurocomputing 350, 91–101. https://doi.org/10.1016/j.neucom.2019.03.065 (2019).

Chollet, F. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 1251–1258.

Iandola, F. N. et al. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and< 0.5 MB model size. Preprint at https://ar**v.org/1602.07360 (2016).

Zhang, X., Zhou, X., Lin, M. & Sun, J. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 6848–6856.

Qin, X. & Wang, Z. Nasnet: A neuron attention stage-by-stage net for single image deraining. Preprint at https://ar**v.org/1912.03151 (2019).

Tan, M. et al. In Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2820–2828.

Chen, H.-Y. & Su, C.-Y. In 2018 9th International Conference on Awareness Science and Technology (iCAST), 308–312 (IEEE).

Yurtkulu, S. C., Şahin, Y. H. & Unal, G. In 2019 27th Signal Processing and Communications Applications Conference (SIU), 1–4 (IEEE).

Liu, R. et al. RAANet: A residual ASPP with attention framework for semantic segmentation of high-resolution remote sensing images. Remote Sens. 14, 3109 (2022).

Ronneberger, O., Fischer, P. & Brox, T. In Medical image computing and computer-assisted intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, Proceedings, Part III 18, 234–241 (Springer, 2015).

Badrinarayanan, V., Kendall, A. & Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 2481–2495 (2017).

Zhou, J., Hao, M., Zhang, D., Zou, P. & Zhang, W. Fusion PSPnet image segmentation based method for multi-focus image fusion. IEEE Photonics J. 11, 1–12 (2019).

Acknowledgements

Thank you very much for the two major funding sources provided by **nyu Hu, namely the National Natural Science Foundation of China (No. 61976083); And Hubei Province Key R&D Program of China (No. 2022BBA0016).

Author information

Authors and Affiliations

Contributions

DK and XH completed the work and contributed to the writing of the manuscript. ZG conceived the project and provided the research methodology. DZ conducted the survey and data management for the project. All authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kong, D., Hu, X., Gong, Z. et al. Segmentation of void defects in X-ray images of chip solder joints based on PCB-DeepLabV3 algorithm. Sci Rep 14, 11925 (2024). https://doi.org/10.1038/s41598-024-61643-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-61643-w

- Springer Nature Limited