Abstract

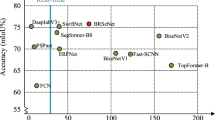

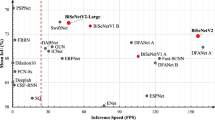

Semantic segmentation of nighttime images is challenging because of poor illumination and low contrast. Many segmentation models with large parameter counts have been proposed to improve accuracy, but they cannot run in real time. To address both problems, we propose a real-time edge-guided bilateral network (EGBNet) for nighttime semantic segmentation. To cope with the blurred details and low contrast of nighttime images, we propose a lightweight multi-dilation dense aggregation module and introduce an efficient edge head, which together improve the network's ability to distinguish target features from the nighttime background. In addition, we propose a self-adaptive feature fusion module for the bilateral segmentation network, which enhances feature representation and generalization by making full use of multi-scale feature maps. To extract more useful information from the limited supply of nighttime images, we further apply a knowledge distillation strategy to improve segmentation performance. Extensive experiments on the ACDC and BDD datasets show that EGBNet achieves a satisfactory trade-off between segmentation accuracy and inference speed. Specifically, EGBNet achieves 55.56% mIoU on the ACDC test set with 9.4M parameters and runs at 60 FPS on a \(1080\times 1920\) input image on a single NVIDIA 2080Ti.
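The accuracy figure above is reported as mean intersection-over-union (mIoU). As a minimal sketch of how this metric is commonly computed, and not the authors' evaluation code, the snippet below builds a confusion matrix from flattened label lists and averages the per-class IoU. The function names, the toy labels, and the ignore value of 255 (a common convention for unlabeled pixels in driving datasets) are assumptions made for illustration.

```python
from typing import List, Sequence


def confusion_matrix(pred: Sequence[int], target: Sequence[int],
                     num_classes: int, ignore_index: int = 255) -> List[List[int]]:
    """Count pixels per (ground-truth class, predicted class) pair.

    ignore_index=255 is an assumed convention for unlabeled pixels.
    """
    cm = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, target):
        if t == ignore_index:
            continue  # unlabeled pixels do not contribute to the metric
        cm[t][p] += 1
    return cm


def mean_iou(cm: List[List[int]]) -> float:
    """Average IoU = TP / (TP + FP + FN) over classes that occur at all."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # class c pixels predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp   # other pixels predicted as class c
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both prediction and ground truth
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with three classes; the last pixel is unlabeled and ignored.
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 255]
cm = confusion_matrix(pred, target, num_classes=3)
score = mean_iou(cm)
```

In the toy example the per-class IoUs are 1/2, 2/3, and 1, so the mean is 13/18. Real evaluations accumulate the confusion matrix over the whole test set before taking the per-class ratios, rather than averaging per-image scores.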

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 62171315) and the Tianjin Research Innovation Project for Postgraduate Students (No. 2021YJSB153).

Author information

Contributions

GA, YW, and YA performed the data collection and analysis. GA completed the experiments and wrote the first draft. JG oversaw and led the research activities and contributed the required computational resources. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests as defined by Springer, or other interests that might be perceived to influence the results and/or discussion reported in this paper.

About this article

Cite this article

An, G., Guo, J., Wang, Y. et al. EGBNet: a real-time edge-guided bilateral network for nighttime semantic segmentation. SIViP 17, 3173–3181 (2023). https://doi.org/10.1007/s11760-023-02539-6

DOI: https://doi.org/10.1007/s11760-023-02539-6