Abstract

Recently, owing to the requirements of inference speed, most real-time semantic segmentation networks often have shallow network depth, which limits the receptive field size of the model, leading to the limited acquisition of semantic information and resulting in intraclass inconsistency and ultimately a decrease in segmentation accuracy. Additionally, the shallow network depth also restricts the feature extraction capability of the network, reducing its robustness and ability to adapt to complex scenes. To address these issues, a bilateral network with a rich semantic extractor (RSE) for real-time semantic segmentation (BRSeNet) is presented to perform real-time semantic segmentation. First, to solve the problem of insufficient semantic feature information extraction, an RSE is proposed, which includes a multiscale global semantic extraction module (MGSEM) and a semantic fusion module (SFM). The MGSEM can extract rich global semantics and expand the effective receptive field. Simultaneously, the SFM efficiently integrates multiscale local semantics with multiscale global semantics, resulting in more comprehensive semantic information for the network. Finally, based on the characteristics of detail and semantic branches, a bilateral reconstruction aggregation module is designed to reconstruct the contextual information of detail features, model the interdependencies on semantic feature channels, and enhance feature representation. Comprehensive experiments on the challenging Cityscapes and ADE20K datasets are conducted. The experimental results show that the proposed BRSeNet achieves mean intersection over union of 74.9% and 35.7% at inference speeds of 74 and 65 frames per second, respectively, and ensures a favorable balance between segmentation accuracy and inference speed.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Semantic segmentation is a classical problem in computer vision that aims to assign pixel-level labels to images. A fully convolutional network (FCN) [1] first accomplished the semantic segmentation task in a fully convolutional manner with VGG [2] as the backbone network, and most subsequent studies have been based on its improvements. In the past few decades, owing to the excellent performance of the deep convolutional neural network (DCNN), many semantic segmentation methods [3,4,5] have been proposed. To achieve a significant improvement in segmentation accuracy, complex backbone networks (e.g., Xception [6] and ResNet [7]) are adopted to capture high-level contextual semantics. However, these networks are usually computationally intensive and slow in inference. For some special fields, such as autonomous driving, video surveillance, and human–computer interaction, the inference speed of semantic segmentation cannot meet the requirements of these applications.

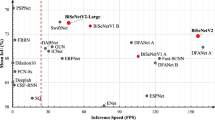

To meet the requirements of inference speed, many real-time semantic segmentation networks are designed with lightweight classification networks (e.g., mobilenet [8] and shufflenet [9]) to achieve low latency and good segmentation accuracy. In addition, some methods are used to construct special network architectures. For example, the Image Cascade Network (ICNet) [10] proposed a three-level cascade architecture that balances segmentation accuracy and efficiency by leveraging multiresolution processing. BiSeNet [11] divides the network into two branches, capable of extracting deep semantics while preserving detailed information, and introduces an attention mechanism to further enhance network robustness. BiSeNetv2 [12] also employs a two-stage structure and simultaneously utilizes attention and self-attention mechanisms to improve the perception of important features and spatial structures, thereby enhancing the quality of segmentation results and the preservation of details. Figure 1 shows the accuracy [mean intersection over union (mIoU)] and inference speed [frames per second (FPS)] achieved by several state-of-the-art semantic segmentation methods on the Cityscapes validation set. These networks have been proven to be excellent real-time segmentation networks. However, due to the limitation of parameters, the network layer of the real-time semantic segmentation network is shallow and the receptive field is small, resulting in insufficient feature extraction ability. This deficiency mainly includes two aspects: (1) The number of network layers is shallow, which leads to a small receptive field for feature extraction. When segmenting large objects, there are certain differences in the features corresponding to pixels of the same label, and these differences introduce intraclass inconsistency, resulting in a decrease in accuracy. (2) The scale of the extracted feature information is single, and there are multiple scales of segmentation targets in the image segmentation process. When a single scale is used for pixel-level classification, the robustness of the network will be reduced.

To effectively expand the receptive field of the network, DeepLabv3 [13] and PSPNet [3] utilize dilated convolutions without increasing computational costs, leading to improved accuracy of the segmentation network. However, the selection of dilation rates poses a challenge, and it can also introduce grid artifacts. Additionally, accurately capturing multiscale object information while maintaining the fast inference speed of the network is also a significant challenge. Previous approaches have attempted to address this problem in various ways. Chen et al. [14] have resized the input image to multiple ratios to extract semantic information at various scales. DeepLabv3 [13] introduced a pyramid pooling module to complete the fusion of multiscale features. DeepLabv3+ [15] aggregated contextual information at multiple scales based on the pyramid structure. EncNet [16] proposed a context encoding module to capture global context information and segment multiscale objects. These methods can extract certain multiscale information, but they typically incur significant computational costs.

To solve the problems of a real-time semantic segmentation network with a small receptive field that leads to intraclass inconsistency, a single semantic feature scale, and poor feature extraction, a bilateral network with a rich semantic extractor (RSE) for real-time semantic segmentation (BRSeNet) based on Bisenetv2 [12] is proposed in this study. Specifically, the local semantics from the three layers of the semantic branch of BiSeNetv2, referred to as the Gather-and-Expansion Layer (GE Layer), are used as input to the Multiscale Global Semantic Extraction Module (MGSEM), which leverages the excellent global feature capturing ability of Transformers to extensively aggregate contextual information across the entire receptive field. Simultaneously, a Semantic Fusion Module (SFM) is introduced to alleviate the receptive field differences between the multiscale global semantics from MGSEM and the multiscale local features from the three layers of the GE, allowing efficient fusion between the two. Additionally, to fully exploit the advantages of rich semantics, the Bilateral Reconstruction Aggregation Module (BRAM) is introduced to replace the Bilateral Guided Aggregation (BGA), enhancing the semantic feature representation while modeling the interdependencies among feature channels. The main contributions of this study are as follows.

-

1.

We propose an RSE that includes two parts: a multiscale global semantic extraction module (MGSEM) and a semantic fusion module (SFM). MGSEM is designed to extract multiscale global semantics, obtaining the receptive field of the entire image and addressing the issue of significantly poor feature extraction ability in lightweight backbone networks. Simultaneously, SFM is introduced, effectively reducing the gap between different receptive fields of semantic information. As a result, the issue of semantic interference between global and local semantics is alleviated, allowing for appropriate aggregation of this semantic information.

-

2.

BRAM is used to extract contextual information from detailed features, model interdependencies between feature channels in semantic branches, and enhance the feature representation ability of semantic information. Simultaneously, the feature maps from the detail and semantic branches are appropriately aggregated.

-

3.

Numerous experiments are conducted to verify the effectiveness of our method. BRSeNet achieves 75.6% mIoU on the Cityscapes validation set, 74.9% mIoU on the Cityscapes test set, and 35.7% mIoU on the ADE20K validation set.

Related works

Recently, semantic segmentation has rapidly developed with the support of various technologies. In this section, we mainly discuss the three most relevant aspects of this study: semantic segmentation, real-time semantic segmentation, and vision transformer.

Semantic segmentation

Recently, with the successful application of convolutional neural networks (CNNs) in the fields of artificial intelligence and computer vision, the FCN [1] achieved end-to-end training for dense prediction and semantic segmentation in a fully convolutional manner. Subsequently, a series of research methods were proposed to improve the accuracy of the model. U-Net [17] combines a "U-shaped structure" and skip connections, enabling the network to simultaneously focus on high-level semantic information and low-level fine-grained information, enhancing information detail extraction and improving the segmentation performance of the model. Based on an architecture similar to U-Net, SegNet [18] yielded a symmetric decoder structure and introduced a max pooling index to reduce the number of parameters while improving the semantic segmentation accuracy. Chen et al. [19] combined convolutional neural networks (CNNs) and Conditional Random Field (CRF) to enhance their ability to capture feature map details, and Conditional Random Fields (CRF) post-processing can smooth the segmentation results and further improve segmentation accuracy. DeepLabV3+ [15] was built based on the method used in [19] by further expanding the receptive field with dilated convolution at various rates to obtain more contextual information and capture finer boundary information. PSPNet [3] proposed pyramid pooling to aggregate contextual information from various regions, thereby improving its ability to obtain global information. Tao et al. [20] utilize feature extractors at different scales to capture image features at different scales, and combined predictions at various scales to further improve the results of the dense predictions.

Moreover, all the above methods can improve the semantic segmentation accuracy and enhance the performance of the network by adding additional modules. However, the significant computational cost increases, and the network inference speed is sacrificed.

Real-time semantic segmentation

The practical application of real-time semantic segmentation is rapidly growing with the continuous development of mobile devices, and speed has become an important metric for evaluating semantic segmentation. In previous studies, Han et al. [21] and Chen et al. [22] accelerated dense prediction by pruning unimportant parameters and reducing the number of channels, respectively. The MobileNet [8, 23, 24] series was used to propose lightweight network architectures to reduce the computational load of the network and improve the speed of dense predictions. Efficientnet [25] was introduced a new and effective composite network scaling method with a fixed set of scaling coefficients to simultaneously scale the width, depth, and resolution of the network, resulting in a reduction in the number of network parameters and an improvement in the accuracy gain. The ICNet [10] cascaded network adopts a fast-upsampling module with separable convolutions, which can effectively improve the network’s inference speed and achieve real-time semantic segmentation. LPS-Net [26] was utilized to design a multipath network architecture based on a low-latency mechanism, by gradually expanding the small network to a larger one through dimensionality extension (number of convolution blocks, number of channels, or input resolution), the balance between segmentation accuracy and inference speed is achieved. To construct a lightweight network architecture, BiSeNet [11] proposed a novel bilateral network with a high-resolution spatial path and a low-resolution contextual path, which can simultaneously focus on both detail information and semantic information. STDC [27] was employed to improve the two-branch network of BiSeNet to reduce the amount of network computation required while extracting the low-level detailed features, thereby enabling real-time semantic segmentation. In general, most real-time semantic segmentation models have limited feature extraction capabilities because of their inference speed limitations. Additionally, having a single semantic scale also affects the overall network performance.

Vision transformer

Owing to the successful use of transformers in natural language processing (NLP), numerous studies have attempted to introduce transformers into the field of computer vision, by leveraging the ability of transformers to extract global semantic information, the accuracy of semantic segmentation can be further improved. For example, ViT [28] was utilized for the first time to introduce transformer architecture to the image domain and to apply the pure transformer structure directly to image patch sequences, which is a good solution to the task of image classification. A pyramid vision transformer (PVT) [29] was used to propose a shrinking pyramid for reducing the sequence length of the transformer as the depth of the network continues to increase, simultaneously utilizing the transformer and pyramid reduction structure, which significantly reduces the computational cost. A swin-transformer [30] was used to introduce the concept of multiscale and to design a moving window to address the discrepancy between moving the transformer from the NLP domain to the CV domain, this approach is capable of effectively handling large-scale images, modeling global contextual relationships, and demonstrating good adaptability and performance. Because the spatial attention mechanism is important for dense prediction, twins [31] were employed to combine the spatial attention mechanism with the transformer to design a more effective model and enhance the accuracy of semantic segmentation. CVT [32] was applied to consider the importance of the local spatial context for image segmentation and to introduce convolution into ViT to improve the efficiency and performance of segmentation. Segformer [33] was utilized to design a new hierarchically structured transformer encoder to address segmentation performance degradation and to propose a lightweight multilayer perceptron (MLP) decoder to further balance the accuracy and inference speed of the network.

Method

Overview

To improve the semantic extraction capability of a real-time semantic segmentation network, BRSeNet is introduced based on BiSeNetV2 in this study. The network structure of the BRSeNet is shown in Fig. 2. First, the Rich Semantic Extractor (RSE) is introduced to further extract rich semantic information, which consists of two parts: the Multiscale Global Semantic Extraction Module (MGSEM) and the Semantic Fusion Module (SFM). The MGSEM module incorporates Transformer to model global contextual relationships. It downsamples the feature maps from the GE layer of the semantic branch in BiSeNetV2 to 1/64 and performs channel-wise fusion, completing the multiscale local semantic aggregation and serving as the input to MGSEM. Using the MGSEM module, multiscale global semantic information can be extracted, and the receptive field of the entire image can be obtained. The SFM module combines the multiscale global semantic information extracted by MGSEM with the multiscale local semantic information extracted by the GE layer, effectively aggregating rich multiscale semantic features. It mitigates the gap between global and local semantic information with different receptive fields to improve the accuracy of semantic segmentation. Bilateral Reconstruction Aggregation Module (BRAM) has also been designed, consisting of two parts: the Spatial Relationship Modeling (SRM) module, which models the contextual relationships of local features, and the Channel Reconstruction Module (CRM), which models the relationships between feature channels. Using self-attention mechanisms in SRM and CRM, the module can further enhance detailed information and enrich semantic information. Additionally, BRAM effectively aggregates the detailed branch and the semantic branch, further improving the accuracy of the segmentation network.

Table 1 shows the structure of BRSeNet. Opr represents operations such as conv, stem block, GE layer, downsampled, and MGSEM. C denotes the number of channels, S denotes the convolution step, and R denotes the number of operation repetitions.

For input images of size 3 \(\times \) 512 \(\times \) 1024, the Detail branch and Semantic branch are processed separately. In Detail branch, the image successively goes through three stages of convolution module, each convolution module includes two convolution layers, and one convolution with a step size of 2 is responsible for downsampling. Finally, the output feature map with a size of 128 \(\times \) 64 \(\times \) 128 is obtained. In Semantic branch, the image first passes through Stem block to obtain feature maps of size 16 \(\times \) 128 \(\times \) 256, and then passes through three stages of GE layer to generate feature maps of size 128 \(\times \) 16 \(\times \) 32. Then, the output of the three-stage GE layer is concatenated and downsampled as the input of RSE, and finally, the rich semantic feature map is obtained by RSE. The outputs of the two branches are aggregated together by BRAM and then upsampled \(\times \)8 to obtain the segmentation result.

RSE

The RSE mainly comprises MGSEM and SFM, as shown in Fig. 2. MGSEM takes the local semantic information from different stages of the GE layer as inputs and generates the multiscale global semantics. SFM alleviates the receptive field gap between multiscale local semantics and multiscale global semantics and efficiently fuses local semantics and global semantics. The RSE enables our network to adapt to segmentation tasks for objects of different sizes. In “MGSEM” and “SFM” sections, we introduce MGSEM and SFM, respectively.

MGSEM

Because the layers of the semantic branches are shallow, the receptive field of BiSeNetV2 is small, and the semantic extraction ability is insufficient. To expand the effective receptive field and extract multiscale global semantics effectively, an MGSEM based on the visual transformer is designed. The Vision Transformer introduced in the MGSEM module utilizes self-attention mechanisms to model global contextual relationships, allowing features from different positions to influence each other. It downsamples the feature maps from the GE layer of the semantic branch in BiSeNetV2 to 1/64 and performs channel-wise fusion, completing the multiscale local semantic aggregation and serving as the input to MGSEM. By utilizing this module, multiscale global semantic information can be extracted, and the receptive field of the entire image can be obtained. This approach addresses issues such as intraclass inconsistency caused by a small network receptive field, and limited feature scale in real-time semantic segmentation. As shown in Fig. 3a, MGSEM is stacked with 6-layer vision transformer blocks.

The transformer blocks in ViT comprise a layernorm, multihead self-attention, MLP, and residual connection. In multihead self-attention, a linear map is used to generate Q, K, and V, which causes the network to perform multiple flattening operations and affects its inference speed. To speedup the network, a multihead self-attention similar to LeViT [34] is adopted to replace the linear map with a convolutional map and reduce the head dimensions of Q and K. Moreover, inspired by TopFormer [35], we removed the normalization layer. The multihead self-attention in our model is shown in Fig. 3b.

In particular, the input feature map \(F^{C\times H\times W}\) undergoes three 1 \(\times \) 1 convolutions to generate Q, K, and V, as shown in Eq. (1)

where \(F^{C\times H\times W}\) represents the input feature map, \(f{q}^{1\times 1}\), \(f{k}^{1\times 1}\), and \(f{v}^{1\times 1}\) represent three 1 \(\times \) 1 convolutions, and bn represents batch normalization.

Second, we reshape Q, K, and V, respectively, as shown in Eq. (2)

where \(N = H\times W\), \(M = D\times S\), S denotes the number of heads, and D denotes the head dimension of K and Q. We follow the settings of LeViT, where \(D = 16\).

Finally, the multihead self-attention is calculated as shown in Eq. (3)

In the feed-forward network, we follow the practice of most vision transformers, as shown in Fig. 3c. A 3 \(\times \) 3 depth-wise convolution is inserted between two 1 \(\times \) 1 convolutional layers to enhance the local connections of the vision transformer. The feed-forward network can be formulated as follows:

where \(F_{input}\) and \(F_{output}\) represent the input and output feature maps, respectively, \(dwf^{3\times 3}\) represents the 3 \(\times \) 3 depth-wise convolutional layer, \(f_{a}^{1\times 1}\) and \(f_{b}^{1\times 1}\) represent two 1 \(\times \) 1 convolutional layer, and relu represents the relu activation function.

Therefore, in this case, the feature maps from different stages of the GE layer are concatenated and then downsampled to 1/64 of the original image as the input of MGSEM. Multiscale global semantic information can be obtained through 6-layer vision transformer blocks, so the network has a receptive field of the entire image. RSE is designed using transformers, which can significantly improve the segmentation effect with a small computational cost, and is also better than most networks in terms of inference speed.

SFM

To obtain rich semantics and further improve the effectiveness and robustness of the network, it is necessary to integrate multiscale global semantics with multiscale local semantics efficiently. The former is extracted using MGSEM, and the latter is retained by the GE layer. Simple aggregation operations such as element-wise addition or channel-wise concatenation are not optimal for combining these semantic information sources, because there is a gap in the receptive field between the DCNN and vision transformer. These operations may lead to semantic interference, preventing the network from accurately focusing on objects at different scales and resulting in decreased accuracy. Therefore, the introduction of SFM is aimed at mitigating the semantic information gap between local semantics and global semantics, thereby further improving the segmentation accuracy of the network. The structure of the SFM is shown in Fig. 4.

Local semantics \(F_{l}\) from the GE layer and global semantics \(F_{g}\) from MGSEM are used as the input for the SFM. The local semantics \(F_{l}\) passed through a 3 \(\times \) 3 convolutional layer, followed by batch normalization to produce L. Global semantic \(F_{g}\) passed through a 1 \(\times \) 1 convolution layer, a batch normalization layer, a sigmoid activation function, and then upsampling to generate semantic weights g. Global semantics \(F_{g}\) also passed through a 1 \(\times \) 1 convolutional layer, a batch normalization, and upsampling to produce G. Then, the local semantics L is guided by the semantic weights g to alleviate the semantic gaps. Finally, L and G are fused by element-wise addition. The SFM can be formulated as follows:

where \(F_{l}\) denotes local semantics, \(F_{g}\) denotes global semantics, bn denotes batch normalization, up denotes upsampling, sigmoid denotes Sigmoid activation function, and \(f_{L}^{1\times 1}\), \(f_{G}^{1\times 1}\), and \(f_{g}^{1\times 1}\) denote three 1 \(\times \) 1 convolutional layers.

We introduced RSE to extract multiscale global semantics, and fuse multiscale global semantics and multiscale local semantics efficiently to make the network obtain richer semantic information. On the one hand, we concatenate the outputs of GE layer at different stages to preserve the multiscale local semantics. On the other hand, MGSEM takes the multiscale local semantics as input, and produces the multiscale global semantics with the whole image receptive field. At the same time, SFM is introduced to integrate multiscale local semantics and multiscale global semantics simply efficiently. Therefore, BRSeNet takes into account both local semantics and global semantics at different scales, and has rich semantic information.

BRAM

The feature information of the semantic and detail branches is complementary. The bilateral guided aggregation in BiSeNetV2 extracts the contextual information of the semantic branch to guide the spatial branch and fuses the feature information of the two branches simply and effectively. In the case of BRSeNet, richer contextual information is included in the feature map after the introduction of RSE into the semantic branch. Inspired by SENet [36, 37], BRAM is introduced to address the two branches, which comprise two modules. For the feature maps from the detail branch, the space reconstruction module (SRM) is used to model the context relationship for the local detail features to enhance detail information. For feature maps from the semantic branch, the channel reconstruction module (CRM) is adequately adopted to model the relationship between feature channels, emphasize interdependent feature information, improve the feature representation of semantics, and take advantage of rich semantics. By utilizing BRAM, the extraction of both detailed information and semantic information is further enhanced, resulting in improved accuracy of the network.

The structure of the BRAM is shown in Fig. 5. For the feature maps from the detail branch, the feature representation is enhanced using a 3 \(\times \) 3 depth-wise separable convolution. The feature map \(D\in R^{C\times H\times W}\) is obtained, and then, D is input into the SRM to model the context relationship. For the feature maps from the semantic branch, the feature map \(S\in R^{C\times \frac{H}{4}\times \frac{W}{4}}\) is generated with a convolutional layer first. Then, S is fed into the CRM to generate the feature map \(M\in R^{C\times \frac{H}{4}\times \frac{W}{4}}\), which exhibits interdependence between channels. M is subsequently upsampled 4 times to obtain the same resolution as D. Subsequently, the sigmoid activation function is applied to generate the semantic weights, which are multiplied by the feature maps from the detail branch to complete the guidance of the contextual information. Finally, a shortcut connection and a 3 \(\times \) 3 convolutional layer are used to complete the aggregation of the two branches.

For SRM, the input feature map D is first passed through three 1 \(\times \) 1 convolutional layers and reshaped to obtain \(D1,D2,D3\in R^{C\times N}\) (here, \(N=\frac{H}{4}\times \frac{W}{4}\)). Second, the operation of matrix multiplication of \(D1^{T}\) with D2 is performed, and the softmax function is applied to generate channel attention \(E\in R^{N\times N}\). Afterward, the matrix multiplication of E with D3 is performed, and the result is reshaped as \(R^{C\times H\times W}\). Finally, a shortcut connection is added.

For CRM, three reshape operations are performed on S to obtain \(S1,S2,S3\in R^{C\times N}\) based on DANet [38]. Second, the matrix multiplication of S1 with \(S2^{T}\) is performed, and the softmax function is applied to generate channel attention \(C\in R^{C\times C}\). Then, the matrix multiplication of C with S3 is performed, and the result is reshaped as \(R^{C\times \frac{H}{4}\times \frac{W}{4}}\). Finally, a shortcut connection is added.

Experiments

In this section, we first introduce the dataset, implementation details, and evaluation metrics. Next, we present ablation experiments conducted on the Cityscapes validation set for each part of the BRSeNet. Finally, the accuracy and inference speed of BRSeNet are compared with those of other methods on Cityscapes [39] and ADE20K [40], respectively.

Datasets

To facilitate a comparison with other real-time semantic segmentation methods, we validate our model on the Cityscape, a semantic scene dataset. It contains 5000 images and is divided into training, validation, and test sets with 2975, 500, and 1525 images, respectively. The semantic segmentation task contains 19 classes, and the resolution of the images is 2048 \(\times \) 1024. To further illustrate the effectiveness of our model, we validate the model on ADE20K, which has more complex types and scenes. The ADE20K dataset contains 25K images in total, covering 150 classes. All images were segmented into 20K/2K/3K for training, validation, and testing.

Implementation details

Our implementation is executed based on Pytorch and MMSegmentation [41]. Instead of pretraining on the semantic branches, we train our model using stochastic gradient descent (SGD) [41]. With a momentum of 0.9, the weight decay is 0.0005. The initial rate is set to 0.04 with a “poly” learning rate, and a factor of 1.0 is used. For each dataset, we use a 160K scheduler with a batch size of 16. We applied data augmentation through random resize with a ratio in the range of 0.5\(-\)2.0, random horizontal flip**, and random crop** to 512 \(\times \) 1024 and 769 \(\times \) 769 for Cityscape and 512 \(\times \) 512 for ADE20K. The same model is trained five times and the standard deviation is calculated. Concurrently, we do not use online hard example mining (OHEM) [42], such as BiSeNetV2.

In the inference section, for the 2048 \(\times \) 1024 resolution input, we first resize it to 1024 \(\times \) 512 resolution for inference and then resize the prediction to the original size of the input. We measure the inference time using a GPU card and repeat 5000 iterations to eliminate error fluctuations. We conduct experiments based on PyTorch 1.10. The measurement of inference time is executed on an NVIDIA GeForce GTX 1080Ti with CUDA 11.3 and cuDNN 8.2.

Evaluation metrics

To evaluate the performance and efficiency of the proposed network (single-scale test), we adopt the number of parameters (Params) and floating-point operations (FLOPs), which measure the memory consumption and computational complexity of the model, respectively. Moreover, we use mIoU and FPS to evaluate the segmentation accuracy and latency, respectively. Notably, mIoU can be calculated as follows:

where TP, FN, and FP denote the numbers of true-positive, false-negative, and false-positive pixels, respectively.

Ablation study

In this section, ablation experiments are conducted to validate the effectiveness of each module in our network. All the results are obtained by training the training set and evaluating the validation set.

Ablation studies on MGSEM

First, to verify the effectiveness of MGSEM in RSE (w/SFM), BiSeNetV2 without a context embedding block (CEB) is used as the baseline. To make a fair comparison, we iterate 160K (150K in the original BiSeNetV2) for all methods. The experimental results are shown in Table 2. MGSEM improves by 2.83% (72.22%\(\rightarrow \)75.05%) over baseline, and GFLOPs increase by 0.53 (21.07\(\rightarrow \)21.60), which is 1.84% (73.21% \(\rightarrow \) 75.05%) higher than CEB. Although CEB uses global average pooling and shortcut connections to embed global context information, MGSEM has better global semantic extraction than CEB.

Second, to fully verify the importance of multiscale local semantical information for MGSEM, the outputs of the GE layer at various stages are used as the input of the MGSEM. The experimental results are shown in Table 2. From Table 2, we can see that the effect is the worst when only stage5 is used as the input to MGSEM, and the best effect is achieved when the outputs of the three stages are combined as input to MGSEM, reaching 75.05%.

Figures 6 and 7 show the visualization results of the ablation experiments conducted on the Cityscapes validation set and the ADE20K validation set for MGSEM. From these figures, it can be observed that MGSEM successfully expands the network’s receptive field and significantly improves the segmentation accuracy for large objects. Specifically, the segmentation results in the red boxes in Figs. 6 and 7 demonstrate noticeable improvements in segmentation accuracy. Furthermore, it is observed that preserving the multiscale local semantic features as input to MGSEM also improves the segmentation accuracy for medium and small objects. This is evident from the results in the yellow boxes in Figs. 6 and 7. Moreover, more refined object edge segmentation results can be observed in the fourth-column yellow boxes in Fig. 6 and the third and fourth-column yellow boxes in Fig. 7.

Therefore, in summary, MGSEM is able to extract global semantic information, thereby improving classification accuracy. Additionally, by preserving multiscale local semantic features as input to MGSEM, the network’s robustness is effectively enhanced to adapt to segmentation tasks of various object sizes, and it slightly improves the precision of segmenting object edges.

Ablation studies conducted on SFM

To evaluate the necessity of fusion between multiscale local semantics and multiscale global semantics using SFM in RSM, ablation studies were conducted using various fusion methods. The experimental results are presented in Table 3. Here, the RSE without SFM is taken as the baseline, which only outputs global semantics. It is worth noting that despite using simple fusion methods such as sum or concatenation for the local and global semantics, the accuracy still increased by 0.51% and 0.67%, respectively. This demonstrates the necessity of fusion for both local and global semantics. The improved SFM first utilizes the weights of global semantics to guide the local semantics and then combines the guided local semantics with the upsampled global semantics. This effectively mitigates the gaps between local and global semantics. The semantic information of various receptive fields is fused efficiently, and the accuracy is increased by 1.1%.

Figures 8 and 9 show the visualization results of the ablation experiments conducted on the Cityscapes validation set and the ADE20K validation set. Local semantic information involves the details and local features of different objects. However, to fully comprehend the entire scene, the influence of global semantic information must be considered. By introducing SFM, the gap between local semantic information and global semantic information can be effectively alleviated, resulting in more accurate segmentation of complete object contours and improved semantic segmentation accuracy. Specifically, from the red boxes in Figs. 8 and 9, it is evident that compared to simple fusion methods, such as summation and concatenation, the use of SFM leads to clearer object contours and a noticeable improvement in semantic segmentation accuracy.

In conclusion, mitigating the gap between local and global semantics plays an important role in semantic segmentation, as it significantly improves accuracy and enables better fusion of local and global semantics, thereby enriching the network with richer semantic information. Furthermore, this approach enhances the model’s robustness and generalization ability, allowing it to adapt to various scenarios and achieve more precise and comprehensive semantic segmentation results.

Ablation studies on BRAM

To verify the rationality of BRAM, experiments were conducted on the aggregation method of the two branches, as shown in Table 4. Compared with the baseline, which simply adds the feature maps from the two branches, BRAM achieved an accuracy increase of 1.66% (73.90%\(\rightarrow \)75.56%). Compared with BGA, BRAM improved the accuracy by 0.51% (75.05% \(\rightarrow \) 75.56%) with a small increase in computational cost. The main reason for this is that BRAM adopts a self-attention mechanism to enhance the contextual information of the detail branch. Simultaneously, BRAM models the interdependencies among the channels of the feature map in the semantic branch to enhance effective features. Consequently, BRAM is more suitable for BRSeNet than for BGA. The experiments demonstrate that BRAM effectively merges the two branches, validating its rationality.

Figures 10 and 11 present the visualization results of the ablation experiments conducted on the Cityscapes validation set and the ADE20K validation set, respectively. The self-attention mechanism in BRAM allows the feature maps in the detail branch to capture long-range contextual dependencies, mitigating the disparity between detail features and semantic features and enabling more effective aggregation of the two. The space reconstruction module (SRM) is utilized to model the context relationship for the local detail features, effectively expanding the receptive field of the local detail features and alleviating the receptive field disparity with semantic features. As shown in the second column, the second red box in Fig. 10, and the segmentation between road and lawn, as well as the third column red box in Fig. 11, depicting the segmentation between pool and cabinet, and the fourth-column red box depicting the segmentation between chair and table, BRAM helps the detail branch capture a broader range of contextual information related to the segmentation targets. This enables the detail branch to better understand the relationships between the target object and other objects, thereby improving the accuracy of segmenting the target object. Furthermore, BRAM enhances the attention of the detail branch toward the details of the segmentation target, as observed in the red boxes in Fig. 10 and the first and second-column red boxes in Fig. 11, leading to improved accuracy and preservation of these details.

In summary, using BRAM to enhance the contextual information in the detail branch provides long-range contextual dependencies, strengthens detail information, and improves contextual awareness. These effects collectively contribute to semantic segmentation tasks, enhancing the accuracy, detail preservation, and overall semantic understanding of the segmentation results.

The influence of the amount of vision transformer blocks in MGSEM

MGSEM mainly comprises vision transformer blocks. Thus, the number of blocks D is an important parameter. Table 5 shows the effects of varying D. Based on the conventional opinion, the higher the D value, the better the effect. However, from the experimental results, we can see that the segmentation accuracy is the highest when \(D = 6\), reaching 75.56%. Furthermore, the computational cost of the network is also shown in Table 5, which illustrates the lightweight nature of MGSEM from the other side. Figure 9 shows the visualizations of this experiment on the Cityscapes validation set.

Figures 12 and 13 show the visualization results of the ablation experiments conducted on the Cityscapes validation set and the ADE20K validation set. From the red boxes in Figs. 12 and 13, it can be observed that as the number of blocks D increases, the segmentation accuracy improves gradually. Particularly, at D=6, the experimental results achieve the best performance.

Comparison with state-of-the-art methods

In this subsection, we compare BRSeNet with other state-of-the-art methods on Cityscapes and ADE20K.

Experiments conducted on Cityscapes

As shown in Table 6, our method achieves an mIoU of 74.9% at 74 FPS. Compared to high-quality segmentation methods, BRSeNet may have slightly lower accuracy, but it leads significantly in terms of inference speed, while reducing computational costs. For example, compared to FCN, DeeplabV3+, and Segformer, BRSeNet significantly improves inference speed by 38.9\(\times \), 37.0\(\times \), and 29.6\(\times \), respectively, while reducing computational complexity by 97.8%, 97.6%, and 96.6%. Compared to most real-time semantic segmentation methods, our approach achieves higher accuracy while maintaining high inference speed. From the table, it can be observed that our proposed method outperforms FCN, DeepLabV3+, ERFNet, and SegFormer in both segmentation accuracy and inference speed. Compared to BiSeNetV1, BiSeNetV2, and Topformer, although there is a slight decrease in inference speed, our method achieves better segmentation accuracy, with improvements of 9.6%, 4.2%, and 13.5% on the validation set, respectively.

To provide a detailed analysis of the segmentation results obtained by our proposed network, Fig. 14 displays the visualization results on the Cityscapes dataset. From the red boxes in Fig. 14, it can be observed that networks, such as FCN, PSPNet, DeepLabv3+, and BiSeNet, have some errors in segmenting large objects, such as roads, buildings, trees, and sky. The main reason is the limited receptive field of CNN, which introduces inconsistencies between features corresponding to pixels of the same label and affects segmentation quality. However, BRSeNet overcomes this drawback by constructing MGSEM to extract global receptive fields and extend them across the entire image. Additionally, with the help of RSE, BRSeNet possesses more comprehensive multiscale semantic information, which benefits the segmentation of large and medium-sized objects. From the red boxes in Fig. 14, it can be observed that BRSeNet performs better in segmenting objects such as traffic lights and traffic signs. Moreover, BRAM models the contextual information of local details and the relationships between channels, effectively mitigating the receptive field disparity between spatial features and semantic features, resulting in better aggregation of spatial and semantic features. Therefore, BRSeNet also outperforms BiSeNetV2 in segmenting small objects, such as fences, poles, and certain edges.

Experiments conducted on ADE20K

To demonstrate the generalization and robustness of BRSeNet, we compared it with other methods on the ADE20K dataset. These methods include FCN [1], PSPNet [3], DeeplabV3+ [15], OCRNet [43], BiSeNetV2 [12], SegFormer [25], and TopFormer [35]. The results are shown in Table 7. We can see that BRSeNet achieves an mIoU of 35.7% on ADE20K, outperforming FCN, PSPNet, DeeplabV3+, and OCRNet by 16%, 6.1%, 1.7%, and 0.6% in terms of segmentation accuracy, respectively. Additionally, BRSeNet also exhibits faster inference speed, surpassing these networks by 1.0\(\times \), 1.1\(\times \), 1.5\(\times \), and 2.2\(\times \), respectively. Compared to BiSeNetV2, although it has a relatively lower inference speed, BRSeNet achieves a higher segmentation accuracy by 3.2%. The emergence of MGSEM in BRSeNet endows it with more comprehensive feature extraction capabilities compared to general CNNs, further validating its generalization and robustness. However, when compared to the latest methods, such as SegFormer and TopFormer, BRSeNet slightly falls behind, indicating possibilities for further improvement in future work.

For a comprehensive analysis of the proposed method, Fig. 15 displays the visualization results of BRSeNet and DeeplabV3+ on ADE20K. From Fig. 15, it can be observed that the proposed method avoids many segmentation errors compared to DeeplabV3+. This is because RSE enables BRSeNet to capture the entire image size receptive field and extract rich semantic information. Moreover, from the red boxes in Fig. 15, it can be observed that BRSeNet performs better in segmenting small objects and preserving boundaries. This is mainly attributed to the effective aggregation of details and semantic information by BRAM.

Conclusion

To solve the problem of insufficient feature extraction ability for real-time semantic segmentation, a bilateral network with RSE is proposed for real-time semantic segmentation. RSE includes MGSEM and SFM; first, MGSEM extracts multiscale global semantic information through a multiscale vision transformer, which effectively expands the effective receptive field of the network. Second, SFM efficiently integrates multiscale local semantics and multiscale global semantics, so the network has rich semantic information. Finally, we design BRAM based on the respective characteristics of the detail and semantic branches, reconstruct the contextual information of detail features, model the interdependence between semantic feature channels, and enhance the feature representation ability of semantic information. Experimental results show that the proposed network achieves a good balance between segmentation accuracy and inference speed. In a subsequent study, we will continue to investigate how to extract rich semantic information and the balance between detail and semantic information.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code Availability

The code are available from the corresponding author upon reasonable request.

References

Long J, Shelhamer E, Darrell T (2015) Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3431–3440

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. ar**v:1409.1556

Zhao H, Shi J, Qi X, Wang X, Jia J (2017) Pyramid scene parsing network. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2881–2890

Lin G, Milan A, Shen C, Reid I (2017) Refinenet: multi-path refinement networks for high-resolution semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1925–1934

Chen L-C, Papandreou G, Kokkinos I, Murphy K, Yuille AL (2017) Deeplab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans Pattern Anal Mach Intell 40(4):834–848

Chollet F (2017) Xception: deep learning with depthwise separable convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1251–1258

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L-C (2018) Mobilenetv2: inverted residuals and linear bottlenecks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4510–4520

Zhang X, Zhou X, Lin M, Sun J (2018) Shufflenet: an extremely efficient convolutional neural network for mobile devices. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6848–6856

Zhao H, Qi X, Shen X, Shi J, Jia J (2018) Icnet for real-time semantic segmentation on high-resolution images. In: Proceedings of the European conference on computer vision (ECCV), pp 405–420

Yu C, Wang J, Peng C, Gao C, Yu G, Sang N (2018) Bisenet: bilateral segmentation network for real-time semantic segmentation. In: Proceedings of the European conference on computer vision (ECCV), pp 325–341

Yu C, Gao C, Wang J, Yu G, Shen C, Sang N (2021) Bisenet v2: bilateral network with guided aggregation for real-time semantic segmentation. Int J Comput Vis 129(11):3051–3068

Chen L-C, Papandreou G, Schroff F, Adam H (2017) Rethinking atrous convolution for semantic image segmentation. ar**v:1706.05587

Chen L-C, Yang Y, Wang J, Xu W, Yuille AL (2016) Attention to scale: scale-aware semantic image segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3640–3649

Chen L-C, Zhu Y, Papandreou G, Schroff F, Adam H (2018) Encoder–decoder with atrous separable convolution for semantic image segmentation. In: Proceedings of the European conference on computer vision (ECCV), pp 801–818

Zhang H, Dana K, Shi J, Zhang Z, Wang X, Tyagi A, Agrawal A (2018) Context encoding for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7151–7160

Ronneberger O, Fischer P, Brox T (2015) U-net: convolutional networks for biomedical image segmentation. In: International conference on medical image computing and computer-assisted intervention. Springer, pp 234–241

Badrinarayanan V, Kendall A, Cipolla R (2017) Segnet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell 39(12):2481–2495

Chen L-C, Papandreou G, Kokkinos I, Murphy K, Yuille AL (2014) Semantic image segmentation with deep convolutional nets and fully connected crfs. ar**v:1412.7062

Tao A, Sapra K, Catanzaro B (2020) Hierarchical multi-scale attention for semantic segmentation. ar**v:2005.10821

Han S, Pool J, Tran J, Dally W (2015) Learning both weights and connections for efficient neural network. Adv Neural Inf Process Syst 28:1135–1143

Chen X, Wang Y, Zhang Y, Du P, Xu C, Xu C (2020) Multi-task pruning for semantic segmentation networks. ar**v:2007.08386

Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, Andreetto M, Adam H (2017) Mobilenets: efficient convolutional neural networks for mobile vision applications. ar**v:1704.04861

Howard A, Sandler M, Chu G, Chen L-C, Chen B, Tan M, Wang W, Zhu Y, Pang R, Vasudevan V, etal (2019) Searching for mobilenetv3. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 1314–1324

Tan M, Le Q (2019) Efficientnet: rethinking model scaling for convolutional neural networks. In: International conference on machine learning. PMLR, pp 6105–6114

Zhang Y, Yao T, Qiu Z, Mei T (2022) Lightweight and progressively-scalable networks for semantic segmentation. ar**v:2207.13600

Fan M, Lai S, Huang J, Wei X, Chai Z, Luo J, Wei X (2021) Rethinking bisenet for real-time semantic segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9716–9725

Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, et al (2020) An image is worth 16x16 words: transformers for image recognition at scale. ar**v:2010.11929

Wang W, **e E, Li X, Fan D-P, Song K, Liang D, Lu T, Luo P, Shao L (2021) Pyramid vision transformer: a versatile backbone for dense prediction without convolutions. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 568–578

Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B (2021) Swin transformer: hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 10012–10022

Chu X, Tian Z, Wang Y, Zhang B, Ren H, Wei X, **a H, Shen C (2021) Twins: Revisiting the design of spatial attention in vision transformers. Adv Neural Inf Process Syst 34:9355–9366

Wu H, **ao B, Codella N, Liu M, Dai X, Yuan L, Zhang L (2021) Cvt: introducing convolutions to vision transformers. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 22–31

**e E, Wang W, Yu Z, Anandkumar A, Alvarez JM, Luo P (2021) Segformer: simple and efficient design for semantic segmentation with transformers. Adv Neural Inf Process Syst 34:12077–12090

Graham B, El-Nouby A, Touvron H, Stock P, Joulin A, Jégou H, Douze M (2021) Levit: a vision transformer in convnet’s clothing for faster inference. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 12259–12269

Zhang W, Huang Z, Luo G, Chen T, Wang X, Liu W, Yu G, Shen C (2022) Topformer: token pyramid transformer for mobile semantic segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 12083–12093

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7132–7141

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. Adv Neural Inf Process Syst 30:6000–6010

Fu J, Liu J, Tian H, Li Y, Bao Y, Fang Z, Lu H (2019) Dual attention network for scene segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3146–3154

Cordts M, Omran M, Ramos S, Rehfeld T, Enzweiler M, Benenson R, Franke U, Roth S, Schiele B (2016) The cityscapes dataset for semantic urban scene understanding. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3213–3223

Zhou B, Zhao H, Puig X, Fidler S, Barriuso A, Torralba A (2017) Scene parsing through ade20k dataset. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 633–641

Bottou L (2010) Large-scale machine learning with stochastic gradient descent. In: Proceedings of COMPSTAT’2010. Springer, pp 177–186

Shrivastava A, Gupta A, Girshick R (2016) Training region-based object detectors with online hard example mining. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 761–769

Zhang H, Dana K, Shi J, Zhang Z, Wang X, Tyagi A, Agrawal A (2018) Context encoding for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7151–7160

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61602157), and Henan Science and Technology Planning Program (Grant No. 202102210167).

Author information

Authors and Affiliations

Contributions

SZ: conceptualization, methodology, and supervision. XW: conceptualization, methodology, and supervision. KT: data curation, writing—original draft preparation, visualization, investigation, validation, and writing—reviewing and editing. YY: data curation, writing—original draft preparation, visualization, investigation, validation, and writing—reviewing and editing.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Consent to participate

All authors agreed to participate in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhao, S., Wu, X., Tian, K. et al. Bilateral network with rich semantic extractor for real-time semantic segmentation. Complex Intell. Syst. 10, 1899–1916 (2024). https://doi.org/10.1007/s40747-023-01242-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-023-01242-w