Abstract

In teacher education, video representations of practice offer a motivating means for applying conceptual teaching knowledge toward real-world settings. With video analysis, preservice teachers can begin cultivating professional vision skills through noticing and reasoning about presented core teaching practices. However, with novices’ limited prior knowledge and experience, processing transient information from video can be challenging. Multimedia learning research suggests instructional design techniques for support, such as signaling keyword cues during video viewing, or presenting focused self-explanation prompts which target theoretical knowledge application during video analysis. This study investigates the professional vision skills of noticing and reasoning (operationalized as descriptions and interpretations of relevant noticed events) from 130 preservice teachers participating in a video-analysis training on the core practice of small-group instruction. By means of experimental comparisons, we examine the effects of signaling cues and focused self-explanation prompts on professional vision performance. Further, we explore the impact of these techniques, considering preservice teachers’ situational interest. Overall, results demonstrated that preservice teachers’ professional vision skills improved from pretest to posttest, but the instructional design techniques did not generally offer additional support. However, moderation analysis indicated that training with cues fostered professional vision skills for preservice teachers with low situational interest. This suggests that for uninterested novices, signaling cues may compensate for the generative processing boost typically associated with situational interest. Research and practice implications involve the consideration of situational interest as a powerful component of instructional design, and that keyword cueing can offer an alternative when interest is difficult to elicit.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Video-analysis is a promising multimedia learning activity for complex skill development in teacher education. It provides preservice teachers (PSTs) with opportunities to apply theoretical knowledge toward real-world settings by noticing and reasoning about core teaching practices (Derry et al., 2014; Grossman, 2021). Such activities help them develop skills for professional visionFootnote 1 of important teaching and learning events (Seidel & Stürmer, 2014; van Es & Sherin, 2008). While course-long professional vision trainings are particularly effective (e.g., van Es et al., 2017), even a one-time training can facilitate improvements. For example, short video analysis tasks prepared with theoretical pre-training texts for application can support content-specific noticing and reasoning, particularly with videos focusing on specific contents to reduce complexity (e.g., small-group tutoring instead of whole class instruction; Farrell et al., 2022; Martin et al., 2023).

Learning with video can be motivating, but its transient information presentation can also be challenging for novices (Derry, et al., 2014; Mayer, 2014). Research on multimedia learning emphasizes instructional design implications and suggests techniques to offer support (Mayer, 2005). For example, segmenting videos into shorter meaningful events has been shown to buffer the disadvantages of transient information for PSTs during training (Martin et al., 2022). Another helpful technique, investigated in the present study, may be signaling keyword cues during video viewing, which highlight events linked to pre-trained theoretical knowledge (van Gog, 2014). Furthermore, focused self-explanation prompts that connect to key theoretical concepts may help learners to reason about relevant events (Martin et al., 2022; Renkl & Eitel, 2019; Wylie & Chi, 2014).

This study is part of a larger research project investigating various multimedia instructional design techniques which aim to support novice learning with video. The present study investigates PSTs’ professional vision performance, namely, noticing and reasoning about relevant events in video after a training. We further examine the effect of signaling cues and focused self-explanation prompts as training techniques which may facilitate additional improvements to professional vision skill development (via repeated-measures MANOVA). Moreover, we explore the moderating role of situational interest (with multiple regression analysis). Results will contribute evidence about the development of professional vision after our training, as well as information on effectiveness of these support techniques for the present context, which provide guidance to instructional designers and offer ideas for further research. With our additional focus on situational interest, this study contributes to the increasing demand to bring cognitive and motivational design considerations together in multimedia learning (Mayer, 2014; Park et al., 2014).

Multimedia learning with video analysis: Training professional vision in teacher education

Teachers in training need multiple practice-oriented learning opportunities. However, beginning with real teaching scenarios is often too complex and overwhelming (Syring et al., 2015). Thus, practice opportunities should gradually increase in authenticity and complexity through tasks approximating authentic core teaching practices (Ball & Forzani, 2009; Grossman et al., 2009). According to the Framework for Teaching Practice in Professional Education, it is suggested that novices rehearse a particular element of practice on a continuum of authenticity, starting with approximation tasks that focus on a reduced set of practice components and have many low-stakes practice opportunities, and gradually work up to enacting the authentic practice itself with support (Grossman et al., 2009). For example, approximating the practice of noticing important teaching and learning moments while teaching might first begin with analysis of a written case, then video analysis (as in the present study), then real-time role-plays and simulations, and finally making noteworthy observations during student teaching with feedback from the instructor.

Within this Framework, (computer-based) instruction using complementary media (e.g., text, pictures, video, animation, etc.; Mayer, 2001), also termed multimedia learning environments, offer promise for PSTs’ complex skill development (e.g., Eilam & Poyas, 2009) by capitalizing on the particular advantages of different representational formats (e.g., text or picture) and presentation modalities (e.g., auditory and visual; Mayer & Moreno, 2003; Weidenmann, 1997). However, for meaningful learning, PSTs must actively engage in cognitive processing (Mayer, 2001, 2014). Since novices’ processing capacity is particularly limited, instructional designers can mitigate the challenges associated with different representations and modalities (Mayer & Fiorella, 2014; Mayer & Moreno, 2003). For example, they can incorporate instructional support techniques, such as signaling cues or focused prompts, that assist in core practice training.

Many experienced teachers exhibit core teaching practices at the expert level, such as efficiently noticing meaningful teaching and learning patterns and automatically reacting with adaptable action (Berliner, 2001). These skills of professional vision (Es & Sherin, 2002; Goodwin, 1994; Seidel & Stürmer, 2014; van Es & Sherin, 2021) capitalize on the spontaneous processing developed over years of accumulated experiential knowledge and practice (Berliner, 2001). For novices, however, who have yet to develop this experiential repertoire, knowledge-based decision-making can take considerable cognitive effort (Leinhardt & Greeno, 1986). Thus, student teachers often default to making intuitive decisions without considering theoretical knowledge (see apprenticeship of observation, Lortie, 1975), or sticking rigidly to curriculum plans rather than adapting teaching to the context (Borko & Livingston, 1989; Westerman, 1991).

For training professional vision skills in teacher education, the multimedia learning activity of video analysis offers a valuable platform for approximating core practices (Moreno & Ortegano-Layne, 2008; Stürmer et al., 2016). Even within the scope of video analysis itself as an approximation of practice, we can further design tasks on a continuum of authenticity, which align with the learning goals of the task and are ideally tailored to the needs of the learner (Blomberg et al., 2013). Instruction can include representations of practice (Grossman, 2021) in the form of example teaching videos, from simplified, scripted scenarios (as in the present study) to more complex authentic videos from real classrooms. This learning material can be paired with practice-oriented activities for conceptual knowledge application. Moreover, these trainings typically emphasize particular aspects of teaching and learning, depending on the content focus for noticing and reasoning practice (e.g., professional vision of classroom management; Gold et al., 2021; noticing of student thinking in mathematics; Jacobs et al., 2010). Therefore, PSTs can use these tasks to practice selecting relevant information, organizing it into meaningful cognitive representations, and integrating this knowledge across different information sources and their own prior knowledge (Chung & van Es, 2014; Mayer, 2001). Without the pressure of in-the-moment teaching (Sherin et al., 2009), analyzing video-based examples offers a flexible approximation of practice format for breaking down and deliberately practicing the processing components of professional vision, that is, noticing and reasoning about relevant teaching and learning events (Ericsson, 2006; Grossman, 2011; Sherin & van Es, 2009).

The noticing component of professional vision involves the attention allocation and selection of teaching and learning events, relevant for the given learning goals in the respective context (Blomberg et al., 2011; Star & Strickland, 2008; van Es, 2011). For training professional vision in video analysis, noticing is typically elicited reflectively, with oral or written accounts of what was selected by the observer. With various qualitative, quantitative, and mixed-methods measures of this component, the literature contains diverse terminology representing different facets of the noticing component (e.g., noticing, noticing focus, attending/perceiving, describing, etc.; Chan et al., 2021; Santagata et al., 2021). When elicited qualitatively, learners’ descriptions provide information about several aspects of the noticed events. Mention or absence of the target teaching and learning content provides information about what was attended to or missed (van Es & Sherin, 2021). Further, the description can indicate how focused the learner was toward these content-specific events versus aspects outside the aim (Martin et al., 2022). Moreover, the level of relevant, knowledge-related elaboration demonstrates the sophistication of learners’ mental representations of the target events (Jacobs et al., 2010; Santagata et al., 2007).Footnote 2 Thus, in the present study context, we operationalize PSTs’ professional vision noticing as a measure of their video analysis description skills, namely, their description of noticed events and the level of focus and sophistication in which they are described (Farrell et al., 2022; van Es, 2011).

The reasoning component of professional vision represents the way observers make sense of noticed events (Gegenfurtner et al., 2020; Seidel & Stürmer, 2014; Sherin & van Es, 2009). In the context of video analysis, this is typically demonstrated through learners’ interpretations, which show their capacity to make connections between instructional material and their own conceptual knowledge (van Es, 2011). Knowledge-based interpretations (e.g., cause-and-effect explanations, predictions of cognitive/motivational learning consequences) make logical inferences about noticed events using knowledge from the learning materials and/or teaching and learning theory as evidence to support their claims (Santagata et al., 2007; Schäfer & Seidel, 2015). Uninformed interpretations typically lack knowledge-based evidence, and rather make less cultivated arguments, such as unjustified assumptions or judgmental evaluations (Farrell et al., 2022; van Es, 2011). PSTs’ level of argument coherence and complexity gives an indication of how well they have integrated their knowledge about particular theoretical concepts together with the corresponding practice-in-action they noticed (Jacobs et al., 2010; Kersting, 2008). Thus, for the present study, PSTs’ video analysis interpretation skills, that is, their interpretations of noticed events and the level of argumentation sophistication in which they are interpreted, comprise the operationalization of the reasoning component of professional vision (Farrell et al., 2022; van Es, 2011).

Professional vision development is most effective with deliberate practice across a series of lessons (e.g., Santagata & Taylor, 2018; van Es et al., 2017). However, even a one-time training can begin to make a difference when paired with a theoretical text pre-training (Martin et al., 2023). Moreover, there are indications that presenting video materials depicting a simplified instructional context (e.g., small-group tutoring) may offer PSTs support in student-centered focus and knowledge-based interpretations (Farrell et al., 2022). The use of evidence-based scripted videos (as in the present study), which are developed according to validity and quality assurance guidelines from research (e.g., Dieker et al., 2009; Kim et al., 2006; Piwowar et al., 2018; Seidel et al., 2023), can also offer complexity-reducing benefits. Through careful planning, development, and editing, they reduce the extraneous information presented, while also allowing for target learning material to be demonstrated saliently, further reducing the cognitive demand for novice learners (Fischer et al., 2022; Piwowar et al., 2018). In addition, successful video analysis tasks focus on a selected set of skills around specific learning goals (Blomberg et al., 2013; Kang & van Es, 2019). Nevertheless, it is also recommended to take a holistic approach in the sense that the complete skill is trained, but initially, only applied to cases of low complexity (see 4C-ID, van Merriënboer & Kirschner, 2018). Moreover, designers should consider the training-relevant prior knowledge and experiences of the target training group, since these variables play a role in their professional vision skills and development potential (König et al., 2022). Further design techniques for processing support can tailor multimedia learning environments toward novices’ needs.

Instructional design techniques for novices’ video analysis processing support

The Cognitive Theory of Multimedia Learning (CTML; Mayer, 2001) provides a framework for instructional design of multimedia environments for novice learning. This theory assumes: (1) visual and auditory information is processed through separate channels; (2) each channel is limited in the amount of processing that can take place at once; and (3) learning occurs through active processing which involves information selection, organization into mental representations, and knowledge integration (Mayer, 2014). When develo** multimedia learning environments, designers should try to optimize conditions with the right balance of information presentation from varying media and instructional activities (Paas et al., 2004; van Merriënboer et al., 2006).

Each type of learning material medium offers potential benefits. Video scenes of teaching used as learning material, for example, have the advantage of depicting representations of practice (Blomberg et al., 2013; Brophy, 2004; Grossman et al., 2009). Additionally, learning with video representations of practice can enhance noticing and reasoning skills, promote effective learning, and increase motivation (Gaudin & Chaliès, 2015). These outcomes have also been noted when scripted video representations of practice were used as a learning material medium (e.g., Codreanu et al., 2020; Farrell et al., 2022). Moreover, scripted videos can be particularly useful for novices when developed according to evidence-based guidelines (e.g., Piwowar et al., 2018). For video-based multimedia learning, the cognitive-affective motivational construct of situational interest is particularly relevant, since this psychological state involves momentary attention and engagement toward an object of interest and is typically induced, or triggered, by external aspects of the learning context (e.g., video design or content; Hidi & Renninger, 2006; for more, see subsection “Situational Interest and Multimedia Instructional Design”).

While video representations as learning materials may offer several benefits, they may also introduce potential challenges (Derry et al., 2014). Novices are especially vulnerable to processing overload, since they often lack prior knowledge or sophisticated schemas in long-term memory which facilitate efficient knowledge acquisition (Chandler, 2004). Thus, video learning, generally speaking, can be difficult due to the transient nature of information presentation. Learners’ processing may exceed capacity if they must invest too much effort searching for pertinent information. Moreover, they may waste processing energy on task-irrelevant information or miss incoming material because they are already holding too much previously-presented information in working memory (i.e., representational holding, Mayer & Moreno, 2003). Therefore, instruction designed for novices should support generative processing and reduce the necessity for unproductive processing in dealing with extraneous information (CTML assumptions 1 and 2, Mayer, 2014; see also, Cognitive Load Theory, Sweller, 1988; Sweller et al., 2019). Kee** novices’ needs in mind, training materials can be designed to reduce complexity, for example, as in the present study, with the use of scripted videos (e.g., Clarke et al., 2013; Deng et al., 2020), and a simplified instructional context (van Merriënboer & Kirschner, 2018). Moreover, several instructional methods can help to mitigate potential difficulties. For video analysis training, we consider two techniques: signaling cues and focused self-explanation prompts. Moreover, we explore the generative processing advantages of situational interest and its potential for moderating support.

Signaling cues in video analysis

For novices with limited prior knowledge, salient information within learning materials typically receives most attention, even when irrelevant to the learning task (Lowe, 1999). Thus, deep learning is challenging. Signaling, or cueing, is a supportive instructional technique that draws attention to important aspects within the learning material (van Gog, 2014). It supports learners to more effectively manage essential cognitive processing and reduce extraneous processing (Mayer & Moreno, 2010). Several recent meta-analyses on signaling (Alpizar et al., 2020; Richter et al., 2016; Schneider et al., 2018; ** between theoretical principles and cases to develop principle-based schematic representations (Renkl & Eitel, 2019; Rittle-Johnson et al., 2017). This “interconnected knowledge” allows learners to know when and how to apply underlying principles to make interpretations about new cases (Renkl & Eitel, 2019, p. 533). Thus, this instructional technique is particularly useful for the processing step of knowledge integration (CTML assumption 3, Mayer, 2014). Learning from video in teacher education often involves making connections across transient information using underlying educational principles to make sense of the events (Derry, et al., 2014; Leahy & Sweller, 2011). Therefore, the principle-based self-explanation technique may support learning in this context.

Self-explanation prompts can be designed along a continuum, ranging from least structured (e.g., open-ended prompts) to most structured (e.g. menu-based prompts with drop-down list). Open-ended prompts have few restrictions on learners’ explanations, freely promoting the generation of links between new learning material and relevant underlying principles (Renkl & Eitel, 2019; Rittle-Johnson et al., 2017). Foundational professional vision video analysis research used open-ended prompts (e.g., “What do you notice? What’s your evidence? What’s your interpretation of what took place?”, Sherin & van Es, 2005, p. 480). In contrast, focused self-explanation prompts are another less-structured format, but differ from open prompts in that they directly specify how the target learning content should be explained (Wylie & Chi, 2014). Focused prompts in professional vision video analysis typically emphasize a specific component of the video (e.g., “Please make sense of the lesson by dividing it into its main parts, reflect on the learning goals of each part, and on the relationships between the parts within the overall lesson structure”; Santagata et al., 2007, p. 128).

The general consensus from the few studies that have examined open vs. focused prompts is that both prompts improve learning, but focused prompt groups typically have greater learning gains (e.g., Gadgil et al., 2012; Van der Meij & de Jong, 2011). This pattern suggests that tasks linking specific information across learning materials help learners to better integrate knowledge (Wylie & Chi, 2014). However, this pattern is less clear from recent meta-analytic evidence. One study suggested that specific, general, and a combination of both self-explanation prompts significantly improve achievement (Bisra et al., 2018). With mixed evidence on open and focused prompts facilitating learning, the question of which format is more supportive may depend on the complexity of the instructional context. Learning content from teaching video examples are usually complex. Thus, focused prompts may guide PSTs’ connections with the learning material more effectively by supporting their essential and generative processing of the target learning content (Mayer & Moreno, 2010). For professional vision training, this technique seems especially helpful for the reasoning component.

Situational interest and multimedia instructional design

Over the last decade, multimedia researchers have called for evidence building on the explicit integration of motivation into instructional design as a means to increase generative processing (Mayer, 2014; Moreno & Mayer, 2010; Plass & Kaplan, 2016). From a theoretical perspective, adding a further dimension to the CTML (Mayer, 2005), the Cognitive-Affective Theory of Learning with Media (CATLM; Moreno, 2005; Moreno & Mayer, 2010) proposes that motivation, affect, and metacognition determine the amount of available cognitive resources allocated to a learning task. It assumes that motivational and affective variables open up or block learners’ capacity for processing and cognitive engagement (Moreno, 2005).

Situational interest is one such motivational construct likely to influence multimedia learning (Mayer, 2014). Situational interest is a cognitive-affective psychological state, which directs attention, boosts cognitive functioning, and encourages emotional connection and cognitive engagement via an affective response and value related valence toward an object of interest (Hidi & Renninger, 2006; Krapp, 2002; Mitchell, 1993). As the name suggests, situational interest is principally activated from situational factors, circumstances, and/or objects within the external learning context (Hidi & Renninger, 2006; Krapp, 2002). Initial, or triggered situational interest can be activated by properties of the learning material or activity, such as strange or unexpected information, or a topic/character of personal identification (Hidi & Renninger, 2006; Rotgans & Schmidt, 2014). Situational interest is often maintained when instruction involves active participation and/or activities that convey personal significance and thereby value valence (Hidi & Renninger, 2006). It is especially influential for generative processing within challenging tasks (Moreno & Mayer, 2010) and it can endure or change throughout the learning activity (Knogler et al., 2015; Rotgans & Schmidt, 2011). Due to its externally activated properties, situational interest is a particularly appropriate motivational variable for planning and investigation within instructional design.

For a video analysis training, it is likely that situational interest could impact PSTs’ performance. It is feasible that soon-to-be teachers could identify with the topic and characters within teaching scenarios and actively involve themselves in the task, especially if they found it personally meaningful. In combination with other supportive techniques, situational interest has been implicated in learning with several multimedia design techniques (e.g., emotional design, Endres et al., 2020; decorative illustrations, Magner et al., 2014; interesting text additions, Muller et al., 2008). For signaling cues and self-explanation prompts, however, previous research has hardly included motivational constructs.

Signaling cues and motivation

Since signaling cues aim toward the attention-guidance principle (Bétrancourt, 2005), and situational interest is a motivational construct that represents externally triggered attention and engagement (Renninger & Hidi, 2002), it seems obvious that these two variables would be associated with each other in multimedia learning. Surprisingly though, apart from social cues with pedagogical agents (e.g., Park, 2015), there are no studies to date that specifically investigate signaling cues and situational interest. Broadening the scope to other motivational constructs, the research is still quite limited. Lin et al. (2014) found that intrinsic motivation significantly predicted learning outcomes for participants who had learning material with cues (i.e., single red arrow), but not for the no-cues participants. More evidence, albeit limited, comes from a recent meta-analysis which suggests a small positive relationship between signaling cues and motivation (n = 13, g = 0.13, 95% CI [0.04, 0.22], k = 13; Schneider et al., 2018). Though more evidence is needed for clarity, research suggests that signaling cues and motivation are at least somewhat related in multimedia learning. This evidence inspires exploration into how cues and motivation (e.g., situational interest) might interact with each other to improve or distract from knowledge building.

Focused prompts and motivation

Research is also limited regarding any form of self-explanation prompts and motivation in multimedia learning. So far, meta-analyses on self-explanation prompts do not offer evidence for motivational outcomes (Bisra et al., 2018; Rittle-Johnson et al., 2017). However, there are a few primary studies that address this relationship. Richey and Nokes-Malach (2015), for example, investigated the effects of offering or withholding instructional explanations to understand worked examples. They found that when learners had to construct their own understanding with self-explanations rather than getting an explanation, they were more likely to demonstrate mastery goals, which are typically associated with high interest (Grant & Dweck, 2003).

With the limited amount of mixed evidence on the nature of signaling cues, prompts, and motivation (e.g., situational interest) in multimedia learning, the exploration of these relationships could be a welcome addition to the field. For instruction that is designed to support novices’ cognitive processes (e.g., with signaling cues or focused prompts), considering the interplay between situational interest and these techniques could provide a more nuanced understanding of how these instructional elements should be designed to best foster professional vision development.

The present study and research questions

This study examines a professional vision training intervention for teacher education, which involves PSTs’ video analysis of small-group tutoring representations of practice. After PSTs’ participation in a pretest and video analysis training phase, we investigate their professional vision performance change from pretest to posttest in their descriptions and interpretations of relevant noticed events. We first aim to validate whether the overall training is effective in terms of improving professional vision, which is also an attempt to replicate previous findings from the project (Martin et al., 2022).

New to this study, in the training phase between tests, we trial two techniques for novice support informed by research in multimedia learning: signaling cues and focused self-explanation prompts (i.e., random assignment to different instructional conditions: cues/no cues, and focused/open self-explanation prompts). We further examine the impact of these techniques on professional vision development and their relationship with PSTs’ situational interest on performance.

Professional vision training and multimedia instructional design techniques

In a previous study using the same training materials, the same pretest and posttest materials, and a similar procedure (pretest one week before training, then posttest directly after training), we found that PSTs’ professional vision skills significantly improved from pretest to posttest (Martin et al., 2022). Specifically, PSTs’ professional vision skills improved in describing more noticed events with more elaborations, and in interpreting noticed events with more sophisticated knowledge-based reasoning. Before investigating the support from signaling cues and focused self-explanation prompts, we consider replicating our findings as an important prerequisite to make substantial contributions to the field of teacher education (Pressley & Harris, 1994).

Moreover, in our previous intervention study, we further investigated the impact of training with the instructional design techniques of segmenting and self-explanation prompts on PSTs’ professional vision (Martin et al., 2022). We found that these techniques did not offer additional support in the posttest after training. In the present study, we investigate the instructional design techniques of signaling cues and focused prompts. In terms of theoretically-driven hypotheses, the CTML (Mayer, 2014) would suggest that these techniques should support improvements in PSTs’ professional vision performance in a subsequent video analysis, in contrast to PSTs who train without these techniques (i.e., no cues, open prompts). However, given the findings from the previous study, it is possible that these techniques do not offer additional support for professional vision skill transfer.

More specifically, we address the following research questions:

-

RQ1.1: To what extent do PSTs’ professional vision skills improve from before a video analysis training session to a subsequent video analysis transfer task, in terms of enhancing their descriptions and interpretations of noticed tutoring events?

-

RQ1.2: To what extent does a video analysis training session using the supportive instructional design techniques of (a) signaling cues, or (b) focused self-explanation prompts, impact PSTs’ professional vision in the form of enhancing their descriptions and interpretations of noticed events in a subsequent video analysis transfer task?

Exploring situational interest moderation of instructional design techniques

Since there is limited research on situational interest’s relationship with multimedia techniques for support, exploring its moderating role could offer suggestions about when signaling cues and focused self-explanation prompts are more or less facilitative for learning, from the motivational perspective of participants. Thus, we address the following exploratory research question:

-

RQ2: For PSTs who receive signaling cues (a), or focused prompts (b) during a video analysis training, does their situational interest in the training moderate the magnitude of their professional vision performance in a subsequent video analysis transfer task?

Overall, our investigation aims to add further evidence to the field of multimedia video learning, extend our findings from previous project studies (Farrell et al., 2022; Martin et al., 2022, 2023), as well as offer new insights. We hope to further validate our video analysis training as a flexible and efficient intervention for PSTs to improve their professional vision skills in small-group instruction. Moreover, our investigation will present new evidence on the effectiveness of signaling cues and focused prompts for support in professional vision skill acquisition. Finally, our study answers the call for integration of cognitive and motivational design considerations and effects in multimedia learning research (Mayer, 2014). With our exploration of PSTs’ situational interest in combination with signaling cues and focused prompts, we hope to contribute unique findings to the field to better understand the role it may play in video analysis contexts.

Methods

Participants

Data collection initially comprised a total of 168 participants who provided at least some data for one or more phases of the study. To determine the final sample, we included all participants who completed all three data collection phases of the study (n = 38 excluded). Thus, the final sample comprised 130 preservice teachersFootnote 3 (96 female, which is typical for this population; Mage = 23.26, SDage = 2.39; Bachelors / Masters students = 35 / 95), from four German universities across two states. We focused our selection on biology teacher students, since video representations depicted instruction on the circulatory system. For ecological validity, students participated in the study within biology teacher education seminars, which lasted 90 min.

We collected data online due to covid-19 restrictions at the time, thus we expected some format-related dropout. However, it remained minimal, with the highest participant dropouts occurring from pretest to training phase (n = 19), and before completing the pretest (n = 8). These early dropout participants (n = 27) did not significantly differ from twenty-seven randomly selected included-participants on any study related preexisting variables, such as previous grades, coursework, or experiences (e.g. tutoring, video in teacher education). This indicates that these early dropout participants did not systematically differ from included participants.

In terms of included participants’ study-relevant knowledge and experiences, the PSTs had taken an average of 14.59 (SD = 9.92) courses in biology, 3.63 (SD = 2.43) courses in teaching biology, and 10.05 (SD = 10.92) courses in general pedagogical-psychology. They also performed similarly low on prior knowledge pretest measures of PPK (0.677; SD = 0.10) and biology PCK (0.675; SD = 0.10). Additionally, more than half of participants (53.8%) had no experience using video for learning during coursework. While participants varied in other study-relevant experiences, the majority tended to have lower experience levels. They had a range of zero to 239 weeks at a school internship (M = 9.51 weeks, SD = 27.57) with most participants having either no experience (n = 21; 16.4%) or one semester or less (n = 85; 66.4%). In terms of participants’ experience teaching their own classes, (M = 10.82 h, SD = 28.01, range = 0—140 h), the majority had taught zero to 20 h (n = 106; 82.8%). Finally, participants’ tutoring experience ranged from zero to 108 months (M = 13.38 months, SD = 23.22), with most having no experience (n = 53; 41.1%) or one year or less (n = 37; 28.7%).

Procedure and design

The study comprised three online data collection phases: pretest, training phase, and posttest, each one week apart. Duration between phases was based on our intention to minimize the disruption our study would cause to the teacher education seminar of our participants, as well as to provide enough time between pretest and posttest measures to reduce the potential for a validity threat from direct practice effects (Shadish et al., 2002).

For the pretest (~ 45 min), after obtaining informed consent, we gathered demographic and experiential information, including their teaching, tutoring, and video analysis experience, and the number of completed teacher education courses. Further, we gathered information on their semester of study, subject foci, and final high school grade. These measures were used in preliminary analysis to assure no a priori group differences.

Next, we measured participants’ professional vision with a video analysis. The task involved watching two short videos and writing open-response comments. Before video viewing, participants were instructed to pay attention to events they thought were educationally relevant for the tutoring context. Next, participants watched the video with no pause or review possibility. After each video, they were asked to comment on at least two events they noticed. For each event, they answered the analysis task open response: (1) describe the noticed tutor behavior/event, and (2) explain its relevance to the teaching–learning process. This open prompt allowed for a range of events to be described and interpreted at the level of the participants’ ability, thus eliciting their professional vision skills in describing and interpreting small group tutoring events they noticed. This procedure was then repeated for the second video. Participants had a minimum time-on-task of four minutes per video with no maximum time limit. The responses to this task and the respective posttest task comprise the qualitative data analyzed for scores of professional vision. Finally, with a multiple-choice test, we assessed participants’ prior knowledge of both pedagogical-psychological concepts (PPK; 24 items selected from Kunina-Habenicht et al., 2020) and biological pedagogical content knowledge (Shulman, 1987) about the circulatory system (PCK; 30 items selected from Schmelzing et al., 2013), obtaining individual measures for participants’ PPK and PCK, respectively, to check for potential a priori differences between the investigated groups.

The training phase (~ 60 min) consisted of a short pre-training text (56 sentences on average, or ~ 1000 words) outlining a set of tutoring strategies for subsequent video analyses application. Before reading, students were instructed to remember the strategies outlined in the text for application in subsequent video analyses in which they should describe and interpret the corresponding strategies they noticed in the videos.

After reading the text, participants watched the videos (different from pretest and posttest videos), with no pause or review possibility. For video viewing, participants were randomly assigned to a signaling cue condition (with signaling cues, n = 63; without cues, n = 67). For the video analyses following each video, PSTs were randomly assigned to a self-explanation prompt condition (focused prompts, n = 71; open prompts, n = 59). In both groups, participants were reminded to apply the content from the introductory text to as many specific examples as they noticed from the video (at least 4), which connected to the tutoring strategies. The instructions then reminded them to describe the tutor’s behavior as specifically as possible and interpret why this behavior was relevant to the context of tutoring.

Participants then evaluated the training on cognitive and motivational measures (i.e., cognitive load, situational interest, and utility value). PSTs’ in-the-moment situational interest in the video task was measured with a validated self-report questionnaire (Knogler et al., 2015) adapted to present study context (see Table 1). The timing of the situational interest measurements occurred directly after PSTs’ completion of the video analysis tasks in order to capture their momentary and situationally triggered response (Rotgans & Schmidt, 2014). Moreover, since the elicitation of situational interest is considered a psychological state comprising both cognitive and affective components (Krapp, 2002; Mitchell, 1993), the instrument operationalizes situational interest to capture both aspects: three items focused on features of attention-oriented cognitive value (i.e., curiosity, attention, and concentration), and three items focused on the positive emotional responses (i.e., entertaining, fun, and exciting) that were generated (Hidi & Renninger, 2006; Knogler et al., 2015). Finally, all items were directed toward the object of interest for the present study, namely, activation from the video analysis task (Hidi & Renninger, 2006; Krapp, 2002).

For other measures, we provide detailed scale information within a further project publication (Seidel et al., 2023).

In the posttest (~ 30 min), participants completed a video-analysis task parallel to the pretest. Afterwards, they evaluated the whole experience with the same cognitive and motivational measures as in the training phase. Upon completion, participants received a written debriefing and compensation (25 Euros).

Materials and conditions

Videos

The development and production process we undertook for the creation of our scripted video learning materials (of the present study and associated project studies) followed an evidence-based approach according to Dieker and coleagues (2009), Kim and colleagues (2006), and Piwowar and colleagues (2018). These resources outline specific phases and step-by-step design and development processes for creating valid scripted video cases for teacher education and professional development.

Moreover, we added a further step to this process to ensure more authentic depictions of tasks, tutor strategies, and dialogues. In this step, we created mock-up teaching scenarios, wherein biology teacher educators and preservice biology teachers gave an individually planned 30-min. lesson on the circulatory system for four 8th grade student actors. In a 25-min. preparation beforehand, the (preservice) teachers were given an assortment of teaching materials for optional use to freely plan their lesson, with the only requirement being that group work or group discussion should be implemented at some point. These lessons were video recorded by the research group.

These mock-up videos were then analyzed by the research group to extract authentic elements that were relevant to the selected tutoring strategies agreed upon from an initial literature review. These tasks, strategies, and dialogue excerpts were then planned into four script storyboards and then developed into scripts, so that scenes would represent a balance of the target learning content in each scenario in a concise way, but also retain sufficient authenticity. Next, enactment of these scripts by real teachers and students as actors were videotaped, and the final versions of the scripted videos were completed after editing. This production process is elaborated in detail in Seidel and colleagues (2023).

We used two videos for the pretest and posttest analysis task. Both videos depicted a circulatory system introductory lesson with one teacher/tutor and four eighth-grade biology students. The first video (elicitation-phase, ~ 4 min.) showed a scene from the beginning of a lesson, and the second video (learning-phase, ~ 4 min.) depicted a scenario from the middle of a lesson (see Martin et al., 2022, for study material details).

There were two different versions of each pretest/posttest video, depicting the same script but performed by different actors. The two video versions were randomly counterbalanced between pretest and posttest to reduce the likelihood of order effects. Moreover, this design reduced the possibility that results would be tied to a particular actor, but rather to the events shown, while also ensuring comparability of pretest and posttest professional vision measures. For the training phase, new elicitation-phase and learning-phase videos were used (elicitation-phase, ~ 8 min.; learning-phase, ~ 6 min.). This reduced the chances of direct practice effects in the posttest, and helped to ensure noticed events would not be tied to any particular video, but rather multiple representations of varied examples.

Preservice teachers’ perceptions of authenticity for the video learning materials were investigated in a preliminary study of the larger research project (Martin et al., 2023). All videos were rated with six items (e.g., “The video was realistic”) on a 4-point scale (1 = do not agree; 4 = fully agree; Piwowar et al., 2018). They found them to be sufficiently authentic (M = 3.00, SD = 0.46). We do not have authenticity ratings from the present study sample, however, we can assume that the previous ratings can be referenced, since the sample used was very similar (i.e., preservice biology teachers in Germany), and since the videos were used in an almost identical setting.

Training phase conditions

The experimental condition variations took place within the training phase. At the beginning, participants were randomly assigned to one of two content-focused conditions: PPK or PCK in biology. Since participants had a range of course experiences in both PPK and PCK, we randomized these texts to minimize sample-wide variance associated with participants’ prior knowledge. The respective groups received a short pre-training text outlining PPK or PCK tutoring strategies. Both texts were parallel in format and described four student-centered strategies beneficial to the tutoring context, contrasted with four instructive-style strategies, less facilitative in this context. Text length was designed according to previous research on tutoring in biology (59 sentences: Herppich et al., 2013). In previous studies of the same project, we found no significant interactions with PPK and PCK groups and the experimental variations (Martin et al., 2022, 2023). Thus, for the present study, we maintained consistency with previous study designs, but did not expect group differences in their professional vision performance and planned to combine them for main analyses.

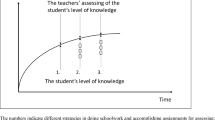

Next, during video viewing, participants watched videos with or without signaling cues. In the cues condition, the videos for analysis displayed keyword cues linking back to the tutoring strategies outlined in participants’ respective introductory text. Before an event began, the video paused for one second, displaying the tutoring strategy that would take place (Fig. 1a). Then the video resumed and the keyword cue remained in the top right corner of the video for the event’s duration (Fig. 1b). The cueing procedure repeated for the subsequent tutoring strategies in the video (elicitation-phase video: seven events; learning-phase video: 5 events). Signaling cues were determined by the research group, based on the events planned and implemented into the scripts during video and introductory text development, along with consensus discussion to determine an equal proportion of salient PPK and PCK events (see example outlined in Appendix A, Table 5). Participants in the condition without signaling cues watched plain versions of the same videos with no displayed cues.

After watching each video, open or focused self-explanation prompts were used for PSTs’ analysis comments (8 min. minimum time-on-task). The focused prompts presented the summary diagram from the introductory text (see Fig. 2a). This diagram version was interactive and participants were instructed to click on a particular tutoring strategy for each event they remembered from the video. Upon selecting a strategy, a pop-up text box appeared, instructing PSTs to describe and interpret that noticed strategy (see Fig. 2b). They were to click and comment on as many specific examples of noticed events/strategies as possible (minimum four per video).

We consider the open prompts condition the control condition, since participants did not receive the diagram, which connected to introductory text elements. Instead, participants were simply instructed to remember any tutoring events they noticed in the video and try to connect them with the introductory text content as best as they could remember. They were given an empty text box and asked to write a short report describing the events they noticed and interpret why they were relevant to the tutoring learning process. Martin et al. (2022) elaborate on the self-explanation prompt condition and provides examples of responses from the open versus focused self-explanation training groups.

Qualitative analysis and professional vision scoring

To analyze PSTs’ video analysis responses from the pretest and posttest, we used scaled qualitative content analysis (Mayring, 2014). More specifically, we used a coding scheme, which captured the content and quality of PSTs’ descriptions and interpretations of noticed events (see Appendix B, Table 6 for details). The coding scheme was adapted from coding protocols of previous project studies, which were developed by the research group to assess the instructional content and professional vision quality of PSTs’ video analysis descriptions and interpretations (Farrell et al., 2022; Martin et al., 2023). The scaled coding categories represent theoretically-based indicators extracted from literature on teacher noticing and professional vision (e.g., Kersting, 2008; Santagata et al., 2007; Seidel & Stürmer, 2014; Sherin & van Es, 2009).

For the professional vision description component, codes rated the level of content focus, detail, and information sophistication of each noticed event with a four-point scale, from unclear (0 points) to differentiated (3 points). The unit of analysis consisted of the response to the first part of the pretest/posttest analysis task open response, which asked participants to describe the tutoring event they noticed. For the professional vision interpretation component, codes rated the sophistication level of analytical argumentation and connection to knowledge-based evidence within the interpretation of each noticed event (0 to 3 points). The unit of analysis consisted of the response to the second part of the pretest/posttest analysis task open response, which asked participants to interpret the relevance of the tutoring event they noticed. In the evaluation of interpretations, we also considered the corresponding description as context, due to the integrated nature of these analyses (e.g. description as cause of predicted effect, Sherin et al., 2008).

All responses (n = 1169) were independently coded by the first author and a second trained rater. Interrater agreement was measured with intra-class correlation (ICC) based on a mean-rating (k = 2), consistency, 2-way mixed-effects model. Consistency scores indicated very good agreement for descriptions (ICC = 0.90) and interpretations (ICC = 0.89) (Koo & Li, 2016). Response examples of participant descriptions and interpretations for each scoring level are provided in Appendix C, Table 7.

Final professional vision scores were determined from this analysis. For each test, participants analyzed two videos, making two to five comments per video. The noticed event per response was categorized according to the tutoring strategy mentioned (Martin et al., 2023). Then, the description and interpretation of the noticed event in each response was analyzed and scored for professional vision on these two components, according to the coding scheme. In some instances, participants described and interpreted more than one event per response. In these cases, each event was scored separately on both components. For pretest and posttest participant-level analysis, mean scores of all comments across both videos were calculated for a participant-level test score on each component. Further, the sum of component mean scores, a total professional vision score, was also determined for analysis of RQ2.

Results

Preliminary analyses

Before our main analyses, we checked whether participants differed across conditions on any preexisting variables. We found that PSTs were not significantly different in knowledge or experience (i.e., high school GPA, (biology) teacher education courses, tutoring or student-teaching, or prior knowledge in PPK or PCK (all p values between 0.09 and 0.98)). Further, as expected, we found no interactions with instructional design techniques and the PPK/PCK content condition (Wilks’ Λ = 0.93, F(6, 226) = 1.48, p = 0.19), so we pooled text groups together for main analyses to increase statistical power. We also found no significant differences in professional vision between the different video versions used for the pretest and posttest (Wilks’ Λ = 1.00, F(2, 113) = 0.30, p = 0.74) or interactions with training conditions (Wilks’ Λ = 0.93, F(6, 226) = 1.38, p = 0.22), so we could assume the counterbalanced conditions did not affect professional vision scores.

Training professional vision and instructional design techniques

For our main analyses, first, we were interested in whether participants’ professional vision improved from pretest to posttest, regardless of training condition (RQ1.1). In addition to PSTs’ improvement overall, we further inquired whether the training instructional design techniques of signaling cues, or focused self-explanation prompts helped PSTs further improve their professional vision (RQ1.2). To investigate these questions, we performed a repeated-measures MANOVA (within-subjects factor: pretest and posttest professional vision scores of description and interpretation; between-subjects factors: cues condition and prompts condition).

Overall, our results on the first part of the first research question indicated that participants’ professional vision performance significantly improved from pretest to posttest (see Table 2 and Appendix D, Fig. 4). We found a medium-sized significant main effect of test time on professional vision (Wilks’ Λ = 0.89, F(2, 125) = 7.91; p = 0.001, partial η2 = 0.11). This effect demonstrated a significant score increase and medium effect size for descriptions (F(1, 129) = 11.06, p = 0.001, partial η2 = 0.08), and interpretations (F(1, 129) = 10.81, p = 0.001, partial η2 = 0.08). These results indicate that in contrast to the pretest, PSTs in the posttest described more noticed events using more video-specific detail, and interpreted these events using more logical connections and knowledge-based evidence to justify their arguments. Moreover, these results support findings from a previous project study, which also found significant professional vision performance improvement on a posttest directly after the training phase (Martin et al., 2022). The posttest video analysis task of the current study was completed one week after the training phase, extending previous findings to indicate at least a short-term duration of transfer effects.

For the second part of our first research question on instructional design techniques, our analysis revealed no significant effect between professional vision change score and the cues condition (PVtime * cues: Wilks’ Λ = 1.00, F(2, 125) = 0.24; p = 0.79) or the self-explanation prompts condition (PVtime * prompts: Wilks’ Λ = 0.98, F(2, 125) = 1.05; p = 0.35). The interaction between cues and focused prompts was also not significant (PVtime * cues * prompts: Wilks’ Λ = 0.98, F(2, 125) = 1.18; p = 0.31). These results indicate that neither signaling cues, nor focused self-explanation prompts, nor their combination contributed to additional improvements in participants’ professional vision scores (see Table 2 and Appendix D, Table 6).

Exploring situational interest moderation (RQ2)

Since situational interest can often have an impact on learning within multimedia environments, we wanted to explore the potential role it might play with the implemented instructional design techniques. Such analysis can uncover when and for whom signaling cues and/or focused self-explanation prompts support or do not support professional vision development, when taking participants’ interest into account.

To answer our exploratory research question, we performed moderation analyses according to Baron and Kenny’s (1986) approach with the PROCESS macro for SPSS (Hayes, 2017). The instructional design technique conditions (signaling cues: RQ2a; self-explanation prompts: RQ2b) represent the antecedent variables in the two moderation models. Participants’ situational interest ratings from the training phase represented the moderating variable, and PSTs’ total posttest professional vision score was the dependent variable. Participants’ pretest total professional vision score was added as a control variable covariate. Situational interest did not significantly correlate with either design technique, so conditions with respect to potential multicollinearity were met. Exploring the interaction between these techniques and participants’ situational interest, both overall models (i.e., including all main and interaction effects) were statistically significant (cues condition: R2 = 0.33, F(4, 125) = 15.52, p < 0.001, MSE = 0.61; prompts condition: R2 = 0.33, F(4, 125) = 15.24, p < 0.001, MSE = 0.62). Table 3 presents the relevant descriptive statistics for the moderation.

In the moderation model for signaling cues, the interaction term was significant (p = 0.012), indicating that situational interest moderated the effect of cues on professional vision (see Table 4). Simple slope analysis revealed differential effects between participants who trained with cues versus no cues (see Table 4). For participants with low situational interest (1 SD below the mean, or rating 2.44 out of 4.00), there was a significant effect of cues on professional vision. Thus, PSTs with particularly low situational interest achieved higher professional vision scores when training with cues compared to no cues. However, for participants with average or high situational interest (rating 3.00 or more out of 4.00), the effect did not remain significant, indicating no cue effects for these PSTs.

Figure 3 depicts the moderation for cues. Since situational interest is mean-centered for analysis, values displayed can be converted to the situational interest scale by adding the mean of 3.00. Further probing of the moderation with the Johnson-Neyman method (Finsaas & Goldstein, 2021; Fraas & Newman, 1997) revealed that for our sample of participants, the region of significance for cueing effectiveness was for PSTs with a situational interest rating range from 1.83 (i.e., sample min., depicted at a mean-centered value of − 1.17) until maximally 2.60 (depicted with the dotted line at a mean-centered value of −0.40), meaning between hardly and somewhat situationally interested. Thus, for below average situationally interested PSTs, training with the instructional design technique of signaling cues supported their professional vision development more than training without cues. This region included 26.2% of our participants (green shaded area). Beyond this point on the situational interest scale (red shaded area), the effect of signaling cues ceases to remain significant for our sample.

This figure shows the visualization of the moderation, demonstrating professional vision scores across the range of participants’ situational interest for PSTs who received signaling cues (yellow line) or no cues (gray line) in the training. Line endpoints represent the situational interest minimum (mean-centered SI = − 1.17, SI = 1.83) and maximum (mean-centered SI = 1, SI = 4) for the observed population. Further points represent the situational interest scores at the mean (centered at 0) and 1 SD above and below the mean

In the moderation model for prompts, the interaction term was not significant, (Prompts * SI: b = − 0.22, t(125) = − 0.87, p = 0.388, 95% CI [− 0.71, 0.28], SE = 0.25) indicating that situational interest did not moderate the relationship between focused prompts and professional vision. We did not further probe the moderation because it did not reach the level of significance (Hayes, 2017).

Discussion

Overall, PSTs’ professional vision improved in the posttest after our video-based training (RQ1.1). Further, the instructional design techniques of signaling cues and focused self-explanation prompts did not provide additional support for improved performance in the posttest for all participants (RQ1.2). However, PSTs with low situational interest who trained with videos that signaled keyword cues had significantly better professional vision performance in the posttest, than low-interested participants who did not receive cues in the training (RQ2).

In the context of other project studies, this study supports evidence from earlier findings and also introduces new evidence. Firstly, these results validate that professional vision skills can improve, even after a short video analysis intervention, which focuses on tutoring instruction and implements an introductory text pre-training (Farrell et al., 2022; Martin et al., 2022, 2023). Further, the present results parallel findings that instructional design techniques implemented in the training were not effective in providing additional professional vision support in a posttest video analysis. A previous study found segmenting and self-explanation prompts to be ineffective for additional professional vision support on the posttest (Martin et al., 2022), while the current study added new results on the impact of signaling cues and focused prompts. Finally, this study offers a unique contribution to our project findings and to the field of multimedia design with our exploration of the moderating role of situational interest. These findings brought nuance to the results regarding the effect of signaling cues. It seems that the cueing effect might compensate for the generative processing boost typically associated with situational interest to offer support specifically to low situationally interested PSTs. In the following, we further discuss the results, implications, limitations, and future directions for each research question.

Training Professional Vision (RQ1.1)

The first part of our first research question investigated the extent that PSTs’ professional vision skills improved from before a video analysis training to a subsequent video analysis posttest. We found PSTs significantly improved their descriptions and interpretations of relevant noticed events. PSTs noticed more events and made more focused and detailed video-specific descriptions of pre-trained tutoring actions. Further, they made clearer logical connections and used more knowledge-based evidence to support their inferential claims. This effect was independent from training conditions.

These findings were a replication from an earlier study of the research project, which found similar outcomes from a posttest directly after the training phase intervention (Martin et al., 2022). In the current study, we implemented a delayed posttest, one week after the intervention. The replication of similar results adds further support to the sustainability of effects. However, while we found overall improvements, PSTs’ performance was still at the lower range of professional vision scoring, also mirroring results from previous studies (Farrell et al., 2022; Martin et al., 2023). This demonstrates PSTs’ limited professional vision skills, further emphasizing the need for professional vision interventions in teacher education.

Evidence from previous project studies offers some indications on how the training helped PSTs improve their professional vision from pretest to posttest (Farrell et al., 2022; Martin et al., 2023). These studies suggest elements which likely offered support: pre-training texts and the use of scripted videos. First, the training phase began with a pre-training in the form of a short introductory text outlining specific tutoring strategies for application. Previously, we found that the text seemed to help with noticing content-specific events and increased knowledge-based interpretations (Martin et al., 2023). Secondly, the videos were designed for novice training according to the Framework for Teaching Practice in Professional Education (Grossman et al., 2009) and followed evidence-based development guidelines (e.g., Piwowar et al., 2018; Seidel et al., 2023). They were short and depicted an instructional context still applicable to the general classroom, but with reduced complexity (e.g., scripted scenes, fewer people). Moreover, in a preliminary project study, participants perceived the videos as sufficiently authentic (Martin et al., 2023). Further, the scripted videos were designed to be closely aligned with the learning goals and to exemplify the theoretical principles from the text within a concentrated format (Blomberg et al., 2013; Piwowar et al., 2018). Thus, these videos seemed to help PSTs pay more attention to individual students and connect knowledge from the texts to their interpretations of noticed events (Farrell et al., 2022). Still, one must be cautious to attribute effectiveness to only this particular combination of elements.

Additional caution should be considered due to design. At the training level, we did not have a group who received no training due to ethical concerns that it would prevent some PSTs from experiencing a potentially valuable learning opportunity. Thus, the single group pretest–posttest design for RQ1.1 introduces potential validity concerns (e.g., history/maturation, practice/testing, instrumentation, mortality; Campbell & Stanley, 1963; Shadish et al., 2002). However, we took several measures to reduce risks that other factors could have influenced findings. Since the time between pretest and posttest was two weeks, we consider it a low risk that improvements occurred due to a natural learning process over time (maturation), or that improvements occurred outside the control of the intervention (history) considering our intervention was an add-on to teacher seminars. To take precautions against direct practice effects, we used the same content in the pretest and posttest videos, but depicted by different actors. Moreover, the pretest and posttest occurred two weeks apart and PSTs trained with different videos in between. Instrumentation bias did not likely play a role, since coders were blind to the test phase and condition, and coding sequence was randomized. In terms of participant attrition (mortality), early dropouts did not significantly differ on multiple study-relevant measures, indicating that the remaining sample would not have a positive bias toward performance.

While we suggest to take caution in the interpretation of these results, the measures we took against potential biases offer some confidence to the validity of our findings. Still, we recommend that future studies investigating interventions modeled after the present study include a waiting-list control group. Further studies could also experimentally investigate different combinations of training phase features to elucidate stronger causal evidence of effectiveness mechanisms. Moreover, we assumed that preservice teachers’ perception of video authenticity would have been similar to the measure we obtained from a similar population using the same videos in an almost identical setting (Martin et al., 2023). However, we suggest future researchers always obtain authenticity measures when using scripted videos to assure that these learning materials have sufficient authenticity for their intended purpose. We also suggest future research investigate the motivational impact of different video learning materials. Perhaps authentic videos elicit higher motivation compared to scripted videos, which could have implications for instructional design.

We recommend practitioners develo** video-based trainings to explicitly consider evidence-based design guidelines for instruction with video (e.g., Blomberg et al., 2013; Kang & van Es, 2019). Further, they should think about associated implications for the target learner and context. Tailoring instructional elements accordingly should make trainings more successful. For example, using the Framework for Teaching Practice in Professional Education (Grossman et al., 2009), video analysis trainings could begin with less authentic, yet also less complex scripted videos. Then, step-by-step, trainings could evolve on the authenticity continuum, next with the use of authentic video for analysis, then to noticing and reasoning during classroom observations, and finally in-the-moment professional vision practice during one’s own lesson.

Instructional Design Techniques (RQ1.2)

The second part to our first research question investigated the additional effects of signaling cues (RQ1.2a) or focused self-explanation prompts (RQ1.2b). We found no additional benefit to PSTs’ professional vision performance for either instructional design technique in general. For both conditions, the expected effects may not have occurred due to a close design alignment, and thus familiarity between the unsupported video analyses (i.e., no cues, open prompts) in the test phases and training control conditions. This influence might have mitigated any chance to observe differential effects in the cues or self-explanation prompts conditions, respectively.

A further explanation may be that their supportive benefits were short-lived. Perhaps these techniques were effective, but only during the training phase in which they were implemented. Results of our previous study suggested that effects were especially evident during training, but they did not transfer to the posttest (Martin et al., 2022). Thus, it could be similar for the present study. Further research on the direct effects of cues and focused prompts during training would help to clarify their short-term impact. If similar patterns emerged, this would shed light on the support duration that these and similar techniques might have in the video analysis context. Along similar lines, further investigation could extend the length of training across several weeks, with the support from instructional techniques systematically faded out (Martin et al., 2022; Paas et al., 2004).

Signaling cues (RQ1.2a)

Professional vision training first involves attentional processing of information within the video material. Signaling cues support learning by directing attention to important information (Bétrancourt, 2005), thus we expected they could offer support. Research in learning with dynamic multimedia (e.g., animation) suggests that cues are most facilitative for lower cognitive processing skills (e.g., selecting; de Koning et al., 2009), so we assumed they could be particularly facilitative for professional vision descriptions of noticed events. However, this was not the case.

In our later discussion of the exploratory moderation, we will consider how signaling cues were effective for some participants. However, for others, the cues did not have the effect we expected. Perhaps the simultaneous concentration on the cues together with the transient flow of video information overextended PSTs’ processing capacity or distracted learners’ attention away from important video information (i.e., split attention effect, Ayres & Sweller, 2005; Chandler & Sweller, 1992), even with the cues integrated into the video as suggested by Moreno (2007).

Alternatively, the split-attention effect may not be the central reason, and other factors specific to video-based noticing could be involved. As novices with limited experiential knowledge, PSTs likely have incomplete schemata of what particular teaching strategies may look like in practice (Hogan et al., 2003; Peterson & Comeaux, 1987). Thus, their knowledge of abstract conceptual and procedural strategy characteristics might have been difficult to map onto video-based depictions without the help of representations from memory. Perhaps PSTs who did not have signaling cues were not burdened with high visual search and representational holding (Mayer & Fiorella, 2014; Sweller, 2010) to deductively find a signaled event. Rather, they could openly let video information trigger their recall of conceptual characteristics and inductively map noticed patterns to one event from many potentially relevant ones (e.g., goal-free effect; Sweller et al., 2011).

Self-Explanation Prompts (RQ1.2b)

We investigated focused self-explanation prompts as a technique to support PSTs in video analysis training. In contrast to open prompts, we expected focused prompts to offer more professional vision support by explicitly directing PSTs to make connections between the theoretical learning material and noticed video events (Chi, 2009). Specifically, for PSTs’ interpretations, we expected focused prompts to activate important concepts associated with the tutoring strategies from the texts, and to help PSTs apply this knowledge when making sense of noticed events. However, we did not find evidence of additional professional vision support from focused prompts.

Self-explanation prompts, in general, may not have fostered professional vision due to the complexity of the teaching context. Research suggests that self-explanation prompts are best suited for learning in well-defined domains (Rittle-Johnson & Loehr, 2016) wherein categories are reliable and general heuristics can be clearly implemented in examples (e.g., physics, Williams et al., 2013). However, teaching examples can be unreliable in the sense that recognizing principles, or depictions of theoretical tutoring strategies, may not be so straightforward, due to the situated nature of the practice (McDonald et al., 2013). Teacher decision-making typically involves simultaneous processing of context-specific information from multiple sources and perspectives. This can lead to many conditional circumstances or exceptions to general guiding principles (Williams et al., 2013). Thus, with their limited experience, PSTs may have found it more difficult to elucidate depictions of tutoring strategies based on their theoretical descriptions. Moreover, this complexity can increase processing demands and lead to oversimplifications within self-explanations (Williams et al., 2013), thus limiting professional vision performance.

Assuming both self-explanation prompts shared this difficulty, we expected focused prompts to be less difficult, since they included a diagram reminder of the target tutoring strategies from the text. However, this was not the case. Perhaps the effect of the focused prompts was not realized because the additional information in the diagram added more potential for overwhelming PSTs’ processing capacity (e.g., coherence principle, Mayer & Fiorella, 2014). While trying to remember what they noticed in the video, PST were confronted with eight different strategies in the diagram that they also needed to recall and connect to the noticed events. If some of the tutoring strategies were unfamiliar, processing the diagram would involve further efforts to remember theoretical characteristics from the text, rather than aid in quick recall of established mental models.

Situational Interest Moderation (RQ2)

Situational interest is a relevant motivational variable to explore in multimedia learning because it is typically elicited from external elements of the learning environment (Hidi & Renninger, 2006). Since signaling cues and focused prompts did not make an impact in professional vision support overall, our next question explored how PSTs’ situational interest interacted with cues and prompts, respectively, and whether these design techniques had differential effects on professional vision performance for participants with high or low situational interest. Descriptive results showed that situational interest could impact performance, demonstrating a significant positive relationship to PSTs’ professional vision scores overall (see Table 3). Our moderation analysis revealed that situational interest significantly moderated the relationship between cues and professional vision performance, but the moderation was not significant for focused prompts and professional vision. These results indicated that participants with low situational interest had significantly higher professional vision scores when they trained with cues versus without cues. This suggests that signaling cues were effective for more than one-fourth of our participants in the cues condition, namely those with low situational interest.

Situational interest can direct attention, boost cognitive functioning, and encourage task engagement (Hidi & Renninger, 2006; Moreno & Mayer, 2010). Since this motivational variable was related to participants’ overall professional vision performance, these characteristics could have positively influenced interested PSTs’ generative processing. However, participants who were not situationally interested likely missed out on this potential. With the cues/no cues differential performance from low interested PSTs, we estimate that cues could have compensated for situational interest. As intended, the cues likely boosted their attention to the specific tutoring strategies that were taking place, giving them a specific target to search for (Bétrancourt, 2005). In turn, their directed attention could have led them to notice more details and make more explicit connections to their knowledge about the tutoring strategy. However, without these cues to trigger and guide specific targets of attention, uninterested PSTs likely made little effort to fulfill the task.

For the instructional design technique of focused prompts, we did not find similar moderation effects. We attribute this to the similarity of constructive elicitations from both prompt formats. Situational interest can be activated when learners become aware of a knowledge gap that can be filled through their efforts in a given task (i.e., knowledge-deprivation hypothesis, Rotgans & Schmidt, 2014). Constructive self-explanation prompts may help learners to not only reveal what they already understand in their explanations, but also expose gaps in their mental models or inconsistencies between their own conceptions and the new material (Chi, 2000), thus potentially triggering situational interest to fill these gaps. We hypothesize that if we had compared a constructive prompt group to a different control group with, for example, more passive prompt format (e.g., menu-based), we might have found a moderation effect.