Abstract

The disruptions to health research during the COVID-19 pandemic are being recognized globally, and there is a growing need for understanding the pandemic’s impact on the health and health preferences of patients, caregivers, and the general public. Ongoing and planned health preference research (HPR) has been affected due to problems associated with recruitment, data collection, and data interpretation. While there are no “one size fits all” solutions, this commentary summarizes the key challenges in HPR within the context of the pandemic and offers pragmatic solutions and directions for future research. We recommend recruitment of a diverse, typically under-represented population in HPR using online, quota-based crowdsourcing platforms, and community partnerships. We foresee emerging evidence on remote, and telephone-based HPR modes of administration, with further studies on the shifts in preferences related to health and healthcare services as a result of the pandemic. We believe that the recalibration of HPR, due to what one would hope is an impermanent change, will permanently change how we conduct HPR in the future.

Background

The COVID-19 pandemic has brought worldwide disruption to health research involving primary data collection due to restrictions on healthcare practices and research outside of COVID-19 and other urgent healthcare, shielding of vulnerable participants, travel restrictions, and work from home mandates. The first wave of COVID-19 infections began in early 2020, yet many countries are currently experiencing subsequent waves of infections. Each wave brings different restrictions due to a better understanding of the virus mutations and its spread. The timing of these waves, along with the severity and extent of government response, cannot be accurately predicted in advance. Altogether, this creates considerable challenges to health preference research (HPR), which involves direct primary data collection from individuals and the analysis and interpretation of that data.

For the purposes of this commentary, HPR is defined as research that focuses on the elicitation of preferences from individuals about health and healthcare services. The individuals involved in preference elicitation studies include pediatric and adult patients, caregivers, healthcare professionals, and members of the general population. The aims of HPR studies are diverse and include eliciting preferences for hypothetical health states, healthcare, or health outcomes from members of the general population, patients, or direct or indirect caregivers [1]. The distinction between HPR and health-related quality of life (HRQOL) research is important. HRQOL research is concerned with assessing the impact of health on an individual’s ability to live a fulfilling life, and includes a dynamic interplay of concepts of physical, psychological, social, and sexual well-being. HPR is concerned with values and preferences regarding these states and aspects of HRQOL, and is a specialized type of HRQOL research, with origins in economics in addition to educational and psychological measurement [1,2,3]. This research remains important and will continue to be relevant throughout the pandemic and beyond.

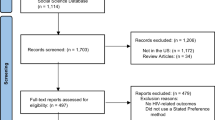

HPR has faced three key challenges due to the COVID-19 pandemic. First, the recruitment of participants may be impacted by sampling issues and lower response rates due to research fatigue and decreased willingness and ability to participate in HPR [4]. Second, the most commonly used mode of data collection in HPR, specifically in health state valuation, has traditionally been face-to-face interviews. During the pandemic, such interviews are unlikely to be advisable or possible and may be considered “non-essential.” Evidence on the use of online HPR to elicit health state values, and on the equivalence of online and face-to-face modes of administration in terms of those values, emerged in the pre-pandemic era (for example, [5,6,7,8]). Provided data quality and sample representativeness are achieved in online HPR studies, there is little reason why health state valuation cannot be conducted online both during and after the pandemic. Notably, the non-representativeness of the participant sample, while common to face-to-face and online HPR, is more pronounced in online HPR. Further, online HPR makes it difficult to evaluate participant engagement and provide as-needed support, which calls the reliability of the data into question [9]. Issues pertaining to data quality and sample representativeness need to be addressed, particularly for iterative techniques, such as time trade-off, that were historically undertaken face-to-face. Several checks and careful study designs can be implemented to minimize these threats to the data; they are discussed in the subsequent sections of this commentary. However, to date, no best practice guidelines for designing and conducting online HPR exist (reporting guidelines can be found in [10]). This is in contrast with HRQOL research, where there is substantial literature on the potential sources and assessment of measurement equivalence of online and in-person modes of administration [11].
Third, data interpretation may be confounded due to the temporary or long-term impact of the pandemic on preferences for health, health states, and healthcare [13,14,15,16]. While this effectively indicates a logistic criterion to participant selection (i.e., the individual is able to attend an in-person visit at the local university or hospital), this has not generally been felt to diminish the generalizability of the health preferences to the population under consideration. There are exceptions to this, in that individuals who belong to a vulnerable population [17, 18], including the economically disadvantaged, ethnic minorities, gender and sexually diverse, elderly, homeless, people with chronic or severe health conditions (e.g., mental illness), and residents in rural areas with limited access to healthcare services, could become de facto exclusion criteria for participation in HPR. The longstanding challenge of incorporating these individuals to provide a more meaningful set of health preferences has been exacerbated during the pandemic [19]. Participants previously not considered vulnerable, such as healthy elderly participants, may be regarded as vulnerable during the pandemic. Further, existing vulnerabilities of the participant population may be worsened. Figure 1 provides a summary of vulnerability considerations that are important for HPR during the pandemic.

A strategy to alleviate the recruitment concerns is to pivot HPR entirely to an online format for population-based studies with pre-set recruitment quotas. This may help ensure that the study sample reflects the national sociodemographic and clinical characteristics. Vulnerable individuals from minorities and rural areas may be engaged in HPR through community-based formal and informal organizations and stakeholders, such as places of worship, religion study groups, community centers, and through community leaders, chiefs, and elders [20]. This strategy has rarely been used in population-based or clinical HPR but has been widely used in community-based participatory research. Community-academic-funder partnerships could be used to institute sustained access to desktop or mobile devices, internet connectivity, and technical support. This can result in longitudinal retention of participants and, more importantly, rural research infrastructure. Furthermore, certain vulnerable populations and people of working age may be more likely to engage in HPR during the pandemic due to working from home, leading to time savings associated with work-related travel, flexible working hours, and increased awareness of the role of research in improving public health. However, this is likely to differ by country, industry, and occupation since not all jobs can be undertaken at home. Individuals who may be reluctant to participate in online HPR may include those with increased burden due to lack of childcare and school closures, who have assumed a primary caregiver role due to partners or family members being essential workers or infected with COVID-19, or those experiencing psychosocial distress due to job loss or financial stresses.

The COVID-19 pandemic may introduce selection bias based on the potential participant's perceived risk of COVID-19 infection. To elaborate, a study involving an in-person mode of administration during the pandemic may unintentionally recruit a higher number of individuals who are “risk-tolerant” compared to individuals who are “risk-averse.” However, the use of ethically appropriate non-stochastic monetary incentives to enroll in the study may be employed to recruit a more risk-averse sample [21]. Additionally, there may be a long-lasting reluctance for individuals who are considered “high-risk” to participate in non-urgent health research, including HPR. This may generally occur because members of the general public may self-identify as high risk in a way that is unknown to the researchers, thereby excluding an essential segment of the underlying population from in-person HPR. This threat is further exacerbated when eliciting health preferences for a population defined by a health condition. These individuals may already be considered high risk and, therefore, unable or unwilling to participate in in-person HPR. The use of financial incentives may be increased to offset the economic uncertainty that individuals may be facing; however, they must be balanced with the effort required to avoid becoming an inducement to participate or encourage risk-taking behaviors for research participation during the pandemic.

A critical decision for recruitment will be whether individuals who have been infected with the COVID-19 virus in the past and have recovered should be asked to self-identify in population-based studies or be included in comparative effectiveness research. Further, research fatigue [4] may set in, especially as many members of the general population are eager to participate in trials assessing the effectiveness of various COVID-19 vaccines, potentially reducing the pool of participants available for HPR. Lastly, whether participation in COVID-19-related trials affects health state valuations and the ability to render preferences in an unbiased manner should be considered. Efforts to retain participants in longitudinal HPR initiated before or during a pandemic will be crucial to examining the differences between pre- and post-pandemic health state valuations.

Data collection

HPR is concerned with understanding and measuring the priorities and preferences of patients, caregivers, and other stakeholders. Consequently, the data collection methods in HPR have relied on direct participant involvement through in-person face-to-face interviews, telephone or written surveys, and, more recently, online surveys and remote interviews (i.e., interviewer-assisted online interviews). The inherent challenges of collecting self-report data during the pandemic also apply to HPR. The modes of data collection in HPR can be classified into five main categories: (a) in-person, interviewer-assisted using physical props [22], (b) in-person, interviewer-assisted using a computer [28,29]. The interviewer-assisted administration can be executed using interaction elements (e.g., visual representations, labels, animations), physical props (e.g., chance or choice board, time trade-off board, feeling thermometer), or computer programs, resulting in improved respondent engagement and understanding [30]. The use of computers allows researchers to build in the logic of the iterations required for the valuation tasks and to use graphics. It also eliminates data entry, making it less resource intensive while affording the same level of decision support as physical props. Further, computer algorithms can be programmed to allow for real-time data checks and analyses. However, research involving in-person methods is particularly challenging to implement during the pandemic and may be temporarily discontinued based on regional or country-specific shelter-in-place orders. If in-person data collection can proceed with physical distancing precautions, the recruitment rate may still be affected by the factors described in the recruitment section (Fig. 1).

The in-person mode of administration is highly discouraged in regions with higher numbers of COVID-19 cases per capita; however, specific measures can be put in place to protect the participant and the researcher in regions with lower cases per capita. Figure 2 includes some practical steps that may be implemented to reduce the risk of COVID-19 transmission and alleviate some of the psychological distress that may be associated with in-person research visits during the pandemic. The remote, interviewer-assisted modes of administration (c–e) are a compelling alternative to the in-person method during the pandemic and beyond. In this method, the interviewer uses online video-conferencing platforms and applications (e.g., Zoom, Microsoft Teams, Google Meet, Cisco Webex, Adobe Connect, and telemedicine applications) with collaborative features (screenshare, audio and video call, chat option) to simulate an in-person interview. The remote method allows data collection to continue regardless of shelter-in-place orders while offering the same level of interviewer support to the participant as the in-person method. The potential advantages of the remote, online method are the elimination of research travel and the associated COVID-19 exposure risks and costs, anonymity that may result in endorsement of socially undesirable views, inclusion of a geographically diverse sample (including rural participants), flexibility in scheduling the interviews outside office hours, and safety of the interviewer and interviewee. However, potential disadvantages include suboptimal internet connectivity, distractions in the environment, lack of rapport building, and varying levels of digital literacy among participants [31, 32]. Further, individuals who are working remotely may be reluctant to participate in order to avoid “Zoom fatigue” [33], a term used to describe exhaustion associated with computer-mediated communication.
A further limitation is that the remote method may exclude individuals who have limited digital literacy or who come from low socioeconomic backgrounds and may not have access to electronic devices or a stable internet connection. However, depending on the available resources, this may be addressed by providing participants with portable or desktop computers, a portable device charger (i.e., power bank), and pre-paid Wi-Fi cards. Another option that is increasingly being explored in HPR is the use of an online, self-report mode of administration, whereby the participant clicks on a weblink and is directed to the survey without an interviewer present. The online method is suitable for international, multicenter studies and is the least resource-intensive method as it omits the costs associated with a trained interviewer. It is also associated with minimal to no social desirability bias. We anticipate that some studies that have employed the online mode of administration for HPR, especially those that pivoted from face-to-face to online data collection, will be published in the near future.

Using an online, self-report mode of administration requires meticulous planning in terms of selecting a survey tool that can be accessed across different operating systems (e.g., macOS, Windows, Linux), electronic devices (tablets, laptops, or desktop computers) and has accessibility features (e.g., change font size or color, background color, Help option). Based on our team’s experience with designing and conducting online HPR, we outline the following key considerations for readers when designing an online HPR study. To optimally engage with low-skilled and low literate users, special attention should be paid to visual (e.g., vivid or bold graphics, large icons, pictures, pictographs, low clutter, color coding, and signposts to direct to next steps), audio (e.g., slow, clear, loud speech, ability to pause or repeat audio, automated voice-based text recognition, option to record audio and store information for open text-based questions), and text (e.g., short and simple sentences, enabling input in primary language) components [34]. Pre-launch, cognitive debriefing interviews with the population of interest should be conducted to ensure plausibility and comprehensibility of the HPR tasks, such as health and healthcare descriptions. A soft launch (more than one soft launch may be necessary) should be planned to allow the researchers to check the validity of the survey design, check the consistency of responses, and estimate a reasonable survey completion time. The introductory email should clearly explain the purpose of the study framed in terms of individual or societal benefit during and beyond the pandemic, approximate completion time, the end date of the survey, and financial incentive (if any). After the study is launched, the response rates should be monitored to target missing demographics in the next cycle of recruitment and identify times during which the population of interest is likely to complete the survey. 
While the steps mentioned above are required to ensure rigor in online HPR studies irrespective of the pandemic status, they take prominence during the pandemic to ensure that participants who may already feel overstrained in their personal lives understand the purpose of the survey, engage and complete the study, and are attentive and considerate in their responses.
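The response-rate monitoring described above can be illustrated with a small sketch. It assumes, purely for illustration, that each completed survey is tagged with a single stratum label (here, hypothetical age bands) and that the study has pre-set a target number of completes per stratum; neither the labels nor the targets come from any specific study.

```python
from collections import Counter


def quota_shortfall(completed, targets):
    """Return how many more completes each stratum needs to reach its quota."""
    counts = Counter(completed)
    # A stratum that has met or exceeded its target has a shortfall of zero.
    return {
        stratum: max(target - counts[stratum], 0)
        for stratum, target in targets.items()
    }


# Hypothetical quotas and completes, for illustration only.
targets = {"18-34": 3, "35-54": 3, "55+": 2}
completed = ["18-34", "18-34", "35-54", "18-34", "18-34"]

shortfall = quota_shortfall(completed, targets)

# Strata listed from largest to smallest shortfall, to guide the next recruitment cycle.
priority = sorted(shortfall, key=shortfall.get, reverse=True)
print(shortfall)
print(priority)
```

In practice, quotas are often defined on several characteristics at once (for example, age by sex by region), in which case the stratum label could be a tuple rather than a single string.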

An important consideration in the online method of recruitment and mode of administration is that the use of incentives can increase the likelihood of potential malicious responding from bots [35]. Hence, frequent data checks should be conducted to check for impossible or exactly identical timestamps, identical, nonsensical or illogical responses (especially to open-ended questions), and time taken to complete the survey. Strategies to deal with bots in survey data have been published in the literature [36, 37].
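As a rough illustration of the data checks listed above, the following sketch flags responses for manual review. The record layout (`id`, `start`, `end`, `open_text`) and the 120-second minimum completion time are assumptions made for this example, not values from the cited literature; an appropriate threshold would need to be set from a soft launch of the specific survey.

```python
from collections import Counter
from datetime import datetime


def flag_suspect_responses(responses, min_seconds=120):
    """Return {respondent_id: [reasons]} for responses that merit manual review."""
    # Count how often each submission timestamp and each open-ended answer occurs.
    end_counts = Counter(r["end"] for r in responses)
    text_counts = Counter(
        r["open_text"].strip().lower() for r in responses if r["open_text"].strip()
    )
    flags = {}
    for r in responses:
        reasons = []
        duration = (r["end"] - r["start"]).total_seconds()
        if duration < 0:
            reasons.append("impossible timestamps")
        elif duration < min_seconds:
            reasons.append("completed too quickly")
        if end_counts[r["end"]] > 1:
            reasons.append("identical submission timestamp")
        text = r["open_text"].strip().lower()
        if text and text_counts[text] > 1:
            reasons.append("duplicate open-ended answer")
        if reasons:
            flags[r["id"]] = reasons
    return flags


# Hypothetical records, for illustration only.
responses = [
    {"id": "r1", "start": datetime(2021, 3, 1, 10, 0, 0),
     "end": datetime(2021, 3, 1, 10, 12, 0), "open_text": "I value mobility most."},
    {"id": "r2", "start": datetime(2021, 3, 1, 10, 5, 0),
     "end": datetime(2021, 3, 1, 10, 5, 40), "open_text": "good survey"},
    {"id": "r3", "start": datetime(2021, 3, 1, 10, 5, 0),
     "end": datetime(2021, 3, 1, 10, 5, 40), "open_text": "Good survey "},
    {"id": "r4", "start": datetime(2021, 3, 1, 11, 0, 0),
     "end": datetime(2021, 3, 1, 10, 50, 0), "open_text": ""},
]

flags = flag_suspect_responses(responses)
for respondent_id, reasons in flags.items():
    print(respondent_id, reasons)
```

A flag here is only a prompt for review: genuine participants can also finish quickly or give short, similar answers, so exclusion decisions should rest on several indicators together.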

Given the broad penetration of cellphones, telephone-based communication can broaden the inclusion in HPR of under-represented individuals, especially those from socioeconomically deprived areas. However, telephone-based administration has been deemed inappropriate for HPR-related tasks that require a visual prompt or for considerable information to be read aloud by the interviewer. There may be opportunities to improve access to HPR surveys by making them mobile-interface friendly (whenever possible). Emerging evidence suggests that for studies eliciting preferences using online methods (e.g., discrete choice experiment), there is little to no variability in preferences or choice behavior associated with the device on which the survey is accessed [38].

Previous studies have found that online self-report HPR-related data are associated with a higher completion rate than interviewer-based methods. However, a greater tendency to endorse central and extreme values (i.e., 0, 1, −1) has also been observed [6]. This may be partly due to the complex nature of the task, where participants may respond with more uncertainty without the guidance of an interviewer. A tendency to provide higher absolute values for health states and higher estimates for negative effects of attribute levels has also been noted [6]. Data quality may also be affected by the cognitively burdensome nature of HPR-related tasks, which may cause participants to feel less committed to giving carefully formed responses or to look for shortcuts to move quickly from one health state to the next, generally by reporting indifference between options [26]. Research to understand the impact of the self-report mode of administration on the reliability and validity of preference values is emerging. Strategies proposed to date to improve data quality in self-report preference elicitation surveys include (a) imposing a minimum number of iterations or trade-offs to be completed before task completion [26], (b) providing the option to “repair the error” by highlighting inconsistencies [39], (c) including attention check questions within the valuation task [40], (d) explaining the purpose of the study clearly and convincingly, and (e) providing financial incentives based on the number of tasks completed or time spent [6].
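One simple way to monitor the clustering at central and extreme values noted above is to compute the share of valuations that fall exactly on −1, 0, and 1. The sketch below is a minimal illustration; the function name and the example values are hypothetical and do not come from any of the cited studies.

```python
def share_at_focal_values(values, focal=(-1.0, 0.0, 1.0), tol=1e-9):
    """Return the proportion of valuations that fall on each focal value."""
    n = len(values)
    if n == 0:
        return {f: 0.0 for f in focal}
    # Compare with a small tolerance to avoid floating-point mismatches.
    return {f: sum(abs(v - f) <= tol for v in values) / n for f in focal}


# Hypothetical health state valuations from an online sample, for illustration only.
online = [1.0, 1.0, 0.0, -1.0, 0.85, 1.0, 0.0, 0.5]

shares = share_at_focal_values(online)
print(shares)
```

Comparing these shares across modes of administration, or across soft-launch and main-launch samples, may help indicate whether respondents are taking shortcuts, although a high share alone does not establish low data quality.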

Data interpretation

The interpretation of HPR data collected before, during, and post-pandemic has its own unique challenges. First, concerns arise over the generalizability, validity, and reliability of HPR data collected during the pandemic relative to pre-pandemic studies. For example, people may use different appraisals or internal standards to evaluate underlying health preferences and norms as a consequence of COVID-19 due to factors such as increased perceived health risk [41] or higher levels of psychological distress [42]. This will have significant implications for the interpretation of comparative effectiveness research data. It will be challenging to ascertain if changes in utilities, such as those generated using the EQ-5D, are due to the effects of the intervention alone or intervention and the effects of the pandemic (for example, anxiety/depression or usual activities could be impacted by COVID-19 rather than the intervention only). Second, while we expect preference shifts to have occurred during the pandemic, it is unclear how long shifts in preferences may last in the post-pandemic era. Lastly, HPR data collected from particular populations (i.e., those theorized to be most affected by the ongoing pandemic) or incorporating health or healthcare descriptions with an apparent association with the COVID-19 infection may be hypothesized as most likely affected by shifts in preferences. In particular, some health state classification systems may include symptoms that have a direct conceptual overlap with COVID-19, such as difficulty breathing, fever, or a persistent cough. These attributes may be temporarily evaluated differently by participants as a result of the pandemic.

Recommended solutions to HPR data interpretation as impacted by the COVID-19 pandemic include comparing acquired data to pre-existing data, wherever possible, and assessing any irregularities or unexpected deviations from a priori expectations and hypotheses. Relatedly, it is crucial to report the findings in context, stating clearly when the HPR data were collected and disclosing the potential impact of the pandemic on participants’ health preferences. Additionally, where possible and justified, researchers should consider conducting interim data analyses to assess the impact of the pandemic on the HPR data and make corrective adjustments to their data collection protocol, if necessary. As the data collection evolves from before, during and post-pandemic, researchers should make adjustments in their analyses to account for biased preferences, such as screening for and controlling for reported exposure to COVID-19, both directly and indirectly. Finally, and where relevant, such as in HPR data supporting the derivation of utility weights for a preference-based measure, researchers may recommend that data are recollected post-pandemic (and once potential shifts in preferences due to the pandemic are no longer present) to be compared to the original dataset and findings.

Conclusions

The COVID-19 pandemic has undoubtedly disrupted HPR in numerous ways. Yet, it has also offered opportunities by forcing innovation concerning equitable and digital inclusion of the general population, online modes of administration, and response shifts within the HPR context. The pandemic has highlighted the urgency and significance of investing capital and personnel resources in engaging individuals with low literacy, from rural or remote areas, and diverse cultures in clinical research. Future studies should continue to develop guidance regarding methodological considerations and data quality issues, specifically concerning online modes of data collection. Additionally, more research is needed to explore the impact, extent, and assessment of response shift on preferences, characterized by sociodemographic characteristics of the study population (e.g., age, gender, educational status, income level, risk perception).

To conclude, this commentary explores the emerging challenges and offers potential solutions for the successful selection and recruitment of research participants, as well as collection and interpretation of HPR data in the context of the pandemic. We expect that the changes that occur in HPR due to the pandemic will shift the paradigm of HPR, especially towards using online methods for recruitment and data collection, in the foreseeable future.

Data availability

Not applicable.

Abbreviations

- HPR: Health preference research
- HRQOL: Health-related quality of life
- PPE: Personal protective equipment

References

Craig, B. M., Lancsar, E., Mühlbacher, A. C., Brown, D. S., & Ostermann, J. (2017). Health preference research: An overview. The Patient-Patient-Centered Outcomes Research, 10(4), 507–510.

International Society for Quality of Life Research. (2016). What is health-related quality of life research. Available at http://www.isoqol.org/about-isoqol/whatis-health-related-quality-of-life-research. Accessed March 14, 2021

Clancy, C. M., & Eisenberg, J. M. (1998). Outcomes research: Measuring the end results of health care. Science, 282(5387), 245–246.

Patel, S. S., Webster, R. K., Greenberg, N., Weston, D., & Brooks, S. K. (2020). Research fatigue in COVID-19 pandemic and post-disaster research: Causes, consequences and recommendations. Disaster Prevention and Management: An International Journal, 29(4), 445–455.

Sullivan, T., Hansen, P., Ombler, F., Derrett, S., & Devlin, N. (2020). A new tool for creating personal and social EQ-5D-5L value sets, including valuing ‘dead.’ Social Science & Medicine, 246, 112707.

Norman, R., King, M. T., Clarke, D., Viney, R., Cronin, P., & Street, D. (2010). Does mode of administration matter? Comparison of online and face-to-face administration of a time trade-off task. Quality of Life Research, 19(4), 499–508.

Norman, R., Mercieca-Bebber, R., Rowen, D., Brazier, J. E., Cella, D., Pickard, A. S., Street, D. J., Viney, R., Revicki, D., King, M. T., & European Organisation for Research and Treatment of Cancer (EORTC) Quality of Life Group and the MAUCa Consortium. (2019). UK utility weights for the EORTC QLU-C10D. Health Economics, 28(12), 1385–1401.

Rowen, D., Brazier, J., Keetharuth, A., Tsuchiya, A., & Mukuria, C. (2016). Comparison of modes of administration and alternative formats for eliciting societal preferences for burden of illness. Applied Health Economics and Health Policy, 14(1), 89–104.

Mulhern, B., Longworth, L., Brazier, J., Rowen, D., Bansback, N., Devlin, N., & Tsuchiya, A. (2013). Binary choice health state valuation and mode of administration: Head-to-head comparison of online and CAPI. Value in Health, 16(1), 104–113.

Angeliki, N. M., Olsen, S. B., & Tsagarakis, K. P. (2016). Towards a common standard–a reporting checklist for web-based stated preference valuation surveys and a critique for mode surveys. Journal of Choice Modelling, 18, 18–50.

Coons, S. J., Gwaltney, C. J., Hays, R. D., Lundy, J. J., Sloan, J. A., Revicki, D. A., Lenderking, W. R., Cella, D., Basch, E., & ISPOR ePRO Task Force. (2009). Recommendations on evidence needed to support measurement equivalence between electronic and paper-based patient-reported outcome (PRO) measures: ISPOR ePRO Good Research Practices Task Force report. Value in Health, 12(4), 419–429.

Hay, J. W., Gong, C. L., Jiao, X., Zawadzki, N. K., Zawadzki, R. S., Pickard, A. S., Xie, F., Crawford, S. A., & Gu, N. Y. (2021). A US population health survey on the impact of COVID-19 using the EQ-5D-5L. Journal of General Internal Medicine, 36(5), 1292–1301.

Brazier, J., Roberts, J., & Deverill, M. (2002). The estimation of a preference-based measure of health from the SF-36. Journal of Health Economics, 21(2), 271–292.

Devlin, N. J., Shah, K. K., Feng, Y., Mulhern, B., & van Hout, B. (2018). Valuing health-related quality of life: An EQ-5D-5L value set for England. Health Economics, 27(1), 7–22.

Dolan, P. (1997). Modeling valuations for EuroQol health states. Medical Care, 35, 1095–1108.

Rowen, D., Brazier, J., Young, T., Gaugris, S., Craig, B. M., King, M. T., & Velikova, G. (2011). Deriving a preference-based measure for cancer using the EORTC QLQ-C30. Value in Health, 14(5), 721–731.

Mermet-Bouvier, P., & Whalen, M. D. (2020). Vulnerability and clinical research: Mapping the challenges for stakeholders. Therapeutic Innovation & Regulatory Science, 54(5), 1037–1046.

Patrick, K., Flegel, K., & Stanbrook, M. B. (2018). Vulnerable populations: An area CMAJ will continue to champion. Canadian Medical Association Journal, 190(11), E307.

Chokkara, S., Volerman, A., Ramesh, S., & Laiteerapong, N. (2021). Examining the inclusivity of US trials of COVID-19 treatment. Journal of General Internal Medicine, 36(5), 1443–1445.

Young, L., Barnason, S., & Do, V. (2015). Review strategies to recruit and retain rural patient participating self-management behavioral trials. Online Journal of Rural Research and Policy, 10(2), 1.

Harrison, G. W., Lau, M. I., & Rutström, E. E. (2009). Risk attitudes, randomization to treatment, and self-selection into experiments. Journal of Economic Behavior & Organization, 70(3), 498–507.

Dolan, P., Gudex, C., Kind, P., & Williams, A. (1996). The time trade-off method: Results from a general population study. Health Economics, 5(2), 141–154.

Sayah, F. A., Bansback, N., Bryan, S., Ohinmaa, A., Poissant, L., Pullenayegum, E., Xie, F., & Johnson, J. A. (2016). Determinants of time trade-off valuations for EQ-5D-5L health states: Data from the Canadian EQ-5D-5L valuation study. Quality of Life Research, 25(7), 1679–1685.

Oppe, M., Devlin, N. J., van Hout, B., Krabbe, P. F., & de Charro, F. (2014). A program of methodological research to arrive at the new international EQ-5D-5L valuation protocol. Value in Health, 17(4), 445–453.

Mulhern, B. J., Bansback, N., Norman, R., Brazier, J., & SF-6Dv2 International Project Group. (2020). Valuing the SF-6Dv2 classification system in the United Kingdom using a discrete-choice experiment with duration. Medical Care, 58(6), 566–573.

Jiang, R., Kohlmann, T., Lee, T. A., Mühlbacher, A., Shaw, J., Walton, S., & Pickard, A. S. (2020). Increasing respondent engagement in composite time trade-off tasks by imposing three minimum trade-offs to improve data quality. The European Journal of Health Economics, 22, 1–17.

Zhuo, L., Xu, L., Ye, J., Sun, S., Zhang, Y., Burstrom, K., & Chen, J. (2018). Time trade-off value set for EQ-5D-3L based on a nationally representative Chinese population survey. Value in Health, 21(11), 1330–1337.

Ratcliffe, J., Flynn, T., Terlich, F., Stevens, K., Brazier, J., & Sawyer, M. (2012). Developing adolescent-specific health state values for economic evaluation. PharmacoEconomics, 30(8), 713–727.

Rogers, H. J., Marshman, Z., Rodd, H., & Rowen, D. (2021). Discrete choice experiments or best-worst scaling? A qualitative study to determine the suitability of preference elicitation tasks in research with children and young people. Journal of Patient-Reported Outcomes, 5(1), 1–11.

Devlin, N. J., Shah, K. K., Mulhern, B. J., Pantiri, K., & van Hout, B. (2019). A new method for valuing health: Directly eliciting personal utility functions. The European Journal of Health Economics, 20(2), 257–270.

Lipman, S. A. (2020). Time for tele-TTO? Lessons learned from digital interviewer-assisted time trade-off data collection. The Patient - Patient-Centered Outcomes Research, 14, 1–11.

Hewson, C., & Stewart, D. W. (2014). Internet research methods. Wiley StatsRef: Statistics reference online (pp. 1–6). Wiley.

Bailenson, J. N. (2021). Nonverbal overload: A theoretical argument for the causes of Zoom fatigue. Technology, Mind, and Behavior. https://doi.org/10.1037/tmb0000030

Zelezny-Green, R., Vosloo, S., & Conole, G. (2018). A landscape review: Digital inclusion for low-skilled and low-literate people. Paris: UNESCO. Available: http://www.unesco.org/ulis/cgi-bin/ulis.pl?catno=261791&set=005B764DD4_2_333&gp=1&lin=1&ll=1. Accessed March 20, 2021.

Pozzar, R., Hammer, M. J., Underhill-Blazey, M., Wright, A. A., Tulsky, J. A., Hong, F., Gundersen, D. A., & Berry, D. L. (2020). Threats of bots and other bad actors to data quality following research participant recruitment through social media: Cross-sectional questionnaire. Journal of Medical Internet Research, 22(10), e23021.

Storozuk, A., Ashley, M., Delage, V., & Maloney, E. A. (2020). Got bots? Practical recommendations to protect online survey data from bot attacks. The Quantitative Methods for Psychology, 16(5), 472–481.

Teitcher, J. E., Bockting, W. O., Bauermeister, J. A., Hoefer, C. J., Miner, M. H., & Klitzman, R. L. (2015). Detecting, preventing, and responding to “fraudsters” in internet research: Ethics and tradeoffs. The Journal of Law, Medicine & Ethics, 43(1), 116–133.

Vass, C. M., & Boeri, M. (2021). Mobilising the next generation of stated-preference studies: The association of access device with choice behaviour and data quality. The Patient - Patient-Centered Outcomes Research, 14(1), 55–63.

Lenert, L. A., Sturley, A., & Rupnow, M. (2003). Toward improved methods for measurement of utility: Automated repair of errors in elicitations. Medical Decision Making, 23(1), 67–75.

Abbey, J. D., & Meloy, M. G. (2017). Attention by design: Using attention checks to detect inattentive respondents and improve data quality. Journal of Operations Management, 53, 63–70.

Wise, T., Zbozinek, T. D., Michelini, G., Hagan, C. C., & Mobbs, D. (2020). Changes in risk perception and self-reported protective behaviour during the first week of the COVID-19 pandemic in the United States. Royal Society Open Science, 7(9), 200742.

Mazza, C., Ricci, E., Biondi, S., Colasanti, M., Ferracuti, S., Napoli, C., & Roma, P. (2020). A nationwide survey of psychological distress among Italian people during the COVID-19 pandemic: Immediate psychological responses and associated factors. International Journal of Environmental Research and Public Health, 17(9), 3165.

Funding

No funding was received for the preparation of this manuscript. Manraj N. Kaur is supported through the Canadian Institutes of Health Research’s Fellowship (2020–23).

Author information

Contributions

All authors developed the research idea and contributed to the content and writing of the manuscript. MK led the drafting of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no competing interests in relation to the content of the article.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Kaur, M.N., Skolasky, R.L., Powell, P.A. et al. Transforming challenges into opportunities: conducting health preference research during the COVID-19 pandemic and beyond. Qual Life Res 31, 1191–1198 (2022). https://doi.org/10.1007/s11136-021-03012-y
