Abstract

Meta-analyses often present flexibility regarding their inclusion criteria, outcomes of interest, statistical analyses, and assessments of the primary studies. For this reason, it is necessary to transparently report all the information that could impact the results. In this meta-review, we aimed to assess the transparency of meta-analyses that examined the benefits of cognitive training, given the ongoing controversy that exists in this field. Ninety-seven meta-analytic reviews were included, which examined a wide range of populations with different clinical conditions and ages. Regarding the reporting, information about the search of the studies, screening procedure, or data collection was detailed by most reviews. However, authors usually failed to report other aspects such as the specific meta-analytic parameters, the formula used to compute the effect sizes, or the data from primary studies that were used to compute the effect sizes. Although some of these practices have improved over the years, others remained the same. Moreover, examining the eligibility criteria of the reviews revealed great heterogeneity in aspects such as the training duration, age cut-offs, or study designs that were considered. Preregistered meta-analyses often poorly specified in their protocols how they would deal with the multiplicity of data or assess publication bias, and some contained undisclosed deviations in their eligibility criteria or outcomes of interest. The findings shown here, although they do not question the benefits of cognitive training, illustrate important aspects that future reviews must consider.

In recent years, there has been growing concern regarding the transparency, reproducibility, and replicability of psychological science. Failed large-scale attempts to replicate empirical studies (Open Science Collaboration, 2015; Shrout & Rodgers, 2018) have harmed the credibility of the research conducted in the field (Baker, 2016).

Carrying out a meta-analysis, just like primary studies, involves making decisions at different stages, such as the design of the study, data collection, data analysis, and reporting of the results. To illustrate the myriad decisions that have to be made when carrying out a meta-analysis, we have created a non-exhaustive scheme (see the Appendix). This particular scheme is intended for the cognitive training field but can be easily adapted to most meta-analyses.

Importantly, many of these decisions are often rather arbitrary, as they lack a strong methodological or substantive rationale. This element of subjectivity is often referred to as “researcher degrees of freedom” (Hardwicke & Wagenmakers, 2021; Simmons et al., 2011; Wicherts et al., 2016). Acknowledging the researcher degrees of freedom in meta-analysis is important as well because they can have an impact on the results (Ioannidis, 2016; Maassen et al., 2020; Page et al., 2013).

Considering the relevant role that meta-analysis has in science, it is of great importance that every meta-analytic decision that could influence the results or conclusions is transparently reported, so that anyone can evaluate the methodology and the potential for bias (Ioannidis, 2005; Kvarven et al., 2020; Lakens et al., 2017, 2020; Scionti et al., 2020). Last, it is worth adding that Gavelin et al. (2020) conducted a meta-review including systematic reviews and meta-analyses of cognitive training and other cognition-oriented treatments, such as cognitive rehabilitation or cognitive stimulation specifically in older adults (see Clare & Woods, 2004; Gavelin et al., 2020 for a clarification of the terms). Here, authors found a small but significant effect for cognitive training, and interestingly, although the quality of reviews, as rated with the AMSTAR, was generally low, they also found a positive correlation between the quality of the review and the magnitude of the pooled effect size.

Thus, given the state of this research field, it is especially important that cognitive training meta-analyses transparently report all the information about the decisions that could influence any result or conclusion. This would help minimize the risk of bias arising from the authors of the meta-analyses as well as allow anyone to properly evaluate the methodological adequacy of already published meta-analytical studies.

Purpose

The main aim of this study was to examine the transparency of the reporting of information in meta-analyses that examined the benefits of cognitive training programs. In addition, our results are compared to previous studies, checking whether transparency practices are similar across different disciplines. Moreover, in contrast to previous research assessing transparency practices in meta-analyses, this review also intends to serve as an illustration of the different meta-analytical practices in the field of cognitive training, showing the methods and decisions that authors have used for meta-analytical decisions such as defining the eligibility criteria.

Method

Inclusion of Studies

Our initial aim was to include systematic reviews reporting at least one original meta-analysis that assessed the benefits of cognitive training delivered to any population, clinical or not, of any age and under any format, computerized or not, excluding reviews mainly focused on non-cognitive training videogames, physical exercise, exergames, non-invasive brain stimulation, or Brain Computer Interface, even if they were applied in combination with cognitive training. The reason to exclude these studies was to focus on studies reviewing solely the effect of cognitive training, reducing heterogeneity between studies and making them more comparable. Nonetheless, we found several reviews that, although only labeled as cognitive training meta-analyses, also included some primary studies that delivered other interventions in combination with cognitive training or that used non-cognitive training videogames, such as real-time strategy or action videogames (Lampit et al., 2014; Oldrati et al., 2020). In order to examine a larger sample, we decided to include these reviews as well. Besides, we also included meta-analytic studies that were labeled as cognitive rehabilitation or remediation, as long as the majority (i.e., > 50%) of the primary studies delivered a cognitive training intervention. To check whether cognitive training studies were the majority in a meta-analysis, we individually checked the details provided by the meta-analytic studies for each of the primary studies that were included. We did not impose any further restrictions on publication year. We included reviews written in English or Spanish. Lastly, we decided to exclude network meta-analyses and Bayesian meta-analyses because these meta-analytic frameworks have their own specific procedures and evaluating the reporting of information of those specific methods would have entailed a large cost, requiring including many other items.
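The majority rule described above can be written as a small check. This is a minimal sketch, not the authors' procedure: the function name and its arguments are hypothetical, and it assumes that each primary study in a review has already been classified by hand as delivering cognitive training or not.

```python
def is_eligible_by_majority(n_cognitive_training: int, n_primary_studies: int) -> bool:
    """Return True when strictly more than 50% of the primary studies
    in a review delivered a cognitive training intervention."""
    if n_primary_studies <= 0:
        raise ValueError("a review must include at least one primary study")
    if not 0 <= n_cognitive_training <= n_primary_studies:
        raise ValueError("count of cognitive training studies is out of range")
    # Integer comparison avoids floating-point issues at exactly 50%.
    return 2 * n_cognitive_training > n_primary_studies


print(is_eligible_by_majority(6, 10))  # True: 60% of primary studies
print(is_eligible_by_majority(5, 10))  # False: exactly 50% is not a majority
```

Under this reading, a review in which exactly half of the primary studies delivered cognitive training would not qualify, because the criterion is stated as "> 50%".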

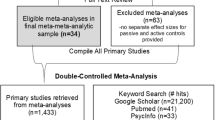

Searching and Screening of Studies

We aimed to identify meta-analyses by conducting a systematic electronic search in PubMed, Scopus, and all the collections of Web of Science. The search was carried out through February 2021 and updated in July 2023. A full search strategy for each database is available in the Supplementary Table 1 at https://osf.io/j58ax. No search limits were set. All references identified through the electronic searches were imported to Zotero. De-duplication was performed using the tool included in the software and then checked manually. We first evaluated the title and abstract of each article for potential inclusion. Where inclusion or exclusion of a study was not clear from the information available in the title or abstract, we assessed the full text. Additionally, we screened the references of the articles that met the inclusion criteria. Screening was performed by one author (ASL). Doubts were discussed with two authors (JSM and JLL). A PRISMA 2020 flow diagram (Page et al., 2021) summarizing the search and screening process is available in the Supplementary Fig. 1 at https://osf.io/mp8ye/.

Data Collection

A structured coding form containing items that covered different meta-analytical decisions was produced. This form was based on previous studies with similar scope (Hardwicke et al., 2020; Koffel & Rethlefsen, 2016; López-Nicolás et al., 2022; Pigott & Polanin, 2020; Wallach et al., 2018) and the PRISMA 2020 guidelines (Page et al., 2021). We preferred to use this approach over using the AMSTAR because the latter aims to evaluate quality of the review. Instead, we focus on the reporting of information of each decision, without assessing whether meta-analytic decisions were the most appropriate. Our approach also allowed us to evaluate a wider range of aspects such as data sharing, reporting of specific meta-analysis parameters, how authors performed meta-analytic decisions such as dealing with multiple effect sizes, or the eligibility criteria used by each study. The coding form for this study is available at https://osf.io/6d8be/. Data was extracted and coded independently by two authors (ASL and MT), except for the information regarding preregistered meta-analyses comparing the protocol and the final articles. Discrepancies were solved by consensus. For each included article, we extracted information about: basic characteristics of the article (journal, publication year, country, trained domains, population, funding sources, and conflicts of interest), preregistration, adherence to reporting guidelines, search and inclusion of the studies (information sources, search strategy, eligibility criteria, selection process), data extraction procedure, effect size measures, meta-analytical methods (statistical model, weights, between-studies variance estimators, combining multiple effect sizes, and software), assessment of the quality of primary studies, inspection of publication bias, and data and script availability.

In each meta-analysis, we coded any reported information about each of those stages, distinguishing when they reported that they did or did not perform a specific step. When a meta-analysis reported that they performed that step, we coded the specific procedure to examine which were the most frequent practices. For example, if authors explicitly reported that they did inspect publication bias, we coded “reported, they inspected publication bias” and coded which methods for inspecting publication bias were used. However, if they explicitly stated that they did not inspect publication bias, we coded “reported, they did not inspect publication bias.”

In the cases where the article reported having a preregistered protocol, we examined the protocols to assess their coverage of key meta-analytic decisions and possible deviations from the protocol. The coding scheme is adapted from https://osf.io/kf9dz, and we evaluated the following aspects: eligibility criteria, outcomes, outcome prioritization, risk of bias, meta-analysis model, dealing with multiple effect sizes, additional analyses, and publication bias. Three types of deviations were considered: (a) information regarding some decision is in the protocol but not in the final article, (b) information regarding some decision is reported in the final article but was not pre-specified in the protocol, and (c) information is in both the protocol and the final article but differs between them. In addition, we coded whether deviations were disclosed or not.
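The three deviation types can be expressed as a simple decision rule. The following is an illustrative sketch only: the function name, argument names, and returned labels are hypothetical and are not taken from the coding form.

```python
from typing import Optional


def classify_deviation(in_protocol: bool, in_article: bool,
                       same_content: Optional[bool] = None) -> str:
    """Classify one meta-analytic decision by comparing protocol and article.

    same_content is only needed when the decision appears in both documents.
    """
    if in_protocol and not in_article:
        return "a: in protocol, missing from article"
    if in_article and not in_protocol:
        return "b: in article, not pre-specified"
    if in_protocol and in_article:
        if same_content is None:
            raise ValueError("same_content is required when both documents report the decision")
        return "no deviation" if same_content else "c: reported in both, but different"
    # Neither document mentions the decision, so there is nothing to compare.
    return "not reported in either"


print(classify_deviation(True, False))        # type (a)
print(classify_deviation(False, True))        # type (b)
print(classify_deviation(True, True, False))  # type (c)
```

Whether a deviation was disclosed would be coded as a separate yes/no field for each decision classified as (a), (b), or (c).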

Data Analysis

All analyses were performed using R Statistical Software (v4.1.2; R Core Team, 2021). For each item, we calculated the proportion of meta-analyses in which the information was available. For this, we used the R package “DescTools” (Signorell et al., 2019). For every item that was reported less than 80% of the time, we also examined the association between publication year and the reporting of information using binary logistic regression, to see whether reporting trends have changed over the years. For the data preparation and presentation, we used the packages included in “tidyverse” (Wickham et al., 2019). Scripts and data are available at https://osf.io/jw6rb/.
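As an illustration of the per-item summary, the sketch below computes a proportion with a 95% confidence interval in Python rather than R. The source names the package but not an interval method, so the Wilson score interval used here is an assumption, and the function name is hypothetical. The example counts (35 of 97 preregistered reviews) are taken from the Results.

```python
import math
from statistics import NormalDist


def wilson_interval(successes: int, n: int, confidence: float = 0.95):
    """Return (proportion, lower, upper) using the Wilson score interval."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need 0 <= successes <= n and n > 0")
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return p, center - half_width, center + half_width


# 35 of the 97 included meta-analyses reported a preregistered protocol.
proportion, lower, upper = wilson_interval(35, 97)
print(f"{proportion:.1%} (95% CI {lower:.1%} to {upper:.1%})")
```

With 97 reviews, the interval around a proportion near one third spans close to 20 percentage points, which is worth keeping in mind when comparing items.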

Results

Our search retrieved a total of 1983 results, which, after the removal of duplicates, yielded a total of 919 results. Of these, 659 studies were screened by title or abstract. Subsequently, we assessed 260 full-text articles and included 87 articles. Additional searches scanning the references retrieved 10 additional eligible articles, leaving us with a total of 97 meta-analytic studies. Most of these 97 meta-analyses selected in this meta-review included primary studies that applied a training program targeting several cognitive domains (63; 65%) or exclusively working memory (20; 21%). The rest of studies focused on executive functions, memory, attention, or spatial skills. There was substantial diversity in the populations examined across different meta-analyses, which assessed the benefits of cognitive training in a wide range of clinical conditions such as schizophrenia, multiple sclerosis or mild cognitive impairment considering any age, from children to older adults (see Supplementary Table 2 at https://osf.io/j58ax/ for the characteristics of the included studies). Meta-analyses were published between 1997 and 2023 (median = 2019), with 40 being published in 2020 or afterwards (see Supplementary Fig. 2 at https://osf.io/mp8ye/).
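The counts in the screening flow can be cross-checked with simple arithmetic. The sketch below uses only the numbers reported above; the variable names are hypothetical. The counts are consistent with the 659 records and the 260 full-text articles together making up the 919 de-duplicated results, although that reading is an inference from the arithmetic and not something the text states.

```python
# Counts reported in the Results.
retrieved = 1983
after_deduplication = 919
title_abstract_stage = 659
full_text_assessed = 260
included_from_search = 87
included_from_references = 10

# Derived quantities.
duplicates_removed = retrieved - after_deduplication
full_text_excluded = full_text_assessed - included_from_search
total_included = included_from_search + included_from_references

# Consistency checks on the reported flow.
assert title_abstract_stage + full_text_assessed == after_deduplication
assert total_included == 97

print(duplicates_removed, full_text_excluded, total_included)
```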

Preregistration, Guidelines, Conflict of Interest, Funding Sources, and Data Availability Statement

Of the 97 meta-analyses included in our meta-review, 35 (36%) reported having preregistered a protocol (see Fig. 1a), with 30 (86%) using PROSPERO, 3 (9%) having their protocol published in the Cochrane Database of Systematic Reviews, 1 (3%) using INPLASY, and 1 (3%) sharing it in the supplementary materials. A total of 56 studies (58%) reported using any guidelines or checklists for the reporting of meta-analysis information (see Fig. 1b), where 53 (95%) used the PRISMA guidelines, 1 (2%) used the MARS, 1 (2%) used Cochrane guidelines, and 1 (2%) used APA guidelines. In total, 9 (9.3%) studies disclosed some conflict of interest, 62 (63.9%) reported having no conflict of interest, and 26 (26.8%) did not include a conflict of interest statement (see Fig. 1c). In addition, 70 (72%) studies included a funding statement, with 49 (51%) having only public funding, 10 (10%) having both public and private funding, and 11 (11%) reporting having no funding, while 27 (28%) did not include any information about funding sources (see Fig. 1d). Lastly, only 25 (26%) of the studies included a statement regarding the availability of the data, with 8 (8%) of them specifying that data would be available on request.

Eligibility Criteria, Information Sources, Search Strategy, and Selection Process

All 97 meta-analyses reported the electronic databases consulted (see Fig. 2A). 89 (92%) studies reported the date when the electronic search was conducted. Furthermore, 80 (82%) reported using additional search strategies, and 13 (13%) reported not using any other strategy. The most frequently used additional search method was consulting the reference lists of the articles (60 studies; 84%). 38 studies (39%) indicated imposing some search limits, 25 (26%) reported not setting any search limit, and 34 (35%) did not report any information regarding search limits. 94 studies (97%) reported the search terms that were used, although only 73 (75%) reported the exact search strategy for at least one database. All the studies reported information about the eligibility criteria (see Fig. 2B). Inspecting the eligibility criteria using the PICO scheme showed that meta-analytic studies used very different criteria to decide which primary studies could be eligible. The largest variation was found for the training duration, where meta-analyses required the following durations: at least 4 h (Hallock et al., 2016; Hill et al., 2017; Lampit et al., 2014, 2019; Leung et al., 2015), more than 1 session (das Nair et al., 2016; Loetscher et al., 2019; Melby-Lervåg et al., 2016; Weicker et al., 2016), at least 3 sessions (Nguyen et al., 2019), at least 10 sessions (Giustiniani et al., 2022; Scionti et al., 2020), at least 20 sessions (Spencer-Smith & Klingberg, 2015), at least 1 week (Au et al., 2015), at least 2 weeks (Schwaighofer et al., 2015), from 4 to 12 weeks (Dardiotis et al., 2018), at least 12 weeks (Gates et al., 2020), and at least 1 month (Bonnechère et al., 2020). In contrast, 79 studies did not specify any required duration. Additionally, several cut-offs were used for the different age ranges. 
For example, studies that focused on children and adolescents often considered different age ranges such as 3 to 12 years (Cao et al., 2020; Kassai et al., 2019), 3 to 18 years (Chen et al., 2021; Cortese et al., 2015), 6 to 19 years (Oldrati et al., 2020), less than 18 years (He et al., 2022), less than 19 years (Robinson et al., 2014), and less than 21 years (Corti et al., 2019). Four studies did not specify their cut-offs. Similarly, when considering only older adults (25 studies), meta-analyses often required participants to be 60 years old or older. However, 3 (Basak et al., 2020; Ha & Park, 2023; Kelly et al., 2014) and 2 (Tetlow & Edwards, 2017; Zhang et al., 2019) studies also used 50 and 55, respectively, as the age cut-off. Variability was also found regarding the design of the primary studies, where 54 (56%) meta-analyses required primary studies to be randomized controlled trials (RCTs), 33 (34%) studies allowed non-RCTs, and 10 (10%) did not report any information. Similarly, 39 (40%) studies included gray literature, 29 (30%) excluded it, and 29 (30%) did not report any information about including or excluding this literature. 16 (16%) studies reported allowing another intervention to be delivered in combination with cognitive training, with 6 (6%) studies (Aksayli et al., 2019; Goldberg et al., 2023; Hallock et al., 2016; Lampit et al., 2014, 2019; Leung et al., 2015) specifying that cognitive training had to make up 50% of the intervention and 1 (1%) study (McGurk et al., 2007) requiring that cognitive training made up 75% of the intervention. Last, 9 studies did not require that the entire sample of a study was their target population in order to include the study, again using different cut-offs: 50% (Tetlow & Edwards, 2017; Woolf et al., 2022), 60% (Goldberg et al., 2023), 70% (Kambeitz-Ilankovic et al., 2019; Wykes et al., 2011), 75% (das Nair et al., 2016; McGurk et al., 2007), and 80% (Gates et al., 2020; Yan et al., 2023).

Finally, most studies, 87 (90%), detailed the screening process (i.e., how many articles were retrieved and how many were screened by title or abstract), with 62 (71%) reporting that a double screening was performed, and 1 (1%) acknowledging that it was conducted by a single author. However, not every article that described its screening process specified in detail the reasons for excluding articles. The majority specified the number of articles that were excluded for each reason, with only 11 (13%) specifying the exact reason for each article that was considered not eligible.

Data Collection Process

Most meta-analyses, 86 (89%), reported information about the data collection procedure (see Fig. 3A). Of these, 54 (56%) reported double coding the information, with 16 (30%) reporting some measure of inter-rater reliability, and 9 meta-analyses (9%) detailed that the coding was not performed by two authors. 60 studies (62%) reported using some procedure to deal with missing data, mainly by contacting the original authors (54 studies; 92%). 62 studies (64%) reported assessing the risk of bias of individual studies (see Fig. 3A).

Effect Size Measures, Statistical Dependency, and Outlier Analyses

All meta-analyses reported which effect size measure they used in the synthesis (see Fig. 3B). However, only 20 (21%) specified the exact formula to compute the summary measure. Besides, 27 (28%) studies did not specify the exact standardized mean difference (SMD) measure that was used. Of the studies that specified this information, the majority, 44 (63%), reported using a pre-post controlled SMD, followed by a post-test SMD (20 studies; 28%). The rest of the studies (6 studies; 9%) used a pre-post SMD or several measures. Of the studies that used a pre-post controlled SMD, only 4 studies specified which correlations they used for calculating the sampling variance and another 4 specified that they used a formula that did not require any correlation as input. On the other hand, 64 studies (66%) reported some method to deal with statistically dependent effect sizes. The most popular choice, reported by 37 studies (58%), was averaging multiple effect sizes. Selecting only one measure by applying some decision rule was used in 10 studies (16%). 3 studies (5%) used both averaging and a decision rule. 13 (20%) studies opted for modeling the statistical dependency, where robust variance estimation was the preferred method with 7 studies (11%), followed by multi-level meta-analysis (6 studies; 9%). One study used the Cheung and Chan method to merge statistically dependent effect sizes (see Supplementary Table 3 at https://osf.io/j58ax/ for more details). Lastly, 32 studies (33%) reported conducting outlier analyses.
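To show why the exact SMD formula matters, the sketch below computes two of the measures named above from the same summary statistics. The formulas are assumptions, since the reviews rarely reported theirs: the pre-post controlled SMD follows one common definition (the difference in mean change divided by the pooled pretest standard deviation, with a small-sample correction), and the post-test SMD is a bias-corrected standardized difference in posttest means. Function names are hypothetical and the input values are made up for illustration.

```python
import math


def small_sample_correction(df: int) -> float:
    """Approximate bias correction factor for a standardized mean difference."""
    return 1 - 3 / (4 * df - 1)


def pooled_sd(sd_t: float, n_t: int, sd_c: float, n_c: int) -> float:
    """Pooled standard deviation of the training (t) and control (c) groups."""
    return math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2))


def pre_post_controlled_smd(pre_t, post_t, sd_pre_t, n_t, pre_c, post_c, sd_pre_c, n_c):
    """Difference in mean change, standardized by the pooled pretest SD."""
    change_difference = (post_t - pre_t) - (post_c - pre_c)
    sd = pooled_sd(sd_pre_t, n_t, sd_pre_c, n_c)
    return small_sample_correction(n_t + n_c - 2) * change_difference / sd


def post_test_smd(post_t, sd_post_t, n_t, post_c, sd_post_c, n_c):
    """Difference in posttest means, standardized by the pooled posttest SD."""
    sd = pooled_sd(sd_post_t, n_t, sd_post_c, n_c)
    return small_sample_correction(n_t + n_c - 2) * (post_t - post_c) / sd


# Made-up summary statistics for one hypothetical primary study.
d_ppc = pre_post_controlled_smd(50, 56, 10, 20, 52, 54, 10, 20)
d_post = post_test_smd(56, 10, 20, 54, 10, 20)
print(round(d_ppc, 3), round(d_post, 3))
```

In this made-up case the groups differ at pretest, so the two measures diverge by a factor of two. A reader cannot tell which value a review pooled unless the formula is reported.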

Meta-analytical Methods

Most meta-analyses stated the statistical model assumed for the synthesis (90 studies; 93%) (see Fig. 4A). Of these 90 studies, the most frequently used model, used by 62 studies, was the random-effects model. 12 studies used both random-effects models and fixed-effect models, according to the heterogeneity. Only 3 used a fixed-effect model exclusively. 13 studies used models to account for statistically dependent effect sizes. 25 studies (26%) specified the weighting scheme. Besides, of those studies that did not assume a fixed-effect model, only 8 reported the between-studies variance estimator. 95 meta-analyses (98%) reported having assessed heterogeneity. 67 (69%) reported moderator analyses. 75 (77%) reported publication bias assessments, while 6 (6%) explicitly reported not performing them. There was diversity in the methods that were used to inspect publication bias, with most studies using multiple methods (54 studies; 72%). The most popular methods were funnel plots (54 studies; 85%), Egger’s regression (28 studies; 52%), Trim-and-Fill (22 studies; 41%), and Fail-Safe N (14 studies; 26%) (see Supplementary Tables 4–5 at https://osf.io/j58ax/ for more details). Only 8 (11%) studies used methods other than those mentioned above. Of the studies that inspected publication bias, 6 (8%) found substantial evidence of potential publication bias, with half of them attempting to correct the effect sizes. 20 (27%) studies found some evidence for the presence of publication bias in at least one outcome, again with half of them attempting to correct for this. 4 (5%) studies did not discuss the presence of publication bias despite reporting that publication bias was inspected. Finally, 92 (95%) reported the software, where “Comprehensive Meta Analysis” (34 studies; 38%) and “RevMan” (27 studies; 30%) were the most popular choices, followed by an R package (21 studies; 23%).
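The statistical model, the weighting scheme, and the between-studies variance estimator jointly determine the pooled estimate, which is why their reporting matters. The sketch below contrasts a fixed-effect and a random-effects pooled estimate using inverse-variance weights and the DerSimonian-Laird estimator. That estimator is chosen here only as a familiar example, not because the reviews are known to have used it; the function name is hypothetical and the effect sizes are made up.

```python
def pool_effects(effects, variances):
    """Return (fixed_effect, random_effects, tau2) using inverse-variance
    weights and the DerSimonian-Laird between-studies variance estimator."""
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two effects with matching variances")
    # Fixed-effect model: weights are the inverse sampling variances.
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q and the DerSimonian-Laird estimate of tau^2.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Random-effects model: tau^2 is added to each sampling variance.
    w_star = [1 / (v + tau2) for v in variances]
    random = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    return fixed, random, tau2


# Made-up effect sizes and sampling variances for three hypothetical studies.
fixed, random, tau2 = pool_effects([0.1, 0.3, 0.8], [0.01, 0.04, 0.09])
print(round(fixed, 3), round(random, 3), round(tau2, 3))
```

In this made-up example the random-effects estimate is larger than the fixed-effect one, because adding the between-studies variance gives the small study with the largest effect relatively more weight. A different variance estimator would shift the weights again.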

Data Availability

Most meta-analytical studies (90 studies; 93%) reported information about the characteristics of the primary studies that were included in the synthesis. 89 (92%) reported the effect sizes of the primary studies. Of these 89 studies, 73 (82%) made this information available only in a .PDF or .DOC format, with only 11 (12%) sharing the data files in .CSV or Excel files, and 5 studies (6%) sharing it through a RevMan file (See Supplementary Table 6 at https://osf.io/j58ax/). Only 34 (36%) of the studies reported the primary raw data (means and standard deviations of the primary studies) that they used to compute the effect sizes. Of these 34 studies, 22 (64%) reported this information in a .PDF or .DOC format, only 6 (18%) shared the data files in .CSV or Excel files, and 6 (18%) studies reported it through a RevMan file (See Supplementary Table 7 at https://osf.io/j58ax/). 88 studies (94%) reported the sample sizes of the primary studies, with 76 studies (86%) reporting this information in .PDF or .DOC format (See Supplementary Table 8 at https://osf.io/j58ax/). Most studies (57 studies; 87%) that performed a moderator analysis reported the data of the moderating variables, with 51 studies (89%) reporting this information in .PDF or .DOC format (see Supplementary Table 9 at https://osf.io/j58ax/). Finally, the analysis script, such as the R code or the SPSS syntax, was only available for 12 studies (12%).

Preregistered Meta-analyses

Most of the 35 preregistered meta-analyses pre-specified information regarding the eligibility criteria, outcomes of interest, risk of bias assessments, statistical model, and additional analyses in their protocols (see Fig. 5 for details). However, only 10 (29%) studies detailed in advance how they would deal with the multiplicity of effect sizes and 20 (57%) how they would inspect publication bias. Information regarding these aspects was later specified in the final article in most cases, which accounts for the 66% and 34% of deviations due to information being in the final article but not in the protocol for the items regarding multiplicity of data and publication bias, respectively. Besides, the largest deviations due to information being present in both protocol and final article but with differences were found in the eligibility criteria (6; 17%), outcomes of interest (12; 34%), additional analyses (14; 40%), and publication bias (5; 14%). Finally, some other deviations were found because the information was specified in the protocol but not in the final article. This was found in the prioritization of outcomes (6%), risk of bias (3%), additional analyses (26%), and publication bias (6%). Of note, deviations were rarely disclosed (see Supplementary Fig. 3 at https://osf.io/mp8ye/). Deviations were only mentioned in 17% of the cases regarding the outcomes of interest, 38% of the cases regarding additional analyses, and 11% of the cases regarding the inspection of publication bias. None of the remaining deviations were disclosed.

Association Between Publication Year and Transparency

The following variables were inspected: preregistration, following guidelines, setting search limits, providing the search strategy, performing double screening and coding, calculating the reliability of the coding, assessing risk of bias, providing effect size formulae, modeling statistically dependent effect sizes, inspecting outliers, reporting the between-studies variance estimator and weighting scheme, performing moderator analyses, inspecting publication bias, inspecting publication bias with methods other than the Funnel Plot/Egger’s regression/Trim and Fill, reporting the raw data (means, SDs, and Ns), using R as the meta-analytic software, sharing the analysis script, and stating whether the data is available. Supplementary Table 10 (https://osf.io/j58ax/) shows the results for all the variables that were explored. We found statistically significant associations (p < 0.05) between year and the following variables: preregistration, following guidelines, setting search limits, reporting the search strategy, risk of bias assessment, inspecting outliers, weighting scheme, inspecting publication bias, using R, and reporting the raw data. In contrast, reporting a double screening or double coding, detailing the formulae, modeling statistically dependent effect sizes, reporting the between-studies variance, performing moderator analyses, inspecting publication bias with other methods, sharing the analysis script, and stating whether data is available did not seem to change over the years.
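The year-by-item analysis regresses a binary indicator (item reported or not) on publication year. The sketch below fits such a binary logistic regression by Newton-Raphson in plain Python. It is an illustration only: the analyses reported here were run in R, the function name is hypothetical, and the twelve data points are made up and do not come from the coded reviews.

```python
import math


def fit_logistic(x, y, iterations=25):
    """Fit logit P(y = 1) = b0 + b1 * x by Newton-Raphson; return (b0, b1)."""
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1 / (1 + math.exp(-(b0 + b1 * xi)))
            w = p * (1 - p)
            # Score vector (gradient of the log-likelihood).
            g0 += yi - p
            g1 += (yi - p) * xi
            # Information matrix (negative Hessian).
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step: solve the 2x2 system information * step = score.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


# Made-up data: publication year and whether an item was reported (1) or not (0).
years = [2010, 2011, 2012, 2013, 2014, 2015, 2016, 2017, 2018, 2019, 2020, 2021]
reported = [0, 0, 1, 0, 0, 1, 0, 1, 1, 0, 1, 1]

# Centering the year keeps the iterations numerically stable.
centered = [year - 2015 for year in years]
b0, b1 = fit_logistic(centered, reported)
odds_ratio_per_year = math.exp(b1)
print(round(odds_ratio_per_year, 2))
```

An odds ratio above 1 per year indicates that reporting of the item became more likely over time. A significance test would additionally need the standard error of the slope, which this sketch does not compute.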

Discussion

Meta-analytical studies require many decisions regarding the inclusion of studies, analysis, or evaluation of the primary studies. Thus, they present a flexibility equal to or greater than that of empirical studies. Considering how relevant meta-analyses are as well as the controversial results found in the field of cognitive training, it is of great importance that meta-analyses on this topic report transparently every decision that could influence the results or the conclusions. In this study, we examined the transparency practices in the meta-analyses of cognitive training published up to July 2023. Moreover, in contrast to previous studies examining the reporting of information in meta-analyses (López-Nicolás et al., 2022; Polanin et al., 2020) or meta-reviews related to cognitive training (Gavelin et al., 2020), we provide a comprehensive overview of the methods that these meta-analytic studies used, which allowed a critical evaluation of these practices. Besides, we provide information regarding the eligibility criteria that the meta-analyses have used, and we also inspected the preregistered meta-analyses and compared them with their final article.

We found 97 meta-analytical studies to be eligible for our meta-review, spanning more than 20 years. These studies covered a wide range of populations, including people with very varied clinical conditions and age ranges, training interventions with diverse delivery methods and target cognitive domains, and multiple measured outcomes. Similar to what is found in Gavelin et al. (2020), more than one third of the meta-analyses had a preregistered protocol, a higher prevalence than what was found in previous meta-reviews from other fields (López-Nicolás et al., 2022; Polanin et al., 2020), and much higher than what is typically found for primary studies (Hardwicke et al., 2020). It is also worth mentioning that some of the meta-analyses were published at a time when there was not even a platform for their preregistration, since PROSPERO was launched in 2011 (Page et al., 2018). Importantly, even if we only consider the studies that were published after its launch, the practice of preregistering meta-analyses seems to be increasing over the years. Nonetheless, inspecting the protocols of these preregistered meta-analyses revealed important issues. Authors often failed to pre-specify key meta-analytic decisions such as how they would deal with the multiplicity of data or inspect publication bias. Besides, we found changes between the protocol and the final article regarding critical aspects such as the eligibility criteria or the outcomes of interest. We also found cases where there was information specified in the protocol, but they did not report anything in the final article in items such as the prioritization of outcomes or additional analyses. Equally important, these deviations were rarely disclosed. 
Although these issues also have been found in other empirical studies and systematic reviews (Bakker et al., 2020; Claesen et al., 2021; Tricco et al., 2016; van den Akker et al., 2023), the risk of bias arising from these deviations can be especially sensitive given the state of the field.

We found modest rates of following guidelines for conducting and reporting a systematic review such as the PRISMA. Nonetheless, this practice has increased over the years and is also slightly higher than what previous studies (López-Nicolás et al., 2022) have found. However, it is also worth noting that reporting following such guidelines does not guarantee reporting every aspect included in the guidelines. On the other hand, about one third of the studies did not report whether they had any conflict of interest or whether they received any funding. Although we acknowledge that this information is not always required by the journals, we recommend that this information be included considering that cognitive training is, in many cases, tied to financial interests. As previous studies have shown, presenting conflicts of interest or receiving private funding can bias the results of systematic reviews and meta-analyses (Cristea et al., 2017; Ebrahim et al., 2016; Ferrell et al., 2022; Yank et al., 2007).

The best reporting practices were found regarding the search and screening procedures, since most studies reported aspects such as the electronic databases, the date of the searches, the exact search strategy, and additional search methods. In addition, all of them reported the inclusion criteria. Nonetheless, a careful inspection of the inclusion criteria revealed substantial heterogeneity. For the required training duration, studies used up to 10 different criteria, and many did not specify any required duration. There was also wide diversity in the cut-offs used for the different age ranges; for example, studies used up to 6 different criteria when their target population was children. Likewise, authors applied different requirements for study designs, publication status, or interventions that could be applied in combination with cognitive training. Acknowledging these differences is essential because they have, intentionally or not, an impact on the results of the meta-analysis.

Another aspect to highlight is that over one third of the studies did not provide an assessment of the risk of bias of the primary studies, akin to what was found in Gavelin et al. (2020). Although this practice seems to have improved over the years, the prevalence of such assessments is lower than what has been found in previous studies (López-Nicolás et al., 2022). Evaluating the risk of bias of primary studies is critical because the evidence provided by the meta-analyses depends on the quality of the primary studies (Kvarven et al., 2020; Savović et al., 2012) and cognitive training primary studies usually do not adhere to best-practice standards (Gobet & Sala, 2022; Sala & Gobet, 2019; Simons et al., 2016). Importantly, while meta-analyses cannot remedy the problems of primary studies, they should provide a transparent and comprehensive assessment of the strength of the primary studies, as this is the only way to thoroughly evaluate the degree of confidence we can have in the results of a meta-analysis.

All meta-analyses reported the effect size measure used. However, some of them did not detail the exact SMD measure and few of them reported the specific formula, a practice that has not changed over the years. Besides, some studies that reported using a pre-post controlled SMD did not specify the imputed correlation. These aspects are also important to detail as different computations for the different effect size measures are available, and the choice may affect the synthesized results (López-Nicolás et al., 2022; Rubio-Aparicio et al., 2018). On the other hand, the majority of studies reported addressing the statistical dependency that arose from the multiplicity of effect sizes, although this was mainly accomplished by averaging dependent effect sizes or only selecting one effect size, which leads to loss of information (López-López et al., 2018). Importantly, this practice has not changed over the years. Future studies should consider accounting for dependent effect sizes by using models with robust variance estimation (RVE; Pustejovsky & Tipton, 2022; Tanner-Smith et al., 2016) or multilevel meta-analyses in order to make use of all the available information (Gucciardi et al., 2022; López-López et al., 2018; Van den Noortgate et al., 2015).
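
To illustrate why the imputed correlation deserves explicit reporting, the following minimal Python sketch computes a pre-post controlled SMD standardized by the pretest SD. It is not the formula used by any of the reviewed meta-analyses: the variance expression is one common large-sample approximation among several, the function names are hypothetical, and the summary data are invented for illustration.

```python
import math

def standardized_mean_change(m_pre, m_post, sd_pre, n, r):
    """Standardized mean change for one group, standardized by the pretest SD.

    r is the pre-post correlation, which often has to be imputed because
    primary studies rarely report it. Returns (estimate, sampling variance).
    """
    df = n - 1
    j = 1 - 3 / (4 * df - 1)                   # approximate small-sample bias correction
    d = j * (m_post - m_pre) / sd_pre
    var = 2 * (1 - r) / n + d ** 2 / (2 * n)   # large-sample approximation (assumption)
    return d, var

def pre_post_control_smd(treat, ctrl, r):
    """Difference between the standardized changes of two independent groups."""
    d_t, v_t = standardized_mean_change(*treat, r)
    d_c, v_c = standardized_mean_change(*ctrl, r)
    return d_t - d_c, v_t + v_c

# Invented summary data: (mean_pre, mean_post, sd_pre, n)
treat = (50.0, 56.0, 10.0, 30)
ctrl = (50.0, 52.0, 10.0, 30)

# Sensitivity check over several plausible imputed correlations
for r in (0.3, 0.5, 0.7, 0.9):
    d, v = pre_post_control_smd(treat, ctrl, r)
    print(f"r = {r:.1f}  SMD = {d:.3f}  SE = {math.sqrt(v):.3f}")
```

Under this approximation the point estimate does not depend on the imputed correlation, but its standard error does, and so does the weight the study receives in the synthesis. A reader who does not know the imputed value cannot reproduce those weights.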

Regarding the synthesis methods, it was very common to report the statistical model assumed, assess heterogeneity, conduct moderator analyses, and specify the meta-analysis software. However, most meta-analyses failed to specify other relevant details such as the between-study variance estimator and the weighting scheme, which can also affect the results (Veroniki et al., 2016). Studies frequently inspected the possibility of publication bias in their results, a practice that has become increasingly popular. Of note, about one third found some evidence of publication bias in at least one outcome, with some stating that the potential for publication bias was substantial. The most frequent methods were inspecting funnel plots, Trim-and-Fill, Egger’s regression, or even the Fail-Safe N. Importantly, while it is true that earlier studies did not have such a variety of methods available, our analyses show that more recent meta-analytic studies do not frequently apply more modern techniques. Future studies might consider alternative methods depending on the characteristics of each meta-analysis (Maier et al., 2022; van Aert et al., 2016), since the popular approaches mentioned above might not be optimal in many applied scenarios.
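
As a rough illustration of how the between-study variance estimator and the weighting scheme enter the computation, the sketch below pools a set of invented effect sizes with inverse-variance weights, once under a common-effect model and once under a random-effects model using the DerSimonian-Laird estimator. The estimator is chosen here only because it is simple to write down; it is an assumption of this example, not a recommendation, and the function names are hypothetical.

```python
import math

def dersimonian_laird_tau2(effects, variances):
    """DerSimonian-Laird (method-of-moments) estimate of the between-study variance."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    c = total - sum(w ** 2 for w in weights) / total
    # Truncate at zero, since a variance cannot be negative
    return max(0.0, (q - (len(effects) - 1)) / c)

def pooled_estimate(effects, variances, tau2=0.0):
    """Inverse-variance weighted mean; tau2 = 0 gives the common-effect weights."""
    weights = [1.0 / (v + tau2) for v in variances]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)

# Invented effect sizes and sampling variances
effects = [0.10, 0.30, 0.35, 0.65, 0.45]
variances = [0.03, 0.03, 0.05, 0.01, 0.05]

tau2 = dersimonian_laird_tau2(effects, variances)
common = pooled_estimate(effects, variances)
random_fx = pooled_estimate(effects, variances, tau2)

print(f"tau^2 (DL)     = {tau2:.4f}")
print(f"common-effect  = {common[0]:.3f} (SE {common[1]:.3f})")
print(f"random-effects = {random_fx[0]:.3f} (SE {random_fx[1]:.3f})")
```

Because the between-study variance is added to every study's sampling variance before weighting, a different estimator changes the weights, the pooled estimate, and its standard error. This is why a report that names only "a random-effects model" is not sufficient for reproducing the analysis.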

Finally, regarding data availability, we found good reporting rates of the primary study design, effect sizes, sample sizes, and moderator data. Similar to previous studies examining data-sharing practices (López-Nicolás et al., 2022; Polanin et al., 2020), the worst rates were found for sharing raw data (means and SDs), which prevents recalculating the effect sizes, and for sharing the analysis script. Moreover, although this practice seems to be improving over the years, most of the available data were reported in non-machine-readable formats such as .PDF or .DOC files, which forces anyone who wants to reuse the data for re-analyses or reproducibility checks to re-code them manually. Manually re-coding data is an especially time-consuming and error-prone task, so sharing data in a machine-readable format such as .CSV is preferred.
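
A minimal sketch of what machine-readable sharing could look like is given below, using Python's standard `csv` module. The column names and values are invented for illustration and do not follow any prescribed standard. The example writes to an in-memory buffer; in practice the same function could be pointed at a file opened with `open("data.csv", "w", newline="")`.

```python
import csv
import io

# Hypothetical column layout: one row per study, outcome, and group
FIELDS = ["study", "outcome", "group", "n",
          "mean_pre", "sd_pre", "mean_post", "sd_post"]

rows = [
    {"study": "Study A", "outcome": "working memory", "group": "training",
     "n": 30, "mean_pre": 50.0, "sd_pre": 10.0, "mean_post": 56.0, "sd_post": 9.5},
    {"study": "Study A", "outcome": "working memory", "group": "control",
     "n": 30, "mean_pre": 50.0, "sd_pre": 10.0, "mean_post": 52.0, "sd_post": 10.2},
]

def write_summary_csv(records, handle):
    """Write the raw summary statistics as CSV with a header row."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)

def read_summary_csv(handle):
    """Read the table back as a list of dictionaries (all values are strings)."""
    return list(csv.DictReader(handle))

buffer = io.StringIO()
write_summary_csv(rows, buffer)
buffer.seek(0)
recovered = read_summary_csv(buffer)
print(recovered[0]["study"], float(recovered[0]["mean_post"]))
```

With the means, SDs, and sample sizes available in this form, anyone can recompute the effect sizes without transcribing numbers from a table in a PDF.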

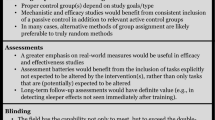

Recommendations for Future Cognitive Training and Other Cognition-Oriented Meta-analyses

A pragmatic way to ensure the correct reporting of information is to follow established reporting guidelines such as PRISMA, which exhaustively covers meta-analytic aspects, from the search strategy to the conflicts of interest and funding. In addition, we strongly encourage sharing the data in machine-readable formats such as .CSV, so that other authors can reproduce the results and conduct re-analyses using different methods. Note that, because the primary unit of analysis is summary data, which have already been shared publicly, sharing meta-analytic data does not raise any ethical concern. Therefore, there is no good reason not to share the data (López-Nicolás et al., 2022; Moreau & Gamble, 2022).

On the other hand, being completely transparent goes beyond simply reporting information about the methods. Providing a careful rationale for each decision, as well as acknowledging the possible alternatives, is essential, especially regarding the eligibility criteria, which are arguably among the most important decisions (Harrer et al., 2021; Voracek et al., 2019). Moreover, when several decisions are defensible, performing sensitivity analyses can be desirable.

Moreover, transparent reporting of all the decisions and data is necessary, but not sufficient, to ensure the quality of a meta-analytic study. A completely transparent study can still suffer from outcome-dependent biases, poor methodology, or biases arising from the primary studies. A practical solution that minimizes the possibility of outcome-dependent biases, and is often regarded as a best practice, is to preregister a study protocol detailing the research questions, inclusion criteria, and planned analyses prior to the beginning of the study (Hardwicke & Wagenmakers, 2021; Moreau & Gamble, 2022; Nosek et al., 2019; Pigott & Polanin, 2020). Nonetheless, protocols should be comprehensive, specifying all key meta-analytic decisions. In this context, deviations from the protocol are natural and sometimes even desirable, as long as they are disclosed and discussed. These points are essential, since the effectiveness of preregistration depends mainly on them.

On the other hand, the evidence provided by a meta-analysis is only as good as the primary studies that are included in the analyses. It is well known that primary studies that do not comply with aspects such as correct randomization can introduce bias into the results, and it has been shown that primary studies from the cognitive training field often suffer from these important shortcomings (Savović et al., 2012; Simons et al., 2016). For this reason, it is very important that future reviews evaluate the risk of bias and discuss the evidence in light of this assessment. Regarding methodological aspects, it is common for cognitive training studies to report multiple effect sizes, even from the same cognitive domain. Accounting for statistically dependent effect sizes in the model might be preferred over averaging effect sizes or selecting only one effect size according to some decision rule. We refer to the work of Cheung (2019), López-López et al. (2018), and Moeyaert et al. (2017) for a detailed review and guidelines on this topic. Lastly, regarding the assessment of publication bias, it is important to note that not all methods to inspect publication bias work well under conditions such as a small number of primary studies or high heterogeneity. Therefore, authors must be cautious when selecting the analyses and consider using alternative methods (Maier et al., 2022) or a combination of them (Siegel et al., 2021; van Aert et al., 2019) to provide a more nuanced evaluation that takes their specific situation into account.

Limitations of the Current Study

Our study presents some limitations. First, this work is purely descriptive and focuses only on the reporting practices of the cognitive training meta-analyses. We decided against conducting analyses examining the relationship between the reporting and the results of the meta-analyses because meta-analytic studies often synthesized several outcomes, with both significant and non-significant results, which makes relating reporting quality to the results very challenging. Moreover, it is important to acknowledge that reporting or not reporting information about an aspect is not necessarily related to the results in any specific direction. For this reason, no conclusions about the actual benefits of cognitive training can be drawn from the results presented here. Future studies might want to examine the relationship between the reported methodological practices and the results by conducting re-analyses of the meta-analyses comparing different methods and checking whether the conclusions change.

Another point to consider is that, as mentioned above, transparency goes beyond simply reporting information about each aspect and requires providing a rationale for each decision. Unfortunately, although we attempted to examine whether studies provided a justification for their meta-analytic decisions, we were unable to do so for several reasons. First, treating the presence of a citation or some kind of argumentation as a rationale posed problems because, although some studies provided a justification of this kind, its adequacy was debatable. See, for example, recent studies included in our meta-review that cite older methods for aspects such as how to deal with the multiplicity of effect sizes or publication bias. Therefore, we think that treating these cases as providing a rationale can be misleading. Second, when considering citations, evaluating whether studies provided a rationale requires not only checking whether authors provide a citation to back up their decision, but also verifying that the citation actually supports their assertions. In fact, we found cases where it does not. For example, Ha and Park (2023) reported that the cut-off age for studying older adults was set at 50 years old, following guidelines provided by Shenkin et al. (2017) for studying older adults. However, what Shenkin et al. (2017) discuss is that, although there is no generally agreed criterion to define “older people,” a cut-off of over 60 or 65 years is often used. For all these reasons, a careful examination of this issue would require an amount of work that is beyond the scope of the present review.

Finally, it is worth mentioning that many reviews examine the benefits of cognitive training combined with additional interventions such as physical exercise or non-invasive brain stimulation. Although excluding these reviews reduced potential heterogeneity between studies, making them more comparable, examining them could also offer interesting information. However, due to our resource constraints, this was not feasible in the current study. For the same reason, the screening process and the comparison between preregistered protocols and final articles were conducted by a single author.

Conclusions

We found that the reporting of information and data of cognitive training meta-analyses was transparent in aspects such as how they performed the search of the studies, the screening procedure, or the data collection, akin to what previous studies examining other disciplines have found. Additionally, many reporting practices seem to have improved over the years. However, we found that the reporting quality was poorer in aspects such as specifying the exact meta-analytic parameters and formulas or sharing the data. In addition, other aspects such as the methods to deal with multiple effect sizes from the same study, the analyses to assess the presence of publication bias, and some data-sharing practices did not change over the years. Moreover, we found a remarkable heterogeneity in the eligibility criteria that needs to be better addressed in future studies. We also observed that preregistered meta-analyses often did not specify important decisions, and some contained non-disclosed deviations. Even though these findings cannot be directly related to the results of the meta-analytic studies, they illustrate important points that must be addressed in future studies, especially considering how disputed the benefits of cognitive training programs are at present. In this context, we emphasize the need for a complete and detailed reporting of every decision that could influence the results, providing a clear and thoughtful rationale for each decision and, ideally, sharing all the meta-analytic data to allow any reproducibility check or further re-analyses incorporating other methods that might be more suitable.

Availability of Data and Materials

The datasets analyzed during the current study and the script code for generating processed data tables and performing statistical analyses are available in the Open Science Framework repository (https://osf.io/jw6rb/).

Notes

PROSPERO, the leading platform for preregistering systematic reviews and meta-analyses, was launched in 2011 (Page et al., 2018). If we consider only studies that were conducted after its implementation, the prevalence of preregistration increases to 38%.

A sensitivity analysis that included only studies published after the launch of PROSPERO in 2011 also showed a significant association between year and preregistration.

References

Aksayli, N. D., Sala, G., & Gobet, F. (2019). The cognitive and academic benefits of Cogmed: A meta-analysis. Educational Research Review, 27, 229–243. https://doi.org/10.1016/j.edurev.2019.04.003

Au, J., Buschkuehl, M., Duncan, G. J., & Jaeggi, S. M. (2016). There is no convincing evidence that working memory training is NOT effective: A reply to Melby-Lervåg and Hulme (2015). Psychonomic Bulletin & Review, 23(1), 331–337. https://doi.org/10.3758/s13423-015-0967-4

Au, J., Sheehan, E., Tsai, N., Duncan, G. J., Buschkuehl, M., & Jaeggi, S. M. (2015). Improving fluid intelligence with training on working memory: A meta-analysis. Psychonomic Bulletin & Review, 22(2), 366–377. https://doi.org/10.3758/s13423-014-0699-x

Baker, M. (2016). 1,500 scientists lift the lid on reproducibility. Nature, 533(7604), 452–454. https://doi.org/10.1038/533452a

Bakker, M., Veldkamp, C. L. S., van Assen, M. A. L. M., Crompvoets, E. A. V., Ong, H. H., Nosek, B. A., Soderberg, C. K., Mellor, D., & Wicherts, J. M. (2020). Ensuring the quality and specificity of preregistrations. PLOS Biology, 18(12), e3000937. https://doi.org/10.1371/journal.pbio.3000937

Bartoš, F., Maier, M., Quintana, D. S., & Wagenmakers, E. J. (2022). Adjusting for publication bias in JASP and R: Selection models, PET-PEESE, and robust Bayesian meta-analysis. Advances in Methods and Practices in Psychological Science, 5(3). https://doi.org/10.1177/25152459221109259

Basak, C., Qin, S., & O’Connell, M. A. (2020). Differential effects of cognitive training modules in healthy aging and mild cognitive impairment: A comprehensive meta-analysis of randomized controlled trials. Psychology and Aging, 35(2), 220–249. https://doi.org/10.1037/pag0000442

Bonnechère, B., Langley, C., & Sahakian, B. J. (2020). The use of commercial computerised cognitive games in older adults: A meta-analysis. Scientific Reports, 10(1), Article 1. https://doi.org/10.1038/s41598-020-72281-3

Cao, Y., Huang, T., Huang, J., Xie, X., & Wang, Y. (2020). Effects and moderators of computer-based training on children’s executive functions: A systematic review and meta-analysis. Frontiers in Psychology, 11, 580329. https://doi.org/10.3389/fpsyg.2020.580329

Chen, S., Yu, J., Zhang, Q., Zhang, J., Zhang, Y., & Wang, J. (2021). Which factor is more relevant to the effectiveness of the cognitive intervention? A meta-analysis of randomized controlled trials of cognitive training on symptoms and executive function behaviors of children with attention deficit hyperactivity disorder. Frontiers in Psychology, 12, 810298. https://doi.org/10.3389/fpsyg.2021.810298

Cheung, M. W.-L. (2019). A guide to conducting a meta-analysis with non-independent effect sizes. Neuropsychology Review, 29(4), 387–396. https://doi.org/10.1007/s11065-019-09415-6

Claesen, A., Gomes, S., Tuerlinckx, F., & Vanpaemel, W. (2021). Comparing dream to reality: An assessment of adherence of the first generation of preregistered studies. Royal Society Open Science, 8(10), 211037. https://doi.org/10.1098/rsos.211037

Clare, L., & Woods, R. T. (2004). Cognitive training and cognitive rehabilitation for people with early-stage Alzheimer’s disease: A review. Neuropsychological Rehabilitation, 14(4), 385–401. https://doi.org/10.1080/09602010443000074

Cortese, S., Ferrin, M., Brandeis, D., Buitelaar, J., Daley, D., Dittmann, R. W., Holtmann, M., Santosh, P., Stevenson, J., Stringaris, A., Zuddas, A., & Sonuga-Barke, E. J. (2015). Cognitive training for attention-deficit/hyperactivity disorder: Meta-analysis of clinical and neuropsychological outcomes from randomized controlled trials. Journal of the American Academy of Child & Adolescent Psychiatry, 54(3), 164–174. https://doi.org/10.1016/j.jaac.2014.12.010

Corti, C., Oldrati, V., Oprandi, M. C., Ferrari, E., Poggi, G., Borgatti, R., Urgesi, C., & Bardoni, A. (2019). Remote technology-based training programs for children with acquired brain injury: A systematic review and a meta-analytic exploration. Behavioural Neurology, 2019, 1346987. https://doi.org/10.1155/2019/1346987

Cristea, I. A., Gentili, C., Pietrini, P., & Cuijpers, P. (2017). Sponsorship bias in the comparative efficacy of psychotherapy and pharmacotherapy for adult depression: Meta-analysis. The British Journal of Psychiatry, 210(1), 16–23. https://doi.org/10.1192/bjp.bp.115.179275

Dardiotis, E., Nousia, A., Siokas, V., Tsouris, Z., Andravizou, A., Mentis, A. A., Florou, D., Messinis, L., & Nasios, G. (2018). Efficacy of computer-based cognitive training in neuropsychological performance of patients with multiple sclerosis: A systematic review and meta-analysis. Multiple Sclerosis and Related Disorders, 20, 58–66. https://doi.org/10.1016/j.msard.2017.12.017

das Nair, R., Cogger, H., Worthington, E., & Lincoln, N. B. (2016). Cognitive rehabilitation for memory deficits after stroke. Cochrane Database of Systematic Reviews, 9. https://doi.org/10.1002/14651858.CD002293.pub3

Ebrahim, S., Bance, S., Athale, A., Malachowski, C., & Ioannidis, J. P. A. (2016). Meta-analyses with industry involvement are massively published and report no caveats for antidepressants. Journal of Clinical Epidemiology, 70, 155–163. https://doi.org/10.1016/j.jclinepi.2015.08.021

Egger, M., Smith, G. D., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. BMJ, 315(7109), 629–634. https://doi.org/10.1136/bmj.315.7109.629

Epskamp, S. (2019). Reproducibility and replicability in a fast-paced methodological world. Advances in Methods and Practices in Psychological Science, 2(2), 145–155. https://doi.org/10.1177/2515245919847421

Ferrell, S., Demla, S., Anderson, J. M., Weaver, M., Torgerson, T., Hartwell, M., & Vassar, M. (2022). Association between industry sponsorship and author conflicts of interest with outcomes of systematic reviews and meta-analyses of interventions for opioid use disorder. Journal of Substance Abuse Treatment, 132, 108598. https://doi.org/10.1016/j.jsat.2021.108598

Gates, N. J., Rutjes, A. W., Nisio, M. D., Karim, S., Chong, L.-Y., March, E., Martínez, G., & Vernooij, R. W. (2020). Computerised cognitive training for 12 or more weeks for maintaining cognitive function in cognitively healthy people in late life. Cochrane Database of Systematic Reviews, 2. https://doi.org/10.1002/14651858.CD012277.pub3

Gavelin, H. M., Lampit, A., Hallock, H., Sabatés, J., & Bahar-Fuchs, A. (2020). Cognition-oriented treatments for older adults: A systematic overview of systematic reviews. Neuropsychology Review, 30(2), 167–193. https://doi.org/10.1007/s11065-020-09434-8

Giustiniani, A., Maistrello, L., Danesin, L., Rigon, E., & Burgio, F. (2022). Effects of cognitive rehabilitation in Parkinson disease: A meta-analysis. Neurological Sciences, 43(4), 2323–2337. https://doi.org/10.1007/s10072-021-05772-4

Gobet, F., & Sala, G. (2022). Cognitive training: A field in search of a phenomenon. Perspectives on Psychological Science, 18(1), 125–141. https://doi.org/10.1177/17456916221091830

Goldberg, Z., Kuslak, B., & Kurtz, M. M. (2023). A meta-analytic investigation of cognitive remediation for mood disorders: Efficacy and the role of study quality, sample and treatment factors. Journal of Affective Disorders, 330, 74–82. https://doi.org/10.1016/j.jad.2023.02.137

Gucciardi, D. F., Lines, R. L. J., & Ntoumanis, N. (2022). Handling effect size dependency in meta-analysis. International Review of Sport and Exercise Psychology, 15(1), 152–178. https://doi.org/10.1080/1750984X.2021.1946835

Ha, J.-Y., & Park, H.-J. (2023). Effects of mobile-based cognitive interventions for the cognitive function in the community-dwelling older adults: A systematic review and meta-analysis. Archives of Gerontology and Geriatrics, 104, 104829. https://doi.org/10.1016/j.archger.2022.104829

Hallock, H., Collins, D., Lampit, A., Deol, K., Fleming, J., & Valenzuela, M. (2016). Cognitive training for post-acute traumatic brain injury: A systematic review and meta-analysis. Frontiers in Human Neuroscience, 10, 537. https://doi.org/10.3389/fnhum.2016.00537

Hardwicke, T. E., & Wagenmakers, E.-J. (2021). Reducing bias, increasing transparency, and calibrating confidence with preregistration. Nature Human Behaviour, 7(1), 15–26. https://doi.org/10.1038/s41562-022-01497-2

Hardwicke, T. E., Wallach, J. D., Kidwell, M. C., Bendixen, T., Crüwell, S., & Ioannidis, J. P. A. (2020). An empirical assessment of transparency and reproducibility-related research practices in the social sciences (2014–2017). Royal Society Open Science, 7(2), 190806. https://doi.org/10.1098/rsos.190806

Harrer, M., Cuijpers, P., Furukawa, T. A., & Ebert, D. D. (2021). Doing meta-analysis with R: A hands-on guide (1st ed.). Chapman & Hall/CRC Press.

Harvey, P. D., McGurk, S. R., Mahncke, H., & Wykes, T. (2018). Controversies in computerized cognitive training. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 3(11), 907–915. https://doi.org/10.1016/j.bpsc.2018.06.008

He, F., Huang, H., Ye, L., Wen, X., & Cheng, A. S. K. (2022). Meta-analysis of neurocognitive rehabilitation for cognitive dysfunction among pediatric cancer survivors. Journal of Cancer Research and Therapeutics, 18(7), 2058. https://doi.org/10.4103/jcrt.jcrt_1429_22

Hedges, L. V., Tipton, E., & Johnson, M. C. (2010). Robust variance estimation in meta-regression with dependent effect size estimates. Research Synthesis Methods, 1(1), 39–65. https://doi.org/10.1002/jrsm.5

Hill, N. T., Mowszowski, L., Naismith, S. L., Chadwick, V. L., Valenzuela, M., & Lampit, A. (2017). Computerized cognitive training in older adults with mild cognitive impairment or dementia: A systematic review and meta-analysis. American Journal of Psychiatry, 174(4), 329–340. https://doi.org/10.1176/appi.ajp.2016.16030360

Hou, J., Jiang, T., Fu, J., Su, B., Wu, H., Sun, R., & Zhang, T. (2020). The long-term efficacy of working memory training in healthy older adults: A systematic review and meta-analysis of 22 randomized controlled trials. The Journals of Gerontology: Series B, 75(8), e174–e188. https://doi.org/10.1093/geronb/gbaa077

Huntley, J. D., Gould, R. L., Liu, K., Smith, M., & Howard, R. J. (2015). Do cognitive interventions improve general cognition in dementia? A meta-analysis and meta-regression. BMJ Open, 5(4), e005247. https://doi.org/10.1136/bmjopen-2014-005247

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLOS Medicine, 2(8), e124. https://doi.org/10.1371/journal.pmed.0020124

Ioannidis, J. (2016). The mass production of redundant, misleading, and conflicted systematic reviews and meta-analyses. The Milbank Quarterly, 94(3), 485–514. https://doi.org/10.1111/1468-0009.12210

Jones, W. E., Benge, J. F., & Scullin, M. K. (2021). Preserving prospective memory in daily life: A systematic review and meta-analysis of mnemonic strategy, cognitive training, external memory aid, and combination interventions. Neuropsychology, 35, 123–140. https://doi.org/10.1037/neu0000704

Kambeitz-Ilankovic, L., Betz, L. T., Dominke, C., Haas, S. S., Subramaniam, K., Fisher, M., Vinogradov, S., Koutsouleris, N., & Kambeitz, J. (2019). Multi-outcome meta-analysis (MOMA) of cognitive remediation in schizophrenia: Revisiting the relevance of human coaching and elucidating interplay between multiple outcomes. Neuroscience & Biobehavioral Reviews, 107, 828–845. https://doi.org/10.1016/j.neubiorev.2019.09.031

Karbach, J., & Verhaeghen, P. (2014). Making working memory work: A meta-analysis of executive-control and working memory training in older adults. Psychological Science, 25(11), 2027–2037. https://doi.org/10.1177/0956797614548725

Karch, D., Albers, L., Renner, G., Lichtenauer, N., & von Kries, R. (2013). The efficacy of cognitive training programs in children and adolescents. Deutsches Ärzteblatt International, 110(39), 643–652. https://doi.org/10.3238/arztebl.2013.0643

Kassai, R., Futo, J., Demetrovics, Z., & Takacs, Z. K. (2019). A meta-analysis of the experimental evidence on the near- and far-transfer effects among children’s executive function skills. Psychological Bulletin, 145, 165–188. https://doi.org/10.1037/bul0000180

Kelly, M. E., Loughrey, D., Lawlor, B. A., Robertson, I. H., Walsh, C., & Brennan, S. (2014). The impact of cognitive training and mental stimulation on cognitive and everyday functioning of healthy older adults: A systematic review and meta-analysis. Ageing Research Reviews, 15, 28–43. https://doi.org/10.1016/j.arr.2014.02.004

Koffel, J. B., & Rethlefsen, M. L. (2016). Reproducibility of search strategies is poor in systematic reviews published in high-impact pediatrics, cardiology and surgery journals: A cross-sectional study. PLoS ONE, 11(9), e0163309. https://doi.org/10.1371/journal.pone.0163309

Kvarven, A., Strømland, E., & Johannesson, M. (2020). Comparing meta-analyses and preregistered multiple-laboratory replication projects. Nature Human Behaviour, 4(4), Article 4. https://doi.org/10.1038/s41562-019-0787-z

Lakens, D., Page-Gould, E., van Assen, M. A. L. M., Spellman, B., Schönbrodt, F., Hasselman, F., Corker, K. S., Grange, J. A., Sharples, A., Cavender, C., Augusteijn, H. E. M., Augusteijn, H., Gerger, H., Locher, C., Miller, I. D., Anvari, F., & Scheel, A. M. (2017). Examining the reproducibility of meta-analyses in psychology: A preliminary report. MetaArXiv. https://doi.org/10.31222/osf.io/xfbjf

Lampit, A., Hallock, H., & Valenzuela, M. (2014). Computerized cognitive training in cognitively healthy older adults: A systematic review and meta-analysis of effect modifiers. PLOS Medicine, 11(11), e1001756. https://doi.org/10.1371/journal.pmed.1001756

Lampit, A., Heine, J., Finke, C., Barnett, M. H., Valenzuela, M., Wolf, A., Leung, I. H. K., & Hill, N. T. M. (2019). Computerized cognitive training in multiple sclerosis: A systematic review and meta-analysis. Neurorehabilitation and Neural Repair, 33(9), 695–706. https://doi.org/10.1177/1545968319860490

Leung, I. H. K., Walton, C. C., Hallock, H., Lewis, S. J. G., Valenzuela, M., & Lampit, A. (2015). Cognitive training in Parkinson disease: A systematic review and meta-analysis. Neurology, 85(21), 1843–1851. https://doi.org/10.1212/WNL.0000000000002145

Light, R. J., & Pillemer, D. B. (1984). Summing up: The science of reviewing research. Harvard University Press.

Loetscher, T., Potter, K.-J., Wong, D., & das Nair, R. (2019). Cognitive rehabilitation for attention deficits following stroke. Cochrane Database of Systematic Reviews, 11. https://doi.org/10.1002/14651858.CD002842.pub3

López-López, J. A., Page, M. J., Lipsey, M. W., & Higgins, J. P. T. (2018). Dealing with effect size multiplicity in systematic reviews and meta-analyses. Research Synthesis Methods, 9(3), 336–351. https://doi.org/10.1002/jrsm.1310

López-López, J. A., Rubio-Aparicio, M., & Sánchez-Meca, J. (2022). Overviews of reviews: Concept and development. Psicothema, 34(2), 175–181. https://doi.org/10.7334/psicothema2021.586

López-Nicolás, R., López-López, J. A., Rubio-Aparicio, M., & Sánchez-Meca, J. (2022). A meta-review of transparency and reproducibility-related reporting practices in published meta-analyses on clinical psychological interventions (2000–2020). Behavior Research Methods, 54(1), 334–349. https://doi.org/10.3758/s13428-021-01644-z

Maassen, E., van Assen, M. A. L. M., Nuijten, M. B., Olsson-Collentine, A., & Wicherts, J. M. (2020). Reproducibility of individual effect sizes in meta-analyses in psychology. PLoS ONE, 15(5), e0233107. https://doi.org/10.1371/journal.pone.0233107

Maier, M., Bartoš, F., & Wagenmakers, E.-J. (2022). Robust Bayesian meta-analysis: Addressing publication bias with model-averaging. Psychological Methods, 28(1), 107. https://doi.org/10.1037/met0000405

McGurk, S. R., Twamley, E. W., Sitzer, D. I., McHugo, G. J., & Mueser, K. T. (2007). A meta-analysis of cognitive remediation in schizophrenia. American Journal of Psychiatry, 164(12), 1791–1802. https://doi.org/10.1176/appi.ajp.2007.07060906

Melby-Lervåg, M., & Hulme, C. (2016). There is no convincing evidence that working memory training is effective: A reply to Au et al. (2014) and Karbach and Verhaeghen (2014). Psychonomic Bulletin & Review, 23(1), 324–330. https://doi.org/10.3758/s13423-015-0862-z

Melby-Lervåg, M., Redick, T. S., & Hulme, C. (2016). Working memory training does not improve performance on measures of intelligence or other measures of “Far Transfer”: Evidence from a meta-analytic review. Perspectives on Psychological Science, 11(4), 512–534. https://doi.org/10.1177/1745691616635612

Moeyaert, M., Ugille, M., Natasha Beretvas, S., Ferron, J., Bunuan, R., & Van den Noortgate, W. (2017). Methods for dealing with multiple outcomes in meta-analysis: A comparison between averaging effect sizes, robust variance estimation and multilevel meta-analysis. International Journal of Social Research Methodology, 20(6), 559–572. https://doi.org/10.1080/13645579.2016.1252189

Moreau, D., & Gamble, B. (2022). Conducting a meta-analysis in the age of open science: Tools, tips, and practical recommendations. Psychological Methods, 27(3), 426–432. https://doi.org/10.1037/met0000351

Nguyen, L., Murphy, K., & Andrews, G. (2019). Immediate and long-term efficacy of executive functions cognitive training in older adults: A systematic review and meta-analysis. Psychological Bulletin, 145, 698–733. https://doi.org/10.1037/bul0000196

Nguyen, L., Murphy, K., & Andrews, G. (2022). A game a day keeps cognitive decline away? A systematic review and meta-analysis of commercially-available brain training programs in healthy and cognitively impaired older adults. Neuropsychology Review, 32(3), 601–630. https://doi.org/10.1007/s11065-021-09515-2

Nosek, B. A., Beck, E. D., Campbell, L., Flake, J. K., Hardwicke, T. E., Mellor, D. T., van ’t Veer, A. E., & Vazire, S. (2019). Preregistration is hard, and worthwhile. Trends in Cognitive Sciences, 23(10), 815–818. https://doi.org/10.1016/j.tics.2019.07.009

Oldrati, V., Corti, C., Poggi, G., Borgatti, R., Urgesi, C., & Bardoni, A. (2020). Effectiveness of computerized cognitive training programs (CCTP) with game-like features in children with or without neuropsychological disorders: A meta-analytic investigation. Neuropsychology Review, 30(1), 126–141. https://doi.org/10.1007/s11065-020-09429-5

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716. https://doi.org/10.1126/science.aac4716

Page, M. J., McKenzie, J. E., & Forbes, A. (2013). Many scenarios exist for selective inclusion and reporting of results in randomized trials and systematic reviews. Journal of Clinical Epidemiology, 66(5), 524–537. https://doi.org/10.1016/j.jclinepi.2012.10.010

Page, M. J., Moher, D., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., & … McKenzie, J. E. (2021). PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ, 372, n160. https://doi.org/10.1136/bmj.n160

Page, M. J., Shamseer, L., & Tricco, A. C. (2018). Registration of systematic reviews in PROSPERO: 30,000 records and counting. Systematic Reviews, 7(1), 32. https://doi.org/10.1186/s13643-018-0699-4

Pigott, T. D., & Polanin, J. R. (2020). Methodological guidance paper: High-quality meta-analysis in a systematic review. Review of Educational Research, 90(1), 24–46. https://doi.org/10.3102/0034654319877153

Polanin, J. R., Hennessy, E. A., & Tsuji, S. (2020). Transparency and reproducibility of meta-analyses in psychology: A meta-review. Perspectives on Psychological Science, 15(4), 1026–1041. https://doi.org/10.1177/1745691620906416

Pustejovsky, J. E., & Tipton, E. (2022). Meta-analysis with robust variance estimation: Expanding the range of working models. Prevention Science, 23(3), 425–438. https://doi.org/10.1007/s11121-021-01246-3

R Core Team. (2021). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/

Redick, T. S. (2019). The hype cycle of working memory training. Current Directions in Psychological Science, 28(5), 423–429. https://doi.org/10.1177/0963721419848668

Robinson, K. E., Kaizar, E., Catroppa, C., Godfrey, C., & Yeates, K. O. (2014). Systematic review and meta-analysis of cognitive interventions for children with central nervous system disorders and neurodevelopmental disorders. Journal of Pediatric Psychology, 39(8), 846–865. https://doi.org/10.1093/jpepsy/jsu031

Rubio-Aparicio, M., Marín-Martínez, F., Sánchez-Meca, J., & López-López, J. A. (2018). A methodological review of meta-analyses of the effectiveness of clinical psychology treatments. Behavior Research Methods, 50(5), 2057–2073. https://doi.org/10.3758/s13428-017-0973-8

Sala, G., & Gobet, F. (2017). Working memory training in typically developing children: A meta-analysis of the available evidence. Developmental Psychology, 53, 671–685. https://doi.org/10.1037/dev0000265

Sala, G., & Gobet, F. (2019). Cognitive training does not enhance general cognition. Trends in Cognitive Sciences, 23(1), 9–20. https://doi.org/10.1016/j.tics.2018.10.004

Sala, G., & Gobet, F. (2020). Working memory training in typically developing children: A multilevel meta-analysis. Psychonomic Bulletin & Review, 27(3), 423–434. https://doi.org/10.3758/s13423-019-01681-y

Savović, J., Jones, H. E., Altman, D. G., Harris, R. J., Jüni, P., Pildal, J., Als-Nielsen, B., Balk, E. M., Gluud, C., Gluud, L. L., Ioannidis, J. P. A., Schulz, K. F., Beynon, R., Welton, N. J., Wood, L., Moher, D., Deeks, J. J., & Sterne, J. A. C. (2012). Influence of reported study design characteristics on intervention effect estimates from randomized, controlled trials. Annals of Internal Medicine, 157(6), 429–438. https://doi.org/10.7326/0003-4819-157-6-201209180-00537

Schwaighofer, M., Fischer, F., & Buehner, M. (2015). Does working memory training transfer? A meta-analysis including training conditions as moderators. Educational Psychologist, 50(2), 138–166. https://doi.org/10.1080/00461520.2015.1036274

Scionti, N., Cavallero, M., Zogmaister, C., & Marzocchi, G. M. (2020). Is cognitive training effective for improving executive functions in preschoolers? A systematic review and meta-analysis. Frontiers in Psychology, 10. https://www.frontiersin.org/articles/10.3389/fpsyg.2019.02812

Shenkin, S. D., Harrison, J. K., Wilkinson, T., Dodds, R. M., & Ioannidis, J. P. A. (2017). Systematic reviews: Guidance relevant for studies of older people. Age and Ageing, 46(5), 722–728. https://doi.org/10.1093/ageing/afx105

Shrout, P. E., & Rodgers, J. L. (2018). Psychology, science, and knowledge construction: Broadening perspectives from the replication crisis. Annual Review of Psychology, 69(1), 487–510. https://doi.org/10.1146/annurev-psych-122216-011845

Siegel, M., Eder, J. S. N., Wicherts, J. M., & Pietschnig, J. (2021). Times are changing, bias isn’t: A meta-meta-analysis on publication bias detection practices, prevalence rates, and predictors in industrial/organizational psychology. Journal of Applied Psychology, 107(11), 2013. https://doi.org/10.1037/apl0000991

Signorell, A., Aho, K., Alfons, A., Anderegg, N., Aragon, T., Arppe, A., ... & Borchers, H. W. (2019). DescTools: Tools for descriptive statistics. R package version 0.99, 28, 17.

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22(11), 1359–1366. https://doi.org/10.1177/0956797611417632

Simons, D. J., Boot, W. R., Charness, N., Gathercole, S. E., Chabris, C. F., Hambrick, D. Z., & Stine-Morrow, E. A. L. (2016). Do “brain-training” programs work? Psychological Science in the Public Interest, 17(3), 103–186. https://doi.org/10.1177/1529100616661983

Simonsohn, U., Nelson, L. D., & Simmons, J. P. (2014). P-curve: A key to the file-drawer. Journal of Experimental Psychology: General, 143(2), 534. https://doi.org/10.1037/a0033242

Spencer-Smith, M., & Klingberg, T. (2015). Benefits of a working memory training program for inattention in daily life: A systematic review and meta-analysis. PLoS ONE, 10(3), e0119522. https://doi.org/10.1371/journal.pone.0119522

Stanley, T. D., & Doucouliagos, H. (2014). Meta‐regression approximations to reduce publication selection bias. Research Synthesis Methods, 5(1), 60–78. https://doi.org/10.1002/jrsm.1095

Tanner-Smith, E. E., Tipton, E., & Polanin, J. R. (2016). Handling complex meta-analytic data structures using robust variance estimates: A tutorial in R. Journal of Developmental and Life-Course Criminology, 2(1), 85–112. https://doi.org/10.1007/s40865-016-0026-5

Teixeira-Santos, A. C., Moreira, C. S., Magalhães, R., Magalhães, C., Pereira, D. R., Leite, J., Carvalho, S., & Sampaio, A. (2019). Reviewing working memory training gains in healthy older adults: A meta-analytic review of transfer for cognitive outcomes. Neuroscience & Biobehavioral Reviews, 103, 163–177. https://doi.org/10.1016/j.neubiorev.2019.05.009

Tetlow, A. M., & Edwards, J. D. (2017). Systematic literature review and meta-analysis of commercially available computerized cognitive training among older adults. Journal of Cognitive Enhancement, 1(4), 559–575. https://doi.org/10.1007/s41465-017-0051-2

Traut, H. J., Guild, R. M., & Munakata, Y. (2021). Why does cognitive training yield inconsistent benefits? A meta-analysis of individual differences in baseline cognitive abilities and training outcomes. Frontiers in Psychology, 12. https://www.frontiersin.org/articles/10.3389/fpsyg.2021.662139

Tricco, A. C., Cogo, E., Page, M. J., Polisena, J., Booth, A., Dwan, K., MacDonald, H., Clifford, T. J., Stewart, L. A., Straus, S. E., & Moher, D. (2016). A third of systematic reviews changed or did not specify the primary outcome: A PROSPERO register study. Journal of Clinical Epidemiology, 79, 46–54. https://doi.org/10.1016/j.jclinepi.2016.03.025

van Aert, R. C., Wicherts, J. M., & van Assen, M. A. (2016). Conducting meta-analyses based on p values: Reservations and recommendations for applying p-uniform and p-curve. Perspectives on Psychological Science, 11(5), 713–729. https://doi.org/10.1177/1745691616650874

van Aert, R. C. M., Wicherts, J. M., & van Assen, M. A. L. M. (2019). Publication bias examined in meta-analyses from psychology and medicine: A meta-meta-analysis. PLoS ONE, 14(4), e0215052. https://doi.org/10.1371/journal.pone.0215052

van den Akker, O. R., van Assen, M. A. L. M., Enting, M., de Jonge, M., Ong, H. H., Rüffer, F., Schoenmakers, M., Stoevenbelt, A. H., Wicherts, J. M., & Bakker, M. (2023). Selective hypothesis reporting in psychology: Comparing preregistrations and corresponding publications. Advances in Methods and Practices in Psychological Science, 6(3). https://doi.org/10.1177/25152459231187988

Van den Noortgate, W., López-López, J. A., Marín-Martínez, F., & Sánchez-Meca, J. (2015). Meta-analysis of multiple outcomes: A multilevel approach. Behavior Research Methods, 47(4), 1274–1294. https://doi.org/10.3758/s13428-014-0527-2

Veroniki, A. A., Jackson, D., Viechtbauer, W., Bender, R., Bowden, J., Knapp, G., Kuss, O., Higgins, J. P., Langan, D., & Salanti, G. (2016). Methods to estimate the between-study variance and its uncertainty in meta-analysis. Research Synthesis Methods, 7(1), 55–79. https://doi.org/10.1002/jrsm.1164

Vevea, J. L., & Woods, C. M. (2005). Publication bias in research synthesis: Sensitivity analysis using a priori weight functions. Psychological Methods, 10(4), 428. https://doi.org/10.1037/1082-989X.10.4.428

Voracek, M., Kossmeier, M., & Tran, U. S. (2019). Which data to meta-analyze, and how? Zeitschrift Für Psychologie, 227(1), 64–82. https://doi.org/10.1027/2151-2604/a000357

Wallach, J. D., Boyack, K. W., & Ioannidis, J. P. A. (2018). Reproducible research practices, transparency, and open access data in the biomedical literature, 2015–2017. PLOS Biology, 16(11), e2006930. https://doi.org/10.1371/journal.pbio.2006930

Weicker, J., Villringer, A., & Thöne-Otto, A. (2016). Can impaired working memory functioning be improved by training? A meta-analysis with a special focus on brain injured patients. Neuropsychology, 30(2), 190–212. https://doi.org/10.1037/neu0000227

Wicherts, J. M., Veldkamp, C. L. S., Augusteijn, H. E. M., Bakker, M., van Aert, R. C. M., & van Assen, M. A. L. M. (2016). Degrees of freedom in planning, running, analyzing, and reporting psychological studies: A checklist to avoid p-hacking. Frontiers in Psychology, 7. https://www.frontiersin.org/articles/10.3389/fpsyg.2016.01832

Wickham, H., Averick, M., Bryan, J., Chang, W., McGowan, L. D., François, R., Grolemund, G., Hayes, A., Henry, L., Hester, J., Kuhn, M., Pedersen, T. L., Miller, E., Bache, S. M., Müller, K., Ooms, J., Robinson, D., Seidel, D. P., Spinu, V., & … Yutani, H. (2019). Welcome to the Tidyverse. Journal of Open Source Software, 4(43), 1686. https://doi.org/10.21105/joss.01686

Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., Blomberg, N., Boiten, J.-W., da Silva Santos, L. B., Bourne, P. E., Bouwman, J., Brookes, A. J., Clark, T., Crosas, M., Dillo, I., Dumon, O., Edmunds, S., Evelo, C. T., Finkers, R., & … Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3(1), Article 1. https://doi.org/10.1038/sdata.2016.18

Woolf, C., Lampit, A., Shahnawaz, Z., Sabates, J., Norrie, L. M., Burke, D., Naismith, S. L., & Mowszowski, L. (2022). A systematic review and meta-analysis of cognitive training in adults with major depressive disorder. Neuropsychology Review, 32(2), 419–437. https://doi.org/10.1007/s11065-021-09487-3

Wykes, T., Huddy, V., Cellard, C., McGurk, S. R., & Czobor, P. (2011). A meta-analysis of cognitive remediation for Schizophrenia: Methodology and effect sizes. American Journal of Psychiatry, 168(5), 472–485. https://doi.org/10.1176/appi.ajp.2010.10060855

Yan, X., Wei, S., & Liu, Q. (2023). Effect of cognitive training on patients with breast cancer reporting cognitive changes: A systematic review and meta-analysis. BMJ Open, 13(1). https://doi.org/10.1136/bmjopen-2021-058088

Yank, V., Rennie, D., & Bero, L. A. (2007). Financial ties and concordance between results and conclusions in meta-analyses: Retrospective cohort study. BMJ, 335(7631), 1202–1205. https://doi.org/10.1136/bmj.39376.447211.BE

Zhang, H., Huntley, J., Bhome, R., Holmes, B., Cahill, J., Gould, R. L., Wang, H., Yu, X., & Howard, R. (2019). Effect of computerised cognitive training on cognitive outcomes in mild cognitive impairment: A systematic review and meta-analysis. BMJ Open, 9(8). https://doi.org/10.1136/bmjopen-2018-027062

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This study was funded by the Agencia Estatal de Investigación (Government of Spain) and by FEDER Funds, AEI/10.13039/501100011033 (grant nos. PID2019‐104033GA‐I00 and PID2019‐104080GB‐I00), as well as by the Region of Murcia (Spain) through the Regional Program for the Promotion of Scientific and Technical Research of Excellence (Action Plan 2022) of the Seneca Foundation - Science and Technology Agency of the Region of Murcia (grant no. 22064/PI/22).

Author information

Contributions