Abstract

In this work, we address the optimal control problem of maximizing biogas production in a chemostat. The dilution rate is the control variable, and we study the problem over a fixed finite horizon, for positive initial conditions. We consider the single reaction model and work with a broad class of growth rate functions. Using the Pontryagin maximum principle, we construct a one-parameter family of extremal controls of bang-singular arc type. The parameter of these extremal controls is the constant value of the Hamiltonian. Using the Hamilton–Jacobi–Bellman equation, we identify the optimal control as the extremal associated with the value of the Hamiltonian that satisfies a fixed point equation. We then propose a numerical algorithm to compute the optimal control by solving this fixed point equation. We illustrate this method with the two major types of growth functions, Monod and Haldane.


Notes

The approach we have taken provides a new proof for the optimality of the synthesis already known for the reduced model, that is, the case where \(s_{\text {in}}=x_0+s_0\). To show the consistency of our approach, the details for this case are included in the Appendix.

References

Rehl, T., Müller, J.: CO\(_{2}\) abatement costs of greenhouse gas (GHG) mitigation by different biogas conversion pathways. J. Environ. Manag. 114, 13–25 (2013)

Beddoes, J.C., Bracmort, K.S., Burns, R.T., Lazarus, W.F.: An analysis of energy production costs from anaerobic digestion systems on US livestock production facilities. USDA NRCS Technical Note (1) (2007)

Guwy, A., Hawkes, F., Wilcox, S., Hawkes, D.: Neural network and on-off control of bicarbonate alkalinity in a fluidised-bed anaerobic digester. Water Res. 31(8), 2019–2025 (1997)

Nguyen, D., Gadhamshetty, V., Nitayavardhana, S., Khanal, S.K.: Automatic process control in anaerobic digestion technology: a critical review. Bioresour. Technol. 193, 513–522 (2015)

García-Diéguez, C., Molina, F., Roca, E.: Multi-objective cascade controller for an anaerobic digester. Process Biochem. 46(4), 900–909 (2011)

Rodríguez, J., Ruiz, G., Molina, F., Roca, E., Lema, J.: A hydrogen-based variable-gain controller for anaerobic digestion processes. Water Sci. Technol. 54(2), 57–62 (2006)

Djatkov, D., Effenberger, M., Martinov, M.: Method for assessing and improving the efficiency of agricultural biogas plants based on fuzzy logic and expert systems. Appl. Energy 134, 163–175 (2014)

Dimitrova, N., Krastanov, M.: Nonlinear adaptive stabilizing control of an anaerobic digestion model with unknown kinetics. Int. J. Robust Nonlinear Control 22(15), 1743–1752 (2012)

Sbarciog, M., Loccufier, M., Wouwer, A.V.: An optimizing start-up strategy for a bio-methanator. Bioprocess Biosyst. Eng. 35(4), 565–578 (2012)

Bayen, T., Cots, O., Gajardo, P.: Analysis of an optimal control problem related to the anaerobic digestion process. J. Optim. Theory Appl. 178, 627–659 (2018)

Bernard, O., Chachuat, B., Hélias, A., Rodriguez, J.: Can we assess the model complexity for a bioprocess: theory and example of the anaerobic digestion process. Water Sci. Technol. 53(1), 85–92 (2006)

Stamatelatou, K., Lyberatos, G., Tsiligiannis, C., Pavlou, S., Pullammanappallil, P., Svoronos, S.: Optimal and suboptimal control of anaerobic digesters. Environ. Model. Assess. 2(4), 355–363 (1997)

Ghouali, A., Sari, T., Harmand, J.: Maximizing biogas production from the anaerobic digestion. J. Process Control 36, 79–88 (2015)

Haddon, A., Harmand, J., Ramírez, H., Rapaport, A.: Guaranteed value strategy for the optimal control of biogas production in continuous bio-reactors. IFAC PapersOnLine 50(1), 8728–8733 (2017)

Haddon, A., Ramírez, H., Rapaport, A.: First results of optimal control of average biogas production for the chemostat over an infinite horizon. IFAC PapersOnLine 51(2), 725–729 (2018)

Team Commands, Inria Saclay: Bocop: an open source toolbox for optimal control (2017). http://www.bocop.org. Accessed 2018

Gerdts, M.: Optimal control and parameter identification with differential-algebraic equations of index 1: user’s guide. Tech. rep., Institut für Mathematik und Rechneranwendung, Universität der Bundeswehr München (2011)

Bokanowski, O., Desilles, A., Zidani, H.: ROC-HJ-Solver. A C++ library for solving HJ equations (2013). http://uma.ensta-paristech.fr/soft/ROC-HJ/. Accessed 2018

Yane (2018). http://www.nonlinearmpc.com. Accessed 2018

Lobry, C., Rapaport, A., Sari, T., et al.: The Chemostat: Mathematical Theory of Microorganism Cultures. Wiley, New York (2017)

Bastin, G., Dochain, D.: On-line Estimation and Adaptive Control of Bioreactors. Elsevier, Amsterdam (1991)

Hermosilla, C.: Stratified discontinuous differential equations and sufficient conditions for robustness. Discrete Contin. Dyn. Syst.-Ser. A 35(9), 23 (2015)

Clarke, F.H., Ledyaev, Y.S., Stern, R.J., Wolenski, P.R.: Nonsmooth Analysis and Control Theory, vol. 178. Springer Science & Business Media, Berlin (2008)

Clarke, F.: Functional Analysis, Calculus of Variations and Optimal Control, vol. 264. Springer Science & Business Media, Berlin (2013)

Hermosilla, C., Zidani, H.: Infinite horizon problems on stratifiable state-constraints sets. J. Differ. Equ. 258(4), 1430–1460 (2015)

Bardi, M., Capuzzo-Dolcetta, I.: Optimal Control and Viscosity Solutions of Hamilton–Jacobi–Bellman Equations. Springer Science & Business Media, Berlin (2008)

Bernard, O., Hadj-Sadok, Z., Dochain, D., Genovesi, A., Steyer, J.P.: Dynamical model development and parameter identification for an anaerobic wastewater treatment process. Biotechnol. Bioeng. 75(4), 424–438 (2001)

Bonnans, J.F., Giorgi, D., Grelard, V., Heymann, B., Maindrault, S., Martinon, P., Tissot, O., Liu, J.: Bocop: a collection of examples. Tech. rep., INRIA (2017). http://www.bocop.org. Accessed 2018

Acknowledgements

A. Haddon was supported by a doctoral fellowship CONICYT-PFCHA/Doctorado Nacional/2017-21170249. The first author was also supported by FONDECYT Grant 1160567 and by Basal Program CMM-AFB 170001 from CONICYT-Chile. C. Hermosilla was supported by CONICYT-Chile through FONDECYT Grant Number 3170485.


Additional information

Communicated by Lorenz Biegler.


Appendix: Reduced Model

In this final part, we provide an HJB proof of the optimal synthesis for the reduced model, that is, the case where the initial data satisfy \(s_{\text {in}}=x_0+s_0\). In particular, we show how the fixed point characterization of the optimal control can be used analytically in the special case where the dynamics reduce to a single equation. A well-known property of the chemostat model is that the set \(I:=\{ (x,s)\in \mathbb {R}^2 :x+s=s_{\text {in}} \}\) is invariant for dynamics (1), and thus, for initial conditions in I, the dynamics reduce to \(\dot{s} = \big (D - \mu (s) \big ) (s_{\text {in}} -s).\) This special case was solved in [13] under the following assumptions:

-

(H1)

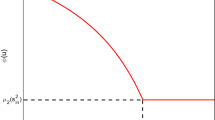

The function \(s \mapsto \mu (s) (s_{\text {in}} -s)\) has a unique maximizer \(s^*\) on \([0,s_{\text {in}}]\).

-

(H2)

The upper bound on the controls is such that \(D_{\max } > \mu (s^*)\).

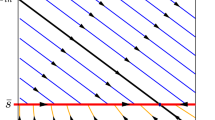
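As a rough illustration of assumptions (H1) and (H2), the following sketch locates the maximizer \(s^*\) by a plain grid search for a Monod and a Haldane growth function. The function names, the parameter values and the grid size are hypothetical choices made for this example only; a grid search finds a maximizer but does not prove that it is unique.

```python
import math

def monod(s, mu_max=1.0, K=1.0):
    """Monod growth rate (illustrative parameter values)."""
    return mu_max * s / (K + s)

def haldane(s, mu_bar=1.0, K=1.0, K_i=2.0):
    """Haldane growth rate (illustrative parameter values)."""
    return mu_bar * s / (K + s + s * s / K_i)

def argmax_on_grid(mu, s_in, n=30_001):
    """Grid search for a maximizer of s -> mu(s) * (s_in - s) on [0, s_in]."""
    best_s, best_val = 0.0, -math.inf
    for i in range(n):
        s = s_in * i / (n - 1)
        val = mu(s) * (s_in - s)
        if val > best_val:
            best_s, best_val = s, val
    return best_s

s_in, D_max = 3.0, 1.0  # assumed inflow concentration and control bound
for name, mu in (("Monod", monod), ("Haldane", haldane)):
    s_star = argmax_on_grid(mu, s_in)
    # (H2) asks for D_max > mu(s*)
    print(name, "s* ~", round(s_star, 4), "| (H2) holds:", D_max > mu(s_star))
```

With these assumed values, both growth functions have an interior maximizer and satisfy (H2).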

The optimal control is then \(D^*(s)= 0\) if \(s>s^*\), \(D^*(s)= D_{\max }\) if \(s<s^*\) and \(D^*(s)=\mu (s^*)\) if \(s=s^*\). Here, we will give another proof of the optimality of this control, by using the fixed point characterization. First, we can identify the control \(D^*\) as a control of type (15) where the singular arc is reduced to \(s=s^*\). In other words, it corresponds to the control \(\psi _{h^*}\) where \(h^*\) satisfies Eq. (9) for the singular arc with \(s=s^*\), which in this case is \(h^* \mu '(s^*) = \mu (s^*)^2\). Next, since \(s^*\) is a maximizer, we have \(\mu '(s^*)(s_{\text {in}}-s^*) - \mu (s^*) =0\) and therefore \(h^*= \mu (s^*)(s_{\text {in}} - s^* )\).
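The feedback \(D^*\) and the two identities for \(h^*\) can be checked numerically. The sketch below assumes a Monod growth function with illustrative parameters; the names `d_star`, `S_STAR` and the tolerance `tol` are hypothetical, and the closed form for \(s^*\) holds for the Monod law only.

```python
import math

MU_MAX, K, S_IN, D_MAX = 1.0, 1.0, 3.0, 1.0  # illustrative values only

def mu(s):
    """Monod growth rate."""
    return MU_MAX * s / (K + s)

def dmu(s):
    """Derivative of the Monod growth rate."""
    return MU_MAX * K / (K + s) ** 2

# For the Monod law, the first-order condition of s -> mu(s) * (S_IN - s)
# reduces to s^2 + 2*K*s - K*S_IN = 0, whose positive root is s*.
S_STAR = -K + math.sqrt(K * K + K * S_IN)

def d_star(s, tol=1e-12):
    """Bang-singular feedback: no dilution above s*, maximal dilution below."""
    if s > S_STAR + tol:
        return 0.0
    if s < S_STAR - tol:
        return D_MAX
    return mu(S_STAR)

h_star = mu(S_STAR) * (S_IN - S_STAR)

# Singular-arc relation h* mu'(s*) = mu(s*)^2 and first-order condition at s*.
print("h* mu'(s*) - mu(s*)^2 =", h_star * dmu(S_STAR) - mu(S_STAR) ** 2)
print("mu'(s*)(s_in - s*) - mu(s*) =", dmu(S_STAR) * (S_IN - S_STAR) - mu(S_STAR))
```

Both printed residuals should vanish up to rounding, which matches the derivation of \(h^*= \mu (s^*)(s_{\text {in}} - s^* )\) above.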

To prove the optimality of \(\psi _{h^*}\), we must now show that \(h^*\) is a fixed point of the mapping \(h \mapsto - \partial _{t_0} J(t_0,x_0,s_0,\psi _{h})\). For this, we first study the trajectories obtained with the feedback control \(\psi _{h^*}\). We denote in the remainder of the section the right-hand side of the differential equation for \(s(\cdot )\) with control \(\psi _{h^*}\) as \(f(s):= (\psi _{h^*}(s) - \mu (s) ) (s_{\text {in}} -s)\).

Notice that for \(s>s^*\) we have \(f(s)=-\, \mu (s) (s_{\text {in}} -s) <0\) and for \(s<s^*\) we have \(f(s)= (D_{\max } - \mu (s) ) (s_{\text {in}} -s)>0\) from assumption (H2). Thus, \(s^*\) is reachable from any initial condition in I with control \(\psi _{h^*}\). We define the time \(t^*\) when \(s^*\) is reached, from a given initial condition \(s_0 \in [0,s_{\text {in}}]\) and initial time \(t_0\) with control \(\psi _{h^*}\), that is, \( t^*:= \inf \left\{ t \geqslant t_0 : s(t,t_0,s_0,\psi _{h^*} ) = s^*\right\} .\) Finally, note that with control \(D=\mu (s^*)\) the point \(s=s^*\) becomes a steady state. Therefore, the trajectories with control \(\psi _{h^*}\) are

\[ s(t,t_0,s_0,\psi _{h^*}) = \begin{cases} s_0 + \displaystyle \int _{t_0}^{t} f(s(\tau )) \, \text {d}\tau , & t \in [t_0,t^*), \\ s^*, & t \geqslant t^*. \end{cases} \]
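To make the reaching time \(t^*\) concrete, here is a minimal simulation sketch using an explicit Euler scheme. It assumes a Monod growth function with illustrative parameters; the function `reach_time`, the step `dt` and the horizon `T` are hypothetical choices, and the scheme is only first-order accurate.

```python
import math

MU_MAX, K, S_IN, D_MAX = 1.0, 1.0, 3.0, 1.0  # illustrative values only
S_STAR = -K + math.sqrt(K * K + K * S_IN)    # Monod maximizer of mu(s)(s_in - s)

def mu(s):
    return MU_MAX * s / (K + s)

def f(s):
    """Right-hand side of ds/dt under the bang-singular feedback."""
    if s > S_STAR:
        return -mu(s) * (S_IN - s)           # D = 0
    if s < S_STAR:
        return (D_MAX - mu(s)) * (S_IN - s)  # D = D_max
    return 0.0                               # D = mu(s*): steady state

def reach_time(s0, t0=0.0, T=5.0, dt=1e-3):
    """Euler estimate of t*; returns None when s* is not reached before T."""
    if s0 == S_STAR:
        return t0
    side = s0 - S_STAR
    s = s0
    n_steps = int(round((T - t0) / dt))
    for k in range(n_steps):
        s = s + dt * f(s)
        if (s - S_STAR) * side <= 0.0:       # trajectory hit or crossed s*
            return t0 + (k + 1) * dt
    return None

print("t* from s0 = 2.0:", reach_time(2.0))
print("t* from s0 = 0.5:", reach_time(0.5))
print("t* from s0 = 2.0 with T = 1:", reach_time(2.0, T=1.0))
```

For this Monod example the reaching times can also be integrated by hand, which gives roughly 1.155 from \(s_0=2\) and 0.393 from \(s_0=0.5\); the Euler estimates should agree to within the step size.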

We can now compute \(\partial _{t_0} J(t_0,x_0,s_0,\psi _{h^*})\), and for this, we need the following.

Lemma A.1

For any initial condition \((x_0,s_0) \in I\), for the trajectories with control \(\psi _{h^*}\), we have \(\partial _{t_0} s(t) = -\, f( s (t))\) at time \(t \in [t_0,t^*]\).

Proof

We can write the differential equation satisfied by \(s(\cdot )\) as \(s(t) = s_0 + \displaystyle \int _{t_0}^t f(s(\tau )) \, \text {d}\tau \), and differentiating with respect to \(t_0\), we get \(\partial _{t_0} s(t) = -\, f( s_0) + \displaystyle \int _{t_0}^t f'(s(\tau ))\partial _{t_0} s(\tau ) \, \text {d}\tau .\) This is a linear equation for \(\partial _{t_0} s(\cdot )\), and its solution is \(\partial _{t_0} s(t) = -\, f( s_0) \exp \left( \displaystyle \int _{t_0}^t f'(s(\tau )) \, \text {d}\tau \right) .\) Now, as f(s(t)) does not change sign for \(t\in [t_0,t^*)\) and since f(s(t)) is the derivative of s(t), we have \(\displaystyle \int _{t_0}^t f'(s(\tau )) \, \text {d}\tau = \int _{s_0}^{s(t)} \frac{f'(s)}{f(s)} \text {d}s = \ln \left( \frac{f(s(t))}{f(s_0)} \right) .\) Substituting this into the previous expression gives \(\partial _{t_0} s(t) = -\, f(s_0) \, \dfrac{f(s(t))}{f(s_0)} = -\, f(s(t))\). \(\square \)
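Lemma A.1 can be sanity-checked with a finite difference in \(t_0\). The sketch below assumes a Monod growth function, an initial condition above \(s^*\) (so that \(D=0\) along the arc) and a classical Runge-Kutta integrator; all names and numerical values are hypothetical and chosen for illustration.

```python
import math

MU_MAX, K, S_IN = 1.0, 1.0, 3.0              # illustrative values only
S_STAR = -K + math.sqrt(K * K + K * S_IN)    # Monod maximizer of mu(s)(s_in - s)

def f(s):
    """Right-hand side on the branch s > s*, where the feedback gives D = 0."""
    return -MU_MAX * s / (K + s) * (S_IN - s)

def flow(s0, duration, n=2000):
    """Integrate ds/dt = f(s) over `duration` with n classical RK4 steps."""
    h = duration / n
    s = s0
    for _ in range(n):
        k1 = f(s)
        k2 = f(s + 0.5 * h * k1)
        k3 = f(s + 0.5 * h * k2)
        k4 = f(s + h * k3)
        s += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return s

def s_at(t, t0, s0):
    """State at time t for the trajectory started at (t0, s0)."""
    return flow(s0, t - t0)

t, t0, s0, eps = 0.5, 0.0, 2.0, 1e-4         # t is chosen before s* is reached
lhs = (s_at(t, t0 + eps, s0) - s_at(t, t0 - eps, s0)) / (2.0 * eps)
rhs = -f(s_at(t, t0, s0))
print("finite difference of s in t0:", lhs)
print("-f(s(t)):                    ", rhs)
```

The two printed values should agree closely: delaying the start by a small amount leaves the trajectory that much further back along the same curve.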

We are now in a position to prove the optimality of the feedback control proposed earlier.

Proposition A.1

For any initial condition \((x_0,s_0) \in I\) and for any initial time \(t_0\) such that \(s^*\) is reachable, that is, when \(t^* \leqslant T\), we have \(\partial _{t_0} J(t_0,x_0,s_0,\psi _{h^*}) = -\mu ( s^* ) (s_{\text {in}}-s^*)\), so that \(\psi _{h^*}\) is the optimal control.

Proof

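We start by writing the cost as

\[ J(t_0,x_0,s_0,\psi _{h^*}) = \int _{t_0}^{t^*} \mu (s(t)) (s_{\text {in}}-s(t)) \, \text {d}t + (T-t^*) \, \mu (s^*) (s_{\text {in}}-s^*), \]

where we have used \(x = s_{\text {in}} - s\) on I. Writing \(g(s):= \mu (s) (s_{\text {in}}-s)\) and differentiating with respect to \(t_0\) we get

\[ \partial _{t_0} J = -\, g(s_0) + \partial _{t_0} t^* \, g(s(t^*)) + \int _{t_0}^{t^*} g'(s(t)) \, \partial _{t_0} s(t) \, \text {d}t - \partial _{t_0} t^* \, g(s^*). \]

Note that the terms with \(\partial _{t_0}t^*\) cancel out because \(s(t^*)=s^*\). Now, using Lemma A.1 we get

\[ \partial _{t_0} J = -\, g(s_0) - \int _{t_0}^{t^*} g'(s(t)) \, f(s(t)) \, \text {d}t = -\, g(s_0) - \big ( g(s^*) - g(s_0) \big ) = -\, \mu (s^*) (s_{\text {in}}-s^*). \]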

We conclude by recalling that \(h^* = \mu ( s^* ) (s_{\text {in}}-s^*) \), and therefore, \(h^*\) is a fixed point of \(h \mapsto - \partial _{t_0} J(t_0,x_0,s_0,\psi _{h})\). \(\square \)
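The fixed point property can also be checked numerically in a simple case. The sketch below assumes that the cost is the integral of \(\mu (s)(s_{\text {in}}-s)\) over \([t_0,T]\), a Monod growth function and an initial condition \(s_0>s^*\), so that \(\dot{s} = -\mu (s)(s_{\text {in}}-s)\) until \(s^*\) is reached. Under these assumptions the cost accumulated before \(t^*\) equals \(s_0-s^*\), and the travel time is a one-dimensional integral in \(s\). The names `travel_time` and `cost` and all numerical values are hypothetical.

```python
import math

MU_MAX, K, S_IN = 1.0, 1.0, 3.0              # illustrative values only
S_STAR = -K + math.sqrt(K * K + K * S_IN)    # Monod maximizer of mu(s)(s_in - s)

def g(s):
    """Assumed running cost mu(s) * (s_in - s) on the invariant set I."""
    return MU_MAX * s / (K + s) * (S_IN - s)

def travel_time(s0, n=2000):
    """Simpson quadrature of t* - t0 = int_{s*}^{s0} ds / g(s), for s0 > s*."""
    a, b = S_STAR, s0
    h = (b - a) / n                          # n must be even for Simpson's rule
    total = 1.0 / g(a) + 1.0 / g(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) / g(a + i * h)
    return total * h / 3.0

def cost(t0, s0, T):
    """Cost under the feedback when s0 > s* and s* is reached before T."""
    t_star = t0 + travel_time(s0)
    if t_star > T:
        raise ValueError("s* is not reached before T")
    # Before t*: ds/dt = -g(s), so the integral of g(s(t)) dt equals s0 - s*.
    return (s0 - S_STAR) + (T - t_star) * g(S_STAR)

h_star = g(S_STAR)
t0, s0, T, eps = 0.0, 2.0, 5.0, 1e-3
dJ_dt0 = (cost(t0 + eps, s0, T) - cost(t0 - eps, s0, T)) / (2.0 * eps)
print("-dJ/dt0 =", -dJ_dt0, " h* =", h_star)
```

The two printed values should coincide up to rounding. Because the dynamics are autonomous, shifting \(t_0\) only changes the time spent at \(s^*\), which is the mechanism behind the cancellation in the proof.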


Cite this article

Haddon, A., Hermosilla, C. An Algorithm for Maximizing the Biogas Production in a Chemostat. J Optim Theory Appl 182, 1150–1170 (2019). https://doi.org/10.1007/s10957-019-01522-x


DOI: https://doi.org/10.1007/s10957-019-01522-x

Keywords

- Optimal control

- Chemostat model

- Pontryagin maximum principle

- Hamilton–Jacobi–Bellman equations

- Optimal synthesis