Abstract

We propose the use of Bayesian estimation of risk preferences of individuals for applications of behavioral welfare economics to evaluate observed choices that involve risk. Bayesian estimation provides more systematic control of the use of informative priors over inferences about risk preferences for each individual in a sample. We demonstrate that these methods make a difference to the rigorous normative evaluation of decisions in a case study of insurance purchases. We also show that hierarchical Bayesian methods can be used to infer welfare reliably and efficiently even with significantly reduced demands on the number of choices that each subject has to make. Finally, we illustrate the natural use of Bayesian methods in the adaptive evaluation of welfare.


Notes

In various forms Bayesian analysis has long been applied to condition inferences from experimental data. For example, see Harrison (1990) and the effect of priors over risk preferences on inferences about bidding behavior in first-price sealed bid auctions. Closer to our own implementation, Nilsson et al. (2011) employ hierarchical Bayesian methods to make inferences about risk preferences under Cumulative Prospect Theory, which is a structurally rich model and relatively hard to reliably estimate at the individual level.

One might also be interested in measures of social welfare, derived from these individual welfare evaluations. Kitagawa and Tetenov (2018) consider a related issue, using a social welfare function defined directly over observable outcomes of individuals. They examine the determination of the sample of a population that should be treated by some intervention, when it is impossible to treat the full population with the available budget, and when one has baseline data with which to condition who to treat with what intervention. They explicitly recognize (p. 592) that when “multiple outcome variables enter into the individual utility (e.g., consumption and leisure), [the individual outcome measure] can be set to a known function of these outcomes.” For us the challenge is to estimate this “known function” and account for the statistical properties of those estimates. The experimental task we use to estimate risk preferences is our counterpart of their baseline survey, albeit fully incentivized of course.

An extension of this approach conditions inferences about each parameter on a list of observable demographic characteristics of the pooled sample. One can then generate predictions about the distributions of these parameters that condition on the specific values of the characteristics of each individual being normatively evaluated, and use these predictions as priors for Bayesian inferences that pool the sample data for that individual. We evaluate this extension in Gao et al. (2020) and find that it adds no substantive insight for the sample from our population, although it does add considerable computational burden. This conclusion may be specific to our relatively homogeneous population; we encourage examination of this extension for applications to field populations that are likely more heterogeneous.
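The extension above can be illustrated, under strong simplifying assumptions, with a pooled regression of individual estimates on one covariate followed by a conjugate normal-normal update. This is a minimal sketch, not the hierarchical model of Gao et al. (2020): the covariate (`ages`), the estimates (`r_hat`), the individual's own estimate and the helper names are all hypothetical, and the prior standard deviation uses only the residual spread, ignoring uncertainty in the fitted coefficients.

```python
import math

def ols_fit(xs, ys):
    """Least-squares fit of y = a + b*x; returns (a, b, residual sd)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    resid = [y - (a + b * x) for x, y in zip(xs, ys)]
    return a, b, math.sqrt(sum(e * e for e in resid) / (n - 2))

def normal_update(prior_mean, prior_sd, est, est_sd):
    """Conjugate normal-normal update: a precision-weighted average of prior and data."""
    w_prior, w_data = 1.0 / prior_sd ** 2, 1.0 / est_sd ** 2
    mean = (w_prior * prior_mean + w_data * est) / (w_prior + w_data)
    return mean, math.sqrt(1.0 / (w_prior + w_data))

# Hypothetical pooled sample: one covariate (age) and individual estimates of r.
ages = [19, 20, 21, 22, 23, 24, 25, 26]
r_hat = [0.40, 0.45, 0.42, 0.50, 0.52, 0.55, 0.53, 0.60]
a, b, resid_sd = ols_fit(ages, r_hat)

# Prior for one hypothetical 22-year-old, conditioned on that covariate value ...
prior_mean = a + b * 22
# ... combined with that individual's own, very noisy, estimate of r.
post_mean, post_sd = normal_update(prior_mean, resid_sd, est=0.90, est_sd=0.30)
```

Because the individual's own estimate is very noisy relative to the covariate-based prior, the posterior mean stays close to the prior prediction; a more precise individual estimate would pull it further toward 0.90.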

The use of Bayesian hierarchical models to infer individual preferences has a long tradition in marketing: see Rossi and Allenby (1993), McCulloch et al. (1995), Allenby and Ginter (1995), Allenby and Rossi (1999) and Rossi et al. (2005). Random coefficient (or mixed logit) models have been developed for similar applications: see Huber and Train (2001), Train (2009; chapter 11) and Regier et al. (2009) for expositions and comparisons with Bayesian hierarchical methods.

For example, one could estimate a pooled model with covariates and predict the risk preference parameters, with standard errors, for each subject. These predictions at least use the exact values of the covariates for that subject. One could then use the predictions in lieu of estimates from individual subject data for those subjects whose ML estimates fail to converge or violate some ad hoc priors as to what constitutes sensible estimates.

However, we do not follow their approach of classifying certain individuals as having risk preferences consistent with Expected Utility Theory (EUT). The statistical reason, stressed by Monroe (2022), is that those subjects that are characterized as EUT by the test for “no probability weighting” still have standard errors around the probability weighting parameters, and potentially large ones. And, perhaps surprisingly, these standard errors can make a substantive difference in precisely the normative evaluations undertaken here. Hence there is no formal need to differentiate EUT and RDU decision makers for these calculations, because EUT is nested within RDU, even if there is an important normative insight in knowing that there are these different types of risk preferences in the sample.

Appendix A documents the template used for our Bayesian estimation of risk preferences. Appendix B provides details of convergence diagnostics for the core model of Sect. 1.

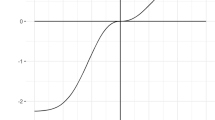

In the extreme case of EUT the risk premium is solely determined by utility curvature. In the extreme case of “dual theory” the risk premium is solely determined by the probability weighting function (Yaari 1987).
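The two extremes can be made concrete with a binary lottery under Rank-Dependent Utility (RDU), assuming CRRA utility x^(1−r)/(1−r) and the Prelec weighting function w(p) = exp(−η(−ln p)^φ). The sketch is illustrative: the lottery (100 or 10 with equal probability), the parameter values and the function names are hypothetical. Setting η = φ = 1 removes probability weighting (EUT), so the risk premium comes only from r; setting r = 0 makes utility linear (dual theory), so it comes only from the weighting function.

```python
import math

def crra(x, r):
    """CRRA utility x^(1-r)/(1-r), with log utility at r = 1."""
    if abs(r - 1.0) < 1e-12:
        return math.log(x)
    return x ** (1.0 - r) / (1.0 - r)

def crra_inverse(u, r):
    """Inverse of crra(., r): the money amount that yields utility u."""
    if abs(r - 1.0) < 1e-12:
        return math.exp(u)
    return (u * (1.0 - r)) ** (1.0 / (1.0 - r))

def prelec(p, eta, phi):
    """Prelec weighting w(p) = exp(-eta * (-ln p)^phi)."""
    if p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    return math.exp(-eta * (-math.log(p)) ** phi)

def risk_premium(high, low, p_high, r, eta, phi):
    """Expected value minus certainty equivalent of a binary lottery under RDU.

    The better outcome gets decision weight w(p_high); the worse outcome
    gets the residual weight 1 - w(p_high)."""
    w = prelec(p_high, eta, phi)
    ce = crra_inverse(w * crra(high, r) + (1.0 - w) * crra(low, r), r)
    ev = p_high * high + (1.0 - p_high) * low
    return ev - ce

# EUT: no probability weighting (eta = phi = 1), concave utility (r = 0.5).
rp_eut = risk_premium(100, 10, 0.5, r=0.5, eta=1.0, phi=1.0)
# Dual theory: linear utility (r = 0), pessimistic weighting (eta = 1.5, phi = 1).
rp_dual = risk_premium(100, 10, 0.5, r=0.0, eta=1.5, phi=1.0)
```

Here `rp_eut` is about 11.7 and `rp_dual` about 13.2, both out of an expected value of 55; with r = 0 and η = φ = 1 the risk premium is zero.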

There are “jackknife” variants that use the N − 1 individuals in the sample other than the individual in question, but for large enough samples this is not likely to make an appreciable quantitative difference.
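The quantitative point follows from a simple identity: if the prior is centered on the sample mean, dropping individual i shifts that mean by (x_i − mean)/(N − 1). The sketch checks this with N = 111 hypothetical estimates, one of them an extreme outlier; the values and the function name are illustrative assumptions.

```python
def full_and_loo_mean(values, i):
    """Sample mean with and without individual i (the "jackknife" variant)."""
    n = len(values)
    total = sum(values)
    return total / n, (total - values[i]) / (n - 1)

# Hypothetical estimates of r for N = 111 subjects; subject 0 is an outlier.
values = [2.0] + [0.4 + 0.002 * k for k in range(110)]
full, loo = full_and_loo_mean(values, 0)

# Identity: full - loo = (values[i] - full) / (N - 1), so the gap shrinks like 1/N.
gap = full - loo
```

Even for the outlier the shift is only about 0.013, and it shrinks in proportion to 1/(N − 1).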

In comparable calculations Harrison and Ross (2018, p. 54) report having to drop 19 of 193 subjects for effectively the same reason.

Specifically, using Power or Inverse-S probability weighting functions. Each is effectively nested in the Prelec probability weighting function. When φ = 1 the Prelec function collapses to the Power function, and when η = 1 it collapses to the Inverse-S function.
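The nesting can be checked numerically, assuming the common Prelec (1998) parameterization w(p) = exp(−η(−ln p)^φ). With φ = 1 the function reduces to the power form p^η, and with η = 1 it becomes the one-parameter Prelec function, which is inverse-S shaped for φ < 1 with a fixed point at p = 1/e. The parameter values and helper names below are hypothetical.

```python
import math

def prelec(p, eta, phi):
    """Two-parameter Prelec (1998) weighting w(p) = exp(-eta * (-ln p)^phi)."""
    if p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    return math.exp(-eta * (-math.log(p)) ** phi)

def power_weight(p, eta):
    """Power weighting w(p) = p^eta: the Prelec function with phi = 1."""
    return p ** eta

def inverse_s_weight(p, phi):
    """One-parameter Prelec weighting: the Prelec function with eta = 1."""
    return prelec(p, 1.0, phi)

probs = [0.05, 0.2, 0.5, 0.8, 0.95]
# phi = 1: Prelec and power weighting agree up to rounding error.
power_gap = max(abs(prelec(p, 0.8, 1.0) - power_weight(p, 0.8)) for p in probs)
# eta = 1, phi < 1: small probabilities overweighted, large ones underweighted.
inverse_s = [(p, inverse_s_weight(p, 0.6)) for p in probs]
```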

We retain 10,000 MCMC draws after a burn-in of 2,500 draws, and we do not use thinning. For convergence criteria we mainly reviewed trace plots and the autocorrelations of the chain at different lags. We generally find excellent mixing, and autocorrelations that become low as the lag increases. In addition, we compared the kernel density of each parameter estimated from all, the first half and the second half of the posterior sample for that parameter, and find consistent distributions.
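The diagnostics described above can be sketched on a toy problem. The example assumes a random-walk Metropolis sampler and a hypothetical normal target standing in for the posterior of r; it keeps 10,000 draws after a burn-in of 2,500 without thinning, computes autocorrelations at several lags, and, as a simpler stand-in for the kernel-density comparison, compares quantiles of the first and second halves of the chain. Nothing here reproduces the Stata estimation used in the paper.

```python
import math
import random

def metropolis(log_post, init, n_burn=2500, n_keep=10000, step=0.1, seed=1):
    """Random-walk Metropolis; discards n_burn draws and keeps every later draw."""
    rng = random.Random(seed)
    x, lp = init, log_post(init)
    kept = []
    for i in range(n_burn + n_keep):
        prop = x + rng.gauss(0.0, step)
        lp_prop = log_post(prop)
        # Accept with probability min(1, exp(lp_prop - lp)).
        if lp_prop >= lp or rng.random() < math.exp(lp_prop - lp):
            x, lp = prop, lp_prop
        if i >= n_burn:
            kept.append(x)  # no thinning
    return kept

def autocorr(xs, lag):
    """Sample autocorrelation of the chain at the given lag."""
    n = len(xs)
    m = sum(xs) / n
    var = sum((x - m) ** 2 for x in xs)
    cov = sum((xs[t] - m) * (xs[t + lag] - m) for t in range(n - lag))
    return cov / var

def quantiles(xs, qs=(0.05, 0.25, 0.5, 0.75, 0.95)):
    s = sorted(xs)
    return [s[int(q * (len(s) - 1))] for q in qs]

def log_post(r):
    """Hypothetical log posterior: normal with mean 0.45 and sd 0.06."""
    return -0.5 * ((r - 0.45) / 0.06) ** 2

draws = metropolis(log_post, init=0.45)
acf = {lag: autocorr(draws, lag) for lag in (1, 5, 10, 50)}
half = len(draws) // 2
first_q, second_q = quantiles(draws[:half]), quantiles(draws[half:])
```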

In Bayesian analysis it is common to report the mean of the posterior distribution, or occasionally the median. The mode is appropriate in this specific case since it is the statistic of the posterior distribution most directly comparable to the classical maximum likelihood estimate: under a flat prior the posterior is proportional to the likelihood, so its mode coincides with the ML estimate.
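To see why the mode is the natural comparison, consider a hypothetical case with a flat Beta(1, 1) prior and 1 “success” in 10 binary choices: the posterior is Beta(2, 10), whose mode 0.1 equals the MLE while its mean is 2/12 ≈ 0.167. The sketch recovers the mode from MCMC-style draws with a Gaussian kernel density estimate and a rule-of-thumb bandwidth; the data, the bandwidth rule and the function name `kde_mode` are illustrative assumptions, not the procedure used in the paper.

```python
import math
import random

def kde_mode(draws, lo, hi, n_grid=301):
    """Mode of a Gaussian kernel density estimate, found by a grid search."""
    n = len(draws)
    mean = sum(draws) / n
    sd = math.sqrt(sum((d - mean) ** 2 for d in draws) / (n - 1))
    h = 1.06 * sd * n ** (-0.2)  # rule-of-thumb bandwidth
    best_x, best_f = lo, -1.0
    for k in range(n_grid):
        x = lo + (hi - lo) * k / (n_grid - 1)
        f = sum(math.exp(-0.5 * ((x - d) / h) ** 2) for d in draws)  # unnormalized
        if f > best_f:
            best_x, best_f = x, f
    return best_x

# Hypothetical: 1 success in 10 trials, flat prior, so the posterior is Beta(2, 10).
rng = random.Random(3)
draws = [rng.betavariate(2, 10) for _ in range(4000)]
mle = 1 / 10
post_mode = kde_mode(draws, 0.0, 0.6)
post_median = sorted(draws)[len(draws) // 2]
post_mean = sum(draws) / len(draws)
```

For this right-skewed posterior the ordering is mode < median < mean, so only the mode lines up with the ML estimate.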

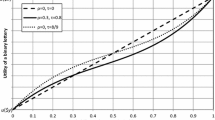

The ML confidence intervals for r, η and φ, respectively, are (0.49, 0.78), (0.63, 1.00) and (1.14, 1.31). The 95% Bayesian highest posterior density intervals for these parameters are (0.31, 0.53), (0.83, 1.05) and (1.08, 1.31). The Bayesian posterior overlaps with 10.3%, 90.0% and 80.4% of the corresponding ML confidence interval for these individual parameters. For η and φ jointly, the Bayesian posterior overlaps with 72.9% of the corresponding ML confidence intervals.
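The note does not define the overlap measure. One reading that fits the reported numbers is the share of posterior draws that fall inside the ML confidence interval: a normal approximation matched to the reported HPD interval for r puts about 0.11 of the posterior mass inside (0.49, 0.78), close to 10.3%, whereas the simple overlap of the two intervals is (0.53 − 0.49)/(0.78 − 0.49) ≈ 0.14. The same approximation gives about 0.80 for φ, close to the reported 80.4%, while the ML interval for φ lies entirely inside its HPD interval. This remains an assumption; the sketch computes an HPD interval from draws along with both candidate measures, using hypothetical normal draws.

```python
import math
import random

def hpd_interval(draws, mass=0.95):
    """Shortest interval containing `mass` of the draws (suited to unimodal posteriors)."""
    s = sorted(draws)
    n = len(s)
    k = int(math.ceil(mass * n))  # number of draws the interval must contain
    start = min(range(n - k + 1), key=lambda i: s[i + k - 1] - s[i])
    return s[start], s[start + k - 1]

def share_inside(draws, lo, hi):
    """Share of posterior draws that fall inside [lo, hi]."""
    return sum(lo <= d <= hi for d in draws) / len(draws)

def interval_overlap(a, b):
    """Length of the intersection of intervals a and b, as a share of the length of b."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0])) / (b[1] - b[0])

# Hypothetical posterior for r: normal, matched to the reported HPD (0.31, 0.53).
rng = random.Random(2)
draws = [rng.gauss(0.42, 0.056) for _ in range(20000)]
ml_ci = (0.49, 0.78)
hpd = hpd_interval(draws)
mass_in_ml_ci = share_inside(draws, *ml_ci)
raw_overlap = interval_overlap((0.31, 0.53), ml_ci)
```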

Again, this is an average of the 111 modes reflected in these histograms.

Imbens (2021) provides an exposition of the general value in economics of using Bayesian approaches to assess the “statistical significance” of inferences.

In fact, the distributions of the 80 percentages in panels B and C overstate the similarity of ML and Bayesian estimates: for those subjects that are well characterized under EUT, the estimates of η and φ should be roughly the same.

Virtually identical distributions are generated if we restrict to the 80 individuals with both ML and Bayesian estimates, but one point of the exercise is not to do that.

The same type of comparison does not apply for the Efficiency measures, since these are categorical (0% and 100%) posterior distributions at each observed choice, and real-valued for the ML estimates. The evidence for CS is sufficient to make the general case for significantly different welfare evaluations with the Bayesian approach.

In this case it is appropriate to limit the sample to those that have both ML and Bayesian estimates.

For example, Harrison and Ng (2016, p. 99; 2018, pp. 49–51) discuss in detail why different types of lottery questions are included in their full battery for different types of normative inferences. In the latter case, focused on compound risks from non-performance of insurance contracts (e.g., due to fraud or bankruptcy), it was critical to estimate risk preferences that included compound lotteries.

Apart from the obvious need to ask questions about insurance purchases, in field settings we are also interested in eliciting time preferences, subjective beliefs, intertemporal risk aversion, and possibly even social preferences.

Earlier we used the posterior mode because it is more comparable to ML estimates. Here, however, we have shifted our focus away from ML and aim to compare Bayesian estimates across different sample sizes, so we use the more common posterior mean. Alternatively, one could use the median of the posterior distributions.

We also always report the Kendall rank correlation τ. The rank correlation is robust to the effect of outliers, but of course uses less information. In general the results for ρ and τ are qualitatively consistent, with τ < ρ in all cases.
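The robustness claim is easy to see with a single outlier. The sketch assumes ρ is the Pearson product-moment correlation and implements Kendall's τ-a, which assumes no ties (tie-corrected variants such as τ-b differ); the data are hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson product-moment correlation."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def kendall_tau_a(xs, ys):
    """Kendall's tau-a: (concordant - discordant pairs) / total pairs; assumes no ties."""
    n = len(xs)
    s = 0
    for i in range(n):
        for j in range(i + 1, n):
            prod = (xs[i] - xs[j]) * (ys[i] - ys[j])
            s += (prod > 0) - (prod < 0)
    return s / (n * (n - 1) / 2)

xs = list(range(1, 11))
ys = xs[:-1] + [100]  # monotone in x, but the last value is an extreme outlier
rho = pearson(xs, ys)
tau = kendall_tau_a(xs, ys)
```

Here the relationship is perfectly monotone, so τ = 1, while the one extreme value pulls ρ down to about 0.59.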

The corresponding rank correlations are 0.87, 0.86, 0.74 and 0.80, respectively.

An alternative approach is to exploit the ability to sequentially update based on data solely obtained from one individual, by explicitly designing the “next” experimental task in a Bayesian (or classical) manner. Examples applied to eliciting risk preferences include Cavagnaro et al. (2013a, 2013b), Chapman et al. (2018), Ray et al. (2019) and Toubia et al. (2013). These methods place some “real-time” computational burden on the software generating the experimental interface, but these burdens are becoming minor as hardware and software improve. And one could certainly link these to Bayesian Hierarchical Models, providing informative priors for the individual prior to any dynamic optimization based on accumulating choices by the individual.

In principle one could also identify which of the full battery of questions are most informative to ask, which to a Bayesian is just a “pre-posterior” analysis. Lindley (1972; p. 20ff.) provided the first general, formal statement of Bayesian experimental design, and Chaloner and Verdinelli (1995) a valuable literature review. Gelman et al. (2013; ch. 8) review complementary literature on how various experimental designs affect Bayesian analyses.
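Both ideas, choosing the “next” task adaptively and ranking questions before asking them, rest on the same pre-posterior calculation: the expected information a question carries about the parameter. The sketch assumes a grid over a CRRA coefficient r under EUT, a logit choice rule on certainty-equivalent differences with a hypothetical noise parameter, and a hypothetical battery of sure-amount-versus-lottery questions. It selects each question by maximizing the mutual information between the choice and r, then updates the grid posterior by Bayes' rule. It is not the procedure of any of the papers cited above; the `prior` argument is where an informative prior, for example from a hierarchical model of the population, could enter.

```python
import math
import random

R_GRID = [0.05 * i for i in range(19)]  # hypothetical grid for CRRA r: 0.00 .. 0.90
NOISE = 3.0  # hypothetical logit noise on certainty-equivalent differences
# Hypothetical battery: a sure amount versus a 50/50 lottery over 100 and 5.
DESIGNS = [(float(s), 100.0, 5.0, 0.5) for s in range(10, 55, 5)]

def ce_lottery(high, low, p, r):
    """EUT certainty equivalent of a binary lottery under CRRA utility (r < 1 here)."""
    k = 1.0 - r
    return (p * high ** k + (1.0 - p) * low ** k) ** (1.0 / k)

def p_lottery(design, r):
    """Probability of choosing the lottery over the sure amount (logit on the CE gap)."""
    sure, high, low, p = design
    return 1.0 / (1.0 + math.exp(-(ce_lottery(high, low, p, r) - sure) / NOISE))

def entropy(q):
    if q <= 0.0 or q >= 1.0:
        return 0.0
    return -(q * math.log(q) + (1.0 - q) * math.log(1.0 - q))

def info_gain(posterior, design):
    """Pre-posterior value of a question: mutual information between the choice and r."""
    probs = [p_lottery(design, r) for r in R_GRID]
    predictive = sum(w * q for w, q in zip(posterior, probs))
    expected_entropy = sum(w * entropy(q) for w, q in zip(posterior, probs))
    return entropy(predictive) - expected_entropy

def update(posterior, design, chose_lottery):
    """Bayes' rule on the grid after observing one choice."""
    probs = [p_lottery(design, r) for r in R_GRID]
    unnorm = [w * (q if chose_lottery else 1.0 - q) for w, q in zip(posterior, probs)]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def run_adaptive(true_r, prior=None, n_questions=30, seed=7):
    """Simulate a subject with CRRA coefficient true_r answering adaptively chosen questions."""
    rng = random.Random(seed)
    posterior = list(prior) if prior else [1.0 / len(R_GRID)] * len(R_GRID)
    for _ in range(n_questions):
        design = max(DESIGNS, key=lambda d: info_gain(posterior, d))
        chose = rng.random() < p_lottery(design, true_r)
        posterior = update(posterior, design, chose)
    return posterior

posterior = run_adaptive(true_r=0.6)
post_mean_r = sum(w * r for w, r in zip(posterior, R_GRID))
```

Sorting `DESIGNS` by `info_gain` under the prior, without any updating, gives the static pre-posterior ranking of the battery.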

As noted earlier, Harrison and Ng (2016, pp. 110–111) show how one can bootstrap the welfare calculations to reflect the covariance matrix of the ML estimates for each individual. So the ML approach also allows one to calculate distributions of welfare, although with a very different interpretation.
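A parametric bootstrap of this kind can be sketched as follows, under hypothetical assumptions throughout: an RDU model with CRRA utility and power weighting w(p) = p^η, a single full-indemnity insurance contract, and consumer surplus measured, for illustration, as the certainty equivalent of insuring minus that of not insuring. Parameter vectors are drawn from a normal distribution with the ML point estimates and covariance matrix, and welfare is computed for each draw. The resulting spread reflects sampling variability of the estimator, not posterior beliefs about the individual.

```python
import math
import random

# Hypothetical full-indemnity contract: wealth 20, loss 15 with probability 0.1, premium 2.
WEALTH, LOSS, P_LOSS, PREMIUM = 20.0, 15.0, 0.1, 2.0

def consumer_surplus(r, eta):
    """CE(insured) - CE(uninsured) under RDU with CRRA utility and w(p) = p**eta."""
    good, bad = WEALTH, WEALTH - LOSS
    w_good = (1.0 - P_LOSS) ** eta  # decision weight on the better (no-loss) outcome
    k = 1.0 - r
    if abs(k) < 1e-9:  # log-utility limit
        ce_uninsured = math.exp(w_good * math.log(good) + (1.0 - w_good) * math.log(bad))
    else:
        ce_uninsured = (w_good * good ** k + (1.0 - w_good) * bad ** k) ** (1.0 / k)
    ce_insured = WEALTH - PREMIUM  # full indemnity leaves a certain outcome
    return ce_insured - ce_uninsured

def bootstrap_cs(mean, cov, n_draws=5000, seed=11):
    """Draw (r, eta) from N(mean, cov) via a 2x2 Cholesky factor; return CS per draw."""
    l11 = math.sqrt(cov[0][0])
    l21 = cov[1][0] / l11
    l22 = math.sqrt(cov[1][1] - l21 ** 2)
    rng = random.Random(seed)
    draws = []
    while len(draws) < n_draws:
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        r = mean[0] + l11 * z1
        eta = mean[1] + l21 * z1 + l22 * z2
        if eta > 0.0:  # power weighting requires eta > 0 (discards rare invalid draws)
            draws.append(consumer_surplus(r, eta))
    return draws

# Hypothetical ML point estimates and covariance matrix for (r, eta).
cs = sorted(bootstrap_cs(mean=(0.6, 1.1), cov=[[0.01, 0.002], [0.002, 0.0064]]))
share_positive = sum(c > 0 for c in cs) / len(cs)
interval_95 = (cs[int(0.025 * len(cs))], cs[int(0.975 * len(cs)) - 1])
```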

Perhaps a simpler and more familiar way to think of a posterior predictive distribution is to imagine that the subject was faced with a new battery of risk lotteries, and that we use the observed behavior from the old battery to infer what choices would be made for the new battery. The posterior estimates of r, η and φ from the old choices characterize the data-generating process, which is then used to infer the distribution of expected choices for the new battery. In our case we substitute insurance choices for a new risk lottery battery, but the statistical principles are the same.
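The same idea can be sketched directly for insurance choices. Assuming EUT with CRRA utility, a logit choice rule on the certainty-equivalent gap with a hypothetical noise parameter, and hypothetical normal draws standing in for MCMC output on r, the posterior predictive probability of buying is the average of the per-draw purchase probabilities, and simulating one choice per draw gives draws from the posterior predictive distribution.

```python
import math
import random

# Hypothetical insurance choice: wealth 20, loss 15 with probability 0.1, premium 2.
WEALTH, LOSS, P_LOSS, PREMIUM = 20.0, 15.0, 0.1, 2.0
NOISE = 0.5  # hypothetical logit noise on the CE gap

def ce_uninsured(r):
    """EUT certainty equivalent of remaining uninsured, CRRA utility."""
    k = 1.0 - r
    if abs(k) < 1e-9:  # log-utility limit
        return math.exp((1 - P_LOSS) * math.log(WEALTH) + P_LOSS * math.log(WEALTH - LOSS))
    return ((1 - P_LOSS) * WEALTH ** k + P_LOSS * (WEALTH - LOSS) ** k) ** (1.0 / k)

def prob_buy(r):
    """Probability of buying insurance given r (logit on the CE gap)."""
    gap = (WEALTH - PREMIUM) - ce_uninsured(r)
    return 1.0 / (1.0 + math.exp(-gap / NOISE))

def posterior_predictive(r_draws, seed=5):
    """Average purchase probability over draws, and the share of one simulated choice per draw."""
    rng = random.Random(seed)
    probs = [prob_buy(r) for r in r_draws]
    simulated = [rng.random() < q for q in probs]
    return sum(probs) / len(probs), sum(simulated) / len(simulated)

# Stand-ins for MCMC output: hypothetical posterior draws of r for two subjects.
rng = random.Random(1)
draws_a = [rng.gauss(0.3, 0.04) for _ in range(5000)]
draws_b = [rng.gauss(0.8, 0.04) for _ in range(5000)]
pred_a, sim_a = posterior_predictive(draws_a)
pred_b, sim_b = posterior_predictive(draws_b)
```

The more risk-averse posterior implies a higher predicted purchase probability, since a larger r lowers the certainty equivalent of remaining uninsured.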

A more sophisticated “targeting” policy might use the information from the first 12 insurance choices to adaptively determine the actuarial parameters that might lead each subject to make better decisions in the remaining 12 decisions.

See Teele (2014) and Glennerster (2017) for discussion of the Belmont Report and the ethics of conducting randomized behavioral interventions in economics. Even when randomized clinical trials were not adaptive, or even sequential in terms of stopping rules, it has long been common to employ termination rules based on extreme, cumulative results (e.g., the “3 standard deviations” rule noted by Peto (1985, p. 33)).
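A termination rule of this kind is simple to state in code. The sketch assumes that the trial compares success proportions in two arms, that a two-proportion z-statistic is recomputed on the cumulative data at each interim look, and that the trial stops at the first look where |z| reaches 3; the interim data and function names are hypothetical.

```python
import math

def two_prop_z(s1, n1, s2, n2):
    """z-statistic for a difference in success proportions, using the pooled proportion."""
    p1, p2 = s1 / n1, s2 / n2
    pooled = (s1 + s2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se

def first_stop(looks, threshold=3.0):
    """Return the (1-based) first interim look with |z| >= threshold, or None."""
    for k, (s1, n1, s2, n2) in enumerate(looks, start=1):
        if abs(two_prop_z(s1, n1, s2, n2)) >= threshold:
            return k
    return None

# Hypothetical cumulative data: (successes, n) in the treatment and control arms.
looks = [(12, 20, 10, 20), (30, 40, 18, 40), (48, 60, 24, 60)]
stop_at = first_stop(looks)
```

With these data z is about 0.64, 2.74 and 4.47 at the three looks, so the rule stops at the third look.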

This point has nothing to do with whether the subject exhibits “present bias” in any form. All that is needed is simple impatience, even with Exponential discounting. Andersen et al. (2008) consider the joint estimation of risk and time preferences. Berry and Fristedt (1985; chapter 3) stress the importance of time discounting in sequential “bandit” problems in medical settings.

The intertemporal risk aversion of a subject bears no necessary relationship to atemporal risk aversion. Andersen et al. (2018) consider the joint estimation of atemporal risk preferences, time preferences, and intertemporal risk preferences.

Commenting on the famous Extracorporeal Membrane Oxygenation (ECMO) adaptive randomization study for babies documented by Ware (1989), Royall (1989) and Berry (1989, p. 306) reject the claim that prior, well-known evidence from a randomized evaluation documented by Bartlett et al. (1985) supported such a perfectly diffuse prior. Kass and Greenhouse (1989, p. 313) raise similar concerns, but in the end explicitly, and reluctantly, assume that the study was “appropriately designed” to start with a diffuse prior. Royall (1989, p. 318) calculates the posterior probability that the ECMO treatment was inferior to be either 0.01 or 0.00003 based on previous data. Berry (1989, p. 310) sharply concludes that “clinical equipoise is an invention used to avoid difficult ethical questions.” In the context of economics experiments, that equipoise corresponds to claims that “anything could happen,” as distinct from “here is what I believe would happen.” Freedman (1987) first proposed the notion of clinical equipoise, controversially defining it in terms of priors that are presumed to be held in the broader research field, not the priors of the immediate investigators. Harrison (2021) provides a more extensive review of these issues from a Bayesian perspective, with implications for experimental design in economics.

References

Allenby, G. M., & Ginter, J. L. (1995). Using extremes to design products and segment markets. Journal of Marketing Research, 32, 392–403.

Allenby, G. M., & Rossi, P. E. (1999). Marketing models of consumer heterogeneity. Journal of Econometrics, 89, 57–78.

Andersen, S., Harrison, G. W., Lau, M. I., & Rutström, E. E. (2008). Eliciting risk and time preferences. Econometrica, 76(3), 583–618.

Andersen, S., Harrison, G. W., Lau, M. I., & Rutström, E. E. (2018). Multiattribute utility theory, intertemporal utility, and correlation aversion. International Economic Review, 59(2), 537–555.

Armitage, P. (1985). The search for optimality in clinical trials. International Statistical Review, 53(1), 15–24.

Bartlett, R. H., Roloff, D. W., Cornell, R. G., Andrews, A. F., Dillon, P. W., & Zwischenberger, J. B. (1985). Extracorporeal circulation in neonatal respiratory failure: A prospective randomized study. Pediatrics, 76(4), 479–487.

Berry, D. A. (1989). Comment: Ethics and ECMO. Statistical Science, 4(4), 306–310.

Berry, D. A., & Fristedt, B. (Eds.). (1985). Bandit problems: Sequential allocation of experiments. Springer.

Caria, S., Gordon, G., Kasy, M., Quinn, S., Shami, S., & Teytelboym, A. (2021). An adaptive targeted field experiment: Job search assistance for refugees in Jordan. Draft Working Paper. Oxford University, July 2021; available at https://maxkasy.github.io/papers/.

Cavagnaro, D. R., Pitt, M. A., Gonzalez, R., & Myung, J. I. (2013a). Optimal decision stimuli for risky choice experiments: An adaptive approach. Management Science, 59(2), 358–375.

Cavagnaro, D. R., Pitt, M. A., Gonzalez, R., & Myung, J. I. (2013b). Discriminating among probability weighting functions using adaptive design optimization. Journal of Risk and Uncertainty, 47(3), 255–289.

Chaloner, K., & Verdinelli, I. (1995). Bayesian experimental design: A review. Statistical Science, 10(3), 273–304.

Chapman, J., Snowberg, E., Wang, S., & Camerer, C. (2018). Loss attitudes in the U.S. population: Evidence from dynamically optimized sequential experimentation (DOSE). NBER Working Paper 25072, September 2018.

Freedman, B. (1987). Equipoise and the ethics of clinical research. New England Journal of Medicine, 317(3), 141–145.

Gao, X. S., Harrison, G. W., & Tchernis, R. (2020). Estimating risk preferences for individuals: A Bayesian analysis. CEAR Working Paper 2020–15, Center for the Economic Analysis of Risk, Robinson College of Business, Georgia State University, 2020.

Gelman, A., Carlin, J. B., Stern, H. S., Dunson, D. B., Vehtari, A., & Rubin, D. B. (2013). Bayesian data analysis (3rd ed.). CRC Press.

Glennerster, R. (2017). The practicalities of running randomized evaluations: Partnerships, measurement, ethics, and transparency. In A. Banerjee & E. Duflo (Eds.), Handbook of field experiments: Volume one. Elsevier.

Hadad, V., Hirshberg, D. A., Zhan, R., Wager, S., & Athey, S. (2021). Confidence intervals for policy evaluation in adaptive experiments. Proceedings of the National Academy of Sciences, 118(15), e2014602118.

Harrison, G. W. (1990). Risk attitudes in first-price auction experiments: A Bayesian analysis. Review of Economics & Statistics, 72, 541–546.

Harrison, G. W. (2011). Experimental methods and the welfare evaluation of policy lotteries. European Review of Agricultural Economics, 38(3), 335–360.

Harrison, G. W. (2019). The behavioral welfare economics of insurance. Geneva Risk & Insurance Review, 44(2), 137–175.

Harrison, G. W. (2021). Experimental design and Bayesian interpretation. In H. Kincaid & D. Ross (Eds.), Modern guide to the philosophy of economics. Elgar.

Harrison, G. W., & Ng, J. M. (2016). Evaluating the expected welfare gain from insurance. Journal of Risk and Insurance, 83(1), 91–120.

Harrison, G. W., & Ng, J. M. (2018). Welfare effects of insurance contract non-performance. Geneva Risk & Insurance Review, 43(1), 39–76.

Harrison, G. W., & Ross, D. (2018). Varieties of paternalism and the heterogeneity of utility structures. Journal of Economic Methodology, 25(1), 42–67.

Harrison, G. W., & Rutström, E. E. (2008). Risk aversion in the laboratory. In J. C. Cox & G. W. Harrison (Eds.), Risk aversion in experiments. (Vol. 12). Emerald, Research in Experimental Economics.

Harrison, G. W., & Rutström, E. E. (2009). Expected utility and prospect theory: One wedding and a decent funeral. Experimental Economics, 12(2), 133–158.

Huber, J., & Train, K. (2001). On the similarity of classical and Bayesian estimates of individual mean partworths. Marketing Letters, 12(3), 259–269.

Imbens, G. W. (2021). Statistical significance, p-values, and the reporting of uncertainty. Journal of Economic Perspectives, 35(3), 157–174.

Kass, R. E., & Greenhouse, J. B. (1989). Comment: A Bayesian perspective. Statistical Science, 4(4), 310–317.

Kasy, M., & Sautmann, A. (2021). Adaptive treatment assignment in experiments for policy choice. Econometrica, 89(1), 113–132.

Kitagawa, T., & Tetenov, A. (2018). Who should be treated? Empirical welfare maximization methods for treatment choice. Econometrica, 86(2), 591–616.

Kruschke, J. K. (2015). Doing Bayesian data analysis: A tutorial with R, JAGS, and Stan (2nd ed.). Academic Press.

Kruschke, J. K., & Liddell, T. M. (2018). The Bayesian new statistics: Hypothesis testing, estimation, meta-analysis, and power analysis from a Bayesian perspective. Psychonomic Bulletin & Review, 25, 178–206.

Kruschke, J. K., & Vanpaemel, W. (2015). Bayesian estimation in hierarchical models. In J. R. Busemeyer, J. T. Townsend, Z. J. Wang, & A. Eidels (Eds.), Oxford handbook of computational and mathematical psychology. Oxford University Press.

Leamer, E. E. (1978). Specification searches: Ad hoc inference with nonexperimental data. Wiley.

Lindley, D. V. (1972). Bayesian statistics: A review. Society for Industrial and Applied Mathematics.

McCulloch, R., Rossi, P. E., & Allenby, G. M. (1995). Hierarchical modeling of consumer heterogeneity: An application to targeting. In C. Gatsonis, J. S. Hodges, R. E. Kass, & N. D. Singpurwalla (Eds.), Case studies in Bayesian statistics, volume II. (Vol. 105). Springer, Lecture Notes in Statistics.

Monroe, B. (2022). The welfare consequences of individual-level risk preference estimation. In G. W. Harrison & D. Ross (Eds.), Models of risk preferences: Descriptive and normative challenges. Emerald, Research in Experimental Economics.

Nilsson, H., Rieskamp, J., & Wagenmakers, E.-J. (2011). Hierarchical Bayesian parameter estimation for cumulative prospect theory. Journal of Mathematical Psychology, 55, 84–93.

Peto, R. (1985). Discussion of papers by J.A. Bather and P. Armitage. International Statistical Review, 53(1), 31–34.

Prelec, D. (1998). The probability weighting function. Econometrica, 66, 497–527.

Quiggin, J. (1982). A theory of anticipated utility. Journal of Economic Behavior & Organization, 3(4), 323–343.

Ray, D., Golovin, D., Krause, A., & Camerer, C. (2019). Bayesian rapid optimal adaptive design (BROAD): Method and application distinguishing models of risky choice. OSF Preprints. https://doi.org/10.31219/osf.io/utvbz

Regier, D. A., Ryan, M., Phimister, E., & Marra, C. A. (2009). Bayesian and classical estimation of mixed logit: an application to genetic testing. Journal of Health Economics, 28(3), 598–610.

Rossi, P. E., & Allenby, G. M. (1993). A Bayesian approach to estimating household parameters. Journal of Marketing Research, 30, 171–182.

Rossi, P. E., Allenby, G. M., & McCulloch, R. (2005). Bayesian statistics and marketing. Wiley.

Royall, R. (1989). Comment. Statistical Science, 4(4), 318–319.

Teele, D. L. (2014). Reflections on the ethics of field experiments. In D. Teele (Ed.), Field experiments and their critics: Essays on the uses and abuses of experimentation in the social sciences. Yale University Press.

Toubia, O., Johnson, E., Evgeniou, T., & Delquié, P. (2013). Dynamic experiments for estimating preferences: An adaptive method of eliciting time and risk parameters. Management Science, 59(3), 613–640.

Train, K. (2009). Discrete choice methods with simulation (2nd ed.). Cambridge University Press.

Ware, J. H. (1989). Investigating therapies of potentially great benefit: ECMO. Statistical Science, 4(4), 298–306.

Yaari, M. E. (1987). The dual theory of choice under risk. Econometrica, 55(1), 95–115.

Yusuf, S., Peto, R., Lewis, J., Collins, R., & Sleight, P. (1985). Beta blockade during and after myocardial infarction: An overview of the randomized trials. Progress in Cardiovascular Diseases, 28(5), 335–371.

Acknowledgements

We are grateful to Andre Hofmeyr, two referees and the editor for comments. All data and code to replicate our analyses in Stata are at http://www.cear.gsu.edu/gwh/ or https://doi.org/10.17605/OSF.IO/9FSAE.


About this article

Cite this article

Gao, X.S., Harrison, G.W. & Tchernis, R. Behavioral welfare economics and risk preferences: a Bayesian approach. Exp Econ 26, 273–303 (2023). https://doi.org/10.1007/s10683-022-09751-0


DOI: https://doi.org/10.1007/s10683-022-09751-0