Abstract

Comparing early- and late-onset blindness in individuals offers a unique model for studying the influence of visual experience on neural processing. This study investigated how prior visual experience modulates auditory spatial processing among blind individuals. BOLD responses of early- and late-onset blind participants were captured while they performed a sound localization task. The task required participants to listen to novel “Bat-ears” sounds, analyze the spatial information embedded in the sounds, and specify which of 15 locations the sound would have been emitted from. In addition to sound localization, participants were assessed on visuospatial working memory and general intellectual abilities. The results revealed common increases in BOLD responses in the middle occipital gyrus, superior frontal gyrus, precuneus, and precentral gyrus during sound localization for both groups. Between-group dissociations, however, were found in the right middle occipital gyrus and left superior frontal gyrus. The BOLD responses in the left superior frontal gyrus were significantly correlated with accuracy on sound localization and visuospatial working memory abilities among the late-onset blind participants. In contrast, the accuracy on sound localization only correlated with BOLD responses in the right middle occipital gyrus among their early-onset counterparts. The findings support the notion that early-onset blind individuals rely more on the occipital areas as a result of cross-modal plasticity for auditory spatial processing, while late-onset blind individuals rely more on the prefrontal areas which subserve visuospatial working memory.

Introduction

Information received by sensory systems needs to be processed and integrated before it can be meaningfully utilized by individuals (Beer et al. 2011). Processing of sensory information can be modulated by an individual’s experience in life. For instance, the lack of visual input among congenitally blind individuals has been revealed to alter their processing of spatial information resulting in under-development of spatial knowledge (Emier 2004; Rieser et al. 1992). The present study explored the mechanisms behind prior visual experience modulating auditory spatial processing. The neural processes associated with sound localization were compared between individuals with early- and late-onset blindness. The findings can shed light on the role of visuospatial function in auditory spatial processing and cross-modal plasticity involving the visual system.

Previous studies investigating functional differences between early- and late-onset blind individuals include pitch change discrimination (Kujala et al. 1997), tactile discrimination (Sadato et al. 2002), Braille reading (Büchel et al. 1998; Cohen et al. 1999), and auditory spatial processing (Collignon et al. 2013; Voss et al. 2008, 2011). A common theme revealed across these studies is the differentiation of involvement of the occipital areas between the two groups. Individuals who were early-onset blind were consistently found to recruit more occipital areas, particularly the primary visual cortex (V1), than their late-onset blind counterparts in non-visual tasks (e.g., Burton et al. 2002a, b). Another between-group difference was the cross-modal connectivity with the occipital cortex. Functional connectivity studies reported that congenitally and early-onset blind individuals appeared to rely on a direct feed-forward cortico-cortical connection, whereas late-onset blind individuals relied on a feed-back cortico-cortical connection for mediating non-visual processing (Collignon et al. 2013; Wittenberg et al. 2004).

Auditory spatial processing is crucial in the everyday lives of individuals with blindness, for example, when navigating within a space and orienting oneself to a person in conversation. Voss et al. (2008) revealed that the early-blind group performed better than the late-blind group in discriminating monaural sounds. Increases in BOLD responses in the middle frontal gyrus and right parietal cortex were found to be associated with the discrimination process in both groups. However, the superior discrimination ability of the early-blind group was found to correlate with increased cerebral blood flow in the left dorsal extrastriate cortex, which included the middle occipital gyrus. In a recent study, Collignon et al. (2013) investigated the role of experience in shaping the functional organization of the occipital cortex during processing of pitch or spatial attributes of sounds. The main difference in auditory spatial processing between congenitally blind and late-onset blind participants was that the former showed increased BOLD responses in the right dorsal stream, which included the middle occipital gyrus and cuneus. Collignon et al. (2013) proposed that the functionality of the dorsal stream among early-blind individuals was for spatial computations of inputs from a non-visual system. More importantly, this cross-modal functional specialization was likely to develop only early in life.

The notion of prior visual experience modulating auditory spatial processing is interesting in two ways. First, prior visual experience would interfere with ways in which auditory spatial information is processed among individuals with blindness. Among individuals with normal vision, visual experience provides basic pictorial information for spatial processing (Emier 2004; Mark 1993). The inferior parietal lobule has been found to mediate such process (Macaluso and Driver 2005). Auditory spatial processing inevitably would couple with visuospatial working memory, particularly when the information itself or the processes involved become complex (Arnott and Alain 2011; Lehnert and Zimmer 2006, 2008; Martinkauppi et al. 2000). Studies revealed that the parieto-frontal network was associated with auditory spatial processing among late-onset blind individuals, suggesting possible involvement of visuospatial working memory in these processes (Courtney et al. 1996; Ricciardi et al. 2006). The key neural substrates of this network are in the dorsolateral regions of the prefrontal cortex, particularly the middle frontal gyrus and superior frontal gyrus. In contrast, early-onset blind individuals would be less inclined to involve visuospatial function, which is under-developed (Cornoldi and Vecchi 2000; Thinus-Blanc and Gaunet 1997; Vecchi et al. 1995), in auditory spatial processing. Second, without prior visual experience, early-onset blind individuals are likely to rely on non-visual systems for mediating auditory spatial processing. Previous studies have revealed an extensive occipito-parietal network in congenitally and early-onset blind individuals while processing auditory spatial information (e.g., Collignon et al. 2011; Renier et al. 2010; Weeks et al. 2000). Chan et al. (2012) reported a parieto-frontal network mediating auditory spatial processing in a distance judgment task among congenitally blind individuals.

This study aimed to understand how prior visual experience would modulate auditory spatial processing among blind individuals. The effect of prior visual experience was tested by comparing two groups of blind individuals (early- vs. late-onset blindness) with different levels of visual experience (in years). Unlike previous studies, this study used a sound localization paradigm that incorporates depth (distance), and in which the location can only be resolved by using subtle spectral cues embedded in the auditory stimuli. The auditory stimuli were simple da–da–da sounds recorded from the electronic “Bat-ears.” “Bat-ears” is a non-invasive, ultrasonic emission device developed for assisting navigation of individuals with blindness. The ultrasound pulses reflected from obstacles placed in different locations are collected, amplified, demodulated, and put out as audible signals through earphones. In other words, the auditory stimuli were recordings of previously played sounds containing the echo cues created by obstacles at different spatial locations rather than sounds emitted from spatially distinct areas. In this way, the audible sounds contained spatial information with pitch and intensity indicating azimuth and distance (Blumsack and Ross 2007). The experimental task required the participants to localize each sound stimulus on a 15-location fan-shaped space (5 azimuths × 3 distances), which ensured that an auditory spatial localization process was engaged. The findings obtained can shed light on the neural processes associated with auditory spatial localization, as well as help validate the usefulness of the “Bat-ears” as a navigation device for people with blindness. We hypothesized that, given their prior visual experience, the late-onset blind individuals would engage visuospatial processes during sound localization, reflected in increased BOLD responses in the neural substrates mediating such processes. 
In contrast, sound localization of early-onset blind individuals would rely on cross-modal plasticity involving the occipital cortex. To further address the possibility of involving visuospatial function in auditory spatial processing, tests of visuospatial working memory and general intellectual abilities were administered to the participants, which formed the behavioral correlates of the study.

Materials and Methods

Participants

The early-onset blind group was composed of 15 participants (mean age: 28.9 years; from 20 to 38 years) who lost vision at birth (n = 11), or before 1 year of age (n = 3), or at 1 year old (n = 1) (Table 1). The late-onset blind group was composed of 17 participants (mean age: 32.4 years; from 20 to 49 years) who lost vision later in life with a mean onset age of 20.4 years and a mean duration of blindness of 12.0 years. At the time of this study, all participants reported no light perception and normal hearing abilities. Hearing abilities were assessed with a pitch discrimination test (Collignon et al. 2007) that employed stimuli resembling those presented in the sound localization task. Participants had to attain an accuracy rate higher than 60 % to satisfy the inclusion criteria. All participants scored within the normal range on the WAIS-RC (Gong 1982; Wechsler 1955). Before the fMRI acquisition, all participants underwent 30 min of familiarization with the sound-to-azimuth and sound-to-distance relationships using headphones and a joystick. The familiarization covered six da–da–da sounds (3 azimuths: −30°, 0°, +30° and 2 distances: 1 and 4 m). The study was approved by the Human Ethics Committee of The Hong Kong Polytechnic University and Beijing Normal University. All participants gave their consent by stamping their fingerprint on the consent form. They were remunerated RMB760 (equivalent to US$120) to compensate for travel expenses and time loss.

Behavioral Test—Matrix Test

The adapted matrix test (Cornoldi et al. 1991) was administered to assess the participants’ visuospatial working memory. There were two haptic subtests: one 2D matrix (3 × 3 squares) comprised of nine wooden cubes (2 cm per side) and one 3D matrix (2 × 2 × 2 squares) comprised of eight wooden cubes (2 cm per side). Each participant was to mentally maneuver a designated target on the surface of the matrix according to verbal scripts. In each trial, the starting position of the target was presented to the participant by means of a sandpaper pad attached to the surface of a designated square on the matrix. The participant was to tactually recognize and memorize the location of the target. The participant then heard instructions for relocating the target, such as forward–backward and right–left for the 2D matrix, or forward–backward, right–left, and up–down for the 3D matrix. The relocation instructions were delivered to the participant using a tape recorder. To keep the task at a moderate level of difficulty, each trial involved 2–3 targets together with 2–4 steps of relocation instructions. After playback of the instructions, the participant was given the blank matrix without targets and indicated on it the terminal location of each imagined target. There were 12 trials in each of the 2D and 3D matrices. The performance scores were the percentages of the accurate terminal locations indicated by the participant.

Behavioral Test—Intelligence Test

Most studies indicated that the verbal intelligence performance of visually impaired individuals was comparable to that of their sighted counterparts on the Wechsler Adult Intelligence Scale (WAIS) (Vander Kolk 1977, 1982). To evaluate the intelligence of the blind participants, the WAIS-RC (Gong 1982; Wechsler 1955) was administered to each participant by an examiner. The WAIS-RC has been validated using factor analysis in a large sample of Chinese urban (N = 2029) and rural (N = 992) residents (Dai et al. 1990). Since then, the WAIS-RC has been widely used in educational and clinical settings in China. The WAIS-RC verbal test (Gong 1982; Wechsler 1955) included six subtests: information, vocabulary, comprehension, similarities, digit span, and arithmetic. All tests were conducted verbally by the examiner following the standardized procedures.

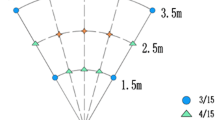

fMRI Tasks

The auditory stimuli were similar to those fabricated in Chan et al. (2012), which were generated from the “Bat-ears” device. Ultrasonic pulses were emitted from a generator located in the center of the “Bat-ears.” The pulses, when meeting a designated obstacle, were reflected as echoes, which were captured by the binaural receivers mounted on the two sides of the “Bat-ears.” These ultrasound echoes were converted to da–da–da sounds (peak frequencies 3,200–4,700 Hz) and recorded by a KEMAR Manikin, on which the “Bat-ears” were placed (Burkhard and Sachs 1975). The entire procedure was conducted in an acoustic laboratory. The obstacle was made of an erected piece of cardboard (30 × 30 cm) located at specific designations in the sound-proof chamber. The “Bat-ears” and the center of the obstacle were placed at a height of 1.5 m. The designations were organized in a fan-shape space that was organized into five azimuths (−30° [left side], −15°, 0°, +15°, +30° [right side]), and three distances (1.5, 2.5, and 3.5 m from the “Bat-ears” and Manikin) (Fig. 1). There were a total of 15 locations from which the echoes were reflected and recorded as the da–da–da stimuli which were of higher resolution than those recorded from three locations as in Chan et al. (2012) study.

The sound localization task required the participant to listen to the “Bat-ears” stimulus (peak frequencies 3,200–4,700 Hz, 70 dB) and identify the location on the 5 azimuths × 3 distances space from which the sound would have been emitted. The task process required the participant to listen to the stimulus and extract the spatial information embedded in the sound (such as intensity and frequency). Based on the information, the participant was to estimate the location of the sound source and indicate it using a joystick with the right hand. The participant made a response by maneuvering the joystick to one location, which indicated both azimuth and distance of the sound source. The calibration of the joystick was: left/straight/right indicated −15°/0°/+15°, and outer left/right indicated −30°/+30°; backward/horizontal/forward indicated 1.5/2.5/3.5 m. So a joystick position of forward-outer-left would mean a sound location of −30° at 3.5 m. The control task was a pitch discrimination task that required the participant to differentiate whether the “Bat-ears” sound had been inserted with a 15-ms sound clip of a different pitch (6,000–8,000 Hz, 70 dB). The task process was to listen to the sound and extract its specific frequency information. The participant judged whether the sound had or did not have an inserted pitch. A “Yes” or “No” response was made by pressing or by not pressing on the joystick, respectively. The discrimination control task would produce baseline BOLD responses associated with non-spatial auditory processing of the “Bat-ears” stimuli. The auditory stimuli were the same in both the sound localization and pitch differentiation tasks, which could control for possible confounding factors associated with the physical attributes of the stimuli. For each trial, an auditory cue was presented for 750 ms to indicate the task type: localization (2,000 Hz, 70 dB) or differentiation (500 Hz, 70 dB). 
There was a 1,750 ms delay, during which the participant was to orientate himself to the task and recall its process and requirement (Fig. 2). The da–da–da stimulus was presented for 3,000 ms, which was followed by a 500 ms auditory cue (2,000 Hz, 70 dB) for the participant to prepare to make the response with the joystick. The time available for response was 4,000 ms. The inter-trial interval (ITI) was set at 12,500/15,000/17,500 ms, with a uniform distribution of jitters (2,500, 5,000, or 7,500 ms). Response time was not used as a behavioral measure because localization responses at a farther distance (e.g., 3.5 m) and at the outer left/right side (e.g., ±30°) took a longer time to register on the joystick than those at a closer distance (e.g., 1.5 m) and at the center (e.g., 0°). Performance for the localization task was measured in terms of the accuracy of the location of the sound source estimated by the participant in the localization task trials. The participants in general found that localizing the sounds required some effort particularly when the task was carried out in the scanner. Lenient criteria, i.e. localization of the exact correct or neighboring positions, were applied to defining correct trials. For instance, responses at two neighboring locations were regarded as “correct” for localizing a stimulus emitted from the outer-left farthest-distance location (−30°, 3.5 m). They were the outer-left medium-distance (−30°, 2.5 m) or left farthest-distance (−15°, 3.5 m). Therefore, the chance level for different positions was varied from 20 to 33.33 % (Fig. 3).

The sound localization paradigm. Auditory spatial processing occurred during the presentation of the 3-s “Bat-ears” sound. For each trial, an auditory cue was presented for 750 ms to indicate the task type: the localization task trial was indicated by a high tone (peaked at 2,000 Hz) and the differentiation control trial was indicated by a low tone (peaked at 500 Hz). After a 1,750-ms delay, the 3-s auditory stimulus was presented and followed by a 500-ms cue to indicate the preparation for response. The remaining time was for the joystick response.
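The trial timeline described above can be laid out as a short sketch. It assumes, as an interpretation that the text does not state explicitly, that the inter-trial interval is measured from one trial onset to the next, so that each jitter value is added after the 4,000-ms response window. The names `EVENTS_MS`, `JITTERS_MS`, and `event_onsets` are illustrative only.

```python
# Event durations within one trial, in ms, as given in the task description.
EVENTS_MS = [
    ("task cue", 750),
    ("delay", 1750),
    ("stimulus", 3000),
    ("response cue", 500),
    ("response window", 4000),
]
JITTERS_MS = (2500, 5000, 7500)  # jitter values reported in the text


def event_onsets(events):
    """Return each event's onset relative to trial start, and the total duration."""
    onsets = {}
    elapsed = 0
    for name, duration in events:
        onsets[name] = elapsed
        elapsed += duration
    return onsets, elapsed


onsets, trial_ms = event_onsets(EVENTS_MS)

# Assumption: ITI = fixed trial duration + jitter (onset-to-onset).
itis_ms = [trial_ms + jitter for jitter in JITTERS_MS]

print(onsets["stimulus"], trial_ms, itis_ms)
```

Under this reading the fixed events sum to 10,000 ms, and adding the three jitter values gives the three reported inter-trial intervals. The stimulus onset also falls exactly one TR (2,500 ms) after trial onset.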

Definition for correct responses. Neighboring positions having the same azimuth or distance as the exact correct positions but with a one-step difference in distance or azimuth are also regarded as correct responses. Therefore, the locations indicated as blue circles (e.g., −30°/1.5 m) each have two neighboring positions as correct responses indicated as yellow triangles (e.g., −30°/2.5 m or −15°/1.5 m); the locations indicated as green stars (e.g., +15°/2.5 m) each have four neighboring positions as correct responses. The chance levels of accuracy for the blue, yellow and green locations are 20, 26.67, and 33.33 % respectively. The lenient criteria lower the difficulty level of the sound localization task, which increases the power of the analyses (Color figure online)
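The lenient scoring rule and the resulting chance levels can be reproduced with a minimal sketch. It assumes that a guessing participant picks each of the 15 locations with equal probability; the function names are illustrative and not taken from the study.

```python
AZIMUTHS = (-30, -15, 0, 15, 30)   # degrees
DISTANCES = (1.5, 2.5, 3.5)        # metres


def accepted_responses(target):
    """Exact target plus neighbours one step away in azimuth OR distance."""
    a_idx = AZIMUTHS.index(target[0])
    d_idx = DISTANCES.index(target[1])
    accepted = {target}
    for da, dd in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        a, d = a_idx + da, d_idx + dd
        if 0 <= a < len(AZIMUTHS) and 0 <= d < len(DISTANCES):
            accepted.add((AZIMUTHS[a], DISTANCES[d]))
    return accepted


def is_correct(target, response):
    """Score one trial under the lenient criteria."""
    return response in accepted_responses(target)


def chance_level(target):
    """Probability of a 'correct' trial when guessing uniformly over all locations."""
    return len(accepted_responses(target)) / (len(AZIMUTHS) * len(DISTANCES))


for target in [(-30, 3.5), (0, 1.5), (15, 2.5)]:
    print(target, round(100 * chance_level(target), 2))
```

Corner locations accept 3 of 15 responses, edge locations 4 of 15, and interior locations 5 of 15, which corresponds to the 20, 26.67, and 33.33 % chance levels given in the caption.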

fMRI Data Acquisition

The auditory stimuli were bilaterally presented via an MRI compatible headphone system, and the sound-pressure level of the stimuli was adjusted from 70 to 80–90 dB to compensate for the noisy environment inside the scanner. Each participant was scanned in four fMRI runs using an event-related design. In each run, the number of the sound localization/differentiation trials was unbalanced, with 17–20 localization task trials and 8–11 differentiation control trials. The order of the runs was counterbalanced among the participants. These gave a total of 75 localization task trials and 37 differentiation control trials. The fMRI series were captured by a 3-T Siemens machine with a 12-channel head coil. Functional T2* images were obtained with a gradient echo-planar sequence (repetition time [TR] = 2,500 ms; echo time [TE] = 30 ms; flip angle [FA] = 90°; voxel size = 3.1 × 3.1 × 3.2 mm³). Structural T1 images (TR = 2,530 ms; TE = 3.39 ms; voxel size = 1.3 × 1.0 × 1.3 mm³) were also acquired.

fMRI Image Analysis

Analyses were carried out using SPM8 (Wellcome Department of Imaging Neuroscience, London, UK), implemented in MATLAB (Mathworks). Preprocessing included slice timing for correcting differences in the timing of acquisition between slices, realignment of functional time series for correcting head motion, coregistration of functional and anatomical data, segmentation for extracting grey matter, spatial normalization to the Montreal Neurological Institute (MNI) space, and spatial smoothing (Gaussian kernel, 6 mm FWHM).

The preprocessed data were fitted to a general linear model (GLM) in SPM8 (Friston et al. 1994) using two event-related regressors. The two regressors modeled the BOLD signals corresponding to the correct responses made in the localization task trials and differentiation control trials, which were constructed by convolving the onset times of the “Bat-ears” sound with the canonical hemodynamic response function. The motion parameters detected by the Artifact Detection Tools (ART, developed by the Gabrieli Lab, Massachusetts Institute of Technology, available at: http://web.mit.edu/swg/software.htm) were included in the GLM for further regression of the motion-dependent confound (Mazaika et al. 2005). Slow changes in the data were removed by applying a high-pass filter with a cut-off of 128 s, and a first-order autoregressive process was used to correct for autocorrelation of residual signals in the GLM.
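To illustrate how an event-related regressor of this kind is formed, the following sketch places an impulse at each stimulus onset and evaluates the summed hemodynamic response at every scan time. This is a simplified stand-in for what SPM8 does internally, not the study's code. The double-gamma parameters (shapes 6 and 16, undershoot ratio 1/6) are a commonly used approximation of a canonical HRF and are assumptions here, as are the example onsets.

```python
import math


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1 / 6):
    """Assumed double-gamma HRF: early positive peak minus a late undershoot."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


def event_regressor(onsets_s, n_scans, tr_s=2.5):
    """Convolve impulses at the onsets with the HRF, sampled once per TR."""
    scan_times = [i * tr_s for i in range(n_scans)]
    return [
        sum(double_gamma_hrf(t - onset) for onset in onsets_s)
        for t in scan_times
    ]


# Hypothetical stimulus onsets (seconds) for two correct localization trials.
regressor = event_regressor([10.0, 40.0], n_scans=24)
print([round(x, 3) for x in regressor])
```

Because only correctly answered trials enter each regressor, the onset list for a given participant would be filtered by trial accuracy before a regressor like this is built.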

Whole Brain and Region-of-Interest Analyses

Whole-brain analyses were first conducted separately for the early- and late-onset blind groups. The contrast of interest involved comparing correct responses of the localization task trials and of the differentiation control trials, and the linear contrast tested the main effect of interest (localization > discrimination). One-sample t-tests with random effects (Holmes and Friston 1998) were performed. The statistical threshold for the t-images was P < 0.05, corrected for family-wise error (FWE corrected) at the voxel level. Two-sample t-tests and cluster-level inference (Friston et al. 1996) were then performed to identify group differences between the early- and late-onset blind groups. The thresholds for the t-images were P < 0.001 (uncorrected) at the voxel level and P < 0.05 (FWE corrected) at the cluster level. All of the significant BOLD responses were overlaid on the structural template in MNI space, as provided in SPM8. The automated anatomical labeling (AAL) method was used to label the peak coordinates of the activation clusters (Tzourio-Mazoyer et al. 2002).

To answer the question of how visual experience would modulate the auditory spatial processing, an exploratory region of interest (ROI) analysis was performed on the basis of the current results. The ROIs were defined in two ways: (1) conjunction analysis (Nichols et al. 2005)—common BOLD responses between the two groups of participants with threshold of t-image set at P < 0.05 (FWE corrected) at the voxel level; and (2) two-sample t test analysis—between-group BOLD responses with thresholds of t-image set at P < 0.001 (uncorrected) at the voxel level and P < 0.05 (FWE corrected) at the cluster level. All functional ROIs were created with a 9-mm radius spherical mask centered at the local peaks of the activation clusters. For the ROIs that were identified by the conjunction analysis, two-sample t-tests were conducted to identify possible difference in BOLD responses between the two participant groups. Stepwise linear regression was conducted on all ROIs to identify the extent to which the mean contrast values of ROIs predicted performance on the localization task for each of the two participant groups. Pearson’s product-moment correlations were obtained between mean contrast values of all ROIs and onset age of late-onset blind participants and scores on the adapted matrix test for the late-onset blind participants, respectively.
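A spherical ROI of the kind described above can be sketched as the set of voxel offsets whose centres lie within 9 mm of the peak voxel. The 3-mm isotropic voxel size used here is an assumption for illustration, since the resolution of the normalized images is not reported in this section.

```python
def sphere_offsets(radius_mm=9.0, voxel_mm=(3.0, 3.0, 3.0)):
    """Voxel index offsets (i, j, k) whose centres fall within the sphere."""
    vx, vy, vz = voxel_mm
    reach = [int(radius_mm // v) for v in voxel_mm]
    offsets = []
    for i in range(-reach[0], reach[0] + 1):
        for j in range(-reach[1], reach[1] + 1):
            for k in range(-reach[2], reach[2] + 1):
                dist_sq = (i * vx) ** 2 + (j * vy) ** 2 + (k * vz) ** 2
                if dist_sq <= radius_mm ** 2:
                    offsets.append((i, j, k))
    return offsets


mask = sphere_offsets()
print(len(mask))  # number of voxels in the ROI under the assumed voxel size
```

The mean contrast value of an ROI would then be the average of the contrast image over the peak voxel index plus each of these offsets.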

Results

Behavioral—fMRI Tasks

In the early-onset blind group, the mean accuracy rate for the sound localization task was 46.8 % (SD = 3.8 %). In the late-onset blind group, the mean accuracy rate was 43.6 % (SD = 4.4 %). Participants with early-onset blindness had a significantly higher accuracy rate than their late-onset blindness counterparts (t(30) = 2.21, P = 0.035). It was noteworthy that the participants in both groups performed above the chance level of 33.33 %. The performance on the pitch discrimination task was found comparable between the early- and late-onset blind groups (t(30) = 0.18, P = 0.86).
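As a consistency check, the group comparison can be approximated from the reported summary statistics alone. The sketch assumes a pooled-variance (Student) two-sample t-test, which the integer degrees of freedom suggest; because it uses rounded means and standard deviations, it only approximates the reported statistic.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's two-sample t statistic and degrees of freedom from summary data."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / std_err, df


# Early-onset: 46.8 % (SD 3.8, n = 15); late-onset: 43.6 % (SD 4.4, n = 17).
t_stat, df = pooled_t(46.8, 3.8, 15, 43.6, 4.4, 17)
print(round(t_stat, 2), df)
```

The result is close to the reported t(30) = 2.21, with the small gap attributable to rounding in the published means and standard deviations.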

Behavioral—Matrix Test

The number of correct trials on the 2D and 3D subtests of the matrix test was counted. The results for one early-onset and two late-onset blind participants were excluded from analysis as they were found unable to perform the task. The final sample size for the matrix test was 14 for the early-onset and 15 for the late-onset blind group. In the early-onset blind group, the mean accuracy rate for the 2D subtest was 43.3 % (SD = 25.2 %); for the 3D subtest, it was 41.9 % (SD = 26.4 %). In the late-onset blind group, the mean accuracy rate for the 2D subtest was 54.7 % (SD = 13.5 %), and for the 3D subtest, it was 45.8 % (SD = 13.5 %). The results did not reveal a significant difference in the accuracy rates of the 2D subtest (t(19.58) = −1.49, P = 0.15) or the 3D subtest (t(19.06) = −0.49, P = 0.63) between the early- and late-onset blind groups.
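The fractional degrees of freedom reported above are what a test not assuming equal variances would produce. The sketch below assumes Welch's t-test with the Welch-Satterthwaite approximation and recomputes the 2D subtest comparison from the rounded summary statistics.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = sd1 ** 2 / n1
    v2 = sd2 ** 2 / n2
    t_stat = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t_stat, df


# 2D subtest: early-onset 43.3 % (SD 25.2, n = 14); late-onset 54.7 % (SD 13.5, n = 15).
t_2d, df_2d = welch_t(43.3, 25.2, 14, 54.7, 13.5, 15)
print(round(t_2d, 2), round(df_2d, 2))
```

The recomputed values agree with the reported t(19.58) = −1.49 to within rounding, which supports the reading that unequal variances were allowed for in these comparisons.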

Behavioral—Intelligence Test

Similarly, the results for one early-onset and two late-onset blind participants were regarded as invalid due to non-compliance observed during the testing. The final sample size for the intelligence test was 14 for the early-onset and 15 for the late-onset blind group. Raw scores on each subtest were calculated and converted to the standard scores. Scores on the six subtests were summed and converted to a Verbal IQ score. All participants scored within the normal range (>70) on the Wechsler Adult Intelligence Scale-Revised for China (WAIS-RC) (Gong 1982; Wechsler 1955). The Verbal IQ scores for the early-onset blind group ranged from 74 to 131, with a mean of 102.9 (SD = 16.7), and the Verbal IQ scores for the late-onset blind group ranged from 96 to 122, with a mean of 107.5 (SD = 7.1). The results did not reveal significant difference in the Verbal IQ performance between the early- and late-onset blind groups (t(17.34) = −0.95, P = 0.35).

BOLD Responses Associated with Auditory Spatial Processing

Group analyses on the BOLD responses of the linear contrast (localization > discrimination) for the early-onset blind participants (n = 15) revealed maxima in the left middle occipital gyrus (MOG), the left precuneus, the bilateral superior parietal gyrus (SPG), the left superior frontal gyrus (SFG), the right supplementary motor area (SMA), the right precentral gyrus, and the right postcentral gyrus (Table 2 [under early-onset blind] and Fig. 4a). A comparable pattern of results was revealed for the late-onset blind participants (n = 17). Increases of BOLD responses of the linear contrast (localization > discrimination) were identified in the left MOG, the left precuneus, the left SFG, and the right precentral gyrus (Table 2 [under late-onset blind] and Fig. 4b). Group comparisons revealed that the early-onset blind group had significantly greater BOLD responses than the late-onset blind group in the right inferior temporal gyrus (ITG) and in the occipital cortex, which included the right lingual gyrus (LG), the right MOG, the right superior occipital gyrus (SOG), and the right fusiform gyrus (Table 3; Fig. 5a). Conjunction analysis revealed common BOLD responses across the early- and late-onset blind groups, including the left MOG, the left precuneus, the right SPG, the left SFG, and the right precentral gyrus (Fig. 5b). As the male-to-female ratios differed between the early- and late-onset blind groups, the gender of participants was tested for its effect on the BOLD responses. Two-sample t-tests and conjunction analysis between the two blind groups were repeated with gender as a covariate. The results of the two runs of analyses were comparable, except that the coordinates of the SPG in the conjunction analysis shifted from (18, −69, 52) to (15, −69, 49) (Table 3). Gender therefore did not appear to be a significant factor confounding the results.

Significant increases in BOLD responses in the contrasts of (localization > differentiation) for the two blind groups. The threshold was P < 0.05 (FWE corrected) at the voxel level. a The early-onset blind group. Significant increases in BOLD responses were revealed in the left middle occipital gyrus, left precuneus, bilateral superior parietal gyrus, and left superior frontal gyrus. b The late-onset blind group. Significant increases in BOLD responses were revealed in the left middle occipital gyrus, left precuneus, and left superior frontal gyrus

Different and common BOLD responses in the early- and late-onset blind groups. The thresholds are P < 0.001 (uncorrected) at the voxel level, and P < 0.05 (FWE corrected) at the cluster level. a Significant differences in BOLD responses between the two blind groups in the condition: early-onset blind × (localization > differentiation) > late-onset blind × (localization > differentiation). The revealed neural substrates include the right middle occipital gyrus, right lingual gyrus, and right inferior temporal gyrus. b Common BOLD responses between the early- and late-onset blind groups in the contrast of (localization > differentiation). The neural substrates include the left middle occipital gyrus, left precuneus, right superior parietal gyrus, and left superior frontal gyrus

ROIs: Auditory Spatial Processing

Four ROIs were identified from the conjunction analysis: the left MOG, left precuneus, right SPG, and left SFG (Table 4). Three ROIs were identified from the two-sample t-test analysis: the right ITG, right MOG, and right LG. Between-group comparisons on the conjunction ROIs (ROIs 1–4) revealed that the early-onset blind group (mean = 20.20) had significantly higher mean contrast values than the late-onset blind group (mean = 13.89) in the right SPG (t(30) = 2.47, P = 0.02). Regression analyses revealed that only the changes in the mean contrast values in the left SFG significantly predicted performance on the sound localization task (β = 0.543, P < 0.05) in the late-onset blind participants (Fig. 6). In contrast, only the changes in the mean contrast values in the right MOG significantly predicted localization task performance in the early-onset blind participants (β = 0.530, P < 0.05). The age at onset of blindness among the late-onset blind participants was negatively correlated with the mean contrast values in the left precuneus (r = −0.493, P = 0.044) and showed a trend toward a negative correlation with the mean contrast values in the right MOG (r = −0.424, P = 0.090). A trend toward a correlation was found between the duration of blindness and the mean contrast values in the left MOG (r = 0.473, P = 0.055). As for the correlations with visuospatial working memory, we found significant correlations between the mean contrast values in the left SFG and performance on the 2D (r = 0.585, P = 0.022) and 3D matrix tests (r = 0.562, P = 0.029) among the late-onset blind participants. These were not observed in the early-onset blind participants.
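The reported correlation P values can be checked against the sample sizes with a short sketch. It converts Pearson's r to a t statistic with n − 2 degrees of freedom and obtains a two-sided P value by numerically integrating the t density. The sample sizes are taken from the text (17 late-onset participants for onset age, 15 with valid matrix test scores); the numerical integration is a stand-in for a statistics library, not the authors' procedure.

```python
import math


def r_to_t(r, n):
    """t statistic and degrees of freedom for testing Pearson's r against zero."""
    df = n - 2
    return r * math.sqrt(df) / math.sqrt(1 - r ** 2), df


def t_pdf(x, df):
    """Density of Student's t distribution."""
    norm = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return norm * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t_stat, df, steps=2000):
    """Two-sided P value via Simpson's rule on [0, |t|]; steps must be even."""
    upper = abs(t_stat)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1 - 2 * (total * h / 3)


for label, r, n in [("left precuneus vs onset age", -0.493, 17),
                    ("left SFG vs 2D matrix", 0.585, 15)]:
    t_stat, df = r_to_t(r, n)
    print(label, round(t_stat, 2), df, round(two_sided_p(t_stat, df), 3))
```

Both recomputed P values fall close to the reported ones, which indicates that the matrix test correlations were computed on the 15 late-onset participants with valid scores and the onset-age correlations on all 17.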

ROI analyses on auditory spatial processing among the early- and late-onset blind groups. Only ROIs that significantly predict the localization performance are presented. The mean contrast values in a the right middle occipital gyrus were entered in the regression model for the early-onset blind group, β = 0.530, P = 0.042; b the left superior frontal gyrus were entered in the regression model for the late-onset blind group, β = 0.543, P = 0.024

Discussion

The results revealed involvement of the occipital, parietal, and frontal regions during the auditory spatial processing among the late-onset blind participants. Between-group analyses indicated dissociations of neural substrates between the early- and late-onset blind cohorts, which are likely attributable to the visual experience gained only by the late-onset blind group. The most significant neural substrates were the right MOG (whole-brain analysis) and the right SPG (ROI analysis). Furthermore, ROI results indicated between-group dissociations in the left SFG and the right MOG. BOLD responses in the left SFG were associated with performance on the sound localization task among the late-onset blind participants. The role of the SFG in these participants might be attributed to their visuospatial working memory ability, which has been reported to be unique to those with visual experience. The BOLD responses in the right MOG, in contrast, appeared to largely mediate auditory spatial processing among the early-onset blind participants, who were relatively deprived of visual experience. This was largely in agreement with previous findings on the involvement of the MOG in the spatial analysis of sounds in early-onset blind individuals (Collignon et al. 2011; Renier et al. 2010). Our findings suggested that prior visual experience enhances the involvement of visuospatial processing mediated by the SFG in the late-onset blind individuals. Without prior visual experience, the early-onset blind individuals were found to rely on spatial processing mediated by the MOG for sound localization.

In this study, ROI analyses revealed higher contrast values in the right SPG in the early-onset than in the late-onset blind group. This is further supported by the whole brain analyses showing that sound localization was associated with greater BOLD responses in the bilateral SPG, observed only in the early-onset blind group. Neuroimaging studies on blind individuals have revealed involvement of the SPG and SPL during auditory spatial processing (Arno et al. 2001; Chan et al. 2012; Gougoux et al. 2005; Sadato et al. 2002). Our results are in accordance with Voss et al. (2008), who showed more SPG recruitment in early-onset blind individuals during discrimination of sound sources. The inconsistent findings of the SPL versus SPG might be attributed to the use of different definitions for labeling neural substrates across studies.

Functional Specialization of MOG in Auditory Spatial Processing

A group comparison of whole brain analyses revealed more occipital recruitment, particularly from the right MOG during sound localization in the early-onset group (Fig. 2). Stronger occipital responses have been found during cross-modal processing in sound source discrimination (Voss et al. 2008), auditory motion perception (Bedny et al. 2010), Braille reading (Burton et al. 2002a), and language perception (Bedny et al. 2012). Collignon et al. (2013) found more occipital recruitment for auditory processing of pitch and location in the congenitally blind group.

Spatial processing in general is mediated by the dorsal visual pathway (Haxby et al. 1991; Mishkin et al. 1983; Ungerleider and Mishkin 1982). Among various neural structures in the pathway, the MOG has consistently been found to be involved in processing spatial information of different modalities among early-onset blind individuals (Collignon et al. 2009; Dormal and Collignon 2011). For instance, Renier et al. (2010) reported greater BOLD responses in the right MOG when processing spatial information than non-spatial information. Collignon et al. (2011) also demonstrated that, among congenitally blind individuals, preferential BOLD response was observed in the right MOG while processing auditory spatial information over pitch of sounds. The MOG was found to mediate sound localization among individuals with early-onset blindness (Gougoux et al. 2005; Renier et al. 2010). In this study, the changes in mean contrast values in the right MOG of participants in the early-onset group were the only significant predictor of their performance on the sound localization task. The results were consistent with those of previous studies and support the notion that the MOG mediates auditory spatial processing among those who had been deprived of visual experience in early life.

It is noteworthy that the late-onset blind participants in this study showed greater BOLD responses in the left MOG during auditory spatial processing. The BOLD responses in the MOG, however, were not found to significantly relate to the behavioral performance. The mean contrast values in the left MOG showed a marginal positive correlation with the participants’ duration of blindness. Our results were consistent with Voss et al. (2008, 2011), who reported significant bilateral BOLD responses in the MOG among a group of late-blind individuals. Similarly, in their studies the BOLD responses in the MOG appeared to produce no behavioral advantage. Voss et al. (2008) also revealed a significant negative correlation between the BOLD responses in the right MOG and onset age of blindness. The findings seemed to suggest that visual experience before blindness influences the structure of and the functions associated with the MOG. The BOLD responses in the MOG appeared to decrease with the amount of prior visual experience gained by the late-onset blind individuals. Ironically, prior visual experience did not seem to help in preserving the spatial function mediated by the MOG after impairment of the visual system. Our findings lend support to the proposal of a critical period in functional preservation of the dorsal occipital regions for mediating spatial processing among blind individuals (Dormal and Collignon 2011). The critical period would correspond to that of early-onset blind participants of this study, which was within the first year of age.

Experience-Dependent SFG in Auditory Spatial Processing

Behavioral results showed that the late-onset blind participants performed above the chance level on the sound localization test but performed significantly worse than the early-onset blind participants. This indicated that participants in both groups managed to extract the spatial information embedded in the novel “Bat-ears” sounds for making correct responses. The left SFG was the only one of the seven ROIs tested that showed a significant correlation with performance on the sound localization task among the late-onset blind participants, but this was not the case with the early-onset blind participants. This suggested that the left SFG played a key role in mediating the auditory spatial processing in late-onset blind individuals.

Among the behavioral parameters used in this study, the changes in the mean contrast values in the left SFG were found to significantly relate to late-onset blind participants’ scores on the matrix subtests. Such a relationship was not observed in the early-onset blind participants. The matrix test is a measure of visuospatial working memory requiring encoding and retrieval of a series of verbal instructions describing spatial locations. Similarly, encoding the sound stimuli and retrieving their spatial correlates is one of the critical steps in the sound localization task. To our knowledge, no brain imaging studies on visuospatial working memory of late-onset blind individuals have been reported. Studies on individuals with normal vision revealed that the prefrontal cortex plays a key role in mediating visuospatial working memory (Goldman-Rakic 1994, 1995). Other studies using non-visual tasks revealed recruitment of the dorsal “visual” stream in the dorsolateral prefrontal cortex (Courtney et al. 1996; Nelson et al. 2000). Courtney et al. (1998) further identified the superior frontal sulcus as the main neural substrate mediating spatial working memory. Specifically, the BOLD responses in the medial frontal gyrus, superior frontal sulcus (SFS) and SFG, and intraparietal sulcus were found to be dependent on the memory load required by the tasks. With prior visual experience, the late-onset blind participants in this study would tend to involve visuospatial working memory for processing the spatial information embedded in the “Bat-ears” sounds, such as their intensity and frequency. The decision on the location of the reflected sounds, which would impose a memory load, is likely to be mediated by the left SFG.

Limitations

This study has a few limitations. First, the participants were recruited by means of convenience sampling. The results can only be generalized to those who share similar onsets of blindness and cognitive abilities such as visuospatial working memory and intelligence. The auditory spatial processing was based on the “Bat-ears” echo sounds, which were novel to the participants. The experimental task involved sound localization among 15 positions, which demanded intense attention and was harder to perform, whereas the control task required detection of a different pitch, which required low attention and was easy to perform. The different difficulty levels between the sound localization and pitch discrimination tasks may be a confounding factor in the results, since the major analysis was based on contrast subtraction. Future studies may consider using a psychophysical staircase procedure to control for the level of attention and other task-taking processes (Collignon et al. 2011, 2013). The sound localization task appeared to be more difficult for the late-onset than for the early-onset blind individuals. Similarly, this would confound the between-group comparisons, as a difficult task would have called for more intense attention among the participants. Although the present findings did not confirm such a possibility, future studies should attempt to address this issue. Besides, our findings may not be comparable to those obtained from simple sound localization tasks. Last, but not least, the participants were not well trained on the sound localization task before the scanning. The participants may have employed varied strategies in response to the instructions given. This could increase the variance of the results and hence decrease the power of the group contrasts. Interpretation of the results should therefore be made with caution.
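As an illustration of the staircase procedure suggested above, the following is a minimal sketch of one common variant, a 2-down/1-up rule, which adjusts difficulty trial by trial so that accuracy converges toward roughly 70.7% correct. The specific rule, the function name, the level scale, and the response sequence are assumptions made for illustration; the text does not specify which staircase would be used.

```python
def two_down_one_up(responses, start_level=10, step=1, min_level=1, max_level=20):
    """Track the difficulty level presented on each trial.

    responses: iterable of booleans (True = correct response).
    A higher level is assumed to mean an easier trial (for example, a larger
    pitch difference or a wider separation between sound locations).
    Two consecutive correct responses make the task harder by one step;
    a single error makes it easier by one step.
    """
    level = start_level
    streak = 0  # consecutive correct responses since the last level change
    track = []
    for correct in responses:
        track.append(level)  # level used on this trial
        if correct:
            streak += 1
            if streak == 2:
                level = max(min_level, level - step)
                streak = 0
        else:
            level = min(max_level, level + step)
            streak = 0
    return track


# Hypothetical response sequence (NOT data from the study).
example = [True, True, False, True, True, True, True]
print(two_down_one_up(example))
```

Running the same rule separately for the localization and pitch tasks, and for each participant, would be one way to equate difficulty across tasks and groups before computing contrasts.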

Conclusion

This study explored how prior visual experience would modulate auditory spatial processing. Participants in the early- and late-blind groups differed in the duration of living with intact vision. Between-group analyses indicated dissociations of the right MOG, right SPG, and left SFG, which are likely attributable to the prior visual experience gained by the late-onset blind individuals. The right MOG played a significant role in auditory spatial processing among the early-onset blind individuals. In contrast, the left SFG contributed significantly to auditory spatial processing among the late-onset blind individuals. Prior visual experience modulates auditory spatial processing by enhancing the development of visuospatial working memory for analyzing the spatial information embedded in the “Bat-ears” sounds and relating it to the different locations of the sound sources. Future studies should further manipulate the load on visuospatial working memory and validate the role of the SFG among late-onset blind individuals.

References

Arno P, De Volder AG, Vanlierde A, Wanet-Defalque MC, Streel E, Robert A, Sanabria-Bohorquez S, Veraart C (2001) Occipital activation by pattern recognition in the early blind using auditory substitution for vision. Neuroimage 13(4):632–645. doi:10.1006/nimg.2000.0731

Arnott SR, Alain C (2011) The auditory dorsal pathway: orienting vision. Neurosci Biobehav Rev 35(10):2162–2173. doi:10.1016/j.neubiorev.2011.04.005

Bedny M, Konkle T, Pelphrey K, Saxe R, Pascual-Leone A (2010) Sensitive period for a multimodal response in human visual motion area MT/MST. Curr Biol 20:1900–1906. doi:10.1016/j.cub.2010.09.044

Bedny M, Pascual-Leone A, Dravida S, Saxe R (2012) A sensitive period for language in the visual cortex: distinct patterns of plasticity in congenitally versus late blind adults. Brain Lang 122:162–170. doi:10.1016/j.bandl.2011.10.005

Beer AL, Batson MA, Watanabe T (2011) Multisensory perceptual learning reshapes both fast and slow mechanisms of crossmodal processing. Cogn Affect Behav Neurosci 11:1–12. doi:10.3758/s13415-010-0006-x

Blumsack JT, Ross ME (2007) Self-report spatial hearing and blindness. Vis Impair Res 9(1):33–38. doi:10.1080/13882350701239365

Büchel C, Price C, Frackowiak RSJ, Friston K (1998) Different activation patterns in the visual cortex of late and congenitally blind subjects. Brain 121:409–419. doi:10.1093/brain/121.3.409

Burkhard MD, Sachs RM (1975) Anthropometric manikin for acoustic research. J Acoust Soc Am 58(1):214–222. doi:10.1121/1.380648

Burton H, Snyder AZ, Conturo TE, Akbudak E, Ollinger JM, Raichle ME (2002a) Adaptive changes in early and late blind: a FMRI study of Braille reading. J Neurophysiol 87(1):589–607

Burton H, Snyder AZ, Diamond JB, Raichle ME (2002b) Adaptive changes in early and late blind: a fMRI study of verb generation to heard nouns. J Neurophysiol 88(6):3359–3371

Chan CH, Wong WK, Ting KH, Whitfield-Gabrieli S, He JF, Lee MC (2012) Cross auditory-spatial learning in early-blind individuals. Hum Brain Mapp 33(11):2714–2727. doi:10.1002/hbm.21395

Cohen LG, Weeks RA, Sadato N, Celnik P, Ishii K, Hallett M (1999) Period of susceptibility for cross-modal plasticity in the blind. Ann Neurol 45(4):451–460. doi:10.1002/1531-8249(199904)45:4<451:AID-ANA6>3.0.CO;2-B

Collignon O, Lassonde M, Lepore F, Bastien D, Veraart C (2007) Functional cerebral reorganization for auditory spatial processing and auditory substitution of vision in early blind subjects. Cereb Cortex 17(2):457–465. doi:10.1093/cercor/bhj162

Collignon O, Davare M, Olivier E, De Volder AG (2009) Reorganisation of the right occipito-parietal stream for auditory spatial processing in early blind humans. A transcranial magnetic stimulation study. Brain Topogr 21:232–240. doi:10.1007/s10548-009-0075-8

Collignon O, Vandewalle G, Voss P, Albouy G, Charbonneau G, Lassonde M, Lepore F (2011) Functional specialization for auditory-spatial processing in the occipital cortex of congenitally blind humans. PNAS 108(11):4435–4440. doi:10.1073/pnas.1013928108

Collignon O, Dormal G, Albouy G, Vandewalle G, Voss P, Phillips C, Lepore F (2013) Impact of blindness onset on the functional organization and the connectivity of the occipital cortex. Brain 136(9):2769–2783. doi:10.1093/brain/awt176

Cornoldi C, Vecchi T (2000) Mental imagery in blind people: the role of passive and active visuo-spatial processes. In: Heller M (ed) Touch, representation, and blindness. Oxford University Press, Oxford, pp 143–181

Cornoldi C, Cortesi A, Preti D (1991) Individual differences in the capacity limitations of visuospatial short-term memory: research on sighted and totally congenitally blind people. Mem Cognit 19(5):459–468. doi:10.3758/BF03199569

Courtney SM, Ungerleider LG, Keil K, Haxby JV (1996) Object and spatial visual working memory activates separate neural systems in human cortex. Cereb Cortex 6(1):39–49. doi:10.1093/cercor/6.1.39

Courtney SM, Petit L, Maisog JM, Ungerleider LG, Haxby JV (1998) An area specialized for spatial working memory in human frontal cortex. Science 279(5355):1347–1351. doi:10.1126/science.279.5355.1347

Dai XY, Ryan JJ, Paolo AM, Harrington RG (1990) Factor analysis of the mainland Chinese version of the Wechsler Adult Intelligence Scale (WAIS-RC) in a brain-damaged sample. Int J Neurosci 55(2–4):107–111. doi:10.3109/00207459008985956

Dormal G, Collignon O (2011) Functional selectivity in sensory-deprived cortices. J Neurophysiol 105:2627–2630. doi:10.1152/jn.00109.2011

Emier M (2004) Multisensory integration: how visual experience shapes spatial perception. Curr Biol 14(3):R115–R117. doi:10.1016/j.cub.2004.01.018

Friston KJ, Holmes AP, Worsley KJ, Poline JP, Frith CD, Frackowiak RSJ (1994) Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp 2(4):189–210. doi:10.1002/hbm.460020402

Friston KJ, Holmes A, Poline JB, Price CJ, Frith CD (1996) Detecting activations in PET and fMRI: levels of inference and power. Neuroimage 4(3):223–235. doi:10.1006/nimg.1996.0074

Goldman-Rakic PS (1994) The issue of memory in the study of prefrontal functions. In: Thierry AM, Glowinski J, Goldman-Rakic PS, Christen Y (eds) Motor and cognitive functions of the prefrontal cortex. Springer, Berlin, pp 112–122

Goldman-Rakic PS (1995) Architecture of the prefrontal cortex and the central executive. Ann N Y Acad Sci 769:71–84. doi:10.1111/j.1749-6632.1995.tb38132.x

Gong YX (1982) Manual of modified Wechsler Adult Intelligence Scale (WAIS-RC) (in Chinese). Hunan Medical College, Changsha

Gougoux F, Zatorre R, Lassonde M, Voss P, Lepore F (2005) A functional neuroimaging study of sound localization: visual cortex activity predicts performance in early-blind individuals. PLoS Biol 3(2):e27. doi:10.1371/journal.pbio.0030027

Haxby JV, Grady CL, Horwitz B, Ungerleider LG, Mishkin M, Carson RE, Herscovitch P, Schapiro MB, Rapoport SI (1991) Dissociation of object and spatial visual processing pathways in human extrastriate cortex. Proc Natl Acad Sci USA 88(5):1621–1625

Holmes AP, Friston KJ (1998) Generalizability, random effects, and population inference. Neuroimage 7:S754

Kujala T, Alho K, Huotilainen M, Ilmoniemi RJ, Lehtokoski A, Leinonen A, Rinne T, Salonen O, Sinkkonen J, Standertskjöld-Nordenstam C-G, Näätänen R (1997) Electrophysiological evidence for cross-modal plasticity in humans with early- and late-onset blindness. Psychophysiology 34(2):213–216. doi:10.1111/j.1469-8986.1997.tb02134.x

Lehnert G, Zimmer HD (2006) Auditory and visual spatial working memory. Mem Cognit 34(5):1080–1090. doi:10.3758/BF03193254

Lehnert G, Zimmer HD (2008) Common coding of auditory and visual spatial information in working memory. Brain Res 1230:158–167. doi:10.1016/j.brainres.2008.07.005

Macaluso E, Driver J (2005) Multisensory spatial interactions: a window onto functional integration in the human brain. Trends Neurosci 28(5):264–271. doi:10.1016/j.tins.2005.03.008

Mark DM (1993) Human spatial cognition. In: Medyckyj-Scott D, Hearnshaw HM (eds) Human factors in geographical information systems. Belhaven Press, London, pp 51–60

Martinkauppi S, Rämä P, Aronen HJ, Korvenoja A, Carlson S (2000) Working memory of auditory localization. Cereb Cortex 10(9):889–898. doi:10.1093/cercor/10.9.889

Mazaika PK, Whitfield S, Cooper JC (2005) Detection and repair of transient artifacts in fMRI data. Neuroimage 26:S36

Mishkin M, Ungerleider LG, Macko KA (1983) Object vision and spatial vision: two cortical visual pathways. Trends Neurosci 6:414–417. doi:10.1016/0166-2236(83)90190-X

Nelson CA, Monk CS, Lin J, Carver LJ, Thomas KM, Truwit CL (2000) Functional neuroanatomy of spatial working memory in children. Dev Psychol 36(1):109–116. doi:10.1037/0012-1649.36.1.109

Nichols T, Brett M, Andersson J, Wager T, Poline JB (2005) Valid conjunction inference with the minimum statistic. Neuroimage 25(3):653–660. doi:10.1016/j.neuroimage.2004.12.005

Renier LA, Anurova I, De Volder AG, Carlson S, VanMeter J, Rauschecker JP (2010) Preserved functional specialization for spatial processing in the middle occipital gyrus of the early blind. Neuron 68(1):138–148. doi:10.1016/j.neuron.2010.09.021

Ricciardi E, Bonino D, Gentili C, Sani L, Pietrini P, Vecchi T (2006) Neural correlates of spatial working memory in humans: a functional magnetic resonance imaging study comparing visual and tactile processes. Neuroscience 139(1):339–349. doi:10.1016/j.neuroscience.2005.08.045

Rieser JJ, Hill EW, Talor CR, Bradfield A, Rosen S (1992) Visual experience, visual field size, and the development of nonvisual sensitivity to the spatial structure of outdoor neighborhoods explored by walking. J Exp Psychol Gen 121(2):210–221. doi:10.1037/0096-3445.121.2.210

Sadato N, Okada T, Honda M, Yonekura Y (2002) Critical period for cross-modal plasticity in blind humans: a functional MRI study. Neuroimage 16(2):389–400. doi:10.1006/nimg.2002.1111

Thinus-Blanc C, Gaunet F (1997) Representation of space in blind persons: vision as a spatial sense? Psychol Bull 121(1):20–42. doi:10.1037/0033-2909.121.1.20

Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M (2002) Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage 15(1):273–289. doi:10.1006/nimg.2001.0978

Ungerleider LG, Mishkin M (1982) Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW (eds) Analysis of visual behavior. The MIT Press, Cambridge, pp 549–586

Vander Kolk CJ (1977) Intelligence testing for visually impaired persons. J Vis Impair Blind 77(4):158–163

Vander Kolk CJ (1982) A comparison of intelligence test score patterns between visually impaired subgroups and the sighted. Rehabil Psychol 27(2):115–120

Vecchi T, Monticelli ML, Cornoldi C (1995) Visuo-spatial working memory: structures and variables affecting a capacity measure. Neuropsychologia 33(11):1549–1564. doi:10.1016/0028-3932(95)00080-M

Voss P, Gougoux F, Zatorre RJ, Lassonde M, Lepore F (2008) Differential occipital responses in early- and late-blind individuals during a sound-source discrimination task. Neuroimage 40(2):746–758. doi:10.1016/j.neuroimage.2007.12.020

Voss P, Lepore F, Gougoux F, Zatorre RJ (2011) Relevance of spectral cues for auditory spatial processing in the occipital cortex of the blind. Front Psychol 48:1–12. doi:10.3389/fpsyg.2011.00048

Wechsler D (1955) Manual for the Wechsler adult intelligence scale. The Psychological Corporation, Oxford

Weeks R, Horwitz B, Aziz-Sultan A, Tian B, Wessinger CM, Cohen LG, Hallett M, Rauschecker JP (2000) A positron emission tomographic study of auditory localization in the congenitally blind. J Neurosci 20:2664–2672

Wittenberg GF, Werhahn KJ, Wassermann EM, Herscovitch P, Cohen LG (2004) Functional connectivity between somatosensory and visual cortex in early blind humans. Eur J Neurosci 20(7):1923–1927. doi:10.1111/j.1460-9568.2004.03630.x

Acknowledgments

The authors thank the associations of people with blindness and participants for participating in this study. This study was supported by the Collaborative Research Fund awarded by Research Grant Council of Hong Kong to CCH Chan and TMC Lee (PolyU9/CRF/09). This study was partially supported by an internal research grant from Department of Rehabilitation Sciences, The Hong Kong Polytechnic University to CCH Chan.

Additional information

This is one of several papers published together in Brain Topography on the “Special Issue: Auditory Cortex 2012”.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Tao, Q., Chan, C.C.H., Luo, Yj. et al. How Does Experience Modulate Auditory Spatial Processing in Individuals with Blindness?. Brain Topogr 28, 506–519 (2015). https://doi.org/10.1007/s10548-013-0339-1

DOI: https://doi.org/10.1007/s10548-013-0339-1