Abstract

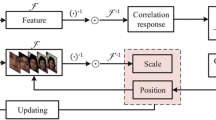

In recent years, correlation filter (CF)-based methods have advanced significantly in tracking for unmanned aerial vehicles (UAVs). As the core component of most such trackers, the CF is a discriminative classifier that distinguishes the object from its surrounding environment. However, poor object representation and a lack of contextual information have prevented these trackers from achieving better performance. In this work, a robust framework with multi-kernelized correlators is proposed to improve robustness and accuracy simultaneously. Both convolutional features extracted from a neural network and hand-crafted features are employed to enrich the representation of object appearance. An adaptive context analysis strategy then helps the filters learn the surrounding information effectively by introducing context patches with the gradient magnitude similarity deviation (GMSD) index. In the training stage, multiple dynamic filters with time-attenuated factors are introduced to avoid tracking failure caused by dramatic appearance changes. Finally, the response maps corresponding to the different features are fused before a novel resolution enhancement operation is applied to increase the distinguishing capability. As a result, the optimization problem is reformulated, and a closed-form solution for the proposed framework is obtained in the kernel space. Extensive experiments on 100 challenging UAV tracking sequences demonstrate that the proposed tracker outperforms 23 other state-of-the-art trackers and effectively handles unexpected appearance variations under complex and constantly changing working conditions.

Acknowledgements

The work was supported by the National Natural Science Foundation of China (No. 61806148) and the Fundamental Research Funds for the Central Universities (No. 22120180009).

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest regarding this work.

Appendix

In this section, a more detailed derivation from Eq. (5) to Eq. (8) is presented.

Because all operations in the Fourier domain are performed element-wise, each element of \(\hat{\mathbf{w}}_{n}^{*}\) (indexed by \(u\)) can be solved independently, and Eq. (5) can be decomposed into subproblems \(\hat{{\mathcal {E}}}_{nu}\), each of which is defined as follows:

where \({\hat{K}}_{u}^{n0} = {\hat{k}}_{u}^{\mathbf{x}_{n0} \mathbf{x}_{n0}}\) and \({\hat{K}}_{u}^{ns} = {\hat{k}}_{u}^{\mathbf{x}_{ns} \mathbf{x}_{ns}}\) are used to simplify the notation. Then, Eq. (20) can be expanded according to the properties of vector operations, which is equivalent to

Therefore, the solution to the optimization target can be calculated by setting the first derivative of \(\hat{{\mathcal {E}}}_{nu}\) with respect to \({\hat{w}}_{nu}^{*}\) to zero, i.e.,

Hence, Eq. (22) can be reformulated as follows:

A closed-form solution to \({\hat{w}}_{nu}^{*}\) can be obtained:

which is the sub-solution of \(\hat{\mathbf{w}}_{n}^{*}\) in Eq. (8). \(\square\)
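
The derive-and-set-to-zero step above can be checked numerically on a toy problem. The sketch below is only an illustration under stated assumptions: it does not reproduce Eqs. (20) to (24), and the cost it uses (a data term, a ridge term weighted by `lam1`, and a context penalty weighted by `lam2`) is a generic, hypothetical per-element form chosen for the example. The names `objective`, `closed_form`, `check_minimizer`, `k0`, `ks`, `y`, `lam1` and `lam2` are likewise hypothetical, and the unknown `h` stands for a single complex filter element.

```python
import random


def objective(h, k0, ks, y, lam1, lam2):
    """Assumed per-element cost (hypothetical form, not taken from the paper)."""
    data_term = abs(k0 * h - y) ** 2                         # fit to the desired response
    ridge_term = lam1 * abs(h) ** 2                          # regularization on the filter
    context_term = lam2 * sum(abs(k * h) ** 2 for k in ks)   # suppress context responses
    return data_term + ridge_term + context_term


def closed_form(k0, ks, y, lam1, lam2):
    """Stationary point found by zeroing the derivative with respect to conj(h)."""
    denom = abs(k0) ** 2 + lam1 + lam2 * sum(abs(k) ** 2 for k in ks)
    return k0.conjugate() * y / denom


def check_minimizer(seed=0, trials=200, step=1e-3):
    """Return True if no random perturbation lowers the assumed cost."""
    rng = random.Random(seed)

    def rand_c():
        return complex(rng.uniform(-1, 1), rng.uniform(-1, 1))

    k0, y = rand_c(), rand_c()
    ks = [rand_c() for _ in range(4)]   # four context kernels, an arbitrary choice
    lam1, lam2 = 0.01, 0.5              # arbitrary illustrative weights
    h_star = closed_form(k0, ks, y, lam1, lam2)
    e_star = objective(h_star, k0, ks, y, lam1, lam2)
    # The assumed cost is a strictly convex quadratic in h, so every
    # perturbed point should cost at least as much as the stationary point.
    return all(
        objective(h_star + step * rand_c(), k0, ks, y, lam1, lam2) >= e_star - 1e-12
        for _ in range(trials)
    )


if __name__ == "__main__":
    print(check_minimizer())
```

Because each Fourier element is handled independently, a scalar check of this kind would be repeated for every index \(u\). The actual cost in the paper may weight or combine the terms differently.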

About this article

Cite this article

Fu, C., He, Y., Lin, F. et al. Robust multi-kernelized correlators for UAV tracking with adaptive context analysis and dynamic weighted filters. Neural Comput & Applic 32, 12591–12607 (2020). https://doi.org/10.1007/s00521-020-04716-x

DOI: https://doi.org/10.1007/s00521-020-04716-x