Abstract

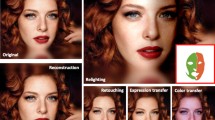

Memes, usually represented by an image of an exaggerated, expressive face captioned with short text, are increasingly produced and used online to express people’s strong or subtle emotions. Meanwhile, meme-mimicking apps keep appearing, such as the meme filming feature in the WeChat app, which allows users to imitate meme expressions. Motivated by such scenarios, we focus on transferring exaggerated or unique expressions, which have received little attention in previous work. We present a technique, “expressive style transfer”, that allows users to faithfully imitate the unique expression styles of popular memes both geometrically and texturally. To transfer exaggerated geometry without distortion, we propose a novel, accurate feature curve-based face reconstruction algorithm for 3D-aware image warping. Furthermore, we propose an identity-preserving blending model, based on a deep neural network, to enhance expressive facial texture details. We demonstrate the effectiveness of our method on a collection of Internet memes.
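The abstract only names the reconstruction step. As a generic point of reference, and explicitly not the authors' feature curve-based algorithm, the sketch below fits the coefficients of a linear morphable shape model (in the spirit of Blanz and Vetter's morphable model) to sparse 2D landmarks by ridge-regularized least squares. It assumes a known orthographic projection and scale; the function names `fit_morphable_model` and `reconstruct`, the camera model and the regularization weight are all hypothetical choices made for illustration.

```python
import numpy as np


def fit_morphable_model(landmarks_2d, mean_shape, basis, scale=1.0, reg=1e-3):
    """Fit linear shape coefficients to 2D landmarks (hypothetical sketch).

    landmarks_2d: (N, 2) observed 2D landmark positions
    mean_shape:   (N, 3) mean 3D positions of the corresponding vertices
    basis:        (K, N, 3) linear shape/expression basis
    scale:        known weak-perspective scale (assumed given, not estimated)
    reg:          ridge weight that keeps the coefficients small
    """
    num_coeffs = basis.shape[0]
    # Orthographic projection keeps x and y and drops z; flatten to (2N, K).
    A = scale * basis[:, :, :2].reshape(num_coeffs, -1).T
    # Residual between observations and the projected mean shape, (2N,).
    b = (landmarks_2d - scale * mean_shape[:, :2]).reshape(-1)
    # Normal equations of the regularized problem: (A^T A + reg*I) w = A^T b.
    lhs = A.T @ A + reg * np.eye(num_coeffs)
    return np.linalg.solve(lhs, A.T @ b)


def reconstruct(mean_shape, basis, coeffs):
    """Return the 3D shape mean_shape + sum_k coeffs[k] * basis[k], shape (N, 3)."""
    return mean_shape + np.tensordot(coeffs, basis, axes=1)


if __name__ == "__main__":
    # Synthetic check: project a known shape and recover its coefficients.
    rng = np.random.default_rng(0)
    mean = rng.normal(size=(20, 3))
    basis = rng.normal(size=(5, 20, 3))
    true_coeffs = rng.normal(size=5)
    landmarks = reconstruct(mean, basis, true_coeffs)[:, :2]
    print(np.round(fit_morphable_model(landmarks, mean, basis) - true_coeffs, 4))
```

Sparse landmarks constrain the face only at a few points, so fitting exaggerated meme expressions this way can leave contours poorly matched; that limitation is one plausible motivation for fitting denser feature curves instead, as the abstract describes.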

References

Averbuch-Elor, H., Cohen-Or, D., Kopf, J., Cohen, M.F.: Bringing portraits to life. ACM Trans. Graph. (TOG) 36(6), 196 (2017)

Bas, A., Smith, W.A., Bolkart, T., Wuhrer, S.: Fitting a 3d morphable model to edges: a comparison between hard and soft correspondences. In: Asian Conference on Computer Vision, pp. 377–391. Springer (2016)

Besl, P.J., McKay, N.D.: Method for registration of 3-d shapes. In: Sensor Fusion IV: Control Paradigms and Data Structures, vol. 1611, pp. 586–607. International Society for Optics and Photonics (1992)

Blanz, V., Vetter, T.: A morphable model for the synthesis of 3d faces. In: Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, pp. 187–194. ACM Press/Addison-Wesley Publishing Co. (1999)

Booth, J., Antonakos, E., Ploumpis, S., Trigeorgis, G., Panagakis, Y., Zafeiriou, S., et al.: 3d face morphable models in-the-wild. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2017)

Bouaziz, S., Wang, Y., Pauly, M.: Online modeling for realtime facial animation. ACM Trans. Graph. (TOG) 32(4), 40 (2013)

Cao, C., Hou, Q., Zhou, K.: Displaced dynamic expression regression for real-time facial tracking and animation. ACM Trans. Graph. (TOG) 33(4), 43 (2014)

Cao, C., Weng, Y., Lin, S., Zhou, K.: 3d shape regression for real-time facial animation. ACM Trans. Graph. (TOG) 32(4), 41 (2013)

Cao, C., Weng, Y., Zhou, S., Tong, Y., Zhou, K.: Facewarehouse: a 3d facial expression database for visual computing. IEEE Trans. Vis. Comput. Graph. 20(3), 413–425 (2014)

Choi, Y., Choi, M., Kim, M., Ha, J.W., Kim, S., Choo, J.: Stargan: unified generative adversarial networks for multi-domain image-to-image translation. arXiv preprint 1711 (2017)

Ding, L., Ding, X., Fang, C.: 3d face sparse reconstruction based on local linear fitting. Vis. Comput. 30(2), 189–200 (2014)

Fišer, J., Jamriška, O., Simons, D., Shechtman, E., Lu, J., Asente, P., Lukáč, M., Sýkora, D.: Example-based synthesis of stylized facial animations. ACM Trans. Graph. (TOG) 36(4), 155 (2017)

Frigo, O., Sabater, N., Delon, J., Hellier, P.: Video style transfer by consistent adaptive patch sampling. Vis. Comput. 35(3), 429–443 (2019)

Garrido, P., Valgaerts, L., Rehmsen, O., Thormahlen, T., Perez, P., Theobalt, C.: Automatic face reenactment. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4217–4224 (2014)

Garrido, P., Zollhöfer, M., Casas, D., Valgaerts, L., Varanasi, K., Pérez, P., Theobalt, C.: Reconstruction of personalized 3d face rigs from monocular video. ACM Trans. Graph. (TOG) 35(3), 28 (2016)

Gatys, L.A., Ecker, A.S., Bethge, M.: Image style transfer using convolutional neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2414–2423 (2016)

Geng, J., Shao, T., Zheng, Y., Weng, Y., Zhou, K.: Warp-guided GANs for single-photo facial animation. In: SIGGRAPH Asia 2018 Technical Papers, p. 231. ACM (2018)

Jackson, A.S., Bulat, A., Argyriou, V., Tzimiropoulos, G.: Large pose 3d face reconstruction from a single image via direct volumetric CNN regression. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1031–1039 (2017)

Jiang, L., Zhang, J., Deng, B., Li, H., Liu, L.: 3d face reconstruction with geometry details from a single image. arXiv preprint arXiv:1702.05619 (2017)

Jing, Y., Yang, Y., Feng, Z., Ye, J., Song, M.: Neural style transfer: a review. CoRR abs/1705.04058 (2017)

Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: European Conference on Computer Vision, pp. 694–711. Springer (2016)

Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. Int. J. Comput. Vision 1(4), 321–331 (1988)

Kemelmacher-Shlizerman, I.: Internet based morphable model. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 3256–3263 (2013)

Kim, H., Garrido, P., Tewari, A., Xu, W., Thies, J., Nießner, M., Pérez, P., Richardt, C., Zollhöfer, M., Theobalt, C.: Deep video portraits. arXiv preprint arXiv:1805.11714 (2018)

Kingma, D.P., Dhariwal, P.: Glow: generative flow with invertible 1×1 convolutions. arXiv preprint arXiv:1807.03039 (2018)

Li, C., Wand, M.: Precomputed real-time texture synthesis with Markovian generative adversarial networks. In: European Conference on Computer Vision, pp. 702–716. Springer (2016)

Li, H., Yu, J., Ye, Y., Bregler, C.: Realtime facial animation with on-the-fly correctives. ACM Trans. Graph. 32(4), 42–1 (2013)

Li, Y., Ma, L., Fan, H., Mitchell, K.: Feature-preserving detailed 3d face reconstruction from a single image. In: Proceedings of the 15th ACM SIGGRAPH European Conference on Visual Media Production, pp. 1:1–1:9. ACM, New York, NY, USA (2018)

Liao, J., Yao, Y., Yuan, L., Hua, G., Kang, S.B.: Visual attribute transfer through deep image analogy. arXiv preprint arXiv:1705.01088 (2017)

Luan, F., Paris, S., Shechtman, E., Bala, K.: Deep photo style transfer. CoRR, arXiv:1703.07511 2 (2017)

Ma, M., Peng, S., Hu, X.: A lighting robust fitting approach of 3d morphable model for face reconstruction. Vis. Comput. 32(10), 1223–1238 (2016)

Parkhi, O.M., Vedaldi, A., Zisserman, A., et al.: Deep face recognition. In: BMVC, vol. 1, p. 6 (2015)

Pumarola, A., Agudo, A., Martinez, A.M., Sanfeliu, A., Moreno-Noguer, F.: Ganimation: anatomically-aware facial animation from a single image. arXiv preprint arXiv:1807.09251 (2018)

Qiao, F., Yao, N., Jiao, Z., Li, Z., Chen, H., Wang, H.: Emotional facial expression transfer from a single image via generative adversarial nets. Comput. Anim. Virtual Worlds 29(3–4), e1819 (2018)

Reinhard, E., Ashikhmin, M., Gooch, B., Shirley, P.: Color transfer between images. IEEE Comput. Graph. Appl. 21(5), 34–41 (2001)

Romdhani, S., Vetter, T.: Estimating 3d shape and texture using pixel intensity, edges, specular highlights, texture constraints and a prior. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2005. CVPR 2005, vol. 2, pp. 986–993. IEEE (2005)

Snape, P., Panagakis, Y., Zafeiriou, S.: Automatic construction of robust spherical harmonic subspaces. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 91–100 (2015)

Snape, P., Zafeiriou, S.: Kernel-PCA analysis of surface normals for shape-from-shading. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1059–1066 (2014)

Sorkine, O., Cohen-Or, D., Lipman, Y., Alexa, M., Rössl, C., Seidel, H.P.: Laplacian surface editing. In: Proceedings of the 2004 Eurographics/ACM SIGGRAPH Symposium on Geometry Processing, pp. 175–184. ACM (2004)

Sumner, R.W., Popović, J.: Deformation transfer for triangle meshes. In: ACM Transactions on Graphics (TOG), vol. 23, pp. 399–405. ACM (2004)

Thies, J., Zollhofer, M., Stamminger, M., Theobalt, C., Nießner, M.: Face2face: Real-time face capture and reenactment of RGB videos. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2387–2395 (2016)

Wang, L., Wang, Z., Yang, X., Hu, S.M., Zhang, J.: Photographic style transfer. Vis. Comput. (2018). https://doi.org/10.1007/s00371-018-1609-4

Wu, W., Zhang, Y., Li, C., Qian, C., Loy, C.C.: Reenactgan: Learning to reenact faces via boundary transfer. arXiv preprint arXiv:1807.11079 (2018)

Yang, F., Wang, J., Shechtman, E., Bourdev, L., Metaxas, D.: Expression flow for 3d-aware face component transfer. ACM Trans. Graph. (TOG) 30(4), 60 (2011)

Zhu, X., Lei, Z., Liu, X., Shi, H., Li, S.Z.: Face alignment across large poses: A 3d solution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 146–155 (2016)

Acknowledgements

We thank the anonymous reviewers for their insightful and constructive comments, Matt Boyd-Surka for proofreading this manuscript, Jinghui Zhou, Keli Cheng and Xiaodong Gu for valuable discussions, and Yuan Yao for providing their deep image analogy result [29]. This paper was supported by the National Natural Science Foundation of China (No. 61832016) and the Science and Technology Project of Zhejiang Province (No. 2018C01080). This work was also funded in part by the Pearl River Talent Recruitment Program Innovative and Entrepreneurial Teams in 2017 under Grant No. 2017ZT07X152 and the Shenzhen Fundamental Research Fund under Grants No. KQTD2015033114415450 and No. ZDSYS201707251409055.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Tang, Y., Han, X., Li, Y. et al. Expressive facial style transfer for personalized memes mimic. Vis Comput 35, 783–795 (2019). https://doi.org/10.1007/s00371-019-01695-6

DOI: https://doi.org/10.1007/s00371-019-01695-6