Abstract

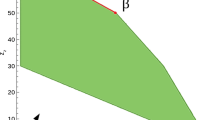

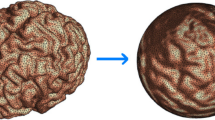

We characterize the first-order sensitivity of approximately recovering a low-rank matrix from linear measurements, a standard problem in compressed sensing. A special case covered by our analysis is approximating an incomplete matrix by a low-rank matrix. This is one customary approach to build recommender systems. We give an algorithm for computing the associated condition number and demonstrate experimentally how the number of measurements affects it. In addition, we study the condition number of the best rank-r matrix approximation problem. It measures in the Frobenius norm by how much an infinitesimal perturbation to an arbitrary input matrix is amplified in the movement of its best rank-r approximation. We give an explicit formula for its condition number, which shows that it depends on the relative singular value gap between the rth and \((r+1)\)th singular values of the input matrix.

Similar content being viewed by others

Notes

In the framework of [30], a slightly different approach is proposed where the Riemannian metric should be chosen to measure relative errors.

Equivalently, it projects the column space of A to \(U^*\), the r-dimensional subspace of left singular vectors associated to the largest r singular values.

This means that its smooth structure is compatible with the smooth structure on \(\mathbb {R}^\ell \).

Erdös’s result [44] implies that the set of points which have several infima of the distance function to \(\mathcal {S}_r\) is of Lebesgue measure zero. However, \(\mathcal {S}_r\) may not be closed, and so there can be points with no minimizer on \(\mathcal {S}_r\). The set of such points can even be full-dimensional. Think of a cusp with the node removed. Recently, explicit examples of nonclosedness were presented in [22] in the context of matrix completion.

If \(Y = U S V^T\) is a compact SVD of Y, then \(\textrm{P}_{\textrm{N}_{Y} {\mathcal {M}_r}}(A) = A - UU^T A V V^T\).

References

Candès, E.J., Romberg, J.K., Tao, T.: Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 59(8), 1207–1223 (2006). https://doi.org/10.1002/cpa.20124

Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theory 52(4), 1289–1306 (2006). https://doi.org/10.1109/TIT.2006.871582

Duarte, M.F., Eldar, Y.C.: Structured compressed sensing: from theory to applications. IEEE Trans. Signal Process. 59(9), 4053–4085 (2011). https://doi.org/10.1109/TSP.2011.2161982

Eldar, Y.C., Kutyniok, G. (eds.): Compressed Sensing: Theory and Applications. Cambridge University Press, Cambridge, UK (2012). https://doi.org/10.1017/cbo9780511794308

Foucart, S., Rauhut, H.: A Mathematical Introduction to Compressive Sensing. Birkhäuser, New York (2013). https://doi.org/10.1007/978-0-8176-4948-7

Bennett, J., Lanning, S.: The Netflix Prize. In: Proceedings of KDD Cup and Workshop (2009)

Koren, Y.: Factorization meets the neighborhood: A multifaceted collaborative filtering model. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. KDD ’08, pp. 426–434, New York (2008). https://doi.org/10.1145/1401890.1401944

Koren, Y.: Collaborative filtering with temporal dynamics. In: Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. KDD ’09, pp. 447–456, New York, NY, USA (2009). https://doi.org/10.1145/1557019.1557072

Koren, Y.: The BellKor Solution to the Netflix Grand Prize. https://netflixprize.com/assets/GrandPrize2009_BPC_BellKor.pdf

Bell, R., Koren, Y., Volinsky, C.: Matrix factorization techniques for recommender systems. Computer 42(8), 30–37 (2009). https://doi.org/10.1109/mc.2009.263

Rennie, J.D.M., Srebro, N.: Fast maximum margin matrix factorization for collaborative prediction. In: Proceedings of the International Conference of Machine Learning (2005). https://doi.org/10.1145/1102351.1102441

Saul, L.K., Weinberger, K.Q.: Unsupervised learning of image manifolds by semidefinite programming. Int. J. Comput. Vis. 70(1), 77–90 (2006). https://doi.org/10.1109/cvpr.2004.1315272

So, A., Ye, Y.: Theory of semidefinite programming for sensor network localization. Math. Program. 109(2–3, Ser. B), 367–384 (2007). https://doi.org/10.1007/s10107-006-0040-1

Linial, N., London, E., Rabinovich, Y.: The geometry of graphs and some of its algorithmic applications. Combinatorica 15(2), 215–245 (1995). https://doi.org/10.1109/sfcs.1994.365733

Guillemot, C., Le Meur, O.: Image inpainting: overview and recent advances. IEEE Signal Process. Mag. 31, 127–144 (2014). https://doi.org/10.1109/MSP.2013.2273004

Kim, J.-H., Sim, J.-Y., Kim, C.-S.: Video deraining and desnowing using temporal correlation and low-rank matrix completion. IEEE Trans. Image Process. 24, 2658–2670 (2015). https://doi.org/10.1109/tip.2015.2428933

Li, W., Zhao, L., Lin, Z., Xu, D., Lu, D.: Non-local image inpainting using low-rank matrix completion. Comput. Graph. Forum 34, 111–122 (2015). https://doi.org/10.1111/cgf.12521

Argyriou, A., Evgeniou, T., Pontil, M.: Convex multi-task feature learning. Mach. Learn. 73(3), 243–272 (2008). https://doi.org/10.2139/ssrn.1031158

Obozinski, G., Taskar, B., Jordan, M.I.: Joint covariate selection and joint subspace selection for multiple classification problems. Stat. Comput. 20(2), 231–252 (2010). https://doi.org/10.1007/s11222-008-9111-x

Eisenberg, R.: Reflections on big data and sensitivity of results. SIAM News 53, (2020)

Ferrari Dacrema, M., Boglio, S., Cremonesi, P., Jannach, D.: A troubling analysis of reproducibility and progress in recommender systems research. ACM Trans. Inform. Sys. 39(20) (2021). https://doi.org/10.1145/3434185

Tanner, J., Thompson, A., Vary, S.: Matrix rigidity and the ill-posedness of robust PCA and matrix completion. SIAM J. Math. Data Sci. 1, 537–554 (2019). https://doi.org/10.1137/18m1227846

Adcock, B., Dexter, N.: The gap between theory and practice in function approximation with deep neural networks. SIAM J. Math. Data Sci. 3, 624–655 (2021). https://doi.org/10.1137/20m131309x

Breiding, P., Vannieuwenhoven, N.: The condition number of Riemannian approximation problems. SIAM J. Optim. 31, 1049–1077 (2020) [math.NA]. https://doi.org/10.1137/20m1323527

Harris, J.: Algebraic Geometry, A First Course. Graduate Text in Mathematics, vol. 133, p. 328. Springer, New York (1992). https://doi.org/10.1007/978-1-4757-2189-8

Vandereycken, B.: Low-rank matrix completion by Riemannian optimization. SIAM J. Optim. 23(2), 1214–1236 (2013). https://doi.org/10.1137/110845768

Breiding, P., Gesmundo, F., Michalek, M., Vannieuwenhoven: Algebraic compressed sensing. ar**v:2108.13208 [math.NA] (2021)

Rice, J.R.: A theory of condition. SIAM J. Numer. Anal. 3(2), 287–310 (1966). https://doi.org/10.1137/0703023

Blum, L., Cucker, F., Shub, M., Smale, S.: Complexity and Real Computation. Springer, New York (1998). https://doi.org/10.1007/978-1-4612-0701-6

Bürgisser, P., Cucker, F.: Condition: The Geometry of Numerical Algorithms. Springer, Berlin (2013). https://doi.org/10.1007/978-3-642-38896-5

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton, NJ (2008). https://doi.org/10.1515/9781400830244

Boumal, N.: An Introduction to Optimization on Smooth Manifolds. Cambridge University Press, Cambridge (2022)

Drineas, P., Ipsen, I.C.F.: Low-rank matrix approximations do not need a singular value gap. SIAM J. Matrix Anal. Appl. 40(1), 299–319 (2019). https://doi.org/10.1137/18m1163658

Hackbusch, W.: New estimates for the recursive low-rank truncation of block-structured matrices. Numer. Math. 132, 303–328 (2016). https://doi.org/10.1007/s00211-015-0716-7

Halko, N., Martinsson, P.G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011). https://doi.org/10.1137/090771806

Bebendorf, M., Rjasanow, S.: Matrix Compression for the Radiation Heat Transfer in Exhaust Pipes, pp. 183–192 (2000). https://doi.org/10.1007/978-3-662-04015-7_20

Hua, Y., Sarkar, T.K.: A perturbation theorem for sensitivity analysis of SVD based algorithms. In: Proceedings of the 32nd Midwest Symposium on Circuits and Systems, pp. 398–401 (1989). https://doi.org/10.1109/MWSCAS.1989.101875

Vu, T., Chunikhina, E., Raich, R.: Perturbation expansions and error bounds for the truncated singular value decomposition. Linear Algebra Appl. 627, 94–139 (2021). https://doi.org/10.1016/j.laa.2021.05.020

Feppon, F., Lermusiaux, P.F.J.: A geometric approach to dynamical model order reduction. SIAM J. Matrix Anal. Appl. 39, 510–538 (2018). https://doi.org/10.1137/16m1095202

Helmke, U., Moore, J.B.: Optimization and Dynamical Systems. Springer, London (1994). https://doi.org/10.1007/978-1-4471-3467-1

Lee, J.M.: Introduction to Smooth Manifolds, 2nd edn. Springer, New York (2013). https://doi.org/10.1007/978-1-4419-9982-5

Baraniuk, R.G., Cevher, V., Wakin, M.B.: Low-dimensional models for dimensionality reduction and signal recovery: a geometric perspective. Proc. IEEE 98(6), 959–971 (2010). https://doi.org/10.1109/JPROC.2009.2038076

Baraniuk, R.G., Wakin, M.B.: Random projections of smooth manifolds. Found. Comput. Math. 9(1), 51–77 (2009). https://doi.org/10.1007/s10208-007-9011-z

Erdös, P.: Some remarks on the measurability of certain sets. Bull. Am. Math. Soc. 52, 107–109 (1945). https://doi.org/10.1090/s0002-9904-1945-08429-8

Lee, J.M.: Introduction to Riemannian Manifolds. Springer, Heidelberg (2018). https://doi.org/10.1007/978-3-319-91755-9

do Carmo, M.: Riemannian Geometry. Birkhäuser, Boston, MA (1993)

Petersen, P.: Riemannian Geometry, 2nd edn. Graduate Texts in Mathematics, vol. 171, p. 401. Springer, New York (2006). https://doi.org/10.1007/978-0-387-29403-2

O’Neill, B.: Semi-Riemannian Geometry: With Applications to Relativity. Academic Press, Cambridge (1983)

O’Neill, B.: Elementary Differential Geometry, Revised second edition edn. Academic Press, Cambridge (2006). https://doi.org/10.1090/chel/341/01

Amelunxen, D., Bürgisser, P.: Intrinsic volumes of symmetric cones and applications in convex programming. Math. Program. 149 Ser. A, 105–130 (2015). https://doi.org/10.1007/s10107-013-0740-2

Eckart, C., Young, G.: The approximation of one matrix by another of lower rank. Psychometrika 1(3), 211–218 (1936). https://doi.org/10.1007/BF02288367

Absil, P.-A., Mahony, R., Trumpf, J.: An extrinsic look at the Riemannian Hessian. In: Geometric Science of Information. Lecture Notes in Computer Science, pp. 361–368. Springer, Berlin (2013). https://doi.org/10.1007/978-3-642-40020-9_39

Horn, R.A., Johnson, C.R.: Matrix Analysis, 2nd edn. Cambridge University Press, New York (2012)

Acknowledgements

We thank Sebastian Krämer for valuable feedback that led to several improvements in the presentation of our results. In particular, formulating Example 1 by formalizing the introductory example was suggested by him. We thank Joeri Van der Veken for interesting discussions and suggestions on computing second fundamental forms. We also thank three reviewers and the editor for their plentiful questions and comments that led to substantial improvements throughout this paper. Paul Breiding is supported by the Deutsche Forschungsgemeinschaft (DFG), Projektnummer 445466444. Nick Vannieuwenhoven was partially supported by a Postdoctoral Fellowship of the Research Foundation—Flanders (FWO) with project 12E8119N, and partially by the KU Leuven BOF STG/19/002 (Tensor decompositions for multimodal big data analytics) grant from the Belgian Science Policy Office.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Proof of Lemma 4

Proof of Lemma 4

We restate Lemma 4 in terms of vector fields, as required by the proof, and then we prove it.

Lemma 4 (The second fundamental form under affine linear diffeomorphisms)

Consider Riemannian embedded submanifolds \(\mathcal {U}\subset \mathbb {R}^N\) and \(\mathcal {W} \subset \mathbb {R}^\ell \) both of dimension s. Let \(L : \mathbb {R}^N \rightarrow \mathbb {R}^\ell , Y\mapsto M(Y)+b\) be an affine linear map that restricts to a diffeomorphism from \(\mathcal {U}\) to \(\mathcal {W}\). For a fixed \(Y\in \mathcal {U}\) let \(\mathcal {E} =(E_1,\ldots , E_s)\) be a local smooth frame of \(\mathcal {U}\) in the neighborhood of Y. For each \(1\le i\le s\) let \(F_i\) be the vector field on \(\mathcal {W}\) that is \(L\vert _{\mathcal {U}}\)-related to \(E_i\). Then \(\mathcal {F} = (F_1,\ldots ,F_s)\) is a smooth frame on \(\mathcal {W}\) in the neighborhood of \(X=L(Y)\), and we have

Herein, \( I\!I _Y \in \textrm{T}_{Y} {\mathcal {U}} \otimes \textrm{T}_{Y} {\mathcal {U}} \otimes \textrm{N}_{Y} {\mathcal {U}}\) should be viewed as an element of the tensor space \((\textrm{T}_{Y} {\mathcal {U}})^* \otimes (\textrm{T}_{Y} {\mathcal {U}})^* \otimes \textrm{N}_{Y} {\mathcal {U}}\) by dualization relative to the Riemannian metric, and likewise for \( I\!I _{X}\).

Proof

Our assumption of \(L\vert _{\mathcal {U}}:\mathcal {U} \rightarrow \mathcal {W}\) being a diffeomorphism implies that \(\mathcal {F}\) is a smooth frame. Let \(1\le i,j\le s\), and \(\varepsilon _i(t) \subset \mathcal {U}\) be the integral curve of \(E_i\) starting at Y (see [41, Chapter 9]). Let \(\phi _i(t) = L(\varepsilon _i(t))\) be the corresponding integral curve on \(\mathcal {W}\) at \(X=L(Y)\). Since \(F_j\) is \(L\vert _{\mathcal {U}}\)-related to \(E_j\), we have

where \(\textrm{d}_{\varepsilon _i(t)}L\vert _{\mathcal {U}}\) is the derivative \(\textrm{d}_{\varepsilon _i(t)}L: \mathbb {R}^N\rightarrow \mathbb {R}^\ell \) of L at \(\varepsilon _i(t)\) restricted to the tangent space \(\textrm{T}_Y\mathcal {U}\subset \mathbb {R}^N\). On the other hand, \(\textrm{d}_{\varepsilon _i(t)}L\ = M \), so that

The fact that the derivative of L is constant is the key part in the proof. Interpreting \(F_{j}\vert _{\phi _i(t)}\) as a smooth curve in \(\textrm{T}_{\phi _i(t)} {\mathbb {R}^\ell } \simeq \mathbb {R}^\ell \) and \(E_j\vert _{\varepsilon _i(t)}\) as a smooth curve in \(\textrm{T}_{\varepsilon _i(t)} {\mathbb {R}^N} \simeq \mathbb {R}^N\), we can take the usual derivatives at \(t=0\) on both sides of (26):

Recall that \(Y=\varepsilon _i(0)\). We can decompose the right hand side into tangent and normal part at \(Y\in \mathcal {U}\), so that

Observe that that \(M(\textrm{T}_{Y} {\mathcal {U}}) = \textrm{T}_{X} {\mathcal {W}}\), where \(X=L(Y)\). Projecting both sides to the normal space of \(\mathcal {W}\) at X yields

The claim follows by applying the Gauss formula for curves [45, Corollary 8.3] on both sides. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Breiding, P., Vannieuwenhoven, N. Sensitivity of low-rank matrix recovery. Numer. Math. 152, 725–759 (2022). https://doi.org/10.1007/s00211-022-01327-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00211-022-01327-7