Abstract

Background

Despite the potential for Early Care and Education (ECE) settings to promote healthy habits, a gap exists between current practices and evidence-based practices (EBPs) for obesity prevention in childhood.

Methods

We will use an enhanced non-responder trial design to determine the effectiveness and incremental cost-effectiveness of an adaptive implementation strategy for Together, We Inspire Smart Eating (WISE), while examining moderators and mediators of the strategy effect. WISE is a curriculum that aims to increase children’s intake of carotenoid-rich fruits and vegetables through four evidence-based practices in the early care and education setting. In this trial, we will randomize sites that do not respond to low-intensity strategies to either (a) continue receiving low-intensity strategies or (b) receive high-intensity strategies. This design will determine the effect of an adaptive implementation strategy that adds high-intensity versus one that continues with low-intensity among non-responder sites. We will also apply explanatory, sequential mixed methods to provide a nuanced understanding of implementation mechanisms, contextual factors, and characteristics of sites that respond to differing intensities of implementation strategies. Finally, we will conduct a cost effectiveness analysis to estimate the incremental effect of augmenting implementation with high-intensity strategies compared to continuing low-intensity strategies on costs, fidelity, and child health outcomes.

Discussion

We expect our study to contribute to an evidence base for structuring implementation support in real-world ECE contexts, ultimately providing a guide for applying the adaptive implementation strategy in ECE for WISE scale-up. Our work will also provide data to guide implementation decisions of other interventions in ECE. Finally, we will provide the first estimate of relative value for different implementation strategies in this setting.

Trial registration

NCT05050539; 9/20/21.

Background

Excess weight is linked with higher risk of 13 cancers [1], and the US has the highest rate of cancer attributable to body mass index (BMI) [2]. Dietary habits and weight trajectories in early life predict later health outcomes [3, 4]; thus, obesity prevention efforts must target young children. Specifically, children are 5 times more likely to be overweight or obese in adulthood if they are overweight in preschool [5]. On average, 60% of US children under age 5 have at least 1 non-parental childcare arrangement per week [6]. This equates to about 15 million children. Children spend 36 h a week in ECE settings, on average [7]. Thus, the early care and education (ECE) environment may be a prime setting to contribute to obesity prevention. Despite the potential for ECE to promote healthy habits, a gap exists between current practices and evidence-based practices (EBPs) [8].

Consistent with World Cancer Research Fund (WCRF) recommendations [9], Together, We Inspire Smart Eating (WISE) aims to increase children’s intake of carotenoid-rich fruits and vegetables (FV). WISE was co-developed with end users to meet the curricular and budgetary needs of the ECE context [10, 11] and is included in the US Department of Agriculture SNAP-Ed toolkit [12]. Research supports each WISE EBP: (1) multiple hands-on exposures to FV support food acceptance [13,14,15,16,17,18,19]; (2) role modeling by educators allows children to observe a trusted adult eating FV [20,21,22]; (3) positive feeding practices support children’s self-regulation [22,23,24]; and (4) mascot use associates a familiar character with FV [25,26,27,28,29,30]. Each EBP aligns with the Academy of Nutrition and Dietetics’ “Benchmarks for Nutrition in Childcare.” [31] Evidence also supports WISE as a whole [32, 33]. Compared to usual education, WISE increased FV intake [32] (8% increase in healthy carotenoid levels; 4% decrease in unhealthy range) [33]. Also consistent with WCRF guidance [9], parents reported significantly decreased fast food and sugar-sweetened beverages intake after a year of WISE [32, 34]. Thus, WISE has a positive impact in areas related to child obesity and adult cancer risk.

Standard approaches to WISE implementation have resulted in challenges and suboptimal fidelity to EBPs [35]. Little research exists to guide solutions. For example, although studies have demonstrated that implementation strategies can promote policy implementation (e.g., menu offerings) and improve the environment (e.g., access to water) [36], few studies have assisted educators to implement EBPs in ECE [37]. Further, no available studies report on implementation mechanisms in ECE [37, 38] (how and why strategies work for whom) or on cost-effectiveness of implementation strategies in ECE. Thus, practitioners lack data to drive decisions on EBP implementation in ECE. A prior small-scale trial by the study team demonstrated that stakeholder-selected implementation strategies were successful at improving fidelity to WISE EBPs, organizational readiness for change, and perceived appropriateness of the intervention [39]. This strategy cost $261 per classroom beyond intervention costs. These data suggest the package of stakeholder-selected strategies was effective for improving WISE EBP implementation. Yet, we do not know if all sites require all strategies to succeed. Scaling all strategies to all sites may be too resource- and time-intensive for wide dissemination [40].

The integrated Promoting Action on Research Implementation in Health Services (i-PARIHS) framework posits that components of successful implementation include characteristics of the innovation (the EBPs), recipients, context, and facilitation (i.e., implementation support) [41]. Successful implementation takes place when facilitation promotes the acceptance and use of an innovation based on the recipients’ and context’s needs. Facilitation exists along a continuum [42]. On one end, task-focused support provides technical and practical help. On the other end, holistic facilitation provides enabling support to cultivate shared meaning, connected networks, and personal development [42]. A central tenet of i-PARIHS is that successful implementation requires different levels and kinds of facilitation depending on characteristics of the innovation, the context, and recipients. The i-PARIHS framework guides our proposal in several ways. Our prior work identified determinants of WISE EBP implementation by applying i-PARIHS. These determinants guided engagement with stakeholders to select and tailor the proposed implementation strategies. Stakeholders prioritized facilitation as a key strategy to improve WISE EBP implementation, and we will tailor facilitation to reflect recipient and contextual needs. While i-PARIHS is ideal to inform implementation strategy tailoring, research has not tested it in this way. Further, i-PARIHS has received limited tests of underlying mechanisms [43,44,45], with most studies in health care [46, 47].

Consistent with i-PARIHS, adaptive implementation strategies reflect that a one-size-fits-all approach may not serve all settings well [48]. Not all sites may need all strategies; giving sites more than they need is expensive and wasteful. An adaptive implementation strategy provides decision points and tailoring variables to optimize resources. Table 1 presents the design features of an adaptive implementation strategy. In sum, an adaptive implementation strategy provides a “replicable guide” for who gets what implementation support and when [49]. Such a guide, if effective for optimizing implementation, would provide practical information for serving settings like ECE with limited resources.

The overall objectives of this study are to determine the effectiveness and cost-effectiveness of an adaptive implementation approach to improve adoption of the EBPs of WISE while also examining implementation mechanisms. Using a mixed methods enhanced non-responder trial, we will execute the following aims:

Specific aim 1. Determine the effectiveness of an adaptive implementation strategy that tailors the intensity of implementation support versus a low-intensity strategy

We will compare the effect of continuing low-intensity strategies vs. augmenting with high-intensity strategies. We hypothesize that sites receiving high-intensity strategies will outperform sites continuing the low-intensity strategies on the primary outcome of intervention fidelity and on secondary child health outcomes.

Specific aim 2. Examine moderators and mediators of implementation outcomes in a mixed-methods design

We will test organizational readiness and teacher experience as moderators of response to the implementation strategies. We will test educators’ perceptions of barriers, local implementation climate, and implementation leadership as mediators of the effect of the strategies on implementation outcomes.

Specific aim 3. Assess the incremental cost-effectiveness of the adaptive implementation strategy

We will estimate the cost per unit of fidelity associated with the adaptive implementation strategy versus continuing low-intensity strategies. Results will also determine the incremental cost-effectiveness of applying the adaptive implementation strategy for improving BMI and other child health outcomes.

Methods

Study design

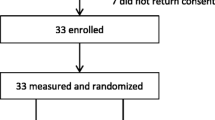

We will use an enhanced non-responder trial [48] design to determine the effectiveness (Aim 1) and incremental cost-effectiveness (Aim 3) of an adaptive implementation strategy for WISE, while examining moderators and mediators of the strategy effect (Aim 2). In this trial, we will randomize sites that do not respond to low-intensity strategies to either (a) continue receiving low-intensity strategies or (b) receive high-intensity strategies (See Fig. 1). We will also use an explanatory, sequential mixed methods design (QUANT→qual) to provide a nuanced understanding of implementation mechanisms and contextual factors (Aim 2) [50, 51].

Decisions about key elements of our adaptive design were made based on stakeholder input. That is, educators who had received the intensive strategy package shared input through semi-structured interviews (N = 10). These interviews helped to delineate the low- and high-intensity packages, define the tailoring variable, define non-response, and adjust timing of delivery of strategies and assessment of need for tailoring. The resulting adaptive implementation strategy package is specified as recommended by Proctor et al. [52] (Table 2). Low-intensity strategies include those that all sites receive at the beginning. High-intensity strategies are added at non-responder sites. Specifically, the facilitator receives data from the fidelity observation to coach and guide the educators in behaviors needed to support non-responders in achieving fidelity to EBPs.

Community partnership is key to reducing cancer-related health disparities [66, 67]. To this end, we will draw on Evidence-Based Quality Improvement (EBQI) methods throughout our study [68,69,70]. This process will develop researcher–stakeholder partnerships for joint decision making [68,69,70,71,72,73,74], consistent with Community-Engaged Dissemination and Implementation principles [75]. Our EBQI panel will include educators, directors, and staff from ECE as well as state policy leaders who can inform WISE scale-up. For example, at least 3 professional networks could use the adaptive approach we develop: Child and Adult Care Food Programs (CACFP) sponsors, Childcare Resource and Referral (CCR&R) agents, and United States Department of Agriculture (USDA) Cooperative Extension agents. The panel will meet 3 times per year and provide input into study recruitment, roll out of the study protocol, interpretation of findings, and future planning. We will also disseminate our results to participants and stakeholders through infographic-style summaries and presentations at community events.

Setting

Site selection

Sites will be from 4 geographic regions: Central AR, AR River Valley, North Central LA, and Southeast LA. A site is one ECE location; a site may have multiple classrooms with up to 20 children per classroom. A director provides leadership at each site; educators implement WISE in their classrooms. Eligible sites are those within a 100-mile radius of staff offices that participate in CACFP and the state’s quality rating system, serve 15+ children ages 3 to 5 years, agree to participate in data collection, and are not currently using WISE. We will exclude sites unwilling to adopt WISE for all classrooms. Focusing on CACFP will maximize equitable reach, generalizability, and study impact. Most importantly, CACFP is a federal system that serves 3 million children per year [76], “targeting benefits to those children most in need.” [77]

We will recruit 80 sites, 40 in Arkansas (AR) and 40 in Louisiana (LA). We expect recruited sites will be diverse in Quality Rating and Improvement Systems scores [78], and we will model ratings in our analyses. We will recruit sites in 3 cohorts, 25–28 sites per year in 3 school years (across Y1-Y4). We will pool data across years for analyses, and we will include cohort assignment as a control variable. A cohort-based design will allow us to limit the number of sites to 4 or fewer per facilitator per year, a number for which prior work indicates the greatest effect [46].

Classroom and child inclusion

All classrooms at a site will receive the same implementation strategies and participate in data collection. This reflects stakeholder input that sites would not participate unless all classrooms are treated equally. For primary analyses, we will include only classrooms that are non-responders (i.e. responding classrooms at non-responding sites will be excluded). In this way, we avoid contaminating analyses with classrooms that respond to the low-intensity strategy against the site trend. Based on our ECE experience, we expect 3 non-responding classrooms per site, on average; we have powered accordingly. Thus, we plan to include 192 total classrooms at 64 sites in our primary analyses. We will select one classroom at random per site to participate in collection of child outcomes (N = 64 classrooms, 15 children per classroom = 960 children total). Parents will provide consent.

Site randomization

The study will be cluster-randomized at the site level; we will randomize non-responder sites (i.e., those where < 60% of classrooms are meeting fidelity standards). In our prior small-scale implementation trial, about 10% of classrooms were responders by the fall assessment (achieved fidelity to 3 of 4 EBPs). Considering these factors and that this study is larger and more diverse than our prior work, we anticipate at least 20% of sites will be responders after 2 months, leaving 80% of sites as non-responders for randomization. Higher non-response rates would improve statistical power. Sites will be randomized in a 1:1 ratio to the low-intensity or high-intensity strategies consistent with procedures of minimization and balancing [79, 80]. First, the balance between groups on potential confounding factors (e.g., site size, number of non-responder classrooms, demographics) will be examined. Then, we will randomly select one balanced assignment from the list of random assignments, which will balance sites on key factors while preserving advantages of randomly assigning sites [79, 80].
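The classification and balanced allocation steps described above can be sketched as follows. This is a minimal illustration, not the trial's actual procedure: it assumes a site counts as a non-responder when fewer than 60% of its classrooms meet fidelity standards, it balances on a single covariate (site size), and the imbalance tolerance `max_gap` is an arbitrary choice. All function names and data are hypothetical.

```python
import random
from statistics import fmean


def is_non_responder_site(classroom_meets_fidelity, threshold=0.60):
    """Assumed rule: non-responder if under 60% of classrooms meet fidelity."""
    share = sum(classroom_meets_fidelity) / len(classroom_meets_fidelity)
    return share < threshold


def balanced_allocation(site_sizes, n_candidates=1000, max_gap=5.0, seed=0):
    """Generate random 1:1 splits, keep the balanced ones, pick one at random.

    site_sizes maps a site id to a covariate value (here, site size).
    max_gap is a hypothetical tolerance on the difference in arm means.
    """
    rng = random.Random(seed)
    ids = sorted(site_sizes)
    half = len(ids) // 2
    acceptable = []
    for _ in range(n_candidates):
        shuffled = ids[:]
        rng.shuffle(shuffled)
        high, low = shuffled[:half], shuffled[half:]
        gap = abs(fmean([site_sizes[s] for s in high])
                  - fmean([site_sizes[s] for s in low]))
        if gap <= max_gap:
            acceptable.append((high, low))
    if not acceptable:
        raise ValueError("no candidate allocation met the balance tolerance")
    return rng.choice(acceptable)


# Hypothetical non-responder sites and their sizes (number of children).
sites = {"a": 20, "b": 22, "c": 40, "d": 42, "e": 60, "f": 58}
high_arm, low_arm = balanced_allocation(sites)
```

Selecting at random among the acceptable splits keeps the assignment random while limiting imbalance on the chosen covariate; a real application would check balance on several covariates at once.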

Aim 1

Aim 1 measures

Table 3 presents the Aim 1 data collection plan. Measures align with Proctor’s Outcomes for Implementation Research taxonomy [81]. The school year calendar informs measurement timing. The primary outcome is fidelity to the WISE EBPs at the classroom level, using the WISE fidelity observational measure [82]. The measure includes 2 to 3 items per EBP on a 1 to 4 scale to receive an average, continuous fidelity score with 4 representing the highest fidelity. For each item, values are anchored to concrete, observable behaviors. Trained and field-reliable staff blinded to the study condition will collect fidelity data consistent with published protocols [77]. Secondary implementation outcomes are adoption as well as acceptability, feasibility, and appropriateness of WISE and the implementation strategies [61]. We will collect secondary outcomes through self-report from educators on the schedule in Table 3. The WISE delivery survey [35] captures the number/content of lessons delivered and material dissemination to parents. In the next school year, we will assess EBP sustainment (i.e., delivery and fidelity 12–18 months after initial implementation).

To measure the effect on child health outcomes, we will use Resonance Raman Spectroscopy (RRS), which measures skin carotenoid levels as a biomarker for colorful FV intake [62] with an optical hand scan [63, 83]. RRS reflects intake over the prior 4 weeks and is sensitive to individual differences and experimental changes [64, 85]. Trained staff will assess BMI with a standardized protocol [65] and interpret the data with 2000 CDC growth charts [86]. Finally, we will observe children’s target food intake with a standardized protocol used by our team in prior studies [84]. We will weigh food portions (to the nearest 0.1 g) before and after observation.

Implementation processes

First, site leadership will meet with WISE facilitators to discuss the formal commitment and implementation blueprint. Next, all staff will receive WISE training. At training, educators will receive the “reminder cutting board,” showing the 4 WISE EBPs for use during lessons. Next, sites will select a “champion” to be a liaison between the site and WISE facilitator. Champions receive standardized training to navigate WISE implementation before September.

In the low-intensity group, WISE facilitators will provide monthly task-focused facilitation targeted to site directors and champions. Facilitators in the low-intensity group will monitor implementation, identify and solve problems related to contextual barriers, and assist with navigating structural changes needed for WISE. In the high-intensity group, WISE facilitators will provide enabling, holistic facilitation tailored to the needs of the educators twice per month and more upon request. Facilitation in the high-intensity group will support educators in a one-on-one fashion, helping to set goals, fostering peer networking, developing shared vision among leaders and staff, and building meaningful relationships that support change efficacy. This will include the provision of tailored educational materials and coaching based on observed fidelity reports.

Each study region will have 2 trained facilitators with experience in the ECE setting and/or WISE. Further, all facilitators will receive standard training and toolkits (e.g., sample scripts, testimonials, motivational interview examples). This is based on the Veterans Health Administration Implementation Facilitation Training [87], which 2 study staff completed in 2019. This training has been adapted for WISE and condensed for delivery in a 4-h session. After training, new facilitators will accompany experienced facilitators for 2 field visits to observe. The new facilitators will then lead at least 2 visits with support and feedback from the experienced facilitator. Facilitators will take part in monthly reflective supervision calls led by the PI, aimed at processing experiences in the field, sharing lessons learned, and collaborating on ideas for supporting sites and teachers. All facilitators will log their activities (e.g., visits, calls, emails, champion contacts). The PI will compare the facilitator logs against the core implementation facilitation activities checklist for fidelity monitoring [88] and provide corrective guidance as needed.

Aim 1 analyses

We will manage data with REDCap [89], a secure, web-based electronic data capture tool hosted at UAMS. For Aim 1 analyses, analysts will examine data for missing values, extreme scores, and variable distributions. We expect missing values on the primary outcome to be minimal because study staff will collect these data. If the missing values percentage exceeds 5% [90], we will use an appropriate method for using all available data such as multiple imputation or full information maximum likelihood (FIML) estimation for analyses. For our primary analysis, we will use linear mixed-effects regression models [91] to test for group differences in fidelity outcomes at the school year end, while accounting for classroom nesting within site. Covariates will include state, site size, cohort, turnover rate, October fidelity, quality rating, and demographics. The statistical significance of the treatment group predictor (α = .05) will be used to determine significant differences in fidelity outcomes for the low- vs high-intensity groups. Additional analyses will include repeated outcomes from all time points to test for treatment group differences across time and time-by-treatment effects. We will repeat these analyses for secondary implementation outcomes. We will also examine child-level outcomes using linear mixed-effects regression models, which account for a child’s nesting within classrooms and sites. Parallel to primary analyses, we will first test treatment group differences at the spring assessment and then examine treatment and time-by-treatment effects using all time points. For all analyses, a significant, positive effect of treatment group will support the effectiveness of applying high-intensity strategies at sites that do not respond to low-intensity strategies initially. An exploratory analysis will describe the number of sites that were early responders and maintained response until the April assessment (versus regressing to non-responders over time).

For power analysis, we used Optimal Design software [92] to accommodate the clustered design of classrooms nested in sites. Our estimated sample size is based on the primary fidelity outcome and is analogous to powering a 2-arm randomized controlled trial. We have powered our study to detect a practically meaningful 1-point difference on our fidelity scale: 1 point would differentiate an educator who implements a practice only somewhat (e.g., score of 2) from an educator who implements a practice to a significant degree (e.g., score of 3). Based on standard deviations from our prior small-scale trial, a 1-point difference would yield Cohen’s d effect sizes between .83 (Mascot) and 1.68 (Role Model). Assuming 64 non-responder sites (assigned 1:1) with an average of 3 non-responding classrooms per site (192 classrooms), an intraclass correlation coefficient (ICC) of 0.20 (the largest previously observed), and 2-sided α = .05, we will have 80% power to detect an effect size of d = .49 or larger. We do not anticipate site-level attrition, but even with ~ 20% attrition (N = 50 sites), we would have 80% power to detect an effect size of d = .56 or greater. Assuming one randomly chosen classroom per site, 15 children per classroom (N = 64 × 15 = 960), a 0.10 ICC (largest observed child-level ICC in the prior small-scale trial), and 2-sided α = .05, we will have 80% power to detect an effect size of d = .29 or larger for child-level outcomes, which corresponds to an effect size between small and medium [93].
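As a rough cross-check of these figures, the minimum detectable effect size for a 2-arm cluster-randomized design with J sites, n units per site, and intraclass correlation ρ can be approximated as d ≈ (z₁₋α/₂ + z_power) × √(4(ρ + (1 − ρ)/n)/J). The sketch below applies that normal approximation; it is not the Optimal Design computation, which relies on the t distribution, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def min_detectable_effect(n_sites, per_site, icc, alpha=0.05, power=0.80):
    """Normal-approximation MDES for a balanced 2-arm cluster-randomized trial."""
    z = NormalDist()
    multiplier = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    # Variance of the standardized difference in means across two equal arms.
    variance = 4 * (icc + (1 - icc) / per_site) / n_sites
    return multiplier * sqrt(variance)


classroom_level = min_detectable_effect(64, 3, 0.20)   # 64 sites, 3 classrooms each
with_attrition = min_detectable_effect(50, 3, 0.20)    # 50 sites after attrition
child_level = min_detectable_effect(64, 15, 0.10)      # 15 children per site
```

Under these assumptions the approximation gives roughly 0.48, 0.54, and 0.28, slightly below the .49, .56, and .29 reported above, which is the expected direction when the normal distribution stands in for the t distribution.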

Aim 2

Aim 2 quantitative measures

Quantitative analyses will test 2 moderators and 3 mediators specified a priori (See Fig. 2). During the baseline period (prior to October), educators at participating sites will complete assessments of potential moderators and mediators. Educators will also complete surveys mid-year and at the school year end to assess proposed mediators. We expect 2 educators per classroom to complete the survey, 6 per site on average. This follows the best practice of assessing moderators before randomization [94, 95] and assessing mediators at a minimum of three points in time [96, 97]. Further, our design includes key features to establish causal inference including temporality and experimental manipulation of dosages of facilitation [98]. Educator responses will reflect site experiences, and we will aggregate educator responses to the site level for analyses. The research team will collect these data in person with paper surveys or emailed survey links (reflecting technology access and use in ECE). We will capture data with REDCap for secure storage. All classroom staff (lead and assistants) will complete assessments.

Moderation

Moderation measures will include The Organizational Readiness to Change Assessment (ORCA) [99], which we adapted and tested in ECE in our prior work. For this study, we will focus on the context subscale, which is consistent with our focal moderator and based on i-PARIHS. The baseline survey will also capture educator background including years of experience and type/ frequency of prior nutrition and feeding training to create a composite educator experience variable.

Mediation

First, we hypothesize that facilitation will decrease the perceived barriers to implementation (e.g., by helping to identify problems and solutions) [42]. Second, we expect that facilitation will improve implementation climate (e.g., by developing shared meaning [42], assisting with boundary navigation, and supporting role clarity [42, 100]). Finally, we hypothesize that facilitation will improve implementation leadership and the knowledge and behaviors leaders leverage to support EBP implementation [101] (e.g., by navigating group interests, modeling empowerment, and building organizational structures [42, 100]). Based on the recommended process by Lewis et al. [102], Fig. 2 presents our proposed model that links facilitation to the proposed mediators (proximal outcomes) and targeted implementation outcomes (distal outcomes). Facilitation (e.g., dose, target) will be measured using the facilitation logs described in Aim 1; however, we will conceptualize facilitation dichotomously for analyses (high- and low-intensity). We will apply widely used and validated measures of proposed mechanisms, including the Implementation Climate Scale [103] and Implementation Leadership Scale [101], recently adapted for educational settings [104]. The perceived barriers measure [105], a checklist of challenges educators reported in our formative work, was used in our prior work.

Aim 2 quantitative data analyses

Analyses will include 64 sites randomized (1:1) to the low- or high-intensity strategies. Moderator analyses will be conducted using mixed effects logistic regression models with a treatment main effect (low- vs high-intensity), the moderator main effect (organizational readiness and educator experience), and the interaction between the two. The interaction term significance (α = .05) will be evaluated to test moderation. Models will account for the classroom nesting within sites and include controls for state, quality rating, and key demographics. For mediation analyses, we will test a multilevel, multiple mediator model in a Structural Equation Modeling (SEM) framework [106] to account for students clustering in classrooms and classrooms within sites. That is, all 3 mediators will be tested simultaneously. Specifying a multiple mediator model is less biased than testing single mediators one at a time [106,107,108]. Using 95% bootstrap confidence intervals [53], significance tests in SEM are also less biased than sequential hypothesis testing approaches to mediation tests [109]. Using data at three time points, we will be able to model that X (independent variable) precedes M (mediator) in time, and M precedes Y (dependent variable) in time; prior levels of M and Y can be controlled.

Statistical power for secondary moderation and mediation analyses

For moderator analyses, we will have 80% power to detect a Cohen’s f² of .13, which falls between a small (f² = .02) and medium (f² = .15) effect size [93]. In mediation analyses, the indirect effect is the product of 2 regression coefficients and is not distributed normally, which poses a challenge to power calculations [110]. However, Fritz and MacKinnon [111] recommend a bias-corrected bootstrap method for the indirect effect that, in our sample size of 64 sites, would provide 80% power to detect an indirect effect composed of 2 large-sized constituent effects, or a mix of large-sized and medium-sized effects.

Aim 2 qualitative measures

Qualitative methods will provide detail and elaborate on potential additional candidate moderators and mediators at a subset of purposively selected sites based on response type. We will use qualitative data to provide detailed understanding of response to low- and high-intensity strategies. Specifically, quantitative data from the enhanced non-responder trial will identify 5 categories of response to implementation strategies (Fig. 1): (1) early responders to low-intensity (by October), (2) late responders to low-intensity (by school year end), (3) non-responders to low-intensity, (4) responders to high-intensity, and (5) non-responders to high-intensity. Through purposive site visits, we will collect qualitative data within each response type. We expect to target 3 sites per response type to reach a total sample of 15 sites (split across state and study years). We expect to reach saturation with 15 sites, but we are prepared to increase to 20 if needed to reach saturation. During site visits, the research team will conduct semi-structured, key informant interviews with directors and focus groups with educators (4 to 6 educators per Krueger [50, 112]). This format is cost-effective and will allow educators to share experiences (independent of directors) [50]. Director interviews and educator focus groups will elicit perceived reasons why the strategies worked (or failed) at their site, practical strategies of leadership support, and relevant factors in the implementation climate. Concepts from the i-PARIHS framework will inform interview and focus group guides (Table 4). Additionally, the research team will capture field notes of the site activities, processes, and interactions that may influence response to the strategies.

Aim 2 qualitative data analyses

Qualitative analyses will focus on identifying similarities and differences between site response types. Transcripts will be matched with observed field notes and coded using directed content analysis [113]. The i-PARIHS framework will provide a template of sensitizing concepts to label significant, recurrent ideas [114], particularly ideas that suggest emergent candidate mediators and moderators. We will incorporate inductive codes as we identify additional salient factors [115]. Primary and secondary coders (at least 1 each from AR and LA) will code the same transcripts until inter-rater reliability is established. Minimum reliability will be set at Kappa of 0.75, which reflects excellent agreement between coders [116]. Coding will be independent after establishing reliability. Coders will hold weekly meetings to discuss iterative expansions to the codebook, to reach consensus about unclear codes and to document tentative patterns in the data. A third-party team member will resolve disagreements [117]. Participants and stakeholders will review site-level summaries of findings. We will conduct analyses of qualitative interviews yearly and use findings to revise the interview guide for subsequent interviews (e.g., identify probing needs, generate new questions).
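The inter-rater reliability check can be illustrated with a small computation of Cohen's kappa for two coders, κ = (pₒ − pₑ)/(1 − pₑ), where pₒ is observed agreement and pₑ is agreement expected by chance. This is a sketch that assumes one code per transcript segment; the code labels, data, and function name are hypothetical.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two coders who each assign one code per segment."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must rate the same non-empty set of segments")
    n = len(codes_a)
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    # Chance agreement: product of each coder's marginal rate, summed over codes.
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned to four transcript segments.
coder_a = ["climate", "climate", "leadership", "leadership"]
coder_b = ["climate", "climate", "leadership", "climate"]
kappa = cohens_kappa(coder_a, coder_b)
meets_threshold = kappa >= 0.75  # minimum reliability named in the protocol
```

In this toy example the coders agree on 3 of 4 segments, yet kappa falls short of the 0.75 threshold because half of that agreement is expected by chance, so joint coding would continue.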

Integrating findings

As we interpret Aim 2 findings, we will connect quantitative and qualitative data. This will include: 1) expansion of quantitative findings to provide detail through qualitative data and 2) complementarity to deepen understanding and identify other potential moderators and mediators not focused on in quantitative analyses. Thus, qualitative data will explain and elaborate on quantitative findings.

Aim 3

Aim 3 measures

The cost-effectiveness analysis (CEA) will construct incremental cost-effectiveness ratios (ICER) to estimate the marginal differences in costs and in fidelity and child outcomes between the adaptive implementation strategy and continuing the low-intensity implementation strategy. We will calculate implementation strategy costs based on time and travel data collected in the facilitation log and known material purchase costs. Based on work by Ritchie et al. [118], facilitators will log all activities and travel time using REDCap, which was tested and found feasible in our prior work.
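As a notational sketch of the ratio described above (the symbols are our own shorthand and do not appear in the protocol), let $\bar{C}$ denote mean implementation cost per site and $\bar{E}$ the mean fidelity or child outcome for each study condition:

```latex
\[
\mathrm{ICER}
  = \frac{\Delta C}{\Delta E}
  = \frac{\bar{C}_{\text{adaptive}} - \bar{C}_{\text{low}}}
         {\bar{E}_{\text{adaptive}} - \bar{E}_{\text{low}}}
\]
```

Read this way, the ICER is the additional implementation cost per one-unit gain in the chosen outcome when the adaptive strategy replaces continued low-intensity support.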

Aim 3 analyses

We will calculate the incremental cost-effectiveness ratio in 4 steps. First, we will use data from Aim 1 as estimates of fidelity and child outcome changes (i.e., BMI, RRS, target food consumption) for both study conditions. We will aggregate these findings to the site. Next, we will calculate the costs associated with implementation at each site. The WISE intervention cost is the same at all sites, and the ECE system does not accrue downstream costs or benefits. Therefore, we focus on implementation costs only, which comprise 4 categories (Table 5). These will be collected using a micro-costing approach, and expenses will be applied to the appropriate site.

Then, we will estimate covariates to adjust for site-level differences in fidelity. We will use the same covariates used to control for site variation in Aim 1 and aggregate child-level covariates to the site. Incremental costs will be calculated using intent-to-treat analysis to estimate the effect of treatment allocation. We will use generalized linear models (GLMs) to estimate the effect of implementation intensity on fidelity, child outcomes, and implementation costs. We will compute 2 outcome predictions for each site based on the coefficients from the GLM regressions and the covariates for each analysis [119]. The first prediction will be as if the site were randomized to the adaptive strategy, and the second prediction will be as if the site were randomized to the low-intensity strategy. The difference between these predictions represents the incremental effect of the implementation strategy on fidelity, child outcomes, or costs. Lastly, we will calculate the incremental cost-effectiveness of adding the adaptive strategy relative to continuing the low-intensity strategy. The numerator will be the incremental difference in total implementation costs incurred at sites receiving the adaptive strategy compared to sites continuing the low-intensity strategy. The denominator will be the difference in the changes in fidelity or child outcomes between the fall and spring implementation assessments for sites receiving the adaptive strategy compared to sites continuing the low-intensity strategy. We will use a nonparametric bootstrap (resampling with replacement, 1000 replications) to generate an empirical joint distribution of incremental implementation costs and fidelity or child outcome change scores. Analysts will build preliminary models using data from the first cohort (Y2) to expedite analysis when all three cohorts are completed (Y4).
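The final two steps can be sketched in a few lines. This is a simplified illustration only: it uses unadjusted arm means in place of the GLM-based predictions, resamples sites within each arm, and relies on made-up site-level data; the function names and the fixed seed are assumptions for the example.

```python
import random


def mean(values):
    return sum(values) / len(values)


def incremental(adaptive, low):
    """Return (incremental cost, incremental effect) between two arms.

    Each arm is a list of (implementation_cost, outcome_change) tuples,
    one tuple per site.
    """
    d_cost = mean([c for c, _ in adaptive]) - mean([c for c, _ in low])
    d_effect = mean([e for _, e in adaptive]) - mean([e for _, e in low])
    return d_cost, d_effect


def bootstrap_incremental(adaptive, low, replications=1000, seed=0):
    """Nonparametric bootstrap: resample sites with replacement within each arm."""
    rng = random.Random(seed)
    draws = []
    for _ in range(replications):
        resampled_adaptive = rng.choices(adaptive, k=len(adaptive))
        resampled_low = rng.choices(low, k=len(low))
        draws.append(incremental(resampled_adaptive, resampled_low))
    return draws


# Hypothetical site-level data: (cost in dollars, fall-to-spring fidelity change).
adaptive_sites = [(1200, 0.30), (1500, 0.50), (1300, 0.40), (1400, 0.40)]
low_sites = [(400, 0.10), (500, 0.20), (450, 0.15), (450, 0.15)]

d_cost, d_effect = incremental(adaptive_sites, low_sites)
point_icer = d_cost / d_effect  # cost per one-unit gain in fidelity change

# Empirical joint distribution of (incremental cost, incremental effect).
draws = bootstrap_incremental(adaptive_sites, low_sites, replications=1000)
share_costlier_and_better = sum(dc > 0 and de > 0 for dc, de in draws) / len(draws)
print(f"ICER = {point_icer:.0f}; share of draws costlier and more effective = "
      f"{share_costlier_and_better:.2f}")
```

Keeping the bootstrap draws as cost and effect pairs, rather than as ratios, preserves the joint distribution and avoids unstable ratios when the incremental effect is close to zero.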

Discussion

Arkansas (AR) and Louisiana (LA) are among the states in the US with the highest obesity rates, lowest quality diets, and highest cancer rates [120]. Given the limited economic resources of these states, community systems need obesity prevention efforts that optimize resources through innovative, tailored implementation in settings serving community populations. ECE is a key real-world context for nutrition promotion and obesity intervention [31, 121, 122], but implementation gaps persist in this setting. Thus, this research has substantial potential to inform pragmatic, scalable guidance on the strategy intensity needed to implement and scale health-related EBPs in ECE.

This study has the potential to advance the field of implementation science in several ways. First, this study can contribute to a shift from one-size-fits-all implementation strategies to strategically sequenced implementation strategy packages. This is critical given that ECE programs, particularly those in our study, serve children of minority and lower-SES status, populations at higher risk of child obesity [123], and often have limited resources to serve children and families. Our approach may help to optimize the minimum resources needed to achieve desired outcomes. This study also represents a significant contribution to testing theory and examining mechanisms in implementation science. Specifically, our examination of i-PARIHS-suggested moderators and mediators will test the “encapsulated theory” implied by this framework [41]. This aspect of our work represents an emphasis on identifying the who, what, and how of facilitation as an implementation strategy and adds to the limited body of research using mixed methods to explore implementation mechanisms [38]. Finally, our research models implementation outcomes, health outcomes, and implementation costs for WISE in ECE. Measuring this combination is rare but necessary to optimize feasible implementation approaches [124].

At the conclusion of this study, we expect several tangible outcomes. First, we expect data from this study to serve practitioners in ECE (e.g., CACFP, CCR&R, and Extension), local agency directors, and state-level policy makers in allocating resources to implement EBPs by providing data on characteristics of sites that are likely to need higher-intensity support. Specifically, this study will provide crucial data to inform WISE scale-up and dissemination with applicable lessons for other interventions and contexts. Follow-up assessments will reveal how the implementation strategies affect WISE EBP sustainability in school years following the initial WISE launch. For example, we will be able to compare sustainability between sites that respond early to low-intensity strategies (by October assessment) and sites that respond late to low-intensity strategies (by school year end). Qualitative data from Aim 2 and quantitative data on fidelity in the sustainability assessment from Aim 1 will be linked to understand the specific ways low- and high-intensity strategies influence sustainability as well as characteristics of sites that achieve sustainment. According to a recent review [125], fewer than 25% of funded R01s in Dissemination and Implementation Science studies have evaluated “the impact of a strategy on sustainability.”

This study will also provide unique knowledge for the implementation science field about facilitation. Our design will allow comparisons between the effect of a strategy bundle with narrowly focused facilitation and one with more holistic, individualized facilitation. Further, we will be able to provide insight into mechanisms by which facilitation influences implementation [100, 126]. Our random assignment to different facilitation levels embedded in each strategy package, our use of multiple measurements of mediators at key time points, and our multilevel SEM approach will improve causal inference about the relationship between facilitation, the proposed mediators, and the targeted implementation outcomes [109, 127]. Thus, we expect to provide an important test of the i-PARIHS theory, while illustrating best practices in mediation analyses in an implementation science study.

Finally, this study will provide important data on incremental costs for fidelity and child health outcomes. We will also explore costs of increasing implementation outcomes of potential interest to future implementers (e.g., acceptability, feasibility). This will inform future scale-up of our adaptive implementation strategy for relevant ECE stakeholders (e.g., state education departments, state and federal Head Start programs). Further, data on the cost-effectiveness of implementation strategies in ECE programs receiving federal CACFP support (e.g., Head Start) can support policy for these government-funded programs. No available studies in ECE report on implementation strategy costs [37].

This study has potential challenges and limitations. A primary challenge is that staff turnover can be significant in ECE. To address turnover at the low-intensity sites, facilitators would encourage local site champions to demonstrate a WISE lesson for new educators and follow up by providing resources and inviting questions; at the high-intensity sites, WISE facilitators would visit new educators’ classrooms during a WISE lesson to answer questions and identify resource needs. We will include turnover as a covariate in analyses. In addition, we may not have selected the most salient moderators and mediators for testing. However, parsimony and power considerations require us to specify theorized moderators and mediators a priori. For moderation, we have focused on two potential moderators with support in the literature and in our prior work. For mediation, we have focused on targets of holistic facilitation to determine if facilitation in the high-intensity strategy activates shifts in the climate and organization as proposed [42, 100]. We are not powered to examine amplification effects (e.g., moderated mediation), nor is this a scientific focus of our study. Qualitative data will explain quantitative findings and elaborate on other potential moderators and mediators. If we do not find significant mediation effects, we can still assess if our strategy failed to produce the desired effects on the proposed mechanisms and/or if the proposed mechanisms failed to produce the desired effects on the targeted implementation outcome [127]. This distinction would inform future work, including strategy selection and theory refinement. Finally, prior studies are unclear on the relationship between early childhood BMI and quality of life, limiting the current study to an estimate of cost per unit of BMI change. While a generalizable measure of effectiveness (e.g., quality-adjusted life year) may be preferable for comparison across interventions, it is beyond the scope of this study.

Conclusions

We expect our study to provide an evidence base for structuring implementation support in real-world ECE contexts, ultimately providing a guide for applying the adaptive implementation strategy in ECE for WISE scale-up. Our work will also provide data to guide implementation decisions of other interventions in ECE through improving targeted application of strategy intensity to optimize resources. The results from this study will position us for future research to test the transfer of the adaptive implementation support away from the research team and to the ECE systems. This research path will advance us toward our long-term goal of EBP implementation in ECE to improve diet quality and health outcomes for children.

Availability of data and materials

The datasets used and/or analyzed during the current study will be available from the corresponding author on reasonable request.

Abbreviations

- ECE: Early care and education
- WISE: Together, We Inspire Smart Eating
- BMI: Body mass index
- EBPs: Evidence-based practices
- WCRF: World Cancer Research Fund
- FV: Fruits and vegetables
- i-PARIHS: Integrated Promoting Action on Research Implementation in Health Services
- EBQI: Evidence-Based Quality Improvement
- CACFP: Child and Adult Care Food Programs
- CCR&R: Childcare Resource and Referral
- RRS: Resonance Raman Spectroscopy
- REDCap: Research and Evaluation Data Capture Application
- ICC: Interclass Correlation Coefficient
- ORCA: Organizational Readiness to Change Assessment
- SEM: Structural Equation Modeling
- GLMs: Generalized linear models
- AR: Arkansas
- LA: Louisiana

References

Lauby-Secretan B, Scoccianti C, Loomis D, Grosse Y, Bianchini F, Straif K. Body fatness and cancer — viewpoint of the IARC working group. N Engl J Med. 2016;375(8):794–8. https://doi.org/10.1056/NEJMsr1606602.

Whiteman DC, Wilson LF. The fractions of cancer attributable to modifiable factors: a global review. Cancer Epidemiol. 2016;44:203–21. https://doi.org/10.1016/j.canep.2016.06.013.

Mennella JA. Ontogeny of taste preferences: basic biology and implications for health. Am J Clin Nutr. 2014;99(3):704S–11S. https://doi.org/10.3945/ajcn.113.067694.

Simmonds M, Llewellyn A, Owen CG, Woolacott N. Predicting adult obesity from childhood obesity: a systematic review and meta-analysis. Obes Rev. 2016;17(2):95–107. https://doi.org/10.1111/obr.12334.

Centers for Disease Control. Progress on childhood obesity. CDC Morb Mortal Wkly Rep Vital Signs. Published online August 2013. https://www.cdc.gov/vitalsigns/pdf/2013-08-vitalsigns.pdf.

Child Care Aware of America. About child care. Published 2019. https://usa.childcareaware.org/families-programs/about-child-care/.

Child Care Aware of America. Checking in: a snapshot of the child care landscape. 2017. http://usa.childcareaware.org/wp-content/uploads/2017/07/FINAL_SFS_REPORT.pdf. Accessed 8 May 2018.

Larson N, Ward DS, Neelon SB, Story M. What role can child-care settings play in obesity prevention? A review of the evidence and call for research efforts. J Am Diet Assoc. 2011;111(9):1343–62. https://doi.org/10.1016/j.jada.2011.06.007.

World Cancer Research Fund International. Diet, nutrition, physical activity and cancer: a global perspective. 2018. https://www.wcrf.org/dietandcancer/about.

Whiteside-Mansell L, Swindle TM. Together we inspire smart eating: a preschool curriculum for obesity prevention in low-income families. J Nutr Educ Behav. 2017;49(9):789–792.e1. https://doi.org/10.1016/j.jneb.2017.05.345.

Whiteside-Mansell L, Swindle T, Selig JP. Together, We Inspire Smart Eating (WISE): an examination of implementation of a WISE curriculum for obesity prevention in children 3 to 7 years. Glob Pediatr Health. 2019;6:2333794X19869811.

SNAP-Ed Toolkit. Together, we inspire smart eating (WISE). Obesity prevention interventions and evaluation framework. Published 2019. https://snapedtoolkit.org/interventions/programs/together-we-inspire-smart-eating-wise/. Accessed 24 Mar 2020.

Mustonen S, Rantanen R, Tuorila H. Effect of sensory education on school children’s food perception: a 2-year follow-up study. Food Qual Prefer. 2009;20(3):230–40. https://doi.org/10.1016/j.foodqual.2008.10.003.

Anzman-Frasca S, Savage JS, Marini ME, Fisher JO, Birch LL. Repeated exposure and associative conditioning promote preschool children’s liking of vegetables. Appetite. 2012;58(2):543–53. https://doi.org/10.1016/j.appet.2011.11.012.

Reverdy C, Chesnel F, Schlich P, Köster EP, Lange C. Effect of sensory education on willingness to taste novel food in children. Appetite. 2008;51(1):156–65. https://doi.org/10.1016/j.appet.2008.01.010.

Knai C, Pomerleau J, Lock K, McKee M. Getting children to eat more fruit and vegetables: a systematic review. Prev Med (Baltim). 2006;42(2):85–95. https://doi.org/10.1016/j.ypmed.2005.11.012.

Wardle J, Herrera M-L, Cooke L, Gibson EL. Modifying children’s food preferences: the effects of exposure and reward on acceptance of an unfamiliar vegetable. Eur J Clin Nutr. 2003;57(2):341–8. https://doi.org/10.1038/sj.ejcn.1601541.

Wardle J, Chida Y, Gibson EL, Whitaker KL, Steptoe A. Stress and adiposity: a meta-analysis of longitudinal studies. Obesity. 2011;19(4):771–8. https://doi.org/10.1038/oby.2010.241.

Schindler JM, Corbett D, Forestell CA. Assessing the effect of food exposure on children’s identification and acceptance of fruit and vegetables. Eat Behav. 2013;14(1):53–6. https://doi.org/10.1016/j.eatbeh.2012.10.013.

Hendy HM, Raudenbush B. Effectiveness of teacher modeling to encourage food acceptance in preschool children. Appetite. 2000;34(1):61–76. https://doi.org/10.1006/appe.1999.0286.

Hendy HM. Comparison of five teacher actions to encourage children’s new food acceptance. Ann Behav Med. 1999;21(1):20–6. https://doi.org/10.1007/BF02895029.

Gibson EL, Kreichauf S, Wildgruber A, et al. A narrative review of psychological and educational strategies applied to young children’s eating behaviours aimed at reducing obesity risk. Obes Rev. 2012;13(Suppl 1):85–95. https://doi.org/10.1111/j.1467-789X.2011.00939.x.

Galloway AT, Fiorito LM, Francis LA, Birch LL. “Finish your soup”: counterproductive effects of pressuring children to eat on intake and affect. Appetite. 2006;46(3):318–23. https://doi.org/10.1016/j.appet.2006.01.019.

Birch LL, McPhee L, Shoba B, Steinberg L, Krehbiel R. “Clean up your plate”: effects of child feeding practices on the conditioning of meal size. Learn Motiv. 1987;18(3):301–17. https://doi.org/10.1016/0023-9690(87)90017-8.

Borzekowski D, Robinson T. The 30-second effect: an experiment revealing the impact of television commercials on food preferences of preschoolers. J Am Diet. Published online 2001. http://www.sciencedirect.com/science/article/pii/S0002822301000128. Accessed 21 Oct 2016.

Boyland E, Harrold J, Kirkham T, Halford J. Persuasive techniques used in television advertisements to market foods to UK children. Appetite. 2012;58(2):658–64.

Kraak V, Story M. Influence of food companies’ brand mascots and entertainment companies’ cartoon media characters on children’s diet and health: a systematic review and. Obes Rev. Published online 2015. http://onlinelibrary.wiley.com/doi/10.1111/obr.12237/full. Accessed 21 Oct 2016.

Keller K, Kuilema L, Lee N, Yoon J, Mascaro B. The impact of food branding on children’s eating behavior and obesity. Physiol. Published online 2012. http://www.sciencedirect.com/science/article/pii/S0031938412001163. Accessed 21 Oct 2016.

Roberto C, Baik J, Harris J, Brownell K. Influence of licensed characters on children’s taste and snack preferences. Pediatrics. Published online 2010. http://pediatrics.aappublications.org/content/126/1/88.short. Accessed 21 Oct 2016.

Weber K, Story M, Harnack L. Internet food marketing strategies aimed at children and adolescents: a content analysis of food and beverage brand web sites. J Am Diet Assoc. 2006;106(9):1463–6.

Benjamin-Neelon SE. Position of the academy of nutrition and dietetics: benchmarks for nutrition in child care. J Acad Nutr Diet. Published online 2018. https://doi.org/10.1016/j.jand.2018.05.001.

Whiteside-Mansell L, Swindle TM. Evaluation of together we inspire smart eating: pre-school fruit and vegetable consumption. Health Educ Res. 2019;34(1):62–71. https://doi.org/10.1093/her/cyy048.

Whiteside-Mansell L, Swindle T, Davenport K. Evaluation of “Together, We Inspire Smart Eating”(WISE) nutrition intervention for young children: assessment of fruit and vegetable consumption with parent reports and measurements of skin carotenoids as biomarkers. J Hunger Environ Nutr. 2021;16(2):235–45.

Rutledge JM, Swindle T, McBride N, Selig JP, Percle PM, Whiteside-Mansell L. Home-based health outcomes of a childcare-based nutrition intervention: pester power examined. In: International society of behavioral nutrition and physical activity. 2019.

Swindle T, Johnson SL, Whiteside-Mansell L, Curran GM. A mixed methods protocol for developing and testing implementation strategies for evidence-based obesity prevention in childcare: a cluster randomized hybrid type III trial. Implement Sci. 2017;12(1):90. https://doi.org/10.1186/s13012-017-0624-6.

Benjamin SE, Ammerman A, Sommers J, Dodds J, Neelon B, Ward DS. Nutrition and physical activity self-assessment for child care (NAP SACC): results from a pilot intervention. J Nutr Educ Behav. 2007;39(3):142–9. https://doi.org/10.1016/j.jneb.2006.08.027.

Wolfenden L, Jones J, Williams C. Strategies to improve the implementation of healthy eating, physical activity and obesity prevention policies, practices or programmes within childcare services. Cochrane. Published online 2016. http://onlinelibrary.wiley.com/doi/10.1002/14651858.CD011779.pub2/full. Accessed 7 Aug 2017.

Byrd-Williams CE, Dooley EE, Thi CA, Browning C, Hoelscher DM. Physical activity, screen time, and outdoor learning environment practices and policy implementation: a cross sectional study of Texas child care centers. BMC Public Health. 2019;19(1):1–11. https://doi.org/10.1186/s12889-019-6588-5.

Swindle T, McBride NM, Selig JP, et al. Stakeholder selected strategies for obesity prevention in childcare: results from a small-scale cluster randomized hybrid type III trial. Implement Sci. 2021;16(1):1–19. https://doi.org/10.1186/S13012-021-01119-X.

Shumba E, Nzombe P, Mbinda A, et al. Weighing the costs: implementing the SLMTA programme in Zimbabwe using internal versus external facilitators. Afr J Lab Med. 2014;3(2). https://doi.org/10.4102/ajlm.v3i2.248.

Damschroder LJ. Clarity out of chaos: use of theory in implementation research. Psychiatry Res. Published online June 23, 2019. https://doi.org/10.1016/J.PSYCHRES.2019.06.036.

Harvey G, Kitson A. Facilitation as the active ingredient. In: Implementing evidence-based practice in healthcare: a facilitation guide. Routledge; 2015. p. 169–184. https://doi.org/10.4324/9780203557334-11.

Ullrich PM, Sahay A, Stetler CB. Use of implementation theory: a focus on PARIHS. 2000. https://sigmapubs.onlinelibrary.wiley.com/doi/pdf/10.1111/wvn.12016. Accessed 27 June 2019.

Helfrich CD, Damschroder LJ, Hagedorn HJ, et al. A critical synthesis of literature on the promoting action on research implementation in health services (PARIHS) framework. Implement Sci. 2010;5(1):82. https://doi.org/10.1186/1748-5908-5-82.

Harvey G, Loftus-Hills A, Rycroft-Malone J, et al. Getting evidence into practice: the role and function of facilitation. J Adv Nurs. Published online 2002. https://doi.org/10.1046/j.1365-2648.2002.02126.x.

Baskerville NB, Liddy C, Hogg W. Systematic review and meta-analysis of practice facilitation within primary care settings. Ann Fam Med. Published online 2012. https://doi.org/10.1370/afm.1312.

Culler SD, Parchman ML, Lozano-Romero R, et al. Cost estimates for operating a primary care practice facilitation program. Ann Fam Med. Published online 2013. https://doi.org/10.1370/afm.1496.

Almirall D, Nahum-Shani I, Wang L, Kasari C. Experimental designs for research on adaptive interventions: singly and sequentially randomized trials. In: Collins LM, Kugler KC, editors. Optimization of behavioral, biobehavioral, and biomedical interventions: Springer; 2018. https://doi.org/10.1007/978-3-319-91776-4_4.

Almirall D, Chronis-Tuscano A. Adaptive interventions in child and adolescent mental health. J Clin Child Adolesc Psychol. 2016;45(4):383–95. https://doi.org/10.1080/15374416.2016.1152555.

Green CA, Duan N, Gibbons RD, Hoagwood KE, Palinkas LA, Wisdom JP. Approaches to mixed methods dissemination and implementation research: methods, strengths, caveats, and opportunities. Adm Policy Ment Health Ment Health Serv Res. Published online 2014. https://doi.org/10.1007/s10488-014-0552-6.

Creswell JW, Klassen AC, Clark VLP, Smith KC. Best practices for mixed methods research in the health sciences. 2011.

Proctor EK, Powell BJ, Mcmillen JC. Implementation strategies: recommendations for specifying and reporting. 2013;8:139. http://search.proquest.com/docview/1468019737?pq-origsite=gscholar. Accessed 5 July 2017.

Shaw EK, Howard J, West DR, et al. The role of the champion in primary care change efforts: from the state networks of Colorado ambulatory practices and partners (SNOCAP). J Am Board Fam Med. 2012;25(5):676–85. https://doi.org/10.3122/jabfm.2012.05.110281.

Kirchner JAE, Parker LE, Bonner LM, Fickel JJ, Yano EM, Ritchie MJ. Roles of managers, frontline staff and local champions, in implementing quality improvement: stakeholders’ perspectives. J Eval Clin Pract. 2012;18(1):63–9. https://doi.org/10.1111/j.1365-2753.2010.01518.x.

Perry CK, Damschroder LJ, Hemler JR, Woodson TT, Ono SS, Cohen DJ. Specifying and comparing implementation strategies across seven large implementation interventions: a practical application of theory. Implement Sci. 2019;14(1):32. https://doi.org/10.1186/s13012-019-0876-4.

Kousgaard MB, Siersma V, Reventlow S, Ertmann R, Felding P, Waldorff FB. The effectiveness of computer reminders for improving quality assessment for point-of-care testing in general practice-a randomized controlled trial. Implement Sci. 2013;8(1). https://doi.org/10.1186/1748-5908-8-47.

Sales A, Helfrich C, Ho PM, et al. Implementing electronic clinical reminders for lipid management in patients with ischemic heart disease in the veterans health administration: QUERI series. Implement Sci. 2008;3(1). https://doi.org/10.1186/1748-5908-3-28.

Parchman M, Noel P. A randomized trial of practice facilitation to improve the delivery of chronic illness care in primary care: initial and sustained effects. 2013;8(1):93. Accessed 11 Sept 2017. https://implementationscience.biomedcentral.com/articles/10.1186/1748-5908-8-93

Fernandez ME, ten Hoor GA, van Lieshout S, et al. Implementation mapping: using intervention mapping to develop implementation strategies. Front Public Health. 2019;7:158. https://doi.org/10.3389/fpubh.2019.00158.

Kitson AL, Rycroft-Malone J, Harvey G, McCormack B, Seers K, Titchen A. Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implement Sci. 2008;3(1):1. https://doi.org/10.1186/1748-5908-3-1.

Weiner BJ, Lewis CC, Stanick C, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12(1):108. https://doi.org/10.1186/s13012-017-0635-3.

Darvin M, Sterry W, Lademann J, Vergou T. The role of carotenoids in human skin. Molecules. 2011;16(2):1710–1738. http://www.mdpi.com/1420-3049/16/12/10491. Accessed 24 Oct 2016.

Ermakov IV, Gellermann W. Dermal carotenoid measurements via pressure mediated reflection spectroscopy. J Biophotonics. 2012;5(7):559–70. https://doi.org/10.1002/jbio.201100122.

Meinke M, Darvin M, Vollert H, Lademann. Bioavailability of natural carotenoids in human skin compared to blood. Eur J Pharm Biopharm 2010;76(2):269–274. http://www.sciencedirect.com/science/article/pii/S0939641110001530. Accessed 24 Oct 2016.

Lohman TG, Roche AF, Martorell R. Anthropometric standardization reference manual: Human Kinetics Books; 1988.

McCracken JL, Friedman DB, Brandt HM, et al. Findings from the community health intervention program in South Carolina: implications for reducing cancer-related health disparities. J Cancer Educ. 2013;28(3):412–9. https://doi.org/10.1007/s13187-013-0479-8.

Braun KL, Stewart S, Baquet C, et al. The national cancer institute’s community networks program initiative to reduce cancer health disparities: outcomes and lessons learned. Prog Community Heal Partnerships Res Educ Action. 2015;9:21–32. https://doi.org/10.1353/cpr.2015.0017.

Curran GM, Mukherjee S, Allee E, Owen RR. A process for developing an implementation intervention: QUERI series. Implement Sci. 2008;3(1):17. https://doi.org/10.1186/1748-5908-3-17.

Curran GM, Pyne J, Fortney JC, et al. Development and implementation of collaborative care for depression in HIV clinics. AIDS Care. 2011;23(12):1626–36. https://doi.org/10.1080/09540121.2011.579943.

Fortney J, Enderle M, McDougall S, et al. Implementation outcomes of evidence-based quality improvement for depression in VA community based outpatient clinics. Implement Sci. 2012;7(1):30. https://doi.org/10.1186/1748-5908-7-30.

Rubenstein L, Stockdale S, Sapir N, Altman L. A patient-centered primary care practice approach using evidence-based quality improvement: rationale, methods, and early assessment of implementation. J Gen. Published online 2014. http://springer.longhoe.net/article/10.1007/s11606-013-2703-y. Accessed 21 Oct 2016.

Rubenstein LV, Parker LE, Meredith LS, et al. Understanding team-based quality improvement for depression in primary care. Health Serv Res. 2002;37(4):1009–29. https://doi.org/10.1034/j.1600-0560.2002.63.x.

Smith JL, Williams JW, Owen RR, Rubenstein LV, Chaney E. Developing a national dissemination plan for collaborative care for depression: QUERI series. Implement Sci. 2008;3(1):59. https://doi.org/10.1186/1748-5908-3-59.

Mendel P, Meredith LS, Schoenbaum M, Sherbourne CD, Wells KB. Interventions in organizational and community context: a framework for building evidence on dissemination and implementation in health services research. Adm Policy Ment Health Ment Health Serv Res. 2008;35(1–2):21–37. https://doi.org/10.1007/s10488-007-0144-9.

Holt CL, Chambers DA. Opportunities and challenges in conducting community-engaged dissemination/implementation research. Transl Behav Med. Published online September 7, 2017:1–4. https://doi.org/10.1007/s13142-017-0520-2.

Food and Research Action Center. Child & adult care food program: participation trends 2018. 2019. https://frac.org/wp-content/uploads/CACFP-participation-trends-2018.pdf. Accessed 12 Aug 2020.

United States Department of Agriculture. Child Day Care Centers | USDA-FNS. https://www.fns.usda.gov/cacfp/child-day-care-centers. Accessed 11 Aug 2020.

Elicker J, Ruprecht K. Child Care Quality Rating and Improvement Systems (QRIS). In: The Wiley handbook of early childhood care and education. Wiley; 2019. p. 515–536. https://doi.org/10.1002/9781119148104.ch23.

Scott NW, McPherson GC, Ramsay CR, Campbell MK. The method of minimization for allocation to clinical trials: a review. Control Clin Trials. Published online 2002. https://doi.org/10.1016/S0197-2456(02)00242-8.

Taves DR. Minimization: a new method of assigning patients to treatment and control groups. Clin Pharmacol Ther. Published online 1974. https://doi.org/10.1002/cpt1974155443.

Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health Ment Health Serv Res. 2011;38(2):65–76. https://doi.org/10.1007/s10488-010-0319-7.

Swindle T, Selig J, Rutledge J, Whiteside-Mansell L, Curran G. Fidelity monitoring in complex interventions: a case study of the WISE intervention. Arch Public Health.

Scarmo S, Henebery K, Peracchio H, et al. Skin carotenoid status measured by resonance Raman spectroscopy as a biomarker of fruit and vegetable intake in preschool children. Eur J Clin Nutr. 2012;66(5):555–60. https://doi.org/10.1038/ejcn.2012.31.

Johnson SL, Ryan SM, Kroehl M, Moding KJ, Boles RE, Bellows LL. A longitudinal intervention to improve young children’s liking and consumption of new foods: findings from the Colorado LEAP study. Int J Behav Nutr Phys Act. 2019;16(1). https://doi.org/10.1186/s12966-019-0808-3.

Blume-Peytavi U, Rolland A, Darvin ME, et al. Cutaneous lycopene and β-carotene levels measured by resonance Raman spectroscopy: high reliability and sensitivity to oral lactolycopene deprivation and supplementation. Eur J Pharm Biopharm. 2009;73(1):187–94. https://doi.org/10.1016/j.ejpb.2009.04.017.

Centers for Disease Control and Prevention. CDC growth charts: United States; length-for-age percentiles: boys, birth to 36 months. 2000.

Ritchie MJ, Dollar KM, Miller CJ, Oliver KA, Smith JL, Lindsay JA, Kirchner JE. Using implementation facilitation to improve care in the veterans health administration (version 2). Veterans Health Administration, Quality Enhancement Research Initiative (QUERI) for Team-Based Behavioral Health; 2017. https://www.queri.research.va.gov/tools/implementation/Facilitation-Manual.pdf.

Smith JL, Ritchie MJ, Kim B, et al. Scoping review to identify the spectrum of activities applied in implementation facilitation strategies. In: 2nd international conference on practice facilitation. 2018.

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)-a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81. https://doi.org/10.1016/j.jbi.2008.08.010.

Jakobsen JC, Gluud C, Wetterslev J, Winkel P. When and how should multiple imputation be used for handling missing data in randomised clinical trials - a practical guide with flowcharts. BMC Med Res Methodol. 2017;17(1):162. https://doi.org/10.1186/s12874-017-0442-1.

Snijders TAB, Bosker RJ. Multilevel analysis: an introduction to basic and advanced multilevel modeling. Published online 1999. https://www.researchgate.net/profile/Tom_Snijders2/publication/44827177_Multilevel_Analysis_An_Introduction_to_Basic_and_Advanced_Multilevel_Modeling/links/0c96051ffabd4ca210000000/Multilevel-Analysis-An-Introduction-to-Basic-and-Advanced-Multilevel-Modeling.pdf. Accessed 5 May 2017.

Spybrook J, Raudenbush SW, Liu X-F, Congdon R, Martínez A. Optimal design for longitudinal and multilevel research: documentation for the "Optimal Design" software. 2008. http://people.cehd.tamu.edu/~okwok/epsy652/OD/od-manual-20080312-v176.pdf. Accessed 12 Mar 2018.

Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. L. Erlbaum Associates; 1988. http://www.worldcat.org/title/statistical-power-analysis-for-the-behavioral-sciences/oclc/17877467. Accessed 24 May 2018.

Papakostas GI, Fava M. Predictors, moderators, and mediators (Correlates) of treatment outcome in major depressive disorder. 2008. www.dialogues-cns.org. Accessed 26 Oct 2020.

Almirall D, McCaffrey DF, Ramchand R, Murphy SA. Subgroups analysis when treatment and moderators are time-varying. Prev Sci. 2013;14(2):169–78. https://doi.org/10.1007/s11121-011-0208-7.

Rucker DD, Preacher KJ, Tormala ZL, Petty RE. Mediation analysis in social psychology: current practices and new recommendations. Soc Personal Psychol Compass. 2011;5(6):359–71. https://doi.org/10.1111/j.1751-9004.2011.00355.x.

Memon MA, Cheah J, Ramayah T, Ting H, Chuah F. Mediation analysis: issues and recommendations. J Appl Struct Equ Model. 2018;2(1):1–9. https://www.researchgate.net/publication/322293701. Accessed 11 July 2019.

Fedak KM, Bernal A, Capshaw ZA, Gross S. Applying the Bradford Hill criteria in the 21st century: how data integration has changed causal inference in molecular epidemiology. Emerg Themes Epidemiol. 2015;12(1):14. https://doi.org/10.1186/s12982-015-0037-4.

Helfrich C, Li Y, Sharp N, Sales A. Organizational readiness to change assessment (ORCA): development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implement Sci. 2009;4:38. http://implementationscience.biomedcentral.com/articles/10.1186/1748-5908-4-38. Accessed 24 Oct 2016.

Berta W, Cranley L, Dearing JW, Dogherty EJ, Squires JE, Estabrooks CA. Why (we think) facilitation works: insights from organizational learning theory. Implement Sci. 2015;10(1):141. https://doi.org/10.1186/s13012-015-0323-0.

Aarons GA, Ehrhart MG, Farahnak LR. The implementation leadership scale (ILS): development of a brief measure of unit level implementation leadership. Implement Sci. 2014;9(1):45. https://doi.org/10.1186/1748-5908-9-45.

Lewis CC, Klasnja P, Powell BJ, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136. https://doi.org/10.3389/fpubh.2018.00136.

Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS). Implement Sci. 2014;9(1):157. https://doi.org/10.1186/s13012-014-0157-1.

Lyon AR, Cook CR, Brown EC, et al. Assessing organizational implementation context in the education sector: confirmatory factor analysis of measures of implementation leadership, climate, and citizenship. Implement Sci. Published online 2018. https://doi.org/10.1186/s13012-017-0705-6.

Swindle T, Johnson SL, Davenport K, et al. A mixed-methods exploration of barriers and facilitators to evidence-based practices for obesity prevention in head start. J Nutr Educ Behav. Published online 2019. https://doi.org/10.1016/j.jneb.2019.06.019.

Preacher KJ, Zyphur MJ, Zhang Z. A general multilevel SEM framework for assessing multilevel mediation. Psychol Methods. Published online 2010. https://doi.org/10.1037/a0020141.

Preacher KJ, Hayes AF. Asymptotic and resampling strategies for assessing and comparing indirect effects in multiple mediator models. Behav Res Methods. 2008;40(3):879–91. https://doi.org/10.3758/BRM.40.3.879.

Preacher KJ, Selig JP. Advantages of Monte Carlo confidence intervals for indirect effects. Commun Methods Meas. 2012;6(2):77–98. https://doi.org/10.1080/19312458.2012.679848.

MacKinnon DP, Fairchild AJ, Fritz MS. Mediation analysis. Annu Rev Psychol. 2007;58(1):593–614. https://doi.org/10.1146/annurev.psych.58.110405.085542.

Selig JP, Preacher KJ. Mediation models for longitudinal data in developmental research. Res Hum Dev. Published online 2009. https://doi.org/10.1080/15427600902911247.

Fritz MS, MacKinnon DP. Required sample size to detect the mediated effect. Psychol Sci. Published online 2007. https://doi.org/10.1111/j.1467-9280.2007.01882.x.

Krueger R, Casey M. Focus groups: a practical guide for applied research. Sage Publications; 2014. Accessed 28 May 2018.

Hsieh H-F, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–88. https://doi.org/10.1177/1049732305276687.

Crabtree B, Miller W. A template approach to text analysis: developing and using codebooks. In: Crabtree B, Miller L, eds. Doing qualitative research. 3rd ed. SAGE Publications; 1992. https://psycnet.apa.org/record/1992-97742-005. Accessed 23 Oct 2020.

Fereday J, Muir-Cochrane E. Demonstrating rigor using thematic analysis: a hybrid approach of inductive and deductive coding and theme development. Int J Qual Methods. 2006;5(1):80–92. https://doi.org/10.1177/160940690600500107.

Fleiss J. The measurement of interrater agreement. Stat methods rates proportions. Published online 1981.

Syed M, Nelson SC. Guidelines for establishing reliability when coding narrative data. Emerg Adulthood. Published online 2015:1–13. https://doi.org/10.1177/2167696815587648.

Ritchie MJ, Liu CF, Townsend JC, Pitcock J, Kirchner JE. Time and cost of “extreme” implementation facilitation to address challenging contexts. In: Society for Implementation Research Collaborative. 2017.

Hoomans T, Severens JL. Economic evaluation of implementation strategies in health care. Implement Sci. 2014;9(1):168. https://doi.org/10.1186/s13012-014-0168-y.

Centers for Disease Control and Prevention. Leading cancer cases and deaths, male and female, 2016. United States Cancer Statistics: Data Visualizations. Published 2016. https://gis.cdc.gov/Cancer/USCS/DataViz.html.

Hassink SG. Early child care and education: a key component of obesity prevention in infancy. Pediatrics. Published online 2017. https://doi.org/10.1542/peds.2017-2846.

Flynn MAT, McNeil DA, Maloff B, et al. Reducing obesity and related chronic disease risk in children and youth: a synthesis of evidence with “best practice” recommendations. Obes Rev. 2006;7(s1):7–66. https://doi.org/10.1111/j.1467-789X.2006.00242.x.

Jones A. Race, socioeconomic status, and health during childhood: a longitudinal examination of racial/ethnic differences in parental socioeconomic timing and child obesity risk. Int J Environ Res Public Health. 2018;15(4):728. https://doi.org/10.3390/ijerph15040728.

Roberts SLE, Healey A, Sevdalis N. Use of health economic evaluation in the implementation and improvement science fields—a systematic literature review. Implement Sci. 2019;14(1):72. https://doi.org/10.1186/s13012-019-0901-7.

Johnson AM, Moore JE, Chambers DA, Rup J, Dinyarian C, Straus SE. How do researchers conceptualize and plan for the sustainability of their NIH R01 implementation projects? Implement Sci. 2019;14(1):50. https://doi.org/10.1186/s13012-019-0895-1.

Stetler CB, Legro MW, Rycroft-Malone J, et al. Role of “external facilitation” in implementation of research findings: a qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implement Sci. 2006;1(1):23. https://doi.org/10.1186/1748-5908-1-23.

Williams NJ. Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Adm Policy Ment Health Ment Health Serv Res. 2016;43(5):783–98. https://doi.org/10.1007/s10488-015-0693-2.

Acknowledgements

We would like to acknowledge our community partners who were integral in the development of this protocol and who will be critical to the success of this project.

Funding

Research reported in this publication was supported by the National Cancer Institute of the National Institutes of Health (NIH) under Award Number NIH NCI R37CA25113. Drs. Curran and Swindle are supported by the Translational Research Institute (TRI), UL1TR003107, through the National Center for Advancing Translational Sciences of the NIH. Drs. Swindle and Rutledge are supported by NIH R03 DK117197 and the Lincoln Health Foundation. Dr. Swindle is supported by NIH R21CA237985 and NIH P20GM109096. The content is solely the responsibility of the authors and does not necessarily represent the official views of the funding agencies.

Author information

Contributions

T.S. led the conception and design of this study, in addition to leading funding and drafting this manuscript; J.M.R. contributed to the development of the research design, writing of the protocol, and background research; J.P.S. led the power analyses and quantitative analysis plan; J.P. designed the cost analyses and contributed to writing of the manuscript; D.Z. supported the power analyses and the quantitative analysis plan; J.M. contributed to the stakeholder engagement for implementation strategy design and the writing of this manuscript; S.L.J. contributed to the design of child outcome data collection; L.W.M. contributed to the development of the intervention; D.A. and T.B.E. contributed to the design of this study; G.C. contributed to the design of this study and the writing of this manuscript. All authors contributed to editing of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This protocol was approved by the UAMS Institutional Review Board (IRB 262638). This study will be conducted in accordance with all applicable government regulations and University of Arkansas for Medical Sciences research policies and procedures. Consent will be collected from teachers and from parents of children using IRB-approved processes.

Waivers of informed consent and Health Insurance Portability and Accountability Act of 1996 (HIPAA) authorization were approved for this project because the research involves no more than minimal risk to the subjects, the waivers will not adversely affect the rights and welfare of the subjects, and the research could not practicably be carried out without the waivers.

Consent for publication

Not applicable.

Competing interests

Dr. Leanne Whiteside-Mansell, Dr. Taren Swindle, and UAMS have a financial interest in the technology (WISE) discussed in this publication. These financial interests have been reviewed and approved in accordance with the UAMS conflict of interest policies.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Swindle, T., Rutledge, J.M., Selig, J.P. et al. Obesity prevention practices in early care and education settings: an adaptive implementation trial. Implementation Sci 17, 25 (2022). https://doi.org/10.1186/s13012-021-01185-1

DOI: https://doi.org/10.1186/s13012-021-01185-1