Abstract

Helium bubbles, which are typical radiation microstructures observed in metals or alloys, are usually investigated using transmission electron microscopy (TEM). However, the investigation requires human inputs to locate and mark the bubbles in the acquired TEM images, rendering this task laborious and prone to error. In this paper, a machine learning method capable of automatically identifying and analyzing TEM images of helium bubbles is proposed, thereby improving the efficiency and reliability of the investigation. In the proposed technique, helium bubble clusters are first determined via the density-based spatial clustering of applications with noise algorithm after removing the background and noise pixels. For each helium bubble cluster, the number of helium bubbles is determined based on the cluster size depending on the specific image resolution. Finally, the helium bubble clusters are analyzed using a Gaussian mixture model, yielding the location and size information on the helium bubbles. In contrast to other approaches that require training using numerous annotated images to establish an accurate classifier, the parameters used in the established model are determined using a small number of TEM images. The results of the model formulated according to the proposed approach achieved a higher F1 score validated through some helium bubble images manually marked. Furthermore, the established model can identify bubble-like objects that humans cannot facilely identify. This computationally efficient method achieves object recognition for material structure identification that may be advantageous to scientific work.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A number of researchers have reported that the analysis of the evolution behavior of metals or alloys exposed to the complex and extreme environment in a reactor is a considerably exigent task [1,2,3]. The irradiation-induced damage to nuclear reactor components is one of the major problems that extremely detrimental to nuclear power generation because of the high cost of component replacement and the introduction of uncertainty in predicting the lifecycle of components. Neutron interactions with the material lead to atomic collisions that create point defects and defect clusters. A problem of particular interest is the accumulation of nanoscale helium bubbles in nickel-based superalloys used in nuclear reactors. Helium is mainly produced through the interaction of thermal neutrons emitted by the reactor core with nickel atoms when collisions occur. The nuclear reaction channel indicates that the nickel nucleus can crack into two nuclei of iron and helium after the absorption of one neutron. The presence of transmutation-generated helium has a critical role in the microstructural evolution of metals exposed to neutron irradiation [4,5,32,33,34].

In contrast to the deep learning approach, where learning numerous labeled real or synthetic images is generally required to establish an accurate classifier, this paper proposes a new machine learning method. This proposed technique is implemented to automatically identify and analyze helium bubbles in the TEM images of nickel-based alloys implanted with helium ions at high temperatures. The images were processed and analyzed using the density-based spatial clustering of applications with noise (DBSCAN) algorithm and Gaussian Mixture Model (GMM). After determining the key parameters of the model, the optimized model could be used to identify and count the helium bubbles in the TEM images of the nickel-based alloy. In case only a few marked TEM images were available for training, the performance achieved by the proposed approach is comparable to manual identification and analysis of helium bubbles.

This paper is mainly composed of three parts. Section 1 describes the research background of the identification and analysis of helium bubbles. Section 2 presents the proposed analysis methods as well as the details of the datasets used for modeling and training. Section 3 elaborates on the experimental results obtained using the proposed model; further discussions are also presented. Finally, the paper concludes with a brief summary.

2 Method

2.1 Dataset preparation

The helium bubble images analyzed in this work were captured from nickel-based alloy samples irradiated with helium ions at 650 °C on a 4-MV electrostatic accelerator at the Shanghai Institute of Applied Physics. The samples containing helium bubbles were prepared using a focused ion beam, and the microstructure was characterized using a Tecnai-G2-F20 transmission electron microscope under bright-field imaging conditions.

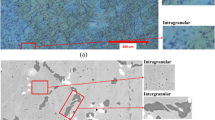

Helium bubbles can be identified under either out-of-focus or under-focused imaging conditions. As demonstrated in Fig. 1, the helium bubble images analyzed in this work are mainly captured under bright-field under-focused TEM imaging conditions. All bright-field TEM images contained three channels with the same size (1024 × 1024 pixels). For convenient processing, each image was equally cropped into 16 small images (256 × 256 pixels).

To evaluate the detection performance of the proposed algorithm, two experts in TEM analysis were requested to manually mark and double check the bubbles found in the TEM images. The annotated images were used for training and evaluating the capability of the new method in identifying TEM bubble images.

2.2 Statistical analysis of helium bubbles

In this study, the helium bubble TEM image was processed and analyzed in three steps: First, the image was preprocessed, eliminating the background and noise pixels from the image. Second, the remaining pixels were clustered by DBSCAN. Finally, the GMM was applied to analyze the helium clusters. A schematic of the entire model and a detailed description of the implementation steps are shown in Fig. 2.

2.2.1 Background and noise pixel elimination

The pixel matrix of the helium bubble image has three channels, each with different intensity values. To afford utmost convenience in data processing and maintaining the three-dimensional signal information, merging the three channel datasets into a single channel dataset is more advantageous. The intensity of each pixel is converted into a single value using the following:

where gray represents the combined intensity value of the components of the three channels; and R, G, and B are the red, green, and blue channel intensities, respectively.

Before attempting to remove the background intensity from the image matrix, the background threshold should be defined. The helium bubbles are assumed to be evenly distributed in the TEM image and have different intensity depths from the background. The matrix data of helium bubble intensities are considerably distinguishable from the background, and the most appropriate threshold value is derived by the training process.

After determining the background threshold value, the image matrix can be processed using the following:

where \(v_{{{\text{th}}}}\) corresponds to the threshold of background.

The background pixel value of the helium bubble image has a nonuniform distribution owing to the nonuniform illumination intensity; hence, determining an appropriate background threshold is difficult. To resolve this, the histogram equalization technology is introduced to adjust the helium bubble brightness against the background. This approach enhances the imaging contrast and facilitates the identification of helium bubbles.

Histogram equalization is a simple and effective image enhancement technology for improving the contrast of an image with small dynamic range intensity. It alters the grayscale value of each pixel to distribute the intensity histogram across the entire intensity range; consequently, the new image tends to be clearer. For example, the grayscale level of an overexposed image is concentrated in the high brightness range, whereas that of an underexposed image is concentrated in the low brightness range. Through histogram equalization, the histogram of an original image can be transformed into a uniformly distributed (equalized) form. This transformation increases the dynamic range of the grayscale value difference among pixels to enhance the overall image contrast. In other words, histogram equalization enhancement is achieved by compressing the grayscale value with numerous pixels (i.e., the grayscale value that performs a major function in the image) and expanding the grayscale value with a small number of pixels (i.e., the grayscale value that does not have a primary function in the image). The original bright-field under-focused TEM image and the results of histogram equalization operation are shown in Fig. 3. The separation of the helium bubble from the background is evidently more difficult in some regions, especially in the upper-left area. Nevertheless, the image contrast is significantly improved after performing histogram equalization on the original TEM micrograph. Thus, to a certain extent, preprocessing through histogram equalization can aid in setting a proper threshold value for distinguishing helium bubbles from the background.

2.2.2 Clustering

Clustering analysis and detection, which are known to have led to the rapid development of data mining techniques, are widely applied to fields, such as pattern recognition, data analysis, market research, and image processing. As an unsupervised machine learning algorithm, its basic principle is to group similar objects into the same cluster. The more similar the objects in the cluster, the better the clustering effect. Highly similar helium bubbles evidently belong to a particular bubble type. Accordingly, a clustering detection algorithm is adopted to analyze the irradiated-alloy TEM micrograph for helium bubble detection and statistics. Specifically, the DBSCAN algorithm was applied to this study. This algorithm assigns cluster labels based on dense regions of points. In DBSCAN, the notion of density is defined as the number of points within a specified radius.

Numerous helium bubble clusters can be obtained after the background and noise are eliminated; thus, clustering the remaining pixels in the preprocessed TEM image data is necessary. This section presents the application of DBSCAN to cluster the calculated helium bubble pixels.

A special label is assigned to each sample point using the following criteria.

-

(1)

A point is considered as a core point if a specified number of neighboring points falls within the specified radius.

-

(2)

A border point is one that has fewer neighbors than the specified number within a specified threshold; however, it lies within the threshold radius of the core point.

-

(3)

All points that are neither core nor border points are considered as noise points.

After labeling the points as core, border, or noise, the DBSCAN algorithm processes them following two simple steps.

Step 1: A separate cluster for each core point or connected group of core points is formed (core points are connected if they are not more distant than the threshold).

Step 2: Each border point is assigned to the cluster of its corresponding core point.

The DBSCAN algorithm can be used to identify the degree of pixel density and classify the pixels according to their distribution based on the pixel distribution density. At the same time, the relatively isolated pixels are marked as noise points, rather than helium bubbles.

Two parameters are extremely important in the DBSACN algorithm: eps and \( {{neib}}_{{{\text{th}}}}\). The first, eps, is the scanning radius whose value is set to 1 in this model; that is, only eight pixels around the current pixel were considered. The second, \({{neib}}_{{{\text{th}}}}\), is a threshold value for identifying kernel points; its value is obtained by the training process. The main steps of DBSCAN are as follows:

-

1.

Randomly choose an unvisited pixel as a starting point, and then find all of its neighbors within the scanning radius (eps) range.

-

2.

If the number of neighboring pixels is greater than or equal to \( {{neib}}_{{{\text{th}}}}\), mark the current point and its neighboring pixels as kernel points, and put the neighbors in a queue. Otherwise, the current pixel is temporarily marked as a noise point.

-

3.

Consider another unvisited point from the queue, find its neighboring pixels, mark the unvisited pixels as kernel points, and put them in the queue.

-

4.

Repeat step 3 until the queue is empty; at this instance, the marked kernel points form a new cluster.

-

5.

Repeat steps 1–4 until all pixels are marked as kernel points or noise points, and terminate the algorithm.

The shape of the cluster able to be detected from the above steps can be any of the forms; hence, DBSCAN remains considerably suitable for clustering helium bubbles with irregular shapes.

2.2.3 GMM

The GMM is a well-known basic statistical machine learning model widely applied to visual media fields. It is a type of unsupervised clustering model and density model consisting of a number of Gaussian function components. Within its framework, the feature density function is regarded as belonging to different component variables whose combination provides multimodal density. The GMM can afford greater flexibility and precision in modeling as well as deriving the underlying statistics of sample data; accordingly, it is popularly used in object recognition and classification.

Virtually all helium bubbles in metals or alloys irradiated by helium ions are spherical or ellipsoidal; thus, their two-dimensional images are represented by circles or ellipses. Based on this observation and GMM characteristics, the assumption that helium bubble images follow a normal distribution is reasonable. In addition, considering that some helium bubbles in TEM images may intersect or coincide, the multidimensional GMM, which is a probabilistic model for representing normally distributed subpopulations within the overall population, is applicable to helium cluster analysis. In the multidimensional GMM, knowing the subpopulation to which a data point belongs to is unnecessary; hence, the subpopulations are automatically learned.

The multidimensional GMM involves two types of parameters: the mixture component weights and component means as well as its covariance. For a GMM with K components, the \(k^{{{\text{th}}}}\) component has a mean of \(\overrightarrow {{\mu_{{{k}}} }}\) and a covariance matrix of \({\Sigma }_{{{k}}}\). The mixture component weights are defined as \(\phi_{{{k}}}\) for component \(C_{{{k}}}\). To ensure that the total probability distribution normalizes to 1, \(\mathop \sum \limits_{i = 1}^{K} \phi_{i} = 1\) is applied as constraint. The mathematical expressions of multidimensional GMM can be summarized as Eqs. (3)–(5):

where D is the dimension of the Gaussian distribution (in this work, D = 2 because the helium bubble cluster pixels have two dimensions).

If the component weights are not learned, then they can be considered as the a priori distribution over components such that p(\(x\)) = \(\phi_{{{k}}}\) (where \(x\) is generated by component \(C_{{{k}}}\)). If they are learned, then they are the a posteriori estimates of the component probabilities given by the data.

Parameters, such as \(\phi_{{{k}}}\), \(\overrightarrow {{\mu_{{{k}}} }}\), and \({\Sigma }_{{{k}}}\), may be evaluated using the expectation maximization (EM) algorithm. The EM algorithm is a numerical technique for maximum likelihood estimation and typically used when closed-form expressions for updating model parameters can be calculated. The EM is an iterative algorithm with the property that the maximum likelihood of data strictly increases with subsequent iterations. This guarantees that the local maximum or saddle point is approached.

The EM for GMM consists of two steps: the expectation step (E step) and the maximization step (M step). The main objective of E step is to compute the expectation of the log-likelihood estimation. It uses the current estimated distributions of latent variables based on the parameters inferred from the previous step. For the E step, the probability, \(\hat{\omega }_{{{{ik}}}}\), that \(x_{{{i}}}\) is generated by component \(C_{{{k}}}\) is evaluated using the following equation:

where \(\hat{\omega }_{{{{ik}}}} = P(C_{{{k}}} |x_{{{i}}} ,\mathop{\phi }\limits^{\rightharpoonup} ,\mathop{\mu }\limits^{\rightharpoonup} ,\mathop{\Sigma }\limits^{\rightharpoonup} )\). In the M step, the main function is to calculate the parameters maximizing the expected log-likelihood from the E step. These parameters are then utilized to determine the distribution of latent variables in the next E step. Specifically, along with \(\hat{\omega }_{{{{ik}}}}\) calculated in the E step, \(\phi_{{{k}}}\), \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\rightharpoonup}$}}{{\mu_{{{k}}} }}\), and \({\Sigma }_{{{k}}}\) are renewed by Eqs. (7)–(9), respectively:

The E and M steps are alternately run until the parameters converge. The EM algorithm is employed to iteratively optimize the parameter estimate. Note that the EM requires the a priori selection of the model order. Usually, the user selects a suitable number approximately corresponding to the length of the training expression.

In this work, the pixel value, \({{pixel}}\left( i \right)\), is used as the pixel mesh weight required in the GMM. Therefore, Eqs. (7)–(9) can be transformed into Eqs. (10)–(12), respectively, in this model.

Based on the foregoing statement and formulae, an automatic bubble detection model is developed, and the clustering classification algorithm is formulated. The results yielded by the GMM-based model provide the helium bubble information; that is, \(\overrightarrow {{\mu_{{{k}}} }}\) describes the central points, and the covariance matrix contains the size and shape information of helium bubbles, respectively.

2.3 Assessment criteria

As described above, several parameters have to be determined to formulate the proposed model. The various parameters evidently lead to considerably different detection results and statistics. Therefore, it is necessary to first determine the assessment criteria for identifying the optimal parameters to achieve excellent detection results.

In machine learning, the three important indices that are frequently used for clustering evaluation are recall, precision, and F1 values. The precision index indicates the portion of positive identifications in a classification set that are actually correct. In contrast, the recall index represents the proportion of actual positives that are correctly identified. In the statistical analysis of binary classification, the F1 value (also called F1-score or F1-measure) considers the precision and recall of the test. The F1 value can be interpreted as a weighted average of precision and recall; the best F1 value is 1, whereas the worst score is 0.

The precision and recall values were calculated and monitored during the training and formulation of the proposed model. The deduced F1 value was calculated using these two parameters and utilized as the comprehensive performance evaluation criterion to determine the optimal parameters of the model. The F1, precision, and recall indices are expressed in the following forms:

where P and R represent the precision and recall of the model, respectively, and F1 is the harmonic mean value of precision and recall; \(N_{{{\text{model}}}}\) is the number of helium bubbles recognized by the desired model; \(N_{{{\text{label}}}}\) is the number of helium bubbles manually marked by the TEM analysis experts; and \(N_{{{\text{ml}}}}\) indicates the number of helium bubbles detected by the clustering analysis algorithm (these bubbles are concurrently marked manually by the experts). Precision P and recall R cannot be promoted at the same time; hence, F1 is defined to balance these two assessment criteria.

3 Results and discussion

As described in the previous section, first, the determination of four parameters is necessary: \( {{bg}}_{{{\text{th}}}}\) (the threshold value of the pixel background), \({{neib}}_{{{\text{th}}}}\) (the minimum number of neighboring points of a pixel), \({{cluster}}_{{{\text{th}}}}\) (the minimum number of a cluster), and \( n_{{{\text{dot}}}}\) (the number of pixels of a helium bubble). These parameters vary with a limited number of discrete integer values. The rational values can be conveniently determined by testing the combinations of all values. In this study, eight TEM images and corresponding annotated images were employed for model training to determine the best combination of the four parameters. Based on the generated results, eight candidate models were established and denoted as Models 1–8. For each TEM image, a combination of parameters is given at the highest F1 value, as well as the P and R results, as listed in Table 1.

Second, eight candidate models were formed using a combination of the four parameters after the training process. As listed in Table 2, four TEM images are selected to determine the optimal parameters for the proposed model with the F1 value as the evaluation criterion. The parameters of Model 5 are easily identified as having the best values; hence, they are chosen as candidates for the final model for bubble clustering selection.

Finally, four different TEM images were used to test the performance of the formulated model for bubble clustering. The corresponding performance evaluation results including the four datasets and their mean values are listed in Table 3.

The position of the helium bubble was evaluated under the Gaussian mixture analysis framework, and the coordinate deviation was compared with the manually marked images in this model. The mean bias for describing the deviation is computed using the following:

where \(P_{{\text{l}}}\) and \(P_{{\text{M}}}\) are the central points of helium bubbles manually marked and estimated using the model, respectively. Here, the Manhattan distance is adopted to compute the distance between \(P_{{\text{l}}}\) and \(P_{{\text{M}}}\).

The list in Table 3 indicates that the mean coordinate bias is 3 pixels and the results yielded by the model approximate those marked manually.

For example, Figs. 4–7 show the helium bubble detection results yielded by the formulated model and manual marking. In Fig. 4, the red points represent the helium bubbles detected by the described model, and the blue points represent those marked by the TEM analysis experts. The numbers of red and blue points are expressed as \(n_{{{\text{red}}}}\) and \(n_{{{\text{blue}}}}\), respectively.

As shown in Figs. 4–7, the number of red points exceeds that of the blue points. Virtually all helium bubbles have been recognized by the formulated model. Moreover, some objects similar to the bubbles, but with no manual markings, have also been identified by the established model. The superior recall (i.e., 94%) achieved by the proposed model means that it is capable of automatically locating and marking most of the manually marked helium bubbles.

In contrast to the excellent recall performance, the precision level achieved by the model was relatively low. This may have been caused by two factors. First, some objects with a similar morphology as the bubbles are extremely dark or minuscule to be observed by human sight, but they can be identified by the machine learning method. Second, helium bubbles with irregular shapes may be manually marked as a single bubble; however, they are distinguished as multiple bubbles by the model. Using the proposed model, these two aspects may yield higher counting results compared with those manually marked. The foregoing may also explain the relatively low precision associated with the manual approach. Accordingly, the model established in this work is observed to be extremely sensitive. It can detect bubble-like objects and identify more candidates of helium bubble clusters than the manual marking approach. By considering Fig. 4 as an example, Fig. 8 indicates that the model developed in this work is considerably sensitive and capable of dealing with situations in which helium bubbles may have irregular shapes or small sizes.

4 Summary

This study is the first to employ the DBSCAN clustering method and the GMM model for efficient identification and analysis of helium bubbles in the TEM images of alloys in a high-temperature irradiation environment. In the current practice, considering that the manual counting of bubbles is time-consuming, the ability of the formulated model to identify and count these bubbles automatically considerably saves time. The new method is found to have a higher recall but low precision performance because it can identify objects with irregular shapes and those unnoticed by the naked eye. The nature of automatic identification and the substantial time saved afforded by the designed method highlights new opportunities in the use of machine learning to expedite helium bubble identification and counting.

The results obtained in this study are based exclusively on TEM datasets under the under-focused condition of bright-field TEM micrographs. Nevertheless, the model is also expected to be capable of adapting to the circumstances of the over-focused condition. A more accurate helium bubble counting performance can be derived by considering the results of these two conditions together. Furthermore, the variation in the shape and size of bubbles must also be considered because helium bubbles evolve dynamically and diversely throughout the entire service. Hence, considerable work awaits completion and verification through follow-up research.

References

T. Loussouarn, L. Beck, P. Trocellier et al., Implementation of heavy-ion elastic recoil detection analysis at JANNUS-Saclay for quantitative helium depth profiling. Nucl. Instrum. Methods Phys. Res. Sect. B 360, 9–15 (2015). https://doi.org/10.1016/j.nimb.2015.07.040

M.S. Stal’tsov, I.I. Chernov, B.A. Kalin et al., Development of gas porosity along the ion range in vanadium alloys during sequential helium and hydrogen ion irradiation. Rus Metall. (Metally). 2019, 1161–1166 (2019). https://doi.org/10.1134/S0036029519110119

R. Ramachandran, C. David, P. Magudapathy et al., Study of defect complexes and their evolution with temperature in hydrogen and helium irradiated RAFM steel using positron annihilation spectroscopy. Fusion Eng. Des. 142, 55–62 (2019). https://doi.org/10.1016/j.fusengdes.2019.04.061

C. Dethloff, E. Gaganidze, V.V. Svetukhin et al., Modeling of helium bubble nucleation and growth in neutron irradiated boron doped RAFM steels. J. Nucl. Mater. 426, 287–297 (2012). https://doi.org/10.1016/j.jnucmat.2011.12.025

M. Klimenkov, A. Möslang, E. Materna-Morris, Helium influence on the microstructure and swelling of 9%Cr ferritic steel after neutron irradiation to 163 dpa. J. Nucl. Mater. 453, 54–59 (2014). https://doi.org/10.1016/j.jnucmat.2014.05.001

G. Lei, R. **e, H. Huang et al., The effect of He bubbles on the swelling and hardening of UNS N10003 alloy. J. Alloy. Compd. 746, 153–158 (2018). https://doi.org/10.1016/j.jallcom.2018.02.291

J.A. Knappa, D.M. Follstaedt, S.M. Myers, Hardening by bubbles in He-implanted Ni. J. Appl. Phys. 103, 013518 (2008). https://doi.org/10.1063/1.2831205

Y.G. Li, W.H. Zhou, R.H. Ning et al., A cluster dynamics model for accumulation of helium in tungsten under helium ions and neutron irradiation. Commun. Comput. Phys. 11, 1547–1568 (2012). https://doi.org/10.4208/cicp.030311.090611a

D. Maroudas, B.D. Wirth, Atomic-scale modeling toward enabling models of surface nanostructure formation in plasma-facing materials. Curr. Opin. Chem. Eng. 23, 77–84 (2019). https://doi.org/10.1016/j.coche.2019.03.001

A. Kashinath, P. Wang, J. Majewski et al., Detection of helium bubble formation at fcc-bcc interfaces using neutron reflectometry. J. Appl. Phys. 114, 043505 (2013). https://doi.org/10.1063/1.4813780

R. Coppola, M. Klimenkov, A. Möslang et al., Experimental investigation of high He/dpa microstructural effects in neutron irradiated B-alloyed Eurofer97 steel by means of small angle neutron scattering (SANS) and electron microscopy. Nucl. Mater. Energy. 9, 194–198 (2016). https://doi.org/10.1016/j.nme.2016.09.013

R. Coppola, M. Klimenkov, R. Lindau et al., Radiation damage studies in fusion reactor steels by means of small-angle neutron scattering (SANS). Phys. B Condensed Matter. 55, 407–412 (2018). https://doi.org/10.1016/j.physb.2017.12.040

Q. Cao, X. Ju, L. Guo et al., Helium-implanted CLAM steel and evolutionary behavior of defects investigated by positron-annihilation spectroscopy. Fusion Eng. Des. 89, 1101–1106 (2014). https://doi.org/10.1016/j.fusengdes.2013.11.008

V. Krsjak, J. Degmova, S. Sojak et al., Effects of displacement damage and helium production rates on the nucleation and growth of helium bubbles—Positron annihilation spectroscopy aspects. J. Nucl. Mater. 499, 38–46 (2018). https://doi.org/10.1016/j.jnucmat.2017.11.007

T. Zhu, S. **, P. Zhang et al., Characterization of helium-vacancy complexes in He-ion implanted Fe9Cr by using positron annihilation spectroscopy. J. Nucl. Mater. 505, 69–72 (2018). https://doi.org/10.1016/j.jnucmat.2018.03.048

M. Thompson, P. Kluth, R.P. Doerner et al., Probing helium nano-bubble formation in tungsten with grazing incidence small angle x-ray scattering. Nucl. Fusion 55, 042001 (2015). https://doi.org/10.1088/0029-5515/55/4/042001

J. Gao, L. Bao, H. Huang et al., ERDA, RBS, TEM and SEM characterization of microstructural evolution in helium-implanted Hastelloy N alloy. Nucl. Instrum. Methods Phys. Res. Sect. B 399, 62–68 (2017). https://doi.org/10.1016/j.nimb.2017.03.148

D. Chen, N. Li, D. Yuryev et al., Self-organization of helium precipitates into elongated channels within metal nanolayers. Sci. Adv. 3, eaao2710 (2017). https://doi.org/10.1126/sciadv.aao2710

M.S. Ding, L. Tian, W.Z. Han et al., Nanobubble fragmentation and bubble-free-channel shear localization in helium-irradiated submicron-sized copper. Phys. Rev. Lett. 117, 215501 (2016). https://doi.org/10.1103/PhysRevLett.117.215501

M.S. Ding, J.P. Du, L. Wan et al., Radiation-induced helium nanobubbles enhance ductility in submicron-sized single-crystalline copper. Nano Lett. 16, 4118–4124 (2016). https://doi.org/10.1021/acs.nanolett.6b00864

M. Tunwal, K.F. Mulchrone, P.A. Meere, Image based particle shape analysis toolbox (IPSAT). Comput. Geosci. 135, 104391 (2020). https://doi.org/10.1016/j.cageo.2019.104391

L.Q. Jia, C.Z. Peng, H.M. Liu et al 2011 in A fast randomized circle detection algorithm. 4th international congress on image and signal processing, Shanghai, pp. 820–823 https://doi.org/10.1109/CISP.2011.6100372

J. Kim, J. Kim, S. Choi, et al 2017 in Robust template matching using scale-adaptive deep convolutional features. Asia-Pacific signal and information processing association annual summit and conference (APSIPA ASC), Kuala Lumpur, pp. 708–711 https://doi.org/10.1109/APSIPA.2017.8282124

G. Akbarizadeh, A new statistical-based kurtosis wavelet energy feature for texture recognition of SAR images. IEEE Trans. Geosci. Remote Sens. 50, 4358–4368 (2012). https://doi.org/10.1109/TGRS.2012.2194787

Z. Tirandaz, G. Akbarizadeh, A two-phase algorithm based on kurtosis curvelet energy and unsupervised spectral regression for segmentation of SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sen. 9, 1244–1264 (2015). https://doi.org/10.1109/JSTARS.2015.2492552

Z. Tirandaz, G. Akbarizadeh, H. Kaabi, PolSAR image segmentation based on feature extraction and data compression using weighted neighborhood filter bank and hidden Markov random field-expectation maximization. Measurement 153, 107432 (2020). https://doi.org/10.1016/j.measurement.2019.107432

C.M. Anderson, J. Klein, H. Rajakumar et al., Automated detection of helium bubbles in irradiated X-750. Ultramicroscopy 217, 113068 (2020). https://doi.org/10.1016/j.ultramic.2020.113068

M. Zalpour, G. Akbarizadeh, N. Alaei-Sheini, A new approach for oil tank detection using deep learning features with control false alarm rate in high-resolution satellite imagery. Int. J. Remote Sens. 41, 2239–2262 (2020). https://doi.org/10.1080/01431161.2019.1685720

N. Davari, G. Akbarizadeh, E. Mashhour, Intelligent diagnosis of incipient fault in power distribution lines based on corona detection in UV-Visible videos. IEEE Trans. Power Deliv. 1 (2020). https://doi.org/10.1109/TPWRD.2020.3046161

J. Han, M. Kamber, Data Mining: Concepts and Techniques, 2nd edn. (Morgan Kaufmann Publishers, San Francisco, USA, 2006).

A. B. Chan, Z. S. J. Liang, N (2008)cin Vasconcelos, Privacy preserving crowd monitoring: Counting people without people models or tracking. 2008 IEEE conference on computer vision and pattern recognition (CVPR 2008), Anchorage, AK, USA, pp. 1–7 https://doi.org/10.1109/CVPR.2008.4587569

C. Arteta, V. Lempitsky, J.A. Noble, et al. (2012) Learning to detect cells using non-overlap** extremal regions. Medical image computing and computer-assisted intervention (MICCAI), vol. 15, Springer, pp. 348–356. https://doi.org/10.1007/978-3-642-33415-3_43

O. Barinova, V. Lempitsky, P. Kholi, On detection of multiple object instances using hough transforms. IEEE Trans. Pattern Anal. Mach. Intell. 34, 1773–1784 (2012). https://doi.org/10.1109/TPAMI.2012.79

W. **e, J. Alison Noble, A. Zisserman, Microscopy cell counting and detection with fully convolutional regression networks. Comput. Methods Biomech.. Biomed. Eng. Imaging Vis. 6, 283–292 (2018). https://doi.org/10.1080/21681163.2016.1149104

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Zhong-Hang Wu, Ju-Ju Bai, Di-Da Zhang, Tian-Bao Zhu and Ren-Duo Liu. The first draft of the manuscript was written by Zhong-Hang Wu and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding authors

Additional information

This work was supported by the National Natural Science Foundation of China (Nos. 12005128 and 81830052), Construction Project of Shanghai Key Laboratory of Molecular Imaging (No. 18DZ2260400), and Shanghai Municipal Education Commission (Class II Plateau Disciplinary Construction Program of Medical Technology of SUMHS, 2018–2020).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, ZH., Bai, JJ., Zhang, DD. et al. Statistical analysis of helium bubbles in transmission electron microscopy images based on machine learning method. NUCL SCI TECH 32, 54 (2021). https://doi.org/10.1007/s41365-021-00886-y

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s41365-021-00886-y