Abstract

Currently, single-stage point-based 3D object detection network remains underexplored. Many approaches worked on point cloud space without optimization and failed to capture the relationships among neighboring point sets. In this paper, we propose DCGNN, a novel single-stage 3D object detection network based on density clustering and graph neural networks. DCGNN utilizes density clustering ball query to partition the point cloud space and exploits local and global relationships by graph neural networks. Density clustering ball query optimizes the point cloud space partitioned by the original ball query approach to ensure the key point sets containing more detailed features of objects. Graph neural networks are very suitable for exploiting relationships among points and point sets. Additionally, as a single-stage 3D object detection network, DCGNN achieved fast inference speed. We evaluate our DCGNN on the KITTI dataset. Compared with the state-of-the-art approaches, the proposed DCGNN achieved better balance between detection performance and inference time.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

3D object detection is holding great importance in computer vision with the rapid development of many real-life applications, including autonomous driving[3], augmented reality [12, 14], and etc. LiDAR can capture point cloud data, which is a mainstream form of 3D data representation [7] and widely used in 3D object detection. In contrast to RGB-D images, point clouds can provide accurate depth and adequate geometric information [28], which benefits feature extraction processes in deep-learning-based 3D object detection approaches. However, because of the unordered, irregular, and unstructured characteristics of point clouds [1], effectively leveraging point cloud data for 3D object detection still remains challenging.

To address the above issue, 3D object detection from point cloud is mainly divided into two branches: voxel-based [11, 19, 25, 31] and point-based [2b, which shows that half of the point set (a sphere) is empty in geometry, indicating that those point sets are not optimal.

Graph neural networks (GNNs) [24] are very suitable for processing irregular and unstructured data; more specifically, they can extract relationships among points and point sets. GNNs represent data and their relationships in the form of graphs and utilize neural networks to extract features. Due to the irregular and unstructured nature of the point clouds, we believe that GNNs are more suitable for dealing with point clouds.

In this paper, we propose DCGNN: A Single-Stage 3D Object Detection Network based on Density Clustering and Graph Neural Network. We design a multi-scale hierarchical single-stage 3D object detection network with graph neural networks. Besides, we propose a unique point set optimization approach based on density clustering to better capture local features in grouped point sets.

Our key contributions are summarized as follows:

-

(1)

We propose a novel optimization approach in point cloud partition with density clustering. We utilize density clustering approaches to find the optimal centers of point sets and move the point sets towards the new centers. Performing such movements properly can better fill the point sets with point clouds, thus leading to better local feature extraction.

-

(2)

We design a backbone network with graph neural networks, which is able to capture both local relationships in point sets and global relationships between point sets. We design a local GNN layer and a global GNN layer to extract local and global features of point sets. Feature fusion is also performed between those layers.

-

(3)

We design a novel hierarchical single-stage 3D object detection network. Experiments on KITTI dataset show our proposed network achieves satisfactory results in inference speed and detection performance.

The rest of this paper is organized as follows: the following section discusses the related works. The subsequent section explains our proposed network. “Experiments” illustrates the experiments of our network. The summary and conclusion of the paper are given in “Conclusions”.

Related works

LiDAR-based 3D object detection approaches can be mainly divided into two streams: voxel-based approaches and point-based approaches [5]. There are also projection-based approaches [\({m}_{i}\), we use the original center and point set instead to prevent loss of important detail information in the original point set. The whole process is explained in Algorithm 1 and illustrated in Fig. 3.

In Algorithm 1, we take \(C\) and \(V\) as input, where \(C\) stands for the centers sampled from FPS, and \(V\) stands for the whole point cloud set. The algorithm outputs \({S}_{d}\) and \({C}_{d}\). \({S}_{d}\) stands for the new point sets grouped by DC-ball query, and \({C}_{d}\) stands for the new centers. \({m}_{i}\) is a conditional parameter, which stands for the point number limit of points moving out of the original point set, and it is used to prevent loss of important detail information in the original grou**.

Figure 3a illustrates a single point set by performing original ball query from Fig. 2b, and Fig. 3b illustrates the point set from Fig. 3a with moved center calculated by the proposed DC-ball query algorithm. The point set in Fig. 3b is a new grou** result of the point set in Fig. 3a. The grou** result contains more points by performing DC-ball query, which indicates more detailed features.

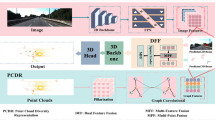

The DCGNN architecture

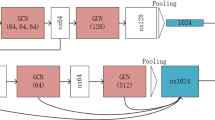

Overall, our proposed DCGNN network (Fig. 1) consists of three similar structures \({S}_{1}\), \({S}_{2}\), \({S}_{3}\) and a final layer \(F\). \({S}_{1}\), \({S}_{2}\) and \({S}_{3}\) are of identical architecture, but with different parameters (e.g. sizes of layers, number of points in FPS). Therefore, we only illustrate the detailed architecture of \({S}_{1}\), and use the dark blue and purple strip to represent \({S}_{2}\) and \({S}_{3}\). The parameters of \({S}_{1}\), \({S}_{2}\) and \({S}_{3}\) are documented in Sect. 4.1.

Grou** with Density Clustering Similar to many point-based networks, we first perform furthest point sampling and grou** to construct the initial point sets. Inspired by 3DSSD, we use F-FPS to ensure more points are sampled into an object by calculating distances with X–Y-Z coordinates and semantic features, as shown in Formula 1,

where \({L}_{d}\left(A,B\right)\) and \({L}_{f}\left(A,B\right)\) represent Euclidean X–Y-Z distance and feature distance, respectively.

In \({S}_{1}\), we perform DC-ball query to optimize the point sets. We also perform multi-scale grou** to capture features of objects in different scales.

After FPS and ball query, the grouped point sets (balls) are created for two scales \({R}_{1}\), \({R}_{2}\) and \({R}_{3}\), and MLP is used to derive local point-wise features.

Local GNN Layer We design a graph neural network to extract local point-wise features in grouped point sets. A graph is a pair \(G=(V, E)\), where \(V\) is a set of vertices and \(E\) is a set of edges. In our case, we construct \(N\) complete graphs for \({R}_{1}\) and \({R}_{2}\) respectively. In jth (\(j\in 1\dots N\)) grouped point set of scale \({R}_{k} (k\in \mathrm{1,2},3)\), graph \({G}_{j}\) is constructed, where \({V}_{j}\) consists of all points in a grouped point set, and \({E}_{j}\) is generated according to Formula 2.

Then, GraphSAGE[8] is used to extract the relationship within the complete graphs, as shown in Formula 3,

where \(\mathcal{N}(i)\) represents the neighbor of point \(i\), \(W\) represents the weight of a linear layer, and \({h}_{i}^{l}\) represents the features of point \(i\) in the lth layer.

Global GNN Layer We design another graph neural network to extract the relationship of features between each grouped point sets. For each grouped point set, a max pooling operation is performed to obtain determinant as vertices in \({V}_{0}\), and we construct KNN graph \({G}_{0}\) in \({V}_{0}\), where \({E}_{0}\) is generated according to Formula 4,

where \({KNN}_{k}\left(u\right)\) represents \(k\) nearest neighbor(s) of \(u\) (when \(k=1\), the neighbor is \(u\) itself).

We perform the same GraphSAGE in Formula 3 to extract the relationship within the KNN graph.

Complete graphs cannot be used to extract global features due to computational complexity, as \(N\) is much bigger than vertex counts in \({V}_{1}\) to \({V}_{n}\).

Feature Fusion After the completeness of graphs to extract local features and the KNN graph to extract global features, each vertex in the KNN graph is divided equally and added into the features of each points in the corresponding grouped point set. Then, a max pooling is performed to obtain the determinant of each grouped point set.

Finally, we concatenate the features of all scales. An MLP is then used to reduce redundancy, and the output of the MLP is sent to the next structure.

Vote Layer After \({S}_{3}\), we add a vote layer to shift the center points queried by FPS to the real center of objects, as in 3DSSD and VoteNet [15]. F-FPS is also used in this layer.

Final Layer We design a layer \(F\) positioned after the vote layer to generate dense predictions for detection head. From parctice, we know that dense predictions are beneficial to detection results. This layer takes the shifted center in the vote layer as input, performs ball query with large radius, and then generates dense prediction using MLPs.

Followed by layer \(F\), the classification scores and bounding boxes are ouput by the detection head. We use a single stage detection head, which perform direct classification and regression, enabling us to train the network in an end-to-end manner.

Loss function

The total loss consists of classification loss, residual loss and shifting loss, as in Formula 5 below:

where \({N}_{c}\) and \({N}_{p}\) are the numbers of total center points and positive center points in objects. In the classification loss \({L}_{c}\), \({s}_{i}\) and \({u}_{i}\) represent the predicted classification score and the center-ness label introduced in 3DSSD, respectively, and we use cross entropy loss for \({L}_{c}\).

The regression loss \({L}_{r}\) consists of distance regression loss \({L}_{dist}\), size regression loss \({L}_{size}\), angle regression loss \({L}_{angle}\), and corner loss \({L}_{corner}\). We use smooth-L1 loss for \({L}_{dist}\) and \({L}_{size}\). \({L}_{angle}\) and \({L}_{corner}\) are calculated by Formula 6 and Formula 7, respectively:

In angle loss, \({d}_{c}^{a}\) and \({d}_{r}^{a}\) are predicted angle class and residual, while \({t}_{c}^{a}\) and \({t}_{r}^{a}\) are their ground truths. In corner loss, \({P}_{m}\) and \({G}_{m}\) are the predicted and ground-truth location of 8 corners of a bounding box.

Shifting loss is guided by the ground-truth centers of object in Vote layer. We use a smooth-L1 loss to calculate the distance between the predicted centers and the ground-truth centers of objects. \({N}_{p}^{*}\) is the number of positive centers from F-FPS.

Experiments

We evaluate the proposed DCGNN on KITTI Object Detection Benchmark [6], which includes 7481 training samples and 7518 testing samples with three levels of difficulties: easy, moderate and hard. The KITTI dataset contains three classes: car, pedestrian, and cyclist. We evaluate our DCGNN on cars because of complicated scene and large quantity of data. More importantly, most existing works evaluate their network on cars. and the proposed DCGNN can be objectively compared with these studies. We divide the training samples into a training set (3712 scenes) and a validation set (3769 scenes) at a ratio about 1:1, the same as other works. We perform ablation study on the 1:1-splitting train-val set. We also divide a 4:1-splitting train-val set to train DCGNN submitted to the KITTI official benchmark. Following PV-RCNN, we utilize the 20% local validation set to obtain an approximate trend to the performance of our trained model submitted to the KITTI official benchmark. We compare the results of DCGNN's KITTI official benchmark with state-of-the-art models. The average precision (AP) is used to compare performance of different models for evaluation and the IoU threshold for car category is 0.7.

Implementation details

Network architecture As shown in Fig. 1, there are three similar structures \({S}_{1}\), \({S}_{2}\) and \({S}_{3}\) in DCGNN.

In \({S}_{1}\), we sample 4,096 points by FPS, and perform our proposed DC-ball query for grou** with radii 0.2, 0.4, 0.8 for each scale. We choose DBSCAN [4] as clustering algorithm with parameter \(eps=0.1\), \(minPts=4\) and \({m}_{i}=4\). We use MLPs with [16, 32], [16, 32], [32, 64] for each scale to extract initial feature. We perform one local GNN layer and one global GNN layer (\(K=3\)) for each of three scales using GraphSAGE [8]. The output channels of the local GNNs in each scale are 32, 32, 32. The output channels of the global GNNs in each scale are identical to the local GNN. Two-layer MLPs with the same channel as input are performed after the GNNs of each scale. Finally, we concatenate the features of three scales, and aggregate them to 64 channels by a one-layer MLP to get the output.

In \({S}_{2}\) and \({S}_{3}\), the numbers of sampled points are 1,024 and 512. We perform F-FPS and D-FPS in the two layers. For \({S}_{2}\), we use 0.4, 0.8, 1.6 as radii. For \({S}_{3}\), we use 1.6, 3.2, 4.8 as radii. The MLPs for initial feature extraction is with [128], [128], [128] and [256], [256], [256] for \({S}_{2}\) and \({S}_{3}\) respectively. In addition, we perform GNN layers the same as \({S}_{1}\) in \({S}_{2}\) and \({S}_{3}\), but with different sizes. The output channel of the local GNNs in \({S}_{2}\) is 128, 128, 128 and 256, 256, 256 for \({S}_{3}\). We also perform two-layer MLPs for each scale of \({S}_{2}\) and \({S}_{3}\). Finally, the aggregation channel is 128 and 256 respectively.

In Vote layer, we sample 256 points using F-FPS and D-FPS. We use 128-channel feature to shift the center. We do not change the features of centers.

In \(F\), we take the shifted centers from Vote layer. There are only two scales in \(F\). The radii of ball query is 4.8, 6.4. The MLPs for feature extraction is with [256, 256, 512] and [256, 512, 1024]. There is no GNN layer in this layer. Finally, the features are aggregated into 512 channels.

Training The network is trained in an end-to-end manner on a Tesla T4 GPU. We use ADAM [Main results We evaluate our model on the 3D detection benchmark of the KITTI test server. The name of the proposed network on the KITTI test server is DCGNN. As shown in Table 1, we compare our results with state-of-the-art single-stage point-based 3D object detection approaches. Note that the APs in Table 1 are of 40 recall points according to the KITTI online benchmark. We selected state-of-the-art single-stage point-based models: 3DSSD [26], Point-GNN [21], and SVGA-Net [

Inference time

3DSSD [26] is the fastest single-stage and point-based network so far to the best of our knowledge. We evaluate the TensorFlow version of 3DSSD provided by the authors and our DCGNN locally using NVIDIA RTX2080Ti GPU with single batch size and compared them with respect to inference time, and the results are shown in Table 6.

From Table 6, we can see that our DCGNN is slightly slower than 3DSSD (2fps). However, our DCGNN is much faster than PointGNN, which is the only open-source GNN-based network so far.

We also evaluate the inference time of DC-ball query and GNNs in a single batch to better justify the fast inference speed of our network, as shown in Table 7.

From Table 7, we can see that although DC-ball query and GNNs are considered time-consuming, our fine-optimized implementation can still achieve fast inference speed.

Conclusions

In this paper, we proposed a single-stage 3D object detection network based on density clustering and graph network (DCGNN). We proposed a novel optimization method of point set division (DC-ball query) and verified its effectiveness through systematic experiments. By constructing local and global graphs and making use of graph neural networks, we captured both the local and global relationships among point clouds. Compared with the state-of-the-art networks, the proposed DCGNN achieves satisfactory results in inference speed and detection performance.