Abstract

Relation extraction is a key task for knowledge graph construction and natural language processing; it aims to extract meaningful relational information between entities from plain text. With the development of deep learning, many neural relation extraction models have been proposed in recent years. This paper presents a survey of neural relation extraction, covering the task description, widely used evaluation datasets, metrics, typical methods, challenges, and recent research progress. We focus mainly on four recent research problems: (1) how to learn semantic representations of the given sentences for the target relation, (2) how to train a neural relation extraction model from insufficient labeled instances, (3) how to extract relations across sentences or within a document, and (4) how to jointly extract relations and their corresponding entities. Finally, we present our conclusions and discuss future research issues.

References

Bollacker K, Evans C, Paritosh P, et al. Freebase: A collaboratively created graph database for structuring human knowledge. In: Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data. New York, 2008

Speer R, Havasi C. Representing general relational knowledge in ConceptNet 5. In: Proceedings of the Eighth International Conference on Language Resources and Evaluation (LREC’12). Istanbul: European Language Resources Association (ELRA), 2012. 3679–3686

Bizer C, Lehmann J, Kobilarov G, et al. DBpedia—A crystallization point for the Web of Data. J Web Semantics, 2009, 7: 154–165

Suchanek F M, Kasneci G, Weikum G. YAGO: A large ontology from Wikipedia and WordNet. J Web Semantics, 2008, 6: 203–217

Dong X, Gabrilovich E, Heitz G, et al. Knowledge vault: A web-scale approach to probabilistic knowledge fusion. In: Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. London, 2014. 601–610

Mitchell T, Cohen W, Hruschka E, et al. Never-ending learning. Commun ACM, 2018, 61: 103–115

Etzioni O, Cafarella M J, Downey D, et al. Web-scale information extraction in knowitall. In: Proceedings of the 13th International Conference on World Wide Web. New York, 2004. 100–110

Banko M, Cafarella M J, Soderland S, et al. Open information extraction from the web. In: Proceedings of the 20th International Joint Conference on Artificial Intelligence. Hyderabad, 2007. 2670–2676

Fader A, Soderland S, Etzioni O. Identifying relations for open information extraction. In: Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing. Edinburgh, 2011. 1535–1545

Hendrickx I, Kim S N, Kozareva Z, et al. Semeval-2010task8: Multi-way classification of semantic relations between pairs of nominals. In: Proceedings of the Workshop on Semantic Evaluations: Recent Achievements and Future Directions. Boulder, 2009. 94–99

Zhang Y, Zhong V, Chen D, et al. Position-aware attention and supervised data improve slot filling. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Copenhagen: Association for Computational Linguistics, 2017. 35–45

Roth D, Yih W t. A linear programming formulation for global inference in natural language tasks. In: Proceedings of the Eighth Conference on Computational Natural Language Learning. Boston, 2004

Riedel S, Yao L, McCallum A. Modeling relations and their mentions without labeled text. In: Proceedings of the Learning and Knowledge Discovery in Databases, European Conference. Barcelona, 2010. 148–163

Jat S, Khandelwal S, Talukdar P P. Improving distantly supervised relation extraction using word and entity based attention. In: Proceedings of the 6th Workshop on Automated Knowledge Base Construction. Long Beach, 2017

Zeng X, Zeng D, He S, et al. Extracting relational facts by an end-to-end neural model with copy mechanism. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics. Melbourne: Association for Computational Linguistics, 2018. 506–514

Zeng X, He S, Zeng D, et al. Learning the extraction order of multiple relational facts in a sentence with reinforcement learning. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 367–377

Zheng S, Wang F, Bao H, et al. Joint extraction of entities and relations based on a novel tagging scheme. In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics. Vancouver: Association for Computational Linguistics, 2017. 1227–1236

Han X, Zhu H, Yu P, et al. FewRel: A large-scale supervised few-shot relation classification dataset with state-of-the-art evaluation. In: Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. Brussels: Association for Computational Linguistics, 2018. 4803–4809

Luan Y, Wadden D, He L, et al. A general framework for information extraction using dynamic span graphs. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Minneapolis: Association for Computational Linguistics, 2019. 3036–3046

Zhao Y, Wan H, Gao J, et al. Improving relation classification by entity pair graph. In: Proceedings of The Eleventh Asian Conference on Machine Learning. Nagoya, 2019. 1156–1171

Soares L B, FitzGerald N, Ling J, et al. Matching the blanks: Distributional similarity for relation learning. In: Proceedings of the 57th Conference of the Association for Computational Linguistics. Florence, 2019. 2895–2905

Eberts M, Ulges A. Span-based joint entity and relation extraction with transformer pre-training. CoRR, 2019

Wei Z, Su J, Wang Y, et al. A novel hierarchical binary tagging framework for joint extraction of entities and relations. CoRR, 2019

Hu L, Zhang L, Shi C, et al. Improving distantly-supervised relation extraction with joint label embedding. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 3821–3829

Zhang Z, Han X, Liu Z, et al. ERNIE: Enhanced language representation with informative entities. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 1441–1451

Kambhatla N. Combining lexical, syntactic, and semantic features with maximum entropy models for information extraction. In: Proceedings of the ACL Interactive Poster and Demonstration Sessions. Barcelona: Association for Computational Linguistics, 2004. 178–181

Zhou G, Su J, Zhang J, et al. Exploring various knowledge in relation extraction. In: Proceedings of the Conference on the 43rd Annual Meeting of the Association for Computational Linguistics. University of_Michigan, The Association for Computer Linguistics, 2005. 427–434

Lodhi H, Saunders C, Shawe-Taylor J, et al. Text classification using string kernels. J Mach Learn Res, 2002. 2: 419–444

Collins M, Duffy N. Convolution kernels for natural language. In: Advances in Neural Information Processing Systems 14. Vancouver: MIT Press, 2001. 625–632

Bunescu R C, Mooney R J. A shortest path dependency kernel for relation extraction. In: Proceedings of the Human Language Technology Conference and Conference on Empirical Methods in Natural Language Processing. Vancouver: The Association for Computational Linguistics, 2005. 724–731

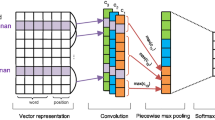

Zeng D, Liu K, Lai S, et al. Relation classification via convolutional deep neural network. In: Proceedings of the Conference on 25th International Conference on Computational Linguistics. Technical Papers. Dublin, 2014. 2335–2344

Mikolov T, Sutskever I, Chen K, et al. Distributed representations of words and phrases and their compositionality. In: Proceedings of the 26th International Conference on Neural Information Processing Systems. Lake Tahoe, 2013. 3111–3119

Pennington J, Socher R, Manning C. Glove: Global vectors for word representation. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP). Doha: Association for Computational Linguistics, 2014. 1532–1543

Joulin A, Grave E, Bojanowski P, et al. Fasttext.zip: Compressing text classification models. CoRR, 2016

Zeng D, Liu K, Chen Y, et al. Distant supervision for relation extraction via piecewise convolutional neural networks. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon: The Association for Computational Linguistics, 2015. 1753–1762

dos Santos C N, **ang B, Zhou B. Classifying relations by ranking with convolutional neural networks. In: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing of the Asian Federation of Natural Language Processing. Bei**g: The Association for Computer Linguistics, 2015. 626–634

Wang L, Cao Z, de Melo G, et al. Relation classification via multilevel attention CNNs. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics. Berlin: Association for Computational Linguistics, 2016. 1298–1307

He Z, Chen W, Li Z, et al. Syntax-aware entity representations for neural relation extraction. Artificial Intelligence, 2019, 275: 602–617

Zhang S, Zheng D, Hu X, et al. Bidirectional long short-term memory networks for relation classification. In: Proceedings of the 29th Pacific Asia Conference on Language, Information and Computation. Shanghai, 2015

Xu Y, Mou L, Li G, et al. Classifying relations via long short term memory networks along shortest dependency paths. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon: The Association for Computational Linguistics, 2015. 1785–1794

Sorokin D, Gurevych I. Context-aware representations for knowledge base relation extraction. In: Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. Copenhagen: Association for Computational Linguistics, 2017. 1784–1789

Yang D, Wang S, Li Z. Ensemble neural relation extraction with adaptive boosting. In: Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence. Stockholm, 2018. 4532–4538

Shen Y, Huang X. Attention-based convolutional neural network for semantic relation extraction. In: Proceedings of the 26th International Conference on Computational Linguistics. Technical Papers. Osaka, 2016. 2526–2536

Zhou P, Shi W, Tian J, et al. Attention-based bidirectional long short-term memory networks for relation classification. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics. Berlin: The Association for Computer Linguistics, 2016

Du J, Han J, Way A, et al. Multi-level structured self-attentions for distantly supervised relation extraction. In: Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. Brussels: Association for Computational Linguistics, 2018. 2216–2225

Han X, Yu P, Liu Z, et al. Hierarchical relation extraction with coarse-to-fine grained attention. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Brussels: Association for Computational Linguistics, 2018. 2236–2245

Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. In: Advances in Neural Information Processing Systems 30. Curran Associates, Inc., 2017. 5998–6008

Radford A, Narasimhan K, Salimans T, et al. Improving language understanding by generative pre-training. Technical Report. Computer Sciences, OpenAI.com, 2018

Devlin J, Chang M W, Lee K, et al. BERT: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Minneapolis: Association for Computational Linguistics, 2019. 4171–4186

Alt C, Hübner M, Hennig L. Fine-tuning pre-trained transformer language models to distantly supervised relation extraction. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 1388–1398

Wang H, Tan M, Yu M, et al. Extracting multiple-relations in one-pass with pre-trained transformers. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence, Italy: Association for Computational Linguistics, 2019 1371–1377

Kipf T N, Welling M. Semi-supervised classification with graph convolutional networks. ar**v:1609.02907

Zhang Y, Qi P, Manning C D. Graph convolution over pruned dependency trees improves relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Brussels: Association for Computational Linguistics, 2018. 2205–2215

Guo Z, Zhang Y, Lu W. Attention guided graph convolutional networks for relation extraction. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 241–251

Zhu H, Lin Y, Liu Z, et al. Graph neural networks with generated parameters for relation extraction. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 1331–1339

Song L, Zhang Y, Gildea D, et al. Leveraging dependency forest for neural medical relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 208–218

Christopoulou F, Miwa M, Ananiadou S. Connecting the dots: Document-level neural relation extraction with edge-oriented graphs. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 4925–4936

Peng N, Poon H, Quirk C, et al. Trans Association Comput Linguistics, 2017, 5: 101–115

Fu T J, Li P H, Ma W Y. GraphRel: Modeling text as relational graphs for joint entity and relation extraction. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 1409–1418

Vashishth S, Joshi R, Prayaga S S, et al. RESIDE: Improving distantly-supervised neural relation extraction using side information. In: Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. Brussels: Association for Computational Linguistics, 2018. 1257–1266

Sabour S, Frosst N, Hinton G E. Dynamic routing between capsules. In: Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems. Long Beach, 2017. 3856–3866

Zhang N, Deng S, Sun Z, et al. Attention-based capsule networks with dynamic routing for relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Brussels: Association for Computational Linguistics, 2018. 986–992

Zhang X, Li P, Jia W, et al. Multi-labeled relation extraction with attentive capsule network. In: Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, the Thirty-First Innovative Applications of Artificial Intelligence Conference, the Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu: AAAI Press, 2019. 7484–7491

Mintz M, Bills S, Snow R, et al. Distant supervision for relation extraction without labeled data. In: Proceedings of the 47th Annual Meeting of the Association for Computational Linguistics and the 4th International Joint Conference on Natural Language Processing of the AFNLP. Singapore: The Association for Computer Linguistics, 2009. 1003–1011

Lin Y, Liu Z, Sun M. Neural relation extraction with multi-lingual attention. In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics. Vancouver: Association for Computational Linguistics, 2017. 34–43

Ji G, Liu K, He S, et al. Distant supervision for relation extraction with sentence-level attention and entity descriptions. In: Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence. San Francisco: AAAI Press, 2017. 3060–3066

Bordes A, Usunier N, Garcia-Duran A, et al. Translating embeddings for modeling multi-relational data. In: Advances in Neural Information Processing Systems 26. Curran Associates, Inc., 2013. 2787–2795

Feng X, Guo J, Qin B, et al. Effective deep memory networks for distant supervised relation extraction. In: Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence. Melbourne, 2017. 4002–4008

Feng J, Huang M, Zhao L, et al. Reinforcement learning for relation classification from noisy data. In: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18), the 30th innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18). New Orleans: AAAI Press, 2018. 5779–5786

Qin P, Xu W, Wang W Y. Robust distant supervision relation extraction via deep reinforcement learning. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics. Melbourne: Association for Computational Linguistics, 2018. 2137–2147

Yang K, He L, Dai X Y, et al. Exploiting noisy data in distant supervision relation classification. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Minneapolis: Association for Computational Linguistics, 2019. 3216–3225

Zeng X, He S, Liu K, et al. Large scaled relation extraction with reinforcement learning. In: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18), the 30th innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18). New Orleans: AAAI Press, 2018. 5658–5665

Qin P, Xu W, Wang W Y. DSGAN: Generative adversarial training for distant supervision relation extraction. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics. Melbourne: Association for Computational Linguistics, 2018. 496–505

Liu T, Wang K, Chang B, et al. A soft-label method for noise-tolerant distantly supervised relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Copenhagen: Association for Computational Linguistics, 2017. 1790–1795

Zeng W, Lin Y, Liu Z, et al. Incorporating relation paths in neural relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Copenhagen: Association for Computational Linguistics, 2017. 1768–1777

Deng X, Sun H. Leveraging 2-hop distant supervision from table entity pairs for relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 410–420

Beltagy I, Lo K, Ammar W. Combining distant and direct supervision for neural relation extraction. In: Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Minneapolis: Association for Computational Linguistics, 2019. 1858–1867

Huang Y, Du J. Self-attention enhanced CNNs and collaborative curriculum learning for distantly supervised relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 389–398

Lin Y, Shen S, Liu Z, et al. Neural relation extraction with selective attention over instances. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics. Berlin: Association for Computational Linguistics, 2016. 2124–2133

Ye Z X, Ling Z H. Distant supervision relation extraction with intra-bag and inter-bag attentions. In: Proceedings of theConference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Minneapolis: Association for Computational Linguistics, 2019. 2810–2819

Jia W, Dai D, **ao X, et al. ARNOR: Attention regularization based noise reduction for distant supervision relation classification. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 1399–1408

Lin H, Yan J, Qu M, et al. Learning dual retrieval module for semi-supervised relation extraction. In: The World Wide Web Conference. San Francisco, 2019. 1073–1083

Wang G, Zhang W, Wang R, et al. Label-free distant supervision for relation extraction via knowledge graph embedding. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Brussels: Association for Computational Linguistics, 2018. 2246–2255

Zhang N, Deng S, Sun Z, et al. Long-tail relation extraction via knowledge graph embeddings and graph convolution networks. In: Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Minneapolis: Association for Computational Linguistics, 2019. 3016–3025

Han X, Liu Z, Sun M. Neural knowledge acquisition via mutual attention between knowledge graph and text. In: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18), the 30th innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18). New Orleans: AAAI Press, 2018. 4832–4839

Li P, Mao K, Yang X, et al. Improving relation extraction with knowledge-attention. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 229–239

Li Z, Ding N, Liu Z, et al. Chinese relation extraction with multi-grained information and external linguistic knowledge. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 4377–4386

Gao T, Han X, Liu Z, et al. Hybrid attention-based prototypical networks for noisy few-shot relation classification. In: Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, the Thirty-First Innovative Applications of Artificial Intelligence Conference, the Ninth AAAI Symposium on Educational Advances in Artificial Intelligence. Honolulu: AAAI Press, 2019. 6407–6414

Ye Z X, Ling Z H. Multi-level matching and aggregation network for few-shot relation classification. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 2872–2881

Ye Q, Liu L, Zhang M, et al. Looking beyond label noise: Shifted label distribution matters in distantly supervised relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing. Hong Kong: Association for Computational Linguistics, 2019. 3839–3848

Gao T, Han X, **e R, et al. Neural snowball for few-shot relation learning. CoRR, 2019

Zelenko D, Aone C, Richardella A. Kernel methods for relation extraction. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Association for Computational Linguistics, 2002. 71–78

Chan Y S, Roth D. Exploiting syntactico-semantic structures for relation extraction. In: Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies. Portland: Association for Computational Linguistics, 2011. 551–560

Yu X, Lam W. Jointly identifying entities and extracting relations in encyclopedia text via a graphical model approach. In: Coling 2010: Posters. Bei**g: Coling 2010 Organizing Committee, 2010. 1399–1407

Li Q, Ji H. Incremental joint extraction of entity mentions and relations. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics. Baltimore: Association for Computational Linguistics, 2014. 402–412

Miwa M, Bansal M. End-to-end relation extraction using LSTMs on sequences and tree structures. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics. Berlin: Association for Computational Linguistics, 2016. 1105–1116

Miwa M, Sasaki Y. Modeling joint entity and relation extraction with table representation. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Doha: Association for Computational Linguistics, 2014. 1858–1869

Gupta P, Schütze H, Andrassy B. Table filling multi-task recurrent neural network for joint entity and relation extraction. In: Proceedings of the 26th International Conference on Computational Linguistics. Technical Papers. Osaka: The COLING 2016 Organizing Committee, 2016. 2537–2547

Zhang M, Zhang Y, Fu G. End-to-end neural relation extraction with global optimization. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Copenhagen: Association for Computational Linguistics, 2017. 1730–1740

Yao Y, Ye D, Li P, et al. DocRED: A large-scale document-level relation extraction dataset. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 764–777

Quirk C, Poon H. Distant supervision for relation extraction beyond the sentence boundary. In: Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics. Valencia: Association for Computational Linguistics, 2017. 1171–1182

Song L, Zhang Y, Wang Z, et al. N-ary relation extraction using graph-state lstm. In: Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. Brussels: Association for Computational Linguistics, 2018. 2226–2235

Sahu S K, Christopoulou F, Miwa M, et al. Inter-sentence relation extraction with document-level graph convolutional neural network. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Florence: Association for Computational Linguistics, 2019. 4309–4316

Akimoto K, Hiraoka T, Sadamasa K, et al. Cross-sentence n-ary relation extraction using lower-arity universal schemas. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 6225–6231

Peters M E, Neumann M, Iyyer M, et al. Deep contextualized word representations. In: Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. New Orleans: Association for Computational Linguistics, 2018. 2227–2237

Radford A, Wu J, Child R, et al. Language models are unsupervised multitask learners. OpenAI Blog, 2018

Petroni F, Rocktäschel T, Riedel S, et al. Language models as knowledge bases? In: Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong: Association for Computational Linguistics, 2019. 2463–2473

Pörner N, Waltinger U, Schütze H. BERT is not a knowledge base (yet): Factual knowledge vs. name-based reasoning in unsupervised QA. CoRR, 2019

Additional information

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61922085 and 61533018), the National Key R&D Program of China (Grant No. 2018YFC0830101), the Key Research Program of the Chinese Academy of Sciences (Grant No. ZDBS-SSW-JSC006), the Beijing Academy of Artificial Intelligence (BAAI2019QN0301), the Open Project of Beijing Key Laboratory of Mental Disorders (2019JSJB06), and the independent research project of the National Laboratory of Pattern Recognition.

About this article

Cite this article

Liu, K. A survey on neural relation extraction. Sci. China Technol. Sci. 63, 1971–1989 (2020). https://doi.org/10.1007/s11431-020-1673-6

DOI: https://doi.org/10.1007/s11431-020-1673-6