Abstract

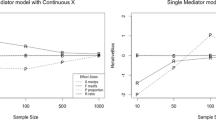

Previous studies have found some puzzling power anomalies related to testing the indirect effect of a mediator. The power for the indirect effect stagnates and even declines as the size of the indirect effect increases. Furthermore, the power for the indirect effect can be much higher than the power for the total effect in a model where there is no direct effect and therefore the indirect effect is of the same magnitude as the total effect. In the presence of a direct effect, the power for the indirect effect is often much higher than the power for the direct effect even when these two effects are of the same magnitude. In this study, the limiting distributions of related statistics and their non-centralities are derived. Computer simulations are conducted to demonstrate their validity. These theoretical results are used to explain the observed anomalies.

References

Fritz, M. S., Taylor, A. B., & MacKinnon, D. P. (2012). Explanation of two anomalous results in statistical mediation analysis. Multivariate Behavioral Research, 47(1), 61–87.

Greene, W. H. (1993). Econometric analysis (2nd ed.). New York: Macmillan Publishing Company.

Imai, K., Keele, L., & Yamamoto, T. (2010). Identification, inference and sensitivity analysis for causal mediation effects. Statistical Science, 25(1), 51–71.

Kenny, D. A., & Judd, C. M. (2014). Power anomalies in testing mediation. Psychological Science, 25(2), 334–339.

Loeys, T., Moerkerke, B., & Vansteelandt, S. (2014). A cautionary note on the power of the test for the indirect effect in mediation analysis. Frontiers in Psychology, 5, 1549.

MacKinnon, D. P. (2008). Introduction to statistical mediation analysis. New York: Taylor & Francis.

MacKinnon, D. P., Lockwood, C. M., Hoffman, J. M., West, S. G., & Sheets, V. (2002). A comparison of methods to test mediation and other intervening variable effects. Psychological Methods, 7(1), 83–104.

O’Rourke, H. P., & MacKinnon, D. P. (2015). When the test of mediation is more powerful than the test of the total effect. Behavior Research Methods, 47(2), 424–442.

Rucker, D. D., Preacher, K. J., Tormala, Z. L., & Petty, R. E. (2011). Mediation analysis in social psychology: Current practices and new recommendations. Social and Personality Psychology Compass, 5(6), 359–371.

Sobel, M. E. (1982). Asymptotic confidence intervals for indirect effects in structural equation models. Sociological Methodology, 13, 290–312.

Tofighi, D., MacKinnon, D. P., & Yoon, M. (2009). Covariances between regression coefficient estimates in a single mediator model. British Journal of Mathematical and Statistical Psychology, 62(3), 457–484.

Zhao, X., Lynch, J. G., & Chen, Q. (2010). Reconsidering Baron and Kenny: Myths and truths about mediation analysis. Journal of Consumer Research, 37(2), 197–206.

Acknowledgements

We thank the editor-in-chief Dr. Irini Moustaki and three anonymous reviewers for their useful comments.

Appendices

Appendix A

Proof of Proposition 1

Since \(S_{XU} = \sum _i(X_i-\bar{X})U_i\), \(S_{XU}\) follows a normal distribution with mean 0 and variance \(\sigma ^2_US_{XX}\). According to Eq. (3), \({\hat{a}}\) is unbiased since \(E({\hat{a}})=a\). The variance of \({\hat{a}}\) is equal to \(n^{-1}\cdot \sigma ^2_U/(n^{-1}S_{XX})\) and converges to 0 as \(n\rightarrow \infty \). That is, \({\hat{a}}\) is a consistent estimator of \(a\).

A similar argument shows that \({\hat{c}}\) is unbiased for \(ab+c'\). From Eq. (4), the variance of \({\hat{c}}\) is equal to \(n^{-1}(b^2\sigma ^2_U+\sigma ^2_{V'})/(n^{-1}S_{XX})\) and converges to 0 as \(n\rightarrow \infty \). That is, \({\hat{c}}\) is a consistent estimator of \(ab+c'\).
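The two variance expressions above can be checked numerically. The following is a minimal Monte Carlo sketch, not part of the original proof. It assumes the single-mediator model \(M_i = aX_i + U_i\), \(Y_i = c'X_i + bM_i + V'_i\) with zero intercepts, fixed \(X_i\), and independent normal errors; the parameter values, the design for \(X\), and the function names are hypothetical choices made only for illustration.

```python
import random
import statistics


def ols_slope(x, y):
    """Slope from regressing y on x with an intercept: S_xy / S_xx."""
    x_bar = sum(x) / len(x)
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * yi for xi, yi in zip(x, y))
    return s_xy / s_xx


def simulate_a_hat_c_hat(x, a, b, c_prime, sigma_u, sigma_v, reps, seed=0):
    """Draw `reps` data sets with X held fixed; return lists of a-hat and c-hat."""
    rng = random.Random(seed)
    a_hats, c_hats = [], []
    for _ in range(reps):
        # Assumed model: M = a*X + U and Y = c'*X + b*M + V'.
        m = [a * xi + rng.gauss(0.0, sigma_u) for xi in x]
        y = [c_prime * xi + b * mi + rng.gauss(0.0, sigma_v)
             for xi, mi in zip(x, m)]
        a_hats.append(ols_slope(x, m))  # a-hat: regress M on X
        c_hats.append(ols_slope(x, y))  # c-hat: regress Y on X only
    return a_hats, c_hats


# Hypothetical design and parameter values, chosen only for illustration.
x = [i / 10 for i in range(50)]
a, b, c_prime, sigma_u, sigma_v = 0.3, 0.5, 0.2, 1.0, 1.0
x_bar = sum(x) / len(x)
s_xx = sum((xi - x_bar) ** 2 for xi in x)

a_hats, c_hats = simulate_a_hat_c_hat(
    x, a, b, c_prime, sigma_u, sigma_v, reps=4000
)

print("mean a-hat:", statistics.fmean(a_hats), "target:", a)
print("var  a-hat:", statistics.pvariance(a_hats),
      "theory:", sigma_u ** 2 / s_xx)
print("mean c-hat:", statistics.fmean(c_hats), "target:", a * b + c_prime)
print("var  c-hat:", statistics.pvariance(c_hats),
      "theory:", (b ** 2 * sigma_u ** 2 + sigma_v ** 2) / s_xx)
```

Under these assumptions the empirical means should sit close to \(a\) and \(ab+c'\), and the empirical variances close to \(\sigma ^2_U/S_{XX}\) and \((b^2\sigma ^2_U+\sigma ^2_{V'})/S_{XX}\), up to Monte Carlo error.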

To show the unbiasedness of \({\hat{b}}\) and \({\hat{c}}'\), it suffices to show that the expectation of the second term in (5) is \((0, 0)^t\). This is true because

Now we prove the consistency of \({\hat{b}}\). The fact that \(S_{XU}\) follows a normal distribution with mean 0 and variance \(\sigma ^2_US_{XX}\) implies \(n^{-1}S_{XU} = O_p(n^{-1/2})\). Similarly, \(n^{-1}S_{XV'} = O_p(n^{-1/2})\). The second term of expression (5) can be rewritten as

Because

it suffices to show that \(n^{-2}A\) and \(n^{-2}B\) converge to 0 in probability. Since the products \(U_iV'_i\) are independent and identically distributed with mean 0 and finite variance, \(n^{-1/2}\sum _{i=1}^n U_iV'_i= O_p(1)\). Hence,

We have

This shows that \({\hat{b}}\) is consistent for \(b\). In addition,

So \({\hat{c}}'\) is consistent for \(c'\).
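The consistency of \({\hat{b}}\) and \({\hat{c}}'\) can also be illustrated by simulation. The sketch below is an illustration under assumptions rather than the argument of the proof: it uses the same assumed model \(M_i = aX_i + U_i\), \(Y_i = c'X_i + bM_i + V'_i\) with unit error variances, a hypothetical balanced design for \(X\), and hypothetical parameter values, and it tracks the root mean squared error of both estimators as \(n\) grows.

```python
import math
import random


def fit_b_and_c_prime(x, m, y):
    """OLS of y on (1, x, m); returns (b_hat, c_prime_hat) from centered sums."""
    n = len(x)
    xb, mb, yb = sum(x) / n, sum(m) / n, sum(y) / n
    s_xx = sum((xi - xb) ** 2 for xi in x)
    s_mm = sum((mi - mb) ** 2 for mi in m)
    s_xm = sum((xi - xb) * (mi - mb) for xi, mi in zip(x, m))
    s_xy = sum((xi - xb) * (yi - yb) for xi, yi in zip(x, y))
    s_my = sum((mi - mb) * (yi - yb) for mi, yi in zip(m, y))
    # Solve the 2x2 normal equations by Cramer's rule.
    det = s_xx * s_mm - s_xm ** 2
    b_hat = (s_xx * s_my - s_xm * s_xy) / det
    c_prime_hat = (s_mm * s_xy - s_xm * s_my) / det
    return b_hat, c_prime_hat


def rmse_at_n(n, a, b, c_prime, reps, rng):
    """Monte Carlo RMSE of b-hat and c'-hat at sample size n."""
    # Hypothetical fixed design: X cycles through -2, -1, 0, 1, 2.
    x = [float(i % 5 - 2) for i in range(n)]
    sq_b = sq_c = 0.0
    for _ in range(reps):
        m = [a * xi + rng.gauss(0.0, 1.0) for xi in x]
        y = [c_prime * xi + b * mi + rng.gauss(0.0, 1.0)
             for xi, mi in zip(x, m)]
        b_hat, c_prime_hat = fit_b_and_c_prime(x, m, y)
        sq_b += (b_hat - b) ** 2
        sq_c += (c_prime_hat - c_prime) ** 2
    return math.sqrt(sq_b / reps), math.sqrt(sq_c / reps)


rng = random.Random(1)
results = {
    n: rmse_at_n(n, a=0.3, b=0.5, c_prime=0.2, reps=300, rng=rng)
    for n in (25, 100, 400)
}
for n, (rmse_b, rmse_c) in results.items():
    print(f"n={n:4d}  RMSE(b-hat)={rmse_b:.3f}  RMSE(c'-hat)={rmse_c:.3f}")
```

If the estimators are consistent, both RMSE columns should shrink as \(n\) increases, roughly halving each time \(n\) is quadrupled.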

Appendix B

Proof of Proposition 2

The normality of \({\hat{a}}\) and \({\hat{c}}\) is obvious. Their variances and the covariance between them are

and

This completes the proof of part 1.
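As a worked check of part 1, the second moments can be written out from the expressions used in Appendix A. This is a sketch under assumptions: it takes Eqs. (3) and (4) to have the form \({\hat{a}} = a + S_{XU}/S_{XX}\) and \({\hat{c}} = ab+c' + (bS_{XU}+S_{XV'})/S_{XX}\), and it assumes that \(U_i\) and \(V'_i\) are independent, so that \(S_{XU}\) and \(S_{XV'}\) are independent with variances \(\sigma ^2_US_{XX}\) and \(\sigma ^2_{V'}S_{XX}\). Then

\[
\mathrm{Var}({\hat{a}}) = \frac{\sigma ^2_U}{S_{XX}}, \qquad
\mathrm{Var}({\hat{c}}) = \frac{b^2\sigma ^2_U+\sigma ^2_{V'}}{S_{XX}}, \qquad
\mathrm{Cov}({\hat{a}}, {\hat{c}}) = \frac{\mathrm{Cov}(S_{XU},\, bS_{XU}+S_{XV'})}{S^2_{XX}} = \frac{b\,\sigma ^2_U}{S_{XX}}.
\]

Writing \(s^2_X = n^{-1}S_{XX}\), the corresponding quantities for \({\sqrt{n}}({\hat{a}}-a)\) and \({\sqrt{n}}({\hat{c}}-(ab+c'))\) are \(\sigma ^2_U/s^2_X\), \((b^2\sigma ^2_U+\sigma ^2_{V'})/s^2_X\), and \(b\sigma ^2_U/s^2_X\). The first two agree with the variances stated in Appendix A.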

In the proof of Proposition 1, we have seen that

From expression (5), we have

Furthermore,

Since \(n^{-1/2}S_{UV'}\) converges to \(N(0, \sigma ^2_U\sigma ^2_{V'})\) in distribution and \(n^{-1/2}S_{XV'}\) follows \(N(0, s^2_X\sigma ^2_{V'})\), we have

and

Combining these results and expression (6) gives the result of part 2 by repeatedly using Slutsky’s theorem.

To prove part 3, we first note that

and

Using expression (9) and Slutsky’s theorem, both Cov\([{\sqrt{n}}({\hat{a}}-a), {\sqrt{n}}({\hat{b}}-b)]\) and Cov\([{\sqrt{n}}({\hat{a}}-a), {\sqrt{n}}({\hat{c}}'-c')]\) converge to 0 in probability.

Similarly, because of (10) and (11),

and

The results for Cov\([{\sqrt{n}}({\hat{c}} -(ab+c')), {\sqrt{n}}({\hat{b}}-b)]\) and Cov\([{\sqrt{n}}({\hat{c}} -(ab+c')), {\sqrt{n}}({\hat{c}}'-c')]\) can be proved in a similar manner.
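The vanishing covariances in part 3 can be examined by simulation. The following is a hedged Monte Carlo sketch, not the paper's own simulation study: it assumes the model \(M_i = aX_i + U_i\), \(Y_i = c'X_i + bM_i + V'_i\) with independent standard normal errors, and the design, parameter values, and function names are hypothetical. Because correlation is scale invariant, the correlation between \({\hat{a}}\) and \({\hat{b}}\) equals that between \({\sqrt{n}}({\hat{a}}-a)\) and \({\sqrt{n}}({\hat{b}}-b)\).

```python
import math
import random


def centered_sum(u, v):
    """Sum of cross-products of u and v about their means (S_uv)."""
    u_bar, v_bar = sum(u) / len(u), sum(v) / len(v)
    return sum((ui - u_bar) * (vi - v_bar) for ui, vi in zip(u, v))


def mediation_estimates(x, m, y):
    """Return (a_hat, b_hat, c_prime_hat) from the two OLS regressions."""
    s_xx, s_mm = centered_sum(x, x), centered_sum(m, m)
    s_xm = centered_sum(x, m)
    s_xy, s_my = centered_sum(x, y), centered_sum(m, y)
    det = s_xx * s_mm - s_xm ** 2
    a_hat = s_xm / s_xx                              # M regressed on X
    b_hat = (s_xx * s_my - s_xm * s_xy) / det        # Y regressed on X and M
    c_prime_hat = (s_mm * s_xy - s_xm * s_my) / det
    return a_hat, b_hat, c_prime_hat


def correlation(u, v):
    """Pearson correlation between two equal-length lists."""
    return centered_sum(u, v) / math.sqrt(
        centered_sum(u, u) * centered_sum(v, v)
    )


# Hypothetical settings, chosen only for illustration.
rng = random.Random(2)
n, reps = 50, 3000
a, b, c_prime = 0.3, 0.5, 0.2
x = [float(i % 5 - 2) for i in range(n)]  # fixed design

a_hats, b_hats, c_prime_hats = [], [], []
for _ in range(reps):
    m = [a * xi + rng.gauss(0.0, 1.0) for xi in x]
    y = [c_prime * xi + b * mi + rng.gauss(0.0, 1.0) for xi, mi in zip(x, m)]
    a_hat, b_hat, c_prime_hat = mediation_estimates(x, m, y)
    a_hats.append(a_hat)
    b_hats.append(b_hat)
    c_prime_hats.append(c_prime_hat)

corr_ab = correlation(a_hats, b_hats)
corr_ac = correlation(a_hats, c_prime_hats)
print("corr(a-hat, b-hat): ", corr_ab)
print("corr(a-hat, c'-hat):", corr_ac)
```

Under these assumptions both printed correlations should be close to 0, within Monte Carlo error of about \(1/\sqrt{3000}\).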

Cite this article

Wang, K. Understanding Power Anomalies in Mediation Analysis. Psychometrika 83, 387–406 (2018). https://doi.org/10.1007/s11336-017-9598-1

DOI: https://doi.org/10.1007/s11336-017-9598-1