Abstract

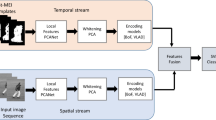

In this paper, a simple yet efficient activity recognition method for first-person video is introduced. The proposed method is well suited to representing high-dimensional features such as those extracted from convolutional neural networks (CNNs). The per-frame (per-segment) features are treated as a set of time series, and inter- and intra-time-series relations are used to build the video descriptor. To find the inter-time-series relations, the series are grouped and the linear correlation between each pair of groups is calculated. These relations can represent the scene dynamics and local motions. The introduced grouping strategy considerably reduces the computational cost. Furthermore, we split the series in the temporal direction in order to preserve long-term motions and better focus on each local time window. To extract cyclic motion patterns, which can be considered primary components of various activities, intra-time-series correlations are exploited. The representation yields highly discriminative features that can be classified linearly. The experiments confirm that our method outperforms state-of-the-art methods in recognizing first-person activities on three challenging first-person datasets.
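The descriptor outlined in the abstract can be sketched roughly as below. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the grouping rule (averaging contiguous feature dimensions), the number of groups and temporal windows, and the autocorrelation lags used for the intra-time-series part are all hypothetical choices, and the function names are invented for the example.

```python
import numpy as np


def group_series(features: np.ndarray, num_groups: int) -> np.ndarray:
    """Reduce a (T, D) per-frame feature matrix to (T, num_groups) series.

    Assumption: each group is the mean of a contiguous block of feature
    dimensions; the paper's actual grouping rule may differ.
    """
    _, d = features.shape
    blocks = np.array_split(np.arange(d), num_groups)
    return np.stack([features[:, idx].mean(axis=1) for idx in blocks], axis=1)


def inter_correlation(groups: np.ndarray) -> np.ndarray:
    """Pearson correlation between every pair of group series (upper triangle)."""
    corr = np.corrcoef(groups, rowvar=False)  # (G, G) correlation matrix
    corr = np.nan_to_num(corr)  # constant series yield NaN; treat them as 0
    iu = np.triu_indices(corr.shape[0], k=1)  # each unordered pair once
    return corr[iu]


def intra_correlation(groups: np.ndarray, lags) -> np.ndarray:
    """Autocorrelation of each group series at the given positive lags.

    Peaks at some lag hint at cyclic motion with that period.
    """
    centered = groups - groups.mean(axis=0)
    energy = (centered ** 2).sum(axis=0)
    energy[energy == 0] = 1.0  # avoid division by zero for constant series
    feats = []
    for lag in lags:
        num = (centered[:-lag] * centered[lag:]).sum(axis=0)
        feats.append(num / energy)
    return np.concatenate(feats)


def video_descriptor(features, num_groups=8, num_windows=2, lags=(1, 2, 4)):
    """Concatenate inter- and intra-series correlations over temporal windows."""
    groups = group_series(features, num_groups)
    parts = []
    # Split along time so each local window is described separately.
    for window in np.array_split(groups, num_windows, axis=0):
        parts.append(inter_correlation(window))
        parts.append(intra_correlation(window, lags))
    return np.concatenate(parts)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = rng.standard_normal((40, 4096))  # stand-in for 40 frames of CNN features
    desc = video_descriptor(frames)
    print(desc.shape)  # (104,)
```

With 8 groups, 2 windows and 3 lags, each window contributes 28 pairwise correlations plus 24 autocorrelations, so the descriptor has 104 values; it could then be fed to any linear classifier. The grouping step is what keeps this cheap: correlating all 4096 raw dimensions pairwise would produce about 8.4 million values per window instead of 28.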

Cite this article

Kahani, R., Talebpour, A. & Mahmoudi-Aznaveh, A. A correlation based feature representation for first-person activity recognition. Multimed Tools Appl 78, 21673–21694 (2019). https://doi.org/10.1007/s11042-019-7429-3

DOI: https://doi.org/10.1007/s11042-019-7429-3