Abstract

Although recent studies have shown that a Bayesian network classifier (BNC) that maximizes the classification accuracy (i.e., minimizes the 0/1 loss function) is a powerful tool in both knowledge representation and classification, this classifier: (1) focuses on the majority class and, therefore, misclassifies minority classes; (2) is usually uninformative about the distribution of misclassifications; and (3) is insensitive to error severity (making no distinction between misclassification types). In this study, we propose to learn the structure of a BNC using an information measure (IM) that jointly maximizes the classification accuracy and information, motivate this measure theoretically, and evaluate it compared with six common measures using various datasets. Using synthesized confusion matrices, twenty-three artificial datasets, seventeen UCI datasets, and different performance measures, we show that an IM-based BNC is superior to BNCs learned using the other measures—especially for ordinal classification (for which accounting for the error severity is important) and/or imbalanced problems (which are most real-life classification problems)—and that it does not fall behind state-of-the-art classifiers with respect to accuracy and amount of information provided. To further demonstrate its ability, we tested the IM-based BNC in predicting the severity of motorcycle accidents of young drivers and the disease state of ALS patients—two class-imbalance ordinal classification problems—and show that the IM-based BNC is accurate also for the minority classes (fatal accidents and severe patients) and not only for the majority class (mild accidents and mild patients) as are other classifiers, providing more informative and practical classification results. Based on the many experiments we report on here, we expect these advantages to exist for other problems in which both accuracy and information should be maximized, the data is imbalanced, and/or the problem is ordinal, whether the classifier is a BNC or not. Our code, datasets, and results are publicly available http://www.ee.bgu.ac.il/~boaz/software.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and related work

Classifiers, e.g., the neural network (NN), random forest (RF), and support vector machine (SVM), excel in prediction but not in knowledge representation, which is needed in problems for which key factor identification is sought, such as in an attempt to understand possible causes of accidents, a disease, or a machine/process fault. The Bayesian network (BN) excels in knowledge representation, which makes it ideal to identify key factors, but it is not considered a supreme classifier. To achieve high accuracy (ACC), learning the structure of a BN classifier (BNC) should maximize a (discriminative) score that is specific to classification and not a generative one based on the likelihood function that may fit a general BN structure, but not necessarily that of a BNC structure. Indeed, when a BNC was learned to minimize the 0/1 loss function, it showed superiority to BNCs learned using marginal and class-conditional likelihood-based scores and even to state-of-the-art classifiers like NN and SVM (Kelner and Lerner 2012).

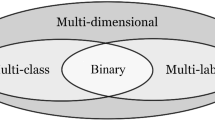

However, by maximizing accuracy (minimizing the 0/1 loss function) in learning its structure, the BNC—similar to other machine learning classifiers—cannot account for the error distribution and, thus, is not informative enough about the classification result and the contribution of each class to the error (Provost et al. 1998; García et al. 2010), and it may also be sub-optimal (Ranawana and Palade 2006). Other discriminative measures used in learning a classifier, such as the area under curve (AUC), suffer from the same shortcoming, because they all relate to ACC. Moreover, in most cases, these measures only suit binary classification problems. Also, it may explain why other studies (García et al. 2009) suggested measures such as the consensus measure of accuracy.

On the other hand, measures that maximize information and account for error distribution, e.g., mutual information (MI) (Cover and Thomas 2012), the Matthew correlation coefficient (MCC) (Baldi et al. 2000), and the confusion entropy (CEN) (Wei et al. 2010) usually are not accurate enough. Labatut and Cherifi (2011) claimed that most of the non-accuracy measures were initially developed for other purposes than to compare/evaluate classifiers (e.g., to measure the association between two random variables, the alignment between two raters, or the similarity between two sets). Therefore, they may lead to confusing terminology or even to wrong interpretation, or they may be noisy and ad hoc for a particular problem.

A second challenge for a BNC, as well as for all other machine-learning classifiers, is that for imbalanced data, they usually predict all (or almost all, depending on the imbalance level) samples of the minority classes as of the majority class. These classifiers show high accuracy, which is in the order of the prior probability of the majority class, since they classify all samples to this class, but at the same time, they may misclassify all samples of the minority classes. Class imbalance can traditionally be tackled using different approaches, e.g., random sampling—upsampling the minority class(es) or downsampling the majority class (Chawla 2005; Provost 2000). However, these two sampling methods result in over-fitting and domain deformation or loss of data, respectively. In addition, tackling imbalance by random downsampling or upsampling, or applying different costs to different misclassifications provides an optimistic ACC estimate, and thus is not recommended (Provost 2000). Also other accuracy-driven measures, e.g., precision, sensitivity, and specificity lead to sub-optimal solutions in the presence of class imbalance (Ranawana and Palade 2006). More advanced methods to tackle class imbalance include feature selection (Wasikowski and Chen 2010); sampling subsets of the classes (Liu et al. 2009); combination of down- and upsampling using e.g., the synthetic minority over-sampling technique (SMOTE) (Chawla et al. 2002); combination of down–upsampling with an ensemble of classifiers (Galar et al. 2012) or with feature selection (Lerner et al. 2007); cost-sensitive learning (Domingos 1999); measuring the balanced accuracy (over all classes) (Brodersen et al. 2010) or its geometric mean (García et al. 2010); and hierarchical decomposition of the classification task, where each hierarchy level is designed to tackle a simpler problem that is represented by classes that are approximately balanced (Lerner et al. 2007). Although probably never tested, classifiers—BNCs and others—learned using information measures such as MI, MCC, and CEN should be less affected by class imbalance data but at the same time also less accurate.

A third challenge is that 0/1 loss-function classifiers do not account differently for different error severities, as they count all misclassifications the same, both for performance evaluation and in learning. However, when the class (target) variable is ordinal, exploiting the ordinal nature of this variable may facilitate learning the classifier and make it more accurate. Considering an ordinal target variable Y, taking one of M values, such that \(V_1< \cdots <V_M\), a learning algorithm can take into account the natural ordering of this variable to induce a classifier, which harnesses this extra information to improve its accuracy. One such classifier is the cumulative probability tree (Frank and Hall 2001), for which Y is transformed into \(M-1\) binary variables such that the ith binary variable represents the test \(Y>V_i\). The model then comprises \(M-1\) tree classifiers, where the ith tree is trained to output \(P(Y>V_i)\). Another ordinal classifier is the cumulative link model (CLM) (Agresti 2011) that is an extension of the generalized linear model (GLM) for ordinal classification. A third ordinal classifier is the ordinal decision tree, which generalizes the classification and regression tree (CART) (Breiman et al. 1984) to ordinal target variables by considering splitting functions based on ordinal impurity functions (Piccareta 2008), which are specific implementations of the generalized Gini impurity function for a node. Principally—although we are not aware of any such study—the mean absolute error, MAE, (Hyndman and Koehler 2006), which sometimes is used to evaluate the error between a prediction and the true value, may also be used to augment learning an ordinal classifier. While such a measure can capture the ordinal information in a problem and potentially penalize different errors differently as we desired, it is not informative regarding the error distribution and is still sensitive to class imbalance.

To motivate this study further, let’s consider two examples. The first is prediction of the severity of young-driver (YD) motorcycle accidents (MAs). Road injuries are the leading cause of death among YDs (ages 18–24) (Toledo et al. 2012); YDs make up 9–13% of the population, but their percentage in driver fatalities is 18–30% (OECD 2006). Besides the tragic human cost, a fatal accident costs (OECD 2006) around $1.5M, where in the US alone, the cost of YD road accidents in 2002 was $40 billion. MAs are particularly deadly, and luckily fatal MAs are only \(\sim 1\%\) of all accidents, whereas severe and minor accidents are around 12% and 87% of the accidents, respectively. However, experiments show that MA classifiers tend to focus on the majority class of minor accidents at the expense of the minority classes of severe and fatal accidents (Halbersberg and Lerner 2019). In addition they are uninformative about their error distribution and are insensitive to error severity (making, e.g., no distinction between misclassification of fatal accidents as severe or minor although the former is less harsh than the latter). Road-safety experts wish their MA classifier to not only maximize accuracy, but also to be informative about its errors, to be as indifferent as possible to data imbalance between minor and fatal accidents, and to penalize misclassifications of fatal accidents as severe and as minor differently.

The second example is prediction of the disease state of an ALS patient. Amyotrophic lateral sclerosis (ALS) is a devastating neurodegenerative illness of the human motor system with an unknown pathogenesis (Kiernan et al. 2011), which is still not visibly affected by the therapies available today, and from which 50% of patients die within three to five years of onset, and about 20% survive between five to ten years (Mitchell and Borasio 2007; Kiernan et al. 2011). The ALS functional rating scale (ALSFRS) is a widely accepted metric in the ALS medical community for the evaluation of ALS-related disability and progression (Brooks et al. 1996), with values between 0 for no functionality and 4 for full functionality for ten ALSFRS items describing physical functionalities in, e.g., breathing, speaking, and walking. By considering the ALSFRS as the target (class label), we may define ALS disease state prediction as an ordinal problem. With respect to the relative frequencies of ALSFRS values, which typically may vary from around 1% for ALSFRS of 0 to 42% and 35% for values of 3 and 4, respectively, disease state prediction also becomes a class imbalance problem. ALS patients, along with their doctors and carers, wish for disease state prediction to be very accurate (Gordon and Lerner 2019) but at the same time informative, to not be fooled by the imbalance among disease states, and to consider mild misclassification less harshly than severe misclassification.

In this study, we propose to learn a BNC, which leverages knowledge representation, using measures replacing the 0/1 loss function and trading accuracy and information. We are interested in learning the BNC using a measure that maximizes both accuracy and information, considers the error distribution, admits class imbalance, and accounts for error severity (which is significant only for ordinal problems). First, we consider existing measures, such as MI, MCC, and CEN, that all use the entire confusion matrix and not just its diagonal (as ACC) and, therefore, have the potential to meet at least some of our concerns. In addition, we evaluate the MAE, which naturally accounts for error severity. Second, since none of these measures accounts for all concerns, we propose next a novel information measure (IM), trading accuracy and information, that accounts for all of them. Third, we extend this measure further, adding to it a term that trades off accuracy and IM, giving the measure an additional degree of flexibility. Then we motivate the proposed measures and thoroughly evaluate them, comparing them with the existing measures theoretically and using several performance measures (which are the same learning measures), synthesized confusion matrices, artificial datasets, UCI ordinal datasets, and three real ordinal problems. We show the advantages of the IM-based BNC compared with BNCs that are learned using alternative measures and other state-of-the-art classifiers with respect to maximization of accuracy and information in ordinal class-imbalance problems. These advantages are manifested here for many databases and several real-world problems, but we believe they hold true for other problems (e.g., ranking problems) having the same requirements, and for classifiers other than the BNC.

In summary, our contribution is that: (1) We propose to utilize the BNC using a measure replacing the 0/1 loss function to jointly maximize accuracy and information, consider the error distribution, admit class imbalance, and account for error severity in tackling class-imbalance ordinal classification problems; (2) Since our theoretical and empirical evaluation of existing measures showed that none of the existing measures accounts for all these concerns, we suggest a novel information measure (IM) that has all the above desired properties; (3) We motivate the proposed measure and thoroughly evaluate it theoretically in comparison with the existing measures and empirically using several performance measures, synthesized confusion matrices, artificial datasets, UCI ordinal datasets, and three real ordinal problems; and (4) We demonstrate the advantages of the IM-based BNC compared with BNCs that are learned using existing measures and with other state-of-the-art classifiers (e.g., NN, SVM, BNC, and RF) with respect to maximization of accuracy and information in ordinal class-imbalance problems. We manifested these advantages using many databases and several real-world problems, and we believe these hold true for other problems (e.g., ranking problems) having the same requirements, and for classifiers other than the BNC.

The rest of this paper is organized as follows. In Sects. 2 and 3, we review the BNC and candidate measures for learning its structure, respectively. In Sect. 4, we propose new measures for learning a BNC and demonstrate how to control their values to trade learning among the conflicting requirements of accuracy, information, and error severity. In Sect. 5, we experimentally evaluate our information measures comparing them with existing measures using synthesized confusion matrices that pose different classification scenarios and challenges. In Sect. 6, we expand our evaluation and compare empirically BNCs learned based on our (as well as other) measures with state-of-the-art classifiers using databases representing artificial and real-world problems. Finally in Sect. 7, we summarize the study and draw important conclusions.

2 Bayesian network classifiers

The BN compactly represents the joint probability distribution P over a set of variables \(X=\{X_1, \ldots ,X_n\}\), each, in the discrete case, having a finite set of mutually exclusive states. It consists of a network structure G and a set of parameters \(\theta \), where \(G=(V,E)\) is a directed acyclic graph in which the nodes V in G are in one-to-one correspondence with the variables in X, and the edges E in G encode a set of conditional independence assertions about variables in X. \(\theta \) consists of local probability distributions, each for each variable \(X_i\) given its parents \(PA(X_i)\) in G. Given the network, the joint probability distribution over X comprises the local distributions as (Heckerman 1998):

Learning the structure of the BN from a dataset D is NP hard (Cooper and Herskovits 1992), and thus is usually performed heuristically and sub-optimally using, e.g., the search and score (S&S) approach by which the structure that maximizes a score function, which measures the fitness of the structure to the data, is selected. One such score (measure) is the a posteriori probability of the network given the data, P(G|D) (or the marginal likelihood, P(D|G), for equally probable structures) (Cooper and Herskovits 1992), and another measure is based on the minimum description length principle (Lam and Bacchus 1994), penalizing model complexity, where both scores are asymptotically equivalent and correct. However, these scores, similar to other log likelihood (LL) or information-based scores, either likelihood-equivalent or not (Heckerman et al. 1995), cannot optimize a classifier (Friedman et al. 1997) because they are not directed in maximizing the classification accuracy. Instead, it was suggested to learn a BNC by maximizing the conditional log likelihood (CLL) of G given D (Grossman and Domingos 2004):

where \(v_{i}'\) and \(c_i\) are the feature vector and class label, respectively, for the ith of N instances. However, the computation of CLL is exponential in the number of instances N, and also, although CLL is asymptotically correct, for a finite sample, the class maximizing CLL can only indicate the correct classification, but it can not guarantee it (Kelner and Lerner 2012).

A score that measures the degree of compatibility between a possible state of the class variable C and the correct class is the 0/1 loss function:

where \({\widehat{c}}_i\) is the estimated class label for the ith instance. Instead of selecting a structure based on summation of supervised marginal likelihoods over the dataset (2), the risk minimization by cross validation (RMCV) score selects a structure based on summation of false decisions about the class state over the dataset (Kelner and Lerner 2012),

where the training set D is divided into K non overlap** validation sets \(D_k^K\) (each having N/K instances of the form \(v_{ki}=(c_{ki},v_{ki}')\)), and for each such validation set, an effective training set has \(|D \setminus D_k^K|\) (i.e., \(N(K-1)/K\)) instances. As part of the cross validation (CV), the classification error rate, i.e., the RMCV score, is measured on all vectors of \(D_k^K\) and averaged over the K validation sets. No use of the test set is made during learning. Note that the RMCV score is normalized by the dataset size N, whereas (2) is not. Although normalization has the same effect on all learned structures, it can clarify the meaning of the score (i.e., an error rate) and help in comparing scores over datasets. Moreover, sharing the same range of values ([0, 1]), RMCV establishes its correspondence to classification accuracy. Also, note that the same RMCV measure can be used for learning the BNC and for evaluating its accuracy, which makes learning oriented towards classification.

To compute the RMCV, the candidate structure has to be turned into a classifier by learning its parameters. Local probabilities are modeled using the unrestricted multinominal distribution (Heckerman 1998), where the distribution parameters are obtained using maximum likelihood (ML) (Cooper and Herskovits 1992), similar to (Kontkanen et al. 1999). Moreover, it has been empirically shown (Grossman and Domingos 2004) that ML parameter estimation does not deteriorate the results compared to maximum conditional likelihood estimation, which can only be obtained by computationally expensive numerical approximation. Learning a BN rather than a structure has an additional cost of parameter learning, though this cost is negligible while using ML estimation and fully observed data.

Starting with the empty or naïve Bayesian graph and using a simple hill-climbing search with the RMCV score establish the RMCV structure learning algorithm for BNCs (Kelner and Lerner 2012). The hill-climbing implementation includes a search over all neighbor graphs at each iteration. A neighbor graph is defined as a single modification of the current graph using one of the following operators: edge addition, deletion, or reversal provided that the derived graph remains a directed acyclic graph. The RMCV BNC showed superiority to other BNCs and state-of-the-art classifiers using synthetic and UCI datasets and, thus, is used in this study to represent a BNC. However, as it is based on the 0/1 loss function, RMCV, similar to other classifiers, is prone to all weaknesses of classifiers as described in Sect. 1.

3 Evaluating classifier performance

How can we know whether the classification model we have constructed is the most suitable one? Performance measures that evaluate multi-class classifiers are usually based on the confusion matrix between predicted and true classes (Baldi et al. 2000). Although this matrix summarizes all correct and wrong predictions (Table 1), and thereby may represent the classifier error distribution, the common way to evaluate classifier performance is based on the classification accuracy (Ferri et al. 2009; Jurman et al. 2012), i.e., the 0/1 loss function (RMCV score), which is the (normalized) matrix trace.

However, researchers have made claims against the use of accuracy (Ranawana and Palade 2006; García et al. 2010). Provost et al. (1998) and Chawla (2005) argued that accuracy ignores misclassification costs and, therefore, may lead to misleading conclusions. Brodersen et al. (2010) concluded that even while CV is used, measuring performance by accuracy has two critical shortcomings: first, it is a non-parametric approach that does not make it possible to compute a meaningful confidence interval of a true underlying quantity. Second, it does not properly handle imbalanced datasets. As we noted above, upsampling the minority class or downsampling the majority class result in over-fitting and domain deformation or loss of data, respectively. Also, tackling data imbalance by these methods provides an optimistic accuracy estimate and, thus, is not recommended (Provost et al. 1998). Others have stated that accuracy is inappropriate when there are a great number of classes (Caballero et al. 2010).

Indeed, many studies have been conducted trying to suggest other measures for evaluating the classifier performance. For example, Wallace and Boulton (1968) suggested measuring the goodness of classification based on the minimum message length borrowed from information theory. Ferri et al. (2009) compared and analyzed relationships of 18 classifier performance measures. They concluded that measures providing a qualitative understanding of error, such as accuracy, perform badly when distortion occurs during the learning phase because the dataset is too small or a bad algorithm is used. Moreover, they confirmed that some measures suffer from the imbalanced data limitation. They offered to use the area under curve (AUC) measure. Baldi et al. (2000) compared nine binary classifier performance measures, among them information measures and quadratic error measures. However, none of them adequately combines information, error severity, and ways to handle class imbalance.

Before we start reviewing relevant classifier performance measures, let’s recall that besides the question of which measure to use to evaluate a classifier, there is also the question of which measure to use for learning (training the classifier). Not always are the two measures the same, which raises the question why. For example, the NN and RF classifiers are evaluated using classification accuracy, but usually are trained according to some (non classification) error and information gain, respectively. This was also the case with BNCs, until very recently (Kelner and Lerner 2012), when the classifiers were trained according to an LL-driven measure, but evaluated using accuracy.

Following, we review several common measures as a replacement for the classification accuracy for learning and evaluating a BNC.

3.1 Mutual information

In information theory and statistics, entropy is used to measure the uncertainty about a certain variable (Cover and Thomas 2012). If X is a discrete random variable with K values, then the information content in each value k of this variable is \(h(k)=-\text{log}P(X=k)\). Therefore, a less likely value of X contains more information than a highly probable one. The entropy is the average information content of X that is distributed according to P:

where in this paper, we use the natural base logarithm. Similarly, the joint entropy between two variables X and Y, which measures how much uncertainty there is in the two variables together, is defined as: \(H(X,Y)=-\sum _{x,y} P(x,y) \log P(x,y)\) (Cover and Thomas 2012).

The mutual information (MI) between X and Y can be defined as the reduction in entropy (uncertainty) of Y by the conditional entropy of Y on X, i.e., \(I(X;Y)=H(Y)-H(Y|X)\). For classification, if X and Y are holding predictions and true values, respectively, MI measures the reduction in uncertainty for the true class \(Y=y\) due to the prediction \(X=x\) (Baldi et al. 2000),

Since MI measures how prediction decreases the uncertainty regarding the true class, we should prefer a classifier with a high MI value.

3.2 Confusion entropy

The confusion entropy (CEN) (Wei et al. 2010) exploits the distribution of misclassifications of a class as any other of \(M-1\) classes and of the \(M-1\) classes as that class:

where \(P_m\) refers to the confusion probability of class m,

where \(C_{m,k}\) is the (m, k) element of the confusion matrix between X and Y.

The denominator for all classes is equal to the sum of all confusion matrix elements multiplied by two, and the numerator for \(P_m\) equals the sum of row m and column m (i.e., the sum of all samples that belong to class m and those that were classified to class m). \(CEN_m\) refers to the confusion entropy of class m,

where \(P_{k,m}^m\) is the probability of misclassifying samples of class k to class m subject to class m,

i.e., the misclassification is normalized by the sum of all samples that belong to class m and those that were classified as class m.

For an M class problem, the misclassification information involves both information on how the samples with true class label \(c_i\) have been misclassified to one of the other \(M-1\) classes and information on how the samples of the other \(M-1\) classes have been misclassified to class \(c_i\) (Wei et al. 2010).

3.3 Matthew correlation coefficient

The Matthew correlation coefficient (MCC), known also as the Pearson correlation, has been used in the binary classification case (Baldi et al. 2000). Its generalization to the multiclass problem was introduced by (Gorodkin 2004), where MCC is the correlation between the true (\({\mathbf{U}}\)) and predicted (\({\mathbf{V}}\)) class matrices (Jurman et al. 2012),

\({\mathbf{U}}\) and \({\mathbf{V}}\) are \(N \times M\), and N and M are the numbers of samples and classes, respectively, and \(COV({\mathbf {U}},{\mathbf {V}})\) is:

where the average prediction and true value of class m are \({\overline{v}}_m=\frac{1}{N} \sum _{i=1}^{N} v_{im}\) and \({\overline{u}}_m=\frac{1}{N} \sum _{i=1}^{N} u_{im}\), respectively.

Consider the case in which the class variable is perfectly balanced and all off-diagonal entries in the confusion matrix are F, for false, and all main diagonal entries are T, for true. That is, F is the number of misclassifications of class i to class j, \(\forall j \ne i\) (and thus there are \((M-1) F\) misclassifications for each class), and T is the number of correct classifications of class i, \(\forall i\). A strong (monotone) connection between CEN and MCC for this case is (Jurman et al. 2012):

According to (13), the relation between CEN and MCC depends on the log of the ratio of the number of samples belonging to class i (in this case, this number is shared by all classes as the class variable is perfectly balanced) to the number of misclassifications of this class.

Similarly, we can write the relationship between MI and MCC as:

and for the case for which \(F=1\) and \(T>\!\!>\ M\), we can derive an approximation:

3.4 Mean absolute error

The mean absolute error (MAE) measures the prediction error as the average deviation of the predicted class vector (X) from the true class vector (Y) (Hyndman and Koehler 2006),

which is the sum of all possible errors, each is the (x, y) element of the confusion matrix, weighted by their relative prevalence according to the confusion matrix, P(x, y).

4 Trading between information and accuracy

As our experimental evaluation shows (Sect. 5), when applied in learning a BNC, none of the presented measures can accomplish all we ask—maximization of both accuracy and information, tackling class imbalance, and accounting for error severity. By using the joint probability distribution P(x, y) between predictions X and true classes Y (as in Sects. 3.1 and 3.4, where (x, y) is an element in the confusion matrix), we suggest the information measure (IM) that balances between the mutual information between X and Y (Sect. 3.1) and a score, we call total error severity (ES), that evaluates the classifier error simultaneously over all classes, penalizing errors by their severity (Halbersberg and Lerner 2016),

where \(|x-y|\) is the “severity” of a specific error, that of predicting x where the true value is y. \(ES(X,Y)=\sum _{x=1}^{M} \sum _{y=1}^{M} P(x,y) \log (1+|x-y|)\) measures weighted [by the joint probability P(x, y)] errors between predictions the classifier has made and labels for the M true classes. Since ES refers to the “distance” measured on an ordinal scale between two classes, it will contribute to IM only for ordinal classification problems, where such a distance has a meaning, and will not contribute in non-ordinal problems (where only MI between predictions and true values will contribute to IM).

By taking the logarithm of the sum of the error severity \(|x-y|\) and 1 (16), we put ES and MI on common ground, letting them span the same range and be additive. Let’s consider those conditions/scenarios that establish the range of values IM gets. As Table 2 demonstrates, when there is no difference between the true and predicated classes, i.e., perfect classification, \(y=x\), ES takes its minimal value of \(P(x,x) \log (1+0)=0\), as desired. In this scenario, X and Y are identical and, thus, dependent, and MI will take its maximal value when the class variable is uniformly distributed, \(MI(Y,Y)=\sum _{y=1}^{M} \sum _{y=1}^{M} P(y,y) \log \left( \frac{P(y,y)}{P(y) P(y)} \right) =\log (M)\), which is also the entropy of Y, \(MI(Y,Y)=\min \{H(Y),H(Y)\}=H(Y)\) (Cover and Thomas 2012). This scenario sets the minimal (best) value of IM, which is \(-\log (M)\) (Table 2). When, on the other hand, the severity is maximal, i.e., all samples are of true class \(y=1\) and classified as class \(x=M\) (or vice versa), which means that \(P(x=M,y=1)=1\) and \(|x-y|=M-1\), ES is \(P(M,1) \log (1+M-1)= \log (M)\). In this scenario, the only entry in the double sum of MI is \(P(M,1) \log \left( \frac{P(M,1)}{P(x=M) P(y=1)} \right) =\log (1)=0\). Thus, \(-MI(X,Y)+ES(X,Y)=\log (M)\) is the highest value IM takes.

A third interesting scenario in Table 2 is when the confusion matrix distribution is uniform, and then ES takes a middle value of \(\frac{M-1}{M^2} \log (2M!)\)Footnote 1.

In summary, not only that MI and ES are in the same range, but they are in opposite trends, which encouraged us to sum them, where MI is added in a negative sign, as we wish to minimize both \(-MI\) and ES. As Table 2 shows, IM is in the range \([-\log (M),\log (M)]\), where \(-\log (M)\) is for perfect classification (all samples are correctly classified) and the data is balanced across the classes, and \(\log (M)\) is for the extreme misclassification case, when all samples belong to class 1, but are classified as class M (or vice versa). If we identify the error severity with an adaptive cost for penalizing different misclassification errors differently (Grossman and Domingos 2004; Elkan 2001), then the IM can be interpreted as a cost matrix (Table 3).

Now, let us prove that IM gets its minimum at the same point -MI and ES get their minimum. We base our proof on Lemma 1 that shows that a function (IM) that is the sum of two other functions (-MI and ES) that get their global minimum at the same point will get its global minimum at that point.

Lemma 1

A function that is the sum of two functions that get their global minima at the same point will also get its global minimum at that point.

Proof

Let \(x, y \in A\), and let \(\arg \min _{{x \in A}}f(x) = x^{*}\), a global minimum of f, and \(\arg \min _{{x \in A}}g(x)=x^*\), also a global minimum of g. Let us assume by contradiction that \(\arg \min _{{x \in A}}h(x)=g(x)+f(x)=y\), \(\quad y \ne x^*\). It follows that \(f(y) > f(x^*)\) and also \(g(y) > g(x^*)\), which means that \(g(y)+f(y) > g(x^*)+f(x^*)\), but we assumed that y is a global minimum of \(h(x)=g(x)+f(x)\) for all \(x \in A\), which makes the contradiction. \(\square \)

As we have seen, IM is a proper measure to tackle ordinal classification problems, and it answers the requirements of combining information and error severity to classification accuracy, and of handling class imbalance (see Sect. 5 for empirical evaluation). But, it may poorly evaluate the classifier in cases where the classifier has poor performance (e.g., there are more errors than correct classifications), and in these cases, MI dominants IM. It is easy to propose a corresponding theoretical confusion matrix (Sect. 5.5 and Fig. 6), but it can also happen in practice, for example, when the algorithm starts its greedy search with a classifier that is close to random. Therefore, to trade better IM and accuracy, we modify IM with a term \(\alpha \ge 1\) that adjusts the error severity (see “Information measure with alpha” section in Appendix):

Then \(IM_\alpha \) (i.e., IM that is controlled by \(\alpha \)) can be written as (see “Information measure with alpha” section in Appendix):

where \(\alpha \)’s role in practice is to determine the balance between ACC and IM (and not to add costs to error severities). The measure range is \(-\log (\alpha M)<IM_\alpha <\log (M)\). The minimal value \(-\log (\alpha M)\) is achieved for perfect classification, when all samples are correctly classified and the data is balanced. In this case, \(IM=-\log (M)\), and because ACC is 1, \(IM_\alpha =-\log (M)-\log (\alpha )=-\log (\alpha M)\). The maximal value \(\log (M)\) is the extreme misclassification case, when all samples belong to class 1, but are classified as M (in this case, \(ACC=0\), so the second element in Eq. (18), \(-\log (\alpha ) ACC\), cancels out).

Note that when \(\alpha =1\), IM is a special case of \(\hbox {IM}_\alpha \). As \(\alpha \) increases, \(\hbox {IM}_\alpha \) decreases regardless of IM, which is independent of \(\alpha \) and becomes negligible compared to \(\log (\alpha ) ACC\). Then, as the following Lemma shows, ACC becomes a special case of \(\hbox {IM}_\alpha \).

Lemma 2

As \(\alpha \) increases, \(\hbox {IM}_\alpha \) is monotone with ACC.

Proof

Let \(A_i\) and \(A_j\) be two classifiers for a number of classes \(M>2\), and let \(\alpha \)\(\gg M\). For \(A_i\)

Without loss of generality, we assume that \(ACC_i>ACC_j>0\). Since \(\alpha \gg M\), and since IM is upper bounded by \(\log (M)\), IM is negligible to the second element, so

which means that:

\(\square \)

That is, for \(\alpha \)\(\gg M\), \(\hbox {IM}_\alpha \) is monotone with ACC, and thus learning a BNC structure by minimizing \(\hbox {IM}_\alpha \) yields a BNC that also maximizes ACC, and the structure minimizing \(\hbox {IM}_\alpha \) is the same structure maximizing ACC. That is, ACC is a special case of \(\hbox {IM}_\alpha \) for large \(\alpha \) (but only for large \(\alpha \)). Therefore, \(\hbox {IM}_\alpha \) balances between IM and ACC and provides extra sensitivity beyond that provided by IM to different tradeoffs between accuracy and information, error distributions, and error severities.

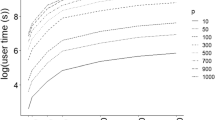

To demonstrate the impact of \(\alpha \) on \(\hbox {IM}_\alpha \), we use a simple example. Let U be a matrix of dimension \(M=3\), where all off-diagonal and main diagonal elements are F (false) and T (true), respectively, and let \(F=\frac{1}{3} T\) (as in Sect. 3.3, F is the number of misclassifications of class i to class j, \(\forall j \ne i\), and T is the number of correct classifications of class i, \(\forall i\)) (Table 4). We executed 81 (\(M^4=3^4\)) scenarios and calculated for each scenario ACC, IM, and \(\hbox {IM}_\alpha \), the latter with a range of \(\alpha \) values in [1,81]. Figure 1 shows that as \(\alpha \) increases, \(\hbox {IM}_\alpha \) increases as \(\log (\alpha )\)ACC, and ACC and IM are, as expected, independent of \(\alpha \). For \(\alpha =1\), \(IM=IM_\alpha \approx \frac{2}{3}\)ACC, and for \(\alpha =81\), \(IM_\alpha \approx 90\%\) of ACC. An interesting intermediate point is \(\alpha =M^2=9\). Up until \(\alpha =9\), the \(\hbox {IM}_\alpha \) gains more than 80% of its maximum value (ACC). But, due to the logarithm function, for \(\alpha >9\), the increase rate is low, and for example for \(\alpha =81\), \(\hbox {IM}_\alpha \) gains only a bit more than 90% (even for \(\alpha =100,000\), it only gains a little bit more than 95% of ACC).

5 Measure evaluation using synthesized confusion matrices

Our first examination of the proposed measures was in six experiments using synthesized confusion matrices that exhibit different scenarios. The advantage in using synthesized confusion matrices is in dispensing with training and testing the classifiers. Since values of different measures are in different ranges, to be able to present all measures on the same graph, we normalize each measure to [0-1] by:

Note that some of the performance measures (e.g., ACC and MCC) should be maximized and some (i.e., CEN and IM) should be minimized.

5.1 Sensitivity to class imbalance

In this experiment, 101 confusion matrices for two classes were created: each for 100 samples and perfect classification (Table 5). The only difference among the matrices is in the number of samples coming from each class, which is measured by m (which control the balance). For \(m=0\), the confusion matrix is highly imbalanced (i.e., all samples belong to class 1). As m increases, the confusion matrices become more balanced, and for \(m=50\), the classes are perfectly balanced. As m increases from 50 towards 100, the confusion matrices become imbalanced again (i.e., for \(m=100\), all samples belong to class 2). Figure 2 presents the experiment results for nine measures and settings: IM, \(\hbox {IM}_\alpha \) (\(\alpha =10\)), \(\hbox {IM}_\alpha \) (\(\alpha =100\)), \(\hbox {IM}_\alpha \) (\(\alpha =1000\)), MI, CEN, MCC, MAE, and ACC. In Fig. 2 (and also in Figs. 3, 4, 5, 6), measures that behave the same share the same symbol and graph color.

Figure 2 shows that while IM, \(\hbox {IM}_\alpha \), and MI are sensitive to the level of balance and peak to a balanced distribution (\(m=50\)), CEN, MCC, MAE, and ACC are indifferent to the level of balance. The latter four measures receive a perfect score for all scenarios since ACC remains 1 throughout the experiment, the correlation remains perfect (MCC), and there are no errors distributed (CEN and MAE). Because the experiment was conducted without misclassification errors, IM = \(\hbox {IM}_\alpha \) = MI. In real problems, a classifier errs and the classes are almost always imbalanced. Thus, when a classifier is trained by CEN, MCC, MAE, or ACC, it will be fooled by the majority class, misclassifying all/most samples of the minority classes, whereas a classifier that is trained by IM, \(\hbox {IM}_\alpha \), or MI is expected to err evenly for all classes.

5.2 Sensitivity to the number of classes

In this experiment, 99 confusion matrices were created, each with a different number of classes ranging from 2 to 100 (i.e., when \(M=2\), the confusion matrix is a matrix of dimension 2, and when \(M=100\), the matrix is of dimension 100). As in the previous experiment, all matrices demonstrate a perfect classifier, with diagonal entries equal to 10 (Table 6). Figure 3 shows that while IM, \(\hbox {IM}_\alpha \), and MI are sensitive to the number of classes, CEN, MCC, MAE, and ACC are not. Although the four latter measures show perfect performance, it is only because there are no errors in this scenario. In real problems, a classifier tends to err more as the number of classes in the classification problem increases. While CEN, MCC, MAE, and ACC show no sensitivity to this number, IM, \(\hbox {IM}_\alpha \), and MI do show such sensitivity.

5.3 Sensitivity to the error severity

In this experiment, 99 confusion matrices with 100 classes were created, each representing the worst classification scenario (all samples are of Class 1 and misclassified), but with a different error severity. That is, the number of misclassifications in each matrix is fixed, but the error severity (i.e., \(|x-y|\)) changes from the mildest (all Class 1’s samples are misclassified to Class 2) to the harshest (all Class 1’s samples are misclassified to Class 100). This severity is represented in the matrices by the parameter S, which changes in [1, 99] according to the position (severity) of the error in the confusion matrix (Table 7) (note that in each matrix, only one cell is non-zero holding the entire error “E”). Figure 4 reveals that only IM, \(\hbox {IM}_\alpha \), MI, and MAE are sensitive to the error severity, losing accuracy with the increase of the severity, as is expected from a performance measure. CEN obtains a perfect score for all error severities, and MCC and ACC are the worst in performance (always 0), but all three measures are insensitive to the error severity regardless of their result, which manifests an additional shortcoming of them as performance measures.

5.4 Sensitivity to the error distribution

In this experiment, 34 confusion matrices represent scenarios of wrongly classifying 99 samples of Class 4 (of four classes) with different error distributions. This distribution is controlled by m (Table 8). As m increases, the distribution becomes more uniform and vice versa. Note that the total error severity is equal in all scenarios/matrices (i.e., \(\sum |x-y|=198 \quad \forall Matrix\)). Figure 5 shows that MI, MCC, MAE, and ACC are not sensitive to the error distribution, whereas the other measures are. However, CEN decreases as m increases because the measure “prefers” the error distribution not to be uniform, whereas IM and \(\hbox {IM}_\alpha \) increase linearly with m because they excel for uniform error distribution.

5.5 ACC–information tradeoff

This experiment demonstrates with a simple example the tradeoff between ACC and information (as we expect will be measured by IM). Let U1 and U2 be two confusion matrices for two classifiers for \(M=3\). In Case 1 (Table 9), U1 has an ACC of 80% compared to a slightly lower accuracy of 79% for U2, but it can easily been seen that U2 reveals more information about the classification than U1, which has information only concerning Class 1’s predictions. Quantitatively, U1’s MI is 0 compared to U2’s MI, which is 0.31. In Case 2 (Table 10), the ACCs of U1 and U2 are equal, but U2’s MI is higher than U1’s (0.32 compared to 0). RMCV (which is learned using ACC), for instance, would not show any difference between the two classifications. However, in both cases, although U1 and U2 are similar (Case 1) or identical (Case 2) with respect to accuracy, they provide different degrees of information about the problem. This is reflected in different IM values, where that of U2 is higher than that of U1 in both cases.

To demonstrate this example in the general case, we created (Table 11) 51 confusion matrices for 100 samples equally distributed between two classes but with different types of errors. The type of error is determined by the value of m, which is the number of Class 1’s samples that are wrongly classified as Class 2 (and the number of Class 2’s samples that are wrongly classified as Class 1), whereas \(50-m\) is the number of Class 1’s (2’s) samples that are correctly classified. Figure 6 presents the experimental results for the same measures, but in this case, we used \(\hbox {IM}_\alpha \) (\(\alpha =3\)), \(\hbox {IM}_\alpha \) (\(\alpha =10\)), and \(\hbox {IM}_\alpha \) (\(\alpha =100\)) to see the differences among the measures more clearly. For \(m=0\), ACC, MCC, and MAE are 1, and they linearly decrease with m until 0 for \(m=50\). Note, however, that as m increases (and the accuracy deteriorates), the information shared by the classifier increases (Table 11).

As Fig. 6 shows, MI decreases with m as the confusion matrix becomes more uniformly distributed until a uniform distribution at \(m=25\) (for which MI = 0). For m greater than 25, MI increases at the same rate of the decrease until \(m=25\) because MI does not distinguish between correct and wrong classifications. Table 12 demonstrates two mirror cases—the first shows perfect classification and the second shows perfect misclassification—but both have the same MI value. This is the main disadvantage of MI that it does not distinguish symmetrical cases, and a high MI value can equally imply a very good or a very bad classifier.

In addition, Fig. 6 shows that IM decreases with m up to a certain point (\(m=35\)) and from that point starts to increase due to an enhanced contribution of MI to IM. This contribution led MI to start its increase at 25, sooner than IM. This seems to be the greatest disadvantage of IM, that following a severe decline in the classification performance, MI becomes more dominant and worsens IM. Models that classify with \(m>35\) are not superior to the model with \(m=35\) regarding classification although their IM improves. CEN seems to be more appropriate in such a scenario since it starts its incline only at 40, and this incline is very moderate compared with IM. Until 35, \(\hbox {IM}_\alpha \) (for all values of alphas) behaves, as expected, between ACC and IM. It decreases from \(m=0\) to \(m=35\) at a higher rate than ACC, which is similar to that of IM. However, it does not increase as IM beyond \(m=35\) due to the increased impact of \(\alpha \) on the classification errors. By that, \(\hbox {IM}_\alpha \) overcomes the above disadvantage of IM. The value of alpha determines the type of behavior. When \(\alpha \) is small, \(\hbox {IM}_\alpha \) behaves similarly to IM, and when \(\alpha \) is large, \(\hbox {IM}_\alpha \) behaves similarly to ACC.

In concluding Sect. 5, Table 13 summarizes how the seven evaluated measures meet requirements we may have from a classification-oriented measure used for learning a BNC. We use a green check-mark to indicate that a specific measure meets a certain property (requirement), a red X-mark to indicate that it does not meet the property, and a combined black mark to indicate that the measure meets the property, but only under certain conditions/constraints. The table shows that IM and \(\hbox {IM}_\alpha \) are the only measures that meet all requirements. Full details and proofs are in “Sensitivity analysis” section of Appendix.

6 Experiments and results

In this section, we empirically evaluate BNCs learned using the seven measures that were described in Sects. 3 and 4: IM, \(\hbox {IM}_{\alpha }\), MI, CEN, MCC, MAE, and the zero-one loss function (i.e., ACC). First, we create seven structure learning algorithms based on the RMCV algorithm (although we could base on other classifiers). For each measure, in each learning iteration of this search and score (S&S) algorithm, all neighboring BNCs (derived from the current BNC by an edge addition, deletion, or reversal) are compared to the current BNC (after learning the graph parameters) based on the measure and the BNC confusion matrix, and learning proceeds as long as more accurate graphs are found in consecutive iterations.

That is, we suggest seven variants of the RMCV algorithm for which learning is performed according to a different measure:

-

Learning BNC according to IM

-

Learning BNC according to \(\hbox {IM}_\alpha \)

-

Learning BNC according to MI

-

Learning BNC according to CEN

-

Learning BNC according to MCC

-

Learning BNC according to MAE

-

Learning BNC according to RMCV (ACC)

Each variant leads to its own classifier with its own confusion matrix. A confusion matrix of each of the seven variants is evaluated according to seven measures: IM, \(\hbox {IM}_\alpha \), MI, CEN, MCC, MAE, and ACC. That is, learning a BNC by each variant is made according to its own measure, but evaluation in the test is made according to all measures. In other words, each of the measures evaluates the confusion matrix derived by each of the trained BNC variants using the test set. Note that since \(\hbox {IM}_\alpha \) decreases with \(\alpha \), we compare performances of classifiers trained with different \(\alpha \) values and select the best \(\hbox {IM}_\alpha \)-based variant (classifier) using the IM measure, which is independent of \(\alpha \).

The BNC based on \(\hbox {IM}_{\alpha }\) is designed as a wrapper algorithm (Algorithm 1), which repeats the learning phase with different \(\alpha \)s selected from the range \([2,M^3]\), as recommended in Sect. 4. In order to avoid an exhaustive search and due to the log behavior of alphas, we only search for alphas between 2 and M (\(\alpha =1\) is exactly IM), \(\frac{M+M^2}{2}\), \(M^2\), \(\frac{M^2+M^3}{2}\), and \(M^3\). The wrapper chooses the alpha that maximizes the IM measure (Sect. 4). Note that there is no use in the testing set in this phase. The wrapper algorithm’s input is similar to that of RMCV and consists of: a training set (\(D_{tr}\)), test set (\(D_{tst}\)), number of classes (M), number of folds for the RMCV’s cross-validation (K), and an initial graph (\(G_0\)). First, the \(\alpha \) value that maximizes IM is found together with the corresponding BNC’s structure. Then, after learning the parameters for this structure to turn it into a classifier, this classifier is tested using the test set to provide a confusion matrix that is evaluated as those yielded by the other measures.

Since the RMCV algorithm must be initialized by a graph, when in the following experiments we evaluate each of the seven algorithms, we do that with both the empty graph and the naïve Bayesian classifier (NBC) as initializations. In total, for each database, we train 14 classifiers (for seven measures X two initializations).

This section is divided into three experiments. In Sect. 6.1, we compare the seven algorithms using 23 (artificial) synthetic datasets. In Sect. 6.2, we compare the seven algorithms using 17 real world and UCI datasets. While in these two sections we evaluate the BNC learned using each of the seven measures, in Sect. 6.3, we compare the BNC learned using \(\hbox {IM}_{\alpha }\) with state-of-the-art machine learning classification algorithms, such as neural network (NN), decision tree (DT), random forest (RF), and support vector machine (SVM).

In each experiment, we evaluate the results using the Friedman non parametric test that was designed for comparing multiple algorithms/classifiers over multiple databases. A Friedman test can be applied to classification accuracies, error ratios, or any other measure (Demšar 2006). Since the Friedman test only tells us if one algorithm is superior to the others, but not which algorithm is the most accurate, Demšar (2006) suggested that the test be followed by a post hoc test, the Nemenyi test or the Wilcoxon signed ranks test. The Nemenyi test compares all algorithms to each other regarding the ranks computed in the Friedman test in order to find which algorithm is superior to the others. The Wilcoxon signed ranks test, in contrast to the Nemenyi test, does not use the Friedman ranks, but rather computes the difference between two algorithms for each dataset and assigns ranks according to the absolute difference (i.e., the Wilcoxon test ranks differences between algorithms and not algorithms directly).

6.1 Artificial datasets

This experiment included 23 artificial (synthetic) databases (Table 14) that were derived from the synthetic BN structure in Fig. 7. The baseline BN consists of 20 variables (nodes) where the target variable is Node 20. We made sure that, on the one hand, this BN would not be too complicated (dense), but on the other hand, it would possess all types of variable connections: diverging, serial, and converging (Ide and Cozman 2002). This BN also has the following properties:

-

Each variable has a cardinality of three.

-

The target variable is fully balanced (each class has the same prior probability), unless otherwise mentioned.

We then derived from the baseline BN, 22 other BNs to perform a sensitivity analysis for: target variable cardinality (number of classes), sample size, and class balance (Table 14):

-

Target variable cardinality: eight databases (Databases 1–8) containing 2, 3, 4,...,9 classes of the target variable (Node 20).

-

Sample size: six databases (Databases 9–14) containing: 500, 1000, 1500, 2000, 2500, and 3000 samples.

-

Class balance: nine databases (Databases 15–23) containing different balances for the target variable. Database 15 is perfectly balancedFootnote 2 and further databases gradually become less balanced. The percentage of samples each class holds was set heuristically.

Further details about the sampling technique for these databases are in “Artificial BN sampling” section of Appendix. Note that since in most evaluations (see below), we tested and report results for three separate category databases for which a single parameter is tested: the number of classes, number of samples, and degree of class imbalance, we included a benchmark database with four classes, 2000 samples, and no imbalance in the three categories (i.e., Databases 3, 12, and 15).

Synthetic BN to create the artificial databases of Table 14

The BN of Fig. 7 was sampled ten times in each setting of the 23 of Table 14 to create ten data permutations for each of the 23 databases. Each permutation is divided into five equally sized datasets (folds) as part of a CV5 experiment, where each fold in its turn is used for the test and the other four folds are used for training. That is, each of the 23 databases in Table 14 is used and tested 50 times using different training and tests sets, and thus 1150 experiments using 1150 datasets are performed in total. Each of the seven algorithms trains and tests two classifiers (one for each initial graph) on each of the 50 datasets of the 23 databases (i.e., 16,100 classifiers). For each database, algorithm, and initial graph, we calculate all scores (i.e., IM, \(\hbox {IM}_\alpha \), MI, CEN, MCC, MAE, and ACC) as averages over the 50 confusion matrices of the 50 corresponding test sets.

Tables 15 and 16 show the average accuracies (ACC) and \(\hbox {IM}_\alpha \) scores, respectively, achieved by the seven learning algorithms initialized by the empty graph. In each row of the two tables, the best classifier is marked in bold font, whereas the worst is marked in italic font. The last row in each table presents the average and standard deviation of the algorithms over all databases. A similar table for IM scores is Table 42 in “IM scores for artificial databases" section of Appendix.

Table 15 reveals that the \(\hbox {IM}_\alpha \)-based BNC (where \(\alpha \) has been optimized according to Algorithm 1) achieves the highest average accuracies although the BNCs were learned with the goal of maximizing \(\hbox {IM}_\alpha \) and not ACC. This is because IM contains ACC components in both MI and ES terms which makes it maximize ACC while maximizing the \(\hbox {IM}_\alpha \) score. Another explanation is that \(\hbox {IM}_\alpha \) trades between IM and ACC; hence, for databases where maximizing accuracy leads to better performance, a large \(\alpha \) is automatically chosen by the algorithm, and for databases where maximizing IM leads to better performance, a small \(\alpha \) is selected. The tuning of \(\alpha \) is done on training and validation sets, while the results shown are for an independent testing set. IM- and ACC- (RMCV) based BNCs (first and last columns of Table 15) do not seem to show any superiority over one another. Again, one would expect an ACC-based BNC to achieve better accuracy results, but the IM-based BNC does not fall behind.

Table 15 also reveals that CEN is the worst method to evaluate classifier performance, due to a major limitation of the measure. When all entries in a confusion matrix belong to one predicted class (which is the case when the initial graph is empty), the measure will take a very low value (CEN is a measure we wish to minimize). That is because, in Eq. (9), all \(\hbox {CEN}_m\) will result in zero except for one. This may also be seen in the following example. In Tables 17(a) and 17(b), we see the confusion matrices for Database 3 (Table 14) for an empty initial graph and for its best neighbor, respectively. The CEN scores are 0.3365 and 0.4138, respectively. Therefore, the algorithm terminates, and the empty graph is chosen by the CEN-based BNC even though it is obvious that the best neighbor which yields Table 17(b) is better in terms of accuracy and information.

Table 16 shows the average \(\hbox {IM}_\alpha \) scores achieved by the seven algorithms. The \(\hbox {IM}_\alpha \) has the highest average \(\hbox {IM}_\alpha \) score. We recall that the \(\hbox {IM}_\alpha \) is normalized; hence, its range is [0, 1]. For the purpose of visualization and to better distinguish between results, we multiply each score by 100. Thus, the following results of normalized \(\hbox {IM}_\alpha \) are in the range [0, 100].

Figure 8 compares the classification performance (\(\hbox {IM}_\alpha \)) between IM-, \(\hbox {IM}_\alpha \)-, and ACC-based BNCs for an empty initial graph and the 23 databases. The first row refers to the comparison of the \(\hbox {IM}_\alpha \)- and IM-based BNCs, the second row to \(\hbox {IM}_\alpha \)- and ACC-based BNCs, and the third row to that between the IM- and ACC-based BNCs. The comparison is given in Figures 8a, d, g for Databases 1–8 (see Table 14) that allow analysis of the influence of the number of classes, Figs. 8b, e, h for Databases 9–14 that allow analysis of the influence of the number of samples, and Figs. 8c, f, i for Databases 15–23 that allow analysis of the influence of class imbalance. We call the three categories according to this division of the databases: class analysis (left column in Fig. 8), samples analysis (middle column), and proportion analysis (right column). The points in Fig. 8 which are above the \(x=y\) (red) line represent databases for which the algorithm written on the y-axes is favored over the one written on the x-axes and vice versa.

Figure 8 (first two rows) shows that the \(\hbox {IM}_\alpha \)-based BNC is superior to the other two classifiers (learned to minimize IM and maximize ACC) with respect to \(\hbox {IM}_\alpha \) score. The superiority is obvious in the samples analysis (Fig. 8b, e) and proportion analysis (Fig. 8c, f) scenarios. However, a closer look reveals that also in the class analysis scenario (Fig. 8a, d), none of the points (each represents a database) are below the red line, which means that the \(\hbox {IM}_\alpha \)-based BNC is also superior to the other two classifiers regarding class analysis (Databases 1–8). The third row in Fig. 8, which presents the comparison of IM- and ACC-based BNCs, shows that the IM-based BNC is superior to the ACC-based BNC in the case of proportion analysis, but is slightly inferior for the case of samples analysis.

In addition, we examine in Fig. 9 how the performance measures for each category of the databases change with the number of classes, number of samples, and balance in the samples among the classes (data proportion). The first row (Fig. 9a–c) shows IM scores, while the second (Fig. 9d–f) shows ACC scores. As can be seen in Fig. 9, the IM score is monotone for each analysis (Fig. 9a–c), whereas ACC is not monotone in the case of the proportion analysis (Fig. 9f). As the number of classes increases, ACC decreases (Fig. 9d) because the classification task becomes more difficult, and IM increases (Fig. 9a) for the same reason. As the number of samples increases, ACC increases (Fig. 9e) and IM decreases (Fig. 9b) (i.e., both performance measures are improved). The reason is that as the number of samples in the dataset increases, the number of samples for each combination of variables increases, which makes the estimated probabilities more reliable and thereby also increases ACC. In the case of proportion analysis, ACC is unstable for all algorithms in contrast to the IM score, which increases as the database becomes imbalanced. This can be attributed to the accuracy limitation that was described in Sect. 5; the accuracy is not sensitive to changes in the level of imbalance. Finally, we see that in terms of IM (Fig. 9a–c) and ACC (Fig. 9d–f), the \(\hbox {IM}_\alpha \)-based BNC is the best algorithm.

Figure 10 reveals that the number of neighbors of the IM- and ACC-based BNCs is similar where the initial graph is empty (Fig. 10a–c) with a slight tendency towards the ACC-based BNC (the ACC line is almost always beneath the IM line, which means fewer neighbors). However, for the NBC initial graph, the ACC-based BNC is significantly superior to the IM-based BNC. In the proportion analysis, for either the empty or NBC initial graphs (Fig. 10c, f), there is a sharp decline starting from Database 6. The explanation for this break point is that from the sixth database (i.e., Database 20), we created very imbalanced databases. Note that we excluded \(\hbox {IM}_\alpha \) to keep the graph scale. Each iteration of the \(\hbox {IM}_\alpha \)-based BNC includes examining several alphas; hence, the number of neighbors is a function of the number of alphas and the number of iterations, and \(\hbox {IM}_\alpha \) is inferior to all seven proposed algorithms with respect to run time. The CEN-based BNC has the lowest number of iterations, which is constant regardless of the scenario and coheres with the explanation described above about CEN limitation and poor performance. More details about run-times are given in Table 43 in “Run time measured by number of neighbors for artificial BNs” section of Appendix.

To demonstrate that the advantage of the \(\hbox {IM}_\alpha \)-based BNC is not biased by the majority classes at the expense of the minority classes in imbalance problems, and that the measure is indeed advantageous to the minority classes, we repeated the experiment with only the non-major classes in Databases 15–23, each having a different degree of imbalance from zero (15) to large (23). Table 18 shows the average ACC value over the non-major classes after excluding the major class for each of the imbalanced databases. The table reveals that indeed the \(\hbox {IM}_\alpha \)-based BNC outperforms all other algorithms for all databases except for the three most imbalanced (21–23) for which the MI-based BNC is superior (and the IM-based BNCs are usually second best). The latter result demonstrates that, for a very highly imbalanced dataset, the MI component in the \(\hbox {IM}_\alpha \) measure is more important than the ES component (we already saw the MI’s supreme sensitivity to class imbalance in Sect. 5.1). For comparison, for these three databases, the MAE and ACC-based BNCs, and actually all BNCs, are very poor, demonstrating the inability of all measures to adequately accommodate a very high class imbalance.

To demonstrate that the advantage of the \(\hbox {IM}_\alpha \)-based BNC for ordinal problems is not due to class imbalance, and that the measure is indeed advantageous when errors have different severities, we computed the MAE for the seven algorithms on the 14 non-imbalanced databases (1–14) (see “Artificial BN sampling” section of Appendix for details on how we created the ordinal problems). Table 19 shows that the \(\hbox {IM}_\alpha \)-based BNC achieves better MAE results than all algorithms regardless of the class-variable cardinality (Databases 1–8) and sample size (Databases 9–14). This superiority applies even to the MAE-based BNC that was trained to minimize MAE, whereas the \(\hbox {IM}_\alpha \)-based BNC was trained to minimize \(\hbox {IM}_\alpha \). In these scenarios, the ES component of \(\hbox {IM}_\alpha \) is the dominant one (which is supported by the superiority of the MAE-based BNC to the MI-based BNC).

Finally, we proceed to Friedman’s non parametric test followed by the Nemenyi post hoc test, as was suggested by Demšar (2006) in order to find which algorithms are superior. The Friedman test results are given for ACC, IM, MAE, and MI in Table 20, rows 1–4 respectively, and those of the Nemenyi post hoc test (with a 0.05 confidence level) for ACC and IM in Table 21, rows 1 and 2, respectively. The rows in Tables 20 and 21 refer to specific measures and initial graphs (empty or NBC), while the columns represent the seven algorithms/classifiers. In Table 20, we present the MAE and MI scores, in addition to IM, since they compose it, which allows us to see if the advantage of the IM-based BNC over the other algorithms is due to either or both of the measures. In Table 21, each column represents a baseline algorithm to which the rest of the algorithms were compared in the Nemenyi post hoc test.

First, we can see that the \(\hbox {IM}_\alpha \)-based BNC has the lowest (best) average rank regardless of the initial graph or the measure (Table 20). The fact that the \(\hbox {IM}_\alpha \)-based BNC shows better results with respect to both MAE and MI (that both compose IM) demonstrates that it simultaneously minimizes error severity and maximizes the information provided by the classifier. Second, as can be seen from the Nemenyi post hoc tests (Table 21), all algorithms were significantly better than the CEN-based BNC almost always, and the \(\hbox {IM}_\alpha \)-based BNC was almost always significantly superior to all other algorithms regardless of the initial graph. With respect to the differences between the IM- and \(\hbox {IM}_\alpha \)-based BNCs, we expanded our evaluation and performed Wilcoxon tests between these BNCs for the two initializations (Empty and NBC) and two measures (IM and ACC) and found, based on all four tests, that \(\hbox {IM}_\alpha \) is superior to IM (with a 0.05 confidence level).

Discussion of the artificial-dataset experiment

The goal of this experiment was to demonstrate using 23 artificial databases the sensitivity of the different measures to the issues that motivated the development of \(\hbox {IM}_\alpha \). This experiment shows that the \(\hbox {IM}_\alpha \)-based BNC (and usually also the IM-based BNC) are superior to the ACC-based BNC. This is especially remarkable since IM and \(\hbox {IM}_\alpha \) are not trained to maximize the classification accuracy as ACC does, yet they achieved better ACC results. This is explained by the fact that IM contains ACC components in both the MI and ES terms and because \(\hbox {IM}_\alpha \) trades IM and ACC, enjoying the benefits from both. Moreover, the experiment shows that the \(\hbox {IM}_\alpha \)-based BNC simultaneously minimizes the error severity and maximizes the amount of information in the classification as is revealed in the MAE and MI scores achieved by the algorithm that outperform those of the MAE and MI-based BNCs, respectively.

The \(\hbox {IM}_\alpha \) superiority as reflected based on the ACC, IM, MI, and \(\hbox {IM}_\alpha \) scores can also be seen through the confusion matrices, which give us insight into additional information. For example, we can see the resultant confusion matrix of the ACC-based BNC (Table 22a) compared to that of the \(\hbox {IM}_\alpha \)-based BNC (Table 22b) over a specific test set of Database 22. The ACC-based BNC totally fails in classifying the minority class (\(C_4\)), whereas the \(\hbox {IM}_\alpha \)-based BNC achieves a 50% accuracy on this minority class. Also, the confusion matrix of the \(\hbox {IM}_\alpha \)-based BNC is superior to that of the ACC-based BNC in terms of MAE (0.22 vs. 0.27) and MI (0.34 vs. 0.22). These differences between the matrices of the two classifiers are typical also to the other sets in the other databases. However, this superiority comes at the expense of run time, which on average is six times higher for the \(\hbox {IM}_\alpha \)-based BNC than for the IM- or ACC-based BNCs (as it examines this approximate number of alphas). Note that we did not run the wrapper in parallel with different alphas, which could reduce the average run time to that of the IM-based BNC.

The proportion analysis (class imbalance) has shown that the ACC measure is noisy compared to the IM measure, which can be explained by the experiments that were conducted in Sect. 5, which demonstrated the ACC limitations, among them the insensitivity to class balance.

In this experiment, the CEN-based BNC was the least accurate. The reason for its poor performance seems to be that it is not sensitive enough to changes (e.g., number of classes, class proportions); hence, it is terminated too quickly. This was demonstrated with an example and was supported by Fig. 10a–f where the CEN-based BNC had on average not more than 200 neighbors regardless of the scenario. Another shortcoming of the CEN-based BNC is that as the sample size increases (500–3000), its accuracy does not increase as is expected from a classifier.

6.2 UCI and real-world databases

This experiment included 17 ordinal databases (Table 23), 14 of them are UCI databases (Lichman 2013), while the other three are original: ALSFootnote 3, Missed due date,Footnote 4 and Motorcycle.Footnote 5 The selected problems show diversity with respect to the sample size, number of variables and classes, and degree of imbalance, posing a range of challenges the classifiers should meet. Similar to the previous experiment, 10 random permutations were made to each database, which were each separated by CV5. That is, a total of 850 datasets are used in this experiment. Again, each of the seven algorithms trains and tests two classifiers (one for each initial graph) on each of the 850 datasets of the 17 databases (i.e., 11,900 classifiers). For each database, algorithm, and initial graph, we calculate all scores as averages over the 50 derived datasets.

Tables 24 and 25 show the average accuracies and \(\hbox {IM}_\alpha \) scores achieved by the seven algorithms (all are initialized by the NBC), respectively. Table 44 in “IM scores for UCI databases” section of Appendix shows similar results for the IM score. Table 24 reveals that CEN on average is the worst method to learn a classifier. The algorithm based on \(\hbox {IM}_\alpha \) (\(\alpha \) is optimized according to Algorithm 1) has the highest ACC score for most databases (10 out of 17) and also the highest average score. Moreover, the \(\hbox {IM}_\alpha \)-based BNC has a slight advantage over IM with respect to ACC for almost all databases with more than two classes (Databases 1, 3, 4, 5, 10, 11, 13, 15, and 16). This result gives another empirical justification to the development of the \(\hbox {IM}_\alpha \) score that was targeted towards multiclass classification problems with a wide range of class number.

Figure 11(a) shows the \(\hbox {IM}_\alpha \)-based BNC superiority to the ACC-based BNC (9 out of 17 points—a point represents a database—are above the red line, four are below the line, and four are on the line), and Fig. 11b shows superiority to the CEN-based BNC for all databases. Table 25 reveals that CEN is the poorest in terms of the \(\hbox {IM}_\alpha \) measure, and the algorithms based on \(\hbox {IM}/\hbox {IM}_\alpha \) have the highest values of this measure.

Area under curve (AUC) is a performance measure considered by many to be an alternative to accuracy because it trades between a true and false positive. The AUC has an important statistical property: the AUC of a classifier is equivalent to the probability that the classifier will rank a randomly chosen positive instance higher than a randomly chosen negative one (Fawcett 2006). In Table 26, we present the average AUC results accomplished by each of the seven algorithms. For non binary databases, we use an extension for AUC to a multiclass problem as introduced by Hand and Till (2001). Table 26 shows that MAE- and \(\hbox {IM}_\alpha \)-based BNCs have the highest average AUC. However, the average results of all algorithms are very similar, and the advantage is not significant. While considering only binary databases (2, 6, 7, 8, 9, 12, 14, and 17), \(\hbox {IM}_\alpha \)-based BNCs ranks first for all; however, in 4 out of 8 of the binary databases, all algorithms achieved the same results, so the advantage of \(\hbox {IM}_\alpha \) regarding AUC in the binary databases is also not significant.

Two other well known measures in statistics and machine learning are precision (positive predictive value) and recall (true positive rate), which are mainly used for binary classification problems (Baccianella et al. 2009), but have an extended version for multiclass problems (Sokolova and Lapalme 2009): \(\hbox {recall}_i=\frac{M_{ii}}{\sum _{j}{M_{ij}}}\) and \(\hbox {precision}_i=\frac{M_{ii}}{\sum _{j}{M_{ji}}}\). These measures are calculated for each class separately. The results for all classes can then be averaged as micro (favors bigger classes) or macro (treats all classes equally) and merged to \(Fmeasure=\frac{2 \cdot precision \cdot recall}{precision + recall}\) (Sokolova and Lapalme 2009). In precision, recall, and F-measure, all error types are equal; hence, no information about the error distribution is taken into consideration (fits to nominal multiclass problems). Table 27 presents the performance of the seven algorithms when the classifier is evaluated using the F-measure. According to Table 27, the \(\hbox {IM}_\alpha \)-based BNC has the best average F-measure and is also ranked first in all but three databases.

We further analyze the time complexity of each algorithm [full results are in Table 45 (“Run Time measured by number of neighbors for UCI BNs” section of Appendix)]. The \(\hbox {IM}_\alpha \)-based BNC suffers from the worst time complexity, which is the number of \(\alpha \)’s checked times longer than that of the other classifiers.

Table 28 summarizes the average Friedman’s ranks according to ACC, IM, MAE, and MI scores in rows 1–4, respectively. Because results regarding the average rank of AUC had shown no superiority to any of the algorithms, they were excluded. Each column represents an algorithm and each row stands for a different evaluation measure and initial graph. Table 28 shows that the \(\hbox {IM}_\alpha \)-based BNC has the lowest (best) average rank followed by IM, regardless of the initial graph or the evaluation measure (again, in addition to the IM measure, we present the MAE and MI measures that compose IM and \(\hbox {IM}_\alpha \) to show that the success of our proposed measure is attributed to the improvement in both measures).

Next, we proceeded to conduct Nemenyi and Wilcoxon post hoc tests. Table 29 summarizes the Nemenyi post hoc test for ACC. Each column represents a baseline algorithm to which the rest of the algorithms are compared. For BNCs initialized by an empty graph, the algorithm based on \(\hbox {IM}_\alpha \) significantly outperforms all other BNCs except for IM. For the NBC initial graph, BNC-ACC and BNC-IM were not dominant by the \(\hbox {IM}_\alpha \)-based BNC; however, according to the Wilcoxon test (Table 30), the \(\hbox {IM}_\alpha \)-based BNC is superior to the IM-based BNC for the NBC initialization and to the ACC-based BNC for the empty graph initialization. Table 31 reveals that in contradiction to the comparison of ACC values, while comparing the IM scores, the IM-based BNC is statistically significantly superior to CEN-, MCC-, MAE-, and ACC-based BNCs regardless of the initial graph. Also, it reveals that the \(\hbox {IM}_\alpha \)-based BNC is not superior to the MI-based classifier, but is superior to all other classifiers. The Wilcoxon test displayed in Table 32 shows that for the NBC initial graph, the \(\hbox {IM}_\alpha \)-based BNC is superior also to the IM-based BNC, which was not the case in the Nemenyi test, besides being significantly superior to the ACC-based BNC, which was not the case when compared based on ACC in Table 30. If we allow the confidence level in the Nemenyi test to be 0.1, then the \(\hbox {IM}_\alpha \)-based BNC is superior to the IM-based BNC also in the Nemenyi post hoc test.

Discussion of UCI and real-world databases experiments

After showing the advantages of our proposed algorithm on artificial databases, in this experiment, we focused on UCI and real-world databases from a variety of problems/domains. The experiment showed that IM and \(\hbox {IM}_\alpha \) did not fall behind the ACC-based BNC, and were even better with respect to classification accuracy (not significant) and IM score (significant). In most of the databases with a high number of classes, such as ALS, Bostonhousing, Car, Missed due date, and Shuttle, the \(\hbox {IM}_\alpha \)-based BNC outperforms all other classifiers (even) with respect to accuracy. Also, on average, it has the best ACC, IM, and \(\hbox {IM}_\alpha \), and the lowest (best) rank regardless of the initial graph. Also, the \(\hbox {IM}_\alpha \)-based BNC showed better AUC and F-measure results. However, the fact that AUC scores for all algorithms were very similar may indicate that there is a lack of ability to compare imbalanced-ordinal databases based on this measure.