Abstract

Recognition of COVID-19 is a challenging task which consistently requires taking a gander at clinical images of patients. In this paper, the transfer learning technique has been applied to clinical images of different types of pulmonary diseases, including COVID-19. It is found that COVID-19 is very much similar to pneumonia lung disease. Further findings are made to identify the type of pneumonia similar to COVID-19. Transfer Learning makes it possible for us to find out that viral pneumonia is same as COVID-19. This shows the knowledge gained by model trained for detecting viral pneumonia can be transferred for identifying COVID-19. Transfer Learning shows significant difference in results when compared with the outcome from conventional classifications. It is obvious that we need not create separate model for classifying COVID-19 as done by conventional classifications. This makes the herculean work easier by using existing model for determining COVID-19. Second, it is difficult to detect the abnormal features from images due to the noise impedance from lesions and tissues. For this reason, texture feature extraction is accomplished using Haralick features which focus only on the area of interest to detect COVID-19 using statistical analyses. Hence, there is a need to propose a model to predict the COVID-19 cases at the earliest possible to control the spread of disease. We propose a transfer learning model to quicken the prediction process and assist the medical professionals. The proposed model outperforms the other existing models. This makes the time-consuming process easier and faster for radiologists and this reduces the spread of virus and save lives.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The CORONA Virus Disease (COVID-19) is a pulmonary disease brought about by Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2). This pandemic has imposed tremendous loss to life so far. World Health Organization (WHO) is persistently observing and publishing all reports of this disease outbreak in various countries. This COVID-19 is a respiratory ailment and to a greater extent spread through droplets in air. The infection is transmitted predominantly via close contact and by means of respiratory droplets delivered when an individual coughs or sneezes. The symptoms of this virus are coughing, difficulty in breathing and fever. Trouble in breathing is an indication of conceivable pneumonia and requires prompt clinical consideration. No antibody or explicit treatment for COVID-19 contamination is available. Emergency clinics provide isolation wards to infected individuals. It is the most common when individuals are symptomatic, yet spread might be conceivable before symptoms appear. The infection can sustain on surfaces for 72 hours. Symptoms of COVID-19 start to appear somewhere in between the range of 2 to 14 days, with a mean of 7 days. The standard technique for analysis is by real time Reverse Transcirption Polymerase Chain Reaction (RT-PCR) performed on a nasopharyngeal swab sample. The same disease can likewise be analyzed from a mix of manifestations, risk factors and a chest CT demonstrating highlights of pneumonia. Many countries are unable to limit the wide spread of COVID-19 quickly due to insufficient medical kits. Many researches are carried out across the globe to handle the pandemic scenario. Many deep leaning models are proposed to predict the COVID-19 symptoms at the earliest to control the spread. We propose a transfer learning model over the deep learning model to further quicken the prediction process.

The literature survey of the proposed work is explained in Section 2. Proposed model is explored in Section 3. Experiment and results are discussed in Section 4. Discussion and conclusion are presented in Section 5 and Section 6 respectively.

2 Related Work

To limit the transmission of COVID-19 [11], screening large number of suspicious cases is needed, followed by proper medication and quarantine. RT-PCR testing is considered to be the gold standard grade of testing, yet with significant false negative outcomes. Efficient and rapid analytical techniques are earnestly anticipated to fight the disease. In view of COVID-19 radio graphical differences in CT images, we propose to develop a deep learning model that would mine the unique features of COVID-19 to give a clinical determination in front of the pathological test, thereby sparing crucial time for sickness control.

Understanding the basic idea of COVID-19 and its subtypes, varieties might be a continual test, and the same should be made and shared all over the world. Baidu Research [14] discharge its LinearFold calculation and administrations, which can be utilized for whole genome optional structure forecasts on COVID-19, and is evidently multiple times quicker than different calculations. Pathological discoveries of COVID-19 related with intense respiratory pain disorder are exhibited in [4]. In [29], Investigations included: 1) rundown of patient attributes; 2) assessment of age appropriations 3) computation of case casualty and death rates; 4) geo-temporal examination of viral spread; 5) epidemiological bend development; and 6) subgroup

Another [6] to examine the degree of COVID-19 disease in chosen populace, as controlled through positive neutralizer tests in everyone has been created. Chen H [5] proposed that limited information is accessible for pregnant ladies with COVID-19 virus. This examination meant to assess the clinical attributes of COVID-19 in women during pregnancy period. Shan F [19] suggested that CT screening is vital for conclusion, appraisal and organizing COVID-19 disease. Follow-up checks of each 3 to 5 days regularly are suggested for illness movement. It is concluded that peripheral and bilateral Ground Glass Opacification (GGO) with consolidation will be more prevalent in COVID-19 infected patients. Gozes [8] created AI-based computerized CT image examination instruments for location, measurement, and following of Coronavirus. Wang S [23] proposed a deep learning system that uses CT scan images to detect COVID- 19 disease. Wang Y [27] presented a critical side effect in COVID-19 as Tachypnea, increasing rapid respiration. The examination can be used to recognize different respiratory conditions and the gadget can be used handy. Rajpurkar P [17] presented the algorithm that detected pneumonia using cheXNet system which is more accurate. Xu X [28] exhibited that the traditional method used for identifying COVID-19 has low positive rate during initial stages. He developed a model which does the early screening of CT images. Yoon SH [31] found that the COVID-19 pneumonia that affected people in Korea exhibits similar characteristics as people living in China. They are found to have same characteristics when analyzed for patients. Singh R [22] evaluated the impact of social distancing on this pandemic with taking age structure into account. The papers provide a solution for this pandemic caused by novel coronavirus. Many researches are also carried out using Image processing techniques on COVID-19. Recently Subhankar Roy [2] proposed the deep learning technique for classification of Lung Ultrasonography (LUS) images. Classification of COVID-19 was carried out with CT Chest images with help of evolution–based CNN network in [18]. In study [3], Harrison X. Bai proposed that main feature for discriminating COVID-19 from Viral pneumonia is peripheral distribution, ground-glass opacity and vascular thickening. This study can distinguish COVID-19 from Viral pneumonia with high specificity using CT scan images and CT scan features. The same study [3] also suggested that both COVID-19 affected patient and Viral pneumonia affected patients develop central+peripheral distribution , air bronchogram , pleural thickening, pleural effusion and lymphadenopathy with no significant differences. General similarities between two viruses is that both cause respiratory disease, which can be asymptomatic or mild but can also cause severe disease and death. Second, both viruses are transmitted by contact or droplets. These predominant similarities with pneumonia viruses (influenza and SARS-CoV-2) urged us to proceed with the proposed work. The primary limitations that are analyzed so far are as follows:

-

1.

Use of CT and CXR images: The COVID-19 virus attacks the cells in the respiratory tracts and predominantly the lung tissues; we can use the images of Thorax to detect the virus without any test-kit. It is inferred that the chest X-Ray are of little incentive in initial stages, though CT scans of the chest are valuable even before the symptoms appear.

-

2.

Less testing period: The test taken to identify the COVID-19 is not fast enough. Especially during initial stages of the virus development, it is very hard to assess the patients. The manual analysis of CXR and CT scans of many patients by radiologists requires tremendous time. So we need the automated system which can save radiologist’s valuable time.

-

3.

Reusing the existing model: In this paper, a novel existing system can be reused for identifying the COVID-19 using CT scan and Chest X-Ray images. This can precisely detect the abnormal features that are identified in the images.

To resolve the limitations stated above, the paper has been proposed using transfer learning model.

3 Proposed work

In this section, the proposed transfer learning model along with Haralick features used in the model are discussed.

3.1 Transfer learning

In this proposed work, a novel system has been presented to identify COVID-19 infection using deep learning techniques. First, transfer learning has been performed to images from NIH [21, 26, 30] Chest X-Ray-14 dataset to identify which disease has maximal similarity to COVID-19. It is found that pneumonia is much similar to COVID-19. The Fig. 1 represents images of the Chest X-Ray for various types of lung conditions.

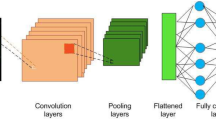

Transfer learning [9, 24] is a method where the knowledge gained by a model (Viral pneumonia detection model) for a given data is transferred to evaluate another problem of similar task (COVID-19 detection model). In transfer learning, a initial training is carried out using a large amount of dataset to perform classification. The architecture of the proposed transfer learning model is delineated in Fig. 2. The CXR and CT images of various lung diseases including COVID-19, are fed to the model. First, the images are preprocessed to get quality images.

The histogram equalization and Weiner filters are applied to increase the contrast and remove the noise respectively as image enhancement techniques to increase the quality of the images. Histogram equalization provides enhanced better quality image without loss of information. Weiner filter is used to determine target process by filtering the noisy regions in an image. The deconvolution is performed to the blurred images by minimizing the Mean Square Error(MSE). The area of interest is chosen using ITK-SNAP software.The image resizing is achieved using python PIL automatically. The images after applying image enhancement techniques are presented in Fig. 3.

Haralick texture features are obtained from the enhanced images and these modified images are then fed into various pre-defined CNN models. The haralick features are discussed in the next following section.

In various pre-defined CNN models like Resnet50, VGG16 and InceptionV3, the convolutional layers are used to extract the image features and max pooling layers are used to down sample the images for dimensionality reduction, intermediate results are shown in Fig. 4.

The regularization is done by the dropout layers which speedup the execution by expelling the neurons whose contribution to the yield is not so high. The values of weights and bias are initialized randomly. The image size is chosen as 226x226. Adam optimizer takes care of updating the weights and bias. The sample images are trained in batches of size 250. Early stop** is employed to avoid over-fitting of data.

The five different CNN models are also built with different configurations to analyze the various results as shown in Fig. 5.

The stride value and dilation is chosen to be 1 which is the default value. Since these models perform one class classification (i.e.) either the sample will belong to that class or not, this is same as binary classification. So sigmoid function is used as the activation function in the fully connected layer as mentioned in Eq (1).

For the convolution layer and max-pooling layers ReLu function is utilized to activate the neurons and it is defined in Eq (2).

Each model is trained with dropout of 0.2 or 0.3. The transfer learning model is applied to predict the COVID-19 images instead of develo** a new deep learning model from the scratch, since it takes more training time. The different pre-trained models and different CNN configured models are trained and tested with different lung disease images, the one, VGG16, had given a lesser misclassification with viral pneumonia, would be taken for prediction of COVID-19 cases by the proposed transfer learning model.

3.2 Haralick Texture Feature Extraction

The Haralick features [16] are extracted from images that are resized as mentioned in the Fig. 2. Haralick features very well describe the relation between intensities of pixels that are adjacent to each other. Haralick presented fourteen metrics for textual features which are obtained from Co-occurrence matrix. It provides the information about how the intensity in a particular pixel with a position is related to neighbouring pixel. The Gray-Level Co-occurrence Matrix (GLCM) is constructed for the images with N dimensions where N refers to the number of intensity levels in the image. For each image GLCM is constructed to evaluate fourteen features. The calculation of those 14 features leads to identification of new inter-relationship between biological features in images. The relationship between intensities of adjacent pixels can be identified using these features. These relationship among pixels contains information related to spatial distribution of tone and texture variations in an image. The homogeneity of image(F1) which is the similarity between pixels, is given by Eq (3), where p(k,l) is position of element in matrix.

The measure of difference between maximum pixel value and minimum pixel value (contrast)(F2), is given by Eq (4) where m is |k−l|. p(k,l) is position of element in matrix.

The dependencies of pixel’s gray levels which are adjacent to each other (F3), is given by Eq (5) also called correlation. Where μk,μl, σk, σl are mean and standard deviations of the probability density functions where p(k,l) is position.

The square differences from mean of an image (F4), are averaged in Eq (6) which is called variance or sum of squares, where p(k,l) is position and μ is the mean value.

The local homogeneity of an image (F5), is given by Eq (7) which is also called Inverse Difference Moment (IDM).

The mean values in a given image (F6), are summed to get sum average as given in the Eq (8), where a and b are row and column positions in the co-occurrence matrix summed to a+b.

The variance values of an image (F7), is summed to get sum variance as exhibited in Eq (9).

The total amount of information that must be coded for an image (F8), is given by Eq (10) which is called sum entropy.

The amount of information that must be coded for an image (F9), is given by the Eq (11) is called entropy.

The variance of an image (F10), is differenced to get difference variance presented in Eq (12).

The entropy values of an image (F11), is differenced to get difference entropy as delineated in Eq (13).

The Eq (14) shows the information measures of correlation1 (F12), where HX and HY are entropies of Fx and Fy.

The Eq (15) shows the information measures of correlation2 (F13), where HX and HY are entropies of Fx and Fy.

In the above equation HXY,HXY1 and HXY2 are as mentioned below in Eq (16), Eq (17) and Eq (18).

The Eq (16) is the linear interdependence of pixels in an image (F14), where p(k,i) and p(l,i) are positions as mentioned in Eq. (19).

4 Experiments and results

In this section, the dataset for carrying out the experiments have been discussed. In addition to this, all the results and statistical analyses have been presented.

4.1 Dataset

The data for COVID-19 is assimilated from various resources available in Github open repository, RSNA and Google images. The data collected from various resources are presented in Table 1.

The data [21, 26, 30] for the Chest X-Ray pulmonary diseases are obtained from NIH with total of 81,176 observations with disease labels from 30,805 unique patients are shown in Table 2. The images are of size 1024x1024.

The data [12] for the viral, bacterial pneumonia and normal images are obtained from Mendeley with total of 5,232 images as shown in Table 3.

4.2 Results

The misclassification rate is calculated for all the pre-trained models like VGG16, Resnet50, and InceptionV3. The misclassification rate in Eq (20) is used to find the models which are similar to COVID-19, where N is total number of images, Fn is number of data that are actually COVID-19 but wrongly classified as not COVID-19 and Fp is number of data that are not COVID-19 but wrongly classified as COVID-19.

From Table 4, we can see the architecture 1 shows a better result when compared with other architectures with less misclassification rate. These architectures are shown in Fig. 5.

From Table 5, we can identify that the COVID-19 data is very much similar to pneumonia, consolidation and effusion. It is evident that COVID-19 data when tested for pneumonia trained model produces less misclassification rate.

In Table 6, we can determine that transfer learning has produced better accuracy (ACCURACY1) compared with traditional learning accuracy (ACCURACY2). This is because the data for COVID-19 is similar to pneumonia. Because of this reason, when model is trained for pneumonia and tested with COVID-19 data, the accuracy is better. The time taken for VGG16 is less because it is only 16 layers deep while resent50 and inceptionV3 are 50 and 48 layers deep respectively even with better accuracy compared with other models. The models are trained using NVIDIA TESLA P100 GPUs provided by Kaggle.

Further analyzing the pneumonia images, we can perform transfer learning for two types of pneumonia. It is found that COVID-19 is as similar as viral pneumonia. The VGG16 model correctly identifies the COVID-19 data with 0.012 misclassification rate as shown in Table 7.

We can find from Table 8 that out of 407 images for COVID-19 and normal images, 385 COVID-19 images are correctly classified as COVID-19 and 22 images are falsely classified under non viral pneumonia class. This shows that COVID-19 is very similar to viral pneumonia. 28 images are misclassified which eventually made misclassification rate for viral pneumonia of 0.012.

From Fig. 6 we can find that the pre-trained VGG16 model has correctly classified the CT scan image of chest as COVID- 19. The top right image has got ground glass opacity on the right lower lobe of chest which is filled with air. While the image on top and bottom left are normal CT chest images. In the bottom right image, we can find extensive ground-glass opacities in lungs involving almost the entire lower left and lower right indicating COVID-19 virus.

From Fig. 7, we can find that the VGG16 model has classified the Chest X-Ray images correctly. Here we can see that the images on the right side of the Fig. 7, has got increased patchy opacity in the right lower lobe. While the images on the left are seems to be more clear. The left side pictures are normal lung images which are correctly classified by VGG16.

CT scan carried out for a same person has peculiar features because the patient does not have any nodules or consolidation like reticular opacities as previous images. The image has got small patchy glass opacity in the center of lungs developed from the peripheral. These images are also precisely classified with more similarity percentage. So it has found a similar image from the training set. This shows that the model has been consistent with all the peculiar cases like the one shown in Fig. 8. This is the reason why the model is trained using both Chest CXR and CT scan images.

The loss and accuracy graphs are shown in Fig. 9 and Fig. 10 respectively. We can see the steady increase in accuracies and steady decrease in loss values while training and testing. This shows that model is more effective and efficient.

After finding the similar models, the final classification is carried out to calculate confusion matrix for model evaluation. Tables 9, 10 and 11 shows the confusion matrix for the conventional and transfer learning models of COVID-19 data when tested for viral pneumonia models. Tables 12, 13 and 14 show the classification reports for all three models with precision, recall and F1-scores to analyze the performance where C1 denotes normal class, C2 is bacterial pneumonia class, C3 is viral pneumonia class and C4 is COVID-19 class. The precision and recall for all the classes are found to be promising. The F1-score as shown in Eq (21) is calculated using precision in Eq (22) and recall in Eq (23), where Tp is number of data that are COVID-19 and are correctly classified as COVID-19. This shows the model is skilled and classified the images precisely. The transfer learning gives better outcomes when compared with normal classification.

Sample of 14 haralick features of 10 sample images are seen through Table 15, Table 16 and Table 17 for Normal image, viral pneumonia and COVID-19 images. Then haralick features of 200 images of normal, viral pneumonia and COVID-19 are analyzed. From this analysis, it is concluded from Tables 18, 19 and 20 that the feature F4, F6 and F7 should lie only within certain ranges. Other features exhibit values with fewer deviations. The range of values for an image to be detected as normal is as shown in the Eq (24), Eq (25) and Eq (26).

For the image to be identified as viral pneumonia affected lung images the values of the features must lie within the range as shown in the Eq (27), Eq (28) and Eq (29).

For the image to be identified as COVID-19 affected lung images the values of the features must lie within the range as shown in the Eq (30), Eq (31) and Eq (32).

4.3 Performance Comparison

The efficacy of the proposed model is compared with other recent studies on COVID-19 conventional classification works and it is given in Table 21. From this performance analysis, the proposed transfer learning model outperforms the other existing models.

4.4 Visualisation

The infected region of lung images are identified using GradCAM. Images in the Fig. 11 shows heatmap visualization based on the prediction made by the transfer learning model(VGG-16) which produces better accuracy. Using GradCAM we can visually validate where the proposed network is scanning and verifying that it is indeed screening the correct patterns in the image and activating around those patterns which will be used by our model for classification.This heatmap generated shows the infected regions that are correctly identified by our model. To sum up GradCAM, the images are passed into the completely trained model and features are extracted from the last convolution layer. Let fi be the ith feature map and let wf,i be the weight in the final classification layer for feature map i leading to f. We obtain a map Mf of the most salient features utilized in categorizing the image as having f by calculting the weighted sum of the features using the assigned weights. It is given in the Eq. (33).

5 Discussion

World Health Organisation(WHO) has recommended RT-PCR testing for the suspicious cases and this has not been followed by many countries due to shortage of the testing kit. Here the transfer learning technique can provide a quick alternative to aid the diagnoses process and thereby limiting the spread. The primary purpose of this work is to provide radiologists with less complex model which can aid in early diagnosis of COVID-19. The proposed model produces precision of 91% , recall of 90% and accuracy of 93% by VGG-16 using transfer learning, which outperforms other existing models for this pandemic period.

6 Conclusion

This COVID-19 detection model has been developed with kee** in mind the challenges prevailing in the field of COVID-19 detection using data assimilated from multiple sources. Analysis of unusual features in the images is required for detection of this virus infection. The earlier we detect the viral infection, the more it helps in saving lives. This paper has been visualized in holistic approach taking into account the critical issues that are daunting in the domain. The results are fairly consistent for all peculiar cases. We hope the outcomes discussed in this paper serves a small steps for constructing cultivated COVID-19 detection model using CXR and CT images. In future work, more data can be assimilated for better results which further strengthen the proposed model.

References

Apostolopoulos I, Tzani M (2020) Covid-19: Automatic detection from x-ray images utilizing transfer learning with convolutional neural networks, vol 43

Ayan E, Unver H (2019) Diagnosis of pneumonia from chest x-ray images using deep learning

Bai H, Hsieh B, **ong Z, Halsey K, Choi J, Tran T, Pan I, Shi L-B, Wang D-C, Mei J, Jiang X-L, Zeng Q-H, Egglin T, Hu P-F, Agarwal S, **e F, Li S, Healey T, Atalay M, Liao W-H (2020) Performance of radiologists in differentiating covid-19 from viral pneumonia on chest ct

Bai Y, Yao L, Wei T, Tian F, ** D-Y, Chen L, Wang M (2020) Presumed asymptomatic carrier transmission of covid-19, vol 323

Chen H, Guo J, Wang C, Luo F, Yu X, Zhang W, Li J, Zhao D, Xu D, Gong Q, Liao J, Yang H, Hou W, Zhang Y (2020) Clinical characteristics and intrauterine vertical transmission potential of covid-19 infection in nine pregnant women: a retrospective review of medical records, vol 395

Dhama K, Khan S, Tiwari R, Sircar S, Bhat S, Malik Y, Singh K, Chaicumpa W, Bonilla-Aldana D, Rodriguez-Morales A (2020) Coronavirus disease 2019 – covid-19

Elasnaoui K, Youness C, Idri A (2020) Automated methods for detection and classification pneumonia based on x-ray images using deep learning

Gozes O, Frid-Adar M, Greenspan H, Browning P, Zhang H, Ji W, Bernheim A (2020) Rapid ai development cycle for the coronavirus (covid-19) pandemic: Initial results for automated detection & patient monitoring using deep learning ct image analysis

Harsono I, Liawatimena S, Cenggoro TW (2019) Lung nodule texture detection and classification using 3d cnn, vol 13

Hemdan E E-D, Shouman M, Karar M (2020) Covidx-net: A framework of deep learning classifiers to diagnose covid-19 in x-ray images

Huang L, Han R, Ai T, Yu P, Kang H, Tao Q, **a L (2020) Serial quantitative chest ct assessment of covid-19: Deep-learning approach. Radiology: Cardiothoracic Imaging 2:e200075. https://doi.org/10.1148/ryct.2020200075

Kermany DS, Zhang K, Goldbaum MH (2018) Labeled optical coherence tomography (oct) and chest x-ray images for classification

Khan A, Shah J, Bhat M (2020) Coronet: A deep neural network for detection and diagnosis of covid-19 from chest x-ray images. Comput Methods Prog Biomed 196:105581. https://doi.org/10.1016/j.cmpb.2020.105581

McCall B (2020) Covid-19 and artificial intelligence: protecting health-care workers and curbing the spread, vol 2

Oh Y, Park S, Ye J (2020) Deep learning covid-19 features on cxr using limited training data sets

Porebski A, Vandenbroucke N, Macaire L (2008) Haralick feature extraction from lbp images for color texture classification

Rajpurkar P, Irvin J, Zhu K, Yang B, Mehta H, Duan T, Ding D, Bagul A, Langlotz C, Shpanskaya K, Lungren M, Ng A (2017) Chexnet: Radiologist-level pneumonia detection on chest x-rays with deep learning

Roy S, Menapace W, Oei S, Luijten B, Fini E, Saltori C, Huijben I, Chennakeshava N, Mento F, Sentelli A, Peschiera E, Trevisan R, Maschietto G, Torri E, Inchingolo R, Smargiassi A, Soldati G, Rota P, Passerini A, Demi L (2020) Deep learning for classification and localization of covid-19 markers in point-of-care lung ultrasound

Shan F, Gao+ Y, Wang J, Shi W, Shi N, Han M, Xue Z, Shen D, Shi Y (2020) Lung infection quantification of covid-19 in ct images with deep learning

Shi F, **a L, Shan F, Wu D, Wei Y, Yuan H, Jiang H, Gao Y, Sui H, Shen D (2020) Large-scale screening of covid-19 from community acquired pneumonia using infection size-aware classification

Singh D, Chahar V, Vaishali, Kaur M (2020) Classification of covid-19 patients from chest ct images using multi-objective differential evolution-based convolutional neural networks, vol 39

Singh R, Adhikari R (2020) Age-structured impact of social distancing on the covid-19 epidemic in india (updates at https://github.com/rajeshrinet/pyross)

Wang S, Kang B, Ma J, Zeng X, **ao M, Guo J, Cai M, Yang J, Li Y, Meng X, Xu B (2020) A deep learning algorithm using ct images to screen for corona virus disease (covid-19)

Wang S, Kang B, Ma J, Zeng X, **ao M, Guo J, Cai M, Yang J, Li Y, Meng X, Xu B (2020) A deep learning algorithm using ct images to screen for corona virus disease (covid-19)

Wang S, Zha Y, Li W, Wu Q, Li X, Niu M, Wang M, Qiu X, Li H, Yu H, Gong W, Li L, Yongbei Z, Wang L, Tian J (2020) A fully automatic deep learning system for covid-19 diagnostic and prognostic analysis

Wang X, Peng Y, Lu L, Lu Z, Bagheri M, Summers R (2017) Chestx-ray8: Hospital-scale chest x-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. ar**v:1705.02315

Wang Y, Hu M-H, Li Q, Zhang X-P, Zhai G, Yao N (2020) Abnormal respiratory patterns classifier may contribute to large-scale screening of people infected with covid-19 in an accurate and unobtrusive manner

Xu X, Jiang X, Ma C, Du P, Li X, Lv S, Yu L, Chen Y, Su J, Lang G, Li Y, Zhao H, Xu K, Ruan L, Wu W (2020) Deep learning system to screen coronavirus disease 2019 pneumonia

Xu Z, Shi L, Wang Y, Zhang J, Huang L, Zhang C, Liu S, Zhao P, Liu H, Zhu L, Tai Y, Bai C, Gao T, Song J-W, **a P, Dong J, Zhao J, Wang F-S (2020) Pathological findings of covid-19 associated with acute respiratory distress syndrome, vol 8. https://doi.org/10.1016/S2213-2600(20)30076-X

Xue Z, You D, Candemir S, Jaeger S, Antani S, Long L, Thoma G (2015) Chest x-ray image view classification. Proceedings - IEEE Symposium on Computer-Based Medical Systems 2015:66–71. https://doi.org/10.1109/CBMS.2015.49

Yoon SH, Lee K, Kim J, Lee Y, Ko H, Kim K, Park C M, Kim Y-H (2020) Chest radiographic and ct findings of the 2019 novel coronavirus disease (covid-19): Analysis of nine patients treated in korea, vol 21

Zhao J, Zhang Y, He X, **e P (2020) Covid-ct-dataset: A ct scan dataset about covid-19

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article belongs to the Topical Collection: Artificial Intelligence Applications for COVID-19, Detection, Control, Prediction, and Diagnosis

Rights and permissions

About this article

Cite this article

Perumal, V., Narayanan, V. & Rajasekar, S.J.S. Detection of COVID-19 using CXR and CT images using Transfer Learning and Haralick features. Appl Intell 51, 341–358 (2021). https://doi.org/10.1007/s10489-020-01831-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-020-01831-z