Abstract

This article provides an overview of the theory of electron transfer. Emphasis is placed on the history of key ideas and on the definition of difficult terms. Among the topics considered are the quantum formulation of electron transfer, the role of thermal fluctuations, the structures of transition states, and the physical models of rate constants. The special case of electron transfer from a metal electrode to a molecule in solution is described in detail.

Introduction

Electron transfer is a type of quantum transition, in which an electron delocalizes from one stationary state and localizes in another stationary state, thereby inducing a change in the occupation number of both states. It is observed in many processes that occur in nature and has been widely studied by chemists, physicists, biochemists, and electrical engineers. This article provides an overview of the theory of electron transfer, with a focus on electron transfer from solid electrodes to species dissolved in electrolyte solutions. The general situation is illustrated in Fig. 1.

Fig. 1 Electron transfer from a donor species D to an acceptor species A in an electrolyte solution. In general, D and A may consist of two molecules, or two electrodes, or one molecule and one electrode. Throughout the present work, it is assumed that D and A are surrounded by solvent molecules and electrolyte ions.

Across the physical sciences, electron transfer is observed in many different contexts and on many different timescales. In electrochemistry, for example, it is the principal step in corrosion, electroplating, electrowinning, and electrolysis generally. It is also the energy-transducing process in batteries, fuel cells, solar cells, and supercapacitors. In biology, electron transfer plays a crucial role in photosynthesis, respiration, nitrogen fixation, and in many other biochemical cycles. As a result, more than 10% of the structurally characterized proteins in the Protein Data Bank are redox proteins, i.e., proteins that participate in, or catalyze, electron transfer. In environmental science, electron transfer is fundamental to the chemistry of the world’s oceans and to the dispersal and remediation of metals in the natural environment. Finally, electron transfer is central to the emerging area of molecular electronics.

Interest in electron transfer has grown enormously over the past century, and the development of the field has been aided by contributions from some of the finest minds in science. However, despite its long and intriguing history, I have made no attempt in the present work to summarize the complex interplay of all the various theories that have been suggested at one time or another. That would be an immense undertaking. Instead, I have tried to provide a short, logical introduction to the foundations of the theory, in a form congenial to the nonexpert. How far I have succeeded in that task must be left to the judgment of the reader.

Any description of electron transfer requires knowledge of the behavior and distribution of electrons around atomic nuclei. This fact was realized very shortly after Joseph Thomson confirmed the existence of the electron in 1897 [1]. Indeed, Thomson himself tried to develop an electron theory of valence. However, progress was slow until Niels Bohr developed the first satisfactory dynamic model of an atom in 1913 [2]. In Bohr’s model, each electron was allowed to move in an orbit according to the classical laws of electrostatics, and then some ad hoc assumptions were introduced that had the effect of restricting the electrons to certain energy states corresponding to quantized values of angular momentum. A defect of Bohr’s model, however, was that it allowed electrons to have precise values of position and momentum simultaneously, and evidence soon mounted against that. In addition, scattering experiments began to suggest that electrons might have a wavelike character. The resulting intellectual crisis was not resolved until 1926, when Erwin Schrödinger formulated wave mechanics and derived his eponymous equation [3]. It was one of the triumphs of the Schrödinger approach that the existence of discrete energy levels followed from the laws of wave mechanics and not vice versa.

The year after Schrödinger’s work was published, Werner Heisenberg proposed his famous Uncertainty Principle [4]. According to the principle, one can never know both the position and the momentum of an electron precisely at the same time. At fixed momentum, we must replace certainty with mere probability that the electron is at a particular location. Mathematically, this means that we must define the location of an electron by means of a probability function. The value of this function necessarily varies from place to place according to the chance that the electron will be found there. If we call this function ρ (rho) and accept that its value might be different in every small volume of space τ (tau), then ρdxdydz (≡ρdτ) is the probability that a single electron will be found in the small volume dτ surrounding the point (x, y, z). For this reason, ρ is called the probability density function. Rather obviously, since the single electron must definitely be somewhere in the totality of space, we also have

\[ \int_{\text{all space}} \rho \, d\tau = 1 \qquad (1.1) \]

The Born interpretation

A further problem that vexed early researchers was how to connect the probabilistic character of the electron with its wavelike character. Max Born solved this problem in 1926 [5, 6]. If we represent the wave function of an electron by a real function ψ (psi), then according to Born

\[ \rho = \psi^{2} \qquad (1.2) \]

This implies that, for a single electron, the square of the wave function at a certain location is just the probability density that the electron will be found there. This is Born’s Statistical Interpretation of the wave function.

Strictly speaking, Eq. 1.2 works only for an electron in a stationary state, because only in that state can the spatial wave function be taken to be real. For an electron in a nonstationary state, the wave function is complex, and \( \psi^2 \) must then be replaced by the square of its modulus, \( |\psi|^2 \). Or, what amounts to the same thing, \( \psi^2 \) must be replaced by \( \psi\psi^* \), where \( \psi^* \) is the complex conjugate of ψ. However, these mathematical nuances need not detain us (they are readily accommodated within the general scheme of quantum mechanics), and anyway we shall return to them later. For present purposes, it is sufficient to note that, in all cases,

\[ \rho = |\psi|^{2} = \psi\psi^{*} \qquad (1.3) \]

The fact that the probability density ρ(x, y, z) is often concentrated in certain preferred regions of space justifies the well-known interpretation of stationary electronic states as “orbitals” in which the probability of finding the electron is high. In reality, the wave function of an electron stretches to infinity in all directions, and there is always a chance, however small, of finding an electron at an arbitrarily large distance from an atomic nucleus. However, for many purposes, it is sufficient to note that 90% (say) of the electron’s probability density is concentrated inside a well-defined lobe extending a short distance from an atom or molecule. The utility of this idea should not be underestimated: as we shall see later on, the ready visualization of the overlap of orbitals provides an intuitive and easy-to-grasp method of deciding whether electron transfer will occur.
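
Both the normalization condition (the electron must be somewhere) and the idea of a region that holds 90% of the density can be checked numerically for the simplest orbital known exactly, the hydrogen 1s state. The sketch below is an illustration only. It assumes atomic units (distances in bohr) and the textbook density \( |\psi_{1s}|^2 = e^{-2r}/\pi \). It integrates \( 4\pi r^2 \rho \) with Simpson's rule and bisects for the radius that encloses a chosen fraction. The function names are hypothetical.

```python
import math

def radial_probability_density(r: float) -> float:
    """4*pi*r^2*|psi_1s|^2 for hydrogen in atomic units (assumed textbook density)."""
    rho = math.exp(-2.0 * r) / math.pi   # |psi_1s|^2 = exp(-2r)/pi, r in bohr
    return 4.0 * math.pi * r * r * rho

def enclosed_probability(radius: float, n: int = 4000) -> float:
    """Probability of finding the electron inside a sphere of this radius (Simpson's rule, n even)."""
    h = radius / n
    total = radial_probability_density(0.0) + radial_probability_density(radius)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * radial_probability_density(i * h)
    return total * h / 3.0

def radius_enclosing(fraction: float, lo: float = 0.0, hi: float = 20.0, tol: float = 1e-6) -> float:
    """Bisect for the radius of the sphere that holds the requested fraction of the density."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if enclosed_probability(mid) < fraction:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

print(f"normalization (r < 40 bohr): {enclosed_probability(40.0):.6f}")
print(f"radius enclosing 90%: {radius_enclosing(0.9):.3f} bohr")
```

The normalization comes out as 1 to within the accuracy of the quadrature. The radius enclosing 90% of the density should come out close to 2.66 bohr (about 0.14 nm), in agreement with the closed form \( 1 - e^{-2R}(1 + 2R + 2R^2) = 0.9 \).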

Pathways and probabilities

One of the properties of quantum mechanics that is deeply counterintuitive is the rule for combining probabilities. It is the wave functions that are additive, not the probabilities. This leads to some very peculiar outcomes compared with everyday experience. Suppose, for example, that an electron is able to transfer from a donor species D to an acceptor species A. And suppose, furthermore, that there are two possible pathways between D and A, which for convenience we shall call pathway 1 and pathway 2. It is then of some importance to know how the probabilities associated with the two pathways combine.

Adopting an obvious notation, let us call the probability of electron transfer \( P_1 \) if only pathway 1 is open and \( P_2 \) if only pathway 2 is open. Now, we ask what would happen if both pathways were open at the same time. One might guess that the combined probability of electron transfer, \( P_{12} \), would be

\[ P_{12} = P_1 + P_2 \qquad (1.4) \]

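But that would be wrong! Because it is the wave functions \( \psi_1 \) and \( \psi_2 \) of the two pathways that add (with \( P_1 = |\psi_1|^2 \) and \( P_2 = |\psi_2|^2 \)), the combined probability of electron transfer is in fact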

\[ P_{12} = |\psi_1 + \psi_2|^{2} = P_1 + P_2 + 2\,\mathrm{Re}(\psi_1 \psi_2^{*}) \qquad (1.5) \]

which may be less than either \( P_1 \) or \( P_2 \) alone. In other words, opening a second pathway may actually decrease the overall probability of electron transfer. Indeed, this is routinely observed whenever there is destructive interference between the wave functions \( \psi_1 \) and \( \psi_2 \). It follows that tremendous care must be taken when tracing multiple pathways of electron transfer through complex systems. To avoid problems of this type, we shall, for the remainder of this document, confine our attention to electron transfer events that proceed through single pathways between donor species and acceptor species.
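
The difference between adding probabilities and adding amplitudes can be made concrete with a few complex numbers. The following minimal sketch assumes two hypothetical pathway amplitudes of fixed magnitude and varies only their relative phase. It is a toy calculation, not a model of any real donor–acceptor pair.

```python
import cmath
import math

def probability(amplitude: complex) -> float:
    """Born rule: probability is the squared modulus of an amplitude."""
    return abs(amplitude) ** 2

def both_pathways_open(psi_1: complex, psi_2: complex) -> float:
    """Quantum rule: add the amplitudes first, then take the squared modulus."""
    return probability(psi_1 + psi_2)

# Hypothetical pathway amplitudes (illustrative only); only the relative phase is varied.
psi_1 = 0.30
for phase in (0.0, math.pi / 2, math.pi):
    psi_2 = 0.25 * cmath.exp(1j * phase)
    naive = probability(psi_1) + probability(psi_2)   # "add the probabilities"
    actual = both_pathways_open(psi_1, psi_2)          # "add the amplitudes"
    print(f"relative phase {phase:4.2f} rad: P1 + P2 = {naive:.4f}, P12 = {actual:.4f}")
```

With these values the naive sum is always 0.1525. The amplitude sum gives 0.3025 at zero phase, 0.1525 at a phase of π/2, and 0.0025 at a phase of π. The last value is smaller than either \( P_1 = 0.09 \) or \( P_2 = 0.0625 \) on its own.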

The Schrödinger equation

At the deepest level, the Schrödinger equation is just the quantum equivalent of the conservation of energy. Classically, the conservation of energy states that

\[ T + V = E \qquad (1.6) \]

where T and V are the kinetic energy and potential energy of the system under consideration, and E is the total energy. For example, for a single electron of mass m moving along the x-axis in an electric field such that its electrostatic potential energy is V(x), Eq. 1.6 would read

\[ \tfrac{1}{2} m \dot{x}^{2} + V(x) = E \qquad (1.7) \]

or, defining the momentum \( {p_{\rm{x}}} = m\dot x \),

\[ \frac{p_{\rm x}^{2}}{2m} + V(x) = E \qquad (1.8) \]

Classically, the solution of this last equation gives the motion (orbit) of the electron. However, in quantum mechanics, the same equation is converted into a wave equation by means of a Hamiltonian transformation (see Table 1).

Thus, converting to Hamiltonian operators,
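
\[ \frac{1}{2m}\left(-i\hbar\frac{\partial}{\partial x}\right)^{2} + V(x) = i\hbar\frac{\partial}{\partial t} \qquad (1.9) \]
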
and so
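
\[ -\frac{\hbar^{2}}{2m}\frac{\partial^{2}}{\partial x^{2}} + V(x) = i\hbar\frac{\partial}{\partial t} \qquad (1.10) \]
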
Hence, for the time-independent case, we have

\[ -\frac{\hbar^{2}}{2m}\frac{d^{2}\psi}{dx^{2}} + V(x)\,\psi = E\,\psi \qquad (1.11) \]

and for the time-dependent case we have
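
\[ -\frac{\hbar^{2}}{2m}\frac{\partial^{2}\psi}{\partial x^{2}} + V(x)\,\psi = i\hbar\frac{\partial\psi}{\partial t} \qquad (1.12) \]
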
These are the textbook forms of the Schrödinger equation. In electron transfer theory, we are mainly concerned with the time-dependent form, Eq. 1.12.

Equation 1.12 is a homogeneous linear partial differential equation. (Homogeneous because every term contains ψ; linear because ψ and its derivatives appear only to the first power.) Perhaps the most surprising feature of this equation is that it has an infinite number of solutions, each representing a possible state of the system. Such an overabundance of outcomes leads to a natural question: if there are infinitely many solutions, which one is appropriate in a given circumstance? The answer is determined by the initial and boundary conditions of the problem. At room temperature, however, we are mostly concerned with the lowest energy solution, commonly known as the ground-state solution. It is also natural to ask whether solutions of the Schrödinger equation can be obtained in simple, closed forms. The answer is, generally, no. In most cases, solutions are obtained as infinite series whose coefficients must be determined by recurrence relations, or else numerically.
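
The sketch below illustrates how boundary conditions pick out one solution from infinitely many. It finds a ground-state energy of the time-independent equation numerically by the shooting method. The method integrates \( \psi'' = 2(V - E)\psi \) with fourth-order Runge–Kutta steps, starting deep inside the classically forbidden region. It then adjusts E by bisection until the even-parity condition \( \psi'(0) = 0 \) is met.

The sketch rests on three assumptions:
- units are chosen so that \( \hbar = m = 1 \);
- the test potential is the symmetric harmonic potential \( V = x^2/2 \), chosen only because its exact ground-state energy (\( E_0 = 1/2 \)) is known;
- the energy bracket contains only the ground state.

```python
def rhs(x, psi, dpsi, energy, potential):
    """psi'' = 2 (V(x) - E) psi, in units where hbar = m = 1 (assumption)."""
    return dpsi, 2.0 * (potential(x) - energy) * psi

def slope_at_origin(energy, potential, x_start=-6.0, step=0.005):
    """RK4 from x_start (psi = 0, tiny slope) to x = 0; return psi'(0)."""
    x, psi, dpsi = x_start, 0.0, 1e-6
    for _ in range(int(round(-x_start / step))):
        k1 = rhs(x, psi, dpsi, energy, potential)
        k2 = rhs(x + step / 2, psi + step / 2 * k1[0], dpsi + step / 2 * k1[1], energy, potential)
        k3 = rhs(x + step / 2, psi + step / 2 * k2[0], dpsi + step / 2 * k2[1], energy, potential)
        k4 = rhs(x + step, psi + step * k3[0], dpsi + step * k3[1], energy, potential)
        psi += step / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        dpsi += step / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        x += step
    return dpsi

def ground_state_energy(potential, e_low, e_high, tol=1e-8):
    """Bisect on E until the even-parity boundary condition psi'(0) = 0 holds."""
    s_low = slope_at_origin(e_low, potential)
    while e_high - e_low > tol:
        e_mid = 0.5 * (e_low + e_high)
        s_mid = slope_at_origin(e_mid, potential)
        if (s_mid > 0) == (s_low > 0):
            e_low, s_low = e_mid, s_mid
        else:
            e_high = e_mid
    return 0.5 * (e_low + e_high)

def harmonic(x):
    """Assumed symmetric test potential, V = x^2 / 2."""
    return 0.5 * x * x

E0 = ground_state_energy(harmonic, 0.1, 1.0)
print(f"ground-state energy: {E0:.6f}")
```

Another symmetric potential can be substituted for `harmonic` in the same way, provided the energy bracket is adjusted. For odd-parity states one would require ψ(0) = 0 instead.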

The Born–Oppenheimer approximation

Closed-form solutions of the time-dependent Schrödinger equation are possible only for certain special cases of the electrostatic potential energy V(x). It is particularly unfortunate that the Schrödinger equation has no closed-form solution in essentially all cases where an electron moves under the influence of more than one atomic nucleus, as in electron transfer. However, the equation may be simplified by noting that atomic nuclei have much greater masses than electrons. (Even a single proton is 1,836 times heavier than an electron.) Thus, in classical terms, an electron may be considered to complete several hundred orbits while an atomic nucleus completes just one vibration. An immediate corollary is that, to a good level of approximation, atomic nuclei may be considered motionless while an electron completes a single orbit in a single stationary state. This approximation, known as the Born–Oppenheimer approximation, greatly simplifies the solution of the Schrödinger equation and finds particular use in the solution of computationally intensive problems.

Today, the Born–Oppenheimer approximation is widely used in computer simulations of molecular structure. If the approximation holds, the system energy may be calculated uniquely for every possible position of the atomic nuclei (assumed motionless). This then allows one to construct a plot of total energy as a function of all the nuclear coordinates, yielding a multidimensional “potential energy surface” for the system under study. The contributing factors to this potential energy surface are

1. The Coulomb attractions between the electrons and the nuclei
2. The Coulomb repulsions between the electrons
3. The Coulomb repulsions between the nuclei
4. The kinetic energy of the electrons

It is immediately clear that this is not a true potential energy at all, because it contains a mixture of potential energy and kinetic energy terms. However, it does play the role of a potential energy for the atomic nuclei (its minimum locates their lowest-energy arrangement), and so the name is not entirely unreasonable.
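
Of the four contributions listed above, only the nucleus–nucleus repulsion (item 3) can be written down without solving for the electrons. Within the Born–Oppenheimer approximation the nuclei are clamped, so this term is simply a number at each geometry. The sketch below evaluates it for point nuclei in atomic units. The other three terms require an electronic-structure calculation and are not attempted here. The function name is hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math

def nuclear_repulsion(charges, positions):
    """Sum of Z_i Z_j / r_ij over all pairs of point nuclei (atomic units: hartree, bohr)."""
    energy = 0.0
    for i in range(len(charges)):
        for j in range(i + 1, len(charges)):
            energy += charges[i] * charges[j] / math.dist(positions[i], positions[j])
    return energy

# Illustrative H2-like bond-length scan (hypothetical geometries); nuclei are clamped at each R.
for r in (1.0, 1.4, 2.0):
    v_nn = nuclear_repulsion([1, 1], [(0.0, 0.0, 0.0), (0.0, 0.0, r)])
    print(f"R = {r:.1f} bohr: V_nn = {v_nn:.4f} hartree")
```

This prints 1.0000, 0.7143, and 0.5000 hartree, reflecting the simple 1/R dependence of this one term.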

Remark

The Coulomb attraction between electrons and nuclei is the only attractive force in the whole of chemistry.

The application of computer simulation methods to electron transfer systems raises the question of precisely what is meant by a “system.” Roughly speaking, there are two different answers to this question, depending on one’s point of view. The minimalist view is that the system consists of the donor and acceptor species only. In that case, there is no “friction” (interaction) between the system and the rest of the solution, and consequently the donor and acceptor species conserve their joint energy at all times. As they mutually interact, potential energy may be converted into kinetic energy, or kinetic energy may be converted into potential energy, but the total remains constant, and the system performs deterministic motions along the potential energy profile. Although unphysical, except perhaps in vacuo, this situation is comparatively easy to program on modern computers and has nowadays attained a certain level of acceptability. The alternative view is that the system consists of the donor and acceptor species plus the entirety of the surrounding solution (sometimes called the “heat bath”). In the latter case, there is continual friction (interaction) between the system and the solution, and accordingly the energies of the donor and acceptor species fluctuate wildly. This is more realistic but requires the use of Gibbs energy profiles rather than potential energy profiles to characterize the system and also makes the motion of the system along the reaction coordinate a stochastic (random) variable. Notwithstanding these complications, we have standardized on Gibbs energy profiles throughout the present work.
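
The two views of a “system” can be contrasted in a few lines of code. The sketch below is an illustration under stated assumptions, not a production integrator. It propagates a single harmonic coordinate with velocity Verlet, with m = k = kT = 1 in arbitrary units.

- With the friction coefficient γ set to zero, the system is isolated. Its total energy stays essentially constant, as in the minimalist view.
- With γ > 0, each step also applies friction and a random kick. This is a Langevin thermostat in O-B-A-B-O splitting, a standard technique swapped in here to represent the heat bath. The energy then fluctuates stochastically.

```python
import math
import random
import statistics

def force(x, k=1.0):
    """Restoring force on a toy harmonic coordinate, F = -k x (assumed model)."""
    return -k * x

def total_energy(x, v, m=1.0, k=1.0):
    return 0.5 * m * v * v + 0.5 * k * x * x

def run(steps, dt=0.05, gamma=0.0, kT=1.0, m=1.0, seed=0):
    """Velocity Verlet; gamma > 0 adds heat-bath friction and noise (O-B-A-B-O splitting)."""
    rng = random.Random(seed)
    c1 = math.exp(-gamma * dt / 2.0)              # velocity damping over half a step
    c2 = math.sqrt((1.0 - c1 * c1) * kT / m)      # noise amplitude that restores the bath temperature
    x, v = 1.0, 0.0
    energies = []
    for _ in range(steps):
        v = c1 * v + c2 * rng.gauss(0.0, 1.0)     # O: friction + random kick (no-op if gamma = 0)
        v += 0.5 * dt * force(x) / m              # B: half kick
        x += dt * v                               # A: drift
        v += 0.5 * dt * force(x) / m              # B: half kick
        v = c1 * v + c2 * rng.gauss(0.0, 1.0)     # O: friction + random kick
        energies.append(total_energy(x, v, m))
    return energies

isolated = run(20_000)                 # "minimalist" system: no heat bath
bathed = run(100_000, gamma=1.0)       # system coupled to a heat bath
print(f"isolated: energy spread {max(isolated) - min(isolated):.2e}")
print(f"bathed:   mean energy {statistics.fmean(bathed):.3f}, std {statistics.pstdev(bathed):.3f}")
```

In the thermostatted run, the mean energy should settle near kT, the equipartition value for two quadratic degrees of freedom, with fluctuations of the same order. In the isolated run the energy varies only at the level of the integrator's discretization error.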

Remark

The most probable path between the reactants and products across the Gibbs energy profile is called the reaction coordinate, and the maximum energy along this path is called the Gibbs energy of activation.

For a nonconcerted single-step reaction, beginning at thermodynamic equilibrium and ending at thermodynamic equilibrium, the Gibbs energy of activation is supplied by just one degree of freedom of the system. In this primitive case, the reaction coordinate may be defined in the following convenient way.

Definition

For a nonconcerted single-step reaction, the reaction coordinate is just the extensive variable of the single degree of freedom that takes the system to its transition state. This might be, for example, the length of a single chemical bond or the electrical charge on the ionic atmosphere of an ion.

Remark

For a concerted reaction, the reaction coordinate is necessarily a combination of extensive variables. This is the case, for example, when electron transfer is accompanied by bond rupture, a situation that is outside the scope of the present work.

Tunneling

We now come to the fundamental mechanism of quantum transitions between stationary states. I refer, of course, to tunneling. Among the many outstanding successes of quantum theory over the past 80 years, surely the most impressive has been the discovery of tunneling. Tunneling is the quantum mechanical process by which an electron (or any other light particle) penetrates a classically forbidden region of space and thus transfers between two separate points A and B without localizing at any point in between. For electrons, a “classically forbidden” region of space simply means a region where the electron’s potential energy exceeds its total energy. Because the electron is negatively charged, this corresponds to a region of sufficiently negative electrostatic potential.
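
A rough sense of how quickly tunneling probability falls off with distance comes from the textbook opaque-barrier estimate \( T \approx e^{-2\kappa d} \), with \( \kappa = \sqrt{2m(V - E)}/\hbar \). Here \( V - E \) is the amount by which the barrier exceeds the electron's energy, and d is the barrier width. The sketch assumes a rectangular barrier and ignores order-one prefactors. The 1 eV energy deficit is a hypothetical value chosen for illustration.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J s
M_E = 9.1093837015e-31   # electron mass, kg
EV = 1.602176634e-19     # 1 eV in J

def decay_constant(deficit_ev: float) -> float:
    """kappa = sqrt(2 m (V - E)) / hbar inside a rectangular barrier, in 1/m."""
    return math.sqrt(2.0 * M_E * deficit_ev * EV) / HBAR

def tunneling_factor(deficit_ev: float, width_nm: float) -> float:
    """Opaque-barrier estimate exp(-2 kappa d); order-one prefactors are ignored."""
    return math.exp(-2.0 * decay_constant(deficit_ev) * width_nm * 1e-9)

# Hypothetical 1 eV energy deficit, scanned over a few barrier widths.
for width in (0.5, 1.0, 1.5, 2.0):
    print(f"{width:.1f} nm: {tunneling_factor(1.0, width):.2e}")
```

For a 1 eV deficit, κ is about 5.1 nm⁻¹. Each additional 0.1 nm of barrier therefore reduces the estimate by a factor of roughly 0.36.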

The theory of electron tunneling was initiated by Friedrich Hund in a series of papers published in Zeitschrift für Physik in 1927, where he called the effect “barrier penetration” [7–9]. However, his focus was on the tunneling of electrons between the wells of a double-well potential in a single molecule, so the results were not immediately transferable to electron transfer between molecules. A more widely applicable theory of tunneling appeared one year later. In 1928, Ralph Fowler and Lothar Nordheim explained, on the basis of electron tunneling, the main features of electron emission from cold metals [10]. They were motivated by the fact that electron emission could be stimulated by high electric fields, a phenomenon that had deeply puzzled scientists since its first observation by Robert Wood in 1897 [11]. Also in 1928, Edward Condon and Ronald Gurney proposed a quantum tunneling interpretation of alpha-particle emission [12, 13], which led to widespread acceptance of the tunneling concept. Today, tunneling is recognized as crucially important throughout chemistry, biology, and solid-state and nuclear physics.

Before proceeding further, we must also say a few words about the Franck–Condon principle, which applies to most cases of electron tunneling. The principle states that, to a good level of approximation, all the atomic nuclei in a reacting system are effectively motionless while the process of electron tunneling takes place. James Franck and E.G. Dymond initially recognized a special case of this principle in their studies of the photoexcitation of electrons in 1926 [14]. Edward Condon then generalized the principle beyond photochemistry in a classic 1928 Physical Review article entitled “Nuclear Motions Associated with Electron Transitions in Diatomic Molecules” [15]. Although the Franck–Condon principle may be justified by arguments similar to those that are used to justify the Born–Oppenheimer approximation, it has a much wider scope, as the following “plain English” definitions make clear.

Definition

The Born–Oppenheimer approximation is the approximation that, in a system of electrons and nuclei, the atomic nuclei may be considered motionless while an electron makes a single orbit of a single stationary state.

Definition

The Franck–Condon principle is the approximation that, in a system of electrons and nuclei, the atomic nuclei may be considered motionless while an electron makes a quantum transition between two stationary states.

Besides the Born–Oppenheimer approximation and the Franck–Condon principle, several other methods of approximation have been developed to help solve the Schrödinger equation. The most important of these is called time-dependent perturbation theory. As we have already mentioned, it is impossible to find exact solutions of the Schrödinger equation for electrostatic potential energies of even moderate complexity. However, time-dependent perturbation theory allows some complex cases to be solved by first solving simpler cases and then modifying the results incrementally. The method was perfected by Paul Dirac in 1927 [16]. Besides introducing an efficient notation, Dirac also established an important criterion for successful electron transfer—an electron will localize in an acceptor species only if it has the same energy as it did in the donor species.
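
Dirac's energy-matching criterion can be illustrated with the simplest possible model: an electron shared between one donor state and one acceptor state, coupled by a matrix element V. The sketch uses the exact two-state (Rabi) solution rather than Dirac's perturbation theory itself, a swap made because the exact result fits in a few lines. Units with ħ = 1 and the coupling value are assumptions. The largest acceptor population ever reached is \( 4V^2/(\Delta^2 + 4V^2) \), where Δ is the donor–acceptor energy mismatch. Essentially complete transfer therefore requires Δ ≈ 0.

```python
import math

def acceptor_population(coupling: float, mismatch: float, t: float, hbar: float = 1.0) -> float:
    """Exact two-state (Rabi) population of the acceptor at time t.

    The electron starts on the donor; `coupling` is the donor-acceptor matrix
    element V and `mismatch` is the energy difference E_D - E_A.
    """
    omega = math.sqrt(mismatch ** 2 + 4.0 * coupling ** 2) / hbar
    return max_population(coupling, mismatch) * math.sin(0.5 * omega * t) ** 2

def max_population(coupling: float, mismatch: float) -> float:
    """Largest acceptor population reached at any time: 4V^2 / (Delta^2 + 4V^2)."""
    return 4.0 * coupling ** 2 / (mismatch ** 2 + 4.0 * coupling ** 2)

V = 0.01  # hypothetical coupling, in the same arbitrary energy units as the mismatch
for mismatch in (0.0, V, 10 * V, 100 * V):
    print(f"mismatch = {mismatch:5.2f}: max acceptor population = {max_population(V, mismatch):.4f}")
```

With V = 0.01, the maximum acceptor population is 1.0000 at exact resonance and 0.8000 when the mismatch equals V. It falls to 0.0385 at 10V and 0.0004 at 100V.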

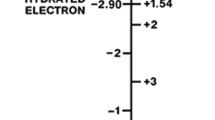

Dirac’s ideas were introduced into electrochemistry by Ronald Gurney in 1931 [17]. Gurney provided a very clear picture of how an electron transfer event occurs. An electron initially resides in a stationary electronic state of a chemical species, which we identify as the donor. Nearby, an empty electronic state exists inside a second chemical species, which we identify as the acceptor. Due to random fluctuations of the electrostatic potential energy of both species, the energies of both states are momentarily equalized, at which point the wave function of the electron begins to build up on the acceptor. By the Born interpretation, this means that the probability of finding the electron builds up on the acceptor. When averaged over a whole population of donor and acceptor species, the rate of this buildup corresponds to the rate of electron transfer. In a vacuum, there are of course no random fluctuations of electrostatic potential energy, and so the coincidence of donor and acceptor energy states is highly improbable. But, in an electrolyte solution, there are so many random fluctuations of electrostatic potential energy that coincidences of electron energy states occur billions of times every second.

To what extent do random fluctuations of electrostatic potential energy in solution affect the energy of an electron inside a molecule? Gurney answered this question with wonderful clarity. Suppose that an electron resides in the nth energy level of a certain molecule, where it experiences an electrostatic potential energy V(x, y, z). Call its energy \( w_{\rm n} \). Then, what we want to know is how this energy changes to a new value \( w_{\rm n}' \) when the electrostatic potential energy of the surrounding ionic atmosphere changes to a new value V(x, y, z) + v(x, y, z). To first order in v, the answer involves an integration over the whole of space, thus
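
\[ w_{\rm n}' = w_{\rm n} + \int_{\text{all space}} v(x, y, z)\, \psi_{\rm n}^{*}\psi_{\rm n}\, d\tau \qquad (1.13) \]
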
However, an approximate (but still sufficiently accurate) answer can be obtained by noting that the electrostatic potential energy varies only slowly, over long ranges. For comparison, the potential energy of two charges \( q_1 \) and \( q_2 \) a distance r apart varies as \( r^{-1} \), whereas the electron density in orbitals decays near-exponentially with r. With such a disparity of length scales, it is reasonable to assume that v(x, y, z) is spatially uniform when evaluating the integral. In that case, v does not depend on position (v ≠ f(τ)) and can be taken outside the integral, so that
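
\[ w_{\rm n}' = w_{\rm n} + v \int_{\text{all space}} \psi_{\rm n}^{*}\psi_{\rm n}\, d\tau = w_{\rm n} + v \qquad (1.14) \]
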
So the answer to our question is that the energy of an electron inside a molecule fluctuates by the same amount as the electrostatic potential energy of the surrounding ionic atmosphere. For this reason, the energy of an electron inside an electrolyte solution bounces up and down like a cork in the ocean.
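
Gurney's argument can be checked numerically in a one-dimensional toy model. The sketch assumes a normalized Gaussian orbital of unit width, a hypothetical stand-in for a real molecular orbital. It evaluates the first-order energy shift (the integral of \( v|\psi|^2 \)) for two perturbations:
- a spatially uniform v;
- a Coulomb-like \( r^{-1} \) potential from a charge twenty orbital widths away.

Units are arbitrary.

```python
import math

def energy_shift(v, width=1.0, half_range=10.0, n=4000):
    """First-order shift: integral of v(x) |psi(x)|^2 dx for a normalized 1D Gaussian orbital.

    |psi|^2 = exp(-x^2 / width^2) / (width * sqrt(pi)); trapezoidal rule on [-half_range, half_range].
    """
    h = 2.0 * half_range / n
    total = 0.0
    for i in range(n + 1):
        x = -half_range + i * h
        density = math.exp(-x * x / width ** 2) / (width * math.sqrt(math.pi))
        weight = 0.5 if i in (0, n) else 1.0
        total += weight * v(x) * density
    return total * h

def uniform(x):
    return 0.2                      # spatially uniform perturbation (hypothetical value)

def distant_charge(x, q=1.0, distance=20.0):
    return q / (distance - x)       # r^-1 potential from a hypothetical charge 20 widths away

print(f"uniform:        shift = {energy_shift(uniform):.6f}  (v = 0.2)")
print(f"distant charge: shift = {energy_shift(distant_charge):.6f}  (v at centre = {distant_charge(0.0):.6f})")
```

In the uniform case the shift equals v exactly. For the distant charge it differs from the value of v at the orbital centre by only about 0.1%, because the \( r^{-1} \) potential hardly changes across the orbital.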

Yet another fascinating question is, “What is the intrinsic timescale of electron tunneling?” While it is not yet possible to give a final answer, we can estimate that the buildup of an electron’s wave function inside an acceptor species in an electrolyte solution typically occurs on a timescale of <1.0 fs. This timescale is determined by the inertia of the “sea of electrons” in the surrounding medium, which must equilibrate with the newly occupied state. Such a timescale is at the limit of present-day measurements and is exceptionally fast compared with transition-state lifetimes, which are typically on the 10–100-fs timescale. Because of this disparity of timescales, the rate-determining step in many electron transfer reactions is commonly the environmental reorganization needed to equalize the energies of the donor and acceptor states, rather than the buildup of the wave function itself. For this reason, electron transfer is often referred to as a “mixed” classical–quantum phenomenon.

In summary, electron transfer requires the energies of two electronic states to be made equal. In electrolyte solutions, the equalization process occurs by random fluctuations of the electrostatic potential energies of both reactive species. The fluctuations are spontaneous; they are driven by heat; and they occur even at thermodynamic equilibrium. They are, indeed, equilibrium fluctuations. It is therefore to this topic that we turn first.

Fluctuations in electrolyte solutions

In this section, we seek to identify, and quantify, the fluctuations that trigger electron transfer in electrolyte solutions. We do this on the assumption that the fluctuations are drawn from the same distribution as those that occur at equilibrium.

Before beginning, let us briefly define what we mean by an electrolyte solution. An electrolyte is any neutral substance that dissociates into mobile ions when dissolved in a solvent. Thus, an electrolyte solution may be any mixture of mobile ions dissolved in a solvent. Compared with an ideal solution, an electrolyte solution has an additional degree of freedom, namely, an ability to store energy by microscopic displacements of charge.

In 1878, James Clerk Maxwell [18] defined thermodynamics as

... the investigation of the dynamical and thermal properties of bodies, deduced entirely from what are called the first and second laws of thermodynamics, without any hypotheses as to the molecular constitution of the bodies.

By contrast, kinetic theories require molecular information. In this section, we shall try, in the spirit of Maxwell, to see how much we can learn about the theory of equilibrium fluctuations without explicitly introducing molecular information. Although such an approach necessarily excludes the evaluation of rates, it provides powerful insights into the kinds of fluctuations that trigger electron transfer and supplies some stringent bounds on the kinetic theory.

Equipartition of energy

At thermodynamic equilibrium, all classical systems experience fluctuations of energy whose magnitude, on average, is \( \tfrac{1}{2}k_{\rm B}T \) per quadratic degree of freedom. This is John James Waterston’s famous equipartition of energy [19]. In the case of electron transfer in an electrolyte solution, both the donor and the acceptor species are surrounded by small volumes of solution that experience such fluctuations, and, in fact, it is these that create the transition state for the electron transfer process. For this reason, it is of utmost importance to describe, and quantify, the various types of fluctuation that occur at equilibrium in electrolyte solutions.
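
To put the equipartition value on a familiar scale, the short calculation below converts \( \tfrac{1}{2}k_{\rm B}T \) into meV and kJ/mol using the exact SI values of the constants. Room temperature (298.15 K) is an assumed example, and the function name is hypothetical.

```python
K_B = 1.380649e-23            # Boltzmann constant, J/K (exact SI value)
E_CHARGE = 1.602176634e-19    # elementary charge, C (exact SI value)
N_A = 6.02214076e23           # Avogadro constant, 1/mol (exact SI value)

def half_kT(temperature_k: float) -> dict:
    """Mean thermal energy per quadratic degree of freedom, (1/2) k_B T, in several units."""
    joules = 0.5 * K_B * temperature_k
    return {
        "J": joules,
        "meV": joules / E_CHARGE * 1e3,
        "kJ/mol": joules * N_A / 1e3,
    }

# Assumed example temperature: 298.15 K.
for unit, value in half_kT(298.15).items():
    print(f"{unit:>6}: {value:.4g}")
```

At 298.15 K this gives about 12.8 meV, or about 1.24 kJ/mol, per quadratic degree of freedom.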

Consider a small system embedded in a much larger system (the heat bath). The situation is illustrated in Fig. 2. The intensive parameters that characterize the small system are T (temperature), P (pressure), f (mechanical force), and ϕ (electric potential). Fluctuations of internal energy are denoted ΔU.

Fig. 2 A small system surrounded by a heat bath. Equilibrium fluctuations of internal energy ΔU have a large effect on the small system but a negligible effect on the large system. As a result, the heat bath retains uniform and constant values of all of its intensive parameters, whereas the small system does not.

We assume that the small system is so tiny that equilibrium fluctuations in its thermodynamic parameters cannot be neglected. Conversely, we assume that the heat bath is so large that equilibrium fluctuations in its thermodynamic parameters can be neglected. The heat bath is also assumed to be isolated from the external world.

Remark

As shown by Boltzmann, equilibrium fluctuations of the intensive parameters of a small system embedded inside a large system form a stationary ergodic process. That is to say, the time-averaged values of the intensive parameters of the small system are identical to the space-averaged values of the intensive parameters of the large system. If it were not so, equilibrium would not be achieved.

From the first law of thermodynamics (the conservation of energy), we know that any fluctuation in the internal energy of the small system ΔU must be accompanied by a complementary fluctuation in the internal energy of the heat bath, \( \Delta U_{\rm HB} \),

\[ \Delta U_{\rm HB} = -\Delta U \qquad (2.1) \]

Further, any fluctuation in the volume of the small system ΔV must likewise be accompanied by a complementary fluctuation in the volume of the heat bath, \( \Delta V_{\rm HB} \),

\[ \Delta V_{\rm HB} = -\Delta V \qquad (2.2) \]

This latter relation might be called the “no-vacuum” condition. To understand its importance, consider what would happen if the small system shrank, but the large system did not expand by the same amount. Then, a vacuum would appear between the two systems, and thermal and hydraulic contact would be lost. Thus, a necessary property of a perfect heat bath is that it does not lose thermal or hydraulic contact with the small system inside it.

We begin our analysis by noting that, for a fluctuated small system having intensive values (T, P, f, ϕ), any infinitesimal increment in the internal energy \( U_{\rm fluc} \) can be written

\[ dU_{\rm fluc} = T\,dS - P\,dV + f\,dL + \phi\,dQ \qquad (2.3) \]

Here, S is entropy; V is volume; L is extension; and Q is charge. In addition, T is absolute temperature; P is pressure; f is tension/compression force; and ϕ is electrostatic potential. We see that each of the terms on the right-hand side of Eq. 2.3 is the product of an intensive quantity (T, P, f, ϕ) and the differential of an extensive quantity (S, V, L, Q). The full equation tells us that, in principle, we can change the internal energy of the small system in various ways, e.g., by changing its entropy S, by changing its volume V, by stretching or compressing it along L, or by changing its electric charge Q. The magnitudes of the corresponding internal energy changes are then set by the sizes of the intensive quantities (T, P, f, ϕ). For example, if we increase the charge on the small system by dQ, then the increase in internal energy is large if the electrostatic potential ϕ is large and small if the electrostatic potential is small.

Thermodynamic availability

In the next step, we must connect the behavior of the small system after it has fluctuated with the behavior before it has fluctuated. In the latter case, the small system has temperature \( T_0 \); pressure \( P_0 \); tension/compression force \( f_0 \); and electrostatic potential \( \phi_0 \). (The subscript “0” indicates the parameter values of the unfluctuated system.) Under “unfluctuated” conditions, any infinitesimal increment in the internal energy \( U_{\rm unfluc} \) can be written

\[ dU_{\rm unfluc} = T_0\,dS - P_0\,dV + f_0\,dL + \phi_0\,dQ \qquad (2.4) \]

and therefore the difference in internal energy between the fluctuated small system and the unfluctuated small system is
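
\[ d\Phi \equiv dU_{\rm fluc} - dU_{\rm unfluc} = (T - T_0)\,dS - (P - P_0)\,dV + (f - f_0)\,dL + (\phi - \phi_0)\,dQ \qquad (2.5) \]
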
In a somewhat different context, the parameter Φ (phi) was termed the “availability” by Joseph Keenan in 1951 [20]. In engineering texts, it is also sometimes known as the “exergy” of the overall system, a term coined by Zoran Rant [21]. Regardless of what one calls it, the thermodynamic potential Φ is the natural potential for quantifying the energy that fluctuates reversibly back and forth between a small system and a large one. Thus, Φ is the natural potential for quantifying the equilibrium fluctuations in electron transfer theory (and, indeed, in chemical rate theory).

Remark

The thermodynamic potential Φ (availability, exergy) is the proper measure of the energy gained by a small system as it experiences an equilibrium fluctuation inside a much larger system (heat bath).
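
A simple worked case shows why Φ behaves as a measure of fluctuation energy. Keep only the thermal term of dΦ, namely \( (T - T_0)\,dS \), and assume (hypothetically) that the small system has a constant heat capacity C, so that dS = C dT/T. Integrating from \( T_0 \) to T gives the standard result \( \Phi = C[(T - T_0) - T_0 \ln(T/T_0)] \). The sketch checks this result against a direct numerical integration. The values of C and \( T_0 \) are illustrative only.

```python
import math

def availability_numeric(heat_capacity, t_bath, t_small, n=10_000):
    """Integrate dPhi = (T - T0) dS, with dS = C dT / T, from T0 to T (midpoint rule)."""
    h = (t_small - t_bath) / n
    total = 0.0
    for i in range(n):
        t = t_bath + (i + 0.5) * h
        total += (t - t_bath) * heat_capacity / t * h
    return total

def availability_closed(heat_capacity, t_bath, t_small):
    """Closed form of the same integral: C [(T - T0) - T0 ln(T / T0)]."""
    return heat_capacity * ((t_small - t_bath) - t_bath * math.log(t_small / t_bath))

C, T0 = 1.0, 298.15          # hypothetical heat capacity (J/K) and bath temperature (K)
for dT in (-10.0, -1.0, 1.0, 10.0):
    phi = availability_closed(C, T0, T0 + dT)
    print(f"dT = {dT:+5.1f} K: Phi = {phi:.5f} J, C dT^2 / (2 T0) = {C * dT**2 / (2 * T0):.5f} J")
```

Φ comes out positive for fluctuations of either sign. For small \( \Delta T \) it is close to \( C\,\Delta T^2/(2T_0) \), so it is second order in the size of the fluctuation.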

The essential difference between the internal energy and the available energy is obvious from the following definitions.

Definition

Internal energy (U) is the total energy of a stationary system that can be dissipated into a vacuum by nonnuclear processes.

Definition

Available energy (Φ) is the total energy of a stationary system that can be dissipated into a heat bath by nonnuclear processes.

Now let T, P, f, and ϕ be the temperature, pressure, tension/compression force, and electrostatic potential of the fluctuated small system inside the heat bath, and let \( T_0 \), \( P_0 \), \( f_0 \), and \( \phi_0 \) be the temperature, pressure, tension/compression force, and electrostatic potential of the unfluctuated small system inside the heat bath. Then, by definition,

Because Φ is a function of state, its differential is exact. Hence,

For a system having n degrees of freedom, we know from the first law of thermodynamics that the detailed expression for dΦ must contain one heat term \( (T - T_0)\,dS \) plus \( n - 1 \) work terms of the form \( (Y - Y_0)\,dX \). That is,

Such a system has 2n primary variables arranged in conjugate pairs whose products have the dimensions of energy. Examples of such conjugate pairs are (P, V) and (ϕ, Q). In each case, the extensive variables are the independent variables. For chemical species in solution, the work terms are predominantly mechanical (vibrational–librational) or electrical in character.

For small n, Eq. 2.8 looks reassuringly benign. But, as n increases, the formula for Φ rapidly becomes unmanageable. Large values of n arise naturally in complex systems because large numbers of vibrational modes are available. Indeed, it is well known that every nonlinear molecule having N atoms has 3N − 6 vibrational degrees of freedom, so that, for example, a redox protein has nearly three times as many vibrational modes as it has atoms. (And even a “small” electron transport protein like rubredoxin contains 850 atoms!) To keep the theory of electron transfer within manageable bounds, we shall therefore limit our attention to four representative extensive variables, namely S, V, L, and Q. This allows us to consider four different types of activation process (thermal, hydraulic, mechanical, and electrical) for electron transfer without becoming bogged down in detail. Thus, we consider

We can immediately simplify this formula by noting that the unfluctuated state is at both mechanical equilibrium (mechanoneutrality) and electrostatic equilibrium (electroneutrality). Hence, \( f_0 = 0 \) and \( \phi_0 = 0 \), so that

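The mode count quoted above is simple bookkeeping: an N-atom molecule has 3N Cartesian degrees of freedom, of which three are translations and three (only two for a linear molecule) are rotations. A minimal Python sketch, using the round figure of 850 atoms given above for rubredoxin:

```python
def vibrational_modes(n_atoms: int, linear: bool = False) -> int:
    """Vibrational degrees of freedom of a molecule with n_atoms atoms.

    3N Cartesian coordinates, minus 3 translations and 3 rotations
    (a linear molecule has only 2 rotational degrees of freedom).
    """
    if n_atoms < 2:
        raise ValueError("a molecule needs at least two atoms to vibrate")
    return 3 * n_atoms - (5 if linear else 6)


modes = vibrational_modes(850)        # rubredoxin, ~850 atoms
print(modes, round(modes / 850, 2))   # 2544 2.99
```

Each additional atom adds three modes, which is why a full expression of the Eq. 2.8 type grows so quickly for biomolecules.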
Formulas for the mean square values of the fluctuations that occur in the extensive quantities (ΔS, ΔV, ΔL, ΔQ) of a small system inside a heat bath are readily derived from Eq. 2.10 and are collected in Table 2. These formulas are also of interest in nanotechnology because they set limits on the deterministic behavior of nanoscale devices.
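Table 2 is not reproduced here, but the kind of result it contains can be sketched. Suppose, purely for illustration, that the availability is quadratic in one fluctuating extensive variable X, \( \Phi = (\Delta X)^2/2\chi \), where the compliance \( \chi \) would be a capacitance C for charge fluctuations or an inverse spring constant 1/k for extensions. Boltzmann weighting with \( \exp(-\Phi/k_{\rm B}T) \) then gives the equipartition result \( \langle(\Delta X)^2\rangle = k_{\rm B}T\chi \). The Python sketch below checks this by direct numerical integration; the 1 aF capacitance is a hypothetical value, for which the root-mean-square charge fluctuation at 25 °C comes out at roughly 0.4 elementary charges.

```python
import math

K_B = 1.380649e-23          # Boltzmann constant, J/K
E_CHARGE = 1.602176634e-19  # elementary charge, C


def mean_square_fluctuation(compliance, temperature, n_points=20001, width=12.0):
    """Boltzmann average of (dX)^2 when the availability is (dX)^2 / (2 * compliance).

    Trapezoidal integration over +/- `width` standard deviations; the exact
    answer for this quadratic model is K_B * temperature * compliance.
    """
    kt = K_B * temperature
    sigma = math.sqrt(kt * compliance)
    step = 2.0 * width * sigma / (n_points - 1)
    num = den = 0.0
    for i in range(n_points):
        dx = -width * sigma + i * step
        weight = 0.5 if i in (0, n_points - 1) else 1.0  # trapezoid end points
        boltzmann = math.exp(-dx * dx / (2.0 * compliance * kt))
        num += weight * dx * dx * boltzmann
        den += weight * boltzmann
    return num / den  # the grid spacing cancels in the ratio


# Charge fluctuations of a hypothetical 1 aF "capacitor" at 25 degC.
msq = mean_square_fluctuation(1e-18, 298.15)
print(f"rms charge fluctuation: {math.sqrt(msq) / E_CHARGE:.2f} e")
```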

At the moment of electron transfer, conservation of energy dictates that the availability of the reactants should match the availability of the products. At this special point, we refer to the value of the availability as the availability of activation and label it by an asterisk; thus,

In an analogous way, we label the entropy of activation as \( (S - S_0)^{*} \), the volume of activation as \( (V - V_0)^{*} \), the extension of activation as \( (L - L_0)^{*} \), and the charge of activation as \( (Q - Q_0)^{*} \). In general, we expect all of these parameters to be finite.

Gibbs energy manifold

At constant temperature and pressure of the heat bath (i.e., under “normal” laboratory conditions), it is more usual for chemists to think in terms of Gibbs energy rather than availability. We can readily convert to Gibbs energy by means of a Legendre transform of Eq. 2.11. Thus,

Since there are four different terms on the right-hand side of this equation, we may think of the Gibbs energy difference (G − G 0) as a four-dimensional manifold whose space is randomly explored by the various combinations of fluctuations that occur along the four axes (T, P, L, and Q). However, the most important path through this manifold is the one that maximizes the probability of reaching the product state. This path is called the “reaction coordinate.”

In chemical kinetics, the temperature and pressure of the transition state are assumed to be the same as those of the reactant and product states. This means that dT = 0 and dP = 0 along the reaction coordinate, so that two full terms disappear from Eq. 2.12, leaving only

The situation is shown schematically in Fig. 3. The Gibbs energy along the reaction coordinate is a function of L and Q, but not of T and P. Notice also that the transition state occurs at a point which is a maximum along the reaction coordinate but a minimum along all other coordinates.

Schematic diagram of the most probable path through the four-dimensional manifold of Eq. 2.12, at constant temperature and pressure of the heat bath. Fluctuations of the temperature and pressure of the small system still occur, but they are orthogonal to the reaction coordinate and so do not contribute to the activation process. The transition state is indicated by the asterisk
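The geometry of a transition state can be checked numerically on any smooth surface: at a first-order saddle point the Hessian (the matrix of second derivatives) has exactly one negative eigenvalue, which points along the reaction coordinate. The sketch below uses a hypothetical two-coordinate surface, a double well along x (standing in for the reaction coordinate) plus a harmonic term along y (standing in for an orthogonal coordinate). It is not a model of any real redox couple.

```python
import math


def gibbs_model(x, y, h=1.0, k=4.0):
    """Hypothetical surface: wells at x = -1 (reactants) and x = +1 (products)."""
    return h * (x * x - 1.0) ** 2 + 0.5 * k * y * y


def hessian(f, x, y, d=1e-4):
    """Central-difference estimate of the Hessian entries (fxx, fxy, fyy) at (x, y)."""
    fxx = (f(x + d, y) - 2.0 * f(x, y) + f(x - d, y)) / d**2
    fyy = (f(x, y + d) - 2.0 * f(x, y) + f(x, y - d)) / d**2
    fxy = (f(x + d, y + d) - f(x + d, y - d)
           - f(x - d, y + d) + f(x - d, y - d)) / (4.0 * d**2)
    return fxx, fxy, fyy


def eigenvalues_2x2(a, b, c):
    """Eigenvalues of the symmetric matrix [[a, b], [b, c]], smallest first."""
    mean = 0.5 * (a + c)
    radius = math.sqrt(0.25 * (a - c) ** 2 + b * b)
    return mean - radius, mean + radius


low, high = eigenvalues_2x2(*hessian(gibbs_model, 0.0, 0.0))
print(low < 0 < high)                                 # True: maximum along x, minimum along y
print(gibbs_model(0.0, 0.0) - gibbs_model(1.0, 0.0))  # barrier height h = 1.0
```

At the wells themselves both eigenvalues are positive, so only the saddle at the origin has the transition-state signature.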

Remark

Despite the disappearance of the entropy and volume terms from Eq. 2.12, we emphasize that \( (S - S_0) \) and \( (V - V_0) \) are not necessarily 0 along the reaction coordinate. On the contrary, they most likely have finite (and, in principle, measurable) values. It is simply that the increments dT and dP of their conjugate variables are 0.

If we now write the Gibbs energy difference in the form

and integrate Eq. 2.13, we obtain

This equation is valid provided only that the heat bath is maintained at constant temperature and pressure. Being thermodynamic, it is also entirely model free. Indeed, it subsumes all known models of electron transfer that use classical equilibrium fluctuations to equalize the energies of reactants and products.

In Eq. 2.15, an important limiting case arises if f = 0, that is, if the reactants and their inner solvation shells are not distorted during the electron transfer process. In that case, the only fluctuations needed to trigger electron transfer are fluctuations of charge in the ionic atmosphere of the reactants. The Gibbs energy profile becomes one-dimensional, and the reaction coordinate becomes the charge Q. This is the charge fluctuation model [22].

Remark

\( \Delta G_{\rm RC} \) is the parameter that is sketched in innumerable “Gibbs energy plots” in the electrochemical literature.

It follows from the above analysis that the activation energy for electron transfer may be expressed in the generic form

where the asterisk once again indicates the transition state. This formula, which plays the role of a “master equation,” may also be incorporated directly into transition-state theory (TST).

Transition-state theory

Transition-state theory is one of the most enduring theories of chemical reactions in solution. It is based on the idea that an “energy barrier” exists between reactants and products. The original concept may be traced back to the researches of René Marcelin [23], published posthumously in 1915, although most modern formulations are actually derived from the work of Henry Eyring, Meredith Evans, Michael Polanyi, and Eugene Wigner published in the 1930s [24–26]. Today, the popularity of TST rests on its ability to correlate reaction rates with easily measured quantities such as temperature and concentration.

Many different versions of transition-state theory have evolved over time, but most of them share the following assumptions (at constant temperature and pressure):

1. There is thermodynamic equilibrium between all the degrees of freedom of the system, with the exception of those that contribute to the reaction coordinate.
2. The transition state occurs at a Gibbs energy maximum along the reaction coordinate, but at a Gibbs energy minimum along all other coordinates.
3. Electrons behave according to quantum laws while nuclei behave according to classical laws (i.e., nuclear tunneling is disallowed).
4. The rate of reaction never exceeds the response rate of the heat bath.
5. The rate of reaction is proportional to the number of nuclei in the transition state.
6. The rate of reaction is proportional to the rate at which individual nuclei leave the transition state in the forward direction.

In electron transfer theory, all of these assumptions carry over except the last. In place of the rate at which individual nuclei leave the transition state, we must use the rate at which electron probability builds up on the acceptor.

In transition-state theory, the rate constant for electron transfer (\( k_{\rm et} \)) takes the form
\[ k_{\rm et} = k_0 \exp\left(-\frac{\Delta G_{\rm RC}^{*}}{k_{\rm B} T}\right) \]
Here, \( k_0 \) is the maximum rate constant and \( \Delta G_{\rm{RC}}^{*} \) is the activation energy given by Eq. 2.16. When Eq. 2.17 is combined with Eq. 2.16, we see immediately that electron transfer requires at most two types of thermodynamic fluctuation (thermodynamic work) in order to be activated, namely (1) elastic distortions of the donor and acceptor species, which take place against their internal force fields, and (2) electrostatic charge injections into the environment of the donor and acceptor species, which take place against the self-repulsions of the charges.
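The steep dependence of the rate constant on the activation energy is easy to quantify. The sketch below assumes Eq. 2.17 has the usual exponential form of transition-state theory, \( k_{\rm et} = k_0 \exp(-\Delta G_{\rm RC}^{*}/k_{\rm B}T) \), written per mole with RT in place of \( k_{\rm B}T \). The value of \( k_0 \) in the example is hypothetical. At 25 °C, every additional \( RT \ln 10 \approx 5.7 \) kJ mol⁻¹ of activation Gibbs energy lowers the rate constant tenfold.

```python
import math

R = 8.314462618  # molar gas constant, J K^-1 mol^-1


def tst_rate_constant(k0, dg_act, temperature=298.15):
    """Assumed TST form k_et = k0 * exp(-dG*/(R T)); dg_act in J/mol."""
    if dg_act < 0:
        raise ValueError("the activation Gibbs energy should not be negative")
    return k0 * math.exp(-dg_act / (R * temperature))


# Activation energy that changes k_et by one decade at 25 degC (R T ln 10).
decade = R * 298.15 * math.log(10)
print(f"{decade / 1000:.2f} kJ/mol per factor of 10")

k0 = 1e11  # hypothetical maximum rate constant, L mol^-1 s^-1
for dg in (0.0, 20e3, 40e3, 60e3):
    print(f"dG* = {dg / 1000:4.0f} kJ/mol -> k_et = {tst_rate_constant(k0, dg):.2e}")
```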

These are powerful insights. However, while the laws of thermodynamics reveal the kinds of energy fluctuations that can trigger electron transfer, the same laws reveal nothing about the structure of a given transition state or the structure of the electrolyte solution that surrounds it. Progress in those areas forms the subject of the next section.

Transition states in electrolyte solutions

In order to understand the structure of transition states in electrolyte solutions, we must first understand the structure of electrolyte solutions. Here, we provide a minimal account of this area, up to and including the theory of solvation. We then proceed to describe the evolution of ideas about “inner-sphere” and “outer-sphere” electron transfer processes.

In the late nineteenth century, the theory of solutions was developed by analogy with the theory of ideal gases. The solute was regarded as a set of uncharged spherical particles, and the solvent was regarded as an inert continuum through which the particles moved at random. Individual particles were assumed to be independent, and their mutual interaction was assumed to be negligible. For nonionic species, some minor success was achieved by this approach. For ionic species, however, the method proved wholly inadequate.

Today, we know why the “ideal solution” approach failed. Firstly, the high charge density of ions causes them to attract (and retain) solvent molecules by ion–dipole coupling. This phenomenon, known as solvation, powerfully inhibits the translational and rotational motions of solvent molecules and hence lowers their entropy. Secondly, at high concentrations, ions lose their mutual independence because their electric fields penetrate far into solution. Indeed, the electrostatic potential energy of a pair of point charges varies as \( r^{-1} \), which is very long-ranged. (Compare the potential energy of the van der Waals interaction, which varies as \( r^{-6} \).) By having such a long-range influence, an ion is typically able to interact with a large population of mobile counterions and co-ions in its vicinity. This population is known as its “ionic atmosphere.” Though fluctuating continually in structure and composition, the ionic atmosphere has a time-averaged charge opposite to that of the central ion and is therefore electrically attracted to it.

Actually, the time-averaged charge on the ionic atmosphere necessarily balances the charge on the central ion because of the principle of electroneutrality. If we denote the valence of the ith type of ion by \( z_i \) and all the ions are mobile, then in the absence of an externally applied electric field we have
\[ \sum_i z_i c_i = 0 \]
where \( c_i \) is the time-averaged number of i-ions per unit volume. Thus, the charge on the central ion, plus the time-averaged charge on the ionic atmosphere, equals 0.
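A minimal sketch of the electroneutrality condition: sum \( z_i c_i \) over all the ions and test whether the result vanishes. The compositions below (a hypothetical 0.10 M FeCl3 solution, before and after every Fe3+ is reduced to Fe2+ with no chloride leaving) are illustrative only, with concentrations in mol L⁻¹.

```python
def net_charge_density(ions):
    """Sum of z_i * c_i (mol/L of elementary charges); zero if electroneutral."""
    return sum(z * c for z, c in ions.values())


def is_electroneutral(ions, tol=1e-12):
    """True if the net charge density vanishes to within floating-point tolerance."""
    return abs(net_charge_density(ions)) < tol


# Hypothetical 0.10 M FeCl3: {ion: (valence z_i, concentration c_i in mol/L)}
ferric = {"Fe3+": (3, 0.10), "Cl-": (-1, 0.30)}
print(is_electroneutral(ferric))     # True

# The same solution after every Fe3+ is reduced to Fe2+, with no chloride leaving:
ferrous = {"Fe2+": (2, 0.10), "Cl-": (-1, 0.30)}
print(net_charge_density(ferrous))   # approximately -0.1: one surplus Cl- per iron
```

The nonzero second result is the charge imbalance that the ionic atmosphere must remove, by expelling one chloride ion per reduced iron, before electroneutrality is restored.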

Debye–Hückel theory

Historically, the thermodynamics of nonideal solutions appeared to be an intractable problem until the seminal work of Peter Debye and Erich Hückel in the 1920s [27, 28]. In 1923, they succeeded in developing a mathematical model of ionic solutions that included long-range electrostatic interactions, although they still assumed a continuum model of the solvent. In their mind’s eye, they placed one particular ion at the origin of a spherical coordinate system and then investigated the time-averaged distribution of electric potential ϕ (phi) surrounding it. Instead of the expected Coulomb potential,
\[ \phi = \frac{Q}{4\pi\varepsilon_0\varepsilon_{\rm r} r} \]
they found the screened Coulomb potential,
\[ \phi = \frac{Q}{4\pi\varepsilon_0\varepsilon_{\rm r} r}\exp\left(-\frac{r}{r_{\rm D}}\right) \]
Here, Q is the charge on the central ion; \( \varepsilon_0 \) (epsilon) is the permittivity of free space; \( \varepsilon_{\rm r} \) is the relative permittivity (dielectric constant) of the solution; r is the distance from the central ion; and \( r_{\rm D} \) is a constant. Since the exponential factor is less than 1 for all r > 0, the effect of long-range electrostatic interactions between ions is always to diminish the electric potential of the central ion and, by extension, to diminish the electric potential of every ion in solution. The magnitude of this “screening effect” is determined by the parameter \( r_{\rm D} \), where
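\[ r_{\rm D} = \sqrt{\frac{\varepsilon_0 \varepsilon_{\rm r} k_{\rm B} T}{2 \times 10^{3}\, N_{\rm A} e^{2} \mu}} \qquad (\mu \text{ in mol L}^{-1}) \]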
and
\[ \mu = \frac{1}{2} \sum_i c_i z_i^{2} \]
Here, \( k_{\rm B} \) is the Boltzmann constant; T is the absolute temperature; \( N_{\rm A} \) is the Avogadro number; e is the elementary charge; μ (mu) is the ionic strength of the solution (M); \( c_i \) is the molar concentration of the ith type of ion; \( z_i \) is the valence of the ith type of ion; and the sum is taken over all the ions in solution. The parameter \( r_{\rm D} \) has the dimensions of length and is widely known as the Debye length. It is noteworthy that the Debye length is inversely proportional to the square root of the ionic strength, which is an experimental variable.
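Evaluating these quantities takes only a few lines. The sketch below assumes the standard SI form of the Debye length, \( r_{\rm D} = \sqrt{\varepsilon_0\varepsilon_{\rm r}k_{\rm B}T/(2N_{\rm A}e^2\mu)} \) with μ converted from mol L⁻¹ to mol m⁻³, and takes \( \varepsilon_{\rm r} = 78.4 \) for water at 25 °C (an assumed value). For 0.1 M NaCl it gives roughly 0.96 nm; for 0.1 M FeCl3 the ionic strength is six times larger because of the \( z_i^2 \) weighting.

```python
import math

K_B = 1.380649e-23          # Boltzmann constant, J/K
N_A = 6.02214076e23         # Avogadro constant, 1/mol
E_CHARGE = 1.602176634e-19  # elementary charge, C
EPS0 = 8.8541878128e-12     # vacuum permittivity, F/m


def ionic_strength(ions):
    """mu = 1/2 * sum(c_i * z_i**2) for (z_i, c_i) pairs, c_i in mol/L."""
    return 0.5 * sum(c * z * z for z, c in ions)


def debye_length(mu, eps_r=78.4, temperature=298.15):
    """Debye length in metres; mu in mol/L, eps_r = 78.4 assumes water at 25 degC."""
    mu_si = 1000.0 * mu  # mol/L -> mol/m^3
    return math.sqrt(EPS0 * eps_r * K_B * temperature
                     / (2.0 * N_A * E_CHARGE ** 2 * mu_si))


nacl = [(+1, 0.1), (-1, 0.1)]    # 0.1 M NaCl
fecl3 = [(+3, 0.1), (-1, 0.3)]   # 0.1 M FeCl3
for name, ions in (("NaCl", nacl), ("FeCl3", fecl3)):
    mu = ionic_strength(ions)
    print(f"0.1 M {name}: mu = {mu:.2f} M, r_D = {debye_length(mu) * 1e9:.3f} nm")
```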

The significance of the Debye length \( r_{\rm D} \) is that it measures the radius of the ionic atmosphere surrounding the central ion. It also indicates the scale of length below which fluctuations from electroneutrality are significant and above which they are insignificant. Finally, the Debye length also determines the distance of closest approach of two similarly charged ions in solution, since they cannot approach within \( 2r_{\rm D} \) of each other without feeling strong electrostatic repulsion.
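To see why \( r_{\rm D} \) sets the radius of the ionic atmosphere, compare the screened and unscreened potentials of a point charge: their ratio is simply \( \exp(-r/r_{\rm D}) \), which falls to about 37% at \( r = r_{\rm D} \) and to about 5% at \( 3r_{\rm D} \). In the sketch below, the 1 nm Debye length and the relative permittivity of 78.4 are illustrative assumptions.

```python
import math

EPS0 = 8.8541878128e-12     # vacuum permittivity, F/m
E_CHARGE = 1.602176634e-19  # elementary charge, C
EPS_R = 78.4                # assumed relative permittivity of water at 25 degC


def coulomb_potential(q, r, eps_r):
    """Unscreened potential (V) at distance r (m) from a point charge q (C)."""
    return q / (4.0 * math.pi * EPS0 * eps_r * r)


def screened_potential(q, r, eps_r, r_debye):
    """Debye-Hueckel screened Coulomb potential (V)."""
    return coulomb_potential(q, r, eps_r) * math.exp(-r / r_debye)


r_d = 1.0e-9  # assumed Debye length, m
for r_nm in (0.5, 1.0, 2.0, 3.0):
    r = r_nm * 1e-9
    bare = coulomb_potential(E_CHARGE, r, EPS_R)
    screened = screened_potential(E_CHARGE, r, EPS_R, r_d)
    print(f"r = {r_nm} nm: bare {bare * 1e3:6.1f} mV, screened {screened * 1e3:6.1f} mV")
```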

Remark

As the ionic strength μ is increased, ions of the same polarity may approach each other more closely and thereby increase the probability of electron tunneling between them.

Overall, the picture that emerges from Debye–Hückel theory is that every ion in solution is surrounded by a supermolecular arrangement of counterions and co-ions. The time-averaged charge on this “supermolecule” (= central ion plus ionic atmosphere) is necessarily 0. However, the instantaneous charge may be positive or negative, depending on whether there is a transient excess of cations or anions.

As far as electron transfer theory is concerned, the fact that the time-averaged charge on each supermolecule is 0 has some very important consequences. Consider, for example, an iron (3+) ion surrounded, on average, by three mobile chloride ions. If the iron (3+) ion takes part in an electron transfer process and receives an electron, then it ends up as an iron (2+) ion, and so its ionic atmosphere must adjust by expelling a surplus chloride ion. In a similar way, if an iron (2+) ion loses an electron, then it ends up as an iron (3+) ion, and at some stage its ionic atmosphere must adjust by attracting an extra chloride ion. These charge-compensating events are an intrinsic part of electron transfer in electrolyte solutions, and the energy associated with them must be included in any theory.

Remark

In the presence of mobile ions, every electron transfer event is charge-compensated by an ion transfer event. This is an elementary consequence of the principle of electroneutrality.

Solvation

Although Debye–Hückel theory is successful in quantifying electrostatic interactions at long range, it still fails badly at short range. In particular, it fails to account for solvation and its many side effects. Quite commonly, solvent molecules that are immediately adjacent to ions lose some of their translational and rotational entropy; their dielectric response becomes saturated; and their molar volume is compressed below normal. None of these effects is captured by classic Debye–Hückel theory.

If the solvent is water, then solvation is referred to as hydration. Formally, the hydration process may be represented as

The values of the Gibbs energy, enthalpy, and entropy of hydration of some main group cations are collected in Table 3. The entropy loss due to the binding of a single water molecule is about −28 J K⁻¹ mol⁻¹ at 298 K. Thus, dividing each entropy of hydration in Table 3 by −28 J K⁻¹ mol⁻¹ yields a “thermodynamic hydration number” n. This parameter gives a useful indication of the time-averaged number of water molecules actually bonded to each ion. Of course, other water molecules are also nearby, but they are less strongly bonded. From the final column of Table 3, we readily see that singly charged cations are weakly hydrated, while multiply charged cations are strongly hydrated.
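The arithmetic behind the thermodynamic hydration number is a single division. The entropies used below are placeholder values chosen for illustration; they are not entries from Table 3.

```python
ENTROPY_PER_BOUND_WATER = -28.0  # J K^-1 mol^-1 at 298 K, the value quoted above


def hydration_number(entropy_of_hydration):
    """Thermodynamic hydration number n = dS_hyd / (-28 J K^-1 mol^-1)."""
    return entropy_of_hydration / ENTROPY_PER_BOUND_WATER


# Placeholder entropies of hydration (J K^-1 mol^-1), not measured data.
for label, ds in (("ion A (placeholder)", -84.0), ("ion B (placeholder)", -280.0)):
    print(f"{label}: n = {hydration_number(ds):.1f}")
```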

If electron transfer changes the size of an ion (as it often does), then it follows that some energy must be supplied to expand or contract the associated solvation shell. This idea was first suggested by John Randles in 1952, and it led to the first successful kinetic theory of electron transfer based on molecular properties [33].

Things get even more complicated when we look at transition metal cations. Solutions of these species typically consist of coordination complexes (central metal ions with ligands attached) and mobile counterions to preserve electroneutrality. The structural principles of coordination complexes were largely understood by 1913, when Alfred Werner was awarded the Nobel Prize in Chemistry for his work in this area [34]. In a virtuoso performance, Werner had shown that the structure of the compound CoCl3⋅6NH3 was actually [Co(NH3)6]Cl3, with the Co(3+) ion surrounded by six NH3 ligands at the vertices of an octahedron. The NH3 ligands constituted an “inner” coordination shell, with the three chloride ions constituting an “outer” solvation shell in the form of an ionic atmosphere. Werner had also analyzed analogous complexes containing bidentate ligands, most notably tris(ethylenediamine) cobalt (III) chloride, [Co(en)3]Cl3.

Outer-sphere and inner-sphere kinetics

Despite Werner’s success in establishing the structural principles of coordination complexes, the kinetic principles resisted analysis until much later, mainly because of the complicated mixtures of ligand substitution and electron tunneling that were involved. Indeed, progress was stalled until after World War II, when radioactive tracers such as ⁶⁰Co and ³⁶Cl became available from cyclotrons in the USA. In 1949, an epoch-making paper [35] was published by W.B. Lewis, Charles DuBois Coryell, and John W. Irvine, Jr., on the mechanism of electron transfer between the tris(ethylenediamine) complex of Co2+ and the corresponding complex of Co3+,

Here, en = NH2⋅CH2⋅CH2⋅NH2. By using a radioactive tracer (⁶⁰Co), these authors showed that, in this special case, ligand substitution was entirely absent. The ethylenediamine ligands remained firmly bound to the metal centers throughout the course of the reaction, thus proving that electron transfer could occur without any interpenetration of the inner solvation shells of the two reactants. Almost incredibly (or so it seemed at the time), the electron was tunneling from one complex to the other without bond making or bond breaking. It was the first example of what was later to be called an outer-sphere electron transfer process. Today, we know that electrons can tunnel as much as 1.4 nm through free space and even further if intervening species (such as water molecules or ligands) provide conduit electronic states.

Definition

An electron transfer process between two transition metal complexes is classified as outer sphere if the charge-compensating ion transfer process does not penetrate the inner coordination shell of either reactant.

Remark

This definition of outer-sphere electron transfer does not exclude the possibility that the inner coordination shells of both reactants might be elastically distorted during the reaction.

A few years later, in 1953, Henry Taube, Howard Myers, and Ronald L. Rich discovered a counterexample to outer-sphere electron transfer, involving electron transfer from the hexaaqua complex of Cr(II) to the chloridopentaammine complex of Co(III) [36, 37]. This reaction can be written

where (P−) is the perchlorate counterion. By a combination of spectrophotometry and radioactive tracer studies, the authors deduced that the radioactive *Cl− ion was acting as a bridging ligand between the chromium and cobalt metal centers while the electron transfer occurred (Fig. 4). Furthermore, a negligible amount of free *Cl− was found in solution at the end of the reaction. This was the first example of an inner-sphere (bridged) electron transfer process. Soon afterwards, numerous other complexes of the type [CoIII(NH3)5L]2+⋅2X− were synthesized, with L = F−, Br−, I−, CNS−, and N3−, and these were all found to behave in a similar way [38].

Definition

An electron transfer process between two transition metal complexes is classified as inner sphere if the charge-compensating ion transfer process penetrates the inner coordination shell of either reactant.

Since the discoveries of Lewis et al. and Taube et al., a vast literature has accumulated on the mechanisms and kinetics of outer-sphere and inner-sphere electron transfer reactions. It is particularly interesting to compare the rate constants of well-attested examples. Some of these are shown in Table 4. It can be seen that both types of reaction may be “fast” (\( k_{\rm et} > 10^{3} \) L mol⁻¹ s⁻¹), and both types of reaction may be “slow” (\( k_{\rm et} < 10^{-3} \) L mol⁻¹ s⁻¹), with the observed rate constants spanning more than six orders of magnitude in each category. Even from this small set of data, it is obvious that the rates of electron transfer reactions are extremely sensitive to small differences in molecular structure.

How exactly do small differences in molecular structure exert such a profound effect on the rates of electron transfer reactions? Thermodynamics provides some clues. It tells us that reaching the transition state may require elastic distortions of the complexes, charge fluctuations within the Debye radius of the central ion, or both. Thus, the elastic moduli of the molecules and the local distribution of electrical charges must both be considered. Once the transition state has been reached, quantum mechanical criteria also come into play. The donor and acceptor orbitals must overlap in order for efficient electron tunneling to occur, and the orbital symmetries must coincide (i.e., constructive interference must occur between the wave functions, not destructive interference). Finally, the chemical identity of the bridging ligand is a further consideration. Each ligand generates a different lifetime of the transition state, and this also affects the probability of electron transfer. In all these various ways, the structures of transition metal complexes may influence the rates of electron transfer.

Research during the 1950s initially found that the vast majority of electron transfer reactions were of the inner-sphere type. The explanation for this bias lay in the electronic structure of the ligands that were being used. At that time, there was a particular focus on anionic ligands, and most of them had lone pairs of electrons that were arranged at 180° to each other, which meant they were readily able to bridge the metal centers in the transition state. Examples included F−, Cl−, Br−, I−, CNS−, N3−, CN−, SCN−, and ONO−. Among the few exceptions were H2O and NH3. Later, the use of neutral organic ligands whose lone pairs of electrons were all directed inwards towards the central ion (such as 1,10-phenanthroline, ethylenediamine, 2,2′-bipyridine, and 2,2′:6′,2″-terpyridine) began to reverse the trend, and examples of outer-sphere electron transfer also began to accumulate.

By the 1960s, however, experimentalists had realized that all the known inner-sphere and outer-sphere electron transfer reactions had multiple sensitivities to different aspects of molecular structure, and this prompted them to search for kinetically simpler instances of both cases. In the case of inner-sphere electron transfer reactions, a particularly vexing problem was the short lifetime of the bridged intermediates, which made them very difficult to study. So a search began for stable bridge linkages that would allow spectroscopic measurements on the timescale of seconds. The triumphant result was the synthesis of μ-pyrazine-bis(pentaammineruthenium)(II,II) tosylate by Peter Ford at Stanford in 1967 [43]. This has the formula [(NH3)5Ru(μ-pyz)Ru(NH3)5]4+[(Ts)4]4–, and it was soon oxidized by Carol Creutz and Henry Taube [44] to make the mixed-valent (II,III) ion shown in Fig. 5 (the Creutz–Taube ion). The latter has since become the exemplar of all mixed-valent compounds [45]. The synthesis of the Creutz–Taube ion also made it possible to study electron transfer at a fixed separation of the donor and acceptor states, a feat that eliminated the encounter distance as an experimental variable.

In the case of outer-sphere reactions, the revised goal was a redox couple that would not need any elastic distortions in its inner coordination shell in order to convert from reactants to products. After some effort, it was found that the tris(2,2′-bipyridyl)ruthenium complexes [Ru(bpy)3]2+ and [Ru(bpy)3]3+ had identical octahedral geometries within experimental error (Ru–N distances = 0.2055 ± 0.0005 nm in both cases) [46]. As expected, this couple exhibited a phenomenally fast second-order rate constant in aqueous perchloric acid solution, namely 2 × 10⁹ L mol⁻¹ s⁻¹ (almost diffusion-controlled) [47]. Furthermore, the entropy of reaction was 0 [48, 49]. As a result of these special features, the tris(2,2′-bipyridyl)ruthenium (II/III) reaction gradually became the paradigm of outer-sphere electron transfer reactions, and it still holds that special status today (Fig. 6).

Effects of ionic strength

Despite many careful kinetic studies and the synthesis of innumerable transition metal complexes, one area of research that was comparatively neglected during the 1950s and 1960s was the effect of the ionic strength of solution on the rates of outer-sphere electron transfer processes. In 1982, however, an important paper was published by Harald Bruhn, Santosh Nigam, and Josef F. Holzwarth [50].

These authors investigated outer-sphere electron transfer processes between pairs of highly negatively charged complexes at different ionic strengths of solution. In particular, they determined the second-order rate constants for the reduction of hexachloroiridium(IV) by meso-tetraphenylporphyrin-tetrasulfonate silver(II),

where M+ = Li+, Na+, K+, and Cs+. Some of their data are plotted in Fig. 7. The results demonstrate the effect of changing the ionic strength of the supporting electrolyte (M+Cl−). At low ionic strength (μ < 0.5 M), electron transfer takes place through the diffuse layers of both complexes, and since the probability of electron transfer declines steeply with increasing separation, the reaction “switches off.” But at high ionic strength (μ > 0.5 M), the diffuse layer collapses to near-zero thickness, and the rate of electron transfer saturates.

Influence of ionic strength μ on the second-order rate constant \( k_{\rm et} \) for electron transfer between two strongly negatively charged species in aqueous solution. Data from H. Bruhn et al. [50]

One way to picture the effect of ionic strength is to imagine that the solution is composed of neutral ionic clusters (supermolecules; Fig. 8). Embedded inside each supermolecule is a reactant ion surrounded by its ionic atmosphere. As the ionic strength changes, so too does the thickness of the ionic atmosphere, according to the relation \( r_{\rm D} \propto 1/\sqrt{\mu} \) (Eq. 3.4).
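The scaling relation alone is enough to map out how the ionic atmosphere shrinks over the range of ionic strengths in Fig. 7. The sketch below assumes the commonly quoted value of about 0.304 nm for the Debye length of an aqueous solution at 25 °C and μ = 1 M, and rescales it with \( r_{\rm D} \propto 1/\sqrt{\mu} \). On this estimate, \( r_{\rm D} \) is about 3 nm at 0.01 M but only about 0.43 nm at 0.5 M, the ionic strength above which the rate constant in Fig. 7 saturates.

```python
import math

R_D_1M = 0.304e-9  # m; assumed Debye length of water at 25 degC when mu = 1 M


def debye_length_scaled(mu, r_ref=R_D_1M, mu_ref=1.0):
    """Rescale a reference Debye length using r_D proportional to 1/sqrt(mu)."""
    if mu <= 0:
        raise ValueError("ionic strength must be positive")
    return r_ref * math.sqrt(mu_ref / mu)


for mu in (0.01, 0.1, 0.5, 1.0, 2.0):
    print(f"mu = {mu:4.2f} M -> r_D = {debye_length_scaled(mu) * 1e9:.2f} nm")
```

Quadrupling the ionic strength halves the thickness of the ionic atmosphere, whatever reference value is chosen.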

In summary, the supporting electrolyte plays two important roles in solution-phase electron transfer. Firstly, it provides long-range screening of electrically charged species, allowing them to approach each other without doing electrostatic work. Secondly, when the species are within electron tunneling distance, it provides the charge fluctuations that cause the electrostatic potentials of the donor and acceptor species to equalize, thus ensuring that the electron transfer takes place with conservation of energy.

Molecular models of electron transfer

In previous sections, we showed that if equilibrium fluctuations trigger electron transfer, then the activation energy can be expressed in the generic form

where \( \Delta G_{\rm{RC}}^{*} \) is the Gibbs energy of activation; f is mechanical force; L is extension; ϕ is electric potential; and Q is charge. We also showed that the two terms on the right-hand side of this equation correspond to two fundamentally different types of activation process, namely (1) elastic distortions of the donor and acceptor species, which take place against their internal force fields, and (2) charge fluctuations within the Debye radius of the donor and acceptor species, which take place against the self-repulsions of the charges. In what follows, we describe molecular models of both of these processes. The elastic distortion model was elaborated by John Randles in 1952 [33], building on some prewar work of Meredith Evans [51]. The charge fluctuation model was proposed by Stephen Fletcher in 2007 [22]. A third model, which assumes that nonequilibrium fluctuations of solvent molecules trigger electron transfer, was proposed by Rudolph Marcus in 1956 [52–55] and will be discussed separately later.

The elastic distortion model

The principal features of the elastic distortion model are sketched in Fig. 9, using the aqueous Fe(II)/Fe(III) couple as an example. The Morse-type curves represent the potential energies of the inner solvation shells of the ferrous and ferric ions as a function of their radii. These potential energies were considered by Randles to be the main contributors to the Gibbs energy of activation of electron transfer. Here, for ease of exposition, we focus on the half-reaction

at an electrode surface and ignore the reverse reaction.

Potential energies of the inner solvation shells of \( {\hbox{F}}{{\hbox{e}}^{\rm{II}}}{\left( {{{\hbox{H}}_2}{\hbox{O}}} \right)_6} \) and \( {\hbox{F}}{{\hbox{e}}^{\rm{III}}}{\left( {{{\hbox{H}}_2}{\hbox{O}}} \right)_6} \) complexes as a function of their radii. From J.E.B. Randles [33]. The energy of the lowest vibrational state of Fe(II) is arbitrarily assigned a value of 0

Since the radii of the inner solvation shells of the ferrous and ferric ions are not identical, it is obvious that some change in radius must take place during electron transfer. But at what stage? Randles’ brilliant insight was that “In accordance with the Franck–Condon principle the electron transfer will correspond to a vertical transition between the curves and it is clear that the reaction must proceed over the energy barrier pqr with the electron transfer occurring at q.” In other words, what Randles proposed was that some fraction of the change in radius takes place before the electron transfer, and some fraction takes place after, with the precise ratio determined by the location of the intersection point on the potential energy curves.

At the intersection point q (the transition state), the inner solvation shells of the Fe(II) and Fe(III) ions have equal radii. Thus, on the Randles model, the activation energy of electron transfer is just the work required to change the radius (bond length) of the inner solvation shell from its lowest vibrational-state value to its transition-state value.
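Randles' construction reduces to finding where two potential-energy curves cross. Near their minima, the Morse-type curves of Fig. 9 can be approximated by parabolas (the linear-response approximation discussed below), and the crossing point then gives both the transition-state radius and the activation energy. The sketch below works in arbitrary units with hypothetical parameters, not Randles' data, and finds the crossing by bisection between the two minima. With equal spring constants and no driving force, the crossing lies at the midpoint and the activation energy is \( k\,\Delta L^2/8 \).

```python
def crossing_point(l_r, k_r, l_p, k_p, dg0=0.0, tol=1e-12):
    """Intersection of two parabolic wells between their minima (bisection).

    Reactant well: 0.5 * k_r * (L - l_r)**2
    Product well:  0.5 * k_p * (L - l_p)**2 + dg0
    Returns (L_star, activation_energy); the activation energy is the
    reactant-well energy at the crossing point.
    """
    def u_r(x):
        return 0.5 * k_r * (x - l_r) ** 2

    def u_p(x):
        return 0.5 * k_p * (x - l_p) ** 2 + dg0

    def gap(x):
        return u_r(x) - u_p(x)

    lo, hi = sorted((l_r, l_p))
    width = hi - lo
    g_lo = gap(lo)
    if g_lo * gap(hi) > 0:
        raise ValueError("the curves do not cross between the two minima")
    while hi - lo > tol * width:  # relative tolerance, so any length unit works
        mid = 0.5 * (lo + hi)
        g_mid = gap(mid)
        if g_lo * g_mid <= 0:
            hi = mid
        else:
            lo, g_lo = mid, g_mid
    l_star = 0.5 * (lo + hi)
    return l_star, u_r(l_star)


# Hypothetical parameters in arbitrary units.
l_star, dg_star = crossing_point(0.0, 1.0, 1.0, 1.0)
print(f"symmetric wells:  L* = {l_star:.3f}, dG* = {dg_star:.4f}")
l_star, dg_star = crossing_point(0.0, 1.0, 1.0, 1.5, dg0=-0.05)
print(f"asymmetric wells: L* = {l_star:.3f}, dG* = {dg_star:.4f}")
```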

Remark

The Randles approach is clearly not valid for electron transfer to more complex molecules, such as redox proteins, because it neglects distortions of bond angles and distortions of torsion angles. However, if desired, it could readily be extended to include such cases.

A well-known method of calculating the Randles activation energy is the following [56]. At room temperature, all molecules exhibit multiple vibrations, which have a strong tendency to synchronize with each other, forming what are known as normal modes of vibration. In normal modes, all the atoms vibrate with the same frequency and phase. One of the normal modes, known as the spherically symmetric normal mode, is of special interest because it describes the radial in-and-out motion of the entire first solvation shell. By focusing on this mode and excluding all other modes, it becomes possible to determine the precise amount of energy needed to expand or contract the first solvation shell up to its transition-state size.

The beauty of the normal mode analysis is that it allows theorists to ignore all the complicated energy changes of individual ligands—which would normally require a multitude of reaction coordinates—and replace them with a single measure (the energy of extension) which requires only a single reaction coordinate (the radius of the inner solvation shell). A further advantage of the analysis is that the spherically symmetric normal mode is a very loose mode, having energy levels so close together that they effectively form a continuum. This allows the Randles activation energy to be derived without recourse to quantum mechanics. Indeed, the chemical bonds that connect the central ion to its solvation shell can be treated as a single, classical spring that continually exchanges energy with the surrounding solution.

From Eq. 4.1, in the absence of charge fluctuations, we have

$$ dG = -f \, dL $$

where f is the (restoring) force and L is the extension. And from Hooke’s law (“As the extension, so the force...”), we may assume the linear response relation

$$ f = -kL $$

where k is the spring constant. The negative sign merely indicates that the force opposes the extension.

Remark

Because the physical stretching of chemical bonds always exhibits nonlinear behavior at high force, one must not push the linear response relation too far.
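To give a feel for how far the linear response relation can be pushed, the following Python sketch compares a Morse-type bond potential, $D_e(1 - e^{-\alpha x})^2$, with the parabola that has the same curvature at the minimum ($k = 2D_e\alpha^2$). The Morse form, the function names, and the parameter values are illustrative assumptions, not taken from the source; only the trend matters.

```python
import math

# Hypothetical, order-of-magnitude bond parameters (illustrative only).
D_E = 4.0e-19   # Morse well depth D_e, J
ALPHA = 2.0e10  # Morse width parameter alpha, 1/m


def morse_energy(x: float, depth: float = D_E, alpha: float = ALPHA) -> float:
    """Morse energy (J) for a displacement x (m) from the equilibrium bond length."""
    return depth * (1.0 - math.exp(-alpha * x)) ** 2


def harmonic_energy(x: float, depth: float = D_E, alpha: float = ALPHA) -> float:
    """Parabola with the same curvature at the minimum, k = 2 * D_e * alpha**2."""
    k = 2.0 * depth * alpha ** 2
    return 0.5 * k * x ** 2


# Positive x = extension; negative x = compression.
for x_angstrom in (-0.1, 0.02, 0.1, 0.5):
    x = x_angstrom * 1e-10  # angstrom -> metres
    ratio = morse_energy(x) / harmonic_energy(x)
    print(f"displacement {x_angstrom:+.2f} Å: Morse/harmonic = {ratio:.3f}")
```

For small displacements the ratio is close to 1; for large extensions the Morse curve is softer than the parabola, while for compression it is stiffer, so the error of the parabolic estimate is asymmetric.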

Assuming the linear response relation is applicable, the Gibbs energy that must be supplied to expand or contract the inner solvation shell is

$$ \Delta G = \tfrac{1}{2} k L^{2} $$

In the scientific literature, Eq. 4.6 is widely known as the “harmonic oscillator approximation” or simply the “harmonic approximation.” This is a confusing terminology, however. For while it is true that Morse-type potential energy curves are tolerably well approximated by parabolas and the system would indeed perform simple harmonic motion if it were isolated, the fact is that the system is not isolated—it continually exchanges energy with the bulk of solution—and so the amplitude of the spherically symmetric normal mode actually varies randomly in time. As a result, the atoms of the solvation shell are executing motions considerably more complex than those of simple harmonic motion.

By noting that the extension required to reach the transition state is

$$ L = r^{*} - r_{0} $$

where $r^{*}$ is the transition-state radius of the inner solvation shell and $r_{0}$ is the ground-state radius of the inner solvation shell, we finally arrive at the textbook formula for the activation energy on the Randles model,

$$ \Delta G^{\ddagger} = \tfrac{1}{2} k \left( r^{*} - r_{0} \right)^{2} $$

This is highly satisfactory. The radius of the inner solvation shell of the ion acts as the reaction coordinate, and the transition state occurs at the point on the reaction coordinate where the Gibbs energy curves of the donor and acceptor species intersect. Most important of all, this elegant arrangement also guarantees that the Franck–Condon principle and the conservation of energy are satisfied simultaneously.
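The textbook formula is simple enough to evaluate directly. The Python sketch below does so per ion and per mole; the spring constant and radii are hypothetical placeholders rather than data for Fe(II)/Fe(III), and the function name is ours.

```python
AVOGADRO = 6.02214076e23  # 1/mol


def randles_activation_energy(k: float, r_star: float, r0: float) -> float:
    """Randles activation Gibbs energy per ion (J).

    k      -- spring constant of the spherically symmetric normal mode (N/m)
    r_star -- transition-state radius of the inner solvation shell (m)
    r0     -- ground-state radius of the inner solvation shell (m)
    """
    extension = r_star - r0          # L = r* - r0
    return 0.5 * k * extension ** 2  # (1/2) k L^2, same for expansion or contraction


# Hypothetical inputs, chosen only to illustrate the units.
k = 200.0          # N/m
r0 = 2.00e-10      # m
r_star = 2.05e-10  # m

dg = randles_activation_energy(k, r_star, r0)
print(f"{dg:.3e} J per ion = {dg * AVOGADRO / 1e3:.2f} kJ/mol")
```

Because the extension enters squared, the same barrier is obtained whether the inner shell has to expand or contract by a given amount.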

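In passing, we note that the Randles activation energy can also be written in the form

$$ \Delta G^{\ddagger} = \frac{\left( \lambda_{\text{inner}} + \Delta G^{0} \right)^{2}}{4 \lambda_{\text{inner}}} $$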

where $\lambda_{\text{inner}}$ is the “reorganization energy” of the inner solvation shell and $\Delta G^{0}$ is the difference in Gibbs energy between the unfluctuated acceptor state and the unfluctuated donor state (so that $-\Delta G^{0}$ is the driving force for the reaction). In electron transfer theory, the parameter $\lambda_{\text{inner}}$ is a hypothetical quantity, equal to the energy that would be required to give the reactant the inner solvation shell of the product, without electron transfer. A formula of this type was first published by Ryogo Kubo and Yutaka Toyozawa in 1955 [57]. In more recent times, the total reorganization energy λ of an electrochemical system has been written as the sum of an inner contribution $\lambda_{\text{inner}}$ and an outer contribution $\lambda_{\text{outer}}$, attributed respectively to the reorganization of the redox partners and of their environment.
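The equivalence between $\tfrac{1}{2}k(r^{*}-r_0)^2$ and the reorganization-energy form can be checked numerically. The sketch below assumes that the donor and acceptor Gibbs energy curves are parabolas of equal curvature $k$ with minima at $r_D$ and $r_A$, that $\Delta G^0$ is the acceptor minimum minus the donor minimum, and that $\lambda_{\text{inner}} = \tfrac{1}{2}k(r_A - r_D)^2$. It also reports the fraction of the radius change that occurs before electron transfer (Randles' point about the location of the intersection); under these assumptions that fraction equals $(\lambda_{\text{inner}} + \Delta G^0)/(2\lambda_{\text{inner}})$. Function names are illustrative.

```python
def crossing_point(k: float, r_donor: float, r_acceptor: float, dg0: float) -> float:
    """Radius at which the donor and acceptor parabolas intersect.

    G_D(r) = 0.5 * k * (r - r_donor)**2
    G_A(r) = dg0 + 0.5 * k * (r - r_acceptor)**2
    """
    d = r_acceptor - r_donor
    # Setting G_D(r) = G_A(r), the quadratic terms cancel, leaving a linear equation in r.
    return 0.5 * (r_donor + r_acceptor) + dg0 / (k * d)


def barrier_from_crossing(k: float, r_donor: float, r_acceptor: float, dg0: float) -> float:
    """Height of the crossing above the donor minimum: (1/2) k (r* - r_D)^2."""
    r_star = crossing_point(k, r_donor, r_acceptor, dg0)
    return 0.5 * k * (r_star - r_donor) ** 2


def barrier_from_lambda(lam: float, dg0: float) -> float:
    """(lambda + dG0)^2 / (4 lambda)."""
    return (lam + dg0) ** 2 / (4.0 * lam)


# Reduced (dimensionless) units: k = 1, donor minimum at 0, acceptor minimum at 2.
k, r_d, r_a = 1.0, 0.0, 2.0
lam = 0.5 * k * (r_a - r_d) ** 2  # inner reorganization energy (= 2.0 here)
for dg0 in (-1.0, -0.5, 0.0, 0.5):
    r_star = crossing_point(k, r_d, r_a, dg0)
    fraction_before = (r_star - r_d) / (r_a - r_d)  # share of radius change before ET
    print(dg0, barrier_from_crossing(k, r_d, r_a, dg0),
          barrier_from_lambda(lam, dg0), fraction_before)
```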

The charge fluctuation model

The successful development of the elastic distortion model by John Randles was a major milestone in the history of solution-phase electron transfer. However, almost as soon as it was published, the question arose as to what was determining the activation energy of electron transfer in cases where the inner solvation shell was not elastically distorted or was distorted only slightly. In various guises, this question has haunted electron transfer theory ever since. Clearly, some process occurs in the outer solvation shell that is able to trigger electron transfer. But what is it?

In 2007, it was suggested by Fletcher that the mysterious process might be the Brownian motion of co-ions and counterions into and out of the ionic atmospheres of the reactants [22]. Such a process—which would occur even at thermodynamic equilibrium—would cause charge fluctuations inside the ionic atmospheres of the donor and acceptor species, which in turn would drive the system towards the transition state for electron transfer. This explanation has many attractive features, including full compatibility with the Franck–Condon principle, the Debye–Hückel theory, and the equipartition of energy.

The charge fluctuation model is based on the following assumptions [22]:

1. There is an ionic atmosphere of co-ions and counterions, so Debye–Hückel screening is present.
2. The charges on the reactant species are fully screened outside the Debye length.
3. Fluctuations of electrostatic potential are generated inside the Debye length by the Brownian motion of co-ions and counterions.

In the original paper, the central results were obtained by developing an “equivalent circuit” model of the reactant supermolecules. (Recall that a supermolecule is just an ion plus its ionic atmosphere.) Here, we derive the same results in a more elementary way, from Eq. 4.1, in order to illustrate the similarities and differences with the Randles model. Just as we did in the case of the Randles model, we focus on the half-reaction of Eq. 4.2 and ignore the reverse reaction.

From Eq. 4.1, in the absence of elastic distortions, we have

$$ dG = \phi \, dQ $$

where ϕ is the electrostatic potential and Q is the charge. Once again, we assume a linear response relation, explicitly

$$ \phi = \Lambda Q $$

where the coefficient Λ (lambda, a constant) is the electrical elastance (farad⁻¹) of the ionic atmosphere. Note that this relation is just the electrical analog of Hooke’s law. Accordingly, the work that must be supplied to concentrate the charge on the ionic atmosphere of the donor or acceptor in the transition state is

$$ \Delta G^{\ddagger} = \tfrac{1}{2} \Lambda {Q^{*}}^{2} $$

Alternatively, writing the elastance Λ as the reciprocal capacitance 1/C, we have

$$ \Delta G^{\ddagger} = \frac{{Q^{*}}^{2}}{2C} $$

This formula will of course be familiar to electrical engineers: it is just the work required to charge a conducting sphere in a dielectric medium. This suggests that we might justifiably model a supermolecule (ion + ionic atmosphere) as a conducting sphere in a dielectric medium. Let us do that here. Since the capacitance of a conducting sphere is

$$ C = 4 \pi \varepsilon_{0} \varepsilon_{r}(\omega) \, a $$

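where $\varepsilon_{0}$ (epsilon) is the permittivity of free space, $\varepsilon_{r}(\omega)$ is the relative permittivity (dielectric constant) of the solution as a function of frequency ω (omega), and a is the radius of the sphere, it follows immediately that the Gibbs energy of activation is

$$ \Delta G^{\ddagger} = \frac{{Q^{*}}^{2}}{8 \pi \varepsilon_{0} \varepsilon_{r}(\omega) \, a} $$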
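The chain of substitutions (elastance, capacitance, conducting sphere) can be checked numerically. In the Python sketch below, the transition-state charge of half an elementary charge, the 5 Å supermolecule radius, and the relative permittivity of 1.78 are hypothetical inputs chosen only for illustration; the function names are ours.

```python
import math

EPSILON_0 = 8.8541878128e-12  # permittivity of free space, F/m


def sphere_capacitance(eps_r: float, a: float) -> float:
    """C = 4 pi eps0 eps_r a for a conducting sphere of radius a (m)."""
    return 4.0 * math.pi * EPSILON_0 * eps_r * a


def charging_work(q: float, capacitance: float) -> float:
    """(1/2) * Lambda * q**2 with elastance Lambda = 1/C; q in C, result in J."""
    elastance = 1.0 / capacitance
    return 0.5 * elastance * q ** 2


def activation_energy(q_star: float, eps_r: float, a: float) -> float:
    """Closed form Q*^2 / (8 pi eps0 eps_r a), in J."""
    return q_star ** 2 / (8.0 * math.pi * EPSILON_0 * eps_r * a)


# Hypothetical values: Q* = 0.5 e, a = 5 Å, eps_r = 1.78.
q_star = 0.5 * 1.602176634e-19
a = 5.0e-10
eps_r = 1.78
print(charging_work(q_star, sphere_capacitance(eps_r, a)),
      activation_energy(q_star, eps_r, a))
```

Both routes give the same number, and doubling the radius halves the barrier, which is why the ionic-strength dependence of a (through the diffuse layer) matters.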

Remark

The radius of the supermolecule consists of three main contributions: the radius of the central ion, the thickness of the inner solvation shell, and the thickness of the diffuse layer. Through the latter, the radius a depends on the ionic strength of solution.

At this point, a new problem must be confronted. Since the relative permittivity (dielectric constant) of the solution depends on the frequency ω at which it is perturbed (or, equivalently, depends on the time scale $\omega^{-1}$ over which the perturbation is applied), it is necessary to decide which value of ω is appropriate to our problem. A reasonable estimate can be obtained from the Franck–Condon principle, which tells us that molecules possess so much inertia that they cannot move during the elementary act of electron tunneling. This implies that the only part of the relative permittivity that can respond is the electronic part (i.e., the high-frequency component), viz.

$$ \varepsilon_{r}(\omega) \approx \varepsilon_{r}(\infty) $$

So we finally obtain

$$ \Delta G^{\ddagger} = \frac{{Q^{*}}^{2}}{8 \pi \varepsilon_{0} \varepsilon_{r}(\infty) \, a} $$

where Q* is the transition-state value of the charge fluctuation. Just as we found in the Randles model, the transition state occurs at the point where the Gibbs energy curves of the donor and acceptor species intersect. Unlike the Randles model, however, the reaction coordinate is now the electrical charge on the supermolecule rather than the mechanical extension of the ligands.
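Because the activation energy scales as $1/\varepsilon_r$, the choice of frequency matters a great deal. The Python sketch below compares the high-frequency and static permittivities of water, using approximate values for 25 °C (about 78.4 static and about 1.78 high-frequency, the latter roughly the square of the refractive index 1.333). Water as the solvent, the charge $Q^{*}$, and the radius a are assumptions for illustration.

```python
import math

EPSILON_0 = 8.8541878128e-12  # F/m
E_CHARGE = 1.602176634e-19    # C
AVOGADRO = 6.02214076e23      # 1/mol

# Approximate relative permittivities of water near 25 °C.
EPS_STATIC = 78.4   # low-frequency (static) value
EPS_OPTICAL = 1.78  # high-frequency (electronic) value, roughly n**2 with n = 1.333


def barrier(q_star: float, eps_r: float, a: float) -> float:
    """Q*^2 / (8 pi eps0 eps_r a), in J per supermolecule."""
    return q_star ** 2 / (8.0 * math.pi * EPSILON_0 * eps_r * a)


q_star = 0.5 * E_CHARGE  # hypothetical transition-state charge fluctuation
a = 5.0e-10              # hypothetical supermolecule radius, m

for label, eps in (("high-frequency", EPS_OPTICAL), ("static", EPS_STATIC)):
    kj_per_mol = barrier(q_star, eps, a) * AVOGADRO / 1e3
    print(f"{label:>14}: eps_r = {eps:5.2f}, barrier = {kj_per_mol:6.2f} kJ/mol")
```

With these inputs the high-frequency barrier exceeds the static one by the factor 78.4/1.78, about 44, so using the static permittivity would badly underestimate the activation energy on this model.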

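Interestingly, since the mechanical extension and the electrical charge are independent degrees of freedom of the system, the total activation energy may be decomposed into two terms:

$$ \Delta G^{\ddagger} = \tfrac{1}{2} k \left( r^{*} - r_{0} \right)^{2} + \frac{{Q^{*}}^{2}}{8 \pi \varepsilon_{0} \varepsilon_{r}(\infty) \, a} $$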

However, identification of the dominant term in any given system must be a matter of empirical enquiry.
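Since the two terms are additive, a small helper can report how much of the barrier each contributes for a given set of parameters. The Python sketch below is a bookkeeping aid only: every input is hypothetical, the function names are ours, and, as the text stresses, which term actually dominates in a real system has to be established experimentally.

```python
import math

EPSILON_0 = 8.8541878128e-12  # F/m


def elastic_term(k: float, r_star: float, r0: float) -> float:
    """Randles (mechanical) contribution, (1/2) k (r* - r0)^2, in J."""
    return 0.5 * k * (r_star - r0) ** 2


def charge_term(q_star: float, eps_inf: float, a: float) -> float:
    """Charge-fluctuation (electrical) contribution, Q*^2 / (8 pi eps0 eps_inf a), in J."""
    return q_star ** 2 / (8.0 * math.pi * EPSILON_0 * eps_inf * a)


def total_activation_energy(k, r_star, r0, q_star, eps_inf, a):
    """Return the summed barrier and the fraction contributed by the elastic term.

    Assumes at least one term is non-zero (otherwise the fraction is undefined).
    """
    elastic = elastic_term(k, r_star, r0)
    electrical = charge_term(q_star, eps_inf, a)
    total = elastic + electrical
    return total, elastic / total


# All inputs are hypothetical.
total, elastic_fraction = total_activation_energy(
    k=200.0, r_star=2.05e-10, r0=2.00e-10,
    q_star=0.5 * 1.602176634e-19, eps_inf=1.78, a=5.0e-10,
)
print(f"total = {total:.3e} J, elastic share = {elastic_fraction:.1%}")
```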

For the charge fluctuation model, the results for one supermolecule can readily be extended to the case of electron transfer between two supermolecules, D and A, where D is an electron donor and A is an electron acceptor [22]. The goal in this case is a formula for the activation energy of two charge fluctuations of identical magnitude but opposite sign, one on each supermolecule. The result is

$$ \Delta G^{\ddagger} = \frac{{Q^{*}}^{2}}{8 \pi \varepsilon_{0} \varepsilon_{r}(\infty)} \left( \frac{1}{a_{D}} + \frac{1}{a_{A}} \right) $$

where $a_{D}$ is the radius of the donor supermolecule and $a_{A}$ is the radius of the acceptor supermolecule. In the normal region, the charge fluctuation on the donor is fractionally negative and that on the acceptor is fractionally positive, and both reverse polarity at the moment of electron transfer.
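Assuming that the two supermolecules do not interact electrostatically (consistent with full screening beyond the Debye length), the charging works of the two opposite charge fluctuations simply add, giving $\frac{{Q^*}^2}{8\pi\varepsilon_0\varepsilon_r(\infty)}\left(\frac{1}{a_D}+\frac{1}{a_A}\right)$. The Python sketch below encodes that assumption; the numerical inputs and function names are hypothetical.

```python
import math

EPSILON_0 = 8.8541878128e-12  # F/m


def single_barrier(q_star: float, eps_inf: float, a: float) -> float:
    """Charging work for one supermolecule of radius a, in J."""
    return q_star ** 2 / (8.0 * math.pi * EPSILON_0 * eps_inf * a)


def pair_barrier(q_star: float, eps_inf: float, a_donor: float, a_acceptor: float) -> float:
    """Charging work for -Q* on the donor and +Q* on the acceptor, in J.

    Assumes no electrostatic interaction between the two supermolecules,
    so the two charging works add; the sign of the charge drops out.
    """
    prefactor = q_star ** 2 / (8.0 * math.pi * EPSILON_0 * eps_inf)
    return prefactor * (1.0 / a_donor + 1.0 / a_acceptor)


q = 0.5 * 1.602176634e-19  # hypothetical |Q*|, C
eps_inf = 1.78             # assumed high-frequency permittivity
print(pair_barrier(q, eps_inf, 4.0e-10, 6.0e-10))
```

The smaller supermolecule contributes the larger share of the barrier, because its capacitance is smaller.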

The charge fluctuation theory has also been extended to highly exergonic reactions (the “inverted region”) and to highly endergonic reactions (the “superverted region”), as shown in Fig. 10 [58]. The principal results are: (1) In the inverted region, the donor supermolecule remains positively charged both before and after the electron transfer event. (2) In the normal region, the donor supermolecule changes polarity from negative to positive during the electron transfer event. (3) In the superverted region, the donor supermolecule remains negatively charged both before and after the electron transfer event [58]. This overall pattern of events makes it possible for polar solvents to catalyze electron transfer in the inverted and superverted regions by screening the charge fluctuations on the supermolecules—a completely new type of chemical catalysis.

Schematic diagram showing how the rate constants for electron transfer ($k_{et}$) vary with driving force ($-\Delta G^{0}$) and reorganization energy (λ) on the theory of charge fluctuations [58]
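The three regions in the schematic diagram can be told apart by where the donor and acceptor Gibbs energy curves cross. The Python sketch below assumes, without the source stating it, that the region boundaries fall at $\Delta G^0 = -\lambda$ and $\Delta G^0 = +\lambda$, as they do for two equal-curvature parabolas on a reduced coordinate with the donor minimum at 0 and the acceptor minimum at 1. It classifies a reaction but does not reproduce the rate constants of Ref. [58]; the function names are ours.

```python
def crossing_coordinate(lam: float, dg0: float) -> float:
    """Position of the curve crossing on a reduced coordinate.

    Assumes G_D(x) = lam * x**2 and G_A(x) = dg0 + lam * (x - 1)**2,
    so the donor minimum sits at x = 0 and the acceptor minimum at x = 1.
    """
    if lam <= 0.0:
        raise ValueError("reorganization energy must be positive")
    return (lam + dg0) / (2.0 * lam)


def region(lam: float, dg0: float) -> str:
    """Classify the reaction by where the crossing lies relative to the two minima."""
    x_star = crossing_coordinate(lam, dg0)
    if x_star < 0.0:
        return "inverted"     # -dg0 > lam: strongly exergonic
    if x_star > 1.0:
        return "superverted"  # dg0 > lam: strongly endergonic
    return "normal"           # -lam <= dg0 <= lam


for dg0 in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"dG0 = {dg0:+.1f} lambda -> {region(1.0, dg0)}")
```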

In the normal region, where the vast preponderance of experimental data has been obtained, solvent molecules outside the supermolecules are not preferentially orientated in the transition state. If they were, they would have to reverse their orientation at the moment of electron transfer, and that process would violate the Franck–Condon principle. On the other hand, solvent molecules inside the supermolecules do become attached to the charge fluctuations by ion–dipole coupling and thereby lose rotational and translational entropy. This means that, on the charge fluctuation model, the entropy of activation of electron transfer is necessarily negative. Due to electrostriction, the volume of activation must likewise be negative.

The solvent fluctuation model

So far, we have discussed the theory of electron transfer from the viewpoint of processes that occur at equilibrium or close to equilibrium. Now, we broaden our horizons to include processes that occur far from equilibrium. Explicitly, we consider the solvent fluctuation model [52, 53]. Since its introduction by Rudolph A. Marcus in the mid-1950s, this model has enjoyed a tremendous vogue, and today it represents the most widely accepted theory of electron transfer in the world.

The solvent fluctuation model is based on the following assumptions [54, 55]:

1. The ionic atmosphere of co-ions and counterions can be neglected, so Debye–Hückel screening is absent.
2. The bare charges on the reactant species are screened only by solvent molecules.
3. Fluctuations of electrostatic potential are generated by the ordering/disordering of solvent dipoles near the reactant molecules.

Marcus used a continuum dielectric model for the solvent, rather than a molecular model, which led to a number of compact formulas for electron transfer between ions (but which also made his reaction coordinate difficult to understand). He also used the same formalism to model electron transfer between ions and electrodes. However, due to the unscreened nature of the bare charges on the reactant species, he was compelled to introduce work terms for bringing the reactants together and separating the products, something that is not needed in concentrated solutions of supporting electrolytes.

On the Marcus model, the transition state for electron transfer is created by solvent fluctuations. In the normal region of electron transfer, for a self-exchange reaction, the idea is that the less-charged species becomes more solvated, and the more-charged species becomes less solvated, so that the solvation shells of both species come to resemble each other. The net effect is a rough cancelation of any entropy changes in forming the transition state. This contrasts markedly with the charge fluctuation model, which predicts that the entropy of activation of electron transfer should always be negative.

Unfortunately for the Marcus model, there is no well-attested evidence that the entropies of activation of simple self-exchange electron transfer reactions are close to 0. There is, however, a great deal of evidence that the entropies of activation are substantially negative. Some examples are given in Table 5. Where high-pressure data are available, as in Table 6, the volumes of activation of electron transfer are also negative, implying the existence of electrostriction in the transition states. In summary, both categories of data suggest that the process of electron transfer in solution involves an increase in charge in the transition state.