Abstract

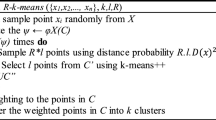

The k-means algorithm is widely used in various research fields because of its fast convergence to the cost function minima; however, it frequently gets stuck in local optima as it is sensitive to initial conditions. This paper explores a simple, computationally feasible method, which provides k-means with a set of initial seeds to cluster datasets of arbitrary dimensions. Our technique consists of two stages: firstly, we use the original data space to obtain a set of prototypes (cluster centers) by applying k-means to bootstrap replications of the data and, secondly, we cluster the space of centers, which has tighter (thus easier to separate) groups, and search the deepest point in each assembled cluster using a depth notion. We test this method with simulated and real data, compare it with commonly used k-means initialization algorithms, and show that it is feasible and more efficient than previous proposals in many situations.

Similar content being viewed by others

References

Aloise, D., Damasceno, N. C., Mladenović, N., & Pinheiro, D. N. (2017). On strategies to fix degenerate k-means solutions. Journal of Classification, 34(2), 165–190.

Arcones, M. A., & Giné, E. (1992). On the bootstrap of M-estimators and other statistical functionals. In R. Lepage, & L. Billard (Eds.) Exploring the limits of the bootstrap (pp. 13–47). New York: Wiley.

Arthur, D., & Vassilvitskii, S. (2007). k-means++: the advantages of careful seeding. In Proceedings of the 18th Annual ACM-SIAM Symposium on Discrete Algorithms (pp. 1027–1035).

Äyrämö, S., Kärkkäinen, T., & Majava, K. (2007). Robust refinement of initial prototypes for partitioning-based clustering algorithms. In C.H. Skiadas (Ed.) Recent advances in stochastic modelling and data analysis (pp. 473–482). Crete: World Scientific.

Bradley, P. S., & Fayyad, U. (1998). Refining initial points for k-means clustering. In Proceedings of the 15th International Conference of Machine Learning (pp. 91–99).

Brusco, M. J. (2004). Clustering binary data in the presence of masking variables. Psychological Methods, 9(4), 510–523.

Brusco, M. J., & Cradit, J. D. (2001). A variable-selection heuristic for k-means clustering. Psychometrika, 66, 249–270.

Celebi, M. E. (2011). Improving the performance of k-means for color quantization. Image and Vision Computing, 29(4), 260–271.

Celebi, M. E., Kingravi, H. A., & Vela, P. A. (2013). A comparative study of efficient initialization methods for the k-means clustering algorithm. Expert Systems with Applications, 40(1), 200–210.

Dolnicar, S., & Leisch, F. (2001). Behavioral market segmentation of binary guest survey data with bagged clustering. In B.H.H.K. Dorffner G (Ed.) Artificial neural networks ICANN 2001, volume 2130 of lecture notes in computer science (pp. 111–118). Berlin: Springer.

Dudoit, S., & Fridlyand, J. (2003). Bagging to improve the accuracy of a clustering procedure. Bioinformatics, 19(9), 1090–1099.

El Agha, M., & Ashour, W. M. (2012). Efficient and fast initialization algorithm for k-means clustering. International Journal of Intelligent Systems and Applications, 1, 21–31.

Forgy, E. (1965). Cluster analysis of multivariate data: efficiency vs interpretability of classifications. Biometrics, 21, 768–780.

García, C. (2016). BoCluSt: bootstrap clustering stability algorithm for community detection. PLOS One, 1(6), e0156576. https://doi.org/10.1371/journal.pone.0156576.

Garey, M. R., & Johnson, D. S. (1979). Computers and intractability: a guide to the theory of NP-completeness. New York: Freeman.

Gonzalez, T. (1985). Clustering to minimize the maximum intercluster distance. Theoretical Computer Science, 38, 293–306.

Hand, D. J., & Krzanowski, W. J. (2004). Optimising k-means clustering results with standard software packages. Computational Statistics & Data Analysis, 49, 969–973.

He, J., Lan, M., Tan, C. L., Sung, S. Y., & Low, H. B. (2004). Initialization of cluster refinement algorithms: a review and comparative study. In IEEE International Joint Conference on Neural Networks, Budapest. https://doi.org/10.1109/IJCNN.2004.1379917.

Hennig, C. (2007). Cluster-wise assessment of cluster stability. Computational Statistics and Data Analysis, 52, 258–271.

Hofmans, J., Ceulemans, E., Steinley, D., & Van Mechelen, I. (2015). On the added value of bootstrap analysis for k-means clustering. Journal of Classification, 32(2), 268–284.

Hubert, L., & Arabie, P. (1985). Comparing partitions. Journal of Classification, 2, 193–198.

Jain, A. K. (2010). Data clustering: 50 years beyond k-means. Pattern Recognition Letters, 31(8), 651–666.

Jörnsten, R. (2004). Clustering and classification based on the L1-data depth. Journal of Multivariate Analysis, 90, 67–89.

Jörnsten, R., Vardi, Y., & Zhang, C. H. (2002). A robust clustering method and visualization tool based on data depth. In Y. Dodge (Ed.) Statistical data analysis based on the L1–norm and related methods (pp. 313–366). Birkhäuser Verla: Basel.

Katsavounidis, I., Kuo, C., & Zhang, Z. (1994). A new initialization technique for generalized Lloyd iteration. IEEE Signal Processing Letters, 1, 144–146.

Kaufman, L., & Rousseeuw, P. J. (1990). Finding groups in data: an introduction to cluster analysis. New York: Wiley.

Kerr, M. K., & Churchill, G. A. (2001). Bootstrap** cluster analysis: assessing the reliability of conclusions from microarray experiments. Proceedings of the National Academy of Sciences of the United States of America, 98(16), 8961–8965.

Khan, S. S., & Ahmad, A. (2004). Cluster center initialization algorithm for k-means clustering. Pattern Recognition Letters, 25(22), 1293–1302.

Liao, H., Jihjai, X., Sun, W., Dai, J., & Yu, S. (2014). Adaptative initialization method based on spatial local information for k-means algorithm. Mathematical Problems in Engineering. Article ID 761468, 11 pp.

Lichman, M. (2013). Machine Learning Repository, University of California, Irvine, School of Information and Computer Sciences. http://archive.ics.uci.edu/ml. Accessed 24 Nov 2017.

Liu, R. (1990). On a notion of data depth based on random simplices. Annals of Statistics, 18, 405–414.

López-Pintado, S., & Romo, J. (2009). On the concept of depth for functional data. Journal of the American Statistical Association, 104(486), 718–734.

MacQueen, J. B. (1967). Some methods for classification and analysis of multivariate observations. In Proceedings of the 5th Berkeley Symposium in Mathematics and Probability (pp. 281–297).

Mahajan, M., Nimnhorkar, P., & Varadarajan, K. (2012). The planar k- means problem is NP-hard. Theoretical Computer Science, 442, 13–21.

Maitra, R., & Melnykov, V. (2010). Simulating data to study performance of finite mixture modeling and clustering algorithms. Journal of Computational and Graphical Statistics, 19(2), 354–376.

Milligan, G. W. (1980). An examination of the effect of six types of error perturbation on fifteen clustering algorithms. Psychometrika, 45, 325–342.

Milligan, G. W., & Cooper, M. C. (1988). A study of standardization of variables in cluster analysis. Journal of Classification, 5, 181–204.

Milligan, G. W., Soon, S. C., & Sokol, L. M. (1983). The effect of cluster size, dimensionality, and the number of clusters on recovery of true cluster structure. IEEE Transactions of Pattern Analysis and Machine Intelligence, PAMI-5 (1), 40–47.

Oja, H. (1983). Descriptive statistics for multivariate distributions. Statistics & Probability Letters, 1, 327–332.

Peña, J. M., Lozano, J. A., & Larrañaga, P. (1999). An empirical comparison of four initialization methods for the k-means algorithm. Pattern Recognition Letters, 20, 1027–1040.

Pillai, K. C. S. (1954). On some distribution problems in multivariate analysis. Mimeograph Series No. 88, Institute of Statistics, University of North Carolina.

Pollard, D. (1982). A central limit theorem for k-means clustering. Annals of Probability, 10(4), 919–926.

Punj, G., & Stewart, D. W. (1983). Cluster analysis in marketing research: review and suggestions for application. Journal of Marketing Research, 20(2), 134–148.

R Core Team. (2014). R: a language and environment for statistical computing. Vienna: R Foundation for Statistical Computing. http://www.R-project.org.

Reddy, D., Mishra, D., & Jana, P. K. (2011). MST-based cluster initialization for k-means. Advances in Computer Science and Information Technology 2011, Part I, Communications in Computer and Information Science, 131, 329–338.

Redmonds, S. J., & Heneghan, C. (2007). A method for initialising the k-means clustering algorithm using kd-trees. Pattern Recognition Letters, 28(8), 965–973.

Selim, S. Z., & Ismail, M. A. (1984). k-means type algorithms: a generalized convergence theorem and characterization of local optimality. IEEE Transactions on Pattern Analysis, 6(1), 81–87.

Steinley, D. (2003). Local optima in k-means clustering: what you don’t know may hurt you. Psychological Methods, 8(3), 294–304.

Steinley, D. (2004). Properties of the Hubert-Arabie adjusted rand index. Psychological Methods, 9(3), 386–396.

Steinley, D. (2006). Profiling local optima in k-means clustering: develo** a diagnostic technique. Psychological Methods, 11(2), 178–192.

Steinley, D., & Brusco, M. J. (2007). Initializing k-means batch clustering: a critical evaluation of several techniques. Journal of Classification, 24, 99–121.

Su, T., & Dy, J. G. (2007). In search of deterministic methods for initializing k-means and Gaussian mixture clustering. Intelligent Data Analysis, 11, 319–338.

Torrente, A., López-Pintado, S., & Romo, J. (2013). DepthTools: an R package for a robust analysis of gene expression data. BMC Bioinformatics, 14, 237. https://doi.org/10.1186/1471-2105-14-237.

Tou, J. T., & González, R. C. (1974). Pattern recognition principles. Massachusetts: Addison Wesley.

Tukey, J. W. (1975). Mathematics and the picturing of data. In Proceedings of the International Congress of Mathematicians (pp. 523–531).

Vardi, Y., & Zhang, C. H. (2000). The multivariate L1-median and associated data depth. Proceedings of the National Academy of Sciences of the United States of America, 97, 1423–1426.

Vega-Pons, S., & Ruiz-Schulcloper, J. (2011). A survey of clustering ensemble algorithms. International Journal of Patter Recognition and Artificial Intelligence, 25(3), 337–372.

Ward, J. J. (1963). Hierarchical grou** to optimize an objective function. Journal of the American Statistical Association, 58, 236–244.

Yi, B., Qiao, H., Yang, F., & Xu, C. (2010). An improved initialization center algorithm for k-means clustering. In International Conference on Computational Intelligence and Software Engineering (CiSE). https://doi.org/10.1109/CISE.2010.5676975.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Torrente, A., Romo, J. Initializing k-means Clustering by Bootstrap and Data Depth. J Classif 38, 232–256 (2021). https://doi.org/10.1007/s00357-020-09372-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00357-020-09372-3