Abstract

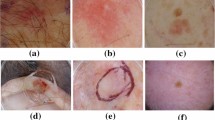

Convolutional neural networks (CNNs) (e.g., UNet) have become the de facto standard and attained immense success in medical image segmentation. However, CNN-based methods fail to build long-range dependencies and global context connections due to the limited receptive field of the convolution operation. Therefore, Transformer variants have been proposed for medical image segmentation tasks due to their innate capability of capturing long-range correlations through the attention mechanism. However, since Transformers are not designed to capture local information, object boundaries are not well preserved, especially in difficult segmentation scenarios with partly overlapping objects. To address this issue, we propose a contextual attention network that includes a boundary representation on top of the CNN and Transformer features. It utilizes a CNN encoder to capture local semantic information and includes a Transformer module to model the long-range contextual dependency. The object-level representation is included by extracting hierarchical features that are then fed to the contextual attention module to adaptively recalibrate the representation space using local information. In this way, informative regions are emphasized while taking into account the long-range contextual dependency derived by the Transformer module. The results show that our approach is among the top-performing methods on the skin lesion segmentation benchmark, and specifically shows its strength on the SegPC challenge benchmark, which also includes overlapping objects. Implementation code in

.
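The recalibration idea described in the abstract (local features re-weighted with the help of a global context signal) can be illustrated with a toy gating sketch. This is not the authors' contextual attention module: the function name `recalibrate`, the additive combination of the two signals, and the sigmoid gate are all assumptions, loosely modelled on squeeze-and-excitation-style channel attention. Plain Python lists stand in for CNN feature maps and for a pooled Transformer descriptor.

```python
import math


def sigmoid(x):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def recalibrate(local_feats, context):
    """Toy channel-wise recalibration (hypothetical, for illustration only).

    local_feats: list of spatial positions, each a list of C channel values
                 (stand-in for CNN encoder features).
    context:     list of C values (stand-in for a pooled global descriptor,
                 such as one a Transformer branch might produce).
    Returns (rescaled_feats, gates).
    """
    n_positions = len(local_feats)
    n_channels = len(context)

    # Squeeze: average every channel over all spatial positions.
    pooled = [
        sum(pos[c] for pos in local_feats) / n_positions
        for c in range(n_channels)
    ]

    # Gate: add the local summary to the global context (an assumed
    # combination rule) and squash each channel into (0, 1).
    gates = [sigmoid(p + g) for p, g in zip(pooled, context)]

    # Excite: rescale each position channel by channel.
    rescaled = [
        [value * gates[c] for c, value in enumerate(pos)]
        for pos in local_feats
    ]
    return rescaled, gates


# Two spatial positions with two channels each, plus a two-channel context.
features = [[1.0, 2.0], [3.0, 4.0]]
global_context = [-2.0, 0.0]
rescaled, gates = recalibrate(features, global_context)
```

In this sketch a channel whose local summary and global context both score high keeps most of its magnitude, while a channel that the context votes against is suppressed. A real module would learn the mapping to the gates rather than use a fixed sum.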





Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Azad, R., Heidari, M., Wu, Y., Merhof, D. (2022). Contextual Attention Network: Transformer Meets U-Net. In: Lian, C., Cao, X., Rekik, I., Xu, X., Cui, Z. (eds) Machine Learning in Medical Imaging. MLMI 2022. Lecture Notes in Computer Science, vol 13583. Springer, Cham. https://doi.org/10.1007/978-3-031-21014-3_39


DOI: https://doi.org/10.1007/978-3-031-21014-3_39


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-21013-6

Online ISBN: 978-3-031-21014-3

eBook Packages: Computer Science, Computer Science (R0)