Abstract

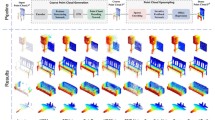

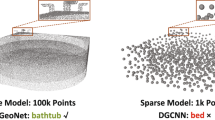

Estimating the complete 3D point cloud from an incomplete one is a key problem in many vision and robotics applications. Mainstream methods (e.g., PCN and TopNet) use Multi-layer Perceptrons (MLPs) to process point clouds directly, which may lose details because the structure and context of point clouds are not fully considered. To solve this problem, we introduce 3D grids as intermediate representations to regularize unordered point clouds and propose a novel Gridding Residual Network (GRNet) for point cloud completion. In particular, we devise two novel differentiable layers, named Gridding and Gridding Reverse, to convert between point clouds and 3D grids without losing structural information. We also present the differentiable Cubic Feature Sampling layer to extract features of neighboring points, which preserves context information. In addition, we design a new loss function, namely Gridding Loss, which computes the L1 distance between the 3D grids of the predicted and ground truth point clouds and helps recover details. Experimental results indicate that the proposed GRNet performs favorably against state-of-the-art methods on the ShapeNet, Completion3D, and KITTI benchmarks.
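To make the grid conversion concrete, the following is a minimal pure-Python sketch of the three ideas named above: scattering points onto a grid, recovering points from a grid, and an L1 loss between two grids. The abstract does not state the weighting scheme, so the trilinear weights, the sparse dictionary grid, and the function names `gridding`, `gridding_reverse`, and `gridding_loss` are illustrative assumptions rather than the authors' implementation. Cubic Feature Sampling is omitted.

```python
from itertools import product
from math import floor

CORNERS = tuple(product((0, 1), repeat=3))  # offsets of a cell's 8 vertices


def gridding(points, resolution):
    """Scatter points onto a sparse resolution^3 vertex grid.

    Points are assumed to lie in [0, resolution - 1]^3. Each point adds a
    trilinear weight to the 8 vertices of its cell (assumed weighting).
    Returns a dict mapping vertex index (i, j, k) -> accumulated weight.
    """
    grid = {}
    for p in points:
        base = [min(max(floor(c), 0), resolution - 2) for c in p]
        frac = [c - b for c, b in zip(p, base)]
        for d in CORNERS:
            w = 1.0
            for f, o in zip(frac, d):
                w *= abs(1 - o - f)  # (1 - f) for the lower vertex, f for the upper
            key = tuple(b + o for b, o in zip(base, d))
            grid[key] = grid.get(key, 0.0) + w
    return grid


def gridding_reverse(grid, resolution):
    """Emit one point per non-empty cell: the weight-averaged vertex position."""
    cells = set()
    for v in grid:
        for d in CORNERS:
            c = tuple(a - o for a, o in zip(v, d))
            if all(0 <= a <= resolution - 2 for a in c):
                cells.add(c)
    points = []
    for c in sorted(cells):
        total, acc = 0.0, [0.0, 0.0, 0.0]
        for d in CORNERS:
            v = tuple(a + o for a, o in zip(c, d))
            w = grid.get(v, 0.0)
            total += w
            acc = [s + w * a for s, a in zip(acc, v)]
        if total > 0:  # cells whose vertices are all empty produce no point
            points.append(tuple(s / total for s in acc))
    return points


def gridding_loss(pred_points, gt_points, resolution):
    """Mean L1 distance between the grids of two point clouds."""
    pred = gridding(pred_points, resolution)
    gt = gridding(gt_points, resolution)
    diff = sum(abs(pred.get(k, 0.0) - gt.get(k, 0.0)) for k in set(pred) | set(gt))
    return diff / resolution ** 3
```

Because each weight in this sketch is piecewise linear in the point coordinates, the same computation written in an automatic-differentiation framework would pass gradients back to the points, which is the property the abstract's "differentiable layers" rely on. Note that `gridding_reverse` also emits points for neighboring cells that share weighted vertices, so a round trip is not an exact inverse.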

Notes

1. The source code is available at https://github.com/hzxie/GRNet.

References

Achlioptas, P., Diamanti, O., Mitliagkas, I., Guibas, L.J.: Learning representations and generative models for 3D point clouds. In: ICML 2018 (2018)

Cadena, C., et al.: Past, present, and future of simultaneous localization and mapping: toward the robust-perception age. IEEE Trans. Rob. 32(6), 1309–1332 (2016)

Dai, A., Qi, C.R., Nießner, M.: Shape completion using 3D-encoder-predictor CNNs and shape synthesis. In: CVPR 2017 (2017)

Fan, H., Su, H., Guibas, L.J.: A point set generation network for 3D object reconstruction from a single image. In: CVPR 2017 (2017)

Geiger, A., Lenz, P., Stiller, C., Urtasun, R.: Vision meets robotics: the KITTI dataset. Int. J. Robot. Res. (IJRR) 32(11), 1231–1237 (2013)

Groueix, T., Fisher, M., Kim, V.G., Russell, B.C., Aubry, M.: A papier-mâché approach to learning 3D surface generation. In: CVPR 2018 (2018)

Han, X., Li, Z., Huang, H., Kalogerakis, E., Yu, Y.: High-resolution shape completion using deep neural networks for global structure and local geometry inference. In: ICCV 2017 (2017)

Hassani, K., Haley, M.: Unsupervised multi-task feature learning on point clouds. In: ICCV 2019 (2019)

Hermosilla, P., Ritschel, T., Vázquez, P., Vinacua, A., Ropinski, T.: Monte Carlo convolution for learning on non-uniformly sampled point clouds. ACM Trans. Graph. 37(6), 235:1–235:12 (2018)

Hua, B., Tran, M., Yeung, S.: Pointwise convolutional neural networks. In: CVPR 2018 (2018)

Jiang, L., Shi, S., Qi, X., Jia, J.: GAL: geometric adversarial loss for single-view 3D-object reconstruction. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11212, pp. 820–834. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01237-3_49

Kar, A., Häne, C., Malik, J.: Learning a multi-view stereo machine. In: NIPS 2017 (2017)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: ICLR 2015 (2015)

Kipf, T.N., Welling, M.: Semi-supervised classification with graph convolutional networks. In: ICLR 2017 (2017)

Lan, S., Yu, R., Yu, G., Davis, L.S.: Modeling local geometric structure of 3D point clouds using Geo-CNN. In: CVPR 2019 (2019)

Lei, H., Akhtar, N., Mian, A.: Octree guided CNN with spherical kernels for 3D point clouds. In: CVPR 2019 (2019)

Li, D., Shao, T., Wu, H., Zhou, K.: Shape completion from a single RGBD image. IEEE Trans. Visual Comput. Graphics 23(7), 1809–1822 (2017)

Li, K., Pham, T., Zhan, H., Reid, I.: Efficient dense point cloud object reconstruction using deformation vector fields. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11216, pp. 508–524. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01258-8_31

Li, R., Li, X., Fu, C., Cohen-Or, D., Heng, P.: PU-GAN: a point cloud upsampling adversarial network. In: ICCV 2019 (2019)

Li, Y., Bu, R., Sun, M., Wu, W., Di, X., Chen, B.: PointCNN: convolution on x-transformed points. In: NeurIPS 2018 (2018)

Lin, C., Kong, C., Lucey, S.: Learning efficient point cloud generation for dense 3D object reconstruction. In: AAAI 2018 (2018)

Lin, H., Xiao, Z., Tan, Y., Chao, H., Ding, S.: Justlookup: one millisecond deep feature extraction for point clouds by lookup tables. In: ICME 2019 (2019)

Liu, M., Sheng, L., Yang, S., Shao, J., Hu, S.M.: Morphing and sampling network for dense point cloud completion. In: AAAI 2020 (2020)

Liu, Y., Fan, B., Meng, G., Lu, J., Xiang, S., Pan, C.: DensePoint: learning densely contextual representation for efficient point cloud processing. In: ICCV 2019 (2019)

Liu, Y., Fan, B., Xiang, S., Pan, C.: Relation-shape convolutional neural network for point cloud analysis. In: CVPR 2019 (2019)

Liu, Z., Tang, H., Lin, Y., Han, S.: Point-voxel CNN for efficient 3D deep learning. In: NeurIPS 2019 (2019)

Mandikal, P., Radhakrishnan, V.B.: Dense 3D point cloud reconstruction using a deep pyramid network. In: WACV 2019 (2019)

Mao, J., Wang, X., Li, H.: Interpolated convolutional networks for 3D point cloud understanding. In: ICCV 2019 (2019)

Nguyen, D.T., Hua, B., Tran, M., Pham, Q., Yeung, S.: A field model for repairing 3D shapes. In: CVPR 2016 (2016)

Paszke, A., et al.: PyTorch: an imperative style, high-performance deep learning library. In: NeurIPS 2019 (2019)

Peng, S., Liu, Y., Huang, Q., Zhou, X., Bao, H.: PVNet: pixel-wise voting network for 6DoF pose estimation. In: CVPR 2019 (2019)

Qi, C.R., Su, H., Mo, K., Guibas, L.J.: PointNet: deep learning on point sets for 3D classification and segmentation. In: CVPR 2017 (2017)

Qi, C.R., Yi, L., Su, H., Guibas, L.J.: PointNet++: deep hierarchical feature learning on point sets in a metric space. In: NIPS 2017 (2017)

Sharma, A., Grau, O., Fritz, M.: VConv-DAE: deep volumetric shape learning without object labels. In: Hua, G., Jégou, H. (eds.) ECCV 2016. LNCS, vol. 9915, pp. 236–250. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-49409-8_20

Stutz, D., Geiger, A.: Learning 3D shape completion from laser scan data with weak supervision. In: CVPR 2018 (2018)

Su, H., et al.: SPLATNet: sparse lattice networks for point cloud processing. In: CVPR 2018 (2018)

Tatarchenko, M., Richter, S.R., Ranftl, R., Li, Z., Koltun, V., Brox, T.: What do single-view 3D reconstruction networks learn? In: CVPR 2019 (2019)

Tchapmi, L.P., Kosaraju, V., Rezatofighi, H., Reid, I.D., Savarese, S.: TopNet: structural point cloud decoder. In: CVPR 2019 (2019)

Thomas, H., Qi, C.R., Deschaud, J., Marcotegui, B., Goulette, F., Guibas, L.J.: KPConv: flexible and deformable convolution for point clouds. In: ICCV 2019 (2019)

Varley, J., DeChant, C., Richardson, A., Ruales, J., Allen, P.K.: Shape completion enabled robotic grasping. In: IROS 2017 (2017)

Wang, K., Chen, K., Jia, K.: Deep cascade generation on point sets. In: IJCAI 2019 (2019)

Wang, Y., Sun, Y., Liu, Z., Sarma, S.E., Bronstein, M.M., Solomon, J.M.: Dynamic graph CNN for learning on point clouds. ACM Trans. Graph. 38(5), 146:1–146:12 (2019)

Wang, Z., Lu, F.: VoxSegNet: volumetric CNNs for semantic part segmentation of 3D shapes. IEEE Trans. Vis. Comput. Graph. (2019). https://doi.org/10.1109/TVCG.2019.2896310

Wu, W., Qi, Z., Li, F.: PointConv: deep convolutional networks on 3D point clouds. In: CVPR 2019 (2019)

Wu, Z., et al.: 3D ShapeNets: a deep representation for volumetric shapes. In: CVPR 2015 (2015)

Xie, H., Yao, H., Sun, X., Zhou, S., Zhang, S.: Pix2Vox: context-aware 3D reconstruction from single and multi-view images. In: ICCV 2019 (2019)

Xie, H., Yao, H., Zhang, S., Zhou, S., Sun, W.: Pix2Vox++: multi-scale context-aware 3D object reconstruction from single and multiple images. Int. J. Comput. Vision 128(12), 2919–2935 (2020). https://doi.org/10.1007/s11263-020-01347-6

Xu, Q., Wang, W., Ceylan, D., Mech, R., Neumann, U.: DISN: deep implicit surface network for high-quality single-view 3D reconstruction. In: NeurIPS 2019 (2019)

Xu, Y., Fan, T., Xu, M., Zeng, L., Qiao, Yu.: SpiderCNN: deep learning on point sets with parameterized convolutional filters. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11212, pp. 90–105. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01237-3_6

Yang, Y., Feng, C., Shen, Y., Tian, D.: FoldingNet: point cloud auto-encoder via deep grid deformation. In: CVPR 2018 (2018)

Yuan, W., Khot, T., Held, D., Mertz, C., Hebert, M.: PCN: point completion network. In: 3DV 2018 (2018)

Zhang, K., Hao, M., Wang, J., de Silva, C.W., Fu, C.: Linked dynamic graph CNN: learning on point cloud via linking hierarchical features. arXiv preprint

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Xie, H., Yao, H., Zhou, S., Mao, J., Zhang, S., Sun, W. (2020). GRNet: Gridding Residual Network for Dense Point Cloud Completion. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science, vol 12354. Springer, Cham. https://doi.org/10.1007/978-3-030-58545-7_21

DOI: https://doi.org/10.1007/978-3-030-58545-7_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58544-0

Online ISBN: 978-3-030-58545-7

eBook Packages: Computer Science, Computer Science (R0)