Abstract

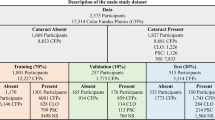

Cataract is one of the most common eye diseases, affecting 4.2% of the world's population. Automatic cataract detection can not only help people prevent visual impairment and reduce the risk of blindness but also save medical resources. Previous researchers have achieved automatic medical image detection using convolutional neural networks (CNNs), whose decisions may be opaque, unexplained, and therefore questionable. In this paper, we propose a novel interpretable-learning approach that explains the cataract-detection results produced by CNNs, i.e., a result-oriented explanation. AlexNet-CAM and GoogLeNet-CAM are rebuilt from AlexNet and GoogLeNet by replacing two fully-connected layers with a global average pooling layer. The four models are compared to test whether class activation mapping (CAM) reduces accuracy. Then, we use gradient-weighted class activation mapping (Grad-CAM), combined with an existing fine-grained visualization, to generate heat-maps that clearly show the important pathological features. As a result, the accuracy of AlexNet (GoogLeNet) is 94.48% (94.89%), and that of AlexNet-CAM (GoogLeNet-CAM) is 93.28% (94.93%). Heat-maps of non-cataract fundus images highlight the lens and parts of both large and small vessels, while the clarity of the heat-maps of cataract images declines progressively from mild to medium to severe cataract. The results show that our approach keeps accuracy stable while increasing the interpretability of cataract detection, and that it can also be generalized to any fundus-image diagnosis in the medical field.
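Replacing the fully-connected layers with global average pooling (GAP) is what makes CAM possible: with a GAP head, each class score S_c is a weighted sum of the pooled feature maps, S_c = Σ_k w_k^c F_k, and applying the same weights to the unpooled maps gives a spatial heat-map, M_c(x, y) = Σ_k w_k^c f_k(x, y), following Zhou et al.'s formulation. The sketch below is a minimal pure-Python illustration of that computation under stated assumptions, not the authors' implementation; the function names, toy feature maps, class weights, and class labels are all hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def global_average_pool(feature_maps: List[Matrix]) -> List[float]:
    """GAP: reduce each H x W feature map f_k to one scalar F_k (its spatial mean)."""
    pooled = []
    for fmap in feature_maps:
        cells = [v for row in fmap for v in row]
        pooled.append(sum(cells) / len(cells))
    return pooled


def class_scores(pooled: List[float], weights: List[List[float]]) -> List[float]:
    """Single linear layer after GAP (bias omitted): S_c = sum_k w[c][k] * F_k."""
    return [sum(w_ck * f_k for w_ck, f_k in zip(w_c, pooled)) for w_c in weights]


def class_activation_map(feature_maps: List[Matrix], weights: List[List[float]], c: int) -> Matrix:
    """CAM for class c: M_c(x, y) = sum_k w[c][k] * f_k(x, y)."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    return [[sum(w_ck * fmap[y][x] for w_ck, fmap in zip(weights[c], feature_maps))
             for x in range(w)]
            for y in range(h)]


def normalize(m: Matrix) -> Matrix:
    """Min-max scale a map to [0, 1] so it can be rendered as a heat-map."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in m]
    return [[(v - lo) / span for v in row] for row in m]


# Toy example (made-up numbers): two 2x2 maps from the last conv layer, two classes.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],  # f_0
    [[0.0, 0.0], [0.0, 2.0]],  # f_1
]
weights = [[0.5, -1.0],  # class 0, hypothetically "non-cataract"
           [1.0, 1.0]]   # class 1, hypothetically "cataract"

scores = class_scores(global_average_pool(feature_maps), weights)
cam = class_activation_map(feature_maps, weights, c=1)
print(scores)          # [-0.375, 0.75]
print(normalize(cam))  # [[0.5, 0.0], [0.0, 1.0]]
```

Because GAP and the linear layer are both linear, the mean of M_c over all positions equals S_c (bias aside), so the heat-map is an exact spatial decomposition of the class score rather than a post-hoc approximation.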

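Grad-CAM, used in the abstract to produce the heat-maps, does not require a GAP head: it weights each feature map A^k by the spatially averaged gradient of the class score y^c, α_k^c = (1/Z) Σ_{i,j} ∂y^c/∂A^k_{ij}, and keeps only positive evidence, L^c = ReLU(Σ_k α_k^c A^k). The minimal sketch below assumes the gradients have already been obtained from a deep-learning framework's backward pass. The abstract does not name its fine-grained visualization, so the fusion step is an assumption that follows Selvaraju et al.'s Guided Grad-CAM (a pointwise product with a fine-grained map such as Guided Backpropagation), with nearest-neighbour upsampling standing in for bilinear upsampling for brevity. Function names and toy values are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(activations: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Grad-CAM heat-map for one class c.

    activations[k][i][j] is A^k_ij, the k-th feature map of the chosen conv layer.
    gradients[k][i][j] is dy^c / dA^k_ij; assumed to come from a framework's
    backward pass, since this sketch does no automatic differentiation.
    """
    h, w = len(activations[0]), len(activations[0][0])
    z = h * w
    # alpha_k^c: global-average-pool the gradients of each feature map.
    alphas = [sum(v for row in g for v in row) / z for g in gradients]
    # L^c = ReLU(sum_k alpha_k^c * A^k): keep only positive evidence for class c.
    return [[max(0.0, sum(a * act[i][j] for a, act in zip(alphas, activations)))
             for j in range(w)]
            for i in range(h)]


def upsample_nearest(m: Matrix, factor: int) -> Matrix:
    """Nearest-neighbour upsampling by an integer factor (a stand-in for bilinear)."""
    return [[m[i // factor][j // factor] for j in range(len(m[0]) * factor)]
            for i in range(len(m) * factor)]


def fuse_with_fine_grained(coarse: Matrix, fine: Matrix) -> Matrix:
    """Guided Grad-CAM style fusion: upsample the coarse map, then multiply pointwise."""
    factor = len(fine) // len(coarse)
    up = upsample_nearest(coarse, factor)
    return [[u * f for u, f in zip(up_row, fine_row)] for up_row, fine_row in zip(up, fine)]


# Toy check (made-up values): a GAP + linear head with hypothetical class weights.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],  # A^0
    [[0.0, 0.0], [0.0, 2.0]],  # A^1
]
class_weights = [0.5, -1.0]
z = 4  # number of spatial positions (2 x 2)
# For y^c = sum_k w_k * mean(A^k), the gradient dy^c/dA^k_ij equals w_k / Z everywhere.
gradients = [[[w_k / z] * 2 for _ in range(2)] for w_k in class_weights]

heat = grad_cam(activations, gradients)
fine = [[2.0] * 4 for _ in range(4)]  # placeholder fine-grained map at 4x4 "input" resolution
print(heat)                                   # [[0.125, 0.0], [0.0, 0.0]]
print(fuse_with_fine_grained(heat, fine)[0])  # [0.25, 0.25, 0.0, 0.0]
```

For a GAP + linear head, ∂y^c/∂A^k_ij = w_k^c / Z, so Grad-CAM reduces to ReLU(CAM) / Z; the toy check exercises exactly that case. For other architectures the gradients differ, which is why Grad-CAM needs no architectural change.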
Acknowledgements

This work is supported by the State Key Program of the National Natural Science Foundation of China (Grant No. 71432004) and the China National Science and Technology Major Project (Grant No. 2017YFB1400803).

Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, J. et al. (2019). Interpretable Learning: A Result-Oriented Explanation for Automatic Cataract Detection. In: Hung, J., Yen, N., Hui, L. (eds) Frontier Computing. FC 2018. Lecture Notes in Electrical Engineering, vol 542. Springer, Singapore. https://doi.org/10.1007/978-981-13-3648-5_33

DOI: https://doi.org/10.1007/978-981-13-3648-5_33

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-3647-8

Online ISBN: 978-981-13-3648-5

eBook Packages: Intelligent Technologies and Robotics; Intelligent Technologies and Robotics (R0)