Abstract

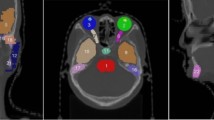

Deep learning models can solve multi-organ segmentation, but the scarcity of fully annotated multi-organ datasets is a significant obstacle to training them. Annotating 3D volumes is expensive and time-consuming, and existing datasets differ greatly in which structures they label. We therefore propose a method that uses disentangled learning to train a single segmentation model on multiple partially annotated datasets. Dataset-specific encoder and decoder networks are trained, while a joint decoder network gathers the encoders' features to generate a complete segmentation mask. We evaluated the method on two simulated partially annotated datasets: one labeling the liver, lungs and kidneys, the other the bones and bladder. Trained to segment all five organs, our method achieves a Dice score of 0.78 and an IoU of 0.67. Notably, this is close to the performance of a model trained on the fully annotated dataset, which scores 0.80 in Dice and 0.70 in IoU.
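The evaluation setup described above can be illustrated with a minimal sketch. It is not the authors' code: the integer label ids, the function names `simulate_partial` and `dice_and_iou`, and the toy flattened masks are all assumptions made for illustration. The sketch derives two partially annotated label maps from one fully annotated map by setting unlabeled organs to background, then computes the Dice score and IoU for a single label.

```python
from typing import Dict, Iterable, List, Sequence, Tuple

# Hypothetical label ids; the source does not specify an encoding.
LABELS: Dict[str, int] = {
    "liver": 1, "lungs": 2, "kidneys": 3, "bones": 4, "bladder": 5,
}
BACKGROUND = 0


def simulate_partial(mask: Sequence[int], keep: Iterable[str]) -> List[int]:
    """Keep only the named organs; every other voxel becomes background."""
    kept_ids = {LABELS[name] for name in keep}
    return [v if v in kept_ids else BACKGROUND for v in mask]


def dice_and_iou(
    pred: Sequence[int], target: Sequence[int], label: int
) -> Tuple[float, float]:
    """Dice and IoU for one label over two flattened masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    inter = sum(1 for p, t in zip(pred, target) if p == label and t == label)
    n_pred = sum(1 for p in pred if p == label)
    n_target = sum(1 for t in target if t == label)
    union = n_pred + n_target - inter
    if union == 0:
        # Label absent from both masks: scored as a perfect match here,
        # which is a convention chosen for this sketch.
        return 1.0, 1.0
    return 2.0 * inter / (n_pred + n_target), inter / union


# Toy fully annotated mask, flattened to one dimension.
full = [0, 1, 1, 2, 3, 4, 4, 5]

# Two simulated partially annotated versions of the same volume.
dataset_a = simulate_partial(full, ["liver", "lungs", "kidneys"])
dataset_b = simulate_partial(full, ["bones", "bladder"])

# Toy prediction covering all five labels, scored against the full mask.
pred = [0, 1, 0, 2, 3, 4, 4, 4]
liver_dice, liver_iou = dice_and_iou(pred, full, LABELS["liver"])
bones_dice, bones_iou = dice_and_iou(pred, full, LABELS["bones"])
```

Averaging such per-label scores over organs and volumes yields summary figures of the kind reported in the abstract, although the exact averaging scheme used by the authors is not stated here.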

Copyright information

© 2024 The Author(s), exclusively licensed to Springer Fachmedien Wiesbaden GmbH, part of Springer Nature

About this paper

Cite this paper

Wang, T., Liu, C., Rist, L., Maier, A. (2024). Multi-organ Segmentation in CT from Partially Annotated Datasets using Disentangled Learning. In: Maier, A., Deserno, T.M., Handels, H., Maier-Hein, K., Palm, C., Tolxdorff, T. (eds) Bildverarbeitung für die Medizin 2024. BVM 2024. Informatik aktuell. Springer Vieweg, Wiesbaden. https://doi.org/10.1007/978-3-658-44037-4_76

DOI: https://doi.org/10.1007/978-3-658-44037-4_76

Publisher Name: Springer Vieweg, Wiesbaden

Print ISBN: 978-3-658-44036-7

Online ISBN: 978-3-658-44037-4

eBook Packages: Computer Science and Engineering (German Language)