Abstract

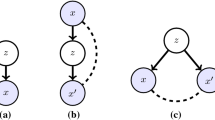

Using the theory of proper scoring rules, we design a family of noise-contrastive estimation (NCE) methods that are tractable for latent variable models. Both terms in the underlying NCE loss, the one using data samples and the one using noise samples, can be lower-bounded as in variational Bayes; we therefore call this family of losses fully variational noise-contrastive estimation. Variational autoencoders are a particular member of this family and can therefore also be understood as separating real data from synthetic samples using an appropriate classification loss. We further discuss other instances in this family of fully variational NCE objectives and indicate differences in their empirical behavior.
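For orientation, the two-term structure of an NCE loss can be sketched with the standard binary (logistic) NCE objective, in which a classifier with logit log p_model(x) - log p_noise(x) separates data samples from noise samples. This is a minimal illustration and not the fully variational objective of this paper: it has no latent variables, and the one-dimensional Gaussian model, the Gaussian noise distribution, and all function names are assumptions made for the example.

```python
import numpy as np

def log_normal(x, mean, std):
    """Log-density of a 1-D Gaussian (illustrative model and noise family)."""
    return -0.5 * ((x - mean) / std) ** 2 - np.log(std) - 0.5 * np.log(2.0 * np.pi)

def softplus(t):
    """Numerically stable log(1 + exp(t))."""
    return np.logaddexp(0.0, t)

def nce_loss(x_data, x_noise, log_model, log_noise):
    """Binary NCE loss with equal weighting of data and noise samples.

    The classifier logit is h(x) = log_model(x) - log_noise(x).
    Data term:  -log sigmoid(h(x))      = softplus(-h(x)) on data samples.
    Noise term: -log(1 - sigmoid(h(x))) = softplus(h(x))  on noise samples.
    """
    h_data = log_model(x_data) - log_noise(x_data)
    h_noise = log_model(x_noise) - log_noise(x_noise)
    data_term = np.mean(softplus(-h_data))
    noise_term = np.mean(softplus(h_noise))
    return data_term + noise_term

# Assumed toy setup: data from N(1, 1), noise from N(0, 2^2).
rng = np.random.default_rng(0)
x_data = rng.normal(1.0, 1.0, size=5000)
x_noise = rng.normal(0.0, 2.0, size=5000)

def log_noise(x):
    return log_normal(x, 0.0, 2.0)

# A model matching the data distribution versus a badly misplaced one.
loss_true = nce_loss(x_data, x_noise, lambda x: log_normal(x, 1.0, 1.0), log_noise)
loss_wrong = nce_loss(x_data, x_noise, lambda x: log_normal(x, -2.0, 1.0), log_noise)
# A model identical to the noise gives logit 0 everywhere, hence loss 2 log 2.
loss_chance = nce_loss(x_data, x_noise, log_noise, log_noise)

print(loss_true, loss_wrong, loss_chance)
```

In this toy setup the model that matches the data distribution attains a lower loss than the misplaced model, and a model equal to the noise distribution yields the chance-level value 2 log 2, since the classifier cannot distinguish the two sample sets.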


Notes

1. We omit the possibility of using general Bernoulli random variables (RVs) for notational simplicity.

2. For brevity we use sums to denote marginalization over RVs, but these sums should always be understood as the appropriate Lebesgue integrals.

3. If we use unnormalized models \(p_\theta^0\), then Eq. 37 is bounded by \(Z(\theta)\).

4. This is only necessary for continuous latent variables, as pmfs are always in [0, 1].
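The distinction drawn in the last note can be checked numerically: a probability mass function never exceeds 1, whereas a probability density can. The sketch below is only an illustration of that fact; the narrow Gaussian and the three-state pmf are assumed examples and do not come from the paper.

```python
import math

def normal_pdf(x, mean, std):
    """Density of a 1-D Gaussian evaluated at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

# A narrow Gaussian evaluated at its mean: the density is well above 1.
peak_density = normal_pdf(0.0, 0.0, 0.1)

# An assumed three-state pmf: every entry lies in [0, 1].
pmf = [0.7, 0.2, 0.1]

print(peak_density, max(pmf))
```

Here the density at the mode is roughly 3.99, so any argument that relies on values lying in [0, 1] holds automatically for discrete latent variables but needs extra care for continuous ones.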


Acknowledgement

This work was partially supported by the Wallenberg AI, Autonomous Systems and Software Program (WASP) funded by the Knut and Alice Wallenberg Foundation.


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zach, C. (2023). Fully Variational Noise-Contrastive Estimation. In: Gade, R., Felsberg, M., Kämäräinen, JK. (eds) Image Analysis. SCIA 2023. Lecture Notes in Computer Science, vol 13886. Springer, Cham. https://doi.org/10.1007/978-3-031-31438-4_12


DOI: https://doi.org/10.1007/978-3-031-31438-4_12

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-31437-7

Online ISBN: 978-3-031-31438-4

eBook Packages: Computer Science, Computer Science (R0)