Abstract

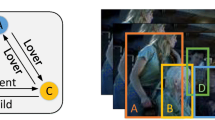

Recognizing social relationships between multiple characters in videos can help intelligent systems serve human society better. Previous studies mainly classify relationships from still images and ignore video as an important data source. With the growth of multimedia, video-based methods for social relationship recognition have gradually emerged. However, those methods focus either only on logical reasoning across multiple characters or only on the direct interaction within each character pair. To address this, inspired by the rules of interpersonal social communication, we propose Overall-Distinctive GCN (OD-GCN) to recognize the relationships of multiple characters in videos. Specifically, we first construct an overall-level heterogeneous character graph with two types of edges and rich node representation features to capture the implicit relationships among all characters. Then, we design an attention module to find the mentioned nodes for each character pair from feature sequences fused with temporal information. Further, we build distinctive-level graphs to focus on the interaction between two characters. Finally, we integrate multimodal global features to classify relationships. We conduct experiments on the MovieGraphs dataset and validate the effectiveness of the proposed model.
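The abstract does not give the layer equations or name the two edge types, so the following is only an illustrative sketch of a generic graph convolution over a graph with several edge types, not the OD-GCN implementation. It assumes one weight matrix per edge type, row-normalized adjacency with self-loops, and a ReLU activation; the edge-type names `type_a` and `type_b`, the toy features, and all function names are hypothetical.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]


def normalize_adjacency(num_nodes: int, edges: List[Tuple[int, int]]) -> Matrix:
    """Row-normalized adjacency matrix with self-loops, built from undirected edges."""
    adj = [[1.0 if i == j else 0.0 for j in range(num_nodes)] for i in range(num_nodes)]
    for src, dst in edges:
        adj[src][dst] = 1.0
        adj[dst][src] = 1.0
    return [[value / sum(row) for value in row] for row in adj]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def hetero_gcn_layer(
    features: Matrix,
    edges_by_type: Dict[str, List[Tuple[int, int]]],
    weights_by_type: Dict[str, Matrix],
) -> Matrix:
    """One propagation step: ReLU of the sum over edge types t of A_t X W_t."""
    num_nodes = len(features)
    out_dim = len(next(iter(weights_by_type.values()))[0])
    total = [[0.0] * out_dim for _ in range(num_nodes)]
    for edge_type, edges in edges_by_type.items():
        adj = normalize_adjacency(num_nodes, edges)
        message = matmul(matmul(adj, features), weights_by_type[edge_type])
        total = [
            [t + m for t, m in zip(t_row, m_row)]
            for t_row, m_row in zip(total, message)
        ]
    return [[max(0.0, value) for value in row] for row in total]


# Toy example (assumed data): three character nodes with 2-d features.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
edges_by_type = {"type_a": [(0, 1)], "type_b": [(1, 2)]}  # hypothetical edge types
identity = [[1.0, 0.0], [0.0, 1.0]]
weights_by_type = {"type_a": identity, "type_b": identity}

updated = hetero_gcn_layer(features, edges_by_type, weights_by_type)
```

In this sketch each edge type contributes its own message, so a node connected to different neighbors under different edge types aggregates them separately before the nonlinearity. In practice the weights would be learned rather than fixed to the identity.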

Acknowledgments

This work is supported by the NSFC-General Technology Basic Research Joint Funds under Grant U1936220, the National Natural Science Foundation of China under Grant 61972047, and the National Key Research and Development Program of China under Grant 2018YFC0831500.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Hu, Y., Cao, C., Li, F., Yan, C., Qi, J., Wu, B. (2023). Overall-Distinctive GCN for Social Relation Recognition on Videos. In: Dang-Nguyen, DT., et al. MultiMedia Modeling. MMM 2023. Lecture Notes in Computer Science, vol 13833. Springer, Cham. https://doi.org/10.1007/978-3-031-27077-2_5

DOI: https://doi.org/10.1007/978-3-031-27077-2_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-27076-5

Online ISBN: 978-3-031-27077-2

eBook Packages: Computer Science, Computer Science (R0)