Abstract

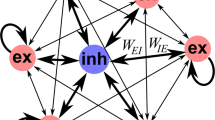

Associative memory has been a prominent candidate for the computation performed by massively recurrent neocortical networks. Attractor networks implementing associative memory have offered mechanistic explanations for many cognitive phenomena. However, attractor memory models are typically trained on orthogonal or random patterns to avoid interference between memories, which makes them impractical for naturally occurring, complex, correlated stimuli such as images. We approach this problem by combining a recurrent attractor network with a feedforward network that learns distributed representations using an unsupervised Hebbian-Bayesian learning rule. The resulting network model incorporates many known biological properties, including unsupervised learning, Hebbian plasticity, sparse distributed activations, sparse connectivity, and columnar and laminar cortical architecture. We evaluate the synergistic effects of the feedforward and recurrent network components in complex pattern recognition tasks on the MNIST handwritten digits dataset. We demonstrate that the recurrent attractor component implements associative memory when trained on the feedforward-driven internal (hidden) representations. The associative memory is also shown to perform prototype extraction from the training data and to make the representations robust to severely distorted input. We argue that several aspects of the proposed integration of feedforward and recurrent computations are particularly attractive from a machine learning perspective.
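To make the notion of attractor-based associative memory concrete, the following is a minimal sketch of a classic Hopfield-style network with outer-product Hebbian weights. It is not the Hebbian-Bayesian model described in the abstract and has no feedforward component. It only illustrates the regime the abstract contrasts against: a recurrent network that stores orthogonal patterns and restores one of them from a distorted cue. The helper names (`square_wave`, `train_hebbian`, `recall`, `distort`), the network size, and the number of flipped units are all assumptions chosen for illustration.

```python
import random


def square_wave(n, half_period):
    """Return a +1/-1 pattern that switches sign every `half_period` units."""
    return [1 if (i // half_period) % 2 == 0 else -1 for i in range(n)]


def train_hebbian(patterns):
    """Outer-product (Hebbian) weights with zero self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, state, max_steps=10):
    """Synchronously update all units until the state stops changing."""
    state = list(state)
    for _ in range(max_steps):
        new_state = []
        for i, row in enumerate(w):
            h = sum(wij * sj for wij, sj in zip(row, state))
            # Keep the current value on a tie, otherwise take the sign of h.
            new_state.append(state[i] if h == 0 else (1 if h > 0 else -1))
        if new_state == state:
            break
        state = new_state
    return state


def distort(pattern, n_flips, rng):
    """Flip the sign of `n_flips` distinct, randomly chosen units."""
    flipped = list(pattern)
    for i in rng.sample(range(len(pattern)), n_flips):
        flipped[i] = -flipped[i]
    return flipped


N = 64  # assumed network size
# Three mutually orthogonal patterns (square waves with different periods).
patterns = [square_wave(N, hp) for hp in (32, 16, 8)]
weights = train_hebbian(patterns)

rng = random.Random(0)
cue = distort(patterns[0], 8, rng)  # corrupt 8 of the 64 units
restored = recall(weights, cue)

print("units wrong in cue:", sum(a != b for a, b in zip(cue, patterns[0])))
print("units wrong after recall:", sum(a != b for a, b in zip(restored, patterns[0])))
```

With orthogonal patterns the crosstalk between stored memories stays small, so the distorted cue falls back into the attractor of the stored pattern. Correlated inputs such as raw images break this assumption, which is the limitation the abstract sets out to address by training the attractor network on learned hidden representations instead.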

Funding for this work was received from the Swedish e-Science Research Centre (SeRC) and the European Commission H2020 program. The authors gratefully acknowledge the HPC RIVR consortium (www.hpc-rivr.si) and EuroHPC JU (eurohpcju.europa.eu) for funding this research by providing computing resources of the HPC system Vega at the Institute of Information Science (www.izum.si).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Ravichandran, N.B., Lansner, A., Herman, P. (2023). Brain-like Combination of Feedforward and Recurrent Network Components Achieves Prototype Extraction and Robust Pattern Recognition. In: Nicosia, G., et al. Machine Learning, Optimization, and Data Science. LOD 2022. Lecture Notes in Computer Science, vol 13811. Springer, Cham. https://doi.org/10.1007/978-3-031-25891-6_37

DOI: https://doi.org/10.1007/978-3-031-25891-6_37

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-25890-9

Online ISBN: 978-3-031-25891-6

eBook Packages: Computer Science, Computer Science (R0)