Abstract

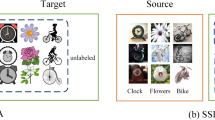

This paper studies a new, practical, but challenging problem called Class-Incremental Unsupervised Domain Adaptation (CI-UDA), in which the labeled source domain contains all classes, but the classes in the unlabeled target domain arrive sequentially. The problem poses two difficulties. First, the source and target label sets are inconsistent at each time step, which makes accurate domain alignment difficult. Second, target classes from previous steps are unavailable at the current step, which leads to forgetting of previously learned knowledge. To address this problem, we propose a novel Prototype-guided Continual Adaptation (ProCA) method consisting of two strategies. 1) Label prototype identification: we identify target label prototypes by detecting shared classes from the cumulative prediction probabilities of target samples. 2) Prototype-based alignment and replay: based on the identified label prototypes, we align the two domains and force the model to retain previous knowledge. With these two strategies, ProCA effectively adapts the source model to a class-incremental unlabeled target domain. Extensive experiments demonstrate the effectiveness and superiority of ProCA in resolving CI-UDA. The source code is available at https://github.com/Hongbin98/ProCA.git.
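The two strategies can be illustrated with a minimal, dependency-free Python sketch. It is not the ProCA implementation (the linked repository holds that). It assumes softmax outputs from a source-trained classifier. Classes count as shared when their accumulated probability is at least `threshold` times the largest one. Prototypes are mean features of argmax-pseudo-labelled samples, stored in an exponential-moving-average memory. The replay term is plain cross-entropy on the stored prototypes. All function names, the threshold of 0.5 and the momentum of 0.9 are hypothetical, and the domain-alignment loss is not sketched.

```python
import math
from collections import defaultdict


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def detect_shared_classes(probs, threshold=0.5):
    """Accumulate each class's predicted probability over all target samples,
    scale by the largest accumulated value, and keep classes at or above
    `threshold` (assumed rule; the paper's exact criterion may differ)."""
    cumulative = [0.0] * len(probs[0])
    for p in probs:
        for k, pk in enumerate(p):
            cumulative[k] += pk
    top = max(cumulative)
    return {k for k, c in enumerate(cumulative) if c / top >= threshold}


def label_prototypes(features, probs, shared):
    """Mean feature of the target samples pseudo-labelled (argmax) as each shared class."""
    sums, counts = {}, defaultdict(int)
    for f, p in zip(features, probs):
        k = max(range(len(p)), key=p.__getitem__)
        if k not in shared:
            continue  # samples predicted as non-shared classes are ignored
        acc = sums.setdefault(k, [0.0] * len(f))
        for i, v in enumerate(f):
            acc[i] += v
        counts[k] += 1
    return {k: [v / counts[k] for v in acc] for k, acc in sums.items()}


def update_memory(memory, new_protos, momentum=0.9):
    """Hypothetical prototype memory: EMA update for classes already stored,
    plain insertion for classes seen for the first time."""
    for k, proto in new_protos.items():
        if k in memory:
            memory[k] = [momentum * m + (1 - momentum) * p
                         for m, p in zip(memory[k], proto)]
        else:
            memory[k] = list(proto)
    return memory


def prototype_replay_loss(memory, classify):
    """Mean cross-entropy of the current classifier on stored prototypes, each
    labelled with its own class id, so earlier target classes keep being rehearsed."""
    loss = 0.0
    for k, proto in memory.items():
        loss -= math.log(softmax(classify(proto))[k])
    return loss / len(memory)


# Toy step: a 4-class source model, but this target step only contains classes 0 and 1.
logits = [[4.0, 0.0, 0.0, 0.0], [3.0, 0.5, 0.0, 0.0],
          [0.0, 4.0, 0.0, 0.0], [0.5, 3.0, 0.0, 0.0]]
features = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0], [0.2, 0.8]]
probs = [softmax(z) for z in logits]

shared = detect_shared_classes(probs)
memory = update_memory({}, label_prototypes(features, probs, shared))
# Hypothetical linear classifier acting on the 2-d features.
loss = prototype_replay_loss(memory, lambda f: [10 * f[0], 10 * f[1], 0.0, 0.0])
```

Classes 2 and 3 receive little cumulative probability from target samples, so they are filtered out here. Only prototypes for classes 0 and 1 enter the memory. At later steps, `update_memory` keeps earlier prototypes available to `prototype_replay_loss` even though their target samples are no longer accessible.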

H. Lin, Y. Zhang and Z. Qiu contributed equally.



Acknowledgements

This work was partially supported by the National Key R&D Program of China (No. 2020AAA0106900), the National Natural Science Foundation of China (NSFC) 62072190, and the Program for Guangdong Introducing Innovative and Entrepreneurial Teams 2017ZT07X183.


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lin, H. et al. (2022). Prototype-Guided Continual Adaptation for Class-Incremental Unsupervised Domain Adaptation. In: Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T. (eds) Computer Vision – ECCV 2022. ECCV 2022. Lecture Notes in Computer Science, vol 13693. Springer, Cham. https://doi.org/10.1007/978-3-031-19827-4_21


DOI: https://doi.org/10.1007/978-3-031-19827-4_21


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19826-7

Online ISBN: 978-3-031-19827-4

eBook Packages: Computer Science, Computer Science (R0)