Abstract

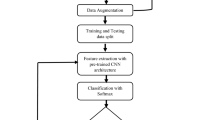

The Dynamic Vision Sensor (DVS) is a neuromorphic sensor. Compared with traditional cameras, DVS-based event cameras offer high temporal resolution, low power consumption, reduced motion blur, and low data redundancy. These properties make them more suitable for computer vision tasks such as gesture recognition. However, adapting traditional algorithms to DVS-based computer vision tasks appears to have unsatisfactory limitations. This paper implements a lightweight gesture recognition system using a Liquid State Machine (LSM), which can take the events generated by a DVS directly as input. To improve the performance of the LSM, we use a heuristic search algorithm to find an improved parameter configuration. Our system achieves 98.42% accuracy on the DVS128 Gesture Dataset. It also contains 90% fewer parameters than Inception 3D, which achieves a precision of 99.62% on the same dataset. The system encodes and compresses the event stream into event frames and uses a Convolutional Neural Network (CNN) to extract features from the frames. It then sends the extracted features to reservoirs to perform gesture recognition.
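The processing pipeline described in the abstract runs from events to frames, then to features, and then to the reservoir. It can be illustrated with a minimal, stdlib-only sketch. This is not the authors' implementation, and it rests on several assumptions. Events are taken to be `(t, x, y, polarity)` tuples with polarity in {0, 1}. The CNN feature extractor is replaced by simple spatial sum pooling. The reservoir is a toy leaky integrate-and-fire network. All names and parameters (`events_to_frames`, `LIFReservoir`, `p_conn`, `leak`, `threshold`, `steps_per_frame`) are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical event format: (timestamp_us, x, y, polarity), polarity in {0, 1}
Event = Tuple[float, int, int, int]


def events_to_frames(events: Sequence[Event], duration_us: float, num_frames: int,
                     sensor_size: int = 128, pool: int = 4) -> List[List[float]]:
    """Bin events into num_frames equal time windows and sum-pool pixels.

    Hypothetical stand-in for the paper's encoding + CNN stage: each frame is a
    flat vector of 2 polarity channels x (sensor_size // pool) ** 2 cells.
    Assumes timestamps >= 0 and 0 <= x, y < sensor_size.
    """
    side = sensor_size // pool
    frames = [[0.0] * (2 * side * side) for _ in range(num_frames)]
    for t, x, y, p in events:
        # Clamp so an event at exactly duration_us lands in the last window
        k = min(int(t / duration_us * num_frames), num_frames - 1)
        idx = p * side * side + (y // pool) * side + (x // pool)
        frames[k][idx] += 1.0
    return frames


class LIFReservoir:
    """Toy leaky integrate-and-fire reservoir; all parameters are illustrative."""

    def __init__(self, n_in: int, n_res: int = 100, p_conn: float = 0.1,
                 w_in: float = 1.0, w_rec: float = 0.5, leak: float = 0.9,
                 threshold: float = 1.0, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.leak, self.threshold = leak, threshold
        # Sparse random input weights in [0, w_in)
        self.w_in = [[rng.uniform(0.0, w_in) if rng.random() < p_conn else 0.0
                      for _ in range(n_in)] for _ in range(n_res)]
        # Sparse recurrent weights with random sign (excitatory or inhibitory), no self-loops
        self.w_rec = [[rng.choice((-w_rec, w_rec)) if i != j and rng.random() < p_conn else 0.0
                       for j in range(n_res)] for i in range(n_res)]

    def run(self, frames: Sequence[Sequence[float]], steps_per_frame: int = 5) -> List[int]:
        """Drive the reservoir with a sequence of input vectors; return spike counts."""
        n = len(self.w_rec)
        v = [0.0] * n       # membrane potentials
        spikes = [0] * n    # spikes emitted on the previous time step
        counts = [0] * n    # total spikes per neuron, used as the "liquid state"
        for x in frames:
            # Each frame is held constant for steps_per_frame simulation steps
            drive = [sum(w * xi for w, xi in zip(row, x)) for row in self.w_in]
            for _ in range(steps_per_frame):
                rec = [sum(w * s for w, s in zip(row, spikes)) for row in self.w_rec]
                new_spikes = [0] * n
                for i in range(n):
                    v[i] = self.leak * v[i] + drive[i] + rec[i]
                    if v[i] >= self.threshold:
                        new_spikes[i] = 1
                        counts[i] += 1
                        v[i] = 0.0  # reset membrane potential after a spike
                spikes = new_spikes
        return counts
```

In an LSM, the spike-count vector returned by `run` would serve as the liquid state. That state would then be passed to a separately trained readout, such as a linear classifier, to predict the gesture class. Reservoir settings such as `p_conn`, `leak`, and `threshold` are the kind of values a heuristic parameter search like the one mentioned in the abstract might tune.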

Acknowledgement

This work is funded by the National Key R&D Program of China [grant number 2018YFB2202603].

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

**ao, X. et al. (2022). Dynamic Vision Sensor Based Gesture Recognition Using Liquid State Machine. In: Pimenidis, E., Angelov, P., Jayne, C., Papaleonidas, A., Aydin, M. (eds) Artificial Neural Networks and Machine Learning – ICANN 2022. ICANN 2022. Lecture Notes in Computer Science, vol 13531. Springer, Cham. https://doi.org/10.1007/978-3-031-15934-3_51

DOI: https://doi.org/10.1007/978-3-031-15934-3_51

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-15933-6

Online ISBN: 978-3-031-15934-3

eBook Packages: Computer Science, Computer Science (R0)