Abstract

Artificial Intelligence (AI) systems share complex characteristics, including opacity, that often do not allow for transparent reasoning behind a given decision. As the use of Machine Learning (ML) systems increases rapidly in decision-making contexts, the inability to understand why and how decisions were made raises concerns about possible discriminatory outcomes that are not in line with shared fundamental values. However, mitigating (human) discrimination through the application of the concept of fairness in ML systems leaves room for further studies in the field. This work gives an overview of the problem of discrimination in Automated Decision-Making (ADM) and assesses the existing literature for possible legal and technical solutions to defining fairness in ML systems.

Notes

- 1.

- 2.

Other principles, such as transparency and explainability, reliability and safety, and accountability, as well as novel principles such as explicability [18] and knowability [33], are equally important fields of study. However, this article focuses mainly on notions of the principle of fairness in ML systems, and its limited scope leaves no room to discuss these other principles individually.

- 3.

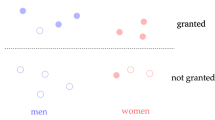

The aforementioned benefit or harm can be quantified through demographic parity if we treat classification into a certain group as a benefit or a harm; for instance, in university admissions, being classified into the admitted group is a benefit.

- 4.

- 5.

- 6.

Explainable AI (XAI) is AI whose decision-making process is understandable by humans, in contrast to the black-box effect present in ML techniques [4].
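As an illustration of note 3, the following is a minimal sketch of how demographic parity might be checked for a binary decision such as university admission. It assumes that a positive decision (1) is the benefit and that each individual belongs to exactly one group; the function names `selection_rates` and `demographic_parity_difference` and the toy data are hypothetical and do not come from the chapter.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (the benefit) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates.

    A value of 0 means every group receives the benefit at the same rate.
    """
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical admissions outcomes (1 = admitted) for two made-up groups.
admitted = [1, 0, 1, 1, 0, 1, 0, 0]
group = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(admitted, group)
gap = demographic_parity_difference(admitted, group)
print(rates)  # per-group admission rates
print(gap)    # demographic parity difference
```

In this toy data, group A is admitted at a rate of 0.75 and group B at 0.25, so the gap is 0.5. How small a gap must be to count as fair is a normative and legal question that the measure itself does not answer.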

References

Allen, R., Masters, D.: Artificial Intelligence: the right to protection from discrimination caused by algorithms, machine learning and automated decision-making. ERA Forum 20(4), 585–598 (2019). https://doi.org/10.1007/s12027-019-00582-w

Allen, R., Masters, D.: Regulating for an equal AI: a new role for equality bodies meeting the new challenges to equality and non-discrimination from increased digitisation and the use of artificial intelligence. Equinet (2020)

Angelopoulos, C.: Sketching the outline of a ghost: the fair balance between copyright and fundamental rights in intermediary third party liability. Info (2015)

Arrieta, A.B., et al.: Explainable artificial intelligence (XAI): concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 58, 82–115 (2020)

Barocas, S., Hardt, M., Narayanan, A.: Fairness and machine learning (2019). http://www.fairmlbook.org

Beauchamp, T.L., Childress, J.F., et al.: Principles of Biomedical Ethics. Oxford University Press, USA (2001)

van Bekkum, M., Borgesius, F.Z.: Digital welfare fraud detection and the Dutch SyRI judgment. Eur. J. Soc. Secur. 23(4), 323–340 (2021)

Bellamy, R.K., et al.: AI fairness 360: an extensible toolkit for detecting and mitigating algorithmic bias. IBM J. Res. Dev. 63(4/5), 1–4 (2019)

Binns, R.: Fairness in machine learning: lessons from political philosophy. In: Conference on Fairness, Accountability and Transparency, pp. 149–159. PMLR (2018)

Buolamwini, J., Gebru, T.: Gender shades: intersectional accuracy disparities in commercial gender classification. In: Conference on Fairness, Accountability and Transparency, pp. 77–91. PMLR (2018)

Dwork, C., Hardt, M., Pitassi, T., Reingold, O., Zemel, R.: Fairness through awareness. In: Proceedings of the 3rd Innovations in Theoretical Computer Science Conference, pp. 214–226 (2012)

Ebers, M., Hoch, V.R., Rosenkranz, F., Ruschemeier, H., Steinrötter, B.: The European Commission’s proposal for an Artificial Intelligence Act–a critical assessment by members of the Robotics and AI Law Society (RAILS). J. 4(4), 589–603 (2021)

European Commission: Regulation (EU) 2016/679 (General Data Protection Regulation) (2016). https://eur-lex.europa.eu/legal-content/EN/ALL/?uri=celex%3A32016R0679. Accessed Mar 2022

European Commission: Regulation (EU) 2016/679 (General Data Protection Regulation) (2016)

European Commission: Ethics of artificial intelligence: statement of the EGE is released (2018). https://ec.europa.eu/info/news/ethics-artificial-intelligence-statement-ege-released-2018-apr-24_en. Accessed Mar 2022

European Commission: Proposal for a regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts. COM/2021/206 final (2021)

Floridi, L.: The Ethics of Information. Oxford University Press (2013)

Floridi, L., Cowls, J.: A unified framework of five principles for AI in society. In: Floridi, L. (ed.) Ethics, Governance, and Policies in Artificial Intelligence. PSS, vol. 144, pp. 5–17. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-81907-1_2

Floridi, L., et al.: AI4People: an ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Mind. Mach. 28(4), 689–707 (2018). https://doi.org/10.1007/s11023-018-9482-5

Gerards, J., Xenidis, R.: Algorithmic discrimination in Europe: challenges and opportunities for gender equality and non-discrimination law. European Commission (2021)

Giovanola, B., Tiribelli, S.: Beyond bias and discrimination: redefining the AI ethics principle of fairness in healthcare machine-learning algorithms. AI & SOCIETY, pp. 1–15 (2022). https://doi.org/10.1007/s00146-022-01455-6

Giovanola, B., Tiribelli, S.: Weapons of moral construction? on the value of fairness in algorithmic decision-making. Ethics Inf. Technol. 24(1), 1–13 (2022). https://doi.org/10.1007/s10676-022-09622-5

Hacker, P.: Teaching fairness to artificial intelligence: existing and novel strategies against algorithmic discrimination under EU law. Common Mark. Law Rev. 55(4), 1143–1185 (2018)

Helberger, N., Araujo, T., de Vreese, C.H.: Who is the fairest of them all? public attitudes and expectations regarding automated decision-making. Comput. Law Secur. Rev. 39, 105456 (2020)

High-Level Expert Group on Artificial Intelligence: Ethics guidelines for trustworthy AI (2019)

Hoehndorf, R., Queralt-Rosinach, N., et al.: Data science and symbolic AI: Synergies, challenges and opportunities. Data Sci. 1(1–2), 27–38 (2017)

Kleinberg, J., Ludwig, J., Mullainathan, S., Rambachan, A.: Algorithmic fairness. AEA Papers Proc. 108, 22–27 (2018)

Köchling, A., Wehner, M.C.: Discriminated by an algorithm: a systematic review of discrimination and fairness by algorithmic decision-making in the context of HR recruitment and HR development. Bus. Res. 13(3), 795–848 (2020). https://doi.org/10.1007/s40685-020-00134-w

Lepri, B., Oliver, N., Letouzé, E., Pentland, A., Vinck, P.: Fair, transparent, and accountable algorithmic decision-making processes. Philos. Technol. 31(4), 611–627 (2018). https://doi.org/10.1007/s13347-017-0279-x

Mittelstadt, B.D., Allo, P., Taddeo, M., Wachter, S., Floridi, L.: The ethics of algorithms: mapping the debate. Big Data Soc. 3(2), 2053951716679679 (2016)

Mittelstadt, B.D., Allo, P., Taddeo, M., Wachter, S., Floridi, L.: The ethics of algorithms: mapping the debate. Big Data Soc. 3(2), 2053951716679679 (2016). https://doi.org/10.1177/2053951716679679

Morison, J., Harkens, A.: Re-engineering justice? robot judges, computerised courts and (semi) automated legal decision-making. Leg. Stud. 39(4), 618–635 (2019)

Palmirani, M., Sapienza, S.: Big data, explanations and knowability. Ragion pratica 2, 349–364 (2021)

Prins, C.: Digital justice. Comput. Law Secur. Rev. 34(4), 920–923 (2018)

Rajkomar, A., Dean, J., Kohane, I.: Machine learning in medicine. N. Engl. J. Med. 380(14), 1347–1358 (2019)

Rawls, J.: A Theory of Justice. Harvard University Press (1971)

Sachan, S., Yang, J.B., Xu, D.L., Benavides, D.E., Li, Y.: An explainable AI decision-support-system to automate loan underwriting. Expert Syst. Appl. 144, 113100 (2020)

Sales, L.: Algorithms, artificial intelligence and the law. Judicial Rev. 25(1), 46–66 (2020)

Schoeffer, J., Machowski, Y., Kuehl, N.: A study on fairness and trust perceptions in automated decision making (2021). arXiv preprint arXiv:2103.04757

Shin, D., Park, Y.J.: Role of fairness, accountability, and transparency in algorithmic affordance. Comput. Hum. Behav. 98, 277–284 (2019)

Siapka, A.: The ethical and legal challenges of artificial intelligence: the EU response to biased and discriminatory AI. Available at SSRN 3408773 (2018)

Srivastava, M., Heidari, H., Krause, A.: Mathematical notions vs. human perception of fairness: a descriptive approach to fairness for machine learning. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp. 2459–2468 (2019)

Starke, C., Baleis, J., Keller, B., Marcinkowski, F.: Fairness perceptions of algorithmic decision-making: a systematic review of the empirical literature (2021). arXiv preprint arXiv:2103.12016

University of Montreal: Montreal declaration for a responsible development of artificial intelligence (2017). https://www.montrealdeclaration-responsibleai.com/the-declaration. Accessed Mar 2022

Veale, M., Binns, R.: Fairer machine learning in the real world: mitigating discrimination without collecting sensitive data. Big Data Soc. 4(2), 2053951717743530 (2017)

Verma, S., Rubin, J.: Fairness definitions explained. In: 2018 IEEE/ACM International Workshop on Software Fairness (FairWare), pp. 1–7. IEEE (2018)

Wachter, S., Mittelstadt, B., Russell, C.: Why fairness cannot be automated: bridging the gap between EU non-discrimination law and AI. Comput. Law Secur. Rev. 41, 105567 (2021)

Wadsworth, C., Vera, F., Piech, C.: Achieving fairness through adversarial learning: an application to recidivism prediction (2018). arXiv preprint arXiv:1807.00199

Wagner, B., d’Avila Garcez, A.: Neural-symbolic integration for fairness in AI. In: CEUR Workshop Proceedings, vol. 2846 (2021)

Wallach, W., Allen, C.: Moral Machines: Teaching Robots Right from Wrong. Oxford University Press (2008). https://books.google.it/books?id=tMENFHG4CXcC

Wirtz, B.W., Weyerer, J.C., Geyer, C.: Artificial intelligence and the public sector–applications and challenges. Int. J. Public Adm. 42(7), 596–615 (2019)

Zafar, M.B., Valera, I., Gomez Rodriguez, M., Gummadi, K.P.: Fairness beyond disparate treatment & disparate impact: Learning classification without disparate mistreatment. In: Proceedings of the 26th International Conference on World Wide Web, pp. 1171–1180 (2017)

Završnik, A.: Criminal justice, artificial intelligence systems, and human rights. ERA Forum 20(4), 567–583 (2020). https://doi.org/10.1007/s12027-020-00602-0

Žliobaitė, I.: Measuring discrimination in algorithmic decision making. Data Min. Knowl. Disc. 31(4), 1060–1089 (2017). https://doi.org/10.1007/s10618-017-0506-1


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Yousefi, Y. (2022). Notions of Fairness in Automated Decision Making: An Interdisciplinary Approach to Open Issues. In: Kő, A., Francesconi, E., Kotsis, G., Tjoa, A.M., Khalil, I. (eds) Electronic Government and the Information Systems Perspective. EGOVIS 2022. Lecture Notes in Computer Science, vol 13429. Springer, Cham. https://doi.org/10.1007/978-3-031-12673-4_1

DOI: https://doi.org/10.1007/978-3-031-12673-4_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-12672-7

Online ISBN: 978-3-031-12673-4

eBook Packages: Computer Science, Computer Science (R0)