Abstract

Simultaneous recording of electroencephalography (EEG) and functional magnetic resonance imaging (fMRI) has attracted extensive attention and research because it combines the high temporal resolution of EEG with the high spatial resolution of fMRI. However, EEG data recorded in the scanner are easily contaminated by physiological noise, the gradient artifact (GA) and the ballistocardiogram (BCG) artifact. In this paper, a new blind source separation technique based on dictionary learning is proposed to remove the BCG artifact. A dictionary representing the features of both clean EEG signals and the BCG artifact is learned from the original data. The dictionary atoms are then classified according to a set of criteria. Finally, clean EEG signals are obtained as a linear combination of the atoms of the modified dictionary. The proposed method is compared with ICA, AAS and OBS on simulated data and real simultaneous EEG–fMRI data. The results suggest the efficacy and advantages of the proposed method for removing BCG artifacts.

Keywords

- Electroencephalography (EEG)

- Functional magnetic resonance imaging (fMRI)

- Ballistocardiogram

- Dictionary learning

- Signal processing

1 Introduction

With the development of brain science, the simultaneous acquisition of electroencephalography (EEG) and functional magnetic resonance imaging (fMRI) has attracted extensive attention and research. EEG has low spatial resolution but high temporal resolution, whereas fMRI has low temporal resolution but high spatial resolution. Combining the two modalities is therefore very important for studying brain function and the pathogenesis and cognition of mental diseases [1, 2].

However, a serious problem arises when data are recorded in an MRI scanner: EEG signals are easily contaminated by the gradient artifact (GA) and the ballistocardiogram (BCG) artifact [7]. Fortunately, gradient artifacts are time-shift invariant, and their amplitude is more than 100 times that of the EEG signal [3]. Gradient artifacts are therefore easy to discern and can be removed from the EEG signal by average artifact subtraction (AAS) [4]. However, the EEG signals still contain another serious artifact, and the most difficult one to remove: the BCG artifact. The BCG artifact is caused by the Hall effect induced by blood flow and by scalp movement due to blood pulsation, and it mainly overlaps the EEG spectrum in the alpha band (8–13 Hz) and below [12]. BCG artifacts are therefore a major bottleneck to the wide application of simultaneous EEG-fMRI, and their removal is an urgent problem.

To ensure the quality of EEG signals in EEG-fMRI, many methods have been used to remove the BCG artifact. (1) Averaged artifact subtraction (AAS) estimates a BCG artifact template by averaging the EEG intervals that are corrupted by the artifact, and then subtracts the template from the corrupted segments to obtain a clean signal [4]. (2) Independent component analysis (ICA) [5] and (3) principal component analysis (PCA), as used in the optimal basis set (OBS) method [6], separate the original EEG signal into components, identify the artifact components and remove them. The primary challenge with these two methods is defining a consistent criterion for selecting artifact components. There is always a trade-off in the number of components removed: removing too few may leave artifact residue in the EEG signal, whereas removing too many may discard important information in the EEG signal [11].
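The AAS idea in (1) can be illustrated with a minimal NumPy sketch. It assumes a single channel and known cardiac event (R-peak) indices. The function name `aas_correct` and the `pre`/`post` epoch lengths are illustrative and are not taken from [4]. The sketch uses one global average template, whereas many practical implementations average only neighboring epochs. It also assumes that epochs do not overlap.

```python
import numpy as np


def aas_correct(eeg, r_peaks, pre, post):
    """Average artifact subtraction (AAS) for a single channel (illustrative sketch).

    eeg      : 1-D array holding one EEG channel
    r_peaks  : sample indices of cardiac events (assumed to be known)
    pre/post : number of samples taken before/after each event
    Epochs are assumed not to overlap.
    """
    eeg = np.asarray(eeg, dtype=float)
    # Keep only events whose whole epoch lies inside the recording
    events = [r for r in r_peaks if r - pre >= 0 and r + post <= len(eeg)]
    # Stack the artifact-corrupted epochs: shape (n_events, pre + post)
    epochs = np.stack([eeg[r - pre:r + post] for r in events])
    # BCG template = average over all epochs (a global, not sliding, average)
    template = epochs.mean(axis=0)
    cleaned = eeg.copy()
    # Subtract the template from every corrupted segment
    for r in events:
        cleaned[r - pre:r + post] -= template
    return cleaned, template
```

For a signal made only of identical, non-overlapping pulses, the template equals each pulse and the corrected signal is zero. Real BCG varies from beat to beat, which is why a residual always remains with AAS.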

In recent years, dictionary learning has been widely used for denoising in many areas, including image and medical signal processing. Abolghasemi et al. [8] were the first to use dictionary learning to remove the BCG artifact. Their method first learns a sparse dictionary from the given data. The dictionary is then used to model the BCG contribution in the original signals, and the resulting BCG model is subtracted from the original EEG to obtain clean EEG. Their results show that dictionary learning outperforms AAS, OBS and DHT. However, their work did not analyze the learned dictionary itself: its reliability was demonstrated through evaluation indices rather than through the dictionary. The features of the atoms were not fully explained, and the amount of useful EEG information retained in the dictionary for real data was not demonstrated.

In this paper, building on this approach, we propose a new blind source separation technique based on dictionary learning for removing BCG artifacts from EEG data. A dictionary is first learned from the original EEG signal. Because the dictionary contains all features of the signal, all BCG features can be fitted. Then, the atoms that contain only EEG features are identified according to a set of criteria. Finally, the clean EEG signal is obtained as a linear combination of the identified atoms. The proposed method is tested on synthetic and real data and compared with ICA, AAS and OBS, and the results show that it performs better.

2 Method

2.1 Dictionary Learning

Dictionary learning is a machine learning technique that has been widely used in image denoising and classification, medical signal analysis and other fields. It aims to infer a dictionary matrix that gives a sparse representation of the input image or signal. Each column of the dictionary, called an atom, describes a feature of the input data. The method is also called sparse dictionary learning because each signal is represented as a sparse linear combination of atoms [13]. Assume that a dictionary matrix \( \mathbf{D} = [d_{1}, \cdots, d_{K}] \in \mathbb{R}^{n \times K},\; n < K \), has been learned from the input dataset \( \mathbf{X} = [x_{1}, \cdots, x_{m}],\; x_{i} \in \mathbb{R}^{n} \). Each column of the dataset matrix, usually a signal \( x_{i} \), can then be represented as a linear combination of the dictionary atoms, i.e. \( x_{i} = \mathbf{D} s_{i} \). The representations \( s_{i} \), which form the columns of the sparse matrix \( \mathbf{S} = [s_{1}, \cdots, s_{m}] \), must be sufficiently sparse. Learning \( \mathbf{D} \) and \( \mathbf{S} \) is therefore posed as a sparsity-constrained minimization problem, referred to below as problem (1).
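For reference, a common way to write problem (1) follows the K-SVD formulation [19] and the notation above. The constrained form and the sparsity level \( T_{0} \) (the maximum number of non-zero coefficients per signal) are assumptions, not the authors' exact statement:

\[ \min_{\mathbf{D},\,\mathbf{S}} \left\| \mathbf{X} - \mathbf{D}\mathbf{S} \right\|_{F}^{2} \quad \text{s.t.} \quad \left\| s_{i} \right\|_{0} \le T_{0}, \quad i = 1, \ldots, m \]

Replacing the \( \ell_{0} \) constraint with an \( \ell_{1} \) penalty weighted by \( \lambda > 0 \) gives the relaxed form used in online dictionary learning [17], with the atoms usually constrained to unit norm:

\[ \min_{\mathbf{D},\,\mathbf{S}} \left\| \mathbf{X} - \mathbf{D}\mathbf{S} \right\|_{F}^{2} + \lambda \sum_{i=1}^{m} \left\| s_{i} \right\|_{1} \]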

Because minimizing the l0-norm in problem (1) is NP-hard, it is commonly replaced by the l1-norm as the measure of sparsity [17]. A usual procedure to solve the problem alternates between dictionary update and sparse coding: D is solved while S is fixed, then S is solved while D is fixed, until convergence. K-SVD [19], which generalizes K-means and updates each atom with the Singular Value Decomposition (SVD), implements this alternating minimization efficiently. This paper uses K-SVD as the core algorithm for dictionary learning.
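The alternating scheme can be illustrated with a compact NumPy sketch of K-SVD. The sparse coding stage uses orthogonal matching pursuit (OMP), which is one common choice and not necessarily the authors' configuration. The names `omp` and `ksvd`, the initialization from randomly chosen data columns and the fixed iteration count are assumptions made for illustration. A production implementation would add convergence checks and replace unused atoms.

```python
import numpy as np


def omp(D, x, n_nonzero):
    """Orthogonal matching pursuit: greedy l0-constrained sparse code of x."""
    residual = x.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        # Choose the atom most correlated with the current residual
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break
        support.append(j)
        # Least-squares fit of x on all selected atoms
        coef, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ coef
    s = np.zeros(D.shape[1])
    s[support] = coef
    return s


def ksvd(X, n_atoms, n_nonzero, n_iter=10, seed=0):
    """Minimal K-SVD: alternate OMP sparse coding and rank-1 SVD atom updates."""
    rng = np.random.default_rng(seed)
    n, m = X.shape
    # Initialise D with randomly chosen data columns scaled to unit norm
    D = X[:, rng.choice(m, n_atoms, replace=False)].astype(float)
    D /= np.linalg.norm(D, axis=0, keepdims=True) + 1e-12
    for _ in range(n_iter):
        # Sparse coding stage: D fixed, solve each column of S
        S = np.column_stack([omp(D, X[:, i], n_nonzero) for i in range(m)])
        # Dictionary update stage: refine one atom (and its coefficients) at a time
        for k in range(n_atoms):
            users = np.nonzero(S[k])[0]
            if users.size == 0:
                continue
            # Residual without atom k, restricted to the signals that use it
            E = X[:, users] - D @ S[:, users] + np.outer(D[:, k], S[k, users])
            U, sig, Vt = np.linalg.svd(E, full_matrices=False)
            D[:, k] = U[:, 0]
            S[k, users] = sig[0] * Vt[0]
    return D, S
```

Each atom update only touches coefficients that were already non-zero, so the sparsity pattern found by OMP is preserved, and the rank-1 SVD step never increases the reconstruction error.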

2.2 The Proposed Technique

The input to the proposed method is the original signal formed from all 32 EEG channels, with length L. It is segmented into m pieces of length n, and each segment is a piece of continuous EEG signal. Consecutive segments overlap, which increases the amount of input data at the cost of some duplication between segments. This strategy resembles the relationship between a decision tree and a random forest, and it has the advantage of capturing most of the local features in the given sample [8]. Each segment \( x_{i} \) is obtained by applying a selection matrix \( P_{i} \) to the input signal.

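\( P_{i} \) is a binary matrix that selects the n samples of the i-th segment. The proposed method can hence be formulated as a dictionary learning problem of the form (1) over all m segments.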
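The following sketch makes the segmentation concrete under two assumptions: the input is a single vector \( y \in \mathbb{R}^{L} \), and segment i starts at sample i·h for a hop size h < n. The symbols y and h are introduced here for illustration. Under these assumptions each segment is \( x_{i} = P_{i} y \), where row j of \( P_{i} \) holds a single 1 in column i·h + j. Stacking the segments gives \( \mathbf{X} = [P_{1} y, \cdots, P_{m} y] \), to which the dictionary learning problem of Sect. 2.1 is applied. The hypothetical helpers below build \( P_{i} \) explicitly and, equivalently, extract the segments by slicing.

```python
import numpy as np


def selection_matrix(i, n, L, hop):
    """Binary matrix P_i (n x L) that extracts the i-th length-n segment (hypothetical helper)."""
    P = np.zeros((n, L))
    start = i * hop
    # Row j picks sample start + j of the input signal
    P[np.arange(n), start + np.arange(n)] = 1.0
    return P


def segment_signal(y, n, hop):
    """Stack overlapping length-n segments of y as the columns of X (n x m)."""
    L = len(y)
    m = (L - n) // hop + 1  # number of complete segments
    # Slicing gives the same result as P_i @ y without building P_i
    return np.column_stack([y[i * hop:i * hop + n] for i in range(m)])
```

For a 10-sample signal, n = 4 and hop = 2, this yields m = 4 segments that start at samples 0, 2, 4 and 6. Neighboring segments share two samples.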

Solving this problem yields a dictionary D whose atoms capture all the features of the input, including those of both EEG and BCG.

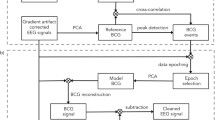

Because the dictionary matrix contains the features of the signals, it has a mathematical form similar to that of Independent Component Analysis (ICA), in which signals are represented as linear combinations of independent components. Unlike ICA, however, dictionary learning allows the number of atoms to exceed the number of EEG channels. This property adds redundant information to the dictionary, in this case mainly clean EEG, but it also improves the sparsity and precision of the feature representation. To improve the result of dictionary learning, we reduce the dimension of the dictionary and sort the learned atoms through atom classification and rearrangement. For the learned redundant dictionary matrix D, principal component analysis (PCA) is first applied to reduce the dimensionality and to determine the number k of leading principal components to retain (Fig. 1).
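A NumPy sketch of this PCA step treats each atom (column of D) as one observation. It assumes that k is the smallest number of components whose cumulative explained variance reaches a threshold. The 90% default and the name `pca_atoms` are hypothetical, because the rule for choosing k is not specified here.

```python
import numpy as np


def pca_atoms(D, var_threshold=0.9):
    """Project dictionary atoms (columns of D) onto their leading principal components.

    var_threshold is an assumed criterion: keep the smallest k whose
    cumulative explained variance reaches this fraction.
    """
    A = D.T                          # one row per atom: shape (K, n)
    A_centered = A - A.mean(axis=0)
    # SVD of the centred atom matrix; rows of Vt are the principal directions
    _, sig, Vt = np.linalg.svd(A_centered, full_matrices=False)
    explained = sig**2 / np.sum(sig**2)
    k = int(np.searchsorted(np.cumsum(explained), var_threshold)) + 1
    k = min(k, len(sig))             # guard against rounding at the threshold
    scores = A_centered @ Vt[:k].T   # shape (K, k): atoms in the reduced space
    return scores, k, explained
```

The returned `scores` are the atom coordinates that a later clustering step can work on, and their first three columns are the coordinates used for a 3-D plot.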

The space spanned by the first k principal components is considered to capture the most important characteristics of the original signal, which are dominated by BCG artifacts. The k-means clustering algorithm is then applied to the reduced dictionary atoms to classify them as BCG atoms or EEG atoms. The clustering result can be plotted in the 3-D space spanned by the first three principal components, as shown in Fig. 2.
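The clustering step can be sketched with plain Lloyd's k-means in NumPy on the reduced atom coordinates, for example the PCA scores of the atoms. The name `kmeans`, the random initialization and the stopping rule are assumptions. The sketch only splits the atoms into two groups. Deciding which group holds the BCG atoms requires the classification criteria, which the sketch does not implement.

```python
import numpy as np


def kmeans(points, n_clusters=2, n_iter=100, seed=0):
    """Plain Lloyd's k-means; returns one cluster label per row of points."""
    rng = np.random.default_rng(seed)
    # Start from randomly chosen data points as centroids
    centroids = points[rng.choice(len(points), n_clusters, replace=False)]
    for _ in range(n_iter):
        # Assign each atom to its nearest centroid (Euclidean distance)
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Recompute centroids; keep the old one if a cluster becomes empty
        new_centroids = np.array([
            points[labels == c].mean(axis=0) if np.any(labels == c) else centroids[c]
            for c in range(n_clusters)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids
```

Once one cluster has been designated as BCG, its atom indices can be collected with `np.flatnonzero(labels == bcg_cluster)`. Here `bcg_cluster` is a hypothetical variable set by whatever criterion is used.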

After the atoms that describe BCG features have been identified, they are set to zero so that no artifact information is reintroduced. The modified dictionary therefore describes the clean EEG signal better, and the clean EEG is obtained as a linear combination of the modified dictionary atoms (Fig. 3).
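The zeroing and reconstruction step can be sketched as follows. The sketch assumes segments of length n taken every `hop` samples from a length-L signal, sparse codes S from the learning stage, and BCG atoms given as integer indices. Averaging the overlapping segment estimates to return to a continuous signal is an assumption, as are the name `reconstruct_clean` and its arguments.

```python
import numpy as np


def reconstruct_clean(D, S, bcg_atoms, n, L, hop):
    """Zero the BCG atoms, rebuild each segment and overlap-average into one signal."""
    bcg_atoms = np.asarray(bcg_atoms, dtype=int)  # integer indices of BCG atoms
    D_mod = D.copy()
    D_mod[:, bcg_atoms] = 0.0        # BCG atoms no longer contribute
    segments = D_mod @ S             # clean estimate of every segment (n x m)
    y_clean = np.zeros(L)
    counts = np.zeros(L)
    for i in range(segments.shape[1]):
        start = i * hop
        y_clean[start:start + n] += segments[:, i]
        counts[start:start + n] += 1
    # Average overlapping contributions; samples never covered stay zero
    return y_clean / np.maximum(counts, 1)
```

As a sanity check, an identity dictionary with no BCG atoms returns the input signal unchanged. Zeroing every atom returns an all-zero signal.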

3 Experiment

In this section, the experiments are divided into two parts: synthetic data and real data. The performance of AAS, OBS, ICA and the proposed dictionary learning method was evaluated on both data types, and the results demonstrate the superiority of the proposed method.

3.1 Data Acquisition

(1) Synthetic Data

The synthetic EEG data used in this paper follow the model proposed by Abolghasemi et al. in 2015 [8]. The synthesized signal consists of two parts: 1/f noise (also known as pink noise) and a BCG artifact. Because of the non-uniform characteristics of the EEG signal, 1/f noise is used to represent the EEG signal [14]. The synthesized BCG artifact is a superposition of cosine waves with different frequencies and amplitudes. White Gaussian noise at 10 dB is added to the final synthesized signal. The component signals and the resulting synthetic signal are shown in Fig. 4.
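A NumPy sketch of this kind of synthetic signal has three parts. Pink noise is obtained by shaping the spectrum of white Gaussian noise, a BCG surrogate is built as a sum of cosines, and white Gaussian noise is added. Interpreting "10 dB" as a signal-to-noise ratio is an assumption, as are all amplitudes and frequencies in the usage lines. The exact parameters of [8] are not reproduced here.

```python
import numpy as np


def pink_noise(n_samples, rng):
    """1/f (pink) noise by shaping the spectrum of white Gaussian noise; unit std."""
    spectrum = np.fft.rfft(rng.standard_normal(n_samples))
    freqs = np.fft.rfftfreq(n_samples)
    scale = np.zeros_like(freqs)              # DC component is dropped
    scale[1:] = 1.0 / np.sqrt(freqs[1:])      # power ~ 1/f, so amplitude ~ 1/sqrt(f)
    noise = np.fft.irfft(spectrum * scale, n=n_samples)
    return noise / np.std(noise)


def synthetic_bcg(t, components):
    """BCG surrogate: sum of cosines given as (amplitude, frequency_hz, phase) tuples."""
    return sum(a * np.cos(2 * np.pi * f * t + p) for a, f, p in components)


def add_awgn(signal, snr_db, rng):
    """Add white Gaussian noise at the requested signal-to-noise ratio (dB)."""
    p_signal = np.mean(signal ** 2)
    p_noise = p_signal / 10 ** (snr_db / 10)
    return signal + rng.normal(0.0, np.sqrt(p_noise), size=signal.shape)


# Usage with hypothetical parameters: 10 s at 250 Hz, EEG much weaker than BCG
rng = np.random.default_rng(0)
fs = 250
t = np.arange(10 * fs) / fs
eeg = 0.2 * pink_noise(t.size, rng)
bcg = synthetic_bcg(t, [(1.0, 1.2, 0.0), (0.5, 2.4, 0.3), (0.3, 3.6, 1.0)])
x = add_awgn(eeg + bcg, 10, rng)
```

The small scale factor on the pink noise mirrors the observation that the simulated EEG is much weaker than the simulated BCG.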

As Fig. 4 shows, the amplitude of the 1/f noise representing the EEG signal is much smaller than that of the simulated BCG signal. The same holds for EEG signals obtained from real simultaneous EEG-fMRI acquisitions.

(2) Public Data

The public data were provided by the FMRIB Centre of the University of Oxford. The test data (FMRIB_Data.set), downloaded from an open-source EEG signal processing website, include EEG signals, BCG artifacts, gradient artifacts and other noise [6].

(3) Clinical Data

The clinical data were provided by the sleep neuroimaging center of Southwest University. Two healthy subjects with no history of neurological or cardiac disease participated in the experiment. The subjects rested in the scanner with their eyes open and closed, without performing any specific task. Of the 32 recorded channels, 30 were EEG channels placed according to the international 10–20 system, and the remaining two were bipolar channels for EMG and ECG. The sampling rate was 5000 Hz.

3.2 Data Preprocessing

Effective suppression of BCG artifacts requires that the EEG signals be free of gradient artifacts, which distort them severely. The collected EEG data must therefore be preprocessed first.

MATLAB (R2017b; MathWorks, Inc., Natick, MA) and EEGLAB 12.0 (Delorme and Makeig, 2004) were used to preprocess the EEG data. First, the FMRIB 2.1 plug-in for EEGLAB [15] was used to remove the gradient artifact from the EEG data recorded during MRI scanning. To save memory, the data were then downsampled to 250 Hz, and all EEG data were bandpass filtered at 0.5–50 Hz. The result is a 32-channel EEG dataset with a sampling frequency of 250 Hz.
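For readers working outside MATLAB/EEGLAB, the resampling and filtering steps can be sketched with SciPy. The sketch assumes that gradient artifacts have already been removed, a channels × samples array, and an integer ratio between the input and output rates. The 4th-order zero-phase Butterworth band-pass and the polyphase resampling are assumptions, and EEGLAB's own filters differ in design.

```python
import numpy as np
from scipy.signal import butter, resample_poly, sosfiltfilt


def preprocess(eeg, fs_in=5000, fs_out=250, band=(0.5, 50.0), order=4):
    """Downsample and band-pass filter EEG (channels x samples) after GA removal."""
    factor = fs_in // fs_out                      # 5000 / 250 = 20
    # resample_poly applies an anti-aliasing FIR filter while decimating
    eeg_ds = resample_poly(np.asarray(eeg, dtype=float), up=1, down=factor, axis=-1)
    # Zero-phase Butterworth band-pass in second-order sections (order is an assumption)
    sos = butter(order, band, btype="bandpass", fs=fs_out, output="sos")
    return sosfiltfilt(sos, eeg_ds, axis=-1)
```

Second-order sections keep the filter numerically stable even though the 0.5 Hz edge is very low relative to the 250 Hz sampling rate.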

3.3 Evaluation

Several evaluation indices are used to quantify each method's ability to suppress BCG artifacts and, more importantly, to retain brain activity. The same indices are used to compare the experimental results of the different methods.

The evaluation covers the following aspects: (a) amplitude changes; (b) power spectral density [16]; (c) improvement in normalized power spectral density ratio (INPS) [9]; (d) peak-to-peak value (PPV) [10]. INPS and PPV are computed as defined in [9] and [10], respectively.
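As an illustration only, one plausible form of a peak-to-peak ratio compares the peak-to-peak amplitude of the heartbeat-locked average before and after correction. Values above 1 mean that the correction reduced the BCG amplitude. This definition, the function name `peak_to_peak_ratio` and its R-peak inputs are assumptions, and the exact formula of [10] may differ.

```python
import numpy as np


def peak_to_peak_ratio(before, after, r_peaks, pre, post):
    """Heartbeat-locked peak-to-peak amplitude before correction divided by after.

    Assumed definition for illustration; not necessarily the PPV formula of [10].
    """
    def locked_average(x):
        x = np.asarray(x, dtype=float)
        # Average all complete epochs around the cardiac events
        epochs = [x[r - pre:r + post] for r in r_peaks
                  if r - pre >= 0 and r + post <= len(x)]
        return np.mean(epochs, axis=0)

    avg_before = locked_average(before)
    avg_after = locked_average(after)
    ptp_before = avg_before.max() - avg_before.min()
    ptp_after = avg_after.max() - avg_after.min()
    return ptp_before / ptp_after
```

For example, if the correction scales the heartbeat-locked waveform down by a factor of 10, the ratio is 10.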

4 Results

Figure 5 shows the results of applying AAS, OBS, ICA and the dictionary learning method to the synthetic signal.

Computed as described in Sect. 3.3, the improvement in normalized power spectral density ratio (INPS) of the proposed method is 13.16 and its peak-to-peak value is 8.44. Both indices are much greater than 1, indicating that dictionary learning plays an important role in suppressing BCG artifacts.

Figure 6 shows a 10-s segment of EEG data from DATA 3 before and after BCG artifact correction with AAS, OBS, ICA and the proposed method. Every BCG removal method attenuates the BCG contribution, so the EEG amplitude decreases strongly. However, the BCG residuals are markedly smaller when the proposed method is used.

Figure 7 shows the averaged power spectral density of the EEG data after the different artifact removal algorithms. The proposed procedure effectively reduces the artifacts in different EEG frequency bands without affecting alpha (8–13 Hz) and beta (13–15 Hz in Fig. 7) activity.

Figure 8 and Table 1 compare the performance of AAS, OBS, ICA and the proposed method in terms of the INPS ratio. The proposed method clearly outperforms the other methods.

Table 2 compares the peak-to-peak ratio of the proposed method with those of AAS, OBS and ICA, and the results confirm the advantages of dictionary learning.

Table 3 lists the averaged INPS and peak-to-peak ratios of the different methods. Except for DATA 3, the performance indices of the dictionary learning method are almost all higher than those of ICA, AAS and OBS. The dictionary learning method studied in this paper can therefore be considered more effective in suppressing BCG artifacts.

5 Conclusion

In this study, a set of new algorithms based on dictionary learning has been developed to remove the BCG artifact from EEG recordings acquired simultaneously with continuous fMRI. The method reduces BCG-related artifacts in EEG-fMRI recordings without additional hardware. Its validity is verified by experiments on synthetic and real data.

There is still considerable room for improvement. The classification criteria need further study. For example, the atoms could be classified by their mutual information with the ECG, selecting the m atoms with the largest mutual information with the ECG signal [18]. At present, most EEG-fMRI studies perform off-line artifact removal, and only a few involve real-time correction, so the proposed method could be applied to real-time detection and removal of artifacts. One limitation of our study is that the results were not compared with data recorded outside the MRI scanner. Specific paradigms involving recordings inside and outside the scanner could be designed to examine how BCG affects EEG signals.

References

[1] Mulert, C., Pogarell, O., Hegerl, U.: Simultaneous EEG-fMRI: perspectives in psychiatry. Clin. EEG Neurosci. 39(2), 61–64 (2008). https://doi.org/10.1177/155005940803900207

[2] Shams, N., Alain, C., Strother, S.: Comparison of BCG artifact removal methods for evoked responses in simultaneous EEG–fMRI. J. Neurosci. Methods 245, 137–146 (2015)

[3] Iannotti, G.R., Pittau, F., Michel, C.M., Vulliemoz, S., Grouiller, F.: Pulse artifact detection in simultaneous EEG-fMRI recording based on EEG map topography. Brain Topogr. 28(1), 21–32 (2015)

[4] Allen, P.J., Polizzi, G., Krakow, K., Fish, D.R., Lemieux, L.: Identification of EEG events in the MR scanner: the problem of pulse artifact and a method for its subtraction. NeuroImage 8(3), 229–239 (1998)

[5] Bénar, C., Aghakhani, Y., Wang, Y., et al.: Quality of EEG in simultaneous EEG–fMRI for epilepsy. Clin. Neurophysiol. 114(3), 569–580 (2003)

[6] Niazy, K., Beckmann, C.F., Iannetti, G.D., et al.: Removal of FMRI environment artifacts from EEG data using optimal basis sets. NeuroImage 28(3), 720–737 (2005)

[7] Hu, L., Zhang, Z.: EEG Signal Processing and Feature Extraction. Springer, Singapore (2019). https://doi.org/10.1007/978-981-13-9113-2

[8] Abolghasemi, V., Ferdowsi, S.: EEG–fMRI: dictionary learning for removal of ballistocardiogram artifact from EEG. Biomed. Signal Process. Control 18, 186–194 (2015)

[9] Ghaderi, F., Nazarpour, K., McWhirter, J.G., et al.: Removal of ballistocardiogram artifacts using the cyclostationary source extraction method. IEEE Trans. Biomed. Eng. 57(11), 2667–2676 (2010)

[10] Mantini, D., Perrucci, M.G., Cugini, S., Ferretti, A., Romani, G.L., Del Gratta, C.: Complete artifact removal for EEG recorded during continuous fMRI using independent component analysis. NeuroImage 34, 598–607 (2007)

[11] Winkler, I., Haufe, S., Tangermann, M.: Automatic classification of artifactual ICA-components for artifact removal in EEG signals. Behav. Brain Funct. 7, Article no. 30 (2011). https://doi.org/10.1186/1744-9081-7-30

[12] de Munck, J.C., van Houdt, P.J., Gonçalves, S.I., van Wegen, E.E.H., Ossenblok, P.P.W.: Novel artefact removal algorithms for co-registered EEG/fMRI based on selective averaging and subtraction. NeuroImage 64, 407–415 (2013)

[13] Quan, Y., Xu, Y., Sun, Y., Huang, Y., Ji, H.: Sparse coding for classification via discrimination ensemble. In: Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), pp. 5839–5847 (2016)

[14] Demanuele, C., James, C.J., Sonuga-Barke, E.J.: Behav. Brain Funct. 3, 62 (2007). https://doi.org/10.1186/1744-9081-3-62

[15] The FMRIB Plug-in for EEGLAB. https://fsl.fmrib.ox.ac.uk/eeglab/fmribplugin/

[16] Dressler, O., Schneider, G., Stockmanns, G., Kochs, E.F.: Awareness and the EEG power spectrum: analysis of frequencies. Br. J. Anaesth. 93, 806–809 (2004). https://doi.org/10.1093/bja/aeh270

[17] Mairal, J., Bach, F., Ponce, J., Sapiro, G.: Online dictionary learning for sparse coding. In: Proceedings of the 26th Annual International Conference on Machine Learning (ICML 2009) (2009)

[18] Liu, Z., de Zwart, J.A., van Gelderen, P., Kuo, L.-W., Duyn, J.: Statistical feature extraction for artifact removal from concurrent fMRI-EEG recordings. NeuroImage 59, 2073–2087 (2012)

[19] Aharon, M., Elad, M., Bruckstein, A.: K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54(11), 4311–4322 (2006)

Acknowledgement

This work was supported by NSFC (61633010, 61671193, 61602140), National Key Research & Development Project (2017YFE0116800), Key Research & Development Project of Zhejiang Province (2020C04009, 2018C04012).

Copyright information

© 2020 IFIP International Federation for Information Processing

About this paper

Cite this paper

Liu, Y., Zhang, J., Zhang, B., Kong, W. (2020). Ballistocardiogram Artifact Removal for Concurrent EEG-fMRI Recordings Using Blind Source Separation Based on Dictionary Learning. In: Shi, Z., Vadera, S., Chang, E. (eds) Intelligent Information Processing X. IIP 2020. IFIP Advances in Information and Communication Technology, vol 581. Springer, Cham. https://doi.org/10.1007/978-3-030-46931-3_17

DOI: https://doi.org/10.1007/978-3-030-46931-3_17

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-46930-6

Online ISBN: 978-3-030-46931-3

eBook Packages: Computer Science, Computer Science (R0)