Abstract

Salient object detection has recently drawn much attention in computer vision such as image compression and object tracking. Currently, various heuristic computational models have been designed. However, extracting the salient objects with a complex background in the image is still a challenging problem. In this paper, we propose a region merging strategy to extract salient region. Firstly, boundary super-pixels are clustered to generate the initial saliency maps based on the prior knowledge that the image boundaries are mostly background. Next, adjacent regions are merged by sorting the multiple feature values of each region. Finally, we get the final saliency maps by merging adjacent or non-adjacent regions by means of the distance from the region to the image center and the boundary length of overlap** regions. The experiments demonstrate that our method performs favorably on three datasets than state-of-art.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Salient object detection is an essential problem in computer vision which aims at highlighting the visually outstanding regions/object/structures from the surrounding background [1]. It has received substantial attention over the last decade due to its wide range of applications in image compression [2], behavior recognition [3] and co-segmentation [4].

According to the human visual systems, salient object detection methods can be divided into two categories: one is a bottom-up method; the other is the top-down methods. In the top-down approaches, the salient object obtained in a scene is always discrepant for different people. Therefore, it is more complex to construct the top-down salient object detection model, so there are few models for salient object detection. On the contrary, the bottom-up approach has attracted much attention and many salient object detection models have been proposed. In the traditional bottom-up methods, the salient object detection based on spatial and frequency domains. In spatial domains, Sun et al. [5] propose a salient object detection method for region merging. Shen et al. [6] propose a unified approach via low-rank matrix recovery. Peng et al. [7] propose a structured matrix decomposition model to increase the distance between the background and the foreground for salient object detection. Feng et al. [20] propose a salient detection method by fusing image color and spatial information to obtain saliency values to separate foreground and background for images. Xu et al. [21] propose a universal edge-oriented framework to get saliency maps. These salient object detection methods mentioned above could basically complete the salient object detection task, but the results are not accurate. Zhao et al. [24] obtain the saliency map by combining sparse reconstruction and energy optimization. Yu et al. [25] construct the quaternion hypercomplex and multiscale wavelet transform to detect the salient object. Guo et al. [26] obtain the saliency map by combining the boundary contrast map and the geodesics-like map. Marcon et al. [27] propose an update to the traditional pipeline, by adding a preliminary filtering stage to detect the salient object. As the demand for application scenarios continues to increase, co-saliency detection method [8] and moving salient object detection method [9] also introduced into the field of salient object detection, but there are also challenges for its application.

In order to improve the accuracy of the salient object detection results, several frequency domain methods have been developed, Hou et al. [10] argue that a salient detection model for spectral residuals. Guo et al. [11] use the spectral phase diagram of the quaternion Fourier transform to detect the spatiotemporal salient object. Achanta et al. [12] exploit color and luminance features detect the salient region by frequency tuning method. Early studies of salient object detection models are designed to predict human eye fixation. It mainly detects salient points where people look rather than salient regions [13]. In [14] for each color subband, the saliency maps are obtained by an inverse DWT over a set of scale-weighted center-surround responses, where the weights are derived from the high-pass wavelet coefficients of each level. Spectrum analysis is easy to understand, computationally efficient and effective, but the relevant interpretation of the frequency domain is unclear.

Nowadays, the deep learning based methods have been applied to saliency detection. Li et al. [22] propose a CNN based saliency detection methods by fusing deep contrast feature and handcrafted low-level features to acquire saliency maps. Li et al. [23] use optimized convolutional neural network extract the high-level features, and then the high-level features and low-level features were fused to obtain the fusion features. Finally, the fusion features were input into SVM to separate the foreground and background for images. However, these two methods have high time complexity.

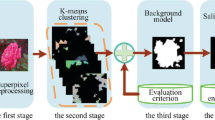

This paper focuses on the incomplete salient region and unclear boundary of the salient regions in the detection results, we propose a super-pixel level salient object detection method based on clustering and region merging strategy to improve the performance of detecting the salient object in a scene. The work flow of our salient object detection framework is described in Fig. 1. Firstly, the SLIC [15] (Simple Linear Iterative Cluster) algorithm is used for super-pixel segmentation. Secondly, generate the initial saliency maps. This step involves two processes: the super-pixels lie in image boundaries are clustered and calculate the distance between the remaining super-pixels and the classes centers. Color background maps and spatial relationship background maps are calculated by calculating the distance between the super-pixels and the boundary class. Then the color background maps and the spatial relation background maps are combined linearly to produce the initial saliency map. Thirdly, the final saliency maps obtained after two steps regions merging. The first stage is based on the initial saliency map where adjacent regions are merged by taking into account color features and spatial information. The second stage is to calculate the saliency value of the regions and merge the regions according to the saliency value. The main contributions are summarized as follows:

-

(1)

We cluster the boundary super-pixels to generate the initial saliency maps based on the prior knowledge that the image boundaries are mostly background.

-

(2)

A two-stage optimization strategy is proposed to further optimize the saliency. In the first stage, we merge adjacent small regions with identical or similar features. In the second stage, we merge adjacent or no-adjacent regions according to the ranking of regional saliency values.

2 Construction of Initial Saliency Map

Given an image \( I \), we represent it by \( N \) super-pixels produced by SLIC. Then \( M \) different classes are generated by clustering boundary super-pixels in the CIELab color space with K-means algorithm. We establish an initial saliency map with three steps: (i) Constructing color background maps. (ii) Constructing spatial correlation background maps. (iii) Constructing initial saliency maps. The color background maps and spatial background maps are merged to construct initial saliency maps. The specific process is as follows.

2.1 Constructing Color Background Map

In this paper, we first use the k-means algorithm to cluster the boundary super-pixels into \( M \) classes. Next, calculate the feature gap between other super-pixels and the \( M \) different classes, this can measure the probability that the super-pixels to be the object regions. In this paper, we build \( M \) background maps \( C_{m} \) based on the number of \( M \) clusters. \( C_{m} \) is defined as the color feature difference between super-pixels \( i(i = 1,2, \cdots ,N) \) and the boundary classed \( m(m = 1,2, \cdots ,M) \). The class maps can be formulated as follows.

where \( c_{m} \) and \( c_{i} \) denotes the color features of each boundary class and super-pixel respectively. \( |c_{m} ,c_{i} | \) denotes the Euclidean distance between each super-pixel and boundary classes in CIELab color space, \( \alpha_{1} ,\eta \) are balance factors, \( p_{m} (m = 1,2, \cdots ,M) \) is the number of super-pixels in different boundary classes. Through lots of experiments, the detection results are more accurate when \( \alpha_{1} = 0.2 \), \( \eta = 10 \), \( M{ = 3} \). The three background maps generated in this paper are shown in Fig. 2.

2.2 Constructing Spatial Correlation Background Map

In salient object detection, distance is always used to measure the spatial correlation between regions. The closer the distance between the two regions is, the greater the degree of influence and association in the space, and vice versa [16]. So we construct the spatial correlation saliency maps between each super-pixel and the boundary class by means of the spatial distance relationship between two regions. We defined \( S_{m} \) as the spatial distance between each super-pixel and class \( m(m = 1,2, \cdots ,M) \), so the \( S_{m} \) can be formulated as follows.

where \( s_{i} ,s_{m} \) denotes the spatial location of super-pixel \( i \) and \( m \) respectively. \( \alpha_{2} \) is control parameter, and it is learned by experience that setting \( \alpha_{2} = 1 \) is most suitable. We calculate the distance between the super-pixels of the first class of background area and the other super-pixels to obtain the 1st class map. In the experiment, we calculated the spatial correlation background maps of three class of background areas, in which the bright part represents the salient area and the dark area represents the no-salient area. The boundary clustering results are shown in Fig. 3.

2.3 Constructing Initial Saliency Map

As shown in the first map of Fig. 4, because there are two black objects, it is very difficult to distinguish their saliency by means of the color feature. But it can be seen from the map that the aircraft is closer to the center of the image than the tree, so we can use spatial distance to enhance the saliency of the aircraft and weaken the saliency of the tree. Let \( S_{m} \) denotes the intensity factor to restrict the \( C_{m} \). The initial saliency map can be written as follows.

The merging result as shown in the third map of Fig. 4. We can see from the result map that the brightness of the aircraft is higher than the tree. That is, the saliency of aircraft is strengthened and the saliency of the tree is weakened. Therefore, the image merging method is effective.

3 Optimization of Merger Strategy

The object region can be revealed after merging, but we can see from the third map in Fig. 4, many background regions are also enhanced as the object regions are brightened. In order to optimize the rough initial saliency maps and detect salient object more accurate, a regional merging model is established in this section, which merges those adjacent background regions with similar features. In this paper, we merge the relevant regions with two stages. Firstly, we merge adjacent small regions with identical or similar features. Secondly, we merge adjacent or non-adjacent regions according to the ranking of regional saliency values.

3.1 The First Phase of the Merging

The relationship tightness of each region with its adjacent regions be calculated first and then merging them according to the degree of the relationship tightness. In this paper, the relationship tightness between regions is represented by color similarity and spatial tightness.

Color Similarity.

Color similarity is defined as the difference in color feature between the super-pixel \( i \) and \( j \), it can be formulated as follows.

where \( c_{i} \) and \( c_{j} \) represent the average color value for super-pixels \( i \) and \( j \) respectively, \( \left\| {c_{i} - c_{j} } \right\| \) is the Euclidean distance between the region \( i \) and adjacent region \( j \).

Spatial Tightness.

The spatial tightness between two regions can measure their degree of association in space. The closer the distance between the two regions is, the greater their spatial tightness. The correlation is measured by the boundary length of the overlap** regions. The longer the length of the boundaries that they co-own is, the greater the degree of association is. Let \( ST(i,j) \), \( S(i,j) \) and \( T(i,j) \) as the spatial tightness, the spatial distance and spatial tightness between the super-pixel \( i \) and adjacent super-pixel \( j \). The formula can be written respectively as follows.

where \( s_{i} ,s_{j} \) denote the spatial location for super-pixel \( i \) and \( j \), \( ||s_{i} - s_{j} || \) is the Euclidean distance between region \( i \) and region \( j \), \( B(i,j) \), \( L(i) \) and \( L(j) \) denote the length of their overlap** boundaries, the length of the boundary of super-pixels \( i \) and \( j \). Finally, we define \( P(i,j) \) as the importance of the region \( j \) to the adjacent region \( i \) by calculating their color similarity and spatial tightness. The region \( i \) and the region \( j \) will be merged if they have higher importance. The importance \( P(i,j) \) can be formulated as follows.

Some small region \( s_{i} \) must be merged into a large region \( s_{j} \) with greater relationship tightness. The merging results are shown in Fig. 5. Through experiments, the detection result is more accurate when \( \omega_{1} = 0.68 \), \( \omega_{2} = 0.32 \).

3.2 The Second Phase of the Merging

Based on the above first merging, it is noted that the majority of background regions are merged. However, there are some non-adjacent or adjacent background regions with greater intensity are not belong to salient regions (as shown in the third map of Fig. 5). Hence, in order to eliminate these background regions, we use \( Sal \) to denote these regional saliency value, utilize the \( Sal \) value to decide whether to merge it with background regions or not. \( Sal \) is influenced by the region area (\( V \)), the distance from regions to image center (\( CS \)) and the overlap** boundary length with image boundaries (\( BL \)). The region with a low value of \( Sal \) is merged with background regions.

Region Area.

Regions with the larger area are more salient than smaller regions, so larger regions will be assigned greater saliency values. The formula can be written as

where \( v \) is the area of each associated object in the image, and \( \hbox{max} (v) \) is the area of the largest associated object region in the first merged maps.

Center Distance.

In general, the center regions of the image more attractive than others, and they are more likely to be salient regions [16]. In this paper, therefore, calculates the region \( Sal \) according to the distance between the regions and the center of the scene.

Where \( nP \) is the number of super-pixel in the region \( P \), \( PC \) is the spatial location of the super-pixels included in the region \( P \), \( R \) is the center position of the image, and \( areaP \) is the area of the region \( P \).

Overlap** Boundary Length.

The longer the overlapped boundary between a region with the image boundaries is, the greater the possibility of being background regions, so \( BL \) can be written as follows.

where \( PB \) is the length of overlapped boundary that between each salient object and image boundaries, and \( RB \) is the total length value of the image boundaries. Hence, the saliency value \( Sal \) is defined as follows.

We merge the small salient objects into the large area by means of the \( Sal \) sorted. When some regions \( Sal \) value is very close, these regions can’t be retained. The results are shown in Fig. 6.

4 Experimental Results and Analysis

We test on ASD, ECSSD and DUT-OMRON datasets. ASD and ECSSD contain 1000 images respectively, the DUT-OMRON dataset contains 5168 images and salient object maps that artificially annotated. Moreover, we compare our method with 5 classic salient detection algorithms. They are SER [17], SS [18], SR [10], SIM [14], FES [19].

4.1 Visually Compare

In Fig. 7, we have selected some representative experimental results from our algorithm and comparison algorithm.

In the first map in Fig. 7, the salient region is very small, and the background region is uniform. Our algorithm result is very close to the GT. There are too many backgrounds are revealed in the SS, SR, SIM, and SER result maps. In the second map, the black object is in sharp contrast with the white background. It can be seen from the map that our algorithm detects more salient object than others, and only a small part is not detected successfully, because we use super-pixel to pre-process image, this small object is segmented into the background, causing it to be viewed as background. In the third and fourth maps, the salient object detected by our algorithm is complete, the boundary is clear. In the fifth map, the background is complex. The salient object detected by our algorithm is complete and the boundary is clear. In the face of complex background, the comparison algorithm will also display the background regions. Although our algorithm will show it in the first stage of the merging, it will be merged with the background in the second stage, which improves the accuracy of our algorithm. The background of the sixth map is complex, with salient background objects and high detection difficulty. Therefore, in the detection results of SS, SR, SIM, and SER, most of the background is revealed, and the salient object is displayed inaccurate. Our algorithm detection results are similar to the GT map and superior to algorithms. The last map is multi-objective images. Therefore, our algorithm is ideal in visual effects than 5 comparison algorithms.

4.2 Objective Evaluations

We evaluate the performance using Precision-recall (P-R) curve and F-measure. The comparison result diagrams are shown as Fig. 8.

Contrast data diagram. The first column denotes recall and precision curves of different methods in ASD dataset, ECSSD dataset and DUT-OMRON dataset (from top to bottom). The second column denotes recall, precision and F-measure value in ASD dataset, ECSSD dataset, DUT-OMRON dataset (from top to bottom).

From (a) and (b), we can see that the numerical line of our algorithm is above the numerical line than other algorithms. This shows that the performance of our algorithm is better than all comparison algorithms. In (d) and (e), the F-measure of our algorithm is higher. In the ECSSD dataset, although the background in the images is more complex, the accuracy and recall of algorithm are also close to 90%, which is much higher than the FES algorithm. The F-measure of the six kinds of algorithms are 0.87, 0.49, 0.20, 0.39, 0.48, and 0.60, respectively. That is, the F-measure of our algorithm is higher than other algorithms. The four data diagrams show that the detection results of our method are more accurate. The images in DUT-OMRON dataset are complex. Many salient objects are small, the background is complex, or there are more than one salient objects, so all algorithms are less effective than 70%. In (c), the curve of our algorithm is higher than other algorithms, but our algorithm curve is lower than the FES algorithm before 0.27. The main reason is that the salient objects in the image are very small and can easily be merged into the background area, which leads to the reduction of the accuracy of our algorithm. The F-measure are 0.55, 0.39, 0.38, 0.31, 0.33, and 0.49 respectively, of which the highest is 0.55 of our algorithm and higher than the FES algorithm which is 0.49. That is to say, our algorithm is superior to the FES algorithm.

In summary, our algorithm can reveal the salient object completely and showing good results.

5 Conclusion

In this paper, a salient object detection model for region merging is proposed. The initial saliency map is obtained by merging the background map, and in order to obtain the final saliency map, effective regions merging model is proposed to optimize the rough initial saliency map. This model makes the optimized saliency map more accurate. Experimental results show that our algorithm is effective and more accurate. In the future work, better merger strategies, such as Bayesian mergers, will be considered during the merge phase.

References

Cheng, M.M., Mitra, N.J., Huang, X., et al.: Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 37(3), 569–582 (2015)

Itti, L.: Automatic foveation for video compression using a neurobiological model of visual attention. IEEE Trans. Image Process. 13(10), 1304–1318 (2004)

Wang, X.F., Qi, C.: A behavior recognition method using salient object detection. J. **’an Jiaotong Univ. (2018)

Li, R., Li, J.P., Song, C.: Research on co-segmentation of image based on salient object detection. Modern Comput. (16), 19–23 (2017)

Sun, F., Qing, K.H., Sun, W., et al.: Image saliency detection based on region merging. J. Comput. Aided Des. Graph. 28(10), 1679–1687 (2016)

Shen, X., Wu, Y.: A unified approach to salient object detection via low rank matrix recovery. In: Computer Vision and Pattern Recognition, pp. 853–860. IEEE (2012)

Peng, H., Li, B., Ling, H., et al.: Salient object detection via structured matrix decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 39(4), 818–832 (2017)

Zhang, D., Fu, H., Han, J., et al.: A review of co-saliency detection algorithms: fundamentals, applications, and challenges. ACM Trans. Intell. Syst. Technol. (TIST) 9(4), 38 (2018)

Yazdi, M., Bouwmans, T.: New trends on moving object detection in video images captured by a moving camera: a survey. Comput. Sci. Rev. 28, 157–177 (2018)

Hou, X., Zhang, L.: Saliency detection: a spectral residual approach. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8 (2007)

Guo, C., Ma, Q., Zhang, L.: Spatio-temporal saliency detection using phase spectrum of quaternion Fourier transform. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8 (2008)

Achanta, R., Hemami, S., Estrada, F., et al.: Frequency-tuned salient region detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1597–1604 (2009)

Zhang, Q., Lin, J., Li, W., Shi, Y., Cao, G.: Salient object detection via compactness and objectness cues. Vis. Comput. 34(4), 473–489 (2017). https://doi.org/10.1007/s00371-017-1354-0

Murray, N., Vanrell, M., Otazu, X., et al.: Saliency estimation using a non-parametric low-level vision model. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 433–440 (2011)

Achanta, R., Shaji, A., Smith, K., et al.: Slic superpixels. EPFL, Technical report 149300, November 2010

Rahtu, E., Kannala, J., Salo, M., Heikkilä, J.: Segmenting salient objects from images and videos. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6315, pp. 366–379. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15555-0_27

Seo, H.J., Milanfar, P.: Static and space-time visual saliency detection by self-resemblance. J. Vis. 9(12), 1–27 (2009)

Hou, X., Harel, J., Koch, C.: Image signature: highlighting sparse salient regions. IEEE Trans. Pattern Anal. Mach. Intell. 34(1), 194–201 (2012)

Rezazadegan Tavakoli, H., Rahtu, E., Heikkilä, J.: Fast and efficient saliency detection using sparse sampling and kernel density estimation. In: Heyden, A., Kahl, F. (eds.) SCIA 2011. LNCS, vol. 6688, pp. 666–675. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-21227-7_62

Feng, L., Wen, P., Miao, Y., et al.: An image saliency detection algorithm based on color and space information. In: 2017 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS). IEEE (2017)

Xu, Q., Wang, F., Gong, Y., et al.: An edge-oriented framework for saliency detection. In: 2017 IEEE 17th International Conference on Bioinformatics and Bioengineering (BIBE). IEEE (2017)

Li, G., Yu, Y.: Visual saliency detection based on multiscale deep CNN features. IEEE Trans. Image Process. 25, 5012–5024 (2016)

Li, H., Chen, J., Lu, H., et al.: CNN for saliency detection with low-level feature integration. Neurocomputing 226, 212–220 (2017)

赵恒, 安维胜, 田怀文. 结合稀疏重构与能量方程优化的显著性检测. 计算机应用研究 (6) (2019)

余映, 吴青龙, 邵凯旋, et al.: 超复数域小波变换的显著性检测. 电子与信息学报 41(9) (2019)

Guo, Y., Liu, Y., Ma, R.: Image saliency detection based on geodesic-like and boundary contrast maps. ETRI J. 41(6), 797–810 (2019)

Marcon, M., Spezialetti, R., Salti, S., Silva, L., Di Stefano, L.: Boosting object recognition in point clouds by saliency detection. In: Cristani, M., Prati, A., Lanz, O., Messelodi, S., Sebe, N. (eds.) ICIAP 2019. LNCS, vol. 11808, pp. 321–331. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-30754-7_32

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 IFIP International Federation for Information Processing

About this paper

Cite this paper

Wei, W., Yang, Y., Wang, W., Zhao, X., Ma, H. (2020). A Salient Object Detection Algorithm Based on Region Merging and Clustering. In: Shi, Z., Vadera, S., Chang, E. (eds) Intelligent Information Processing X. IIP 2020. IFIP Advances in Information and Communication Technology, vol 581. Springer, Cham. https://doi.org/10.1007/978-3-030-46931-3_1

Download citation

DOI: https://doi.org/10.1007/978-3-030-46931-3_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-46930-6

Online ISBN: 978-3-030-46931-3

eBook Packages: Computer ScienceComputer Science (R0)