Abstract

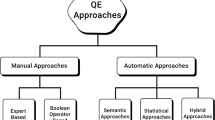

Search engines differ in their modules and parameters; defining the optimal system setting is all the more challenging because of the complexity of a retrieval stream. The main goal of this study is to determine which system components and parameters are the most important in a system setting, and thus which ones should be tuned first. We carry out an extensive analysis of 20,000 different system settings applied to three TREC ad-hoc collections. Our analysis zooms in and out of the data using various data analysis methods such as ANOVA, CART, and data visualization. We found that the query expansion model is the component that most significantly changes system effectiveness, consistently across collections. Zooming in on the queries, we show that the retrieval model becomes the most significant component when only easy queries are considered. The results of our study are directly reusable by system designers and for system tuning.
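The ANOVA-based ranking of components can be sketched as follows. For each component (factor), the sketch computes a one-way F statistic of average precision (AP) across the factor's levels and then ranks components by that statistic. This is a minimal illustration under stated assumptions. The row format, the factor keys `qe` and `rmod`, and the AP values are hypothetical toy data, not results from the study. A one-way test per factor also ignores interactions between components.

```python
from collections import defaultdict
from statistics import mean


def one_way_f(rows, factor, response="ap"):
    """One-way ANOVA F statistic of `response` grouped by the levels of `factor`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[factor]].append(row[response])
    values = [row[response] for row in rows]
    k, n = len(groups), len(values)
    if k < 2 or n <= k:
        raise ValueError("need at least two levels and more runs than levels")
    grand = mean(values)
    level_means = {level: mean(g) for level, g in groups.items()}
    # Between-group sum of squares: distance of each level mean from the grand mean.
    ss_between = sum(len(g) * (level_means[lv] - grand) ** 2 for lv, g in groups.items())
    # Within-group sum of squares: spread of runs around their own level mean.
    ss_within = sum((x - level_means[lv]) ** 2 for lv, g in groups.items() for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def rank_components(rows, factors, response="ap"):
    """Sort factors by decreasing F (larger F = stronger evidence of an effect)."""
    scores = {f: one_way_f(rows, f, response) for f in factors}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical toy runs: each row is one (system setting, AP) observation.
TOY_ROWS = [
    {"qe": "none", "rmod": "bm25", "ap": 0.20},
    {"qe": "none", "rmod": "bm25", "ap": 0.22},
    {"qe": "none", "rmod": "lm", "ap": 0.21},
    {"qe": "none", "rmod": "lm", "ap": 0.23},
    {"qe": "bo1", "rmod": "bm25", "ap": 0.30},
    {"qe": "bo1", "rmod": "bm25", "ap": 0.32},
    {"qe": "bo1", "rmod": "lm", "ap": 0.31},
    {"qe": "bo1", "rmod": "lm", "ap": 0.33},
]

print(rank_components(TOY_ROWS, ["qe", "rmod"]))
```

On these toy rows, `qe` ranks first (F ≈ 120), well ahead of `rmod`. The ranking reflects only the invented numbers above.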

Notes

- 2. A system setting refers to an IR system configured with a retrieval model and an optional query expansion model, together with their parameters.

- 5. We also computed a two-way ANOVA considering the main and interaction effects of the query expansion (QE) and retrieval model (RMod) factors on AP; query expansion is again consistently ranked first across the collections.

- 6. Some combinations are not meaningful and thus were not used (e.g., using 5 documents for query expansion when the expansion model is none).
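Note 6's exclusion of non-meaningful combinations can be sketched as a Cartesian product over component choices followed by a validity filter. The `SystemSetting` fields follow the definition in note 2. The model names, value lists, and the `is_meaningful` rule are illustrative assumptions, not the actual grid or filtering rules used in the study.

```python
from itertools import product
from typing import NamedTuple


class SystemSetting(NamedTuple):
    """A retrieval model plus an optional query expansion model and its parameters."""
    retrieval_model: str
    expansion_model: str  # "none" means no query expansion
    expansion_docs: int   # number of feedback documents used for expansion
    expansion_terms: int  # number of expansion terms added to the query


# Illustrative value lists only (hypothetical, not the study's grid).
RETRIEVAL_MODELS = ["BM25", "DirichletLM", "TF_IDF"]
EXPANSION_MODELS = ["none", "Bo1", "KL"]
EXPANSION_DOCS = [0, 5, 10]
EXPANSION_TERMS = [0, 10, 20]


def is_meaningful(s: SystemSetting) -> bool:
    """Hypothetical rule: expansion parameters are set if and only if expansion is used."""
    if s.expansion_model == "none":
        return s.expansion_docs == 0 and s.expansion_terms == 0
    return s.expansion_docs > 0 and s.expansion_terms > 0


def build_grid() -> list[SystemSetting]:
    """Enumerate every combination, then drop the non-meaningful ones."""
    candidates = (
        SystemSetting(*combo)
        for combo in product(RETRIEVAL_MODELS, EXPANSION_MODELS, EXPANSION_DOCS, EXPANSION_TERMS)
    )
    return [s for s in candidates if is_meaningful(s)]


grid = build_grid()
print(len(grid))
```

With these lists, 27 of the 81 raw combinations survive: 3 without expansion and 24 with it. Filtering after enumeration keeps the validity rule in one place, so it can change without touching the grid definition.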

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Déjean, S., Mothe, J., Ullah, M.Z. (2019). Studying the Variability of System Setting Effectiveness by Data Analytics and Visualization. In: Crestani, F., et al. (eds) Experimental IR Meets Multilinguality, Multimodality, and Interaction. CLEF 2019. Lecture Notes in Computer Science, vol. 11696. Springer, Cham. https://doi.org/10.1007/978-3-030-28577-7_3

DOI: https://doi.org/10.1007/978-3-030-28577-7_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-28576-0

Online ISBN: 978-3-030-28577-7

eBook Packages: Computer Science, Computer Science (R0)