Abstract

In this paper we analyze the learning process of a neural network-based reinforcement learning algorithm and compare it to characteristics of human learning. As the task environment we use the game of Space Fortress, which was originally designed to study human instruction strategies in complex skill acquisition. We present our method for mastering Space Fortress with reinforcement learning, identify similarities with the learning curve of humans, and evaluate part-task training, with results that correspond to earlier findings in humans.


1 Introduction

Space Fortress (SF) is an arcade-style game that was developed in the 1980s with support from DARPA (US DoD) to study human learning strategies in complex skill acquisition [1]. The game has a long history of research in educational psychology (e.g. studying instructional design for skill acquisition [2]), cognitive science (e.g. developing cognitive models that simulate human learning [3]) and, more recently, machine learning (e.g. as a test-bed for addressing challenges in reinforcement learning [4]).

Recent breakthroughs in (deep) reinforcement learning (DRL) have led to algorithms that are capable of learning and mastering a range of classic Atari video games [5, 6]. SF shares many gameplay similarities with these Atari games. This has inspired us to investigate whether such algorithms are capable of mastering SF and, if so, whether the manner in which they learn SF shares similarities with the human learning process. The underlying motivation is that a representative model that simulates characteristics of human learning could potentially be used to make predictions about human task training, for example to identify task complexity, predict learning trends or optimize part-task training strategies.

The goal of this study is twofold. First, we aim to obtain a machine learning mechanism that is capable of learning the full game of SF; others have addressed this game before, but only in simplified versions (e.g. [4]). Second, we aim to analyze the learning process of this learner and compare it to characteristics of human learning in SF, using human data acquired in an earlier study [7]. Detecting similarities in the learning trends is a prerequisite for the learning mechanism to have predictive value for skill acquisition, for example regarding transfer of learning. We evaluate this by comparing the performance of part-task training in SF between humans and machine.

In this study we take a more opportunistic approach by using an available neural network-based RL implementation that has been shown to learn tasks in game environments similar to SF (e.g. [6]). We recognize that such algorithms have limitations and that the way they learn cannot easily be compared to human learning [9]; we describe these differences qualitatively in this paper. Still, this does not rule out underlying similarities between (parts of) the learning trends during complex skill acquisition.

The outline of this paper is as follows. Section 2 describes the game of SF and previous machine learning efforts on this game. Section 3 shows how we designed an agent that learns SF with reinforcement learning (RL). Section 4 analyzes and compares the learning curves of the RL algorithm and humans, after which Sect. 5 describes the results of the transfer learning experiments that were conducted. Finally, Sect. 6 presents our conclusions.

2 Background

2.1 Space Fortress

Space Fortress (SF) was developed in the 1980s as part of the DARPA Learning Strategies Program to study human learning strategies for skill acquisition on a complex task [1]. The game is challenging enough to keep human subjects engaged for extended periods of practice. It is demanding in terms of perceptual, cognitive and motor skills, as well as knowledge of the game rules and the strategies to follow. SF has a long history of research in psychology and cognitive science, focusing on topics such as (computer-aided) instructional strategies to support human learning [2, 10, 11] and understanding human cognition [12, 13]. The game has also shown positive transfer of skill to the operational environment: in actual flight performance of fighter pilots, subjects who had practiced on the game performed significantly better than those who had not [14].

Space Fortress Gameplay

A simplified representation of SF is shown in Fig. 1. In the game, the player controls a ship in a frictionless environment (cf. space dynamics) and is tasked with destroying a Fortress positioned in the center of the environment. Destroying the Fortress requires a delicate procedure: the player first has to make the Fortress vulnerable by hitting it ten times with missiles, with at least 250 ms between consecutive shots. Once it is vulnerable, the player can destroy the Fortress with a ‘double shot’: a burst of two shots fired less than 250 ms apart. If a double shot is fired while the Fortress is not yet vulnerable, the Fortress is reset and the player has to start the procedure from the beginning. The Fortress defends itself by firing shells at the player, which have to be avoided.
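The Fortress rules above amount to a small state machine. The Python sketch below illustrates them under stated assumptions: the class and method names are hypothetical (they are not taken from the CogWorks implementation), and any hit that arrives less than 250 ms after the previous hit is treated as the second shot of a double shot.

```python
from dataclasses import dataclass
from typing import Optional

DOUBLE_SHOT_MS = 250      # hits closer together than this form a 'double shot'
HITS_TO_VULNERABLE = 10   # spaced hits needed before the Fortress is vulnerable


@dataclass
class FortressVulnerability:
    """Hypothetical model of the Fortress vulnerability rules (not the game's code)."""
    counter: int = 0
    last_hit_ms: Optional[float] = None

    def register_hit(self, t_ms: float) -> str:
        """Process a missile hit at time t_ms and return the resulting event."""
        interval = None if self.last_hit_ms is None else t_ms - self.last_hit_ms
        self.last_hit_ms = t_ms
        if interval is not None and interval < DOUBLE_SHOT_MS:
            # Double shot: destroys a vulnerable Fortress, otherwise resets it.
            destroyed = self.counter >= HITS_TO_VULNERABLE
            self.counter = 0
            if destroyed:
                self.last_hit_ms = None
                return "destroyed"
            return "reset"
        # Properly spaced hit: the vulnerability counter increases.
        self.counter += 1
        return "vulnerable" if self.counter >= HITS_TO_VULNERABLE else "hit"


if __name__ == "__main__":
    fortress = FortressVulnerability()
    events = [fortress.register_hit(t) for t in range(0, 3000, 300)]  # ten spaced hits
    print(events[-1])                   # vulnerable
    print(fortress.register_hit(2800))  # destroyed (100 ms after the last hit)
```

A rapid hit before the counter reaches ten returns `reset` instead, which mirrors the penalty for firing a double shot too early.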

Further, mines appear periodically in the environment. They prevent the player from destroying the Fortress. There are two types of mines: ‘friend’ and ‘foe’ mines. To destroy a mine, the player first has to identify it as friend or foe, which is done by the so-called IFF (identification friend or foe) procedure. This is a short-term memory task also known as the Sternberg task [15]: a letter appears on the screen that indicates the mine type (the IFF letter), and the player has to determine whether this letter belongs to a set of letters presented before the game. If it does, the mine is a foe mine. After identification, to destroy a foe mine, the player has to switch weapon systems. This is a control task in which the player has to press a button (the IFF-key) twice with an interval between 250 and 400 ms. Once this is done, the mine can be destroyed. Friend mines can be destroyed (a.k.a. ‘energized’) immediately. A mine disappears when it is destroyed, when it hits the player, or after 10 s. The player has three lives; a life is lost when the ship is hit by a Fortress shell or collides with a mine.
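The two mine-handling subtasks can be expressed as simple checks. This is a sketch only: the function names and the example letter set are hypothetical, and the 250–400 ms bounds are assumed to be inclusive.

```python
IFF_MIN_MS = 250  # lower bound on the interval between the two IFF-key presses
IFF_MAX_MS = 400  # upper bound on the interval between the two IFF-key presses


def classify_mine(iff_letter: str, foe_letters: set) -> str:
    """Sternberg-style membership test: letters in the memorized set mark foe mines."""
    return "foe" if iff_letter in foe_letters else "friend"


def is_valid_weapon_switch(first_press_ms: float, second_press_ms: float) -> bool:
    """True if two IFF-key presses are 250-400 ms apart (bounds assumed inclusive)."""
    interval = second_press_ms - first_press_ms
    return IFF_MIN_MS <= interval <= IFF_MAX_MS


def can_destroy(mine_type: str, weapon_switched: bool) -> bool:
    """Friend mines can be energized immediately; foe mines need a weapon switch first."""
    return mine_type == "friend" or weapon_switched


if __name__ == "__main__":
    memorized = {"K", "P", "Y"}  # hypothetical letter set shown before the game
    mine = classify_mine("P", memorized)
    switched = is_valid_weapon_switch(1000, 1300)
    print(mine, switched, can_destroy(mine, switched))  # foe True True
```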

The game has a complex scoring mechanism in which points are determined by the player’s performance in Fortress and mine destruction; weapon control; position control (not too far from and not too close to the Fortress); velocity control (neither too slow nor too fast); and speed of mine handling.

2.2 Machine Learning in Space Fortress

In recent years, Space Fortress (SF) has been used as a relevant task environment for machine learning research with different purposes.

From a cognitive science perspective, SF has been used as a medium for simulating human learning. In [3], the authors present a computational model in the ACT-R cognitive architecture [16]. The model is based on Fitts’ three-phase characterization of human skill learning [17], and the authors argue that all three phases are required to learn to play SF at a human level: (1) the interpretation of declarative instructions, (2) gradual conversion of declarative knowledge to procedural knowledge, and (3) tuning of motor-control variables (e.g. timing shots, aiming, thrust duration and angle). Online learning mechanisms are present for the latter two phases. For the first phase, the game instructions are interpreted and hand-crafted into the model as declarative instructions.

From a computer science perspective, SF has been considered a testbed for reinforcement learning. The game has challenging characteristics such as: context-dependent strategy changes during gameplay (e.g. a mine appearing; the Fortress becoming vulnerable); actions that require time-sensitive control (e.g. millisecond interval control to perform a double shot or switch weapon systems); sparse rewards; and a continuous, frictionless environment. In [4], SF is introduced as a benchmark for deep reinforcement learning. The authors present an end-to-end learning approach based on the PPO (Proximal Policy Optimization) algorithm [18], which is shown to outperform humans. It should be noted, however, that only a simplified version of the game was addressed, without any mines.

In relation to the above works, our study takes a middle ground. On the one hand, our goal is similar to [3] with respect to simulating (aspects of) human learning (for predictive purposes in our case). On the other hand, we aim to explore predictive qualities of a learner using a more readily available, neural network-based RL algorithm.

2.3 Comparing Human Learning and Machine Learning

Despite the recent success of neural network-based (D)RL algorithms on classic Atari games, their shortcomings also quickly become apparent: mastering these games may require hundreds of hours of gameplay, considerably more than humans need. Also, certain types of games cannot be mastered by RL at all (e.g. games that require a form of planning), though research efforts are being undertaken to address such challenges (e.g. introducing hierarchical concepts [19]).

Underlying these observations are several crucial differences between the way the machine learns and the way humans learn. An extensive overview of such differences is given in [8]. To summarize a few: humans are equipped with ‘start-up software’, which includes priors developed from childhood such as intuitive physics and psychology, affordances, and semantics about specific entities and interactions [20, 21]. Humans can build causal models of the world and apply these to new situations, allowing them to compose plans of action and pursue strategies. Additionally, humans are capable of social learning, acquiring knowledge or instructions from others or from written text, such as a game’s instruction manual [22]. In Sect. 3.4 we discuss in more detail how the deficiencies of many current algorithms affect the learning of SF.

3 Learning Space Fortress

In this section we explain the approach we took to teach Space Fortress (SF) to an agent using reinforcement learning (RL).

3.1 Game Definitions

For this study we define the goal of the game as destroying the Fortress as quickly as possible, thereby minimizing the Fortress Destruction Time (FDT). Performance on this task improves with continued training and can easily be measured throughout the learning process, for the machine as well as for human subjects. Three different tasks (games) are defined: two so-called part-tasks (PTs), which represent simplified versions of the game, and the whole task (WT), which represents the full game:

- SF-PT1: No mines will be present in the game, only the Fortress
- SF-PT2: Only friend-mines will be present (no IFF procedure or switching weapon systems is required)
- SF-WT: Friend and foe mines will be present. This represents the full game.

These tasks are used to analyze and compare the performance of the RL algorithm and human subjects. In Sect. 5, they are also used to analyze transfer learning between tasks.

3.2 Implementation Approach

The SF implementation used is a Python version of the game from the CogWorks Laboratory [23]. This version was wrapped as an OpenAI Gym environment [24] to interface with the reinforcement learning (RL) algorithm.

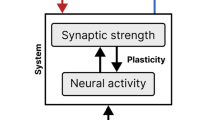

The RL algorithm is partly based on DQN [5]: the input of the network consists of 21 hand-crafted features, rather than the pixel-based input that is common in DQN (i.e. no convolutional layers were used). Fully end-to-end learning is not a priority for this study, and the game state of SF can easily be captured in features. Figure 2 visualizes the design of the RL algorithm and the reward structure; both are explained further below.
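Because the input is a short feature vector, the Q-network reduces to a small fully connected network. The NumPy sketch below shows a forward pass with 21 inputs and one Q-value per action, plus the standard DQN target \( y = r + \gamma \max_{a'} Q_{target}(s', a') \). The hidden-layer width, the initialization and the discount factor \( \gamma \) are hypothetical, since the text states only that the algorithm is partly based on DQN.

```python
import numpy as np

N_FEATURES = 21  # hand-crafted state features
N_ACTIONS = 6    # one Q-value per game action
HIDDEN = 64      # hypothetical hidden-layer width

rng = np.random.default_rng(0)


def init_params(sizes):
    """Random weights and zero biases for a fully connected network."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def q_values(params, states):
    """Forward pass: ReLU hidden layers, linear output layer (no convolutions)."""
    h = states
    for i, (w, b) in enumerate(params):
        h = h @ w + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)
    return h


def td_target(rewards, next_q_target, dones, gamma=0.99):
    """DQN target r + gamma * max_a' Q_target(s', a'); no bootstrapping past episode end."""
    return rewards + gamma * (1.0 - dones) * np.max(next_q_target, axis=-1)


online = init_params([N_FEATURES, HIDDEN, HIDDEN, N_ACTIONS])
target = [(w.copy(), b.copy()) for w, b in online]  # periodically synced copy in DQN
batch = rng.normal(size=(4, N_FEATURES))
print(q_values(online, batch).shape)  # (4, 6)
```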

Different types of features were used. First, 18 features encode the current state of the game, including information about the ship’s position, heading and speed; bearing and distance relative to the Fortress; any shell and mine that is present; and the Fortress vulnerability counter. All location coordinates and bearing vectors were decomposed into a sine and a cosine component to model a continuous cyclic dimension. Second, two features were used to cope with the network’s memory limitations. These features allow the network to capture the time-sensitivity required to distinguish between a single shot and a double shot (250 ms interval), and to switch weapon systems (pressing the IFF-key twice with an interval of 250–400 ms). They encode the time passed since the last fire action and since the last IFF-key press. We expect that algorithms with recurrent network units, such as LSTM (long short-term memory) or GRU (gated recurrent unit), have the memory capability to encode these time-sensitive strategies, which could make these features unnecessary for such algorithms. Finally, the last feature encodes the mine type (friend or foe). Human players identify a mine through the Sternberg memory task: they have to determine whether the letter on the screen associated with a mine (the IFF letter) is a member of a set of letters displayed before the game. This type of task cannot easily be simulated by the algorithm used (or similar algorithms). We therefore abstract away from this task by computing the mine type analytically and presenting the result as a feature.
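Two of the encodings described above can be sketched in a few lines of Python. The cyclic encoding maps an angle, or a coordinate on the wrapping screen, to a sine/cosine pair so that values near the wrap-around point stay close together; the timing features expose the recent action history to a network without memory. The function names, the 1000 ms clipping cap and the [0, 1] scaling are hypothetical choices, not details from the text.

```python
import math


def cyclic_encode(value: float, period: float) -> tuple:
    """Map a cyclic quantity (heading in degrees, or a coordinate on a wrapping screen) to (sin, cos)."""
    phase = 2.0 * math.pi * value / period
    return math.sin(phase), math.cos(phase)


def timing_features(now_ms: float, last_fire_ms: float, last_iff_ms: float,
                    cap_ms: float = 1000.0) -> tuple:
    """Time since the last fire action and the last IFF-key press, clipped to cap_ms and scaled to [0, 1]."""
    since_fire = min(now_ms - last_fire_ms, cap_ms) / cap_ms
    since_iff = min(now_ms - last_iff_ms, cap_ms) / cap_ms
    return since_fire, since_iff


if __name__ == "__main__":
    # Headings of 359 and 1 degrees are 2 degrees apart and stay close after encoding.
    print(math.dist(cyclic_encode(359, 360), cyclic_encode(1, 360)))
    # 200 ms since the last shot: firing now would produce a double shot.
    print(timing_features(now_ms=1200, last_fire_ms=1000, last_iff_ms=0))  # (0.2, 1.0)
```

With raw degrees, 359 and 1 would look maximally far apart to the network; the sine/cosine pair removes that artificial discontinuity.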

The game has six actions, which correspond to the human keyboard input: add thrust, turn left, turn right, fire a missile, press the IFF-key to switch weapon systems, and no-op (no operation).
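For the network, these six actions form a discrete action space with one output unit per action. A minimal Python sketch; the ordering of the indices is a hypothetical choice.

```python
from enum import IntEnum


class Action(IntEnum):
    """Discrete actions mirroring the human keyboard input (index order is hypothetical)."""
    THRUST = 0      # add thrust
    TURN_LEFT = 1
    TURN_RIGHT = 2
    FIRE = 3        # fire a missile
    IFF_KEY = 4     # press the IFF-key; two presses 250-400 ms apart switch weapon systems
    NOOP = 5        # no operation


N_ACTIONS = len(Action)  # size of the network's output layer

if __name__ == "__main__":
    print(N_ACTIONS, Action(3).name)  # 6 FIRE
```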

The reward function is custom designed and built from seven performance metrics (see the reward structure in Fig. 2). Two of these are also part of the original game’s scoring mechanism (Fortress destroyed and Mine destroyed). Some metrics that are included in the scoring mechanism were found not to be required in the reward function, such as penalizing the loss of a life or wrapping the ship around the screen. Five metrics were introduced to guide the learning process towards understanding the rules of the game (e.g. rewarding a Fortress hit to teach that hits increase its vulnerability, or teaching how to use the weapon system for foe mines).
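One common way to implement such a shaped reward is a weighted sum over the game events observed in a time step. The sketch below is illustrative only: it lists just three of the seven metrics (the two taken from the game score and the Fortress-hit metric mentioned above), and the event names and weights are hypothetical, not the values used in this study.

```python
from typing import Iterable, Mapping

# Hypothetical event names and weights; not the study's actual reward values.
REWARD_WEIGHTS = {
    "fortress_destroyed": 1.0,  # also part of the original game score
    "mine_destroyed": 0.5,      # also part of the original game score
    "fortress_hit": 0.1,        # shaping: a hit increases Fortress vulnerability
}


def shaped_reward(events: Iterable[str],
                  weights: Mapping[str, float] = REWARD_WEIGHTS) -> float:
    """Sum the weights of all events observed in one game step; unknown events score 0."""
    return sum(weights.get(event, 0.0) for event in events)


if __name__ == "__main__":
    print(shaped_reward(["fortress_destroyed", "mine_destroyed"]))  # 1.5
```

Keeping the weights in a table makes it easy to drop a metric (as was done for losing a life) without touching the learning code.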

3.3 Results

Experiments were performed to learn each of the three game types (SF-PT1, SF-PT2 and SF-WT). The training time for each experiment was fixed at 60 million game steps, which corresponds to ~833 h of real-time human gameplay (the game runs at 20 Hz). During training, Fortress Destruction Times were recorded to capture the progress of learning.
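The conversion from game steps to hours of real-time play follows from the 20 Hz update rate:

\[
\frac{60 \times 10^{6}\ \text{steps}}{20\ \text{steps/s}} = 3 \times 10^{6}\ \text{s} = \frac{3 \times 10^{6}}{3600}\ \text{h} \approx 833\ \text{h}
\]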

The results of these experiments are compared to those of human subjects, whose data originated from an earlier study [7]. That study provides a dataset of 36 participants who each played SF (without prior experience) for 23 sessions of 8 games of 5 min, which totals around 15 h of gameplay per participant. The comparison with humans is shown in Table 1; in Sect. 4 we compare the learning process itself.
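For comparison, the practice time per human participant, and the machine's 833 h training budget relative to it, are:

\[
23 \times 8 \times 5\ \text{min} = 920\ \text{min} \approx 15.3\ \text{h}, \qquad \frac{833\ \text{h}}{15.3\ \text{h}} \approx 54
\]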

As the comparison shows, the RL algorithm outperforms the best-performing human subject on all three game types. Qualitative observations based on video recordings show that the algorithm behaves in accordance with the optimal strategy for playing SF: circumnavigating the Fortress in a clockwise manner. Note that this behavior was not explicitly encouraged by the reward structure. Further comparisons between humans and the machine are discussed below.

3.4 Comparison to Human Learning

Two immediate observations can be made when comparing the performance on SF between the machine and human subjects. These are qualitatively explained next.

Task Performance

The machine outperforms humans on all three tasks, converging to shorter Fortress Destruction Times. One explanation is that the machine is not bound by the perceptual and cognitive limitations of body and mind that humans have. For example, humans have limited perceptual attention, which has to be divided between elements in the game world and the information displayed on the screen around it (e.g. checking the Fortress vulnerability or the IFF letter when a mine appears, see Fig. 1). In contrast, the machine has instant access to this information. Further, human reaction time is far longer than the time a machine requires to make a reactive decision: ~200–250 ms versus less than 50 ms (the duration of a single game step). Finally, humans require accurate motor control of the game’s input device (a joystick or keyboard), which is susceptible to human precision errors, whereas the machine performs game actions instantly. In conclusion, given that the RL algorithm has been shown to be capable of learning the game, and given these advantages over humans, it can reasonably be expected to outperform them.

Training Time

The machine requires far more training time to achieve performance comparable to that of human subjects. For instance, the best-performing human on task SF-PT1 reaches their best time (5.15 s) within 10 h, whereas the machine requires almost 400 h to reach the same time. In other words, the RL algorithm is sample inefficient, a common problem in RL research. Several factors contribute to this. First, humans can acquire the goal and rules of the game beforehand (e.g. by reading the game’s manual) and employ this knowledge from the start of training. The machine’s algorithm cannot be programmed with this knowledge and has to infer the game’s goal and rules purely from reward signals (such as the reward structure in Fig. 2). Second, humans are equipped with learned ‘experiences’ prior to training, which enable them to translate the rules of the game into a concrete plan: e.g. a general understanding of physical laws and object dynamics, or experience in solving similar problems (e.g. gaming experience). The machine’s algorithm does not follow an a priori strategy but learns its policy through exploration and exploitation (using the ε-greedy method in our implementation). Finally, if the algorithm were to learn from pixels, its sample inefficiency would be expected to be even greater, because it would also have to develop a vision system, which (adult) humans already possess.
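The ε-greedy method mentioned above can be sketched as follows. The linear annealing schedule and its start, end and decay values are hypothetical; the text states only that ε-greedy was used.

```python
import random


def linear_epsilon(step: int, start: float = 1.0, end: float = 0.05,
                   decay_steps: int = 1_000_000) -> float:
    """Linearly anneal epsilon from `start` to `end` over `decay_steps` steps (hypothetical values)."""
    frac = min(step / decay_steps, 1.0)
    return start + frac * (end - start)


def epsilon_greedy(q_values: list, epsilon: float, rng: random.Random) -> int:
    """With probability epsilon explore uniformly, otherwise exploit the best Q-value."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


if __name__ == "__main__":
    rng = random.Random(0)
    q = [0.1, 0.9, 0.3]
    for step in (0, 500_000, 2_000_000):
        eps = linear_epsilon(step)
        print(step, round(eps, 3), epsilon_greedy(q, eps, rng))
```

Early in training nearly every action is random, which is one reason the algorithm needs so many samples before its behavior resembles a deliberate strategy.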

4 Learning Curve Analysis

In the previous section it was explained why the machine’s algorithm does not exactly simulate a human learning the game of SF. Still, there may be trends in the machine’s learning process that share characteristics of human learning. In this section we analyze the learning curves of the algorithm and human subjects. Finding similarities is a necessary condition for the algorithm if it is to be used for predictions concerning transfer learning, which we will evaluate in the next section.

For the comparative analysis we use game type SF-WT. We measured the learning curve for accomplishing the goal of the game: destroying the Fortress. Figure 3 shows the learning curves as Fortress Destruction Times in seconds as a function of successive Fortress destructions (also called trials); note that the x-axis therefore does not denote training time.

Fig. 3. Learning curve data of the RL algorithm (upper) and human (lower). The blue line represents the measured data; the red line plots Eq. (1), which is described below. (Color figure online)

The data show that the RL algorithm starts with much longer destruction times and needs many more trials to reach its asymptote. This is a direct effect of the algorithm’s sample inefficiency, as explained in Sect. 3.4. Both the time series for the algorithm and that for the human learner appear to show similar trends that can be described by Eq. (1), which was originally proposed in [7] as an alternative to the so-called Power Law of Practice (e.g. [8, 25]):

In the equation, \( T_{n} \) is the time needed to destroy the Fortress in the \( n \)th trial, \( T_{mean} \) is the mean of all preceding \( n-1 \) trial times, and \( T_{min} \) is an estimate of the minimum attainable trial time.
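The \( T_{mean} \) term is a running statistic over earlier trials. A minimal Python sketch of how it can be computed from a sequence of Fortress Destruction Times (the function name is hypothetical):

```python
from typing import List, Optional, Sequence


def preceding_means(trial_times: Sequence[float]) -> List[Optional[float]]:
    """T_mean for each trial n: the mean of the n-1 preceding trial times (None for n = 1)."""
    means: List[Optional[float]] = []
    total = 0.0
    for n, t in enumerate(trial_times, start=1):
        means.append(total / (n - 1) if n > 1 else None)
        total += t
    return means


if __name__ == "__main__":
    print(preceding_means([12.0, 8.0, 7.0]))  # [None, 12.0, 10.0]
```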

Additional analysis is required to determine the goodness of fit of Eq. (1) to the RL algorithm’s learning curve, as well as the effects of the RL algorithm’s hyper-parameters on the learning curve during training (e.g. the learning rate or the parameters of action-selection exploration strategies such as ε-greedy). This is left for future study.

5 Transfer Learning

In this section we investigate the potential benefit of part-task training in SF for the RL algorithm. Part-task training is a training strategy in which a whole task is learned progressively using one or more part-tasks. Positive transfer in part-task training implies that a task can be learned more efficiently than by spending the full training time solely on the whole task. Part-task training has shown positive results for humans learning SF [26]. In the remainder of this section we describe the transfer learning experiments that were conducted and present our results.

5.1 Transfer Experiment Design

Transfer experiments were conducted using the three tasks (games) defined for SF in Sect. 3.1. To recapitulate, SF-PT1 represents a part-task with only the Fortress (no mines); SF-PT2 represents a part-task including only friend mines, which can be dealt with immediately; and SF-WT represents the full game with friend and foe mines, which requires the IFF procedure and switching weapon systems in the case of a foe mine.

The primary objective of the experiments is to determine whether SF-WT can be learned more efficiently by the RL algorithm using part-task training, and how the use of different part-tasks leads to positive (or negative) transfer. The experiments are visualized in Fig. 4. The top bar shows the baseline experiment, in which 100% of the total training time is spent on the whole task. The bottom two bars show the transfer experiments using either part-task one or part-task two, in which a certain percentage of the total training time is spent on the part-task before switching to the whole task. For each transfer experiment, a range of part-task/whole-task distributions is tested in order to gain insight into the optimal distribution.
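Each transfer experiment therefore reduces to a single switch point within the fixed training budget. A Python sketch (function names hypothetical) using the 60 million-step budget from Sect. 3.3; a part-task fraction of 0 reproduces the whole-task baseline.

```python
TOTAL_STEPS = 60_000_000  # fixed training budget per experiment


def switch_step(part_task_fraction: float, total_steps: int = TOTAL_STEPS) -> int:
    """Game step at which training moves from the part-task to the whole task."""
    if not 0.0 <= part_task_fraction < 1.0:
        raise ValueError("part_task_fraction must be in [0, 1)")
    return round(part_task_fraction * total_steps)


def task_at(step: int, part_task: str, part_task_fraction: float,
            total_steps: int = TOTAL_STEPS) -> str:
    """Task to train on at `step`: the part-task before the switch, SF-WT afterwards."""
    if step < switch_step(part_task_fraction, total_steps):
        return part_task
    return "SF-WT"


if __name__ == "__main__":
    print(switch_step(0.375))                    # 22500000
    print(task_at(10_000_000, "SF-PT1", 0.375))  # SF-PT1
    print(task_at(10_000_000, "SF-PT1", 0.0))    # SF-WT (baseline)
```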

5.2 Implementation and Measurement

Transfer Implementation

The approach we took to implement transfer learning in SF is quite straightforward because of the similarities between the tasks. First, the algorithm’s input features and action space can be kept the same for all three tasks. The only consequence is that for part-tasks such as SF-PT1 (in which no mines appear), certain input features do not change or offer no relevant information (e.g. mine-related features), and certain actions become irrelevant and should be suppressed (e.g. the IFF-key). Further, the reward function can be kept the same, since the primary goal of the game (destroying the Fortress) remains unchanged. Finally, the knowledge that is transferred between tasks is the current policy function, which is represented by the algorithm’s network weights in the hidden and output layers.
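Suppressing an irrelevant action can be done by masking it out at action-selection time, so that the network's output layer keeps its size across tasks. This is one possible approach, sketched in Python with a hypothetical action index; the IFF-key is masked in both part-tasks, since neither requires the IFF procedure. The same mask would also need to be applied when sampling exploratory actions.

```python
IFF_KEY = 4    # hypothetical index of the IFF-key action
N_ACTIONS = 6


def allowed_actions(task: str) -> list:
    """Actions the agent may select; the IFF-key is irrelevant without foe mines."""
    if task in ("SF-PT1", "SF-PT2"):
        return [a for a in range(N_ACTIONS) if a != IFF_KEY]
    return list(range(N_ACTIONS))


def masked_greedy(q_values: list, task: str) -> int:
    """Greedy action restricted to the actions allowed in the current task."""
    return max(allowed_actions(task), key=lambda a: q_values[a])


if __name__ == "__main__":
    q = [0.1, 0.2, 0.0, 0.3, 0.9, 0.4]
    print(masked_greedy(q, "SF-PT1"), masked_greedy(q, "SF-WT"))  # 5 4
```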

Because of the above properties, running a part-task training experiment is similar to running a baseline, whole task experiment. The RL algorithm can be kept running throughout a training session without requiring any change when switching tasks. The only difference is that the game environment can be switched to a new task during training, at pre-configured moments. During this task transfer, the algorithm will be confronted with new game elements and any associated reward signals to learn new sub-policies (e.g. destroying mines). The algorithm is expected to incorporate these into its currently learned policy from the previous task (e.g. circumnavigate and shoot the Fortress).

Transfer Measurement

There are different ways in which transfer can be measured between a learner that uses transfer (part-task training) and one that does not (baseline training). Several common metrics are explained in [27]. Jumpstart measures the increase in initial performance at the start of the target task after training on the source task. Asymptotic performance measures the final performance at the end of training on the target task. Total reward measures the accumulated performance during training. Transfer ratio measures the ratio of the total reward accumulated by the two learners. Time to threshold measures the training time needed to achieve a predetermined performance level.
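The metrics from [27] other than the Transfer ratio can be computed directly from two learning curves on the target task. The Python sketch below assumes the curves are sampled at the same points and that higher values are better (e.g. Fortress destructions per logging interval rather than destruction times); the function names and the averaging window are hypothetical.

```python
from statistics import fmean
from typing import Optional, Sequence


def jumpstart(transfer: Sequence[float], baseline: Sequence[float]) -> float:
    """Gain in initial performance on the target task."""
    return transfer[0] - baseline[0]


def asymptotic_gain(transfer: Sequence[float], baseline: Sequence[float],
                    window: int = 10) -> float:
    """Gain in final performance, averaged over the last `window` points."""
    return fmean(transfer[-window:]) - fmean(baseline[-window:])


def total_reward(curve: Sequence[float]) -> float:
    """Accumulated performance during training (area under the curve)."""
    return sum(curve)


def time_to_threshold(curve: Sequence[float], threshold: float) -> Optional[int]:
    """Index of the first point at or above `threshold`, or None if never reached."""
    return next((i for i, v in enumerate(curve) if v >= threshold), None)


if __name__ == "__main__":
    transfer, baseline = [2, 4, 6, 8], [1, 2, 3, 4]
    print(jumpstart(transfer, baseline), asymptotic_gain(transfer, baseline, window=2))  # 1 3.5
    print(total_reward(transfer), time_to_threshold(transfer, 5), time_to_threshold(baseline, 5))  # 20 2 None
```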

For our analysis we found the Transfer ratio to be the most appropriate metric. It requires a measure of the total reward obtained during training on the target task, which we define (as accumulated performance) as the total number of Fortress destructions (FDs) during training on the target task. The Transfer ratio is then calculated as follows:

In the equation, \( \sum\nolimits_{i = s}^{n} {FD_{i} } \) represents the total number of Fortress destructions from the time step where the transfer learner starts on the target task (s) until the final time step of the full training time (n). For all experiments, the full training time is fixed to 60 million time steps. Thus if a transfer learner is configured to learn 50% of the time on a part-task and 50% on the whole task, then Fortress destructions are measured for both the transfer learner and the baseline learner only for the last 30 million time steps.
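A minimal Python sketch of this computation. It assumes the relative-difference form of the Transfer ratio described in [27], \( r = \left(\sum_{i=s}^{n} FD_i^{T} - \sum_{i=s}^{n} FD_i^{B}\right) / \sum_{i=s}^{n} FD_i^{B} \), where \( T \) and \( B \) denote the transfer and baseline learners, so that positive values indicate positive transfer; the function name and the per-step representation are hypothetical.

```python
from typing import Sequence


def transfer_ratio(fd_transfer: Sequence[int], fd_baseline: Sequence[int], s: int) -> float:
    """Relative difference in Fortress destructions from step s to the final step n.

    fd_*[i] holds the number of Fortress destructions recorded at step i. Only the
    window in which the transfer learner trains on the whole task is counted.
    """
    total_transfer = sum(fd_transfer[s:])
    total_baseline = sum(fd_baseline[s:])
    if total_baseline == 0:
        raise ValueError("baseline has no Fortress destructions in the window")
    return (total_transfer - total_baseline) / total_baseline


if __name__ == "__main__":
    # Toy run of 8 steps with a 50% part-task share: only steps 4..7 are compared.
    transfer = [0, 0, 0, 1, 1, 1, 1, 1]
    baseline = [1, 0, 1, 0, 1, 1, 0, 1]
    print(transfer_ratio(transfer, baseline, s=4))  # 0.3333333333333333
```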

5.3 Transfer Analysis

Results of the transfer experiments are shown in Fig. 5, which illustrates the transfer ratio (y-axis) for the two part-tasks at different distributions (x-axis). Each bar is based on eight data points (i.e. training experiments), and the error bars represent 95% confidence intervals. Positive bars indicate positive transfer compared to the baseline, which is determined from the average of eight baseline (whole task) experiments.

According to the results, positive transfer (a transfer ratio of up to approximately 11%) can be observed when SF-PT1 is used for 37.5–75% of the total training time. For SF-PT2, spending little training time on the part-task (<50%) results in negative transfer. Both part-tasks tend to show negative transfer when too little time is spent on learning the whole task (i.e. when 87.5% or more of the training time is spent on the part-task).

We hypothesize that the difference in transfer between the two part-tasks is caused by the nature of the policy change that must be learned. Transferring from SF-PT1 to the whole task requires learning a new, context-dependent policy that operates alongside a previously learned policy (i.e. learning how to deal with mines, in addition to the existing policy of circumnavigating and destroying the Fortress). In contrast, transferring from SF-PT2 to the whole task requires modifying an existing policy: mines suddenly need to be treated differently in the whole task. This may suggest that an additional policy can be learned more efficiently than an existing policy can be changed.

Finally, we compare the transfer results of the RL algorithm to those of humans by analyzing data from an earlier study [7]. Human performance data is available for the same part-task strategies as in Fig. 4, and the same transfer metric, as defined in Eq. (2), was used. The results are shown in Fig. 6. Each bar (transfer experiment) is based on two data points and is compared to six baselines (all from different humans). Note that the time distributions differ from those in Fig. 5.

The results show that for both the RL algorithm and the humans, using SF-PT1 leads to better transfer than using SF-PT2. However, no comparison can be made regarding the time distributions (e.g. the negative or neutral transfer seen when little time is spent on a part-task cannot be observed in the human data). This could be related to the difference in the initial phase of training, in which the machine requires much more training time to reach the performance that humans show at the start of their training.

6 Conclusion

In this paper we presented a learning algorithm that is capable of learning Space Fortress (SF) to an extent that has not previously been demonstrated in terms of supported game elements. Its performance on the game was shown to exceed human performance. Several task abstractions were made, which result in differences from how humans learn the game: learning is based on symbolic features rather than pixels; the Sternberg memory task is solved analytically; and the algorithm does not simulate the visual attention/scanning and motor control that humans require to play the game. Another crucial difference is that humans employ knowledge of the game rules during learning, whereas the algorithm has to ‘discover’ the rules during learning based on the reward function.

We compared the learning curves of the learning algorithm and a human subject, which show similar trends that can be described by an alternative to the Power Law of Practice originally proposed in [7]. Finally, we analyzed transfer learning effects in SF using part-task training. For both the machine and humans, the same part-task strategy proved beneficial, though no comparison could be made regarding the time spent on a particular part-task.

This study is an initial exploratory effort to investigate the feasibility of using a reinforcement learning (RL) model as a predictive mechanism for human learning (such as predicting optimal part-task training). Initial findings show that similarities exist between learning curve characteristics and transfer effects of part-task training. However, more thorough analysis is required to gain insight into the extent, limits and quality of the predictions that could be made, given the inherent differences between human learning and neural network-based RL. This is left for future work.

References

1. Mané, A., Donchin, E.: The space fortress game. Acta Psychol. 71(1–3), 17–22 (1989)

2. Frederiksen, J.R., White, B.Y.: An approach to training based upon principled task decomposition. Acta Psychol. 71(1), 89–146 (1989)

3. Anderson, J.R., Betts, S., Bothell, D., Hope, R., Lebiere, C.: Three Aspects of Skill Acquisition. PsyArXiv (2018)

4. Akshat, A., Hope, R.: Challenges of context and time in reinforcement learning: introducing space fortress as a benchmark. arXiv (2018)

5. Mnih, V., et al.: Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015)

6. Hessel, M., et al.: Rainbow: combining improvements in deep reinforcement learning. In: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (2018)

7. Roessingh, J., Kappers, A., Koenderink, J.: Forecasting the learning curve for the acquisition of complex skills from practice. Netherlands Aerospace Centre NLR-TP-2002-446 (2002)

8. Newell, A., Rosenbloom, P.: Mechanisms of Skill Acquisition and the Law of Practice. Carnegie-Mellon University (1980)

9. Lake, B.M., Ullman, T.D., Tenenbaum, J.B., Gershman, S.J.: Building machines that learn and think like people. Behav. Brain Sci. 40, 253 (2017)

10. Moon, J., Bothell, D., Anderson, J.R.: Using a cognitive model to provide instruction for a dynamic task. In: Proceedings of the 33rd Annual Conference of the Cognitive Science Society (2011)

11. Ioerger, T.R., Sims, J., Volz, R., Workman, J., Shebilske, W.: On the use of intelligent agents as partners in training systems for complex tasks. In: Proceedings of the 25th Annual Meeting of the Cognitive Science Society (2003)

12. Boot, W.R., Kramer, A.F., Simons, D.J., Fabiani, M., Gratton, G.: The effects of video game playing on attention, memory and executive control. Acta Psychol. 129(3), 387–398 (2008)

13. Boot, W.R.: Video games as tools to achieve insight into cognitive processes. Front. Psychol. 6, 3 (2015)

14. Gopher, D., Weil, M., Bareket, T.: Transfer of skill from a computer game trainer to flight. Hum. Factors 36(3), 387–405 (1994)

15. Sternberg, S.: Memory-scanning: mental processes revealed by reaction-time experiments. Am. Sci. 57(4), 421–457 (1969)

16. Anderson, J., Bothell, D., Byrne, M., Douglass, S., Lebiere, C., Qin, Y.: An integrated theory of mind. Psychol. Rev. 111(4), 1036 (2004)

17. Fitts, P.: Perceptual-motor skill learning. Categories Hum. Learn. 47, 381–391 (1964)

18. Schulman, J., Wolski, F., Dhariwal, P., Radford, A., Klimov, O.: Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347

19. Kulkarni, T.D., Narasimhan, K.R., Saeedi, A., Tenenbaum, J.B.: Hierarchical deep reinforcement learning: integrating temporal abstraction and intrinsic motivation. In: Proceedings of the 30th International Conference on Neural Information Processing Systems, pp. 3682–3690. Curran Associates Inc. (2016)

20. Tsividis, P., Pouncy, T., Xu, J., Tenenbaum, J., Gershman, S.: Human learning in Atari. In: The AAAI 2017 Spring Symposium on Science of Intelligence: Computational Principles of Natural and Artificial Intelligence (2017)

21. Dubey, R., Agrawal, P., Pathak, D., Griffiths, T.L., Efros, A.A.: Investigating human priors for playing video games. arXiv:1802.10217

22. Tessler, M., Goodman, N., Frank, M.: Avoiding frostbite: it helps to learn from others. Behav. Brain Sci. 40, E279 (2017)

23. Rensselaer Polytechnic Institute: “SpaceFortress,” CogWorks Laboratory. https://github.com/CogWorks. Accessed 16 Jan 2019

24. Brockman, G., et al.: OpenAI Gym. arXiv:1606.01540

25. De Jong, J.: The effects of increasing skill on cycle time and its consequences for time standards. Ergonomics 1(1), 51–60 (1957)

26. Roessingh, J., Kappers, A., Koenderink, J.: Transfer between training of part-tasks in complex skill training: model development and some experimental data. In: Human Factors in the Age of Virtual Reality. Shaker Publishing (2003)

27. Taylor, M.E., Stone, P.: Transfer learning for reinforcement learning domains: a survey. J. Mach. Learn. Res. 10, 1633–1685 (2009)

28. van Oijen, J., Poppinga, G., Brouwer, O., Aliko, A., Roessingh, J.: Towards modeling the learning process of aviators using deep reinforcement learning. In: 2017 IEEE International Conference on Systems, Man and Cybernetics (SMC), Banff (2017)

29. Tuyen, L.P., Vien, N.A., Layek, A., Chung, T.: Deep hierarchical reinforcement learning algorithm in partially observable Markov Decision Processes. arXiv

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

van Oijen, J., Roessingh, J.J., Poppinga, G., García, V. (2019). Learning Analytics of Playing Space Fortress with Reinforcement Learning. In: Sottilare, R., Schwarz, J. (eds) Adaptive Instructional Systems. HCII 2019. Lecture Notes in Computer Science, vol 11597. Springer, Cham. https://doi.org/10.1007/978-3-030-22341-0_29

DOI: https://doi.org/10.1007/978-3-030-22341-0_29

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22340-3

Online ISBN: 978-3-030-22341-0

eBook Packages: Computer Science, Computer Science (R0)