Abstract

The field of particle physics is at a crossroads. The discovery of a Higgs-like boson completed the Standard Model (SM), but the lack of convincing resonances Beyond the SM (BSM) offers no guidance for the future of particle physics. On the other hand, the motivation for New Physics has not diminished and is, in fact, reinforced by several striking anomalous results in many experiments. Here we summarise the status of the most significant anomalies, including the most recent results for the flavour anomalies, the multi-lepton anomalies at the LHC, the Higgs-like excess at around 96 GeV, and anomalies in neutrino physics, astrophysics, cosmology, and cosmic rays. While the LHC promises up to 4 \(\hbox {ab}^{-1}\) of integrated luminosity and far-reaching physics programmes to unveil BSM physics, we consider the possibility that the latter could be tested with present data, but that systemic shortcomings of the experiments and their search strategies may preclude its discovery for several reasons, including: final states consisting of soft particles only, associated production processes, QCD-like final states, close-by SM resonances, and SUSY scenarios where no missing energy is produced. New search strategies, devised by using the CERN open data as a new testing ground, could help to unveil the hidden BSM signatures. We discuss the CERN open data with its policies, challenges, and potential usefulness for the community. We showcase the example of the CMS collaboration, which is the only collaboration regularly releasing some of its data. We find it important to stress that individuals using public data for their own research does not imply competition with experimental efforts, but rather provides unique opportunities to give guidance for further BSM searches by the collaborations. Wide access to open data is paramount to fully exploit the LHC's potential.

1 Introduction

Section editors: Oliver Fischer and Bruce Mellado

The discovery of a scalar resonance that resembles the Higgs boson of the Standard Model (SM) [1,2,3,4] at the Large Hadron Collider (LHC) by the ATLAS [5] and CMS [6] collaborations has opened a new chapter in particle physics. The combined measurements show that this discovered particle has properties that are compatible with those predicted by the SM [7], which makes its discovery a great triumph for experiment and theory. Under the assumption that the discovered scalar particle is indeed the predicted Higgs boson, the SM has exhausted all its predictions pertaining to fundamental particles. While the LHC collaborations continue to measure properties of the Higgs boson and other known particles and processes, the chief focus is now on the observation of new phenomena beyond the SM.

The motivation for the existence of New Physics (NP) is no less than before the discovery of the Higgs boson. The SM itself raises the question of naturalness, i.e. why the Electroweak scale is so much smaller than the Planck scale, which is addressed by Supersymmetry (SUSY) in an elegant way. The observation of Dark Matter (DM) in the Universe, interpreted as a fundamental particle, can be addressed with minimal frameworks beyond the SM (BSM) and also with theories that often introduce an entire dark sector with new particles and forces. The observation of Neutrino Oscillations implies that the neutrinos are massive, which requires a mass-generating mechanism and therefore an extension of the SM. The above list of arguments is incomplete but suggests convincingly that the existence of NP is an established fact. No indication exists, however, in what form the NP manifests itself.

As the nature and energy scale of NP remain unknown, new phenomena could emerge at any experiment. In recent years the field of particle physics has experienced a growing litany of anomalous experimental results. Many of them are statistically significant, continue to grow, and remain unexplained by state-of-the-art calculations based on the SM. In most cases these calculations have become increasingly precise and reliable, and vast data sets have provided extensive testing grounds for them. While some anomalies might eventually find explanations within the framework of the SM, persisting ones could contain hints of NP and may serve as a guide to model building and experimental searches. The most significant anomalies are summarised and discussed in Sect. 2.

While there is overwhelming evidence that the SM is incomplete, guidance is required as to how the SM will conclusively break down in laboratory conditions. Hundreds of BSM models have been proposed over the years, motivated by the big open questions as well as by the various combinations of experimental anomalies. At the same time, the extensive exploration of LHC data performed to date, with respect to both inclusive and model-dependent signatures, indicates that no striking resonances have been observed in the accessible dynamic range. The absence of clear BSM signatures in LHC data indicates that NP is either inaccessible at the LHC, or that it is driven by more subtle topologies and therefore hidden in the backgrounds. We discuss possible hidden signatures from NP in Sect. 3.

The question arises as to what the absence of BSM resonances at the LHC implies for our search strategies. Many models have been identified that are not captured by current searches, such that the reinterpretation of experimental limits for different models has become an important topic of discussion [8, 9], and analysis strategies have been developed that are less model-dependent. Since model building is the driving force behind gaining insight into new signatures, the model-centric and the model-independent approaches are both necessary. The community is elaborating on data analysis methodologies that display less model dependence. The use of Machine Learning may play a significant role.

It is high time to scrutinise existing LHC data with respect to clues on NP in the non-strongly interacting sector in order to prepare for the high-luminosity era and beyond. When the High Luminosity LHC starts operating, its data has to be used to glean every possible piece of information about existing NP models. Clear guiding principles need to come from a combination of both experimental and theoretical inquiries. Given the present discussions on future colliders all over the world, this guidance is now more crucial than ever before. Guidance for the future could come from NP that is currently hidden in LHC data, but might be accessible with new search strategies.

New search strategies can only be developed through communication between theorists and experimentalists. One remarkable example is the new means to search for hypothetical new long-lived particles, where discussions between experimentalists and theorists led to the development of new triggers and new external detectors, and influenced the planning of future experiments. To stimulate similar discussions, a testing ground is needed, where physicists can develop new strategies with a quick turnaround. We discuss in Sect. 4 that open data might constitute such a testing ground, as it provides a platform for knowledge and data exchange that has the potential to unleash the discovery potential of the LHC data, and through which experiments can be given pointers as to which corners of the phase space need particular scrutiny.

CERN has committed to an open data policy in support of open science, promising to “make scientific research more accessible to the community”. Open data is a relatively new experience in the field of particle physics. As open data policies are not intended to compete with experimental efforts, it is essential to establish in this context a well-defined framework, within which knowledge and data are exchanged. In this community effort the experimentalists should remain the competent authority to set down the data access guidelines, while the role of theorists would be to provide directions with new insights and ideas. The theorist’s insight thus may keep the most crucial questions in front of the eyes of everyone involved in the effort. Of course, such questions are numerous and multi-dimensional.

At the workshop “Unveiling Hidden Physics beyond the Standard Model at the LHC” (see Footnote 1), the existing motivation for and insight into possible manifestations of NP were discussed. This included a state-of-the-art overview of significant anomalous experimental results, lessons from theories including model classes that are challenging to detect at the LHC, computing methods, and the CERN open data.

The central aim of the workshop was to highlight the fact that the usage of open LHC data allows the community to test a much larger range of NP than ever before. We emphasise that this is very important given the growth of the number and the significance of anomalies and the continuously evolving vast landscape of ideas. It is therefore important to bring the discussion of an open data format into the open, and we dedicate Sect. 4 to this discussion.

The present document includes a review of the most significant anomalies in particle physics and a review of model classes that can be hidden in LHC data. The anomalies are grouped appropriately and potential explanations are summarised, where possible interconnections are explored. The review of hidden model classes is non-exhaustive and constitutes an example of the opportunities that model building continues to provide to the physics programme of the LHC.

1.1 Workshop discussion

Contributions: Nishita Desai, Biswarup Mukhopadhyaya

The discussions at the workshop were held after individual talks, in dedicated discussion sessions, and in a plenary discussion. There was also some exchange on a dedicated Mattermost channel.

The main points were collected and incorporated into this paper in many different places. Below is a list of a few illustrative questions that emerged during the discussions in the opening session of the workshop, which may serve as rudders for future discussion.

- Hundreds of models have been explored in our quest for new physics, and we have few guidelines yet as to how many additional directions are worthy of exploration. Until such guidelines emerge in firmer outlines, it may be advisable to carry on the prediction and analysis of new physics signatures at the LHC in model-independent ways as far as possible, so that we do not miss interesting possibilities due to any bias.

  At the same time, a virtue of model-based studies also stands out. The pros and cons of the theoretical characteristics of certain scenarios often become clear when their consequences are pitted against experimental data, and enrich us with wisdom that goes beyond the ambit of those specific scenarios. Thus we derive our ‘lessons from theory’ by occasionally resorting to models as well.

- The 125-GeV scalar has been observed, and whether it is ‘the Higgs’ or ‘a Higgs’ is still an open question. On the other hand, the electroweak symmetry-breaking sector has raised a good many questions about the limitations of the Standard Model. When the High Luminosity LHC starts operating, its data should be used to glean every speck of additional information about this scalar, and to look for effects that may serve to unveil physics beyond the Standard Model.

- Euclidean continuation is important in understanding global Higgs behaviour. In this context, both time-like and space-like probes at high energies should be complementary.

  \(h \rightarrow ZZ\) would be a good start in this connection, because it interferes destructively with the SM box diagram. If the intervention of new physics makes on-shell \(h \rightarrow ZZ\) small, it will enhance the sensitivity of the off-shell Higgs signal. This is also applicable to di-Higgs production via the triple Higgs coupling.

- High-\(p_T\) Higgs boson physics is complementary to the off-shell Higgs boson signal. The momentum transfer to the Higgs boson production vertex in such events is space-like. Experiments should therefore pay attention to the relatively small number of events in the high-\(p_T\) range, which may accentuate the role of the off-shell Higgs boson and any trace of BSM physics contained there.

- The running of \(m_t(\mu )\), too, depends on features of the deep Euclidean region related to the top Yukawa coupling, although it is difficult to observe the effects at the LHC because of the uncertainty in the measurement of the top-quark mass. This, however, can be taken up as a challenge at the high-luminosity runs, since (a) the top Yukawa coupling is related to the issue of naturalness, and (b) the precise relationship of the top pole mass with the running mass at some energy can reveal information on BSM contributions to the relevant renormalisation group equations.

- Since theoretical scenarios may exist just a little beyond the on-shell reach of the LHC, it is important to think not only about off-shell effects but also in terms of higher-dimensional effective operators which, after all, may turn out to be our major handles. The scale of such operators is reflected in high-\(p_T\) events, which should therefore be probed with great emphasis during the high-luminosity runs. High-\(p_T\) events can also serve as probes of top physics in the deep Euclidean region.

- Observations on dark matter indicate potent new physics options. This includes, as major components, theoretical scenarios with symmetries such as \(Z_2\), or those containing long-lived particles (either DM candidates themselves or others belonging to the dark sector). Since dark matter is a concrete reality, such scenarios should constitute high-priority search areas.

- On a more theoretical note, the issue of naturalness of the electroweak scale is still unresolved, especially since no evidence of supersymmetry has been found so far in regions of the parameter space with ‘sensible’ values of naturalness criteria. It is high time to investigate whether the high-luminosity data contain any clue on this in the non-strongly interacting sector.

2 Anomalies

Section editors: Andreas Crivellin, Oliver Fischer and Bruce Mellado

Contributions: Emanuele Bagnaschi, Geoffrey Beck, Benedetta Belfatto, Zurab Berezhiani, Monika Blanke, Bernat Capdevila, Bhupal Dev, Oliver Fischer, Martin Hoferichter, Matthew Kirk, Farvah Mahmoudi, Claudio Andrea Manzari, David Marzocca, Bruce Mellado, Antonio Pich and Luc Schnell

This section summarises a cohort of anomalies in the data that currently do not appear to be explained by the SM. See Ref. [10] for an up-to-date summary of all existing anomalies. The section is structured as follows: Sect. 2.1 gives an overview of flavour anomalies; Sect. 2.2 details the multi-lepton anomalies at the LHC; Sect. 2.3 describes the Higgs-like excess at 96 GeV; Sect. 2.4 discusses anomalies in the neutrino sector; finally, Sects. 2.5–2.7 touch upon anomalies in astrophysics, cosmology and ultra-high-energy cosmic rays, respectively. The implications of the anomalies for theoretical scenarios need further studies, which are often carried out by theorists. Such studies of, for example, the flavour anomalies, the multi-lepton anomalies and the Higgs-like excesses require the availability of digital results, which can be accessed through frameworks such as HEPData, as discussed in Sect. 4.

2.1 Flavour anomalies

Intriguing indirect hints for BSM physics have accumulated in flavour observables in recent years:

- Semi-leptonic bottom quark decays (\(b\rightarrow s\ell ^+\ell ^-\));
- Tauonic B meson decays (\(b\rightarrow c\tau \nu \));
- The anomalous magnetic moment of the muon (\(a_\mu \));
- The Cabibbo angle anomaly (CAA);
- Non-resonant di-electrons (\(q{{\bar{q}}} \rightarrow e^+e^-\));
- The difference of the forward–backward asymmetry in \(B\rightarrow D^*\mu \nu \) vs \(B\rightarrow D^*e\nu \) (\(\Delta A_{\mathrm{FB}}\));
- Low-energy lepton flavour universality violation (LFUV) in the charged current, including leptonic tau decays (\(\tau \rightarrow \mu \nu \nu \)).

Interestingly, all these observables admit an interpretation in terms of LFUV, i.e., NP that distinguishes between muons, electrons and tau leptons. While some of the anomalies are by construction measures of LFUV, the other observables can also be interpreted in this context (see Fig. 1). This unified view suggests a common origin of the anomalies in terms of BSM physics, which reinforces the case for LFUV with important theoretical and experimental implications. In the following, we will review these flavour anomalies and related processes.

2.1.1 \(b\rightarrow s\ell ^+\ell ^-\)

Consistent hints of New Physics have been observed in semileptonic B-meson decays involving \(b\rightarrow s\ell ^+\ell ^-\) transitions. Different experimental collaborations at the LHC, with LHCb playing the leading role, and the Belle experiment have reported deviations from SM expectations at the 2–\(3\sigma \) level in several channels mediated by these transitions. The most relevant discrepancies include observables characterising the \(B^0\rightarrow K^{*0}\mu ^+\mu ^-\) [11] and \(B^+\rightarrow K^{*+}\mu ^+\mu ^-\) [12] decay distributions, in particular the so-called \(P_5^\prime \) observable in two adjacent anomalous bins in the low-\(q^2\) region,

the \(R_K\) [13] and \(R_{K^*}\) [14] ratios, defined as [15]

which measure LFUV in the \(B\rightarrow K\ell ^+\ell ^-\) and \(B\rightarrow K^*\ell ^+\ell ^-\) modes,

and the \(B_s \rightarrow \phi \mu ^+ \mu ^-\) branching ratio [16, 17],

In addition, the branching ratio of the leptonic decay \(B_s\rightarrow \mu ^+\mu ^-\) shows some tension with respect to its SM prediction:

where the quoted value corresponds to the average of the latest LHCb measurement [18, 19] with the results from CMS [20] and ATLAS [21] (see Refs. [22,23,24,25] for further details).
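For reference, the LFU ratios named above are conventionally defined as ratios of branching fractions integrated over a given range of the dilepton invariant mass squared \(q^2\). The form below is the standard textbook definition, written here as a reminder; the bin limits \(q^2_{\min }\) and \(q^2_{\max }\) depend on the measurement and are left symbolic:

\[
R_{K^{(*)}}\big[q^2_{\min },q^2_{\max }\big] = \frac{\displaystyle \int _{q^2_{\min }}^{q^2_{\max }} dq^2\, \frac{d\,\text {BR}(B\rightarrow K^{(*)}\mu ^+\mu ^-)}{dq^2}}{\displaystyle \int _{q^2_{\min }}^{q^2_{\max }} dq^2\, \frac{d\,\text {BR}(B\rightarrow K^{(*)}e^+e^-)}{dq^2}}
\]

Because hadronic uncertainties largely cancel between numerator and denominator, a value significantly different from the SM expectation points to a difference between the muonic and electronic modes rather than to a mismodelled form factor.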

These tensions, together with the discrepancies observed in \(b\rightarrow c\ell \nu \) modes, see Sect. 2.1.2, are commonly referred to in the literature as “B anomalies”. The current situation is exceptional since all deviations in \(b\rightarrow s\ell ^+\ell ^-\) channels are consistent with a deficit in muonic modes and form coherent patterns in global fits, some of which are preferred over the SM with a very high significance. State-of-the-art global analyses of \(b\rightarrow s\ell ^+\ell ^-\) data can be found in Refs. [22,23,24,25,26,27,28,29]. These global fits differ in the treatment of theoretical uncertainties, with the most important differences being the choice of form factors [30,31,32], the parametrisation used to include factorisable and non-factorisable hadronic uncertainties [33,34,35,36] and the approach used in the statistical analysis itself [37,38,39,40,41,42].

However, all the above-mentioned global analyses share the same model-independent framework based on the effective Hamiltonian of the Weak Effective Theory (WET), in which heavy degrees of freedom with characteristic scales above the W boson mass – including any potential heavy new particles – are integrated out into short-distance Wilson coefficients \({\mathcal {C}}_i\),

Even though NP could generate further effective operators with structures not present in the SM, because of the processes included in the global fits, most analyses focus their attention on the electromagnetic and semileptonic operators (including their chirally-flipped counterparts; see Footnote 2):

where \(\ell =\mu \), e, \(P_{L,R}=(1\mp \gamma _5)/2\) and \(m_b=m_b(\mu _b)\) is the running b-quark mass in the \(\overline{\text {MS}}\) scheme at the characteristic scale of the process \(\mu _b\sim 4.8\,\text {GeV}\). The SM values of the relevant Wilson coefficients are \({\mathcal {C}}^\text {SM}_{7,9\ell ,10\ell }(\mu _b)=-0.29,4.07,-4.31\) and \({\mathcal {C}}^\text {SM}_{7^\prime ,9^\prime \ell ,10^\prime \ell }(\mu _b)\sim 0\), for both \(\ell =\mu \) and \(\ell =e\). In this language, NP effects are parametrised as shifts from their SM values \({\mathcal {C}}_{i\ell } = {\mathcal {C}}_{i\ell }^\text {SM}+{\mathcal {C}}_{i\ell }^\text {NP}\).
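A commonly used form of the effective Hamiltonian and operator basis described above is sketched below. The overall normalisation and sign conventions vary between the cited analyses, so this should be read as one representative convention that is consistent with the definitions of \(P_{L,R}\) and \(m_b\) given in the text, and not necessarily the exact form used in each fit. Here \(G_F\) is the Fermi constant, \(V_{tb}\) and \(V_{ts}\) are CKM matrix elements, e is the electromagnetic coupling and \(F^{\mu \nu }\) is the photon field strength:

\[
{\mathcal {H}}_\text {eff} = -\frac{4G_F}{\sqrt{2}}\,V_{tb}V_{ts}^{*}\sum _i {\mathcal {C}}_i\,{\mathcal {O}}_i ,
\]

\[
\begin{aligned}
{\mathcal {O}}_{7} &= \frac{e}{16\pi ^2}\,m_b\,({\bar{s}}\sigma _{\mu \nu }P_R b)F^{\mu \nu }, \\
{\mathcal {O}}_{9\ell } &= \frac{e^2}{16\pi ^2}\,({\bar{s}}\gamma _\mu P_L b)({\bar{\ell }}\gamma ^\mu \ell ), \\
{\mathcal {O}}_{10\ell } &= \frac{e^2}{16\pi ^2}\,({\bar{s}}\gamma _\mu P_L b)({\bar{\ell }}\gamma ^\mu \gamma _5\ell ),
\end{aligned}
\]

with the chirally-flipped operators \({\mathcal {O}}_{7^\prime }\), \({\mathcal {O}}_{9^\prime \ell }\) and \({\mathcal {O}}_{10^\prime \ell }\) obtained by exchanging \(P_L\leftrightarrow P_R\) in the quark current.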

Since no deviations have been observed in channels with electrons in the final state, NP contributions to the electronic Wilson coefficients are assumed to be negligible. Then, the most up-to-date global fits to the muonic coefficients reveal the vectorial \({\mathcal {C}}_{9\mu }^\text {NP}\) and left-handed \({\mathcal {C}}_{9\mu }^\text {NP}=-{\mathcal {C}}_{10\mu }^\text {NP}\) structures as the favoured NP scenarios according to current \(b\rightarrow s\ell ^+\ell ^-\) data [22,23,24,25,26,27]. Additionally, restricted fits to LFUV observables and \(B_s\rightarrow \mu ^+\mu ^-\) show a NP signal in \({\mathcal {C}}_{10\mu }^\text {NP}\) with high significance [23,24,25]. Also, scenarios including right-handed couplings (RHC) have recently been found to provide very competitive descriptions of the data [22, 45]. The statistical significance of these scenarios, as measured by the so-called \(\text {Pull}_\text {SM}\), ranges from roughly \(5\sigma \) to well above \(5\sigma \), depending on the particular details of each analysis.
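The \(\text {Pull}_\text {SM}\) is not defined in this section. In the global-fit literature it is commonly obtained by converting the \(\chi ^2\) difference between the SM point and the best-fit point into a p-value for n fitted Wilson coefficients, and then expressing that p-value in Gaussian standard deviations. The following is a minimal Python sketch under that assumption; the function names and the two-sided Gaussian convention are illustrative choices, not taken from the cited analyses.

```python
import math
from statistics import NormalDist


def chi2_survival(delta_chi2: float, ndof: int) -> float:
    """Upper-tail probability of a chi-squared variable with ndof degrees of freedom.

    Uses the closed forms for ndof = 1 and ndof = 2 and the recurrence
    Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1) for larger ndof.
    """
    if delta_chi2 < 0 or ndof < 1:
        raise ValueError("delta_chi2 must be >= 0 and ndof must be >= 1")
    y = delta_chi2 / 2.0
    if ndof % 2 == 1:
        q, a = math.erfc(math.sqrt(y)), 0.5   # ndof = 1
    else:
        q, a = math.exp(-y), 1.0              # ndof = 2
    while 2 * a < ndof:
        q += y**a * math.exp(-y) / math.gamma(a + 1.0)
        a += 1.0
    return q


def pull_sm(delta_chi2: float, ndof: int) -> float:
    """Express the p-value of delta_chi2 (ndof fitted parameters) in two-sided Gaussian sigmas."""
    p_value = chi2_survival(delta_chi2, ndof)
    # inv_cdf is evaluated in the lower tail to keep precision for very small p-values.
    return -NormalDist().inv_cdf(p_value / 2.0)
```

For a one-parameter fit the pull reduces to \(\sqrt{\Delta \chi ^2}\), so `pull_sm(25.0, 1)` evaluates to approximately 5. The same \(\Delta \chi ^2\) with two fitted coefficients gives a smaller pull, which is one reason why pulls quoted for scenarios of different dimensionality are not directly comparable.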

Notice that the NP scenarios discussed so far are all based on the underlying assumption of LFUV NP, where the NP is entirely attached to the muons. However, some analyses have also started exploring scenarios with lepton flavour universal (LFU) NP effects in addition to LFUV contributions to muons only [26, 45,46,47]. In order to account for these contributions, one possible parametrisation reads,

with \(i=9^{(\prime )}\), \(10^{(\prime )}\). The basis redefinition in Eq. (11) provides a new description of the data with a concrete NP structure, namely, that \(b\rightarrow s\ell ^+\ell ^-\) transitions get a common LFU NP contribution for all charged leptons (electrons, muons and tau leptons), opening new directions and extending the possible interpretations of the global fits. Interestingly, when allowing for LFU NP, the scenario \(({\mathcal {C}}_{9\mu }^\mathrm{V}=-{\mathcal {C}}_{10\mu }^{\mathrm{V}},{\mathcal {C}}_{9}^{\mathrm{U}})\) with an \(SU(2)_L\) LFUV structure emerges as an acceptable NP solution [22, 26]. Also, scenarios with \({\mathcal {C}}_{10(')}^{\mathrm{U}}\), like \(({\mathcal {C}}_{9\mu }^\mathrm{V},{\mathcal {C}}_{10}^{\mathrm{U}})\) and \(({\mathcal {C}}_{9\mu }^\mathrm{V},{\mathcal {C}}_{10'}^{\mathrm{U}})\), get selected with very high significance [22, 45].
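The split into a universal and a muon-specific piece described here can be written compactly as follows. This is a sketch reconstructed from the description in the text, using the superscripts V (LFU-violating) and U (LFU) that label the scenarios quoted above, and assuming that the universal shift is identical for electrons, muons and tau leptons:

\[
{\mathcal {C}}_{i\mu }^{\mathrm{NP}} = {\mathcal {C}}_{i\mu }^{\mathrm{V}} + {\mathcal {C}}_{i}^{\mathrm{U}}, \qquad {\mathcal {C}}_{ie}^{\mathrm{NP}} = {\mathcal {C}}_{i\tau }^{\mathrm{NP}} = {\mathcal {C}}_{i}^{\mathrm{U}} .
\]

With this split, LFUV ratios such as \(R_K\) are sensitive mainly to \({\mathcal {C}}_{i\mu }^{\mathrm{V}}\), whereas observables measured in a single lepton flavour, such as \(P_5^\prime \), probe the sum \({\mathcal {C}}_{i\mu }^{\mathrm{V}}+{\mathcal {C}}_{i}^{\mathrm{U}}\).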

It is also important to discuss the implications of the global \(b\rightarrow s\ell ^+\ell ^-\) fits on popular NP models. Now we briefly review those that are able to generate the preferred structures suggested by the global fits.

\({\varvec{\mathcal {C}}}_{{\textbf {9}}}^{{\textbf {NP}}}\): \(Z^\prime \) models with vectorial couplings to leptons preferably yield \({\mathcal {C}}_{9\mu }^{\mathrm{NP}}\)-like solutions in order to avoid gauge anomalies. In this context, \(L_\mu -L_\tau \) models [48,49,50,51,52] are popular since they do not generate effects in electron channels. Fits including \(R_{K^*}\) are also very favourable to models predicting \({\mathcal {C}}_{9\mu }^{\mathrm{NP}}=-3{\mathcal {C}}_{9e}^\mathrm{NP}\) [53]. Concerning leptoquarks (LQs), a \({\mathcal {C}}_{9\mu }^{\mathrm{NP}}\) solution can only be generated by adding two scalar (an \(SU(2)_L\) triplet and an \(SU(2)_L\) doublet with \(Y=7/6\)) or two vector representations (an \(SU(2)_L\) singlet with \(Y=2/3\) and an \(SU(2)_L\) doublet with \(Y=5/6\)).

\({\varvec{\mathcal {C}}}_{{\textbf {9}}{\varvec{\mu }}}^{{\textbf {NP}}} = -{\varvec{\mathcal {C}}}_{{\textbf {10}}{\varvec{\mu }}}^{{\textbf {NP}}}\): This pattern can be achieved in \(Z^\prime \) models with loop-induced couplings [54] or with heavy vector-like fermions [55, 56]. Regarding LQ models, here a single representation (the scalar \(SU(2)_L\) triplet or the vector \(SU(2)_L\) singlet with \(Y=2/3\)) can generate a \({\mathcal {C}}_{9\mu }^{\mathrm{NP}}=-{\mathcal {C}}_{10\mu }^{\mathrm{NP}}\) solution [57,58,59,60,61,62,63]. This pattern can also be obtained in models with loop contributions from three heavy new scalars and fermions [64,65,66,67,68] and in composite Higgs models [69].

RHC: with a value of \(R_K\) closer to one, scenarios with right-handed currents, namely \({\mathcal {C}}_{9\mu }^\mathrm{NP}=-{\mathcal {C}}_{9^\prime \mu }\), \(({\mathcal {C}}_{9\mu }^{\mathrm{NP}}, {\mathcal {C}}_{9'\mu })\) and \(({\mathcal {C}}_{9\mu }^{\mathrm{NP}}, {\mathcal {C}}_{10'\mu })\), seem to emerge. The first two scenarios are naturally generated in \(Z^\prime \) models with certain assumptions on its couplings to right-handed and left-handed quarks, as was shown in Ref. [48] within the context of a gauged \(L_\mu -L_\tau \) symmetry with vector-like quarks. One could also obtain \({\mathcal {C}}_{9\mu }^{\mathrm{NP}}=-{\mathcal {C}}_{9^\prime \mu }\) by adding a third Higgs doublet with opposite U(1) charge to the model of Ref. [51]. On the other hand, generating the aforementioned contribution in LQ models requires one to add four scalar representations or three vector ones.

\(({\varvec{\mathcal {C}}}_{{\textbf {9}}{\varvec{\mu }}}^{{\textbf {V}}}=-{\varvec{\mathcal {C}}}_{{\textbf {10}}{\varvec{\mu }}}^{{\textbf {V}}}, {\varvec{\mathcal {C}}}_{{\textbf {9}}}^{{\textbf {U}}})\): this scenario can be realised via off-shell photon penguins in a LQ model explaining also \(b\rightarrow c\tau \nu \) data [70] (see Sect. 2.1.8). Remarkably, as we will discuss below, a NP contribution with this structure allows for a model-independent combined explanation of \(b\rightarrow s\ell ^+\ell ^-\) and \(b\rightarrow c\tau \nu \) data with very high statistical significance [22, 45, 70].

\({\varvec{\mathcal {C}}}_{{\textbf {10}}(^{\prime })}^{{\textbf {U}}}\): NP solutions with \({\mathcal {C}}_{10(')}^{\mathrm{U}}\) (see scenarios 9–13 from Refs. [22, 45]) arise naturally in models with modified Z couplings. In this case, \({\mathcal {C}}_{9(')}^{\mathrm{U}}\) contributions are also generated but to a good approximation can be neglected. The \(({\mathcal {C}}_{9\mu }^\mathrm{V}=-{\mathcal {C}}_{10\mu }^{\mathrm{V}},{\mathcal {C}}_{10}^{\mathrm{U}})\) pattern also occurs in Two-Higgs-Doublet models [71]. For scenarios \(({\mathcal {C}}_{9\mu }^{\mathrm{V}},{\mathcal {C}}_{10}^\mathrm{U})\) and \(({\mathcal {C}}_{9\mu }^{\mathrm{V}},{\mathcal {C}}_{10'}^{\mathrm{U}})\), one can also invoke models with vector-like quarks, where modified Z couplings are even induced at tree-level. The LFU effect in \({\mathcal {C}}_{10(')}^{\mathrm{U}}\) can be accompanied by a \({\mathcal {C}}_{9,10(')}^{\mathrm{V}}\) effect from \(Z^\prime \) exchanges [72]. Vector-like quarks with the quantum numbers of right-handed down quarks (left-handed quark doublets) generate effects in \({\mathcal {C}}_{10}^{\mathrm{U}}\) and \({\mathcal {C}}_{9'}^{\mathrm{V}}\) (\({\mathcal {C}}_{10(')}^{\mathrm{U}}\) and \({\mathcal {C}}_{9}^{\mathrm{V}}\)) for a \(Z^\prime \) boson with vector couplings to muons [72].

Given that LQs should possess very small couplings to electrons in order to avoid dangerous effects in \(\mu \rightarrow e\gamma \), they naturally violate LFU [73]. While \(Z^\prime \) models can easily accommodate LFUV data [74], variants based on the assumption of only LFU NP [75, 76] are now disfavoured. The same is true if one aims at explaining \(P_5'\) via NP in four-quark operators leading to a NP (\(q^2\)-dependent) contribution from charm loops [77].

Finally, we further discuss the scenario \(({\mathcal {C}}_{9\mu }^\mathrm{V}=-{\mathcal {C}}_{10\mu }^{\mathrm{V}},{\mathcal {C}}_{9}^{\mathrm{U}})\) and how its structure allows for a model-independent connection between the \(b\rightarrow s\ell ^+\ell ^-\) anomalies and the deviations in \(b\rightarrow c\tau \nu \) transitions [78]. This connection arises in the SMEFT scenario where \({\mathcal {C}}^{(1)}={\mathcal {C}}^{(3)}\) expressed in terms of gauge-invariant dimension-6 operators [79, 80]. The operator involving third-generation leptons explains \(R_{D^{(*)}}\) and the one involving the second generation gives a LFUV effect in \(b\rightarrow s\mu ^+\mu ^-\) processes. The constraint from \(b\rightarrow c\tau \nu \) and \(SU(2)_L\) invariance leads to large contributions enhancing \(b\rightarrow s\tau ^+\tau ^-\) processes [80], whereas the mixing into \(\mathcal{O}_{9\ell }\) generates \({\mathcal {C}}_{9}^{\mathrm{U}}\) at \(\mu =m_b\) [70]. Therefore, this NP structure correlates \({\mathcal {C}}_9^{\mathrm{U}}\) and \(R_{D^{(*)}}\) in the following way [70, 80]:

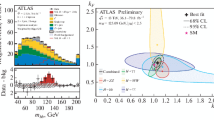

where \(\Lambda \) is the typical scale of NP involved. In Fig. 2, we show the global fit of the pattern \(({\mathcal {C}}_{9\mu }^{\mathrm{V}}=-{\mathcal {C}}_{10\mu }^\mathrm{V},{\mathcal {C}}_{9}^{\mathrm{U}})\) with and without the additional input on \(R_{D^{(*)}}\) from Ref. [78], taking the scale \(\Lambda =2\) TeV. This connection between neutral and charged anomalies is remarkable as it offers a NP solution that is able to accommodate both sets of data simultaneously, and hence one finds a very high \(\text {Pull}_\text {SM}\) of 8.1\(\sigma \) for the combined fit [22].

Fig. 2 Preferred regions at the 1, 2 and 3\(\,\sigma \) level (green) in the \(({\mathcal {C}}_{9\mu }^\mathrm{V}=-{\mathcal {C}}_{10\mu }^{\mathrm{V}},\,{\mathcal {C}}_{9}^{\mathrm{U}})\) plane from \(b\rightarrow s\ell ^+\ell ^-\) data. The red contour lines show the corresponding regions once \(R_{D^{(*)}}\) is included in the fit (for \(\Lambda =2\) TeV). The horizontal blue (vertical yellow) band is consistent with \(R_{D^{(*)}}\) (\(R_{K}\)) at the \(2\,\sigma \) level and the contour lines show the predicted values for these ratios.

2.1.2 Tauonic B-meson decays

In addition to the neutral-current \(b\rightarrow s\ell ^+\ell ^-\) transitions discussed above, charged-current \(b\rightarrow c\tau \nu \) data also exhibit tensions with the SM predictions. Of particular interest are the lepton flavour universality (LFU) ratios

for which measurements from BaBar [81, 82], Belle [83,84,85,86] and LHCb [87,88,89] exist, see Fig. 3. The latest HFLAV average combining these data [90]

deviates by \(3.1\sigma \) from the SM prediction. Furthermore, the data for the analogous ratio \(R(J/\psi )\) also seem to hint at an enhancement relative to the SM [91].
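For reference, the LFU ratios discussed in this paragraph are conventionally defined as the ratio of the tauonic to the light-lepton branching fraction. The form below is the standard definition; whether the denominator uses electrons, muons or their average depends on the experiment and is treated here as an assumption:

\[
R(D^{(*)}) = \frac{\text {BR}(B\rightarrow D^{(*)}\tau \nu )}{\text {BR}(B\rightarrow D^{(*)}\ell \nu )}, \qquad \ell = e,\ \mu .
\]

As for \(R_{K^{(*)}}\), the dependence on CKM elements and much of the form-factor uncertainty cancels in the ratio, which makes these observables clean probes of NP coupling preferentially to tau leptons.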

Fig. 3 Experimental results for the LFU ratios \(R(D^{(*)})\) and their average, provided by the HFLAV collaboration. The SM prediction is indicated by the black cross. From Ref. [90].

NP contributions to \(b\rightarrow c\tau \nu \) transitions can be parametrised by the Wilson coefficients \(C_i\) in the effective Hamiltonian

assuming the absence of light right-handed neutrinos. Here \(O_{V}^L\) is the left-handed current-current operator present already in the SM, \(O_{S}^L\) and \(O_{S}^R\) are the left- and right-handed scalar operators, and \(O_T\) is the tensor operator, as defined, e.g., in Ref. [92].
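A representative form of this effective Hamiltonian, consistent with the operator names and chiralities listed above, is sketched below. The normalisation, in which the SM corresponds to \(C_V^L=C_S^L=C_S^R=C_T=0\), is an assumption chosen to match the later statement that \(C_V^L\ne 0\) is a shift of the SM contribution; Ref. [92] should be consulted for the exact convention:

\[
{\mathcal {H}}_\text {eff} = 2\sqrt{2}\,G_F V_{cb}\Big [(1+C_V^L)\,O_V^L + C_S^R\,O_S^R + C_S^L\,O_S^L + C_T\,O_T\Big ],
\]

\[
\begin{aligned}
O_V^L &= ({\bar{c}}\gamma ^\mu P_L b)({\bar{\tau }}\gamma _\mu P_L\nu _\tau ), &\quad O_S^R &= ({\bar{c}}P_R b)({\bar{\tau }}P_L\nu _\tau ), \\
O_S^L &= ({\bar{c}}P_L b)({\bar{\tau }}P_L\nu _\tau ), &\quad O_T &= ({\bar{c}}\sigma ^{\mu \nu }P_L b)({\bar{\tau }}\sigma _{\mu \nu }P_L\nu _\tau ).
\end{aligned}
\]

All four operators contain a left-handed neutrino, which reflects the assumption that no light right-handed neutrinos are present.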

Global fits to the data, including polarisation observables in \(B\rightarrow D^*\tau \nu \), have been performed in Refs. [47, 93,94,95]. From the results of these fits, several simplified NP models can be identified as potential candidates for an explanation of the \(b\rightarrow c\tau \nu \) anomalies. Due to the rather large size of the required NP contribution with respect to the SM, in all cases new particles contribute to \(b\rightarrow c\tau \nu \) at the tree level, and for the sake of simplicity we restrict our attention to models with a single new state:

Charged \(W'\) bosons: A good fit to the available \(b\rightarrow c\tau \nu \) data is obtained by a shift \(C_V^L\ne 0\) of the SM \((V-A)\otimes (V-A)\) contribution, which could originate from a heavy charged \(W'\) gauge boson coupling to left-handed quarks and leptons [96, 97]. This model, however, is challenged by LHC high-\(p_T\) di-\(\tau \) data [98] as well as by precision measurements of Z-pole observables [99].

Charged Higgs boson \(H^\pm \): This scenario [100,101,102,103], leading to non-zero \(C_S^{L,R}\), currently provides the best fit to the low-energy \(b\rightarrow c\tau \nu \) data, as – in contrast to the other simplified models – it allows one to accommodate the measured \(D^*\) polarisation, \(F_L(D^*)\) [104], at the \(1\sigma \) level. However, this solution is in tension with the LHC mono-\(\tau \) data [105], and it induces a large branching ratio \(\text {BR}(B_c\rightarrow \tau \nu )>50\%\). While no direct experimental bound on the latter exists, upper limits of 30% [106] and even 10% [107] have been estimated in the literature. On the other hand, a critical reassessment reached the conclusion that values even as large as 60% cannot be excluded at present [92, 95]. A recent update on the SM prediction of the \(B_c\) lifetime supports the latter reasoning [108].

Scalar leptoquarks: The scalar \(SU(2)_L\)-singlet leptoquark \(S_1\) [109,110,111], giving rise to the scenario \(C_V^L, C_S^L=-4C_T\ne 0\), offers a good fit to the \(b\rightarrow c\tau \nu \) data, predicts only modest contributions to the decay \(B_c\rightarrow \tau \nu \), and passes the mono-\(\tau \) test. The scalar \(SU(2)_L\)-doublet leptoquark \(S_2\), inducing \(C_S^L=4C_T\), on the other hand, can be brought in agreement with the \(b\rightarrow c\tau \nu \) data only in the presence of complex, i.e., CP-violating couplings [112]. The latter scenario predicts a significant contribution to \(\text {BR}(B_c\rightarrow \tau \nu )\sim 20\%\), and its best-fit point is on the verge of being tested by the mono-\(\tau \) searches. There are also stringent LHC constraints on these LQs from their pair-production and t-channel mediated dilepton processes [113, 114].

Vector leptoquark: Finally, an \(SU(2)_L\)-singlet vector leptoquark \(U_1\) [58, 60, 61, 115,116,117,118,119] also provides a good fit to the \(b\rightarrow c\tau \nu \) data, both with only left-handed couplings (\(C_V^L \ne 0\)) and in the presence of an additional small right-handed \(b\tau \) coupling (\(C_V^L, C_S^R \ne 0\)). As in the case of the scalar \(SU(2)_L\)-singlet leptoquark, the contributions to \(B_c\rightarrow \tau \nu \) are small and the model evades the current LHC mono-\(\tau \) searches. One of the most stringent constraints on models with an \(SU(2)_L\)-singlet vector leptoquark stems instead from LHC searches for colour-octet resonances, which are often introduced together with the leptoquark in UV-complete models [120,121,122].

In addition to these simplified models parametrised by the effective interactions in Eq. (15), models with light right-handed neutrinos have been examined in the literature [123,124,125,126,127]. While it is possible to accommodate the low-energy \(b\rightarrow c\tau \nu \) data in this case, a very large NP contribution is required due to the absence of interference with the SM contribution. Consequently the constraints from direct LHC searches, particularly mono-\(\tau \), tend to be even more severe.

To further disentangle the NP structure at work, a major role will be played by the measurement of differential and angular observables [92, 128,129,130,131,132,133], such as the \(D^*\) and \(\tau \) polarisations \(F_L(D^*)\) and \(P_\tau (D^{(*)})\), whose correlations turn out to discriminate well between the different scenarios. To fully exploit their model-discriminating potential, both precise measurements and a better theoretical understanding of the underlying form factors are necessary. A measurement of the baryonic LFU ratio

\[ R(\Lambda _c) = \frac{\text {BR}(\Lambda _b\rightarrow \Lambda _c\tau \nu )}{\text {BR}(\Lambda _b\rightarrow \Lambda _c\ell \nu )} \]

will instead provide an experimental consistency check for the \(R(D^{(*)})\) anomaly, thanks to a model-independent sum-rule [92] relating \(R(\Lambda _c)\) to \(R(D)\) and \(R(D^*)\), with the current prediction [95]

In addition to the constraints mentioned above, further tensions may arise in concrete UV completions. For example, in certain models electroweak \(SU(2)_L\) symmetry implies large contributions to the decays \(B\rightarrow K^{(*)}\nu {\bar{\nu }}\), \(B_s\rightarrow \tau ^+\tau ^-\) and \(B\rightarrow K^{(*)}\tau ^+\tau ^-\) [61, 134], and significant rates for \(\Upsilon \rightarrow \tau ^+\tau ^-\) or \(\psi \rightarrow \tau ^+\tau ^-\) are expected [135]. In summary it is fair to say that stringent constraints on all NP scenarios for the \(R(D^{(*)})\) anomaly exist, challenging a full resolution of the latter in the context of NP.

2.1.3 Anomalous magnetic moments of charged leptons

Ever since Schwinger’s famous prediction \(a_\ell =(g-2)_\ell /2=\alpha /(2\pi )\) [136] (and its experimental verification [137]), the anomalous magnetic moments of the electron and muon have been critical precision tests of the SM. For the electron, the current best direct measurement [138]

can be contrasted with its SM prediction once independent input for the fine-structure constant \(\alpha \) is specified. With the mass-independent 4-loop QED coefficient known semi-analytically [139], the dominant uncertainties now arise from the numerical evaluation of the 5-loop coefficient [140] and hadronic corrections [141], both of which enter at the level of \(10^{-14}\) (for the 5-loop QED coefficient there is a \(4.8\sigma \) tension between Refs. [140, 142] regarding the contribution of diagrams without closed lepton loops). However, the current most precise measurements of \(\alpha \) in atom interferometry, using Cs [143] and Rb [144] atoms, respectively, differ by \(5.4\sigma \),

resulting in a difference to Eq. (18) of \(-2.5\sigma \) and \(+1.6\sigma \).

The world average of the muon \(g-2\) is determined by the Run 1 results from the Fermilab experiment [145,146,147,148] and the Brookhaven measurement [149]

with a combined precision of \(0.35\,\text {ppm}\). Comparison with the current SM prediction [150]

then reveals a \(4.2\sigma \) tension. Experimental efforts to corroborate or refute this tension are underway at subsequent runs at Fermilab [151] and at J-PARC [152], with a precision goal of \(0.14\,\text {ppm}\) and \(0.45\,\text {ppm}\), respectively, and the J-PARC experiment pioneering a new experimental technique that does not rely on the magic momentum in a storage ring, see Ref. [153] for a more detailed comparison of the two methods. The SM prediction in Eq. (21), currently at \(0.37\,\text {ppm}\), represents a coherent theory effort organised in the Muon \(g-2\) Theory Initiative [150], and is mainly based on the underlying work from Refs. [140, 141, 154,155,156,157,158,159,160,161,162,163,164,165,166,167,168,169,170,171]. The uncertainty is completely dominated by hadronic contributions, with hadronic vacuum polarisation (HVP) and hadronic light-by-light scattering (HLbL) at \(0.34\,\text {ppm}\) and \(0.15\,\text {ppm}\), respectively. Improvements on both HVP and HLbL will continue over the next years, including new \(e^+e^-\rightarrow \text {hadrons}\) data, lattice-QCD calculations at a similar level of precision, and direct input on space-like HVP from the proposed MUonE experiment [172, 173]. Some recent developments include the first lattice calculation of HVP reporting subpercent precision [174], with subsequent work exploring the consequences of the emerging \(2.1\sigma \) tension with the data-driven determination [175,176,177,178,179], new \(e^+e^-\rightarrow \pi ^+\pi ^-\) data from SND [180], improved radiative corrections [181], a lattice-QCD calculation of HLbL at a similar level of precision as the phenomenological evaluation [182], and work aimed at refining the subleading contributions to HLbL [183,184,185,186,187,188].
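The size of the tension and the relative precisions quoted above can be cross-checked with a few lines of arithmetic. The sketch below assumes the published world average \(a_\mu ^{\text {exp}} = 116592061(41)\times 10^{-11}\) and SM prediction \(a_\mu ^{\text {SM}} = 116591810(43)\times 10^{-11}\); these inputs are supplied here as assumptions, and the two uncertainties are treated as uncorrelated.

```python
import math

# Assumed inputs in units of 1e-11 (value, 1-sigma uncertainty); they are
# not quoted in the surrounding text and are supplied here for illustration.
A_MU_EXP = (116592061.0, 41.0)   # experimental world average
A_MU_SM = (116591810.0, 43.0)    # SM prediction


def compare(exp, sm):
    """Return (difference, combined uncertainty, significance in sigma)."""
    diff = exp[0] - sm[0]
    err = math.hypot(exp[1], sm[1])  # uncorrelated errors added in quadrature
    return diff, err, diff / err


delta, sigma, n_sigma = compare(A_MU_EXP, A_MU_SM)
ppm_exp = 1e6 * A_MU_EXP[1] / A_MU_EXP[0]   # relative precision of the measurement
ppm_sm = 1e6 * A_MU_SM[1] / A_MU_SM[0]      # relative precision of the prediction

print(f"difference = {delta:.0f}({sigma:.0f}) x 1e-11 -> {n_sigma:.1f} sigma")
print(f"precision: exp {ppm_exp:.2f} ppm, SM {ppm_sm:.2f} ppm")
```

Under these assumptions the difference is about \(251(59)\times 10^{-11}\), consistent with the \(4.2\sigma \) tension and the \(0.35\,\text {ppm}\) and \(0.37\,\text {ppm}\) precisions stated in the text.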

New Physics explanations The absolute value of the difference between measurement and theory prediction exceeds the size of the EW contribution of the SM. Therefore, some form of enhancement mechanism is required to explain the current \(4.2\sigma \) tension with BSM physics, and well-motivated scenarios do exist. One possibility is that NP involves heavy particles at or above the EW scale, with an enhanced chirality flip originating from an interaction of the new particles with the SM Higgs boson, with a coupling strength that is larger than the muon Yukawa. Depending on the model, this type of chiral enhancement allows for viable solutions for new particles with masses up to tens of TeV. Such an enhancement can be achieved in models with new scalars and fermions [189], with the MSSM being a specific example. Alternatively, the anomaly can be explained by new, light (or very light) weakly coupled states, such as axion-like particles (ALPs) or a dark photon \(Z_d\). For a more detailed overview of various models in light of the most recent measurement, we refer the reader to Ref. [190].

The Minimal Supersymmetric Standard Model One possible theoretical framework of NP above the electroweak scale is the MSSM. Here, the chiral enhancement is provided by the factor \(\tan \beta \equiv v_u/v_d\), where \(v_u\) and \(v_d\) are the vacuum expectation values of the two Higgs doublets of the model, \(H_u\) and \(H_d\) (which give mass to up-type and down-type fermions respectively). A large value of \(\tan \beta \approx 50\) can be motivated by top–bottom Yukawa coupling unification [191, 192], and thus this would provide a natural explanation for a large enhancement factor [193,194,195].

The leading MSSM contributions arise from one-loop diagrams involving a loop of either a neutralino and smuon (\(a^{{\tilde{\chi }}^0}_{\mu }\)) or a chargino and a sneutrino (\(a^{{\tilde{\chi }}^{\pm }}_{\mu }\)) (see the left plot of Fig. 4) [196,197,198,199,200,201,202,203] (footnote 3).

The relevant phenomenological question is how to account for relatively light SUSY particles (and explain \((g-2)_{\mu }\)) while meeting constraints from the LHC searches, DM phenomenology, and other observables. This question has been addressed in many studies incorporating LHC Run 2 limits [190, 223,224,225,226,227,228,229,230,231,232,233,234,235,236,237,238,239,240,241,242,243,244,245,246,247,248,249,250,251,252,253,254,255,256,257], with varying degrees of sophistication and diverse focuses (model building, collider, DM etc.). Furthermore, several global studies, trying to incorporate and correlate LHC Run 2 bounds with limits coming from different sectors, have been performed as well, both in the context of scenarios with universal and minimal SUSY breaking mechanisms [258,259,260,261], and for more phenomenologically oriented models [262, 263]. In the former case, LHC constraints push the mass of the SUSY states to the TeV scale, such that the \(\tan \beta \) enhancement is insufficient to provide a sufficiently large contribution to \(a_{\mu }\). For the latter scenarios, if sufficient freedom is allowed, SUSY contributions can still account for the observed discrepancy [263].

The possibility of explaining the anomaly in non-minimal supersymmetric extensions of the SM, in light of recent LHC constraints, has also been extensively studied in the literature, cf., e.g., Refs. [265,266,267,268,269,270,271,272,273,274,275,276,277,278,279,280,281,282,283].

Leptoquarks Another possible explanation, which also provides a viable solution to the hints for lepton flavour universality violation in semi-leptonic B decays, is given by leptoquarks. Indeed, two scalar LQ representations can provide a chiral enhancement factor of \(m_t/m_\mu \approx 1600\) [284,285,286,287,288,289]. This allows for a TeV scale explanation with perturbative couplings that is not in conflict with direct LHC searches. It is furthermore very predictive, as it involves, besides the LQ mass, only two couplings, whose product is fixed by requiring that \(g-2\) be explained. Therefore, correlated effects arise in \(h\rightarrow \mu ^+\mu ^-\) [289], and to a lesser extent in \(Z\rightarrow \mu ^+\mu ^-\) [288, 290], which can be used to (indirectly) distinguish the two LQ representations at future colliders. In fact, correlations with \(h\rightarrow \mu ^+\mu ^-\) and \(Z\rightarrow \mu ^+\mu ^-\) become of interest for a wide range of chirally enhanced scenarios, see Ref. [291].

Left: Plot from Ref. [249] showing the neutralino-smuon mass range still allowed after considering LHC constraints (shaded blue areas) for an MSSM \((g-2)_{\mu }\) solution at \(1\sigma \) (\(2\sigma \)) in orange (yellow), with a bino-like LSP, degenerate smuons and \(\tan \beta =30\). Right: Plot from Ref. [264] showing in green (light blue) the region preferred by the current \((g-2)_{\mu }\) (\((g-2)_{e}\)) measurement in the plane of the kinetic mixing parameter \(\epsilon \) and the mass of the dark \(Z_d\) mediator; the dotted and dashed lines correspond to the limits from the \(Q_{\mathrm {WEAK}}\) and APV experiments, respectively, while the solid lines represent their combinations; the \(\delta \) parameter is related to the Z–\(Z_d\) mass mixing

Interplay with the lepton EDMs A consequence of explanations via chiral enhancement concerns the phase of the Wilson coefficient of the dipole operator, which emerges as a free parameter. In particular, such scenarios in general violate the scaling expected from MFV [189, 292], which may result in a large muon EDM well above MFV projections derived from the limit on the electron EDM [293]. A large part of the parameter space in which the phase is \({\mathcal O}(1)\), which is entirely possible from an EFT perspective [189, 294, 295], could be covered by a proposed dedicated muon EDM experiment at PSI [296]. In fact, the corresponding non-MFV flavour structure is not at odds with naturalness arguments, since, in the limit of vanishing neutrino masses, lepton flavour is conserved, and thus it is possible to completely disentangle the muon from the electron EDM via a symmetry, meaning that no fine tuning is necessary. This could for example be achieved via an \(L_\mu -L_\tau \) symmetry [297,298,299], which can naturally give rise to the observed neutrino mixing matrix [300,301,302], and, even after its breaking, protects the electron EDM and \(g-2\) from BSM contributions [50].

Other solutions via heavy NP In addition, there exists a plethora of alternative BSM explanations of the muon \(g-2\), including composite or extra-dimensional models [303,304,305] or models with vector-like leptons [50, 68, 189, 291, 292, 306,307,308,309], including in addition a second Higgs doublet [310,311,312].

Also a pure 2HDM can provide a solution. This is possible either via Barr-Zee diagrams in the 2HDM-X [103, 190, 310, 313,314,315,316,317,318,319,320,321,322,323], where the external photon couples to an internal charged fermion loop, which then couples to the muon line via one of the new Higgs bosons and a photon (as in Fig. 4 right, but with the sfermion replaced by a fermion), or by including vector-like leptons. Alternatively, lepton flavour violating \(\tau \mu \) couplings can provide an \(m_\tau /m_\mu \) enhancement [71, 324,325,326], which is however strongly constrained by \(h\rightarrow \tau \mu \) searches.
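To put the chiral enhancement factors mentioned above side by side, the mass ratios \(m_t/m_\mu \) (leptoquarks) and \(m_\tau /m_\mu \) (flavour-violating \(\tau \mu \) couplings) can be evaluated directly. The masses used below are rounded reference values supplied as assumptions; they are not taken from the text.

```python
# Approximate masses in GeV (assumed, rounded reference values).
MASS_GEV = {"mu": 0.1056584, "tau": 1.77686, "top": 172.7}


def chiral_enhancement(heavy: str, light: str = "mu") -> float:
    """Mass ratio m_heavy / m_light that sets the size of the chirality flip."""
    return MASS_GEV[heavy] / MASS_GEV[light]


top_factor = chiral_enhancement("top")   # relevant for scalar leptoquarks
tau_factor = chiral_enhancement("tau")   # relevant for tau-mu couplings

print(f"m_t/m_mu   ~ {top_factor:.0f}")
print(f"m_tau/m_mu ~ {tau_factor:.1f}")
```

With these inputs the top-quark ratio comes out slightly above 1600, in line with the factor quoted for leptoquarks, while the \(\tau \) ratio is roughly two orders of magnitude smaller.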

Weakly coupled models with new light states Another possibility to explain the anomaly is to have weakly coupled new states (sometimes generally called Feebly Interacting Particles – FIPs) that can nevertheless provide a significant contribution owing to their small mass, a case for which a rich literature is available [264, 327,328,329,330,331,332,333]. Below we mention only a small selection of these studies. In the case of a spin-1 explanation, an example is given by dark Z models. In Fig. 5 we show a plot taken from Ref. [264] where a dark \(Z_d\) mediator model was studied. In the plot, the interplay with other current and future low-energy experiments is also shown. Concerning the possibility of an axion-like explanation, a recent paper [332] points out that this prospect poses some problems due to the fact that it seems to require an axion decay constant of \({\mathcal {O}}(10)\) GeV, which in turn implies the existence of new states at low scales, creating phenomenological issues which are not easily addressable. Another interesting possibility is given by ALP-portal explanations, where the ALP assumes the role of mediator with a dark sector [331]. The possibility of explaining simultaneously \((g-2)_{\mu }\) and the flavour anomalies using FIPs has been recently presented in Ref. [333].

2.1.4 Cabibbo angle anomaly

One of the fundamental predictions of the SM is the unitarity of the CKM matrix. In particular, for the first row of CKM elements it implies the condition

\[ \vert V_{ud} \vert ^2 + \vert V_{us} \vert ^2 + \vert V_{ub} \vert ^2 = 1 , \]

which in practice reduces to the Cabibbo universality (\(\vert V_{ud} \vert \approx \cos \theta _{12}\), \(\vert V_{us} \vert \approx \sin \theta _{12}\)), since the last entry is negligibly small: \(\vert V_{ub} \vert ^2 < 2\times 10^{-5}\) [334]. At present, with the improved control of theoretical uncertainties in the determinations of \(\vert V_{us} \vert \) and \(\vert V_{ud} \vert \), anomalies are emerging that could be a signal of NP at the TeV scale [335,336,337,338,339,340]. The present situation is shown in Fig. 6 and can be summarised as

The first two results A and B are extracted from data on kaon semileptonic \(K_{\ell 3}\) and leptonic \(K_{\mu 2}\) decays [334], respectively, using the most accurate lattice QCD calculations for the vector form factor \(f_+(0)\) and for the decay constants ratio \(f_K/f_\pi \) [341]. The precision of the third result C crucially depends on the knowledge of radiative corrections to be applied in \(\beta \) decays [342,343,344,345,346,347,348]. Using the value of the Fermi constant \(G_F = 1.1663787(6)\times 10^{-5}\) \(\hbox {GeV}^{-2}\) from muon decay [349], the value of \(\vert V_{ud} \vert \) is then obtained from the latest update of \(\mathcal{F}t\) values in superallowed \(0^+\)–\(0^+\) nuclear transitions, \(\mathcal{F}t=3072.24(1.85)\) s [350], which is, however, affected by additional nuclear corrections [350, 351]. The extracted value of \(\vert V_{ud} \vert \) is consistent with a determination via neutron decay (included in C above), based on the average of the neutron lifetimes measured by the eight latest experiments using the neutron trap method, \(\tau _n = 879.4(6)\) s, and employing the latest experimental average \(g_A=1.27625(50)\) for the axial coupling (footnote 4). Even when using the currently most precise measurements, \(\tau _n = 877.75(0.28)^{+0.22}_{-0.16}\) s [357] and \(g_A=1.27641(45)(33)\) [358], the determination from neutron decay is not yet competitive with superallowed \(\beta \) decays, but will provide a powerful independent determination in the future. In addition, note that there is also a deficit in the first-column CKM unitarity relation

less significant than the tension in the first row, but strengthening the possibility of NP related to the determination of \(V_{ud}\).
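The extraction of \(\vert V_{ud} \vert \) from superallowed decays described above can be illustrated numerically. The sketch below uses the standard master formula \(\vert V_{ud} \vert ^2 = K / [2 G_F^2\, \mathcal{F}t\, (1+\Delta _R^V)]\) with \(K/(\hbar c)^6 = 2\pi ^3 \hbar \ln 2/(m_e c^2)^5\). The values of \(G_F\) and \(\mathcal{F}t\) are those quoted in the text; \(\hbar \), \(m_e\) and in particular the inner radiative correction \(\Delta _R^V = 0.02467\) are assumptions inserted for illustration, and the latter is precisely the quantity on which the precision of result C depends.

```python
import math

HBAR_GEV_S = 6.582119569e-25   # reduced Planck constant in GeV s (assumed input)
M_E_GEV = 0.51099895e-3        # electron mass in GeV (assumed input)
G_F = 1.1663787e-5             # Fermi constant in GeV^-2 (quoted in the text)
FT_S = 3072.24                 # corrected Ft value in s (quoted in the text)
DELTA_R_V = 0.02467            # ASSUMED inner radiative correction (illustrative)


def k_constant() -> float:
    """K/(hbar c)^6 = 2 pi^3 hbar ln2 / m_e^5, in GeV^-4 s."""
    return 2.0 * math.pi**3 * math.log(2.0) * HBAR_GEV_S / M_E_GEV**5


def v_ud(ft: float = FT_S, delta_r: float = DELTA_R_V) -> float:
    """|V_ud| from the superallowed master formula."""
    return math.sqrt(k_constant() / (2.0 * G_F**2 * ft * (1.0 + delta_r)))


K = k_constant()
vud_value = v_ud()
# Sensitivity: shift of |V_ud| when the radiative correction moves by 1e-3
shift = v_ud(delta_r=DELTA_R_V + 1e-3) - vud_value

print(f"K = {K * 1e10:.2f} x 1e-10 GeV^-4 s")
print(f"|V_ud| = {vud_value:.5f}, shift per +0.001 in Delta_R^V = {shift:.5f}")
```

The last line makes the dependence explicit: a change of \(10^{-3}\) in the assumed radiative correction moves \(\vert V_{ud} \vert \) by roughly \(5\times 10^{-4}\), which is comparable to the size of the unitarity deficit under discussion.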

Updated plot of Ref. [335] for the data in Eq. (23) in the \(V_{us}\)–\(V_{ud}\) plane. The \(1\sigma \), \(2\sigma \) and \(3\sigma \) contours (green circles) of the fit are in tension with CKM unitarity (black solid curve). The projections on the \(\vert V_{us}\vert \) axis show the values \(\vert V_{us}\vert _B\) and \(\vert V_{us}\vert _C\) obtained from the unitarity condition

A fit of the data in Eq. (23) shows the deviation from unitarity at the \(3\sigma \) level (see Fig. 6). Alternatively, by employing unitarity, these data can be translated into three different results for the Cabibbo angle: \(\vert V_{us} \vert _A = 0.22326(55)\), \(\vert V_{us}\vert _B= 0.22535(45)\) and \(\vert V_{us}\vert _C= 0.2284(11)\), which are in obvious tension with each other. It has also been shown that the discrepancy between the \(K_{\ell 3}\) and \(K_{\mu 2}\) results \(\vert V_{us} \vert _A\) and \(\vert V_{us} \vert _B\) is unlikely to be due to radiative corrections [359, 360].
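The mutual tension between the three values of \(\vert V_{us} \vert \) quoted above can be quantified pairwise. The following sketch is a naive estimate that assumes the three uncertainties are uncorrelated and Gaussian, which is a simplification of the full fit.

```python
import math
from itertools import combinations

# (value, uncertainty) for the three determinations quoted in the text
V_US = {
    "A": (0.22326, 0.00055),   # from K_l3
    "B": (0.22535, 0.00045),   # from K_mu2
    "C": (0.2284, 0.0011),     # from beta decays
}


def pull(x, y):
    """Absolute difference in units of the quadrature-combined uncertainty."""
    return abs(x[0] - y[0]) / math.hypot(x[1], y[1])


pulls = {f"{a}-{b}": pull(V_US[a], V_US[b]) for a, b in combinations(V_US, 2)}

for name, value in pulls.items():
    print(f"{name}: {value:.1f} sigma")
```

Under this assumption the largest pairwise tension is between A and C (above \(4\sigma \)), while A versus B and B versus C each come out between \(2.5\sigma \) and \(3\sigma \).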

In fact, the three determinations of the Cabibbo angle do not necessarily correspond to quite the same values in the presence of NP. The amplitudes of the \(K_{\ell 3}\) and \(K_{\mu 2}\) decays are proportional to vector \({\overline{u}} \gamma ^\mu s\) and axial \({\overline{u}} \gamma ^\mu \gamma ^5 s\) currents, respectively. On the other hand, superallowed nuclear transitions are only sensitive to vector current \({\overline{u}} \gamma ^\mu d\), and the Fermi constant, which is fixed by the muon decay width, could also be affected by NP [361]. Therefore, the Cabibbo angle anomaly (CAA) can be a signal of BSM physics, which can also have other phenomenological implications and be related to other existing anomalies. The different possible explanations can be broadly grouped into three categories: modifications of the \(W\) quark vertex, modifications to the \(W\) lepton vertex, or effects in four-fermion contact interaction operators (footnote 5).

Modifying the \(W\) quark vertex The W couplings to quarks are modified after EW symmetry breaking by the two operators: \(Q_{\phi q}^{(3)ij}\) and \(Q_{\phi ud}^{ij}\,\) (see Ref. [79] for the definitions of the operators). The latter generates right-handed W-quark couplings and it has been shown that the interplay between \(C_{\phi ud}^{11}\,\) and \(C_{\phi ud}^{12}\,\) can solve the tension in the CAA [339] and bring the determinations of \(|V_{us}|\) from \(K_{\ell 3}\) and \(K_{\mu 2}\) into agreement. \(Q_{\phi q}^{(3)ij}\) generates left-handed W-quark couplings and the CAA requires \(C_{\phi q}^{(3)11}\approx -(9\,\text {TeV})^{-2}\). These operators can be induced via the mixing of SM quarks with vector-like quarks [335, 340, 363,364,365]. Note, however, that because of \(SU(2)\) invariance this operator also generates effects in \(\Delta F=2\) processes which would rule out this solution, unless these effects are suppressed by assuming that \(Q_{\phi q}^{(3)ij}\) respects a global \(U(2)^2\) flavour symmetry.

Modifying the W lepton vertex The SMEFT coefficient \(C_{\phi \ell }^{(3)}\) corresponds to modifications of the \(W\ell \nu \) and \(Z\ell \ell \) leptonic currents after EW symmetry breaking. This is also interesting for another reason: since this coefficient carries flavour indices, NP could be related to LFUV [336, 337], which ties in with many of the other anomalies discussed in this white paper. In order to explain the CAA, this coefficient must be approximately \(C_{\phi \ell }^{(3)22} \approx (9\,\text {TeV})^{-2}\) [366, 367].

There are four different vector-like leptons (VLLs), as well as an \(SU(2)_L\) triplet vector boson, that can be responsible for generating this operator, and hence explain the CAA. The phenomenology of VLLs for the CAA has been studied in detail [366,367,368,369] and the vector triplet idea has been examined in Refs. [366, 370].

For the VLLs, extra phenomenological consequences arise since the \(SU(2)\) singlet operator \(Q_{\phi \ell }^{(1)}\) is generated. This operator alters leptonic \(Z\) decays, which are strongly constrained by EW precision observables from LEP and the LHC. Such effects allow us to distinguish between the different models. For example, it has been shown that extending the SM with a single VLL [366, 371] leads to tensions between the region of parameter space favoured by the CAA and EW observables. Adding multiple new representations, each coupling to a single different SM lepton, can provide a better fit to data [367]. For the vector triplet boson, a minimal model leads to tensions with EW precision observables [366], which can be eased in a less minimal setup [370].

Four-fermion operators There are several four-fermion operators in the SMEFT that can affect the determination of the Fermi constant or directly alter semi-leptonic decays [361]. Starting with four-lepton operators, the severe constraints from the Michel parameter, muonium–anti-muonium oscillations and the upper bounds on LFV processes lead to the conclusion that the only viable solution to the CAA proceeds via a modification of the SM operator \(Q_{\ell \ell }^{2112}\) with a Wilson coefficient \(C_{\ell \ell }^{2112} \approx -(8\,\text {TeV})^{-2}\). Simple models generating this contribution via a singly charged scalar have been recently proposed in Refs. [372,373,374]. This option was also proposed in Ref. [335] via a generic flavour-changing boson (see also Ref. [375]), which can be induced by gauge bosons of chiral inter-family symmetry [376, 377]. All these possibilities lead to constructive interference with the SM in muon decay such that the Fermi constant of the Lagrangian \(G_F\) is smaller than the one measured from the muon lifetime. We note that while this type of solution resolves the tension between A/B determinations with C, it can only slightly alleviate the tension between A and B themselves and leads to additional tensions in the EW fit [361].

Concerning 2-quark–2-lepton operators, only \(Q_{\ell q}^{(3)1111}\) is able to give a sizable BSM effect in \(\beta \) decays via interference with the SM and the CAA requires \(C_{\ell q}^{(3)1111}\approx (11\,\text {TeV})^{-2}\). Possible extensions of the SM that induce this operator are LQs [365, 378] or a colour-neutral vector triplet [370]. It is also worth noting that the size required to explain the CAA is compatible with the one preferred by CMS searches for \(pp\rightarrow e^+e^-\), see Sect. 2.1.6. Finally, scalar interactions are typically negligible for first-generation fermions, while most severely constrained from processes that display chiral enhancement [379,380,381,382].
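To get a feeling for the size of the effects implied by the Wilson coefficients quoted in this subsection, one can form the dimensionless combination \(v^2 C\), with \(v = (\sqrt{2} G_F)^{-1/2}\) the electroweak vacuum expectation value. The sketch below is only an order-of-magnitude illustration: the exact relation between each coefficient and the shift in a given observable depends on the operator and on normalisation conventions, which are not specified here, and the dictionary labels are ad hoc names.

```python
import math

G_F = 1.1663787e-5                           # GeV^-2, value quoted in the text
V_GEV = (math.sqrt(2.0) * G_F) ** -0.5       # electroweak vev, about 246 GeV

# (sign, scale in TeV) read off from C = sign * (scale)^-2 as quoted in the text
COEFFICIENTS = {
    "C_phi_q^(3)11": (-1, 9.0),
    "C_phi_l^(3)22": (+1, 9.0),
    "C_ll^2112": (-1, 8.0),
    "C_lq^(3)1111": (+1, 11.0),
}


def dimensionless_size(sign: int, scale_tev: float) -> float:
    """Return v^2 * C for C = sign / scale^2 (scale converted to GeV)."""
    return sign * (V_GEV / (scale_tev * 1e3)) ** 2


sizes = {name: dimensionless_size(*spec) for name, spec in COEFFICIENTS.items()}

for name, value in sizes.items():
    print(f"{name:15s} v^2 C = {value:+.2e}")
```

All four combinations are below the per-mille level in magnitude, i.e. of the same order as the deviations from unitarity that the CAA is meant to capture.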

2.1.5 Lepton flavour universality in the charged current

The recently observed anomalies in \(b\rightarrow c\tau \nu \) and \(b\rightarrow s\mu ^+\mu ^-\) transitions suggest a possible violation of lepton universality in other processes where strong constraints on the universality of the leptonic \(W^\pm \) couplings \(g_\ell \) (\(\ell = e, \mu , \tau \)), emerging from the measured weak decays of the \(\mu \), \(\tau \), \(\pi \) and K, exist. The most accurate phenomenological tests of the universality of the leptonic charged-current couplings are summarised in Table 1, which updates Ref. [383].

The leptonic decays \(\ell \rightarrow \ell '{\bar{\nu }}_{\ell '}\nu _\ell \) provide very clean measurements of the \(W^\pm \) couplings. The \(\tau \rightarrow \mu /\tau \rightarrow e\) ratio directly constrains \(|g_\mu /g_e|\), while the comparison of \(\tau \rightarrow e,\mu \) with \(\mu \rightarrow e\) provides information on \(|g_\tau /g_\mu |\) and \(|g_\tau /g_e|\). Taking into account the different lepton masses involved and the small higher-order electroweak corrections, the current data confirm the universality of the leptonic \(W^\pm \) couplings with a 0.15% precision.

A slightly better sensitivity on \(|g_\mu /g_e|\) has been obtained from the precisely measured ratio of the \(\pi ^-\rightarrow e^-{\bar{\nu }}_e\) and \(\pi ^-\rightarrow \mu ^-{\bar{\nu }}_\mu \) decay widths [334]. At this level of precision, a good control of radiative QED corrections is compulsory [384, 385]. Comparable accuracies have also been reached from the corresponding \(e/\mu \) ratios in \(K_{\ell 2}\) and \(K_{\ell 3}\) decays [386].

The comparison of the \(\tau ^-\rightarrow P^-\nu _\tau \) and \(P^-\rightarrow \mu ^-{\bar{\nu }}_\mu \) (\(P=\pi ,K\)) decay widths allows for an independent determination of \(|g_\tau /g_\mu |\). The radiative corrections to these ratios involve low-energy hadronic effects that have been recently re-evaluated [387], using Chiral Perturbation Theory techniques and the large-\(N_C\) expansion. While this updated calculation agrees with previous evaluations, the estimated hadronic uncertainties are found to be slightly larger. Nevertheless, one obtains a quite accurate test of universality at the \(0.4\%\) (\(0.8\%\)) level from the \(\pi \) (K) ratios.

The decays \(W\rightarrow \ell {\bar{\nu }}_\ell \) provide a more direct access to the leptonic W couplings. However, with the limited statistics collected at LEP it was only possible to reach precisions of \(O(1\%)\) [389]. The LEP data exhibited a slight excess of \(W\rightarrow \tau {\bar{\nu }}_\tau \) events, implying \(2.7\%\) and \(2.4\%\) deviations from lepton universality in \(|g_\tau /g_\mu |\) and \(|g_\tau /g_e|\), respectively. This was very difficult to reconcile with the much more precise indirect constraints from \(\tau ,\mu , \pi \) and K decays [390].

The large amount of data provided by the LHC has made it possible to perform more precise tests of the leptonic W decays. The recent ATLAS determination of \(\Gamma _{W\rightarrow \tau }/\Gamma _{W\rightarrow \mu }\) [388] agrees well with the SM expectation. The ATLAS measurement alone would imply \(|g_\tau /g_\mu | =0.996\pm 0.007\). The larger error quoted in Table 1 reflects the sizable discrepancy with the old LEP value. A preliminary CMS measurement of the W leptonic branching fractions [391], not yet included in Table 1, fully confirms the ATLAS result, eliminating the long-standing \(W\rightarrow \tau \) anomaly. The separate results from LEP and the LHC experiments are collected in Table 2, which also displays the preliminary world averages including the Tevatron data. Following the PDG prescription, the errors of the \(|g_\tau /g_\mu |\) and \(|g_\tau /g_e|\) averages have been increased to account for the discrepancy with the LEP values.
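The step from a measured ratio of widths to a ratio of couplings is a simple square root, since \(\Gamma _{W\rightarrow \ell } \propto g_\ell ^2\) up to small mass corrections. As a minimal sketch, assuming the ATLAS result \(\Gamma _{W\rightarrow \tau }/\Gamma _{W\rightarrow \mu } = 0.992 \pm 0.013\) (a value supplied here as an assumption, not quoted in the text) and neglecting phase-space corrections:

```python
import math

# Assumed input: measured ratio of W -> tau nu to W -> mu nu widths
R_TAU_MU = (0.992, 0.013)   # (value, total uncertainty)


def coupling_ratio(r: float, dr: float):
    """Convert a width ratio R = (g_tau/g_mu)^2 into |g_tau/g_mu|.

    Linear error propagation: d(sqrt(R)) = dR / (2 sqrt(R)).
    """
    g = math.sqrt(r)
    return g, dr / (2.0 * g)


g_ratio, g_error = coupling_ratio(*R_TAU_MU)
print(f"|g_tau/g_mu| = {g_ratio:.3f} +/- {g_error:.3f}")
```

Under these assumptions the result reproduces the value \(0.996\pm 0.007\) given above for the ATLAS measurement alone.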

Clearly, the current data verify the universality of the leptonic W couplings to the \(0.15\%\) level. In Table 1 one can only identify two small deviations that do not reach the \(2\sigma \) level: there is a slight (\(1.9\sigma \)) excess of \(\tau \rightarrow \mu \) versus \(\mu \rightarrow e\) events, and a small deficit (\(1.8\sigma \)) of \(\tau \rightarrow K\) versus \(K\rightarrow \mu \) transitions. The relatively large hadronic uncertainty involved [387] could easily explain the second deviation, although a systematic deficit of kaon final states seems to be present in \(\tau \) decays [383], leading to a determination of \(|V_{us}|\) slightly lower than the one obtained from kaon decays [392,393,394]. The slight excess of \(\tau \rightarrow \mu \) events could be correlated with a possible explanation of the Cabibbo anomaly through a slight violation of lepton universality [337, 372, 373]. In any case, more precise experimental studies are needed.

The different universality tests provide complementary information, since they are sensitive to different types of NP contributions. While the decays of the W boson probe directly its leptonic couplings, the indirect constraints from low-energy leptonic and semileptonic decays test the potential presence of additional intermediate particles, which could modify each analysed process in a different way. From this point of view, it is worth mentioning the universality test extracted from the ratio of \(B\rightarrow D^{(*)}\mu \nu \) and \(B\rightarrow D^{(*)}e\nu \) transitions: \(|g_\mu /g_e| = 0.989\; (12)\) [395]. Although much less precise than the other indirect determinations in Table 1, it severely restricts the type of possible explanations to the \(b\rightarrow c\tau \nu \) anomaly.

2.1.6 Non-resonant di-electrons

A search for LFUV in the non-resonant production of di-leptons was recently performed by CMS, observing a \(\approx \) \(4\,\sigma \) excess in electron pairs with an invariant mass greater than \(1.8\,\)TeV in the \(pp\rightarrow e^+e^-\) channel. As the muon channel agrees with the SM expectation, this measurement points towards LFUV. Also ATLAS [398] and HERA [399] found more di-electrons than expected in their studies of quark-lepton contact interactions.

In addition to the total cross-section, the CMS collaboration provided the differential cross-section ratio

for different \(m_{\ell \ell }\) (\(\ell = e, \mu \)) bins. For each bin, they quoted two values, distinguishing the cases where zero (at least one) of the di-leptons were detected in the endcaps, corresponding to barrel only (endcap) measurements. These were then compared to the SM predictions obtained from Monte Carlo simulations

In this double ratio, many of the experimental and theoretical uncertainties cancel [400]. It was further normalised to one in the bin from 200 to 400 GeV to correct for the relative sensitivity to electrons and muons. The measurements are indicated by the black squares (circles) for the barrel only (endcap) measurements in Fig. 7. A trend towards values smaller than 1 is visible for large \(m_{\ell \ell }\).

ATLAS followed a different strategy, measuring the differential cross-section

integrated over the signal regions [2.2, 6.0] TeV and [2.7, 6.0] TeV for searches of NP interfering constructively and destructively with the SM, respectively [398]. Comparing these results to the SM predictions, they derived exclusion limits for NP coupling equally to up and down quarks of both chiralities. Interestingly, ATLAS also measured more di-electrons than expected in the constructive channel, but their measurements are still consistent with the SM prediction.

Potential explanations of the di-electron excess in the CMS measurements, including the constraints from the ATLAS analysis, have recently been discussed in Refs. [365, 401, 402]. The presence of NP was studied within an EFT approach, considering only operators coupling to first-generation quarks and leptons. The Wilson coefficients \(C_i\) of the relevant operators are reported in Table 3. For each of them, the ratio \(R_{\mu \mu / ee, ij}^{\text {SM+NP}}(C_i) \big / R_{\mu \mu / ee, ij}^{\text {SM}}\) was computed and fitted to CMS data using a \(\chi ^2\) statistical analysis with

$$\chi ^2 = \sum _{ij} \frac{\left( R_{\mu \mu / ee, ij}^{\text {SM+NP}}(C_i) \big / R_{\mu \mu / ee, ij}^{\text {SM}} - R_{\mu \mu / ee, ij} \big / R_{\mu \mu / ee, ij}^{\text {SM}}\right) ^2}{\sigma _{ij}^2},$$

where \(\sigma _{ij}\) are the corresponding uncertainties reported in Ref. [403]. Additionally, the ATLAS exclusion limits were recast for the cases where the NP couples differently to up and down quarks and to the different chiralities. The preferred (excluded) values for the Wilson coefficients, given by the CMS (ATLAS) analysis, are shown in Table 3, and the best fits to CMS data are displayed in Fig. 7. NP interfering constructively with the SM contribution is preferred, and the operators considered can improve the fit with a pull of up to \(3.3 \sigma \). While the corresponding Wilson coefficient values are not excluded by the ATLAS analysis, constraints coming from kaon decays, \(K^0\)–\({\bar{K}}^0\) mixing or \(D^0\)–\({\bar{D}}^0\) mixing are more stringent and need to be considered when addressing the CMS excess. Finally, it is important to note that the operator \([Q_{\ell q}^{(3)}]_{1111}\) can also provide an explanation of the Cabibbo Angle Anomaly, discussed in Sect. 2.1.4. In Ref. [402] it was shown that \([Q_{\ell q}^{(3)}]_{1111}\) can provide a simultaneous explanation of these two anomalies, and a best-fit value of \([C_{\ell q}^{(3)}]_{1111}=1.1/(10\, \mathrm {TeV})^2\) was extracted.

Fig. 7 The double ratio values \(R_{\mu \mu /e e, ij} \big / R_{\mu \mu / e e, ij}^{\text {SM}}\) measured by the CMS collaboration in nine \(m_{\ell \ell }\) bins between 200 and 3000 GeV are shown (black circles and squares) together with the best fit curves for different 2-quark–2-lepton operator scenarios (coloured lines)
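The logic of such a fit can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure of Refs. [365, 401, 402]: the numbers in `data` and `sigma` are placeholders and not the CMS measurements, `toy_model` is a hypothetical linear stand-in for the EFT prediction of the double ratio, and the pull is taken as \(\sqrt{\Delta \chi ^2}\), which assumes Gaussian uncertainties and a single fitted coefficient.

```python
import math


def chi2(model, data, sigma):
    """Sum of squared, uncertainty-normalised residuals over all bins."""
    return sum(((m - d) / s) ** 2 for m, d, s in zip(model, data, sigma))


def toy_model(c, n_bins):
    """Hypothetical stand-in for the SM+NP over SM ratio: linear drift with bin index."""
    return [1.0 - c * k for k in range(n_bins)]


# Placeholder numbers for illustration only; these are NOT the CMS measurements.
data = [1.00, 0.95, 0.80]
sigma = [0.05, 0.10, 0.10]

# The SM hypothesis corresponds to a vanishing coefficient, i.e. a double ratio of one.
chi2_sm = chi2(toy_model(0.0, len(data)), data, sigma)

# Simple grid scan over the single toy coefficient.
grid = [i / 1000 for i in range(301)]
best_c = min(grid, key=lambda c: chi2(toy_model(c, len(data)), data, sigma))
chi2_best = chi2(toy_model(best_c, len(data)), data, sigma)

# Pull of the best-fit point with respect to the SM for one fitted parameter.
pull = math.sqrt(chi2_sm - chi2_best)
print(f"best-fit c = {best_c:.3f}, pull = {pull:.2f} sigma")
```

A grid scan is used here only to keep the sketch dependency-free; a real analysis would use a proper minimiser and the full set of bins and uncertainties.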

2.1.7 Forward–backward asymmetry in semileptonic B decays

The observable \(\Delta A_{\mathrm {FB}}\) encodes the difference of the forward–backward asymmetry in \(B\rightarrow D^*\mu \nu \) vs \(B\rightarrow D^*e\nu \). As for \(R(K^{(*)})\), the muon and electron masses can both be neglected, such that the form-factor dependence cancels and the SM prediction is, to the currently relevant precision, zero. Even though the corresponding measurements of the total branching ratios are consistent with the SM expectations [404, 405], Ref. [406] recently unveiled a \(\approx \!4\sigma \) tension in \(\Delta A_{\mathrm {FB}}\), extracted from the \(B\rightarrow D^*\ell {\bar{\nu }}\) data of Belle [407].

A good fit to data requires a non-zero Wilson coefficient of the tensor operators. Importantly, among the set of renormalisable models, only two scalar LQs can generate this operator at tree level, and only the \(SU(2)_L\) singlet gives a good fit to data [408]. However, even in this case, due to the constraints from other asymmetries, \(\Delta A_{\mathrm {FB}}\) cannot be fully explained, but the global fit to \(b\rightarrow c\mu \nu \) and \(b\rightarrow c e\nu \) data can be improved by more than \(3\sigma \) [408].

2.1.8 Combined explanations

In the presence of the deviations from the SM discussed above, it is reasonable to explore NP models that are able to address, in a coherent manner, more than one anomaly. While it is often possible to simply combine independent solutions for each anomaly, we consider here combined explanations in the sense that the mediators are either directly connected from a UV perspective or their joint contribution to some anomalous observable is crucial for a successful explanation.

Neutral and Charged-current B-anomalies When combining \(b\rightarrow s\ell ^+\ell ^-\) and \(R(D^{(*)})\) anomalies, the viable scenarios are dictated by those that offer a good explanation of \(R(D^{(*)})\), since this observable is the one that requires the lowest NP scale. As discussed in Sect. 2.1.2, the possible solutions necessarily involve LQs, specifically the vector \(U_1 \sim (\mathbf{3}, \mathbf{1}, 2/3)\), the scalar singlet \(S_1 \sim ({\bar{\mathbf{3}}}, \mathbf{1}, 1/3)\) or the scalar doublet \(R_2 \sim (\mathbf{3}, \mathbf{2}, 7/6)\). Of these, only the vector LQ \(U_1\) also generates a viable contribution to \(b\rightarrow s \ell \ell \), and is thus the only single-mediator scenario for combined explanations of B-anomalies [26, 62, 70, 113, 115,116,117,118, 120, 409,410,411,412,413]. Alternatively, two scalar leptoquarks can also provide a good explanation, by adding the triplet \(S_3 \sim ({{\bar{\mathbf{3}}}}, \mathbf{3}, 1/3) \), which successfully mediates \(b\rightarrow s \ell \ell \), to \(S_1\) or \(R_2\).

The \(S_1 + S_3\) scenario [120, 134, 414,415,416,417,418,419,420,421] has two viable possibilities: if the \(S_1\) couplings to right-handed fermions vanish, then both \(S_1\) and \(S_3\) contribute to \(R(D^{(*)})\) via \(C_V^L\); if instead right-handed couplings are also involved, then the largest contribution to \(R(D^{(*)})\) arises from \(S_1\) via the \(C_S^L \approx - 4 C_T\) coefficients. From a UV perspective, these two LQs could arise as pseudo-Nambu–Goldstone bosons from a strongly-coupled UV sector, together with the SM Higgs [414, 420].

In the \(R_2 + S_3\) scenario the two leptoquarks contribute mostly independently to \(R(D^{(*)})\) and \(b\rightarrow s \ell \ell \), respectively [114, 422, 423]. However, these two scalars have been proposed as arising from the same GUT scenario [112].

B-anomalies and anomalous muon magnetic moment This scenario can be seen as a further phenomenological requirement on the models discussed in the previous paragraphs. Of the three setups presented as combined explanations of B-anomalies, only those involving the scalar leptoquarks \(S_1\) and \(R_2\) can also provide a satisfactory contribution to \(a_\mu \), thanks to the \(m_t / m_\mu \) enhancement. In this case, however, given the presence of couplings to both left- and right-handed muons and taus, a too-large contribution to \(\tau \rightarrow \mu \gamma \) is induced. This can be cancelled by other contributions from the same mediator, with a tuning of one part in three at the amplitude level [416, 417, 419].

B-anomalies, anomalous muon magnetic moment, and CAA A possible combined explanation for all these anomalies has recently been proposed, involving the scalar LQ \(S_1\) and a scalar charged singlet \(\phi ^+\) [374]. In this scenario \(S_1\) contributes to \(R(D^{(*)})\) and \(a_\mu \) in the same way as described above, while \(\phi ^+\) generates a tree-level contribution to the muon decay \(\mu ^- \rightarrow e^- \nu _\mu \bar{\nu }_e\) that, by shifting the Fermi constant, improves the fit of the Cabibbo angle [361, 372, 373, 424]. The contribution to \(b\rightarrow s \mu \mu \) is instead induced via a one-loop box diagram involving both \(S_1\) and \(\phi ^+\). While this model is able to pass all present bounds, the masses of these scalars are required to be about 5 TeV, with some couplings reaching somewhat large values of \(\approx 3\), which are at the threshold of limits from perturbative unitarity [425].

An alternative BSM scenario that can account for both neutral and charged-current B-anomalies, as well as the muon \(g-2\), is based on a minimal R-parity violating (RPV) SUSY framework with relatively light third-generation sfermions [426,427,428]. Although the LQD-type RPV couplings of squarks somewhat resemble the scalar LQ couplings, there are some key differences in the case of RPV, such as the possibility of explaining the muon \(g-2\) purely via LLE-type couplings, the same chiral gauge structure as in the SM, natural flavour violation, as well as many other attractive inbuilt features of SUSY, such as radiative stability of the Higgs boson, radiative neutrino masses, radiative electroweak symmetry breaking, stability of the electroweak vacuum, gauge coupling unification, (gravitino) dark matter and electroweak baryogenesis.

2.2 Multi-lepton anomalies at the LHC

One of the implications of a two-Higgs doublet model with an additional singlet scalar S (2HDM+S) is the production of multiple leptons through the decay chain \(H\rightarrow Sh,SS\) [429], where H is the heavy CP-even scalar and h is considered as the SM Higgs boson with mass \(m_h=125\) GeV. Excesses in multi-lepton final states were reported in Ref. [430]. In order to further explore results with more data and new final states, while avoiding biases and look-elsewhere effects, the parameters of the model were fixed in 2017 according to Refs. [429, 430]. This includes setting the scalar masses to \(m_H=270\) GeV and \(m_S=150\) GeV (see footnote 6), treating S as a SM Higgs-like scalar and assuming the dominance of the decays \(H\rightarrow Sh,SS\). Statistically compelling excesses in opposite-sign di-leptons, same-sign di-leptons, and three leptons, with and without the presence of b-tagged hadronic jets, were reported in Refs. [432,433,434]. The possible connection with the anomalous magnetic moment of the muon \(g-2\) was reported in Ref. [435]. Interestingly, the model can explain anomalies in astrophysics (the positron excess of AMS-02 [436] and the excess in gamma-ray fluxes from the galactic centre measured by Fermi-LAT [437]; see Sect. 2.5) if it is supplemented by a Dark Matter candidate [438].

We give a succinct description of the different final states and corners of the phase-space that are affected by the anomalies. The anomalies are reasonably well captured by a 2HDM+S model. Here, H is predominantly produced through gluon-gluon fusion and decays mostly into \(H\rightarrow SS,Sh\) with a total cross-section in the range 10–25 pb [432]. Due to the relatively large Yukawa coupling to top quarks needed to achieve the above-mentioned direct production cross-section, the production of H in association with a single top quark is also relevant (see footnote 7). These production mechanisms, together with the dominance of \(H\rightarrow SS,Sh\) over other decays, where S behaves like a SM Higgs-like boson, lead to a number of final states that can be classified into several groups. There are three groups of final states where the excesses are statistically compelling: opposite sign (OS) leptons (\(\ell =e,\mu \)); same sign (SS) and three leptons (\(3\ell \)) in association with b-quarks; SS and \(3\ell \) without b-quarks. In the sections below a brief description of the final states is given, with emphasis on the emergence of new excesses in addition to those reported in Refs. [430, 432, 434], when appropriate. It is important to reiterate that the new excesses reported here are not the result of scanning the phase-space, but the result of looking at pre-defined final states and corners of the phase-space, as predicted by the model described above.
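To give a feeling for the scale implied by a cross-section of 10–25 pb, the following minimal sketch converts it into a number of produced H bosons via \(N = \sigma \times L\). The integrated luminosity of 139 fb\(^{-1}\) is an assumption made for this illustration (a typical Run 2 value for a single experiment), and branching ratios, acceptance and selection efficiency are deliberately not included.

```python
PB_TO_FB = 1000.0  # 1 pb = 1000 fb


def produced_events(cross_section_pb, luminosity_fb_inv):
    """Number of produced events N = sigma * L, before any branching ratio or efficiency."""
    return cross_section_pb * PB_TO_FB * luminosity_fb_inv


# Assumed integrated luminosity in fb^-1 (an illustrative choice, not taken from the text).
assumed_luminosity = 139.0

n_low = produced_events(10.0, assumed_luminosity)
n_high = produced_events(25.0, assumed_luminosity)
print(f"produced H bosons: {n_low:.3g} to {n_high:.3g}")
```

Under these assumptions more than a million H bosons would be produced, so the observable multi-lepton yields are driven by the leptonic branching ratios and the selection rather than by the production rate.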

2.2.1 Opposite sign di-leptons

The production chain \(pp\rightarrow H\rightarrow SS,Sh\rightarrow \ell ^+\ell ^-+X\) is the most copious multi-lepton final state. Using the benchmark parameter space in Ref. [439], the dominant decays of the singlet are \(S\rightarrow W^+W^-,b{\overline{b}}\). This will lead to OS leptons with and without b-quarks. The most salient characteristics of the final states are a di-lepton invariant mass \(m_{\ell \ell }<100\) GeV, where the bulk of the signal is produced, and a low b-jet multiplicity, \(n_b<2\) [432]. The dominant SM background in events with b-jets is \(t{\overline{t}}+Wt\). It is important to note that the b-jet and light-quark jet multiplicity of the signal is significantly different from that of top-quark related production mechanisms. As a matter of fact, excesses are also seen when applying a full jet veto, where top-quark backgrounds are suppressed and the dominant background is non-resonant \(W^+W^-\) production [430, 440, 441] (see footnote 8). A review of the NLO and EW corrections to the relevant processes can be found in Refs. [432, 441]; to date, the \(m_{\ell \ell }\) spectrum at low masses remains unexplained by MC tools. A measurement of the differential distributions in OS events with b-jets with Run 2 data further corroborates the inability of current MC tools to describe the \(m_{\ell \ell }\) distribution [443]. A summary of deviations for this class of excesses is given in Table 4.
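The corner of phase-space described above can be written down as a simple event selection. The sketch below is a minimal illustration and not the selection of any of the cited analyses: the event layout (a dictionary with `leptons` and `n_b_jets`) and all function names are hypothetical, and only the two requirements quoted in the text, \(m_{\ell \ell }<100\) GeV and \(n_b<2\), are implemented together with the opposite-sign condition.

```python
import math


def invariant_mass(p1, p2):
    """Invariant mass of two four-vectors given as (E, px, py, pz) in GeV."""
    e = p1[0] + p2[0]
    px = p1[1] + p2[1]
    py = p1[2] + p2[2]
    pz = p1[3] + p2[3]
    m2 = e * e - px * px - py * py - pz * pz
    # Guard against tiny negative values from floating-point rounding.
    return math.sqrt(max(m2, 0.0))


def passes_os_dilepton_selection(event, mll_max=100.0, max_b_jets=1):
    """Exactly two opposite-sign leptons, m_ll < mll_max and n_b <= max_b_jets."""
    leptons = event["leptons"]
    if len(leptons) != 2:
        return False
    if leptons[0]["charge"] * leptons[1]["charge"] >= 0:
        return False
    if event["n_b_jets"] > max_b_jets:
        return False
    return invariant_mass(leptons[0]["p4"], leptons[1]["p4"]) < mll_max


# Hypothetical event: two back-to-back massless leptons of 40 GeV each.
example_event = {
    "leptons": [
        {"charge": +1, "p4": (40.0, 40.0, 0.0, 0.0)},
        {"charge": -1, "p4": (40.0, -40.0, 0.0, 0.0)},
    ],
    "n_b_jets": 1,
}
selected = passes_os_dilepton_selection(example_event)
```

A full jet veto, as used in the \(W^+W^-\)-dominated region, would correspond to an additional requirement on the total jet multiplicity, which is omitted here.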

2.2.2 SS and \(3\ell \) with b-quarks

The associated production of H with top quarks leads to the anomalous production of SS and \(3\ell \) in association with b-quarks with a moderate scalar sum of the transverse momenta of leptons and jets, \(H_T\). The elevated \(t{\overline{t}}W^{\pm }\) cross-section measured by the ATLAS and CMS experiments can be accommodated by the above-mentioned model [432, 433]. Based on a number of excesses involving Z bosons, in Ref. [439] it was suggested that the CP-odd scalar of the 2HDM+S model could be as heavy as \(m_A\approx 500\) GeV, where the two leading decays would be \(A\rightarrow t{\overline{t}},ZH\). The cross-section for the associated production \(pp\rightarrow t{\overline{t}}A\) with \(A\rightarrow t{\overline{t}}\) would correspond to \(\approx 10\) fb. This is consistent with the elevated \(t{\overline{t}}t{\overline{t}}\) cross-section reported by ATLAS and CMS [444,445,446]. The combined significance of the excesses related to the cross-section measurements of \(t{\overline{t}}W^{\pm }\) and \(t{\overline{t}}t{\overline{t}}\) surpasses 3\(\sigma \), as detailed in Table 4. It is important to note that the ATLAS collaboration has reported a small excess in the production of four leptons with a same-flavour OS pair consistent with a Z boson, where the four-lepton invariant mass is \(m_{4\ell }<400\) GeV [447]. This excess can also be accommodated by the direct production of \(A\rightarrow ZH\).

2.2.3 SS and \(3\ell \) without b-quarks