Abstract

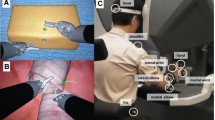

Data from surgical robots are not openly accessible, which limits further study of the trajectories of surgeons' hands during operation. A trajectory monitoring system is therefore needed to examine objective indicators that reflect the characteristic parameters of an operation. Twenty robotic experts and 20 first-year residents without robotic experience were included in this study. A dry-lab suturing task was used to acquire hand performance data. Novices completed training on the simulator before performing the task, whereas experts completed the task after a warm-up. Stitching errors were measured with a visual recognition method. Videos of each operation were recorded with a camera array mounted on the robot, and the surgeons' hand trajectories were reconstructed from them. Stitching accuracy, robotic control parameters, balance and dexterity parameters, and operation efficiency parameters were compared between the two groups. Compared with novices, experts had a smaller center distance (p < 0.001) and a larger proximal distance between the hands (p < 0.001). The path and volume ratios between the left and right hands were larger for novices than for experts (both p < 0.001), and the total volume of the operation range was smaller for experts (p < 0.001). The surgeon trajectory optical monitoring system is an effective and objective method for distinguishing skill levels. It also shows potential for pan-platform use in evaluating task completion and helping surgeons improve their robotic learning curve.
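The abstract names several bimanual trajectory metrics without defining them. As a rough illustration only, the Python sketch below computes plausible versions of them from two time-synchronised 3D hand trajectories. The definitions are assumptions, not the authors' formulas: center distance is taken as the distance between the centroids of the two trajectories, proximal distance as the minimum distance between the hands at matching time stamps, the path and volume ratios as larger-over-smaller (so 1.0 means balanced hands), and the operation-range volume is approximated by an axis-aligned bounding box. All function names are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

# A 3D hand position (x, y, z) reconstructed from the camera array; units are arbitrary here.
Point = Tuple[float, float, float]


def path_length(traj: Sequence[Point]) -> float:
    """Total distance travelled: sum of distances between consecutive samples."""
    return sum(math.dist(a, b) for a, b in zip(traj, traj[1:]))


def bbox_volume(points: Sequence[Point]) -> float:
    """Axis-aligned bounding-box volume, a crude stand-in for the operation range (assumption)."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs)) * (max(ys) - min(ys)) * (max(zs) - min(zs))


def centroid(points: Sequence[Point]) -> Point:
    """Mean position of a trajectory."""
    n = len(points)
    xs, ys, zs = zip(*points)
    return (sum(xs) / n, sum(ys) / n, sum(zs) / n)


def balance_ratio(a: float, b: float) -> float:
    """Larger / smaller, so 1.0 means both hands contribute equally (assumed definition)."""
    small, large = sorted((a, b))
    if small == 0:
        raise ValueError("one hand did not move; the ratio is undefined")
    return large / small


def hand_metrics(left: Sequence[Point], right: Sequence[Point]) -> Dict[str, float]:
    """Hypothetical bimanual metrics for two time-synchronised trajectories."""
    if len(left) != len(right) or len(left) < 2:
        raise ValueError("need two synchronised trajectories with at least 2 samples")
    return {
        # Distance between the mean positions of the two hands.
        "center_distance": math.dist(centroid(left), centroid(right)),
        # Closest the two hands ever come at the same time stamp.
        "proximal_distance": min(math.dist(lp, rp) for lp, rp in zip(left, right)),
        "path_ratio": balance_ratio(path_length(left), path_length(right)),
        "volume_ratio": balance_ratio(bbox_volume(left), bbox_volume(right)),
        # Bounding box enclosing both hands' positions.
        "total_volume": bbox_volume(list(left) + list(right)),
    }


if __name__ == "__main__":
    left = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (1, 1, 1)]
    right = [(3, 0, 0), (5, 0, 0), (5, 2, 0), (5, 2, 2)]
    for name, value in hand_metrics(left, right).items():
        print(f"{name}: {value:.3f}")
```

With these toy trajectories the right hand travels twice as far as the left and sweeps a box eight times larger, so `path_ratio` is 2.0 and `volume_ratio` is 8.0. A convex hull (for example `scipy.spatial.ConvexHull` and its `volume` attribute) would give a tighter operation-range estimate at the cost of an extra dependency.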

Availability of data and materials

Data and materials are available on reasonable request from the corresponding author.

Acknowledgements

The authors would like to thank all volunteers in this study.

Funding

This work was supported by the Provincial Teaching and Research Project of Colleges and Universities in Hubei Province (Grant No. 2020055) and the Zhongnan Hospital of Wuhan University Science, Technology and Innovation Seed Fund (Grant No. znpy2019003).

Author information

Contributions

Gaojie Chen and Lu Li wrote the main manuscript text; Jacques Hubert and Bin Luo provided guidance and technical support; Kun Yang and **nghuan Wang recruited volunteers and coordinated the project. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

Gaojie Chen, Lu Li, Jacques Hubert, Bin Luo, Kun Yang, and **nghuan Wang have no conflicts of interest to disclose.

Ethical approval

This study was approved by the Medical Ethics Committee of Zhongnan Hospital of Wuhan University (2023114K). All the participants provided informed consent and volunteered to participate in this study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material accompanies the online version of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, G., Li, L., Hubert, J. et al. Effectiveness of a vision-based handle trajectory monitoring system in studying robotic suture operation. J Robotic Surg 17, 2791–2798 (2023). https://doi.org/10.1007/s11701-023-01713-9

DOI: https://doi.org/10.1007/s11701-023-01713-9