Abstract

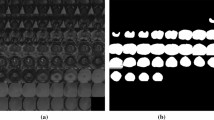

Auto-cropping of the prostate is one of the most significant tasks in Computer Aided Diagnosis (CAD) systems for prostate cancer. It isolates the desired Region of Interest (ROI) and reduces the image size, which increases the accuracy of subsequent cancer detection. Prostate cancer (PCa) is responsible for many deaths worldwide, and CAD systems can help radiologists evaluate it using Magnetic Resonance Imaging (MRI), which offers better visualization of soft tissue than other imaging modalities. PCa is more commonly found in the Peripheral Zone (PZ) of the prostate than in the Transition Zone (TZ) or Central Zone (CZ). Accordingly, the Prostate Imaging Reporting and Data System (PI-RADS), a standardized reporting scheme for multiparametric prostate MRI in the examination of suspected prostate cancer, applies different assessment criteria to different zones. By implementing advanced auto-cropping and denoising techniques, this research can lead to more precise identification of prostate cancer regions, reducing the rate of misdiagnosis and helping patients receive appropriate treatment earlier. Automating the tedious and error-prone tasks of image cropping and noise reduction can also free up valuable time for clinicians and radiologists, allowing them to focus on patient care rather than image analysis. Traditional approaches used by doctors were manual and time-consuming, whereas CAD systems have significantly aided early detection while easing the workload of doctors and radiologists.

This paper proposes a deep learning-based auto-cropping methodology named MRI-CropNet for automatic cropping of the PCa region from MRI. Compared with state-of-the-art methods, the architecture increases the dilation rate in a newly added block of convolutional layers. The larger dilation rate expands the effective filter size (receptive field), which enables more adaptable feature extraction and thus more accurate results.
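The abstract does not give MRI-CropNet's layer configuration, so the following is only a generic NumPy sketch of what raising the dilation rate does: a k×k kernel with dilation rate d spans k + (k - 1)(d - 1) pixels per side while still using only k×k weights. The function names and the 3×3 kernel with dilation rates 1, 2 and 4 are illustrative assumptions, not the paper's settings.

```python
import numpy as np


def effective_kernel_size(k: int, dilation: int) -> int:
    """Pixels spanned per side by a k-tap kernel with the given dilation rate."""
    return k + (k - 1) * (dilation - 1)


def receptive_field(layers: list[tuple[int, int]]) -> int:
    """Receptive field of stacked stride-1 convolutions given (kernel, dilation) pairs."""
    rf = 1
    for k, d in layers:
        rf += effective_kernel_size(k, d) - 1
    return rf


def dilated_conv2d(image: np.ndarray, kernel: np.ndarray, dilation: int = 1) -> np.ndarray:
    """'Valid' 2D cross-correlation (as computed by CNN layers) with a dilated kernel."""
    kh, kw = kernel.shape
    eh = effective_kernel_size(kh, dilation)
    ew = effective_kernel_size(kw, dilation)
    out_h = image.shape[0] - eh + 1
    out_w = image.shape[1] - ew + 1
    out = np.zeros((out_h, out_w), dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            # Sample the input on a grid spaced `dilation` pixels apart:
            # same number of weights, wider spatial coverage.
            window = image[i:i + eh:dilation, j:j + ew:dilation]
            out[i, j] = np.sum(window * kernel)
    return out


if __name__ == "__main__":
    for d in (1, 2, 4):
        print(f"3x3 kernel, dilation {d}: spans {effective_kernel_size(3, d)} pixels")
    print("stacked receptive field:", receptive_field([(3, 1), (3, 2), (3, 4)]))
```

Running the script reports spans of 3, 5 and 9 pixels and a stacked receptive field of 15 pixels, compared with 7 for three undilated 3×3 layers. This is the sense in which a higher dilation rate "expands the filter size" without adding parameters.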
Based on the experimental analysis, MRI-CropNet achieved a Matthews correlation coefficient (MCC) of 0.98, a Dice similarity coefficient (DSC) of 0.99, an F1-score of 0.98, an average precision (AP) of 98.81 and a loss of 0.07 for auto-cropping, outperforming state-of-the-art approaches. Auto-cropping is one of the most crucial steps in the detection of PCa, which must be found early so that treatment can begin promptly. Urgent research and awareness campaigns are imperative to develop preventive measures, improve treatment outcomes, and ultimately mitigate the impact of this widespread disease. The deep learning-based auto-cropping methodology proposed in this paper yields a faster and better-performing system. Solutions tailored to prostate cancer detection with deep learning are needed because of the inherent anatomical and histological heterogeneity of the prostate gland: tumors exhibit diverse morphological characteristics across glandular zones, which influence their appearance, size, and grade. Deep learning algorithms trained on region-specific datasets can capture these nuanced features and thereby optimize detection accuracy.
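The abstract reports MCC, DSC and F1 without stating the evaluation protocol. The sketch below assumes the simplest setting, pixel-wise comparison of a predicted binary mask against a ground-truth mask, and adds a hypothetical `crop_to_mask` helper that crops an image to the bounding box of a predicted mask as a minimal illustration of auto-cropping. All function names and the `margin` parameter are illustrative assumptions, not MRI-CropNet's code. Computed pixel-wise on the same pair of masks, DSC = 2TP / (2TP + FP + FN) and F1 are algebraically identical; the abstract does not say how its separate DSC and F1 values were obtained. AP is omitted because it requires per-prediction confidence scores.

```python
import math

import numpy as np


def confusion_counts(pred, truth):
    """Pixel-wise TP, FP, FN, TN for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn


def dice(pred, truth):
    """DSC = 2TP / (2TP + FP + FN); defined as 1.0 when both masks are empty."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def f1_score(pred, truth):
    """Harmonic mean of pixel-wise precision and recall."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def mcc(pred, truth):
    """Matthews correlation coefficient; 0.0 when any marginal count is zero."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


def crop_to_mask(image, mask, margin=0):
    """Crop `image` to the bounding box of `mask`, padded by `margin` pixels."""
    mask = np.asarray(mask, dtype=bool)
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return image  # nothing detected: keep the full image
    r0 = max(int(rows[0]) - margin, 0)
    r1 = min(int(rows[-1]) + margin, mask.shape[0] - 1)
    c0 = max(int(cols[0]) - margin, 0)
    c1 = min(int(cols[-1]) + margin, mask.shape[1] - 1)
    return image[r0:r1 + 1, c0:c1 + 1]
```

Unlike DSC and F1, MCC also uses the true negatives (background pixels correctly left out of the crop), so it penalizes a crop that is too loose in a way that complements the overlap-based scores.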

Data Availability

The data are not publicly available.

Acknowledgements

The authors are grateful to the Ministry of Human Resource Development (MHRD), Govt. of India for funding this project (17-11/2015-PN-1) under the sub-theme Medical Devices & Restorative Technologies (MDaRT) of the Design and Innovation Centre (DIC).

Funding

The project was funded by the Ministry of Human Resource Development (MHRD), Govt. of India, under grant number 17-11/2015-PN-1.

Author information

Contributions

All authors contributed equally to the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Juneja, M., Saini, S.K., Chanana, C. et al. MRI-CropNet for Automated Cropping of Prostate Cancer in Magnetic Resonance Imaging. Wireless Pers Commun (2024). https://doi.org/10.1007/s11277-024-11335-5

DOI: https://doi.org/10.1007/s11277-024-11335-5